Performance Measurement Systems in Continuous Improvement Environments: Obstacles to Their Effectiveness

Abstract

1. Introduction

2. Methods

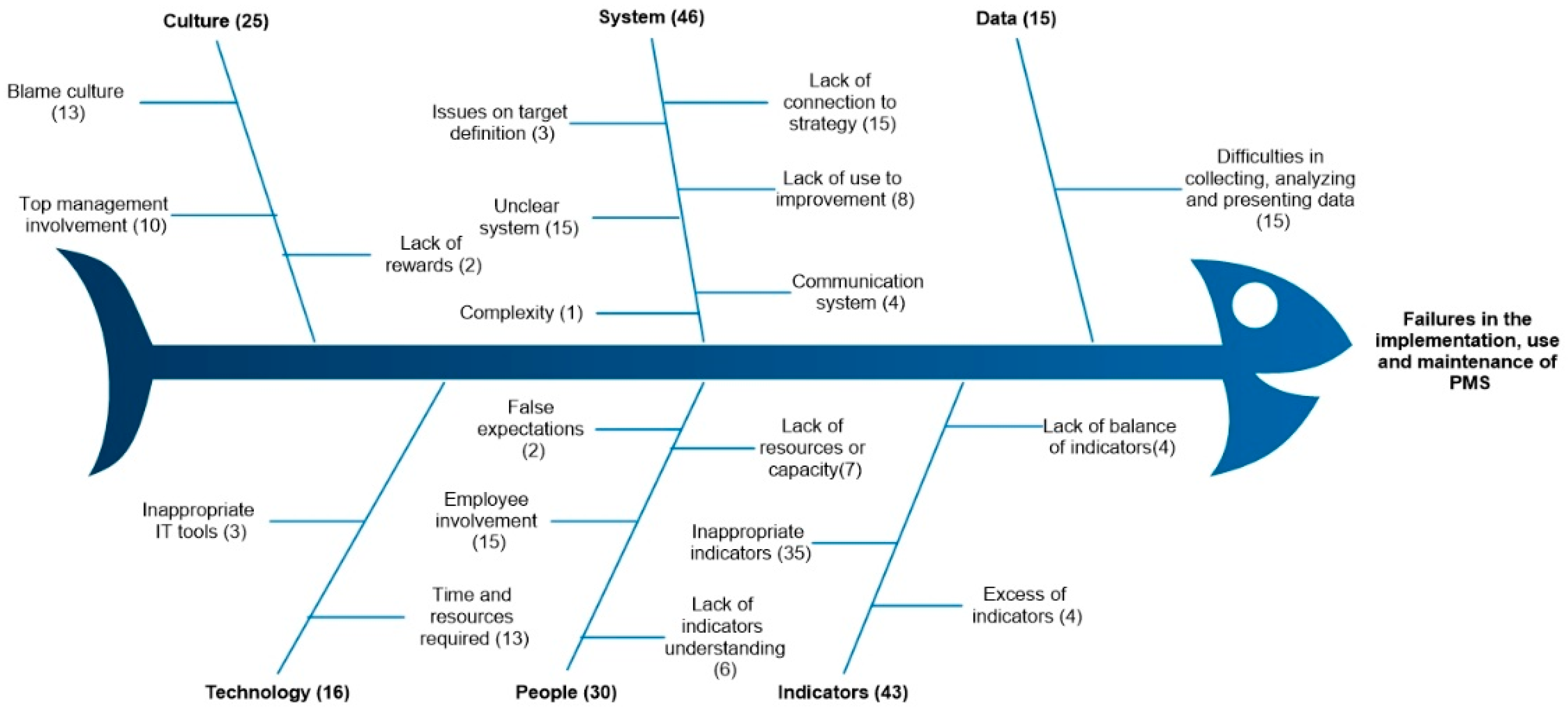

- System (Method): issues related to the methods used to implement and maintain the PMS;

- Data (Material): problems related to data and information management;

- People (Man): issues related to the use of the PMS by people;

- Technology (Machines): issues related to the resources and tools needed to implement and maintain the PMS;

- Indicators (Measurement): problems related to performance measures and indicators;

- Culture (Mother Nature): issues related to the culture of the organization.

3. Results

4. Discussion

4.1. System

- 1.

- Lack of connection to the strategy: This can be an obstacle to the effective functioning of a PMS and can originate in three ways:

- a.

- b.

- c.

- 2.

- Lack of use for improvement: Not using the PMS for continuous improvement makes it useless; it should be used as a support tool for the daily management of the organization [22]. The PMS alone will not translate into automatic improvements; it only makes it possible to identify where improvements can and should be made [20,27]. If no effective improvement process is associated with it, it will become irrelevant to the people in the organization [28,29,30,31].

- 3.

- Issues with target definition: This factor arises from the difficulty of defining targets and comparing performance against them [32]. Failure to define targets properly will affect people’s motivation and the ability of the PMS to support the continuous improvement of the organization. This failure may occur when targets are not based on stakeholder interests, process boundaries and process improvement resources [29]. It may also occur when objectives are not correctly deployed from the top level of the organization to the level where the actual improvement activities take place [29].

- 4.

- Unclear system: vagueness in the performance measurement system can lead to the PMS being used differently from what was intended, dooming it to failure. This lack of clarity can arise in several aspects of the PMS:

- a.

- Failure to define measurement frequency: performance measurement occurs too often or too rarely [23];

- b.

- c.

- Failures in the definition of the PMS: the system has not yet reached the maturity (full definition) required for implementation [22,33], and there may be failures in defining operational performance, in relating performance to the process, or in defining the boundaries of the process [13]. There may also be vagueness in the hierarchical structure and its deployment in the PMS [25,31,33,34], causing uncertainty about who is responsible for performance measurement [35]. One of the causes mentioned is the direct adoption of another existing PMS model [14], which results in a PMS that is not adapted to the organization [19].

- 5.

- Communication system: Communicating performance measurement results to employees plays an important role in involving them in the PMS and in maintaining its relevance. It is essential to ensure good communication between those who report the metrics and those who use them [36]. This communication fails when it is not clear, simple, periodic and formal [19]. To be simple, it should be visual [31]. Failing to explain new processes and their impacts to employees is equally an obstacle to the implementation and maintenance of a PMS [35], as it can lead to a lack of commitment and awareness.

- 6.

- Complexity: The more complex a system is, the more difficult it is to manage and the more resources and effort it takes to maintain [14]. Complexity can also make it harder to communicate the system and its processes to employees, which hinders their involvement.

4.2. Indicators

- 1.

- Inappropriate indicators: Performance indicators themselves can hinder the implementation, use and maintenance of a PMS. The main reasons pointed out are:

- a.

- b.

- c.

- Outdated indicators: historical indicators with dated and irrelevant information [39];

- d.

- Indicators that promote wrong behavior: indicators that promote the wrong kind of performance, indicators of courtesy instead of indicators of performance, indicators of behavior instead of indicators of achievement, and indicators that encourage competition rather than teamwork [37];

- e.

- f.

- g.

- h.

- 2.

- 3.

4.3. People

- 1.

- False expectations: the expectations people form about the PMS can become an obstacle to maintaining it, because they may be disappointed [42]. The organization will not improve just because a PMS has been implemented; an effective improvement process must be associated with it. If performance is only measured and nothing is done to improve it, the PMS may be abandoned, since it will not meet the false expectation of automatic improvement.

- 2.

- Lack of resources or capacity: for a PMS to be implemented and maintained effectively, employees must be educated and trained so that they have the skills needed to understand and use it correctly. Lack of training in, or understanding of, the PMS is an obstacle to its implementation and maintenance [14,21,28,38,41,43], as it can lead to incorrect use of the PMS, resulting in its distortion and eventual abandonment.

- 3.

- Employee commitment/involvement: involvement can fail when employees fear performance measurement [13], which can result in increased resistance to the implementation and use of the PMS [20,39] and/or manipulation of performance data [24,44]. Failure to motivate employees to use the PMS means that there is no commitment to change [34], and if the PMS is not relevant to people [19,30], resistance to its use increases [22,38], condemning it to failure. Performance measurement may also give rise to conflicts and friction between employees [35].

- 4.

- Lack of indicator understanding: employees’ failure to understand performance indicators may result from indicators that are not relevant to them [24], lack of training in using the PMS [19] or overly complex communication of information [35]. This can lead to misuse of performance indicators through incorrect interpretation of their meaning [13]. Poor understanding of indicators can also increase resistance to using them [26,31].

4.4. Culture

- 1.

- Blame culture: using a PMS as a tool to coerce employees is mentioned thirteen times. Using performance measurement as a means of control and of putting pressure on employees creates a blame culture [13,14,28,33,35,42], which makes employees feel threatened [27,30,31,40,45]. This is one of the causes of employee resistance to the PMS and of their lack of involvement in these practices [31]. In organizations with a blame culture, the PMS will not be used as a tool to enable continuous improvement, and it may become a tool for punishing errors [19].

- 2.

- Lack of commitment from top management: top management’s lack of commitment to the PMS [14,20,21,31,34,41], or its treatment of the PMS as a low priority [19,22], can convey to other employees the message that the PMS is not important. Additionally, incorrect understanding or use of information by top management [13,38] can signal to the rest of the organization that top management is not fully committed to the PMS.

- 3.

4.5. Technology

- 1.

- Inadequate IT tools: the lack of adequate IT tools is an obstacle to the implementation and maintenance of a PMS [33,35,36] because it makes collecting, analyzing and presenting data more difficult. This difficulty increases the time and resources required to implement and maintain the PMS.

- 2.

- Time and resources required: the time and resources required to implement and maintain a PMS can be an important obstacle to its effectiveness [17,20,45]. Top management may underestimate the required resources, so that too few are allocated to the PMS [14,34]. The organization may also be limited in the costs and resources it can allocate to the PMS [21,22,24,39,41,42,43]. When insufficient resources are allocated to it, the PMS can be seen as a burden on the organization because it diverts employees from their real responsibilities [42].

4.6. Data

- 1.

- Difficulty in collecting, analyzing and presenting data: this can occur for the following reasons:

- a.

- b.

- c.

- d.

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Imai, M. Gemba Kaizen, 2nd ed.; McGraw-Hill: New York, NY, USA, 1997.

2. Shin, W.S.; Dahlgaard, J.J.; Dahlgaard-Park, S.M.; Kim, M.G. A Quality Scorecard for the era of Industry 4.0. Total Qual. Manag. Bus. Excell. 2018, 29, 959–976.

3. Ante, G.; Facchini, F.; Mossa, G.; Digiesi, S. Developing a key performance indicators tree for lean and smart production systems. IFAC-PapersOnLine 2018, 51, 13–18.

4. Muhammad, U.; Ferrer, B.R.; Mohammed, W.M.; Lastra, J.L.M. An approach for implementing key performance indicators of a discrete manufacturing simulator based on the ISO 22400 standard. In Proceedings of the 2018 IEEE Industrial Cyber-Physical Systems (ICPS), Saint Petersburg, Russia, 15–18 May 2018; pp. 629–636.

5. Womack, J.; Jones, D. Lean Thinking: Banish Waste and Create Wealth in Your Corporation; Simon & Schuster: New York, NY, USA, 1996.

6. Dinis-Carvalho, J.; Macedo, H. Toyota Inspired Excellence Models. IFIP Adv. Inf. Commun. Technol. 2021, 610, 235–246.

7. Gupta, S.; Jain, S.K. A literature review of lean manufacturing. Int. J. Manag. Sci. Eng. Manag. 2013, 8, 241–249.

8. Kaplan, R.S.; Norton, D.P. The Balanced Scorecard: Translating Strategy into Action; Harvard Business School Press: Boston, MA, USA, 1996.

9. Rossi, A.H.G.; Marcondes, G.B.; Pontes, J.; Leitão, P.; Treinta, F.T.; De Resende, L.M.M.; Mosconi, E.; Yoshino, R.T. Lean Tools in the Context of Industry 4.0: Literature Review, Implementation and Trends. Sustainability 2022, 14, 12295.

10. Nagy, J.; Oláh, J.; Erdei, E.; Máté, D.; Popp, J. The Role and Impact of Industry 4.0 and the Internet of Things on the Business Strategy of the Value Chain—The Case of Hungary. Sustainability 2018, 10, 3491.

11. Neely, A.; Gregory, M.; Platts, K. Performance measurement system design: A literature review and research agenda. Int. J. Oper. Prod. Manag. 1995, 15, 80–116.

12. Bititci, U.S. Managing Business Performance; Wiley: New York, NY, USA, 2015.

13. Zairi, M. Measuring Performance for Business Results; Springer Science & Business Media: Berlin/Heidelberg, Germany, 1994.

14. McCunn, P. The Balanced Scorecard...the Eleventh Commandment. Manag. Account. 1998, 76, 34–36. Available online: https://www.proquest.com/trade-journals/balanced-scorecard-eleventh-commandment/docview/195676560/se-2?accountid=39260 (accessed on 31 May 2022).

15. Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G.; The PRISMA Group. Preferred Reporting Items for Systematic Reviews and Meta-Analyses: The PRISMA Statement. PLoS Med. 2009, 6, 2–3.

16. Liliana, L. A new model of Ishikawa diagram for quality assessment. In IOP Conference Series: Materials Science and Engineering; IOP Publishing: Bristol, UK, 2016; Volume 161.

17. Bourne, M.; Neely, A.; Platts, K.; Mills, J. The success and failure of performance measurement initiatives: Perceptions of participating managers. Int. J. Oper. Prod. Manag. 2002, 22, 1288–1310.

18. Watts, T.; McNair-Connolly, C.J. New performance measurement and management control systems. J. Appl. Account. Res. 2012, 13, 226–241.

19. Franco, M.; Bourne, M. Factors that play a role in ‘managing through measures’. Manag. Decis. 2003, 41, 698–710.

20. Bourne, M. Handbook of Performance Measurement; GEE Publishing: London, UK, 2004.

21. Charan, P.; Shankar, R.; Baisya, R.K. Modelling the barriers of supply chain performance measurement system implementation in the Indian automobile supply chain. Int. J. Logist. Syst. Manag. 2009, 5, 614–630.

22. de Waal, A.A.; Counet, H. Lessons learned from performance management systems implementations. Int. J. Product. Perform. Manag. 2009, 58, 367–390.

23. Franceschini, F.; Galetto, M.; Maisano, D. Management by Measurement: Designing Key Indicators and Performance Measurement Systems; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2007.

24. Ghalayini, A.M.; Noble, J.S. The changing basis of performance measurement. Int. J. Oper. Prod. Manag. 1996, 16, 63–80.

25. Dixon, J.R.; Nanni, A.J.; Vollmann, T.E. The New Performance Challenge; Dow Jones-Irwin: New York, NY, USA, 1990.

26. Hatten, K.J.; Rosenthal, S.R. Why-and How-to Systematize Performance Measurement. J. Organ. Excell. 2001, 20, 59–73.

27. Bourne, M.; Neely, A.; Mills, J.; Platts, K. Why some performance measurement initiatives fail: Lessons from the change management literature. Int. J. Bus. Perform. Manag. 2003, 5, 245–269.

28. Kennerley, M.; Neely, A. A framework of the factors affecting the evolution of performance measurement systems. Int. J. Oper. Prod. Manag. 2002, 22, 1222–1245.

29. Schneiderman, A.M. Why balanced scorecards fail. J. Strateg. Perform. Meas. 1999, 2, 6–11.

30. Neely, A.; Bourne, M. Why Measurement Initiatives Fail. Meas. Bus. Excell. 2000, 4, 3–7.

31. Meekings, A. Unlocking the potential of performance measurement: A practical implementation guide. Public Money Manag. 1995, 15, 5–12.

32. Giovannoni, E.; Maraghini, M.P. The challenges of integrated performance measurement systems: Integrating mechanisms for integrated measures. Account. Audit. Account. J. 2013, 26, 978–1008.

33. Bititci, U.; Garengo, P.; Dörfler, V.; Nudurupati, S. Performance Measurement: Challenges for Tomorrow. Int. J. Manag. Rev. 2012, 14, 305–327.

34. Townley, B.; Cooper, D.J.; Oakes, L. Performance Measures and the Rationalization of Organizations. Organ. Stud. 2003, 24, 1045–1071.

35. Okwir, S.; Nudurupati, S.S.; Ginieis, M.; Angelis, J. Performance measurement and management systems: A perspective from complexity theory. Int. J. Manag. Rev. 2018, 20, 731–754.

36. Lohman, C.; Fortuin, L.; Wouters, M. Designing a performance measurement system: A case study. Eur. J. Oper. Res. 2004, 156, 267–286.

37. Brown, M.G. Keeping Score: Using the Right Metrics to Drive World-Class Performance; CRC Press: Boca Raton, FL, USA, 1996.

38. Radu-Alexandru, Ș.; Mihaela, H. Performance Management Systems—Proposing and Testing a Conceptual Model. Stud. Bus. Econ. 2019, 14, 231–244.

39. Nudurupati, S.S.; Bititci, U.S.; Kumar, V.; Chan, F.T.S. State of the art literature review on performance measurement. Comput. Ind. Eng. 2011, 60, 279–290.

40. Wouters, M.; Wilderom, C. Developing performance-measurement systems as enabling formalization: A longitudinal field study of a logistics department. Account. Organ. Soc. 2008, 33, 488–516.

41. Sousa, S.D.; Aspinwall, E.M.; Rodrigues, A.G. Performance measures in English small and medium enterprises: Survey results. Benchmarking Int. J. 2006, 13, 120–134.

42. Gabris, G.T. Recognizing Management Technique Dysfunctions: How Management Tools Often Create More Problems than They Solve. Public Product. Rev. 1986, 10, 3–19.

43. da Costa, M.L.R.; de Souza Giani, E.G.; Galdamez, E.V.C. Vision of the balanced Scorecard in micro, small and medium enterprises. Sist. Gestão 2019, 14, 131–141.

44. Simons, R. Control in an Age of Empowerment; Harvard Business Review Press: Boston, MA, USA, 1995.

45. Bourne, M. Researching performance measurement system implementation: The dynamics of success and failure. Prod. Plan. Control 2005, 16, 101–113.

46. Amaro, P. The Integration of Lean Thinking in the Culture of Portuguese Organizations: Enablers and Inhibitors; University of Minho: Braga, Portugal, 2022.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cunha, F.; Dinis-Carvalho, J.; Sousa, R.M. Performance Measurement Systems in Continuous Improvement Environments: Obstacles to Their Effectiveness. Sustainability 2023, 15, 867. https://doi.org/10.3390/su15010867
