1. Introduction

Mapping land cover distribution and monitoring its dynamics have been identified as important goals in environmental studies [1,2,3,4,5]. Land cover maps provide fundamental information for many applications, including global change analysis, crop yield estimation, and forest management [6,7,8]. Land cover maps can easily be generated from remote sensing images, but ensuring their accuracy is much more difficult [9]. To improve classification accuracy, most land cover products use multi-temporal images as inputs [10,11,12,13,14]. Currently, the primary approach to land cover classification using multi-temporal data is to derive metrics from the time series, such as the phenological characteristics of different vegetation types, and the slope, elevation, maximum, minimum, mean and standard deviation values and tasseled cap transformations of spectral-temporal features and spectral indices [15,16,17,18,19,20,21]. These metrics are then classified using a supervised approach. Although these metrics have been shown to capture well the spectral-temporal features that define the various land cover classes, the use of multi-temporal images introduces other issues that must be solved.

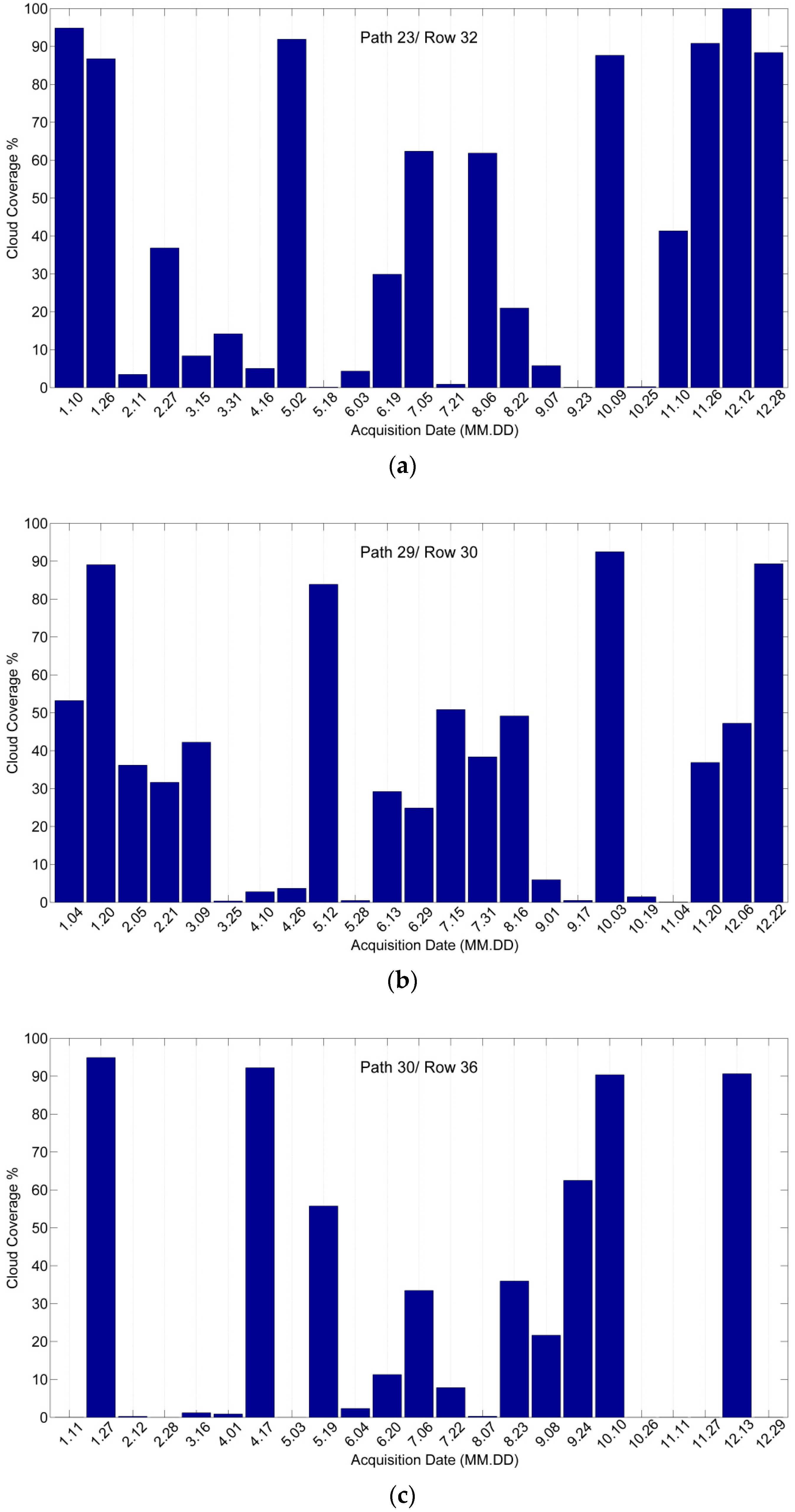

To date, most classification methods require input images with few clouds. However, this requirement cannot be met by sensors with low temporal frequency, such as the Landsat series of satellites. Global land images acquired by the Enhanced Thematic Mapper Plus have about 35% cloud coverage on average [22], indicating that cloud cover is common in optical remote sensing images. Cloud cover makes image analysis more difficult and limits the utility of optical remote sensing images. Two solutions are commonly used to remove clouds from multi-temporal images. The first replaces the cloudy temporal data with data from cloud- and snow-free images acquired in the same season of different years. As a result, most land cover products are mapped at intervals of 5 or 10 years, which significantly reduces their currency [23,24,25]. The second fills cloudy locations using per-pixel temporal compositing procedures, via adjacent temporal interpolation, a time-series curve filter, or inversion of n-day observations to estimate reflectance based on the bidirectional reflectance distribution function [26,27,28,29]. These methods do not increase the information content and may introduce gross errors, particularly when continuous temporal data are unavailable. It is worth noting that, because of the low temporal frequency of the Landsat satellites, the data obtained are rarely completely cloudless, and most images contain some cloud cover. When cloud coverage reaches a threshold such as 30%, the whole image is deemed unusable, so the remaining 70% of the image is discarded as well [30]. If partially cloudy images are retained instead, the number of valid observations differs from pixel to pixel; in other words, for a given satellite time series, the temporal dimension of each pixel may differ even over the same period. Therefore, only methods that can handle time series of unequal length are able to fully exploit the available data.
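The contrast between discarding whole scenes and keeping every clear observation can be made concrete with a toy example. The sketch below is illustrative only: the cloud masks are invented, the function names are hypothetical, and the 30% rule simply mirrors the example threshold mentioned above. It shows that scene-level filtering throws away clear pixels, whereas per-pixel retention yields valid-date lists of different lengths.

```python
from typing import Dict, List

# cloud_masks[t][p] is True when pixel p is cloudy on acquisition date t.
CloudMasks = List[List[bool]]


def scene_level_filter(cloud_masks: CloudMasks, max_cloud: float = 0.30) -> List[int]:
    """Return the dates kept when images whose cloud fraction reaches max_cloud are discarded."""
    kept = []
    for t, mask in enumerate(cloud_masks):
        if sum(mask) / len(mask) < max_cloud:
            kept.append(t)
    return kept


def per_pixel_valid_dates(cloud_masks: CloudMasks) -> Dict[int, List[int]]:
    """Return, for every pixel, the dates on which it is cloud-free (lengths may differ)."""
    n_pixels = len(cloud_masks[0])
    return {
        p: [t for t, mask in enumerate(cloud_masks) if not mask[p]]
        for p in range(n_pixels)
    }


if __name__ == "__main__":
    masks = [
        [False, False, False, False],  # date 0: clear
        [True, True, False, False],    # date 1: 50% cloud
        [False, True, False, False],   # date 2: 25% cloud
    ]
    print(scene_level_filter(masks))     # [0, 2]
    print(per_pixel_valid_dates(masks))  # {0: [0, 2], 1: [0], 2: [0, 1, 2], 3: [0, 1, 2]}
```

Date 1 is dropped as a whole even though pixels 2 and 3 are clear on it, while the per-pixel lists have lengths 2, 1, 3 and 3.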

Using multi-spectral time-series data also causes data redundancy problems, because time-series images of unchanged regions are highly correlated [31,32,33]. This property has been widely exploited in change detection research using remotely sensed data [34,35,36,37,38]. Therefore, it is necessary to reduce the dimensionality of multi-spectral time series prior to land cover classification, particularly for methods that use full-band satellite image time series as input [15,39,40]. Dimensionality reduction (DR) techniques project high-dimensional data into a low-dimensional space that retains as much valuable information as possible while suppressing noise [41,42]. Numerous DR approaches for processing remote sensing hyperspectral images have been proposed [43,44,45,46]; they can be broadly divided into linear and nonlinear methods. Because of multiple scattering among different ground components, the reflectance in remote sensing images is not linearly proportional to the surface area of each component. Consequently, multi-spectral time-series data have intrinsic nonlinear characteristics [39]. However, little research has been devoted to applying nonlinear DR techniques to multi-spectral remote sensing time series.

At present, most multi-temporal land cover classification methods are based on spectral-temporal information and do not consider dependencies between adjacent pixels. It is well known that spatial information is very helpful for improving classification accuracy [47,48,49,50,51]; for example, spectral-spatial classification of hyperspectral images improves classification accuracy compared with purely spectral methods [47,48,49,50,51]. Therefore, how to incorporate spatial information into time-series land cover classification is a problem worth studying. Image segmentation is the main method employed to obtain spatial information [52,53], and many segmentation algorithms exist. However, these algorithms cannot be applied directly to multi-spectral time-series images because they cannot exploit temporal information [54]. A method that performs segmentation based on spectral-temporal information in multi-spectral time-series images is therefore urgently needed.

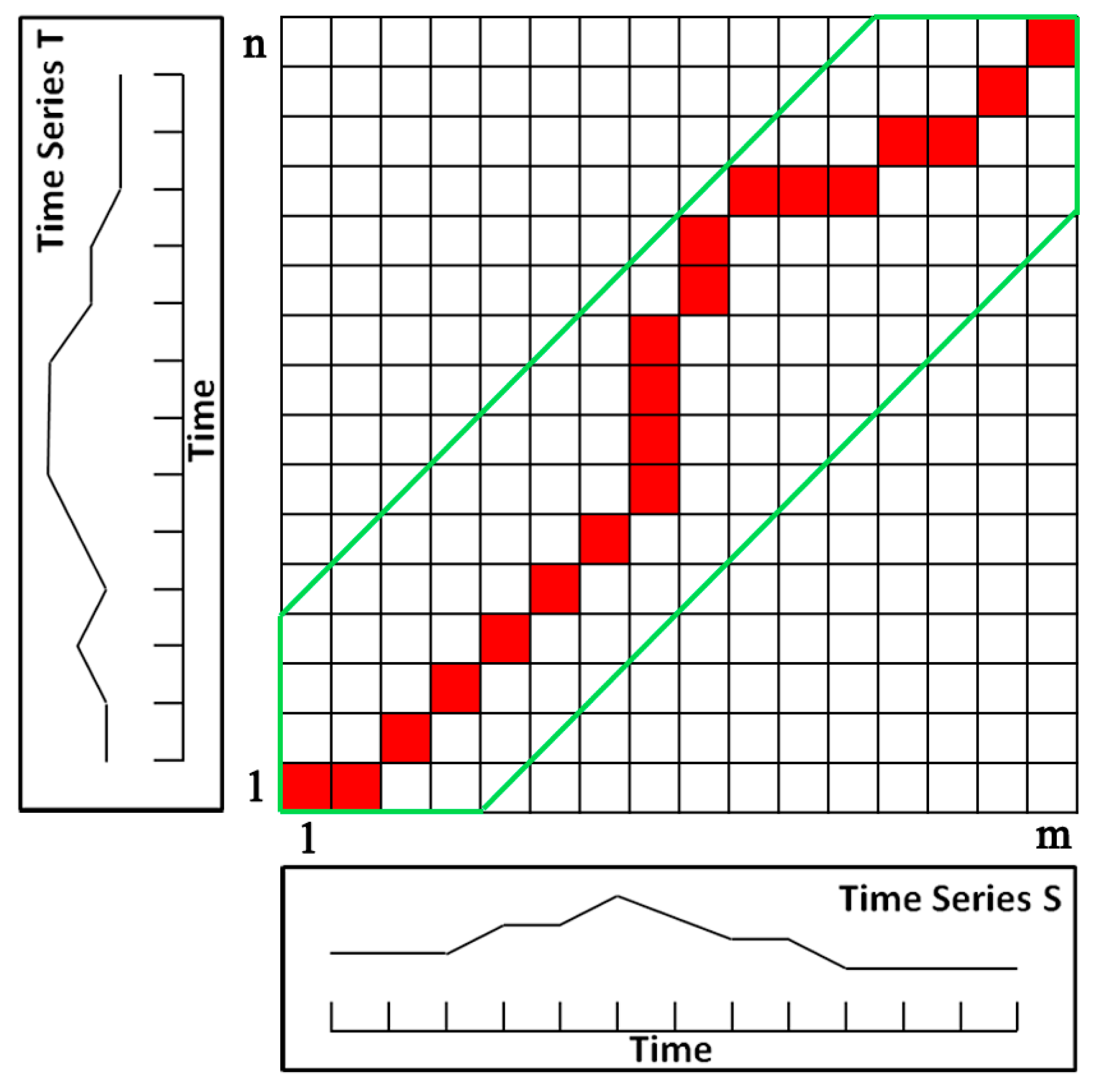

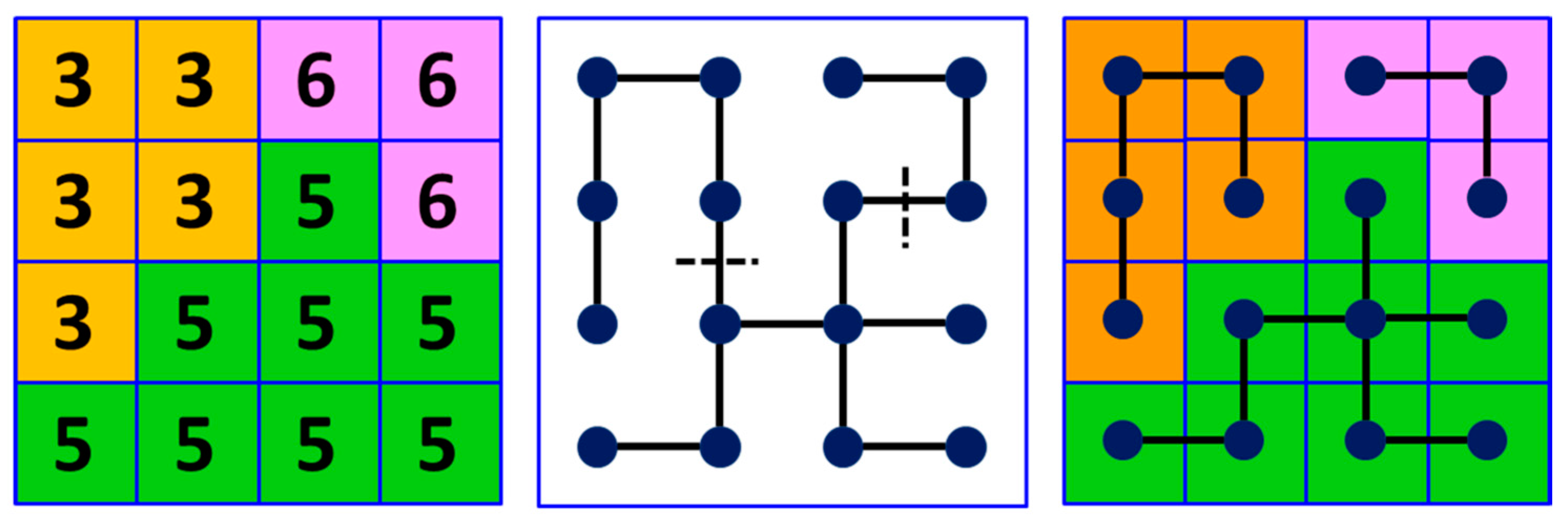

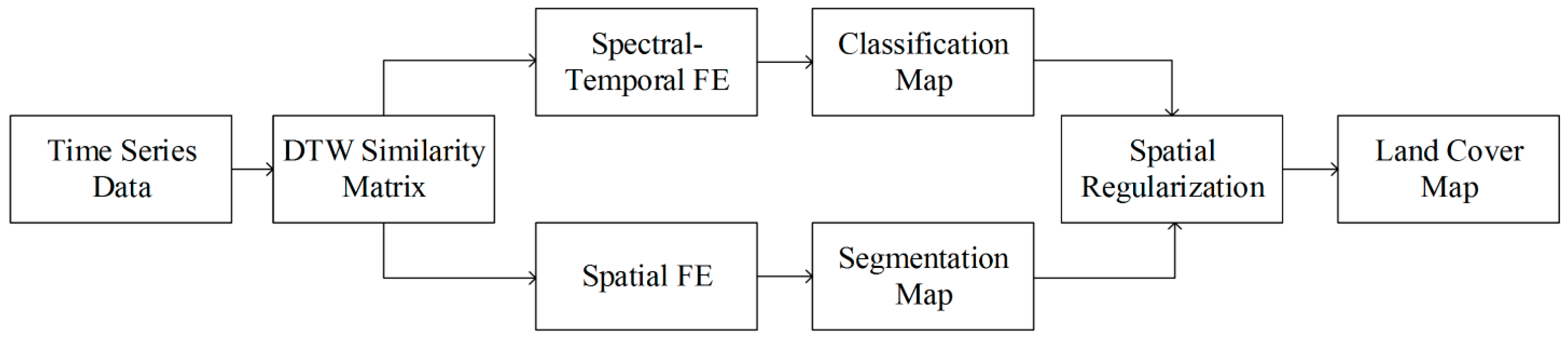

In this paper, we investigated the potential of extracting spectral-temporal and spatial features from satellite time-series data to reliably classify different land cover categories. Our focus is on an automatic and stable classification approach, requiring no human intervention, that improves accuracy and shortens the mapping period of land cover products. In addition, the temporal dimension is fully integrated into the modeling. Our overall research objectives were to solve the high-dimensionality problem of multi-spectral time series using all the available data, and to determine how to extract spatial characteristics and combine them in time-series land cover classification. To achieve these objectives, we developed a new automated time-series land cover classification method based on spectral-temporal and spatial features extracted from all the available data. The methodology is applicable to any satellite time series but is illustrated using Landsat time-series data in this study. Specifically, the dynamic time warping (DTW) similarity measure was applied to satellite image time series to exploit all the available data. Then, a modified version of a nonlinear DR method developed for hyperspectral data was used to extract spectral-temporal features, and a modified image segmentation method was used to extract spatial features from multi-spectral satellite time series. Finally, a classification system based on spatial regularization was established to generate land cover maps from the spectral-temporal and spatial features.

4. Experiments

A series of quantitative classification experiments was undertaken in each study area to verify the performance of the proposed method. Five classification methods were compared, as listed in Table 1. They were chosen for the following reasons. First, LE-DTW and STS are the methods proposed in this paper; comparing them shows whether spatial information improves the accuracy of time-series land cover classification. Second, principal component analysis (PCA) is a typical linear DR method [44] and is compared with LE-DTW to explore the internal structure of satellite time-series data. Third, LE-SAM-R is a refined LE algorithm that applies the spectral angle mapper (SAM) to satellite time-series data after temporal interpolation [39]; comparing it with LE-DTW shows which metric is more effective for mining satellite time-series data. Fourth, temporal interpolation (TI) only interpolates the original time series without DR, and the interpolated data are input directly to the classifier; comparing it with LE-DTW shows whether satellite image time series contain substantial data redundancy.

Because TI, PCA and LE-SAM-R all require cloud-free land surface observations, we filled cloud-covered values in the raw data by temporal interpolation from earlier and later dates. Specifically, if the band values of a pixel were covered by clouds on a given date, they were replaced by the value of a temporally adjacent observation, or by the average of the two temporally adjacent observations when both the preceding and the following observations were available.
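The gap-filling rule above can be sketched per pixel and per band as follows. This is a minimal sketch under our own assumptions: cloud-covered values are marked as None, "temporally adjacent" is taken to mean the nearest valid observation before and after the gap in the raw series, and values with no valid neighbour at all are left as None. The function name fill_cloudy is hypothetical.

```python
from typing import List, Optional


def fill_cloudy(series: List[Optional[float]]) -> List[Optional[float]]:
    """Fill cloud-covered values (None) in one band of one pixel's time series.

    Assumption: the 'temporally adjacent' observations are the nearest valid
    values before and after the gap, searched in the raw (unfilled) series.
    """
    filled: List[Optional[float]] = list(series)
    n = len(series)
    for i, value in enumerate(series):
        if value is not None:
            continue
        # Nearest valid observation before date i.
        prev = next((series[j] for j in range(i - 1, -1, -1) if series[j] is not None), None)
        # Nearest valid observation after date i.
        nxt = next((series[j] for j in range(i + 1, n) if series[j] is not None), None)
        if prev is not None and nxt is not None:
            filled[i] = (prev + nxt) / 2.0  # both neighbours available: average them
        elif prev is not None:
            filled[i] = prev                 # only an earlier observation exists
        elif nxt is not None:
            filled[i] = nxt                  # only a later observation exists
        # With no valid observation at all, the value stays None.
    return filled


if __name__ == "__main__":
    print(fill_cloudy([0.12, None, 0.30, None]))
```

In the example, the second value becomes the average of 0.12 and 0.30, and the last value is copied from 0.30 because no later observation exists. Note that every filled value is an estimate, which is the source of the interpolation errors discussed later.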

Two popular supervised classifiers, random forests (RFs) [88] and the support vector machine (SVM) [89,90], were used to generate pixel-based classification maps in the experiments. The RF classifier is composed of multiple tree classifiers, each of which casts an equal vote for the most popular class of the input vector [91,92]. A total of 500 trees were built for the RFs in this paper. The SVM classifier with a radial basis function (RBF) kernel was implemented using LIBSVM [93].

The same parameter settings were adopted for all three sets of experiments. Training and testing data for classification in the three study areas were extracted from the 2014 CDL. In each study area, only classes covering more than 2% of the area in the CDL data were considered. In each area, 1% of the CDL pixels were randomly selected as training samples and the remaining pixels were used as testing data; in total, 1600, 1000, and 1250 training pixels were used for the Illinois, South Dakota, and Texas study areas, respectively. Conventional accuracy statistics derived from confusion matrices, including the overall accuracy, single-class accuracies and kappa index, were used to evaluate classification performance [3,94]. The sensitivity of the classification to the proportion of training data was also analyzed (using the RF classifier as an example) by randomly selecting between 0.1% and 10% of the CDL pixels in the three study areas. To ensure the reliability of the results, all experiments were repeated 10 times.
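For reference, the overall accuracy and kappa index can be computed from a confusion matrix as below. This is the standard Cohen's kappa formulation, kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e the agreement expected by chance from the marginals. The function name and the convention that rows hold reference classes and columns hold predicted classes are our assumptions, and the example matrix is invented rather than taken from the experiments.

```python
from typing import Sequence, Tuple


def overall_accuracy_and_kappa(cm: Sequence[Sequence[int]]) -> Tuple[float, float]:
    """cm[i][j] counts reference-class-i pixels that were classified as class j."""
    k = len(cm)
    total = sum(sum(row) for row in cm)
    # Observed agreement p_o: fraction of pixels on the diagonal (overall accuracy).
    p_o = sum(cm[i][i] for i in range(k)) / total
    row_totals = [sum(cm[i]) for i in range(k)]
    col_totals = [sum(cm[i][j] for i in range(k)) for j in range(k)]
    # Chance agreement p_e from the row and column marginals.
    p_e = sum(row_totals[i] * col_totals[i] for i in range(k)) / total ** 2
    kappa = (p_o - p_e) / (1 - p_e)
    return p_o, kappa


if __name__ == "__main__":
    cm = [[50, 10],
          [5, 35]]
    oa, kappa = overall_accuracy_and_kappa(cm)
    print(f"OA = {oa:.4f}, kappa = {kappa:.4f}")
```

For the example matrix, p_o = 0.85 and p_e = 0.51, so kappa is 0.34 / 0.49, about 0.694: kappa discounts the part of the agreement that would arise by chance.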

4.1. Performance of RF and SVM in Five Classification Methods

This section presents the classification results and the classification "stability" of the five classification methods combined with the RF and SVM classifiers. The mean overall accuracies and kappa index values of the five classification methods combined with the two classifiers using 1% CDL training data are shown in Table 2. The table shows that the RF and SVM classifiers achieved similar overall accuracies and kappa indices for each classification method in all three study areas. The maximum differences in overall accuracy between the RF and SVM classifiers for the TI, PCA, LE-SAM-R, LE-DTW and STS methods across the three study areas were 1.93%, 1.53%, 2.66%, 3.22% and 0.63%, respectively, and the corresponding differences in kappa index were 0.0217, 0.0857, 0.0395, 0.0456 and 0.0107. Notably, for the STS method, the differences in overall accuracy between the two classifiers in the three study areas were 0.51%, 0.52% and 0.63%, respectively, and the corresponding kappa differences were 0.0096, 0.0079 and 0.0107. Thus, the two classifiers perform most similarly with the STS method, indicating that STS is more stable than the other four classification methods.

4.2. Satellite Image Time Series Data Redundancy

The mean overall accuracies and kappa index values of the TI and LE-DTW classification results using 1% CDL training data are shown in

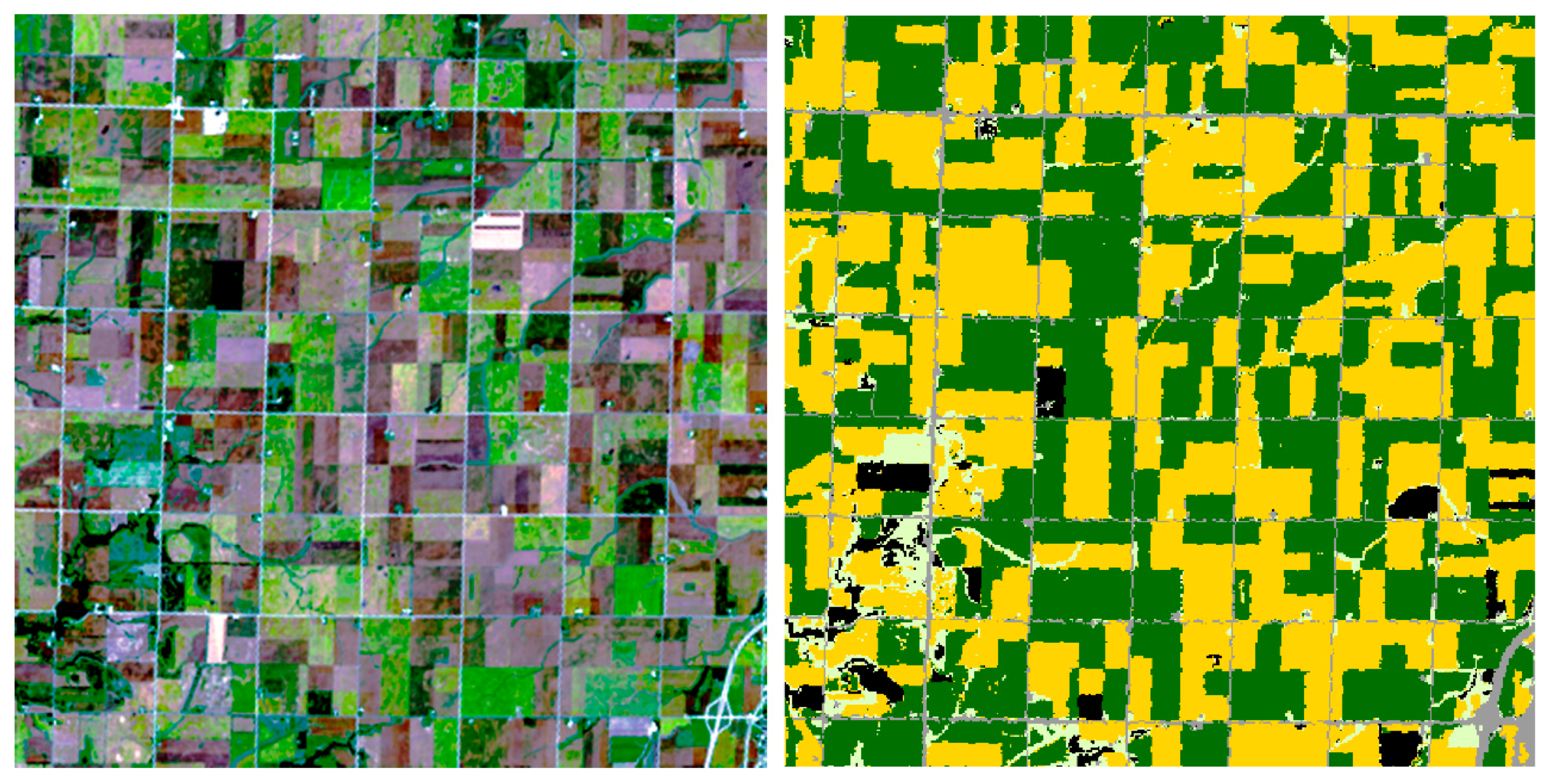

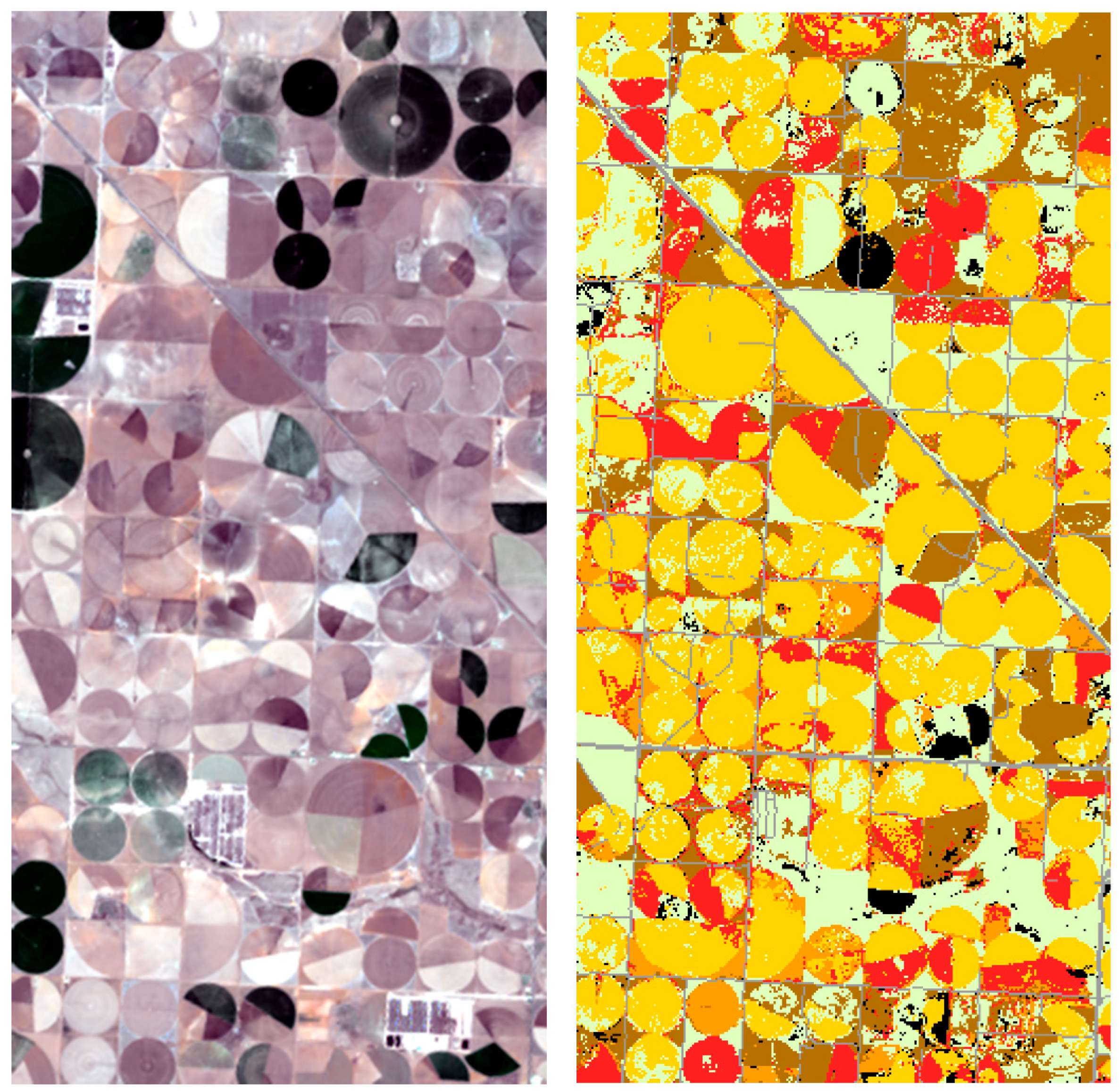

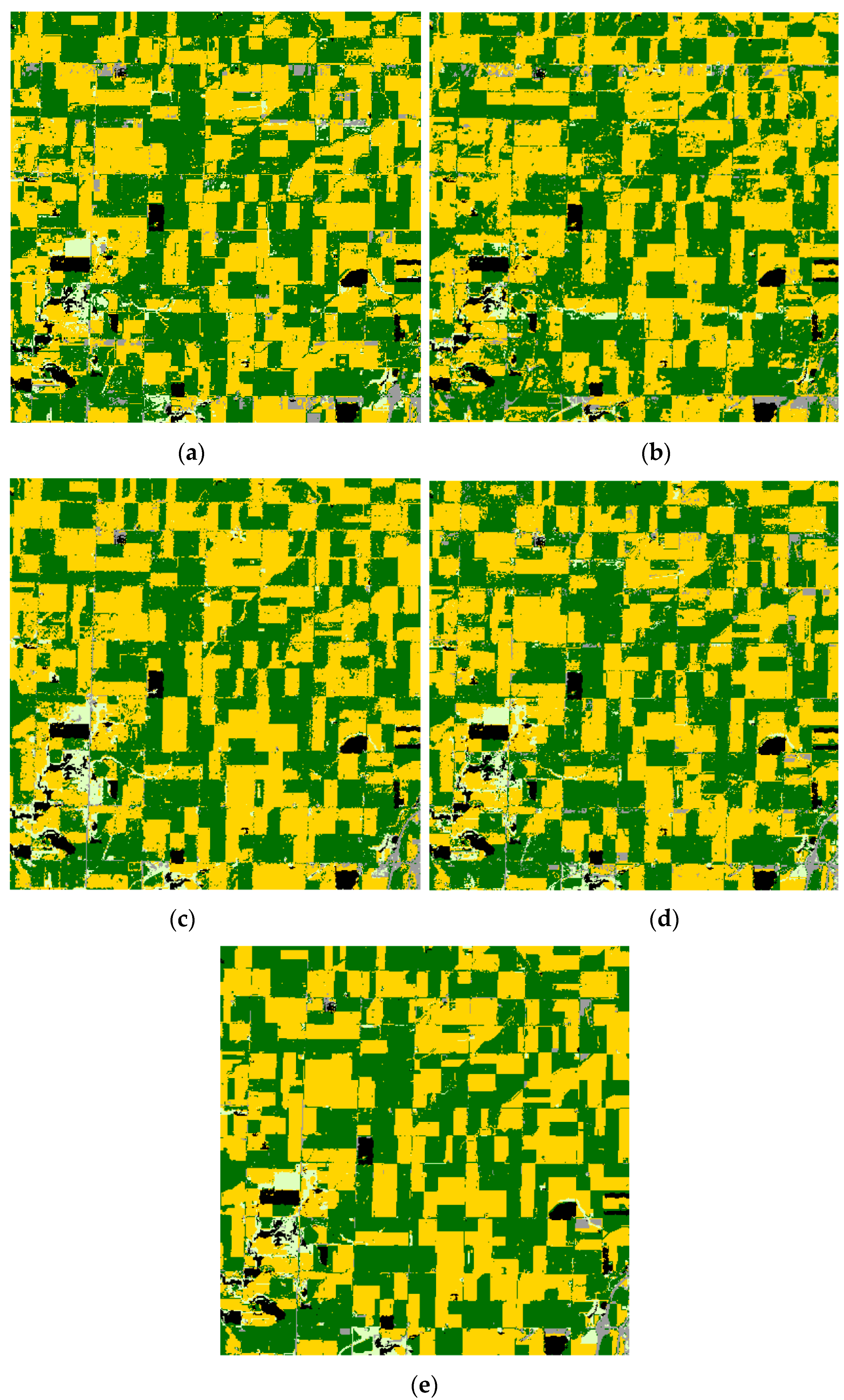

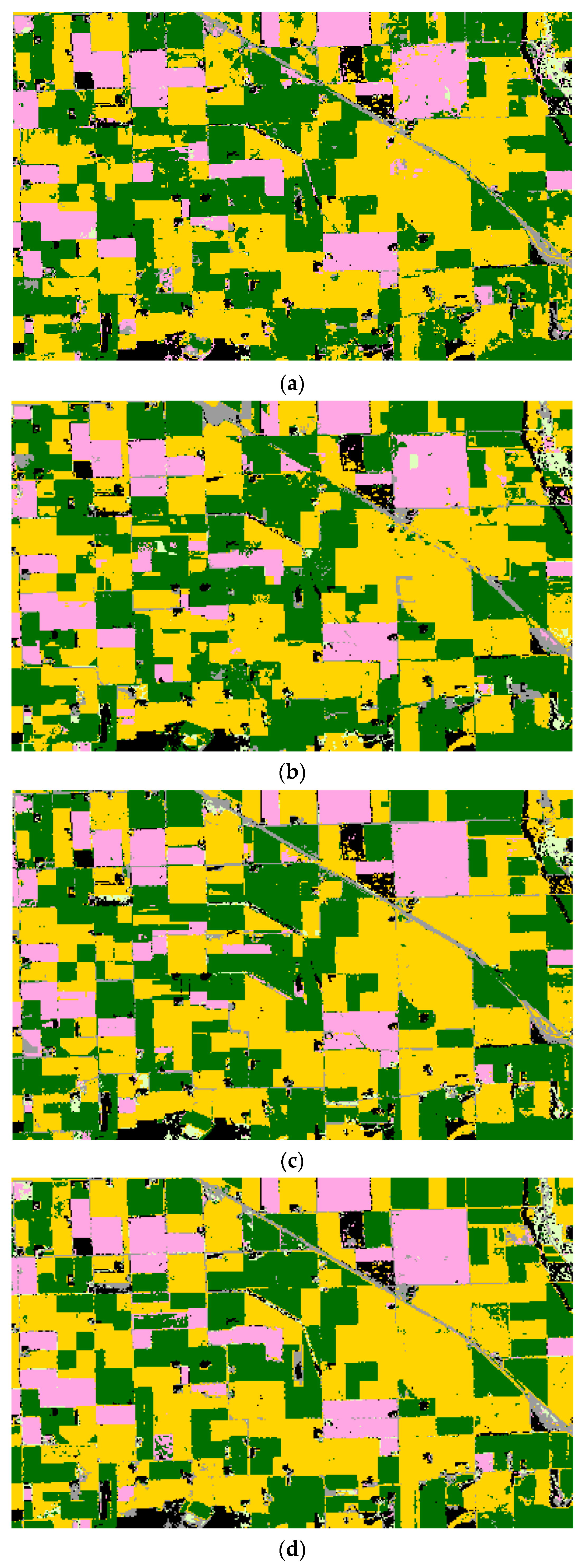

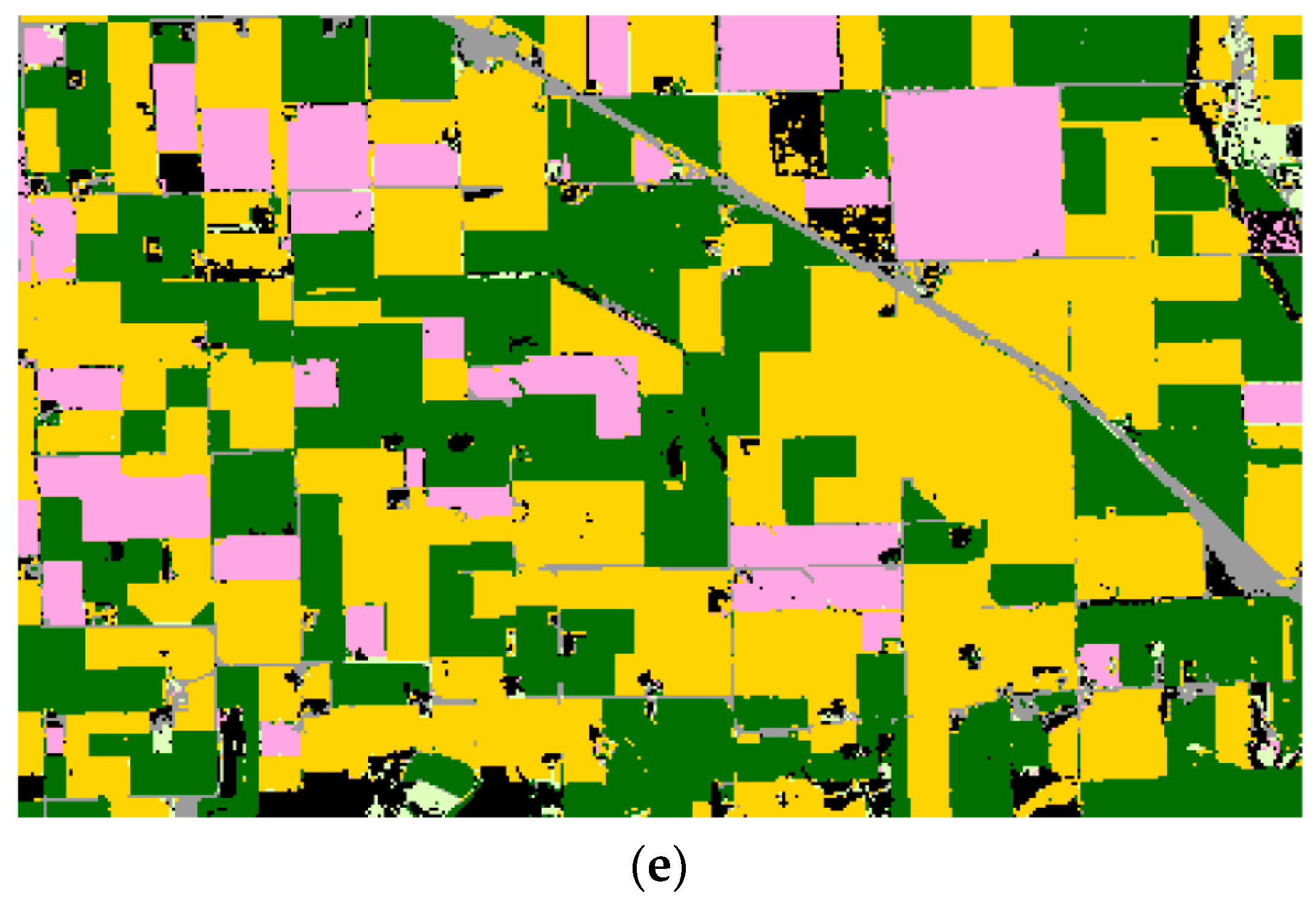

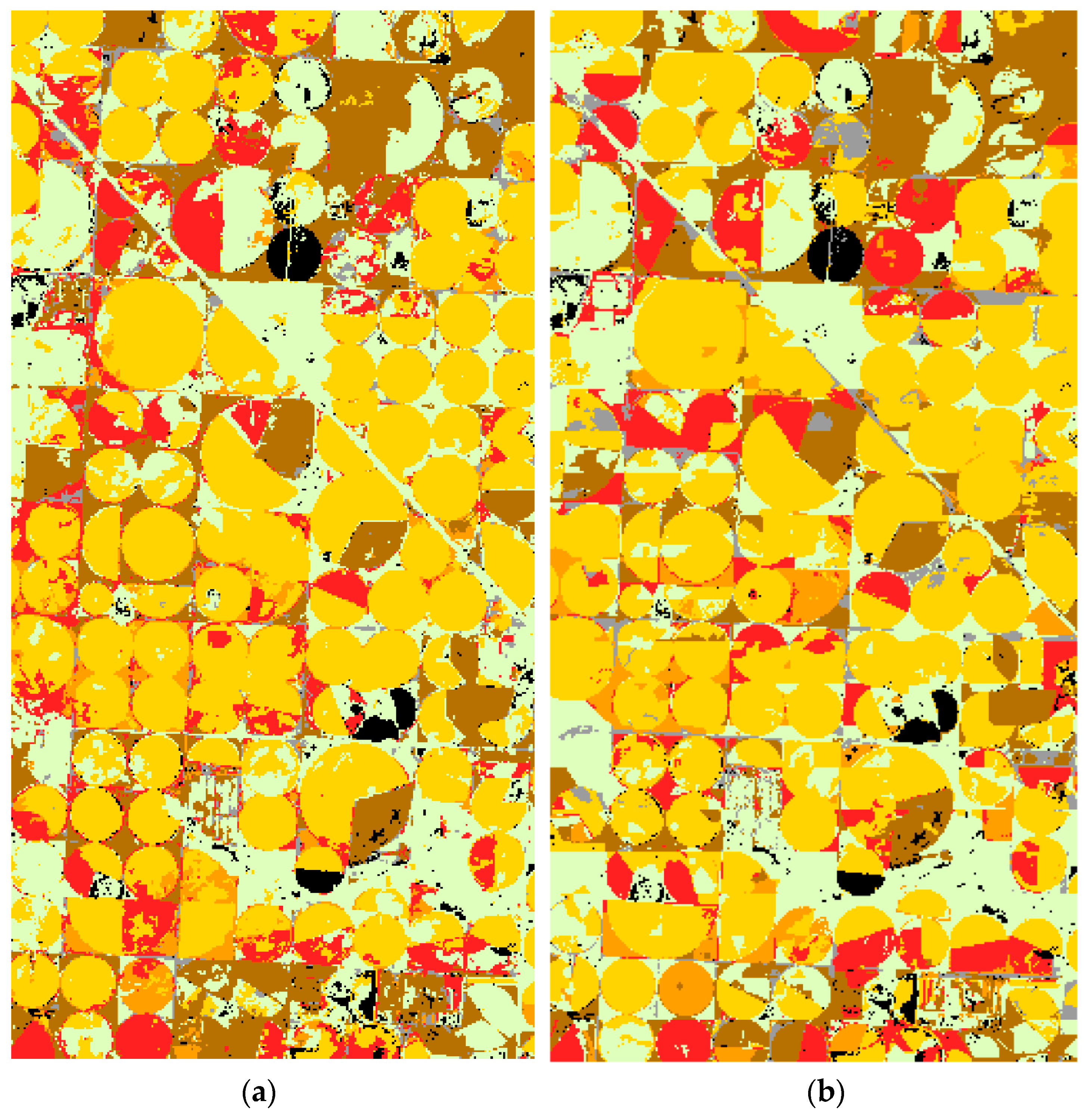

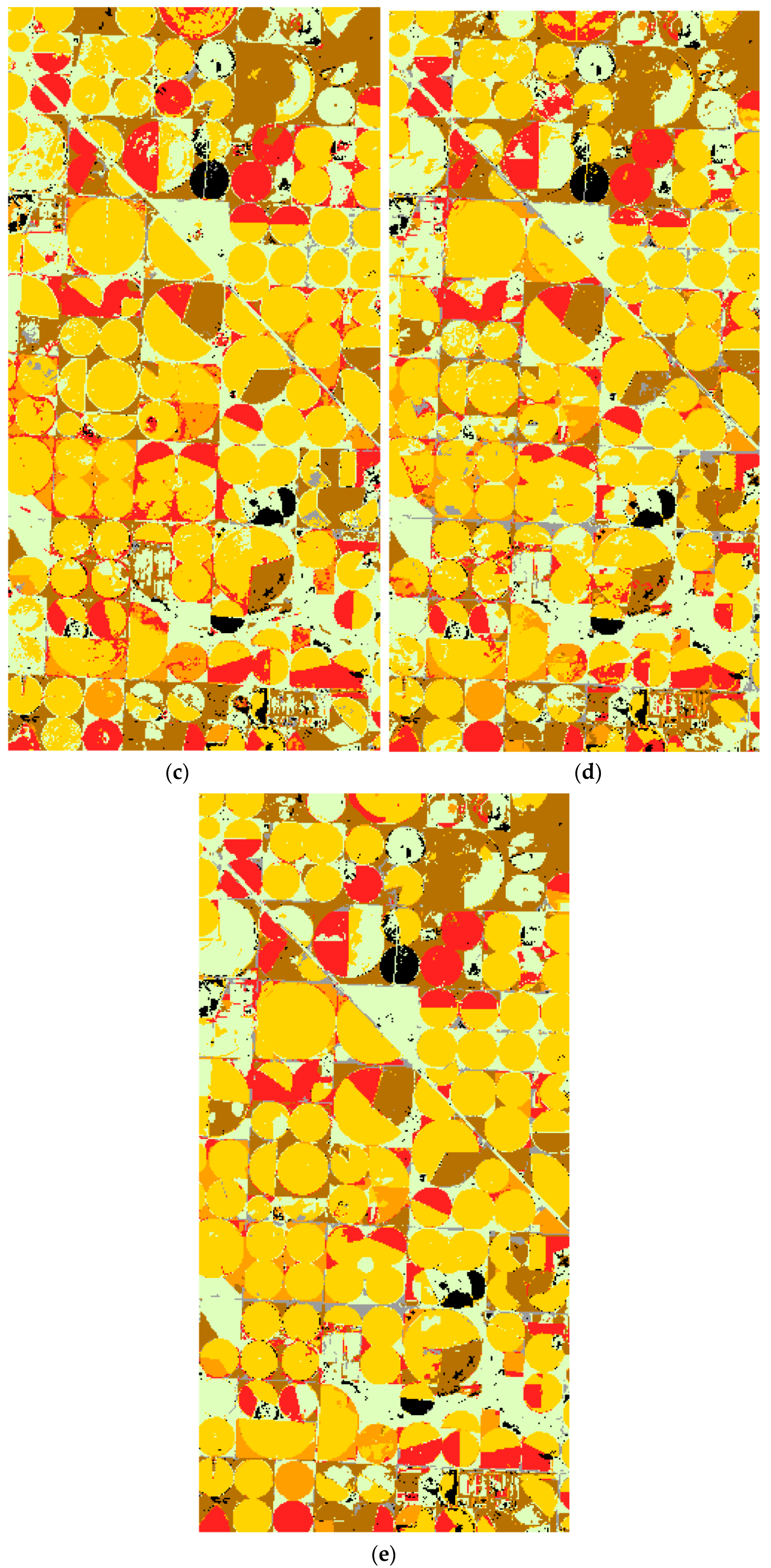

Table 2. The overall classification accuracy of the LE-DTW method is at least 2.72%, 1.77% and 8.40% higher than that of TI in Illinois, South Dakota, and Texas study areas, respectively. In addition, the kappa index of the LE-DTW method are raised more than 0.0658, 0.0325 and 0.1210 compared to the TI method in three study areas. Classification maps constructed using the TI and LE-DTW methods combined with RF classifier in three study areas are shown in

Figure 8a,d,

Figure 9a,d and

Figure 10a,d.

Table 3 and Table 4 summarize the mean producer's and user's accuracies for each major crop category for classifications based on the TI and LE-DTW methods, respectively, combined with the RF classifier. The producer's accuracy indicates the probability that a reference pixel of a given class is classified correctly, and equals the ratio of the number of correctly classified pixels in that class to the total number of reference pixels of that class [95]. The user's accuracy indicates the probability that a pixel assigned to a given class actually belongs to that class, and equals the ratio of the number of correctly classified pixels in that class to the total number of pixels allocated to that class [95]. The producer's and user's accuracies of the TI method for most categories in the three study areas were significantly lower than those of LE-DTW, especially for CDL classes covering less than 10% of each study area. For example, the producer's and user's accuracies of the Illinois grass/pasture class (4.6% of the study area), the South Dakota developed/open space class (5.67% of the study area), and the Texas sorghum class (5.36% of the study area) in the TI method were 25.71%, 9.08% and 31.44% lower than those of the LE-DTW method, respectively.
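Following the ratio definitions above, both accuracies can be read off the rows and columns of a confusion matrix. A minimal sketch, assuming rows hold reference classes and columns hold predicted classes (swap the two if a table uses the opposite convention); the function name is hypothetical and the example matrix is invented.

```python
from typing import List, Sequence, Tuple


def producers_and_users_accuracy(
    cm: Sequence[Sequence[int]],
) -> Tuple[List[float], List[float]]:
    """cm[i][j] counts reference-class-i pixels that were classified as class j."""
    k = len(cm)
    producers, users = [], []
    for c in range(k):
        correct = cm[c][c]
        reference_total = sum(cm[c])                       # row sum: true pixels of class c
        allocated_total = sum(cm[i][c] for i in range(k))  # column sum: pixels labelled c
        producers.append(correct / reference_total if reference_total else float("nan"))
        users.append(correct / allocated_total if allocated_total else float("nan"))
    return producers, users


if __name__ == "__main__":
    pa, ua = producers_and_users_accuracy([[50, 10], [5, 35]])
    print([round(v, 3) for v in pa], [round(v, 3) for v in ua])
```

For the example matrix, the producer's accuracies are 50/60 and 35/40, and the user's accuracies are 50/55 and 35/45. A small class with few training samples typically shows up as a low value in one or both lists, as with the minor CDL classes discussed above.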

For satellite image time series, the classification accuracy obtained from data after DR is higher than that obtained from data without DR. There are several possible reasons. First, the time-series data contain redundant information, which causes the Hughes phenomenon: with a limited number of training samples, classification accuracy degrades once the feature dimension grows too large [42]. Second, temporal interpolation of the time series introduces new errors, especially when continuous temporal data are unavailable. Third, the LE-DTW method provides dimensionality-reduced data with desirable classification properties. Therefore, it is appropriate to apply DR techniques to satellite image time series prior to land cover classification.

4.3. Satellite Image Time Series Nonlinear Characteristics

The mean overall accuracies and kappa index values of the PCA and LE-DTW classification results using 1% CDL training data are shown in Table 2. The overall classification accuracy of the LE-DTW method, combined with either the RF or the SVM classifier, is more than 6% higher than that of PCA in all study areas, and its kappa index is also higher by more than 0.04. Classification maps constructed using the PCA method combined with the RF classifier in the three study areas are shown in Figure 8b, Figure 9b and Figure 10b.

Table 5 summarizes the mean producer's and user's accuracies of classification based on the PCA method combined with the RF classifier for each major crop category. For the PCA DR method, the producer's and user's accuracies exceeded 76%, 86%, and 50% for all CDL classes covering more than 10% of the study area in Illinois, South Dakota, and Texas, respectively. However, the producer's and user's accuracies of the Illinois developed/open space class (5.29% of the study area), the South Dakota grass/pasture class (2.10% of the study area) and the Texas developed/open space class (4.92% of the study area) ranged from only 11% to 45%. The low accuracy of the developed/open space and grass/pasture classes has two main causes. First, these classes cover small areas and therefore provide few training samples, which makes it difficult to establish reasonable classification rules when training the classifiers. Second, these natural land and vegetation classes have less pronounced phenological characteristics than the cropland classes.

For the LE-DTW method, the producer's and user's accuracies for all categories in the three study areas were significantly higher than those of PCA. This was expected, because satellite image time series have intrinsic nonlinear characteristics; LE-DTW, as a nonlinear DR method, is therefore better suited than linear DR methods to solving the high-dimensionality problem of satellite image time series. In addition, multi-spectral time-series data require cloud removal before PCA can be applied, and errors introduced by this pre-processing step may be another reason why PCA is less accurate than LE-DTW.
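To illustrate the nonlinear DR step, the sketch below implements standard Laplacian eigenmaps on a precomputed pairwise distance matrix, which could hold DTW distances between pixel time series. It is not the paper's LE-DTW implementation: the k-nearest-neighbour graph, the heat-kernel weights, and the parameters n_neighbors and t are our assumptions, and the neighbourhood graph is assumed to be connected. It requires NumPy.

```python
import numpy as np


def laplacian_eigenmaps(dist: np.ndarray, n_components: int = 2,
                        n_neighbors: int = 5, t: float = 1.0) -> np.ndarray:
    """Embed n samples from an n x n distance matrix (e.g. pairwise DTW distances)."""
    n = dist.shape[0]
    masked = dist.astype(float)        # astype returns a copy
    np.fill_diagonal(masked, np.inf)   # a sample is not its own neighbour
    neighbours = np.argsort(masked, axis=1)[:, :n_neighbors]
    w = np.zeros((n, n))
    for i in range(n):
        for j in neighbours[i]:
            w[i, j] = np.exp(-dist[i, j] ** 2 / t)  # heat-kernel edge weight
    w = np.maximum(w, w.T)  # undirected graph: keep an edge chosen by either end
    degree = w.sum(axis=1)
    d_inv_sqrt = 1.0 / np.sqrt(degree)
    # L y = lambda D y is solved through the symmetric normalized Laplacian
    # I - D^{-1/2} W D^{-1/2}, whose eigenvectors v give y = D^{-1/2} v.
    lap_sym = np.eye(n) - d_inv_sqrt[:, None] * w * d_inv_sqrt[None, :]
    _, eigvecs = np.linalg.eigh(lap_sym)  # eigenvalues in ascending order
    y = d_inv_sqrt[:, None] * eigvecs
    # Column 0 is the trivial constant solution (eigenvalue 0); skip it.
    return y[:, 1:n_components + 1]
```

Unlike PCA, which finds a single global linear projection, this embedding only trusts distances between near neighbours, so it can follow curved structure in the data. On two well-separated groups of samples, the first returned coordinate takes opposite signs for the two groups.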

4.4. Satellite Image Time Series Metric

The mean overall accuracies and kappa index values of the LE-SAM-R classification results generated using 1% CDL training data are shown in

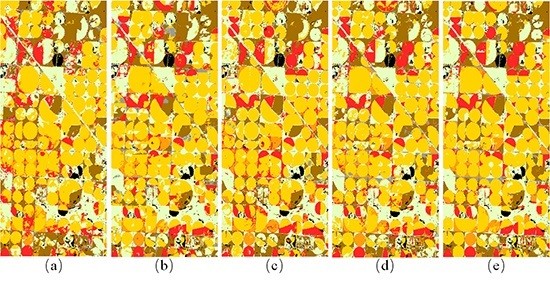

Table 2. The overall classification accuracies and kappa index values of the LE-SAM-R method were lower than those of LE-DTW in all the three study areas. The largest relative improvement using the LE-DTW method was in the Texas study area. LE-DTW combined with RF classifier had a 3.26% greater overall accuracy and a 0.0450 increase in kappa index than LE-SAM-R combined with RF classifier. The classification maps for the LE-SAM-R method combined with RF classifier in the three study areas are shown in

Figure 8c,

Figure 9c and

Figure 10c.

Table 6 summarizes the mean producer's and user's accuracies of the classification based on the LE-SAM-R method combined with the RF classifier for each major crop category. The producer's and user's accuracies exceeded 81%, 88%, and 70% for all CDL classes covering more than 10% of the study area in Illinois, South Dakota, and Texas, respectively. However, the producer's accuracies of LE-SAM-R for most categories were lower than those of the LE-DTW method.

These results suggest that the DTW distance is better suited than the SAM distance to measuring the similarity of multi-spectral time-series data. Because DTW can compare time series of different lengths, the DTW metric ensures that all data from cloud-free regions in each temporal image are used. This is of great importance for sensors with low observation frequency. Notably, the LE-DTW method does not need to reconstruct values in cloud-covered regions, whereas the LE-SAM-R method requires this reconstruction step; in other words, LE-DTW uses all the available data directly. Therefore, LE-DTW is a DR method that is better suited to satellite image time series.
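The property that matters here is that DTW aligns two sequences of different lengths without filling gaps. Below is a minimal sketch of classic DTW between the cloud-free multi-band observations of two pixels, assuming Euclidean distance between band vectors as the local cost. The paper's exact DTW variant (for example, any constraint on acquisition dates) is not specified in this section, so treat this as an illustration rather than the LE-DTW code; dtw_distance is a hypothetical name.

```python
import math
from typing import List, Sequence

Observation = Sequence[float]  # band reflectances of one pixel on one date


def dtw_distance(s: List[Observation], q: List[Observation]) -> float:
    """Classic DTW between two multi-band series that may differ in length.

    All observations must have the same number of bands (math.dist checks this).
    """
    n, m = len(s), len(q)
    # cost[i][j]: minimal cumulative cost of aligning s[:i] with q[:j].
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = math.dist(s[i - 1], q[j - 1])  # Euclidean distance between band vectors
            cost[i][j] = local + min(cost[i - 1][j],      # s advances, q[j-1] reused
                                     cost[i][j - 1],      # q advances, s[i-1] reused
                                     cost[i - 1][j - 1])  # both advance
    return cost[n][m]


if __name__ == "__main__":
    a = [(1.0,), (2.0,), (3.0,)]
    b = [(1.0,), (2.0,), (2.0,), (3.0,)]  # one extra valid date
    print(dtw_distance(a, b))
```

The example returns 0.0: the repeated observation in the longer series is absorbed by the warping path, so no value has to be invented for the missing date. A SAM comparison, by contrast, needs vectors of equal length, which is why LE-SAM-R depends on temporal interpolation first.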

4.5. Satellite Image Time Series Spatial Features

The mean overall accuracies and kappa index values of the STS classification results generated using 1% CDL training data are shown in Table 2. The STS method provides higher overall classification accuracies than LE-DTW in most cases. Notably, compared with the LE-DTW method (combined with the RF classifier), the STS method (combined with the RF classifier) improved the overall classification accuracy by 1.66%, 4.01%, and 1.13% in the Illinois, South Dakota, and Texas study areas, respectively, and the corresponding kappa index values increased by 0.0258, 0.0594 and 0.0136. Similarly, the STS method combined with the SVM classifier outperformed the LE-DTW method combined with the SVM classifier. Classification maps generated using the STS method combined with the RF classifier in the three study areas are shown in Figure 8e, Figure 9e and Figure 10e.

Table 7 summarizes the mean producer's and user's accuracies of classification based on the STS method combined with the RF classifier for each major crop category. Compared with the producer's and user's accuracies in Table 6, the corresponding accuracies in Table 7 were higher for the great majority of classes. Furthermore, for CDL classes covering less than 10% of each study area, the producer's and user's accuracies of the STS method were also greatly improved. For example, the producer's accuracies of the Illinois developed/open space class (5.29% of the study area), the South Dakota developed/open space class (5.67% of the study area), and the Texas sorghum class (5.36% of the study area) in the STS method were 8.73%, 19.06% and 2.4% higher than those of the LE-DTW method, respectively.

These results show that the MST-DTW method can effectively extract spatial features from satellite image time series using all available data. The STS method, which incorporates the spatial information extracted by MST-DTW, substantially improves the land cover classification accuracy of multi-spectral time-series data. Because STS can mine a variety of features from limited data for classification, it is particularly effective for remote sensing image time series with low temporal resolution.
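The MST-DTW procedure itself is not detailed in this section. As a loose illustration of graph-based segmentation that, like DTW, tolerates pixels with different numbers of valid observations, the sketch below builds a 4-neighbour pixel graph weighted by DTW distance, extracts its minimum spanning tree with Kruskal's algorithm, and cuts tree edges heavier than a fixed threshold. The fixed-threshold cut, the 4-neighbourhood, and the function names are our assumptions, not the authors' criterion.

```python
import math
from typing import Dict, List, Sequence, Tuple

Series = List[Sequence[float]]  # cloud-free band vectors of one pixel, any length


def dtw(s: Series, q: Series) -> float:
    """Classic DTW with Euclidean local cost; s and q may differ in length."""
    n, m = len(s), len(q)
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost[i][j] = math.dist(s[i - 1], q[j - 1]) + min(
                cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def mst_segment(grid: List[List[Series]], threshold: float) -> List[List[int]]:
    """Label pixels by cutting DTW-weighted MST edges heavier than threshold."""
    rows, cols = len(grid), len(grid[0])

    def idx(r: int, c: int) -> int:
        return r * cols + c

    # 4-neighbour edges weighted by the DTW dissimilarity of the two pixels.
    edges: List[Tuple[float, int, int]] = []
    for r in range(rows):
        for c in range(cols):
            if c + 1 < cols:
                edges.append((dtw(grid[r][c], grid[r][c + 1]), idx(r, c), idx(r, c + 1)))
            if r + 1 < rows:
                edges.append((dtw(grid[r][c], grid[r + 1][c]), idx(r, c), idx(r + 1, c)))

    parent = list(range(rows * cols))

    def find(x: int) -> int:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    # Kruskal's algorithm: accept edges in increasing weight if they join two trees.
    mst = []
    for w, a, b in sorted(edges):
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[ra] = rb
            mst.append((w, a, b))

    # Keep only MST edges up to the threshold; the remaining trees are the segments.
    parent = list(range(rows * cols))
    for w, a, b in mst:
        if w <= threshold:
            parent[find(a)] = find(b)

    labels: Dict[int, int] = {}
    return [[labels.setdefault(find(idx(r, c)), len(labels)) for c in range(cols)]
            for r in range(rows)]
```

With a fixed threshold this reduces to single-linkage grouping of similar neighbouring pixels; an adaptive merging criterion would replace the `w <= threshold` test without changing the rest of the structure.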

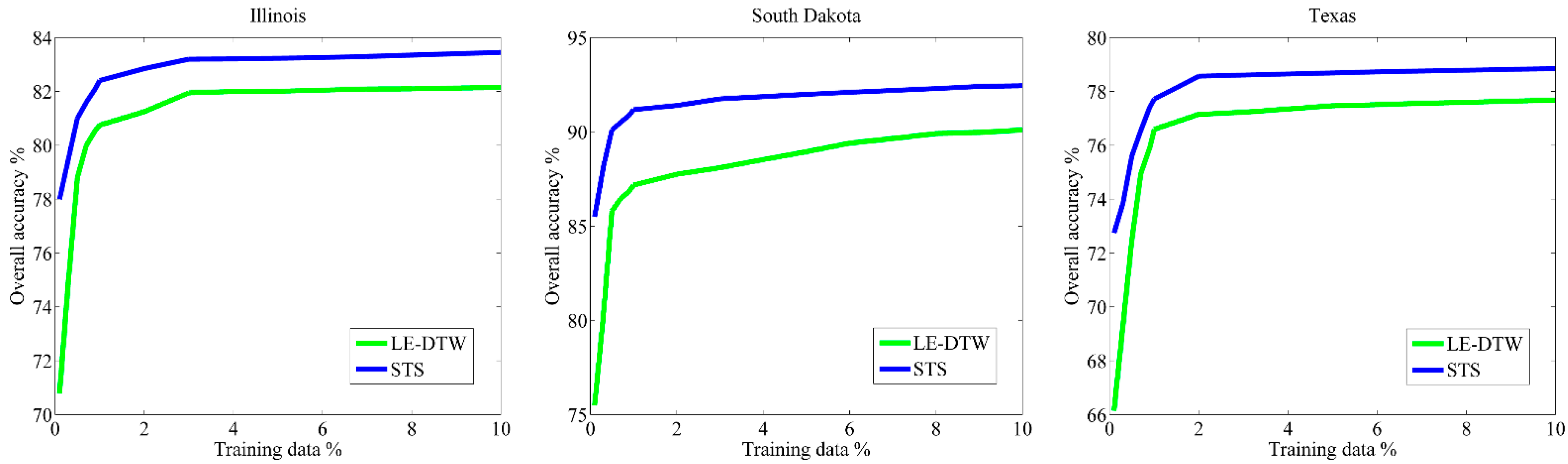

4.6. Classification Sensitivity to the Amount of Training Data

Classification accuracy depends directly on the amount of training data [96]. Careful selection of training samples may reduce the size of the training set without reducing supervised classification accuracy [97]. With fewer training samples, a more effective classification method should still reach a given accuracy that a less effective one cannot [39].

Figure 11 illustrates the overall classification accuracies of the LE-DTW (green) and STS (blue) methods using different percentages of training data. At each training percentage, 10 independent classifications were performed. As can be seen from Figure 11, the overall classification accuracies of the STS approach are consistently higher than those of the LE-DTW approach. Moreover, with fewer training samples, the STS method performs more stably than the LE-DTW method. The overall classification accuracies of both approaches stabilize when approximately 1% of the CDL pixels are used as training samples. The standard deviations of the overall accuracies for each set of 10 classifications are not shown, because they were less than 1% for every set of experiments in the three study areas except those using 0.1% training data, whose standard deviations were less than 2.1%. These results show that the STS method, which uses spectral-temporal-spatial features, is more effective than the LE-DTW method, which uses spectral-temporal features only.

5. Conclusions

Obtaining accurate and timely land cover maps is a difficult problem in remote sensing. Producing such maps requires mining as much useful information as possible from limited data to improve land cover classification accuracy. In this study, a novel method was developed for land cover classification using spectral-temporal-spatial data at an annual scale, assuming that land cover does not change within one year. The approach uses all the available multi-spectral time-series data to construct a graph based on the DTW similarity measure, and then applies graph theory-based dimensionality reduction and segmentation methods to extract spectral-temporal and spatial features for identifying and refining land cover classes. In addition, the proposed method is automated and requires few training samples to perform well. These advantages are significant because land cover classification is labor-intensive and difficult to automate [98,99]. Therefore, the classification method introduced in this paper should prove useful for improving the accuracy and shortening the mapping period of land cover classification. The proposed method was applied to Landsat multi-spectral reflectance time-series data, which have a revisit period of 16 days. A series of supervised classification experiments using USDA CDL land cover maps as reference data was undertaken in three study areas in the United States that differ in land cover complexity and in the amount of invalid data.

Although the STS classification method provided the anticipated classification results in this study, the computation required by the LE-DTW method in the STS system grows rapidly with the spatial dimensions of the image, because DTW similarities are computed between pairs of pixels. In fact, this problem occurs in most manifold learning DR algorithms [100,101,102,103,104]. It is a particular issue when manifold learning DR algorithms are applied to land cover classification at continental to global scales [13,105]. Most of the computation in the MST-DTW algorithm consists of building a DTW similarity matrix in the same manner as LE-DTW; therefore, MST-DTW does not significantly increase the computational load of the STS system. Reducing these computational requirements is a direction for future research. This could be accomplished, for example, by employing the landmark points strategy [106] developed for the ISOMAP (isometric mapping) global nonlinear DR method [67,107,108], by GPU (Graphics Processing Unit) accelerated computing [109], or by applying the STS method to image subsets and then merging the classified subsets. In addition, we are currently conducting land cover change detection experiments on long-term multi-spectral time-series data containing invalid values, using spectral-temporal-spatial features extracted by the STS system.
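A back-of-the-envelope count shows why building a full DTW similarity matrix becomes prohibitive and what a landmark strategy could save. The sketch assumes one DTW evaluation per unordered pixel pair for the full matrix and one per pixel-landmark pair for a landmark approximation; the 1000 × 1000 scene and the 1000 landmarks are hypothetical numbers chosen for illustration, and the function names are ours.

```python
def full_matrix_dtw_evaluations(n_pixels: int) -> int:
    """DTW calls for a symmetric n x n similarity matrix (diagonal excluded)."""
    return n_pixels * (n_pixels - 1) // 2


def landmark_dtw_evaluations(n_pixels: int, n_landmarks: int) -> int:
    """DTW calls when every pixel is compared only with a fixed set of landmarks."""
    return n_pixels * n_landmarks


if __name__ == "__main__":
    n = 1000 * 1000  # hypothetical 1000 x 1000 pixel scene
    full = full_matrix_dtw_evaluations(n)
    landmark = landmark_dtw_evaluations(n, 1000)
    print(full, landmark, full // landmark)
```

The full matrix needs about 5 × 10^11 DTW evaluations, versus 10^9 with 1000 landmarks, roughly a 500-fold reduction. The full-matrix cost grows with the square of the pixel count, whereas the landmark cost grows only linearly for a fixed number of landmarks.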