3-D Characterization of Vineyards Using a Novel UAV Imagery-Based OBIA Procedure for Precision Viticulture Applications

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Fields and UAV Flights

2.2. DSM and Orthomosaic Generation

2.3. OBIA Algorithm

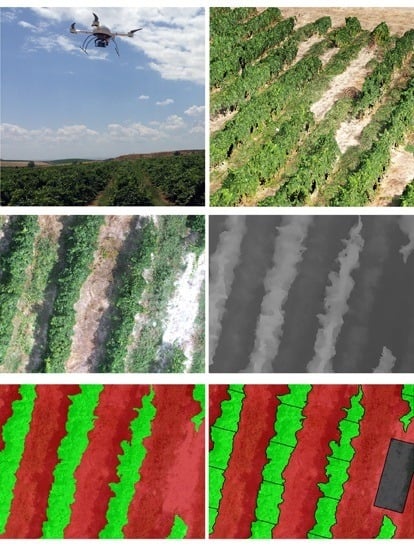

- Vine classification: A chessboard segmentation algorithm was used to segment the DSM into square objects 0.5 m on a side (Figure 3). The grid size was chosen based on the typical vine row width in trellis systems, which is around 0.7 m. Most of the objects covering vine regions also included bare-soil pixels, which made the standard deviation of the DSM (SDDSM) within those objects very large. Thus, objects with an SDDSM greater than 0.15 m were classified as “vine candidates”; this threshold was selected as well suited for vine detection based on previous studies. The remaining objects were pre-classified as bare soil (Figure 3). Square objects that covered only vine regions had a low SDDSM; to classify them correctly as “vine candidates”, the OBIA algorithm also assigned them to this class when they were surrounded by “vine candidates”.

  Each individual “vine candidate” was then analyzed automatically at the pixel level to refine the vine classification. First, the “vine candidate” objects were segmented into pixel-sized objects with the chessboard segmentation process. Next, the algorithm classified every pixel as vineyard or bare soil by comparing its DSM value with that of the surrounding bare soil (Figure 3). Based on previous studies, a threshold of 0.8 m was used to classify actual vine objects accurately; this value also avoided misclassifying green cover as vine.

  Analyzing each “vine candidate” individually proved well suited for vine classification, because only the altitude of the surrounding soil was used for discrimination; this prevents the errors due to field slope that could arise if the average soil altitude were used instead. Moreover, using chessboard segmentation instead of any other segmentation option, such as the multi-resolution algorithm, decreases the computational time of the full process, because segmentation is by far the slowest task of the full OBIA procedure [21]. Thus, this configuration, which uses the DSM band rather than the spectral information as the reference for segmentation together with chessboard segmentation, produced a notable increase in processing speed without penalizing segmentation accuracy [21].

- Gap detection in vine rows: Once the vines were classified, gaps within the rows were detected in four steps: (1) estimation of row orientation; (2) image gridding into strips following the row orientation; (3) strip classification; and (4) gap detection. First, a new level was created above the previous one to calculate the main orientation of the vines and then to generate a mesh of 0.5 m wide strips with the same orientation as the vine rows. Then, a looping process was performed until all the strips were analyzed: in each iteration, the strip in the upper level with the highest percentage of vine objects in the lower level, together with its neighboring strips, was classified as “vine row”, and the strips adjacent to these were then classified as “no row” to simplify the process.


- Computing the vine geometric features: Once the gaps in the rows were identified, the vine rows were divided into 2 m long objects, each corresponding to one vine given the vine spacing. This parameter is user configurable so that the algorithm can be adapted to different vine spacings. Before the geometric features were calculated, the height of every pixel was obtained individually by comparing its DSM value with the average DSM value of the surrounding bare soil area. The algorithm then automatically calculated the geometric features (length, width, projected area, height and volume) of each vine as follows: the highest pixel height within the vine was taken as the vine height, and the vine volume was calculated by summing the volumes of all the pixels belonging to the vine, each obtained by multiplying the pixel area by the pixel height. Finally, the geometric features of each vine, together with its identifier and location, were automatically exported as vector (e.g., shapefile) and table (e.g., Excel or ASCII) files.
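The vine-classification step can be illustrated with a minimal NumPy sketch. It is not the authors' OBIA rule set, which this section does not show. It assumes the DSM is a 2-D array of altitudes in metres; the function name `classify_vines`, the parameter `soil_radius`, the rule that a low-SD square lying between two candidates becomes a candidate, and the use of bare-soil squares within that radius as the "surrounding soil" are all hypothetical choices made for illustration. The 0.5 m squares, the 0.15 m SDDSM threshold and the 0.8 m height threshold come from the text.

```python
import numpy as np

SD_THRESHOLD_M = 0.15     # SDDSM above which a square becomes a "vine candidate"
HEIGHT_THRESHOLD_M = 0.8  # height above the surrounding soil required to keep a pixel as vine


def classify_vines(dsm: np.ndarray, cell_px: int, soil_radius: int = 2) -> np.ndarray:
    """Return a boolean vine mask for a DSM given in metres.

    cell_px: side of each chessboard square in pixels (0.5 m / ground sampling distance).
    soil_radius: hypothetical search radius, in squares, for the surrounding bare soil.
    """
    rows, cols = dsm.shape[0] // cell_px, dsm.shape[1] // cell_px
    # Chessboard segmentation: view the DSM as a rows x cols grid of square objects.
    blocks = dsm[: rows * cell_px, : cols * cell_px].reshape(rows, cell_px, cols, cell_px)
    sd = blocks.std(axis=(1, 3))
    mean = blocks.mean(axis=(1, 3))
    candidate = sd > SD_THRESHOLD_M

    # Assumed neighbor rule: a low-SD (canopy-only) square lying between two
    # candidates, left/right or above/below, is also a candidate.
    padded = np.pad(candidate, 1, constant_values=False)
    left_right = padded[1:-1, :-2] & padded[1:-1, 2:]
    up_down = padded[:-2, 1:-1] & padded[2:, 1:-1]
    candidate = candidate | left_right | up_down

    # Mean altitude of each bare-soil square; NaN marks candidate squares.
    soil_alt = np.where(candidate, np.nan, mean)
    fallback = np.nanmean(soil_alt) if (~candidate).any() else np.nan

    mask = np.zeros(dsm.shape, dtype=bool)
    for r, c in zip(*np.nonzero(candidate)):
        window = soil_alt[max(r - soil_radius, 0): r + soil_radius + 1,
                          max(c - soil_radius, 0): c + soil_radius + 1]
        local_soil = np.nanmean(window) if np.isfinite(window).any() else fallback
        # Pixel-level refinement: keep only pixels well above the local soil altitude.
        mask[r * cell_px:(r + 1) * cell_px, c * cell_px:(c + 1) * cell_px] = (
            blocks[r, :, c, :] - local_soil > HEIGHT_THRESHOLD_M
        )
    return mask
```

Candidate squares that turn out to contain only soil (for example, at a gap inside a row) are rejected by the pixel-level threshold. Because each square is compared only with nearby soil, a gentle field slope shifts soil and canopy pixels together relative to the local reference, which is the advantage the text attributes to the local comparison.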
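The strip-classification loop of the gap-detection step (step 3) can be sketched as follows, under several assumptions that are not in the text: the vine mask has already been rotated so that rows run along the image x-axis (steps 1 and 2), each strip is `strip_px` image rows wide (0.5 m divided by the pixel size), the "neighboring strips" are the ones immediately above and below, and the hypothetical `min_fraction` parameter stops the loop once no remaining strip contains vine. The names `classify_strips`, `VINE_ROW` and `NO_ROW` are illustrative only. Step 4 is not detailed in this section, so the sketch stops at the strip labels.

```python
import numpy as np

UNCLASSIFIED, VINE_ROW, NO_ROW = 0, 1, 2


def classify_strips(vine_mask: np.ndarray, strip_px: int, min_fraction: float = 0.0) -> np.ndarray:
    """Label strips of `strip_px` image rows as VINE_ROW or NO_ROW.

    Assumes vine rows already run along the x-axis; `min_fraction` is a
    hypothetical stopping criterion.
    """
    n = vine_mask.shape[0] // strip_px
    # Fraction of vine pixels in each strip (the lower-level vine objects).
    fraction = vine_mask[: n * strip_px].reshape(n, strip_px, -1).mean(axis=(1, 2))
    labels = np.full(n, UNCLASSIFIED)
    while (labels == UNCLASSIFIED).any():
        remaining = np.where(labels == UNCLASSIFIED, fraction, -1.0)
        best = int(np.argmax(remaining))
        if remaining[best] <= min_fraction:
            labels[labels == UNCLASSIFIED] = NO_ROW  # nothing vine-like left
            break
        for i in (best - 1, best, best + 1):        # best strip and its neighbors
            if 0 <= i < n and labels[i] == UNCLASSIFIED:
                labels[i] = VINE_ROW
        for i in (best - 2, best + 2):              # strips adjacent to that group
            if 0 <= i < n and labels[i] == UNCLASSIFIED:
                labels[i] = NO_ROW
    return labels
```

Marking the strips next to each "vine row" group as "no row" prevents the next iteration from picking a strip that belongs to the same physical row.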
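The per-vine feature computation can be sketched in a few lines. The text fixes the 2 m object length, the vine height as the maximum pixel height, and the volume as the sum of pixel area times pixel height. Everything else is an assumption for illustration: a height raster has already been obtained (pixel DSM minus the average DSM of the surrounding bare soil), a single row runs along the x-axis, and length and width are taken as the bounding-box extent of the vine pixels in each object. The names `VineFeatures` and `vine_features` are hypothetical.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class VineFeatures:
    length_m: float
    width_m: float
    area_m2: float
    max_height_m: float
    mean_height_m: float
    volume_m3: float


def vine_features(height: np.ndarray, mask: np.ndarray, pixel_m: float,
                  vine_spacing_m: float = 2.0) -> list[VineFeatures]:
    """Per-vine features for one row running along the x-axis (assumed layout).

    height: pixel heights above the surrounding bare soil, in metres.
    mask: boolean vine mask with the same shape as `height`.
    """
    seg_px = max(1, round(vine_spacing_m / pixel_m))  # user-configurable vine spacing
    pixel_area = pixel_m ** 2
    features = []
    for start in range(0, mask.shape[1], seg_px):
        m = mask[:, start:start + seg_px]
        if not m.any():
            continue  # no vine pixels in this object (a gap)
        rows, cols = np.nonzero(m)
        h = height[:, start:start + seg_px][m]
        features.append(VineFeatures(
            length_m=float(cols.max() - cols.min() + 1) * pixel_m,
            width_m=float(rows.max() - rows.min() + 1) * pixel_m,
            area_m2=float(m.sum()) * pixel_area,
            max_height_m=float(h.max()),                # vine height = highest pixel
            mean_height_m=float(h.mean()),
            volume_m3=float(np.sum(h * pixel_area)),    # sum of pixel area x pixel height
        ))
    return features
```

Summing pixel area times height makes the volume equal to projected area times mean height, which is consistent with the rows of the exported feature table (e.g., 0.51 m² × 1.49 m ≈ 0.76 m³).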

2.4. Validation

2.4.1. Grapevine Classification and Gap Detection

2.4.2. Grapevine Height

3. Results and Discussion

3.1. Vine Classification

3.2. Vine Gap Detection

3.3. Vine Height Quantification

3.4. Potential Algorithm Result Applications

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Bramley, R.G.V.; Hamilton, R.P. Understanding variability in winegrape production systems. Aust. J. Grape Wine Res. 2004, 10, 32–45.

- Arnó, J.; Casasnovas, J.A.M.; Dasi, M.R.; Rosell, J.R. Precision viticulture. Research topics, challenges and opportunities in site-specific vineyard management. Span. J. Agric. Res. 2009, 7, 779–790.

- Schieffer, J.; Dillon, C. The economic and environmental impacts of precision agriculture and interactions with agro-environmental policy. Precis. Agric. 2014, 16, 46–61.

- Tey, Y.S.; Brindal, M. Factors influencing the adoption of precision agricultural technologies: A review for policy implications. Precis. Agric. 2012, 13, 713–730.

- Ballesteros, R.; Ortega, J.F.; Hernández, D.; Moreno, M.Á. Characterization of Vitis vinifera L. Canopy Using Unmanned Aerial Vehicle-Based Remote Sensing and Photogrammetry Techniques. Am. J. Enol. Vitic. 2015.

- Hall, A.; Lamb, D.W.; Holzapfel, B.; Louis, J. Optical remote sensing applications in viticulture—A review. Aust. J. Grape Wine Res. 2002, 8, 36–47.

- Johnson, L.F.; Roczen, D.E.; Youkhana, S.K.; Nemani, R.R.; Bosch, D.F. Mapping Vineyard leaf area with multispectral satellite imagery. Comput. Electron. Agric. 2003, 38, 33–44.

- Weiss, M.; Baret, F. Using 3D Point Clouds Derived from UAV RGB Imagery to Describe Vineyard 3D Macro-Structure. Remote Sens. 2017, 9, 111.

- Baluja, J.; Diago, M.P.; Balda, P.; Zorer, R.; Meggio, F.; Morales, F.; Tardaguila, J. Assessment of vineyard water status variability by thermal and multispectral imagery using an Unmanned Aerial Vehicle (UAV). Irrig. Sci. 2012, 30, 511–522.

- Albetis, J.; Duthoit, S.; Guttler, F.; Jacquin, A.; Goulard, M.; Poilvé, H.; Féret, J.-B.; Dedieu, G. Detection of Flavescence dorée Grapevine Disease Using Unmanned Aerial Vehicle (UAV) Multispectral Imagery. Remote Sens. 2017, 9, 308.

- Mathews, A.J.; Jensen, J.L.R. Visualizing and Quantifying Vineyard Canopy LAI Using an Unmanned Aerial Vehicle (UAV) Collected High Density Structure from Motion Point Cloud. Remote Sens. 2013, 5, 2164–2183.

- Poblete-Echeverría, C.; Olmedo, G.F.; Ingram, B.; Bardeen, M. Detection and Segmentation of Vine Canopy in Ultra-High Spatial Resolution RGB Imagery Obtained from Unmanned Aerial Vehicle (UAV): A Case Study in a Commercial Vineyard. Remote Sens. 2017, 9, 268.

- Rey-Caramés, C.; Diago, M.P.; Martín, M.P.; Lobo, A.; Tardaguila, J. Using RPAS Multi-Spectral Imagery to Characterise Vigour, Leaf Development, Yield Components and Berry Composition Variability within a Vineyard. Remote Sens. 2015, 7, 14458–14481.

- Nex, F.; Remondino, F. UAV for 3D mapping applications: A review. Appl. Geomat. 2013, 6, 1–15.

- Mancini, F.; Dubbini, M.; Gattelli, M.; Stecchi, M.; Fabbri, S.; Gabbianelli, G. Using Unmanned Aerial Vehicles (UAV) for High-Resolution Reconstruction of Topography: The Structure from Motion Approach on Coastal Environments. Remote Sens. 2013, 5, 6880–6898.

- Rosnell, T.; Honkavaara, E. Point cloud generation from Aerial image data acquired by a quadrocopter type micro unmanned aerial vehicle and a digital still camera. Sensors 2012, 12, 453–480.

- Geipel, J.; Link, J.; Claupein, W. Combined spectral and spatial modeling of corn yield based on aerial images and crop surface models acquired with an unmanned aircraft system. Remote Sens. 2014, 6, 10335–10355.

- Bendig, J.; Bolten, A.; Bennertz, S.; Broscheit, J.; Eichfuss, S.; Bareth, G. Estimating Biomass of Barley Using Crop Surface Models (CSMs) Derived from UAV-Based RGB Imaging. Remote Sens. 2014, 6, 10395–10412.

- De Castro, A.I.; Torres-Sánchez, J.; Peña, J.M.; Jiménez-Brenes, F.M.; Csillik, O.; López-Granados, F. An Automatic Random Forest-OBIA Algorithm for Early Weed Mapping between and within Crop Rows Using UAV Imagery. Remote Sens. 2018, 10, 285.

- Torres-Sánchez, J.; López-Granados, F.; Serrano, N.; Arquero, O.; Peña, J.M. High-Throughput 3-D Monitoring of Agricultural-Tree Plantations with Unmanned Aerial Vehicle (UAV) Technology. PLoS ONE 2015, 10, e0130479.

- Jiménez-Brenes, F.M.; López-Granados, F.; de Castro, A.I.; Torres-Sánchez, J.; Serrano, N.; Peña, J.M. Quantifying pruning impacts on olive tree architecture and annual canopy growth by using UAV-based 3D modelling. Plant Methods 2017, 13, 55.

- Matese, A.; Gennaro, S.F.D.; Berton, A. Assessment of a canopy height model (CHM) in a vineyard using UAV-based multispectral imaging. Int. J. Remote Sens. 2017, 38, 2150–2160.

- Blaschke, T.; Hay, G.J.; Kelly, M.; Lang, S.; Hofmann, P.; Addink, E.; Queiroz Feitosa, R.; van der Meer, F.; van der Werff, H.; van Coillie, F.; et al. Geographic Object-Based Image Analysis—Towards a new paradigm. ISPRS J. Photogramm. 2014, 87, 180–191.

- Peña, J.M.; Torres-Sánchez, J.; de Castro, A.I.; Kelly, M.; López-Granados, F. Weed Mapping in Early-Season Maize Fields Using Object-Based Analysis of Unmanned Aerial Vehicle (UAV) Images. PLoS ONE 2013, 8, e77151.

- Souza, C.H.W.; Lamparelli, R.A.C.; Rocha, J.V.; Magalhães, P.S.G. Mapping skips in sugarcane fields using object-based analysis of unmanned aerial vehicle (UAV) images. Comput. Electron. Agric. 2017, 143, 49–56.

- Castillejo-González, I.L.; Peña-Barragán, J.M.; Jurado-Expósito, M.; Mesas-Carrascosa, F.J.; López-Granados, F. Evaluation of pixel- and object-based approaches for mapping wild oat (Avena sterilis) weed patches in wheat fields using QuickBird imagery for site-specific management. Eur. J. Agron. 2014, 59, 57–66.

- Mathews, A.J. Object-based spatiotemporal analysis of vine canopy vigor using an inexpensive unmanned aerial vehicle remote sensing system. J. Appl. Remote Sens. 2014, 8, 085199.

- López-Granados, F.; Torres-Sánchez, J.; Castro, A.-I.D.; Serrano-Pérez, A.; Mesas-Carrascosa, F.-J.; Peña, J.-M. Object-based early monitoring of a grass weed in a grass crop using high resolution UAV imagery. Agron. Sustain. Dev. 2016, 36, 67.

- Laliberte, A.S.; Goforth, M.A.; Steele, C.M.; Rango, A. Multispectral Remote Sensing from Unmanned Aircraft: Image Processing Workflows and Applications for Rangeland Environments. Remote Sens. 2011, 3, 2529–2551.

- Laliberte, A.S.; Rango, A.; Herrick, J.E.; Fredrickson, E.L.; Burkett, L. An object-based image analysis approach for determining fractional cover of senescent and green vegetation with digital plot photography. J. Arid Environ. 2007, 69, 1–14.

- Hellesen, T.; Matikainen, L. An Object-Based Approach for Mapping Shrub and Tree Cover on Grassland Habitats by Use of LiDAR and CIR Orthoimages. Remote Sens. 2013, 5, 558–583.

- Van Den Eeckhaut, M.; Kerle, N.; Poesen, J.; Hervás, J. Object-oriented identification of forested landslides with derivatives of single pulse LiDAR data. Geomorphology 2012, 173–174, 30–42.

- Franklin, S.E.; Ahmed, O.S. Deciduous tree species classification using object-based analysis and machine learning with unmanned aerial vehicle multispectral data. Int. J. Remote Sens. 2017, 1–10.

- Meier, U. BBCH Monograph: Growth Stages for Mono- and Dicotyledonous Plants, 2nd ed.; Blackwell Wissenschafts-Verlag: Berlin, Germany, 2001.

- AESA. Aerial Work—Legal Framework. Available online: http://www.seguridadaerea.gob.es/LANG_EN/cias_empresas/trabajos/rpas/marco/default.aspx (accessed on 6 November 2017).

- Torres-Sánchez, J.; López-Granados, F.; Borra-Serrano, I.; Peña, J.M. Assessing UAV-collected image overlap influence on computation time and digital surface model accuracy in olive orchards. Precis. Agric. 2018, 19, 115–133.

- Dandois, J.P.; Ellis, E.C. High spatial resolution three-dimensional mapping of vegetation spectral dynamics using computer vision. Remote Sens. Environ. 2013, 136, 259–276.

- Foody, G.M. Status of land cover classification accuracy assessment. Remote Sens. Environ. 2002, 80, 185–201.

- Landis, J.R.; Koch, G.G. The measurement of observer agreement for categorical data. Biometrics 1977, 33, 159–174.

- Smit, J.L.; Sithole, G.; Strever, A.E. Vine signal extraction: An application of remote sensing in precision viticulture. S. Afr. J. Enol. Vitic. 2016, 31, 65–74.

- Puletti, N.; Perria, R.; Storchi, P. Unsupervised classification of very high remotely sensed images for grapevine rows detection. Eur. J. Remote Sens. 2014, 47, 45–54.

- Matese, A.; Toscano, P.; Di Gennaro, S.F.; Genesio, L.; Vaccari, F.P.; Primicerio, J.; Belli, C.; Zaldei, A.; Bianconi, R.; Gioli, B. Intercomparison of UAV, Aircraft and Satellite Remote Sensing Platforms for Precision Viticulture. Remote Sens. 2015, 7, 2971–2990.

- Delenne, C.; Durrieu, S.; Rabatel, G.; Deshayes, M. From pixel to vine parcel: A complete methodology for vineyard delineation and characterization using remote-sensing data. Comput. Electron. Agric. 2010, 70, 78–83.

- Burgos, S.; Mota, M.; Noll, D.; Cannelle, B. Use of Very High-Resolution Airborne Images to Analyse 3D Canopy Architecture of a Vineyard. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 40, 399.

- Santesteban, L.G.; Di Gennaro, S.F.; Herrero-Langreo, A.; Miranda, C.; Royo, J.B.; Matese, A. High-resolution UAV-based thermal imaging to estimate the instantaneous and seasonal variability of plant water status within a vineyard. Agric. Water Manag. 2016, 183, 49–59.

- Espinoza, C.Z.; Khot, L.R.; Sankaran, S.; Jacoby, P.W. High Resolution Multispectral and Thermal Remote Sensing-Based Water Stress Assessment in Subsurface Irrigated Grapevines. Remote Sens. 2017, 9, 961.

- Llorens, J.; Gil, E.; Llop, J.; Escolà, A. Variable rate dosing in precision viticulture: Use of electronic devices to improve application efficiency. Crop Prot. 2010, 29, 239–248.

- Hall, A.; Lamb, D.W.; Holzapfel, B.P.; Louis, J.P. Within-season temporal variation in correlations between vineyard canopy and winegrape composition and yield. Precis. Agric. 2010, 12, 103–117.

| Field | Grape Variety | Studied Area (m²) | Central Coordinates (X, Y) |
|---|---|---|---|
| A | Merlot | 4925 | 291,009 E; 4,613,392 N |
| B | Albariño | 4415 | 291,303 E; 4,614,055 N |
| C | Chardonnay | 2035 | 290,910 E; 4,616,282 N |

| Sensor Size (mm) | Pixel Size (mm) | Sensor Resolution (pixels) | Focal Length (mm) | Radiometric Resolution (bit) | Image Format |
|---|---|---|---|---|---|
| 17.3 × 13.0 | 0.0043 | 4032 × 3024 | 14 | 8 | JPEG |

| Preference Setting | Control Parameter | Selected Setting |
|---|---|---|
| Alignment parameters | Accuracy | High |
| | Pair preselection | Disabled |
| Dense point cloud | Quality | High |
| | Depth filtering | Mild |
| DSM | Coordinate system | WGS84/UTM zone 31 N |
| | Source data | Dense cloud |
| Orthomosaic | Blending mode | Mosaic |

| | | Classified Data: Vineyard | Classified Data: No Vineyard | |
|---|---|---|---|---|
| Manual classification | Vineyard | %VV | %VNV | TR1 |
| | No vineyard | %NVV | %NVNV | TR2 |
| | | TC1 | TC2 | TR1 + TR2 = TC1 + TC2 = 100% |

| Field | Date | Overall Accuracy (%) | Kappa |
|---|---|---|---|
| A | July | 95.5 | 0.9 |
| | September | 95.4 | 0.9 |
| B | July | 95.2 | 0.9 |
| | September | 96.0 | 0.9 |
| C | July | 93.6 | 0.8 |
| | September | 96.1 | 0.7 |
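Overall accuracy and the kappa coefficient can both be derived from the confusion-matrix layout defined above (%VV, %VNV, %NVV, %NVNV, with row totals TR and column totals TC). The sketch below uses the standard Cohen's kappa formulation; this section does not show how the authors computed it, and the function name `overall_accuracy_and_kappa` and the input values in the example are hypothetical, not results from this study.

```python
def overall_accuracy_and_kappa(vv: float, vnv: float, nvv: float, nvnv: float) -> tuple[float, float]:
    """Inputs follow the confusion-matrix layout: rows = manual classification,
    columns = classified data. Values may be percentages or pixel counts."""
    total = vv + vnv + nvv + nvnv
    p_vv, p_vnv, p_nvv, p_nvnv = (x / total for x in (vv, vnv, nvv, nvnv))
    observed = p_vv + p_nvnv                  # overall accuracy as a fraction
    tr1, tr2 = p_vv + p_vnv, p_nvv + p_nvnv   # row totals (manual classification)
    tc1, tc2 = p_vv + p_nvv, p_vnv + p_nvnv   # column totals (classified data)
    expected = tr1 * tc1 + tr2 * tc2          # agreement expected by chance
    kappa = (observed - expected) / (1 - expected)
    return 100 * observed, kappa


# Hypothetical matrix: 40% VV, 5% VNV, 5% NVV, 50% NVNV.
oa, kappa = overall_accuracy_and_kappa(40, 5, 5, 50)
print(f"OA = {oa:.1f}%, kappa = {kappa:.2f}")
```

Kappa discounts the agreement expected by chance from the class proportions, so it can be noticeably lower than overall accuracy when one class dominates the scene.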

| Field | Date | True Positive (%) | False Positive (%) | False Negative (%) |
|---|---|---|---|---|
| A | July | 100.0 | 1.12 | 0.0 |
| | September | 96.8 | 0.0 | 3.2 |
| B | July | 100.0 | 1.0 | 0.0 |
| | September | 100.0 | 6.0 | 0.0 |
| C | July | 100.0 | 0.0 | 0.0 |
| | September | 100.0 | 46.8 | 0.0 |

| X Center | Y Center | Length (m) | Width (m) | Area (m²) | Vine Max Height (m) | Vine Mean Height (m) | Vine Volume (m³) |
|---|---|---|---|---|---|---|---|
| 290,909.63 | 4,615,191.17 | 1.36 | 0.48 | 0.51 | 2.02 | 1.49 | 0.76 |
| 290,909.85 | 4,615,192.23 | 2.06 | 1.41 | 1.93 | 2.13 | 1.33 | 2.56 |
| … | … | … | … | … | … | … | … |
| 290,910.55 | 4,615,194.39 | 2.05 | 1.21 | 1.32 | 2.22 | 1.53 | 2.02 |
| 290,918.60 | 4,615,225.30 | 2.06 | 1.74 | 2.35 | 2.22 | 1.72 | 4.05 |
| 290,919.09 | 4,615,227.23 | 2.14 | 1.65 | 2.15 | 2.18 | 1.54 | 3.31 |
| 290,919.60 | 4,615,229.19 | 2.03 | 1.37 | 1.46 | 2.00 | 1.41 | 2.06 |
| 290,920.12 | 4,615,231.14 | 2.19 | 1.63 | 2.13 | 2.00 | 1.40 | 2.99 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


De Castro, A.I.; Jiménez-Brenes, F.M.; Torres-Sánchez, J.; Peña, J.M.; Borra-Serrano, I.; López-Granados, F. 3-D Characterization of Vineyards Using a Novel UAV Imagery-Based OBIA Procedure for Precision Viticulture Applications. Remote Sens. 2018, 10, 584. https://doi.org/10.3390/rs10040584
