Evaluation of RGB, Color-Infrared and Multispectral Images Acquired from Unmanned Aerial Systems for the Estimation of Nitrogen Accumulation in Rice

Abstract

1. Introduction

2. Materials and Methods

2.1. Experimental Design

2.2. Field Measurements

2.3. UAV Campaigns and Sensors

2.4. Image Processing

2.4.1. Image Preprocessing

2.4.2. Calculation of Vegetation Indices

2.5. Data Analysis

3. Results

3.1. Variation of Agronomic Variables over the Three Growth Stages

3.2. Comparison between Counterpart Indices from MS and RGB Images

3.3. Comparison between Counterpart Indices from MS and CIR Images

3.4. Evaluation of Red Edge Indices from Multispectral Images

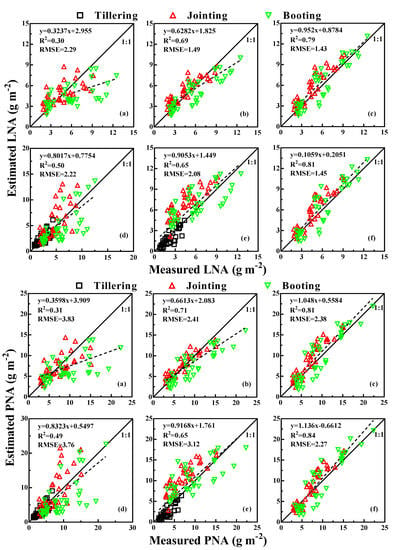

3.5. Model Validation

4. Discussion

4.1. Feasibility of Fitting a Single VI-LNA or VI-PNA Model for All Growth Stages

4.2. Performance of Counterpart Indices from Different Sensors

4.3. Choice of an Appropriate Camera for Precision Agriculture

5. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest

References

- Janssen, B.H. Efficient use of nutrients: An art of balancing. Field Crops Res. 1998, 56, 197–201.

- Chu, X.; Guo, Y.; He, J.; Yao, X.; Zhu, Y.; Cao, W.; Cheng, T.; Tian, Y. Comparison of different hyperspectral vegetation indices for estimating canopy leaf nitrogen accumulation in rice. Agron. J. 2014, 106, 1911–1920.

- Wang, W.; Yao, X.; Tian, Y.C.; Liu, X.J.; Jun, N.I.; Cao, W.X.; Zhu, Y. Common Spectral Bands and Optimum Vegetation Indices for Monitoring Leaf Nitrogen Accumulation in Rice and Wheat. J. Integr. Agric. 2012, 11, 2001–2012.

- Zheng, H.; Cheng, T.; Yao, X.; Deng, X.; Tian, Y.; Cao, W.; Zhu, Y. Detection of rice phenology through time series analysis of ground-based spectral index data. Field Crops Res. 2016, 198, 131–139.

- Yao, X.; Ren, H.; Cao, Z.; Tian, Y.; Cao, W.; Zhu, Y.; Cheng, T. Detecting leaf nitrogen content in wheat with canopy hyperspectrum under different soil backgrounds. Int. J. Appl. Earth Obs. Geoinf. 2014, 32, 114–124.

- Serrano, L.; Filella, I.; Penuelas, J. Remote sensing of biomass and yield of winter wheat under different nitrogen supplies. Crop Sci. 2000, 40, 723–731.

- Blaes, X.; Chomé, G.; Lambert, M.-J.; Traoré, P.S.; Schut, A.G.; Defourny, P. Quantifying Fertilizer Application Response Variability with VHR Satellite NDVI Time Series in a Rainfed Smallholder Cropping System of Mali. Remote Sens. 2016, 8, 531.

- Huang, S.; Miao, Y.; Zhao, G.; Yuan, F.; Ma, X.; Tan, C.; Yu, W.; Gnyp, M.L.; Lenz-Wiedemann, V.I.; Rascher, U. Satellite remote sensing-based in-season diagnosis of rice nitrogen status in Northeast China. Remote Sens. 2015, 7, 10646–10667.

- Boegh, E.; Soegaard, H.; Broge, N.; Hasager, C.; Jensen, N.; Schelde, K.; Thomsen, A. Airborne multispectral data for quantifying leaf area index, nitrogen concentration, and photosynthetic efficiency in agriculture. Remote Sens. Environ. 2002, 81, 179–193.

- Inoue, Y.; Sakaiya, E.; Zhu, Y.; Takahashi, W. Diagnostic mapping of canopy nitrogen content in rice based on hyperspectral measurements. Remote Sens. Environ. 2012, 126, 210–221.

- Hunt, E.R.; Cavigelli, M.; Daughtry, C.S.T.; McMurtrey, J.E.; Walthall, C.L. Evaluation of digital photography from model aircraft for remote sensing of crop biomass and nitrogen status. Precis. Agric. 2005, 6, 359–378.

- Lelong, C.C.; Burger, P.; Jubelin, G.; Roux, B.; Labbé, S.; Baret, F. Assessment of unmanned aerial vehicles imagery for quantitative monitoring of wheat crop in small plots. Sensors 2008, 8, 3557–3585.

- Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 79–87.

- Hunt, E.R.; Daughtry, C.S.T.; Mirsky, S.B.; Hively, W.D. Remote sensing with unmanned aircraft systems for precision agriculture applications. In Proceedings of the Second International Conference on Agro-Geoinformatics, Fairfax, VA, USA, 12–16 August 2013.

- Jin, X.; Liu, S.; Baret, F.; Hemerlé, M.; Comar, A. Estimates of plant density of wheat crops at emergence from very low altitude UAV imagery. Remote Sens. Environ. 2017, 198, 105–114.

- Lebourgeois, V.; Bégué, A.; Labbé, S.; Mallavan, B.; Prévot, L.; Roux, B. Can commercial digital cameras be used as multispectral sensors? A crop monitoring test. Sensors 2008, 8, 7300–7322.

- Zhu, J.; Wang, K.; Deng, J.; Harmon, T. Quantifying Nitrogen Status of Rice Using Low Altitude UAV-Mounted System and Object-Oriented Segmentation Methodology. In Proceedings of the ASME 2009 International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, San Diego, CA, USA, 30 August–2 September 2009.

- Córcoles, J.I.; Ortega, J.F.; Hernández, D.; Moreno, M.A. Estimation of leaf area index in onion (Allium cepa L.) using an unmanned aerial vehicle. Biosyst. Eng. 2013, 115, 31–42.

- Hatfield, J.L.; Gitelson, A.A.; Schepers, J.S.; Walthall, C.L. Application of Spectral Remote Sensing for Agronomic Decisions. Agron. J. 2008, 100, 117–131.

- Jordan, C.F. Derivation of leaf area index from quality of light on the forest floor. Ecology 1969, 50, 663–666.

- Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.

- Huete, A.R. A soil-adjusted vegetation index (SAVI). Remote Sens. Environ. 1988, 25, 295–309.

- Hunt, E.R.; Hively, W.D.; Fujikawa, S.J.; Linden, D.S.; Daughtry, C.S.; McCarty, G.W. Acquisition of NIR-green-blue digital photographs from unmanned aircraft for crop monitoring. Remote Sens. 2010, 2, 290–305.

- Hunt, E.R.; Hively, W.D.; McCarty, G.W.; Daughtry, C.S.T.; Forrestal, P.J.; Kratochvil, R.J.; Carr, J.L.; Allen, N.F.; Fox-Rabinovitz, J.R.; Miller, C.D. NIR-green-blue high-resolution digital images for assessment of winter cover crop biomass. GISci. Remote Sens. 2011, 48, 86–98.

- Lebourgeois, V.; Bégué, A.; Labbé, S.; Houlès, M.; Martiné, J.F. A light-weight multi-spectral aerial imaging system for nitrogen crop monitoring. Precis. Agric. 2012, 13, 525–541.

- Suárez, L.; Zarco-Tejada, P.; González-Dugo, V.; Berni, J.; Fereres, E. Detecting water stress in orchard crops using PRI from airborne imagery. In Proceedings of the 6th EARSeL SIG IS Workshop Imaging Spectroscopy: Innovative Tool for Scientific and Commercial Environmental Applications, Tel-Aviv, Israel, 16–19 March 2009.

- Calderón, R.; Navas-Cortés, J.A.; Lucena, C.; Zarco-Tejada, P.J. High-resolution airborne hyperspectral and thermal imagery for early detection of Verticillium wilt of olive using fluorescence, temperature and narrow-band spectral indices. Remote Sens. Environ. 2013, 139, 231–245.

- Rey, C.; Martín, M.P.; Lobo, A.; Luna, I.; Diago, M.P.; Millan, B.; Tardáguila, J. Multispectral imagery acquired from a UAV to assess the spatial variability of a Tempranillo vineyard. In Precision Agriculture ’13; Wageningen Academic Publishers: Wageningen, The Netherlands, 2013.

- Candiago, S.; Remondino, F.; de Giglio, M.; Dubbini, M.; Gattelli, M. Evaluating Multispectral Images and Vegetation Indices for Precision Farming Applications from UAV Images. Remote Sens. 2015, 7, 4026–4047.

- Hunt, E.; Hively, W.D.; Daughtry, C.S.; McCarty, G.W.; Fujikawa, S.J.; Ng, T.; Tranchitella, M.; Linden, D.S.; Yoel, D.W. Remote sensing of crop leaf area index using unmanned airborne vehicles. In Proceedings of the Pecora 17 Symposium, Denver, CO, USA, 18–20 November 2008.

- Mathews, A.J.; Jensen, J.L.R. Visualizing and Quantifying Vineyard Canopy LAI Using an Unmanned Aerial Vehicle (UAV) Collected High Density Structure from Motion Point Cloud. Remote Sens. 2013, 5, 2164–2183.

- Yao, X.; Wang, N.; Liu, Y.; Cheng, T.; Tian, Y.; Chen, Q.; Zhu, Y. Estimation of Wheat LAI at Middle to High Levels Using Unmanned Aerial Vehicle Narrowband Multispectral Imagery. Remote Sens. 2017, 9, 1304.

- Bendig, J.; Bolten, A.; Bennertz, S.; Broscheit, J.; Eichfuss, S.; Bareth, G. Estimating Biomass of Barley Using Crop Surface Models (CSMs) Derived from UAV-Based RGB Imaging. Remote Sens. 2014, 6, 10395–10412.

- Von Bueren, S.; Burkart, A.; Hueni, A.; Rascher, U.; Tuohy, M.; Yule, I. Comparative validation of UAV based sensors for the use in vegetation monitoring. Biogeosci. Discuss. 2014, 11, 3837–3864.

- Gastal, F.; Lemaire, G.; Durand, J.-L.; Louarn, G. Quantifying crop responses to nitrogen and avenues to improve nitrogen-use efficiency. In Crop Physiology (Second Edition); Academic Press: Cambridge, MA, USA, 2005.

- Bremner, J.M.; Mulvaney, C.S. Nitrogen-total. In Methods of Soil Analysis. Part 2 Chemical and Microbiological Properties; American Society of Agronomy: Madison, WI, USA, 1982; pp. 595–624.

- Zhou, X.; Zheng, H.B.; Xu, X.Q.; He, J.Y.; Ge, X.K.; Yao, X.; Cheng, T.; Zhu, Y.; Cao, W.X.; Tian, Y.C. Predicting grain yield in rice using multi-temporal vegetation indices from UAV-based multispectral and digital imagery. ISPRS J. Photogramm. Remote Sens. 2017, 130, 246–255.

- Pérez-Ortiz, M.; Peña, J.; Gutiérrez, P.; Torres-Sánchez, J.; Hervás-Martínez, C.; López-Granados, F. A semi-supervised system for weed mapping in sunflower crops using unmanned aerial vehicles and a crop row detection method. Appl. Soft Comput. 2015, 37, 533–544.

- Severtson, D.; Callow, N.; Flower, K.; Neuhaus, A.; Olejnik, M.; Nansen, C. Unmanned aerial vehicle canopy reflectance data detects potassium deficiency and green peach aphid susceptibility in canola. Precis. Agric. 2016, 17, 659–677.

- Garcia-Ruiz, F.; Sankaran, S.; Maja, J.M.; Lee, W.S.; Rasmussen, J.; Ehsani, R. Comparison of two aerial imaging platforms for identification of Huanglongbing-infected citrus trees. Comput. Electron. Agric. 2013, 91, 106–115.

- Kelcey, J.; Lucieer, A. Sensor Correction of a 6-Band Multispectral Imaging Sensor for UAV Remote Sensing. Remote Sens. 2012, 4, 1462–1493.

- Smith, G.M.; Milton, E.J. The use of the empirical line method to calibrate remotely sensed data to reflectance. Int. J. Remote Sens. 1999, 20, 2653–2662.

- Woebbecke, D.; Meyer, G.; von Bargen, K.; Mortensen, D. Color indices for weed identification under various soil, residue, and lighting conditions. Trans. ASAE 1995, 38, 259–269.

- Gitelson, A.A.; Kaufman, Y.J.; Stark, R.; Rundquist, D. Novel algorithms for remote estimation of vegetation fraction. Remote Sens. Environ. 2002, 80, 76–87.

- Rasmussen, J.; Ntakos, G.; Nielsen, J.; Svensgaard, J.; Poulsen, R.N.; Christensen, S. Are vegetation indices derived from consumer-grade cameras mounted on UAVs sufficiently reliable for assessing experimental plots? Eur. J. Agron. 2016, 74, 75–92.

- Gitelson, A.A.; Kaufman, Y.J.; Merzlyak, M.N. Use of a green channel in remote sensing of global vegetation from EOS-MODIS. Remote Sens. Environ. 1996, 58, 289–298.

- Gitelson, A.A.; Gritz, Y.; Merzlyak, M.N. Relationships between leaf chlorophyll content and spectral reflectance and algorithms for non-destructive chlorophyll assessment in higher plant leaves. J. Plant Physiol. 2003, 160, 271–282.

- Datt, B. Visible/near infrared reflectance and chlorophyll content in Eucalyptus leaves. Int. J. Remote Sens. 1999, 20, 2741–2759.

- Cheng, T.; Song, R.; Li, D.; Zhou, K.; Zheng, H.; Yao, X.; Tian, Y.; Cao, W.; Zhu, Y. Spectroscopic Estimation of Biomass in Canopy Components of Paddy Rice Using Dry Matter and Chlorophyll Indices. Remote Sens. 2017, 9, 319.

- Li, F.; Gnyp, M.L.; Jia, L.; Miao, Y.; Yu, Z.; Koppe, W.; Bareth, G.; Chen, X.; Zhang, F. Estimating N status of winter wheat using a handheld spectrometer in the North China Plain. Field Crops Res. 2008, 106, 77–85.

- Tilly, N.; Aasen, H.; Bareth, G. Fusion of Plant Height and Vegetation Indices for the Estimation of Barley Biomass. Remote Sens. 2015, 7, 11449–11480.

- Cao, Q.; Miao, Y.; Feng, G.; Gao, X.; Li, F.; Liu, B.; Yue, S.; Cheng, S.; Ustin, S.L.; Khosla, R. Active canopy sensing of winter wheat nitrogen status: An evaluation of two sensor systems. Comput. Electron. Agric. 2015, 112, 54–67.

- Gitelson, A.A.; Andrés, V.; Arkebauer, T.J.; Rundquist, D.C.; Galina, K.; Bryan, L. Remote estimation of leaf area index and green leaf biomass in maize canopies. Geophys. Res. Lett. 2003, 30, 335–343.

- Mutanga, O.; Skidmore, A.K. Narrow band vegetation indices overcome the saturation problem in biomass estimation. Int. J. Remote Sens. 2004, 25, 3999–4014.

- Elvidge, C.D.; Chen, Z. Comparison of broad-band and narrow-band red and near-infrared vegetation indices. Remote Sens. Environ. 1995, 54, 38–48.

- Perry, E.M.; Roberts, D.A. Sensitivity of Narrow-Band and Broad-Band Indices for Assessing Nitrogen Availability and Water Stress in an Annual Crop. Agron. J. 2008, 100, 1211–1219.

- Knyazikhin, Y.; Schull, M.A.; Stenberg, P.; Mõttus, M.; Rautiainen, M.; Yang, Y.; Marshak, A.; Carmona, P.L.; Kaufmann, R.K.; Lewis, P.; et al. Hyperspectral remote sensing of foliar nitrogen content. Proc. Natl. Acad. Sci. USA 2013, 110, 185–192.

- Bajwa, S.; Mishra, A.; Norman, R. Canopy reflectance response to plant nitrogen accumulation in rice. Precis. Agric. 2010, 11, 488–506.

- Yu, K.; Li, F.; Gnyp, M.L.; Miao, Y.; Bareth, G.; Chen, X. Remotely detecting canopy nitrogen concentration and uptake of paddy rice in the Northeast China Plain. ISPRS J. Photogramm. Remote Sens. 2013, 78, 102–115.

- Luna, I.; Lobo, A. Mapping Crop Planting Quality in Sugarcane from UAV Imagery: A Pilot Study in Nicaragua. Remote Sens. 2016, 8, 500.

- Torres-Sánchez, J.; Peña, J.M.; Castro, A.I.D.; López-Granados, F. Multi-temporal mapping of the vegetation fraction in early-season wheat fields using images from UAV. Comput. Electron. Agric. 2014, 103, 104–113.

- Holman, F.H.; Riche, A.B.; Michalski, A.; Castle, M.; Wooster, M.J.; Hawkesford, M.J. High Throughput Field Phenotyping of Wheat Plant Height and Growth Rate in Field Plot Trials Using UAV Based Remote Sensing. Remote Sens. 2016, 8, 1031.

- Zhang, C.; Kovacs, J.M. The application of small unmanned aerial systems for precision agriculture: A review. Precis. Agric. 2012, 13, 693–712.

- Ding, Y.F.; Zhao, C.H.; Wang, Q.S. Effect of application stage of panicle fertilizer on rice grain yield and the utilization of nitrogen. J. Nanjing Agric. Univ. 2003, 26, 5–8.

- Haboudane, D.; Miller, J.R.; Tremblay, N.; Zarco-Tejada, P.J.; Dextraze, L. Integrated narrow-band vegetation indices for prediction of crop chlorophyll content for application to precision agriculture. Remote Sens. Environ. 2002, 81, 416–426.

- Torres-Sánchez, J.; López-Granados, F.; de Castro, A.I.; Peña-Barragán, J.M. Configuration and specifications of an Unmanned Aerial Vehicle (UAV) for early site specific weed management. PLoS ONE 2013, 8, e58210.

- Yue, J.; Yang, G.; Li, C.; Li, Z.; Wang, Y.; Feng, H.; Xu, B. Estimation of Winter Wheat Above-Ground Biomass Using Unmanned Aerial Vehicle-Based Snapshot Hyperspectral Sensor and Crop Height Improved Models. Remote Sens. 2017, 9, 708.

- Liu, H.; Zhu, H.; Wang, P. Quantitative modelling for leaf nitrogen content of winter wheat using UAV-based hyperspectral data. Int. J. Remote Sens. 2017, 38, 2117–2134.

| Year | Seedling | N Fertilizer | UAS Flight Date | Field Sampling Date | Growth Stage | Image Acquisition: MS | Image Acquisition: RGB | Image Acquisition: CIR |
|---|---|---|---|---|---|---|---|---|
| 2015 | 16 May | 23 June | 21 July | 21 July | Tillering | √ | √ | √ |
| | | 2 August | 5 August | 31 July | Jointing | √ | √ | √ |
| | | 14 August | 14 August | 15 August | Booting | √ | √ | √ |
| 2016 | 18 May | 25 June | 21 July | 21 July | Tillering | - | √ | √ |
| | | 4 August | 6 August | 6 August | Jointing | √ | √ | √ |
| | | 14 August | 14 August | 14 August | Booting | √ | √ | √ |

| Camera | Version | Field of View | Image Size | Altitude (m) | Coverage (Single Image) (ha) | Pixel Size (on the Ground) (mm) |
|---|---|---|---|---|---|---|
| RGB | Canon 5D Mark III | 74° × 53° | 3840 × 5760 | 50 | 0.75 | 13 |
| Color infrared | Canon PowerShot SX260 | 72° × 52° | 3000 × 4000 | 100 | 1.41 | 36 |
| Multispectral | Mini-MCA | 38° × 31° | 1024 × 1280 | 100 | 0.38 | 54 |
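
The pixel sizes and coverages in the camera table can be approximated from the flight altitude and field of view. The sketch below assumes a nadir-pointing pinhole camera over flat ground and pairs the wider field-of-view angle with the longer image dimension; both are assumptions, since the source does not state how the tabulated values were derived. The function names are illustrative.

```python
import math


def ground_footprint(altitude_m, fov_deg):
    """Ground distance (m) spanned by one field-of-view angle, nadir view, flat terrain."""
    return 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)


def pixel_size_mm(altitude_m, fov_deg, n_pixels):
    """Ground size of one pixel (mm) along the axis covered by fov_deg."""
    return ground_footprint(altitude_m, fov_deg) / n_pixels * 1000.0


def coverage_ha(altitude_m, fov_wide_deg, fov_narrow_deg):
    """Area of a single image footprint in hectares (1 ha = 10,000 m^2)."""
    width = ground_footprint(altitude_m, fov_wide_deg)
    height = ground_footprint(altitude_m, fov_narrow_deg)
    return width * height / 10_000.0


# (altitude m, wide FOV deg, narrow FOV deg, pixels along the wide axis), from the table
CAMERAS = {
    "RGB": (50, 74, 53, 5760),
    "CIR": (100, 72, 52, 4000),
    "MS": (100, 38, 31, 1280),
}

for name, (alt, wide, narrow, n_px) in CAMERAS.items():
    print(name,
          round(pixel_size_mm(alt, wide, n_px), 1), "mm/pixel,",
          round(coverage_ha(alt, wide, narrow), 2), "ha")
```

Under these assumptions the pixel sizes round to the tabulated 13, 36 and 54 mm, and the CIR and MS footprints come out near 1.42 ha and 0.38 ha. The same model gives roughly 0.38 ha for the RGB camera at 50 m, which differs from the tabulated 0.75 ha, so that value may have been obtained differently.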

| Index | Name | Formula | References | Camera |
|---|---|---|---|---|
| NExG | Normalized excess green index | (2 × G − R − B)/(G + R + B) | [43,45] | MS, RGB |
| NGRDI | Normalized green-red difference index | (G − R)/(G + R) | [21,44] | MS, RGB |
| GNDVI | Green normalized difference vegetation index | (NIR − G)/(NIR + G) | [46] | MS, CIR |
| ENDVI | Enhanced normalized difference vegetation index | (NIR + G − 2 × B)/(NIR + G + 2 × B) | (www.maxmax.com) | MS, CIR |
| CIred edge | Red edge chlorophyll index | NIR/RE − 1 | [47] | MS |
| DATT | DATT | (NIR − RE)/(NIR − R) | [48] | MS |
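
The formulas in the index table translate directly into code. The following is a minimal sketch that evaluates whichever indices the available bands allow, which mirrors the "Camera" column (an RGB camera has no NIR band, a CIR camera has no red band). The function name, the dictionary keys and the sample band values are illustrative assumptions, not taken from the source; inputs may be reflectance or digital numbers, and zero denominators are not handled.

```python
def vegetation_indices(r=None, g=None, b=None, re=None, nir=None):
    """Return a dict of the table's indices that can be computed from the given bands.

    r, g, b, re, nir: red, green, blue, red edge and near-infrared band values.
    Bands a sensor does not record are left as None.
    """
    def have(*bands):
        return all(v is not None for v in bands)

    out = {}
    if have(r, g, b):
        out["NExG"] = (2 * g - r - b) / (g + r + b)
    if have(r, g):
        out["NGRDI"] = (g - r) / (g + r)
    if have(nir, g):
        out["GNDVI"] = (nir - g) / (nir + g)
    if have(nir, g, b):
        out["ENDVI"] = (nir + g - 2 * b) / (nir + g + 2 * b)
    if have(nir, re):
        out["CI_red_edge"] = nir / re - 1
    if have(nir, re, r):
        out["DATT"] = (nir - re) / (nir - r)
    return out


# Hypothetical band values for one plot (not measured data).
ms = vegetation_indices(r=0.05, g=0.10, b=0.04, re=0.20, nir=0.45)   # multispectral
rgb = vegetation_indices(r=0.05, g=0.10, b=0.04)                     # RGB camera
cir = vegetation_indices(g=0.10, b=0.04, nir=0.45)                   # color-infrared camera
print(sorted(ms), sorted(rgb), sorted(cir))
```

With these inputs the RGB call yields only NExG and NGRDI and the CIR call only GNDVI and ENDVI, matching the counterpart-index pairing in the table.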

LNA (g m⁻²)

| Indices | Tillering R² | Tillering RMSE | Jointing R² | Jointing RMSE | Booting R² | Booting RMSE | Jointing & Booting R² | Jointing & Booting RMSE | All Three Stages R² | All Three Stages RMSE |
|---|---|---|---|---|---|---|---|---|---|---|
| NExG-MCA | - | - | 0.29 | 1.74 | 0.21 | 3.41 | 0.16 | 5.16 | - | - |
| NExG-RGB | 0.42 | 1.04 | 0.34 | 4.02 | 0.21 | 4.70 | 0.19 | 6.14 | 0.30 | 2.04 |
| NGRDI-MCA | - | - | 0.41 | 1.74 | 0.36 | 2.58 | 0.30 | 2.29 | - | - |
| NGRDI-RGB | 0.58 | 0.92 | 0.52 | 1.61 | 0.51 | 2.50 | 0.41 | 2.66 | 0.50 | 2.22 |
| GNDVI-MCA | - | - | 0.78 | 1.25 | 0.79 | 1.36 | 0.69 | 1.49 | - | - |
| GNDVI-CIR | 0.71 | 0.75 | 0.70 | 2.34 | 0.55 | 2.67 | 0.46 | 2.50 | 0.65 | 2.08 |
| ENDVI-MCA | - | - | 0.64 | 1.33 | 0.63 | 1.80 | 0.49 | 2.02 | - | - |
| ENDVI-CIR | 0.55 | 1.76 | 0.37 | 2.04 | 0.14 | 3.82 | 0.10 | 2.34 | 0.45 | 2.18 |
| CIred edge | - | - | 0.85 | 1.53 | 0.82 | 1.33 | 0.79 | 1.43 | - | - |
| DATT | - | - | 0.86 | 1.90 | 0.83 | 1.38 | 0.81 | 1.45 | - | - |

PNA (g m⁻²)

| Indices | Tillering R² | Tillering RMSE | Jointing R² | Jointing RMSE | Booting R² | Booting RMSE | Jointing & Booting R² | Jointing & Booting RMSE | All Three Stages R² | All Three Stages RMSE |
|---|---|---|---|---|---|---|---|---|---|---|
| NExG-MCA | - | - | 0.33 | 3.09 | 0.19 | 5.06 | 0.17 | 4.63 | - | - |
| NExG-RGB | 0.66 | 1.18 | 0.41 | 6.27 | 0.19 | 9.06 | 0.16 | 8.92 | 0.29 | 7.44 |
| NGRDI-MCA | - | - | 0.47 | 2.44 | 0.33 | 4.81 | 0.31 | 3.83 | - | - |
| NGRDI-RGB | 0.58 | 1.62 | 0.56 | 2.60 | 0.46 | 4.63 | 0.37 | 4.53 | 0.49 | 3.76 |
| GNDVI-MCA | - | - | 0.84 | 1.67 | 0.77 | 2.56 | 0.71 | 2.41 | - | - |
| GNDVI-CIR | 0.74 | 1.60 | 0.74 | 2.39 | 0.52 | 5.13 | 0.45 | 3.73 | 0.65 | 3.12 |
| ENDVI-MCA | - | - | 0.71 | 2.46 | 0.61 | 3.21 | 0.51 | 3.52 | - | - |
| ENDVI-CIR | 0.60 | 2.77 | 0.40 | 4.25 | 0.12 | 7.03 | 0.10 | 4.10 | 0.45 | 3.75 |
| CIred edge | - | - | 0.88 | 1.15 | 0.83 | 2.72 | 0.81 | 2.38 | - | - |
| DATT | - | - | 0.90 | 1.69 | 0.82 | 2.58 | 0.84 | 2.27 | - | - |
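
The R² and RMSE columns summarize how well each index predicts LNA or PNA. As a rough illustration of how such statistics can be obtained, the sketch below fits an ordinary least-squares line between an index and a nitrogen variable and reports both metrics. The linear model form, the use of n (rather than n − 2) in the RMSE denominator, the function names and the sample numbers are all assumptions; this excerpt does not state the regression form the authors used.

```python
import math


def fit_line(x, y):
    """Ordinary least-squares slope and intercept for y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def r2_rmse(x, y, slope, intercept):
    """Coefficient of determination and root mean square error of the fitted line."""
    predicted = [slope * xi + intercept for xi in x]
    sse = sum((yi - pi) ** 2 for yi, pi in zip(y, predicted))
    mean_y = sum(y) / len(y)
    sst = sum((yi - mean_y) ** 2 for yi in y)
    return 1.0 - sse / sst, math.sqrt(sse / len(y))


# Hypothetical index values and nitrogen accumulation (g m^-2); not data from the study.
vi = [0.0, 1.0, 2.0, 3.0]
lna = [1.0, 3.0, 2.0, 4.0]

slope, intercept = fit_line(vi, lna)
r2, rmse = r2_rmse(vi, lna, slope, intercept)
print(round(slope, 2), round(intercept, 2), round(r2, 2), round(rmse, 2))
```

RMSE carries the units of the predicted variable (g m⁻² here), so values are comparable within the LNA or PNA block but not directly between them.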

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zheng, H.; Cheng, T.; Li, D.; Zhou, X.; Yao, X.; Tian, Y.; Cao, W.; Zhu, Y. Evaluation of RGB, Color-Infrared and Multispectral Images Acquired from Unmanned Aerial Systems for the Estimation of Nitrogen Accumulation in Rice. Remote Sens. 2018, 10, 824. https://doi.org/10.3390/rs10060824
