1. Introduction

The current Global Assessment Report on Biodiversity and Ecosystem Services again depicts an alarming picture of the Earth with accelerating rates of biodiversity loss [

1]. Earth observation (EO) has a high potential for biodiversity assessments, mainly for the description of vegetation habitats [

2]. The synoptic view, and the delivery of detailed, objective and cost-efficient information over large areas, makes EO data one of the most useful tools for biodiversity assessments [

3,

4,

5]. Depending on the spectral, spatial and temporal resolution of the EO data, various categorical and biophysical traits can be mapped [

6,

7]. In forest ecosystems, tree species diversity is a key parameter for ecologists, conservationists and also for forest managers [

8,

9]. In addition to the occurrences of tree species, information about the distribution and the spatial pattern of tree species within larger geographic extents is also essential.

In the last few years, the number and variety of commercially and publicly funded EO sensors has increased dramatically. As a result, data with higher spatial, spectral and temporal resolutions are available. Analysis of hyperspectral data demonstrated the added value of the dense spectral sampling for the separation of tree species [

10,

11,

12,

13]. Multi-spectral, very high resolution (VHR) satellite data were successfully used for mapping tree species distribution for up to ten different species [

14,

15,

16,

17]. The small pixel size of VHR data enables the classification of individual tree crowns. However, the use of VHR satellite data or airborne hyperspectral data is often limited by high data costs and limited area coverage.

Studies covering larger regions by combining data with different spatial resolution have thus far only focused on a small number of tree species [

18], respectively, on tree species groups [

19]. Likewise, existing continental-scale forest maps such as the Copernicus high resolution forest layer [

20], only distinguish broadleaf and deciduous forests. Studies analyzing several tree species and covering large geographic extents are still missing [

21].

With the launch of the twin Sentinel-2A and 2B satellites since 2015, high quality data with high spatial, spectral and temporal resolution are now freely available. Despite the fact that individual tree crowns cannot be separated with the 10–20 m data, the rich spectral information with bands in the visible, Red-Edge, Near-Infrared (NIR) and Shortwave-Infrared (SWIR) wavelengths has a high potential for tree species separation [

22,

23,

24,

25,

26,

27,

28,

29]. An additional advantage is the very high revisit interval of the two satellites. The twins cover the entire Earth surface every 5 days, with even higher number of observations in the overlap areas of adjacent orbits.

In many (partly) cloudy areas of the world, the availability of dense time series is paramount to obtaining reliable and cloud-free observations during key phenological periods [

30]. An adequate number of cloud-free observations also enables a better description of the actual situation and historical evolution and moreover helps to detect changes [

31]. Consequently, the use of multi-temporal Sentinel-2 data also improves tree species identification. Nelson [

32], for example, analyzed six tree species classes in Sweden testing all possible combinations of three Sentinel-2 scenes from May, July and August. They achieved overall accuracies of up to 86%. Bolyn et al. [

22] classified eleven forest classes in Belgium with an overall accuracy of 92% using Sentinel-2 scenes from May and October. In a German test site, Wessel et al. [

33] achieved up to 88% overall accuracy for four tree species classes using Sentinel-2 scenes from May, August, and September. Persson et al. [

27] used four scenes from April, May, July, and October for the separation of five tree species in Sweden and obtained an overall accuracy of 88%. In a Mediterranean forest, four forest types were separated with accuracies of over 83% by Puletti et al. [

28] using the Sentinel-2 bands together with vegetation indices. Hościło and Lewandowska [

34] used four scenes to classify eight tree species in southern Poland with an overall accuracy of 76%. Using additional topographic features and a stratification in broadleaf and coniferous species, the accuracy increased to 85%. Grabska et al. [

23] achieved, with five (from 18) Sentinel-2 images, an overall accuracy of 92% for the classification of nine tree species in a Carpathian test site. The most important band was the Red-Edge 2 and most important scene were acquisitions from October. All studies clearly demonstrated the benefit of multi-temporal data and gave some hints about the importance of individual bands and optimum acquisition times. However, the number of identified tree species was still relatively small (2–11), and generally only a few (3–5) Sentinel-2 scenes were analyzed.

The aim of this study is to assess the suitability of dense multi-temporal Sentinel-2 data for a detailed description of tree species and other vegetation/land cover classes in the Wienerwald biosphere reserve in Austria. In protected areas, detailed information on the actual land cover—and possible changes—are of high importance. Up-to-now, the forest description of the biosphere reserve was mainly based on management plans from different forest enterprises. These data do not cover the entire biosphere reserve and are sometimes outdated. The biosphere management would tremendously benefit from consistent and reliable information about the spatial distribution of the major coniferous and broadleaf tree species.

The main objectives of our research were:

To evaluate the potential of multi-temporal Sentinel-2 data for mapping 12 tree species at 10 m spatial resolution for the entire Wienerwald biosphere reserve.

To identify the best acquisition dates and scene combinations for tree species separation.

To identify the most important Sentinel-2 bands for tree species classification and the added value of several vegetation indices.

To evaluate the benefits of stratified classifications.

To apply an additional short-term change detection analysis to monitor forest management activities and to ensure that the final tree species maps are up-to-date.

2. Materials and Methods

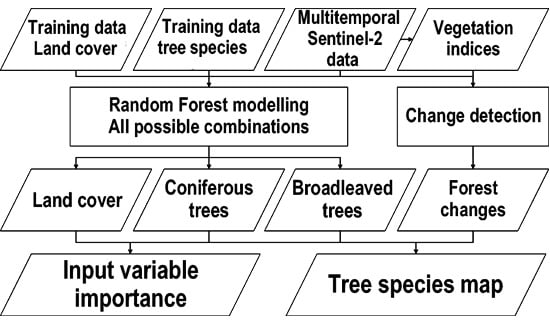

For the land cover classification and the tree species mapping in the Wienerwald biosphere reserve, 18 cloud-free Sentinel-2 scenes acquired between 2015 and 2017 were used. The mapping was done in three steps using different reference data sets (

Figure 1). In the first step, six broad land cover classes were mapped. Subsequently, the individual tree species were identified within the resulting forest strata. In the final step, change detection was applied to identify areas where forest activities took place.

For the broad land cover classification, reference data were visually interpreted in a regular grid using four-band orthoimages with a spatial resolution of 20 cm acquired in the course of the national aerial image campaign and provided by Austria’s Federal Office of Metrology and Surveying. The reference data for the tree species were derived from stand maps and other forest management databases. To enhance the data quality, the reference points were cross-checked by visual interpretation of color-infrared (CIR) orthoimages. With these reference data, the coniferous and broadleaved tree species were classified both separately and together, while testing all possible combinations of the Sentinel-2 data. The best classification results were merged together and areas where changes could be detected were masked out.

2.1. Study Site Wienerwald Biosphere Reserve

The Wienerwald biosphere reserve is one of the largest contiguous deciduous beech woodlands in Central Europe. It is located in the south-west of Vienna (Austria) and covers an area of 105,645 ha. The location of such a large forest on the edge of a metropolitan area is unique. The range of (micro) climatic and geological conditions in the Wienerwald is the main reason for the large diversity of vegetation types [

35]. The Biosphere Reserve has more than 20 types of woodland—with beech, oak and hornbeam being dominant—and more than 23 types of meadow [

36]. Concerning the forest, particularly rare woods can be found, such as Austrian’s largest downy oak forests (

Quercus pubescens) and unique stands of Austrian Black Pine (

Pinus nigra subsp. nigra) occurring in the eastern part of the Wienerwald [

37]. The inlet in

Figure 2 shows the location of the study area within a region characterized by its diversity of nature and culture, and sustainable ecosystem management.

2.2. Reference Data Sets

For the reference data creation, a regular grid (1 km × 1 km) was laid over the entire biosphere reserve as well as some surrounding areas (

Figure 2a). At each point, the grid cell was visually interpreted using CIR orthoimages (

Figure 2b).

Table 1 presents the number of samples and class definitions of the six land cover classes.

Six target classes were distinguished for the land cover classification: deciduous forest, broadleaf forest, grassland, cropland, build-up areas and water bodies. To receive adequate numbers of trainings samples for the classes cropland, build-up areas and water, the grid was extended to surrounding areas in the north and east of the study area. Only clearly interpretable samples which contain only one class were retained for the training of the classification model. In the end, 797 out of 1360 pixels were useable.

For the tree species classification, additional reference samples were necessary and were derived from forest management data. First, pure stands of the 12 tree species were identified in the forest management maps. Next, one or two Sentinel-2 pixels were chosen in the center of each stand and the correctness of the information was checked using CIR orthoimages (

Figure 3).

In this way, on average 85 reference samples per tree species were distinguished, well distributed over the entire biosphere reserve (

Table 2). The variation in the number of available reference data reflects the difficulties to identify sufficiently large and pure stands for some of the species.

2.3. Sentinel-2 Data Sets

The study area is located in the overlapping area of two Sentinel-2 orbits (122 and 79—

Figure 2), and therefore, the number of acquisitions twice as high as normal in this latitude. For the analysis, all available Sentinel-2 scenes were visually checked. Only cloud-free data were selected. From the 188 scenes acquired between June 2015 to end of 2017, 18 scenes were perfectly useable. Summary information about selected scenes can be found in

Table 3. All scenes were atmospherically corrected using Sen2Cor [

38] Version 2.4 using the data service platform operated by BOKU [

39] on the Earth Observation Data Centre (EODC) [

40]. The 20 m bands B5, B6, B7, B8a, B11 and B12 were resampled to 10 m and the 60 m-bands B1, B9 and B10 were excluded from the analyses.

2.4. Random Forest Classification Approach

For all classifications, the ensemble learning random forest (RF) approach developed by Breiman [

41] was used. The two hyper-parameters

mtry (number of predictors randomly sampled for each node) and

ntree (the number of trees) were set to the square root of available input variables (default) and, to 1000, respectively.

One advantage of the bootstrapping is that it yields relatively unbiased ‘out-of-bag’ (OOB) results, as long as representative reference data are provided [

42]. Another benefit is the computation of importance measures which can be used for the evaluation of the input data and subsequent feature reduction. In this study, a recursive feature selection process using the ‘Mean decrease in Accuracy’ (MDA) was applied similarly to other studies [

18,

43,

44]. More information about the algorithm and its advantages, such as the importance measure for the input variables and the integrated bootstrapping, can be found in the literature [

16,

41,

45,

46].

To classify the six land cover classes, first a model based on all Sentinel-2 datasets was developed using the land cover reference data from the visually interpreted regular grid. The tree species models were developed separately for the broadleaf and coniferous species—for testing purposes we also pooled all tree species together. The tree species classification models were based on the tree species reference data set and only applied to areas previously mapped as broadleaf or coniferous forest.

To find the best combinations for the tree species classification, we tested all possible combinations of the 18 Sentinel-2 scenes. We tested for example 18 individual scenes, 153 combinations of two scenes, 816 of three and so on. In total, 262,143 different models for each of the two forest strata were developed. The training of each model, including the feature selection, took on average about 5 min on a standard PC (CPU i7-2600 3.40 GHz, 16 GB RAM), and therefore, a high-performance computing (HPC) environment was used.

The modeling was done with two data sets: one contains only the 10 spectral bands, the second combines the 10 spectral bands with 28 widely used vegetation indices (

Table A1 in the

Appendix A).

2.5. Input Data Evaluation

The classification models were assessed using the OOB results. Previous studies had demonstrated that the OOB results of RF classifiers compare well against an assessment based on a separate validation data set [

42,

47,

48].

To assess the importance of individual Sentinel-2 bands and acquisition times, the ‘Mean decrease in Accuracy’ (MDA) importance values of the final RF models (after feature selection) were normalized for each model to 1 by dividing all values by the maximum value of the specific model. Variables which were eliminated by the feature selection procedure were assigned an importance value of 0. All normalized values were summed up for all tested combinations and divided by the total number of tested combinations (Equation (1)):

where

IMPi is the normalized and aggregated importance value for variable

i (= one band of one specific Sentinel-2 scene);

MDAji the MDA importance value of variable

i in the model

j;

MDAjmax the maximum MDA importance value in the model

j; and

n the number of models (combinations of Sentinel-2 scenes) considering variable

i (= 131,072).

When evaluating the importance of the spectral bands, models involving the vegetation indices were discarded to avoid skewing the results by the chosen indices. This was deemed particularly important as most indices include the NIR band. However, we applied the evaluation also to the models with vegetation indices to investigate the most important vegetation indices for tree species classification.

2.6. Change Detection

As outlined above, for the tree species classification, data from a three-year period (2015 to 2017) were used. To avoid interference of possibly occurring changes during the three years, a simple change detection was applied. Changes in the forest cover were detected by comparing the NDVI values from the respective August scenes of the years 2015, 2016 and 2017. Based on the difference between the NDVI of the actual and the previous year, pixels with absolute differences of ≤0.05 were flagged as ‘change’. Negative values indicate a decrease in leaf biomass and were interpreted as an indicator of forest management activities such as thinning, harvesting or calamities. This interpretation was cross-checked by visual interpretation of the data sets and consultations with the forest management. All areas where forest management activities were detected were masked out from the tree species map.

5. Conclusions

The introduced Sentinel-2 workflow for tree species classification is robust, cost-efficient and scalable. The workflow was already successfully applied in several test areas across Germany. In the highly diverse Wienerwald biosphere reserve in Austria, the classification method achieved high classification accuracies for most of the 12 investigated tree species. However, a noticeable dependence on tree species, but also on the number and quality of the reference data, was found.

The NIR band is useful to separate the two tree species groups, coniferous and broadleaf trees, but much less so for identifying individual species. For the identification of the seven broadleaf species, the two SWIR bands are the most important. To separate the five individual coniferous species, the highest importance was found for the Red band. The use of additional vegetation indices further improved the performance of the classification models, and is therefore highly recommended.

The sensors on-board of Sentinel-2A and 2B provide rich spectral information in 10 spectral bands. The data are extremely useful for tree species mapping over large areas. The data is freely available, and due to the regular and dense acquisition pattern (five-day), images covering all phenological stages can be acquired and used for the classification. Although a few well-placed acquisitions can possibly yield very good classification results, the easiest way to ensure high classification accuracies is to combine and use all available cloud-free images simultaneously. This avoids the definition of the ‘perfect’ acquisition date(s), which far too often cannot be obtained due to local weather conditions.

With two Sentinel-2 satellites and their high revisit rate, the acquisition of several scenes per year should be possible for most Central European regions. However, especially over mountainous regions such as the Alps, the revisit frequency is probably still not high enough. The high cloudiness also prevents the application of methods for the extraction of land surface phenology (LSP) parameters, and their subsequent use in classification models. Unfortunately, in mountainous regions such as the Alps, microwave sensors are also only of limited use, due to strong terrain effects and radar shadows. Hence, it is requested to further increase the temporal revisit frequency of optical sensors and to make better use of virtual constellations, if possible involving the fleet of commercial VHR satellites.

From a methodological point of view, research should focus on further increasing the number of tree species involved in such classifications, while finding ways to handle strong class imbalances and missing values. The handling of mixed classes should also have high priority, as long as sensor resolution does not permit to resolve individual tree crowns. Ultimately, the full benefits of Earth Observation data will only become visible if such maps are produced and regularly updated at continental scale. This requires progress in terms of data standardization and feature extraction, and implementation of suitable code within HPC environments having direct access to the data storage. More research is warranted to identify the bio-physical factors leading to the observed large variations in species-specific classification accuracies.