Towards Automatic Extraction and Updating of VGI-Based Road Networks Using Deep Learning

Abstract

1. Introduction

- In the second stage, we use the initial road network extracted from VGI data in the first stage to train the CNN architecture. To the best of our knowledge, this is the first attempt to use a CNN architecture with a reduced context size of 8 × 8 pixels for road classification, combining probabilistic pixel-based and patch-based prediction for road network extraction. The small context size reduces the computational load significantly. Furthermore, we post-process the extracted road network to improve its accuracy.

- In the third stage, we connect the isolated road segments to ensure a continuous network, using simple features derived from the roads' spectral, geometric and topological information. The edges of the isolated segments are connected to the closest node in the existing network extracted in the previous stages.

2. Background and Related Works

3. Proposed Methodology

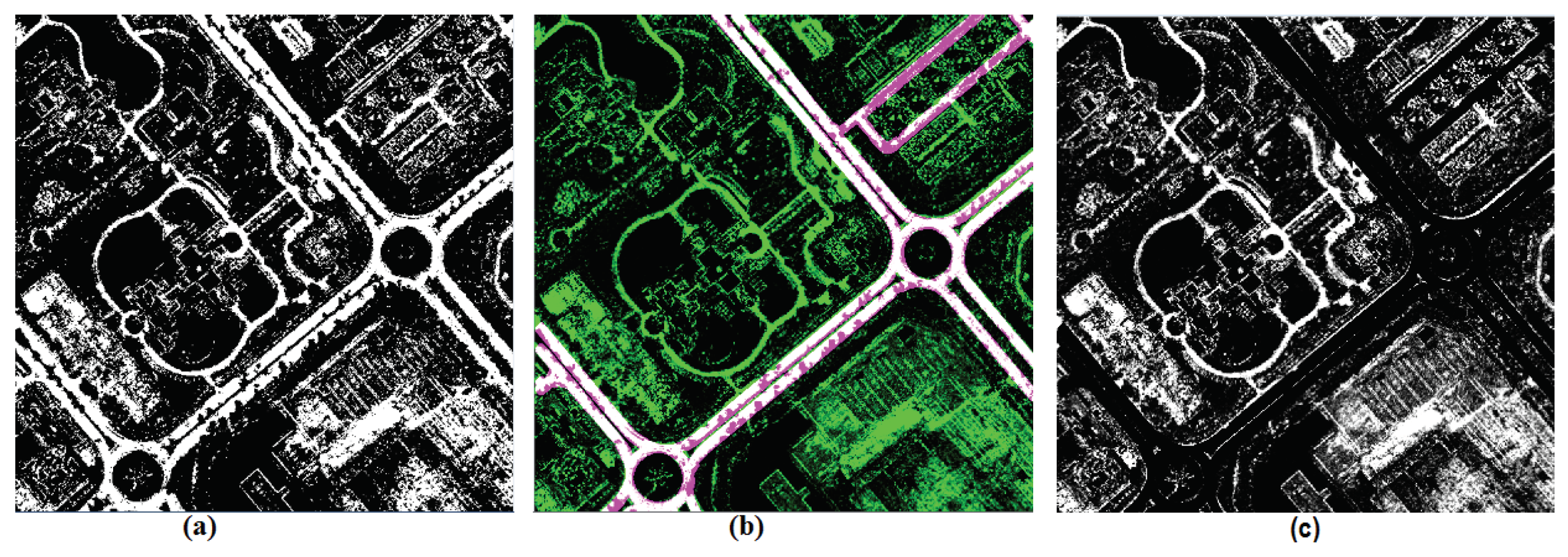

3.1. Stage 1: Training Data Generation from Initial Road Extraction Using VGI

3.2. Stage 2: CNN Approach to Extract Probable Road Segments

3.2.1. CNN Architecture

- CL(N × N, I, F): A convolutional layer with a filter size of N × N, where I is the number of input image channels and F is the number of output channels, which equals the number of filters used. The default stride in both the vertical and horizontal directions is 1.

- M(N × N, S): A max-pooling layer of size N × N with a stride of S.

- FC(I, O): A fully connected layer with I input channels and O output channels.

- LRNL: A local response normalization layer, which sufficiently prevents overfitting without requiring additional dropout or L2 regularization.
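To make the notation concrete, the following minimal sketch traces the spatial size of a feature map as an 8 × 8 patch passes through layers written as CL and M tuples. The layer stack, the channel counts, the absence of zero-padding, and the helper names `conv_out`, `pool_out` and `trace` are all illustrative assumptions; they are not the architecture used in this work.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output width/height of a convolution along one spatial axis."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, window, stride):
    """Output width/height of max-pooling along one axis (no padding)."""
    return (size - window) // stride + 1


def trace(input_size, layers):
    """Return the spatial size after each layer of a hypothetical stack.

    Layers are tuples in the notation of the text:
      ("CL", N, I, F) -> N x N convolution, stride 1
      ("M", N, S)     -> N x N max-pooling with stride S
    """
    sizes = [input_size]
    for kind, *params in layers:
        if kind == "CL":
            n, _in_channels, _out_channels = params
            sizes.append(conv_out(sizes[-1], n))
        elif kind == "M":
            n, s = params
            sizes.append(pool_out(sizes[-1], n, s))
        else:
            raise ValueError(f"unknown layer type: {kind}")
    return sizes


# Hypothetical stack (an assumption, not the paper's network).
layers = [("CL", 3, 4, 32), ("M", 2, 2), ("CL", 2, 32, 64)]
sizes = trace(8, layers)

# Number of inputs a following FC(I, O) layer would need:
# final height * final width * number of output channels of the last CL.
fc_inputs = sizes[-1] * sizes[-1] * 64
```

With such a small input patch, only a few convolution and pooling steps are possible before the feature map shrinks to a single pixel, which is one reason a reduced context size keeps the network small.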

3.2.2. Post-Processing

- Identifying building regions: To remove buildings, we use a segmentation-based approach for detecting building regions [34]. The method partitions the image into small segments and detects buildings as segments that have high contrast with the darkest neighboring segment in the direction of the expected shadow, which is determined by the sun angle.

- Vegetation and Shadows: To avoid confusion between vegetation and shadow regions, we use normalized difference vegetation index (NDVI) values. Shadows are expected to have NDVI values below 0, and vegetation is expected to have values above 0.3. The thresholds were chosen by manual observation across the test images.

- Removing Parking Lots: To remove the remaining non-road artifacts such as parking lots, we analyze the number of branches in the skeletons of the segments that were added to the initial road network extracted using VGI. Road segments are typically smooth and continuous, so their skeletons contain few branches. Areas like parking lots are wider and asymmetric, so their skeletons contain more branch points. We use the ratio of area to branch points as the indicator to discriminate between road and non-road segments, and set the threshold to 0.0025 based on observation of various road segments in the test images.
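The NDVI rule for vegetation and shadows can be sketched as below. NDVI is computed with its standard definition, (NIR − Red) / (NIR + Red), and the thresholds 0 and 0.3 are taken from the text. The function names, the "other" label for values between the two thresholds, and the handling of a zero denominator are assumptions made for this illustration.

```python
def ndvi(nir, red):
    """Normalized difference vegetation index for one pixel."""
    total = nir + red
    if total == 0:
        # Assumption: treat a pixel with no signal in either band as neutral.
        return 0.0
    return (nir - red) / total


def label_pixel(nir, red, shadow_max=0.0, vegetation_min=0.3):
    """Label a pixel using the NDVI thresholds stated in the text."""
    value = ndvi(nir, red)
    if value < shadow_max:
        return "shadow"
    if value > vegetation_min:
        return "vegetation"
    # The text does not say how intermediate values are treated;
    # "other" is a placeholder label.
    return "other"


# Example reflectance values (made up for illustration).
print(label_pixel(nir=0.5, red=0.1))
print(label_pixel(nir=0.1, red=0.3))
print(label_pixel(nir=0.30, red=0.25))
```

Because the thresholds were tuned by manual observation on the test images, they are exposed as parameters rather than hard-coded.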

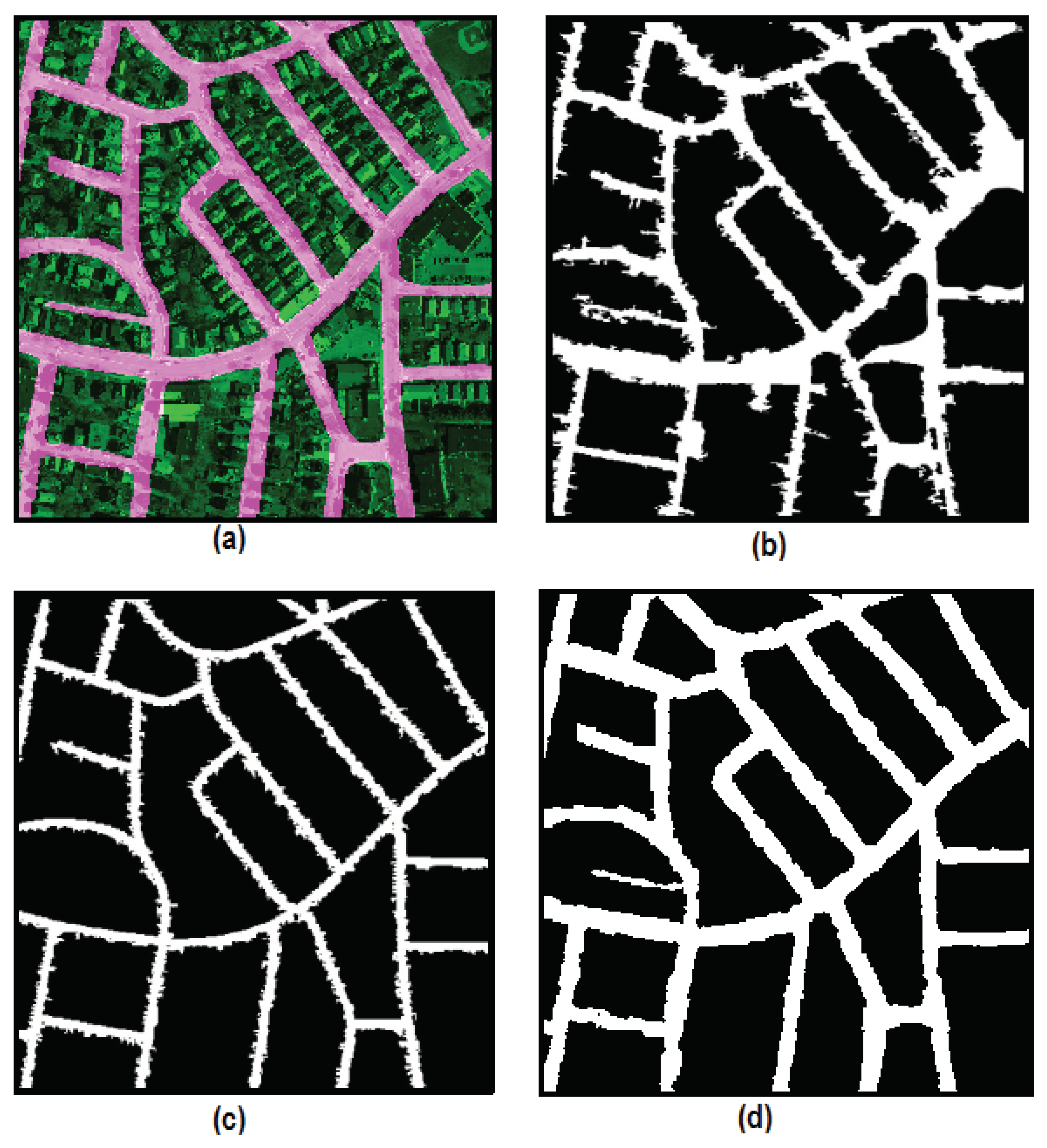
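The skeleton-branch criterion used for removing parking lots relies on counting branch points in a segment's skeleton. The sketch below shows one simple way to do that count, assuming the skeleton is already available as a binary grid. Treating any skeleton pixel with three or more 8-connected skeleton neighbours as a branch point is a common heuristic chosen here for illustration (it can count several adjacent pixels around a single junction), and the function names are hypothetical. How the count is combined with the segment area and thresholded is left as described in the text.

```python
def count_branch_points(skeleton):
    """Count skeleton pixels that have >= 3 skeleton neighbours (8-connectivity)."""
    rows, cols = len(skeleton), len(skeleton[0])
    count = 0
    for r in range(rows):
        for c in range(cols):
            if not skeleton[r][c]:
                continue
            neighbours = sum(
                1
                for dr in (-1, 0, 1)
                for dc in (-1, 0, 1)
                if (dr or dc)
                and 0 <= r + dr < rows
                and 0 <= c + dc < cols
                and skeleton[r + dr][c + dc]
            )
            if neighbours >= 3:
                count += 1
    return count


def segment_area(mask):
    """Area of a segment as its number of foreground pixels."""
    return sum(1 for row in mask for value in row if value)


# A straight, road-like skeleton: no branch points expected.
line = [
    [0, 0, 0, 0, 0],
    [1, 1, 1, 1, 1],
    [0, 0, 0, 0, 0],
]

# A cross-shaped skeleton: the junction produces branch points.
cross = [
    [0, 0, 1, 0, 0],
    [0, 0, 1, 0, 0],
    [1, 1, 1, 1, 1],
    [0, 0, 1, 0, 0],
    [0, 0, 1, 0, 0],
]

line_branches = count_branch_points(line)
cross_branches = count_branch_points(cross)
```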

3.3. Stage 3: Completion of Road Network

- Distance: Within a segment, we assign each node a Euclidean distance cost based on the distance to its nearest neighbor among the nodes of the unconnected segments. The node with the smallest distance cost is the one most likely to be connected to a node in another unconnected segment, thus linking the two segments together.

- Color-segments: This is the second type of disturbance. It represents the segments (obtained from the SLIC image segmentation process) that lie on a straight path between two nodes belonging to different segments.

- Noise: This is the last type of disturbance, defined as the number of edges detected between two nodes belonging to different segments. We identify these edges by applying an edge detection procedure along the straight path between the two nodes.
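The three quantities above can be illustrated with a minimal sketch. It assumes that nodes are (row, column) tuples, that a label map from a segmentation step (such as SLIC) and a binary edge map are already available as nested lists, and that the straight path is sampled by linear interpolation. Counting distinct labels for the color-segment cost and counting edge pixels for the noise cost are interpretations made for this example, and all function names are hypothetical. How the costs are weighted against each other is not covered here.

```python
import math


def closest_node_pair(segment_a, segment_b):
    """Return (distance, node_a, node_b) for the nearest pair of nodes."""
    return min(
        (math.dist(a, b), a, b) for a in segment_a for b in segment_b
    )


def straight_path(start, end):
    """Pixels (row, col) sampled on the straight line from start to end."""
    (r0, c0), (r1, c1) = start, end
    steps = max(abs(r1 - r0), abs(c1 - c0))
    if steps == 0:
        return [start]
    return [
        (round(r0 + (r1 - r0) * t / steps), round(c0 + (c1 - c0) * t / steps))
        for t in range(steps + 1)
    ]


def color_segment_cost(labels, start, end):
    """Number of distinct segmentation labels met on the straight path."""
    return len({labels[r][c] for r, c in straight_path(start, end)})


def noise_cost(edge_map, start, end):
    """Number of edge pixels met on the straight path."""
    return sum(1 for r, c in straight_path(start, end) if edge_map[r][c])


# Toy data (made up): two unconnected segments on a 1 x 5 image strip.
segment_a = [(0, 0), (0, 1)]
segment_b = [(0, 4)]
labels = [[1, 1, 2, 2, 3]]      # hypothetical segmentation labels
edge_map = [[0, 0, 1, 0, 0]]    # hypothetical edge detector output

distance, node_a, node_b = closest_node_pair(segment_a, segment_b)
colors = color_segment_cost(labels, node_a, node_b)
noise = noise_cost(edge_map, node_a, node_b)
```

A candidate link with a short distance, few intervening color segments and few edges along its path is the kind of connection these costs favor.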

4. Experimental Data and Setup

4.1. Datasets

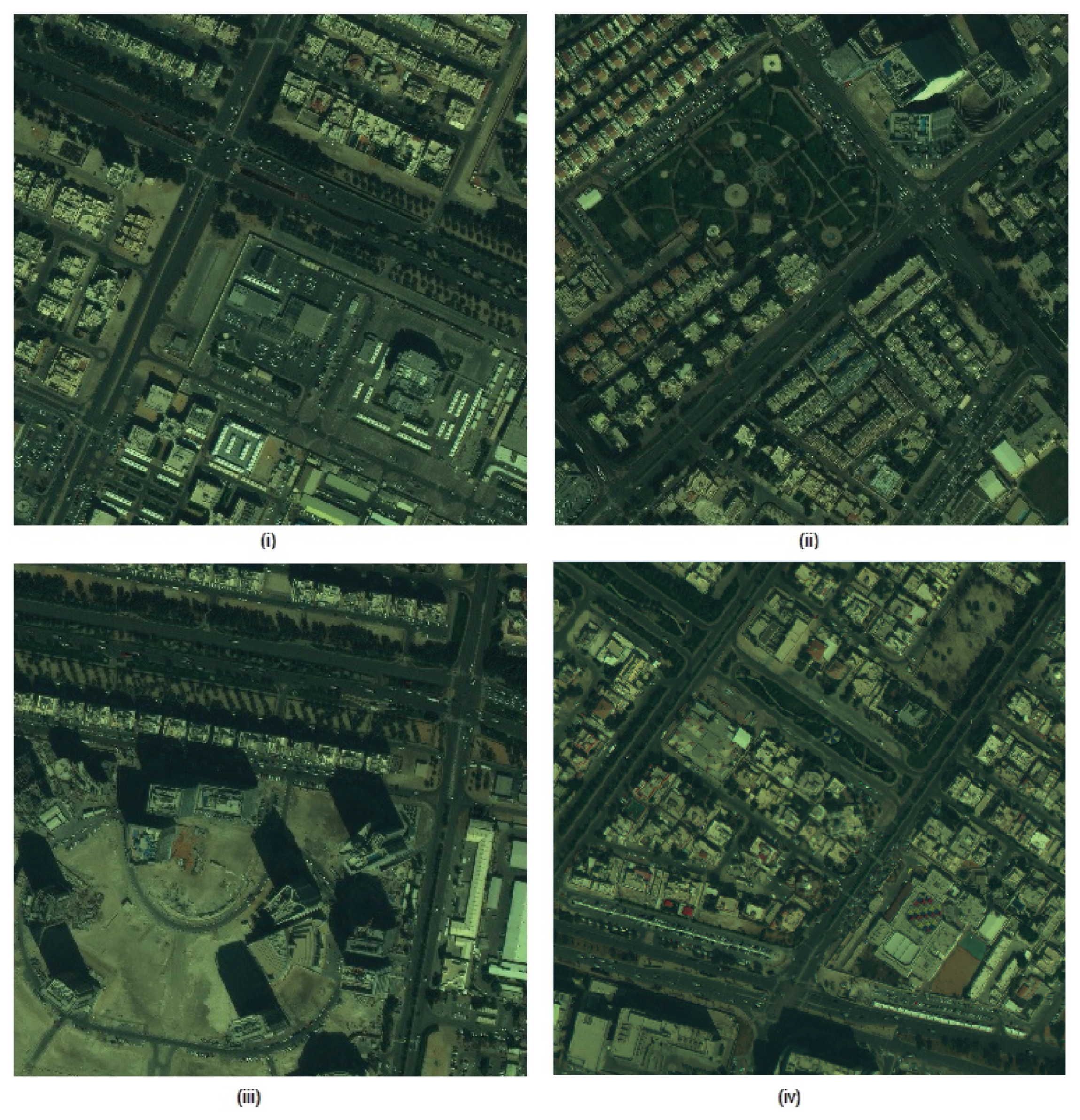

4.1.1. Abu Dhabi Dataset

4.1.2. Massachusetts Dataset

4.2. Experimental Setup

Detection Performance Measures

5. Experimental Results and Discussions

5.1. Results of CNN Plus Post-Processing

5.2. Results of Road Network Completion

5.3. Comparison with Existing Methods

6. Conclusions and Future Works

Author Contributions

Funding

Conflicts of Interest

References

- Manandhar, P.; Marpu, P.R.; Aung, Z. Segmentation based traversing-agent approach for road width extraction from satellite images using volunteered geographic information. Appl. Comput. Inform. 2018.

- Shin, H.C.; Roth, H.R.; Gao, M.; Lu, L.; Xu, Z.; Nogues, I.; Yao, J.; Mollura, D.; Summers, R.M. Deep convolutional neural networks for computer-aided detection: CNN architectures, dataset characteristics and transfer learning. IEEE Trans. Med. Imaging 2016, 35, 1285–1298.

- Jiao, L.; Liang, M.; Chen, H.; Yang, S.; Liu, H.; Cao, X. Deep fully convolutional network-based spatial distribution prediction for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 5585–5599.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Proceedings of the 26th Annual Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Cheng, G.; Han, J.; Lu, X. Remote sensing image scene classification: Benchmark and state of the art. Proc. IEEE 2017, 105, 1865–1883.

- Chaib, S.; Liu, H.; Gu, Y.; Yao, H. Deep feature fusion for VHR remote sensing scene classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 4775–4784.

- Xia, G.S.; Hu, J.; Hu, F.; Shi, B.; Bai, X.; Zhong, Y.; Zhang, L.; Lu, X. AID: A benchmark data set for performance evaluation of aerial scene classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3965–3981.

- Scott, G.J.; England, M.R.; Starms, W.A.; Marcum, R.A.; Davis, C.H. Training deep convolutional neural networks for land-cover classification of high-resolution imagery. IEEE Geosci. Remote Sens. Lett. 2017, 14, 549–553.

- Liu, H.; Yang, S.; Gou, S.; Zhu, D.; Wang, R.; Jiao, L. Polarimetric SAR feature extraction with neighborhood preservation-based deep learning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 1456–1466.

- Geng, J.; Wang, H.; Fan, J.; Ma, X. Deep supervised and contractive neural network for SAR image classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 2442–2459.

- Chen, Y.; Jiang, H.; Li, C.; Jia, X.; Ghamisi, P. Deep feature extraction and classification of hyperspectral images based on convolutional neural networks. IEEE Trans. Geosci. Remote Sens. 2016, 54, 6232–6251.

- Cheng, G.; Zhou, P.; Han, J. Learning rotation-invariant convolutional neural networks for object detection in VHR optical remote sensing images. IEEE Trans. Geosci. Remote Sens. 2016, 54, 7405–7415.

- Long, Y.; Gong, Y.; Xiao, Z.; Liu, Q. Accurate object localization in remote sensing images based on convolutional neural networks. IEEE Trans. Geosci. Remote Sens. 2017, 55, 2486–2498.

- Kim, P. Convolutional neural network. In MATLAB Deep Learning; Apress: New York, NY, USA, 2017; pp. 121–147.

- Paisitkriangkrai, S.; Sherrah, J.; Janney, P.; Van-Den Hengel, A. Effective semantic pixel labelling with convolutional networks and conditional random fields. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Boston, MA, USA, 7–12 June 2015; pp. 36–43.

- Sherrah, J. Fully convolutional networks for dense semantic labelling of high-resolution aerial imagery. arXiv 2016, arXiv:1606.02585.

- Jiang, Q.; Cao, L.; Cheng, M.; Wang, C.; Li, J. Deep neural networks-based vehicle detection in satellite images. In Proceedings of the 2015 International Symposium on Bioelectronics and Bioinformatics (ISBB), Beijing, China, 14–17 October 2015; pp. 184–187.

- Längkvist, M.; Kiselev, A.; Alirezaie, M.; Loutfi, A. Classification and segmentation of satellite orthoimagery using convolutional neural networks. Remote Sens. 2016, 8, 329.

- Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.S.; Zhang, L.; Xu, F.; Fraundorfer, F. Deep learning in remote sensing: A comprehensive review and list of resources. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36.

- Noda, K.; Yamaguchi, Y.; Nakadai, K.; Okuno, H.G.; Ogata, T. Audio-visual speech recognition using deep learning. Appl. Intell. 2015, 42, 722–737.

- Young, T.; Hazarika, D.; Poria, S.; Cambria, E. Recent trends in deep learning based natural language processing. IEEE Comput. Intell. Mag. 2018, 13, 55–75.

- Noh, H.; Araujo, A.; Sim, J.; Weyand, T.; Han, B. Large-scale image retrieval with attentive deep local features. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 3456–3465.

- Wu, G.; Lu, W.; Gao, G.; Zhao, C.; Liu, J. Regional deep learning model for visual tracking. Neurocomputing 2016, 175, 310–323.

- Zhang, Z.; Liu, Q.; Wang, Y. Road extraction by deep residual u-net. IEEE Geosci. Remote Sens. Lett. 2018, 15, 749–753.

- Kahraman, I.; Karas, I.; Akay, A. Road Extraction Techniques from Remote Sensing Images: A Review. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, 42, 339–342.

- Li, P.; Zang, Y.; Wang, C.; Li, J.; Cheng, M.; Luo, L.; Yu, Y. Road network extraction via deep learning and line integral convolution. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 1599–1602.

- Bastani, F.; He, S.; Abbar, S.; Alizadeh, M.; Balakrishnan, H.; Chawla, S.; Madden, S.; DeWitt, D. RoadTracer: Automatic Extraction of Road Networks From Aerial Images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018.

- Xia, W.; Zhang, Y.Z.; Liu, J.; Luo, L.; Yang, K. Road extraction from high resolution image with deep convolution network—A case study of GF-2 image. In Proceedings of the 2nd International Electronic Conference on Remote Sensing, 22 March–5 April 2018; Volume 2–7, p. 325.

- Saito, S.; Yamashita, T.; Aoki, Y. Multiple object extraction from aerial imagery with convolutional neural networks. Electron. Imaging 2016, 2016, 1–9.

- Alshehhi, R.; Marpu, P.R.; Woon, W.L.; Dalla Mura, M. Simultaneous extraction of roads and buildings in remote sensing imagery with convolutional neural networks. ISPRS J. Photogramm. Remote Sens. 2017, 130, 139–149.

- Mnih, V. Machine Learning for Aerial Image Labeling. Ph.D. Thesis, University of Toronto, Toronto, ON, Canada, 2013.

- Shi, Q.; Liu, X.; Li, X. Road detection from remote sensing images by generative adversarial networks. IEEE Access 2018, 6, 25486–25494.

- Manandhar, P.; Aung, Z.; Marpu, P.R. Segmentation based building detection in high resolution satellite images. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; pp. 3783–3786.

- Maurer, T. How to pan-sharpen images using the Gram-Schmidt pan-sharpen method: A recipe. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2013, 1, W1.

- Mnih, V.; Hinton, G.E. Learning to detect roads in high-resolution aerial images. In Proceedings of the 2010 European Conference on Computer Vision (ECCV), Heraklion, Greece, 5–11 September 2010; pp. 210–223.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Li, M.; Stein, A.; Bijker, W.; Zhan, Q. Region-based urban road extraction from VHR satellite images using binary partition tree. Int. J. Appl. Earth Obs. Geoinf. 2016, 44, 217–225.

- Sujatha, C.; Selvathi, D. Connected component-based technique for automatic extraction of road centerline in high resolution satellite images. EURASIP J. Image Video Process. 2015, 2015, 8.

- Maurya, R.; Gupta, P.R.; Shukla, A.S. Road extraction using k-means clustering and morphological operations. In Proceedings of the 2011 IEEE International Conference on Image Information Processing (ICIIP), Shimla, India, 3–5 November 2011; pp. 1–6.

- Shu, Y. Deep Convolutional Neural Networks for Object Extraction from High Spatial Resolution Remotely Sensed Imagery. Ph.D. Thesis, University of Waterloo, Waterloo, ON, Canada, 2014.

| | First Stage Output | | | | | Output of Proposed Approach | | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| Measure | Img1 | Img2 | Img3 | Img4 | Img5 | Img1 | Img2 | Img3 | Img4 | Img5 |
| Precision | 0.84 | 0.79 | 0.87 | 0.88 | 0.84 | 0.86 | 0.85 | 0.89 | 0.96 | 0.87 |
| Recall | 0.77 | 0.88 | 0.70 | 0.87 | 0.83 | 0.94 | 0.92 | 0.92 | 0.96 | 0.96 |
| Quality | 0.87 | 0.81 | 0.94 | 0.94 | 0.91 | 0.94 | 0.84 | 0.96 | 0.98 | 0.97 |
| F1-score | 0.81 | 0.83 | 0.88 | 0.90 | 0.85 | 0.88 | 0.87 | 0.90 | 0.95 | 0.91 |

| Datasets | Abu Dhabi | Massachusetts | |
|---|---|---|---|
| Methods/Measures | Correctness (%) | Completeness (%) | Correctness (%) |
| Maurya et al. [40] | N/A | 82.3 ± 4.7 | 70.5 ± 4.3 |
| Sujatha and Selvathi [39] | N/A | 83.5 ± 4.3 | 76.6 ± 4.5 |
| Mnih [32] | 78.30 | N/A | 90.1 |
| Shu [41] | 78.2 | N/A | 87.1 |
| Li et al. [38] | 72.25 | N/A | N/A |
| ** Saito et al. [30] | 79.00 | 90.5 | N/A |
| Alshehhi et al. [31] | 80.9 | 92.5 ± 3.2 | 91.7 ± 3.0 |
| Proposed method | 88.61 | 90.8 ± 1.9 | 94.4 ± 3.1 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Manandhar, P.; Marpu, P.R.; Aung, Z.; Melgani, F. Towards Automatic Extraction and Updating of VGI-Based Road Networks Using Deep Learning. Remote Sens. 2019, 11, 1012. https://doi.org/10.3390/rs11091012
