Building Extraction from UAV Images Jointly Using 6D-SLIC and Multiscale Siamese Convolutional Networks

Abstract

1. Introduction

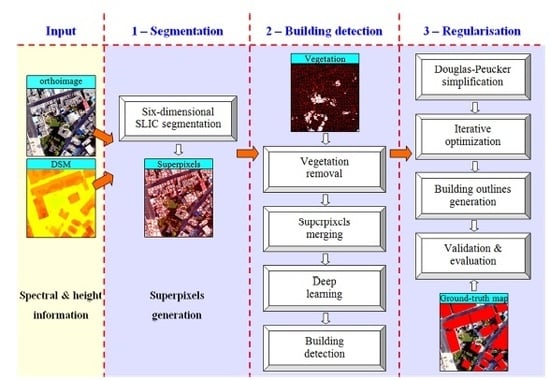

2. Proposed Method

2.1. 6D-SLIC-Based Superpixel Segmentation

Algorithm 1: 6D-SLIC segmentation

Input: 2D image and […].

Parameters: minimum area, ground resolution, compactness, weight, maximum number of iterations, number of iterations, minimum distance.

1. Compute approximately equally sized superpixels.
2. Compute every grid interval.
3. Initialize each cluster center.
4. Perturb each cluster center in a 3×3 neighborhood to the lowest 3D gradient position.
5. repeat
   - for each cluster center do
     - Assign the pixels to the cluster center based on a new distance measure (Equation (2)).
   - end for
   - Update all cluster centers based on Equations (5) and (6).
   - Compute residual error between the previous centers and recomputed centers.
   - Compute […].
6. until […] or […]
7. Enforce connectivity.
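The loop structure of Algorithm 1 can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the distance is the familiar SLIC-style weighted sum of feature and spatial distances, used here as a stand-in for Equation (2); the center update is a plain mean, standing in for Equations (5) and (6); and the gradient-based perturbation and the connectivity enforcement are omitted. The function name `slic_like` and the parameters `compactness`, `max_iter`, and `tol` are hypothetical.

```python
import numpy as np


def slic_like(features, k, compactness=10.0, max_iter=10, tol=1e-3):
    """Cluster an (H, W, D) feature image into roughly k superpixels.

    Returns (labels, centers, n_iter). Every pixel is compared with every
    center, which is only practical for small images.
    """
    h, w, d = features.shape
    step = max(int(np.sqrt(h * w / k)), 1)  # grid interval between seeds

    # Seed the cluster centers on a regular grid: [features..., y, x].
    ys = np.arange(step // 2, h, step)
    xs = np.arange(step // 2, w, step)
    cy, cx = np.meshgrid(ys, xs, indexing="ij")
    cy, cx = cy.ravel(), cx.ravel()
    centers = np.column_stack([features[cy, cx], cy, cx]).astype(float)

    # Flatten all pixels into the same [features..., y, x] layout.
    yy, xx = np.mgrid[0:h, 0:w]
    pix = np.column_stack(
        [features.reshape(-1, d), yy.ravel(), xx.ravel()]
    ).astype(float)

    labels = np.zeros(h * w, dtype=int)
    n_iter = 0
    for n_iter in range(1, max_iter + 1):
        # Assumed distance: squared feature distance plus a
        # compactness-weighted squared spatial distance.
        d_feat = ((pix[:, None, :d] - centers[None, :, :d]) ** 2).sum(-1)
        d_xy = ((pix[:, None, d:] - centers[None, :, d:]) ** 2).sum(-1)
        dist = d_feat + (compactness / step) ** 2 * d_xy
        labels = dist.argmin(axis=1)

        # Assumed update: each center moves to the mean of its members.
        new_centers = centers.copy()
        for j in range(len(centers)):
            members = pix[labels == j]
            if len(members):
                new_centers[j] = members.mean(axis=0)

        # Residual error between previous and recomputed centers.
        residual = np.linalg.norm(new_centers - centers, axis=1).mean()
        centers = new_centers
        if residual <= tol:
            break

    return labels.reshape(h, w), centers, n_iter


# Usage on a small synthetic six-channel feature image.
rng = np.random.default_rng(0)
demo = rng.random((20, 20, 6))
demo_labels, demo_centers, demo_iters = slic_like(demo, k=16)
```

The loop stops either when the centers have essentially stopped moving or when the iteration budget is exhausted, which mirrors the two-condition termination in the algorithm listing.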

2.2. Vegetation Removal

2.3. Building Detection Using MSCNs

2.4. Building Outline Regularization

3. Experimental Evaluation and Discussion

3.1. Data Description

3.2. Evaluation Criteria of Building Extraction Performance
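Building extraction results are commonly scored from true-positive, false-positive, false-negative, and true-negative counts. The sketch below assumes the standard definitions of completeness, correctness, quality, and overall accuracy (OA); these names and formulas are assumptions made for illustration and are not taken from this text. The function names are hypothetical.

```python
def building_metrics(tp, fp, fn, tn):
    """Return (completeness, correctness, quality, overall_accuracy).

    Assumed standard definitions:
      completeness = TP / (TP + FN)
      correctness  = TP / (TP + FP)
      quality      = TP / (TP + FP + FN)
      OA           = (TP + TN) / (TP + FP + FN + TN)
    """
    completeness = tp / (tp + fn)
    correctness = tp / (tp + fp)
    quality = tp / (tp + fp + fn)
    overall_accuracy = (tp + tn) / (tp + fp + fn + tn)
    return completeness, correctness, quality, overall_accuracy


def quality_from(completeness, correctness):
    """Derive quality from the other two: 1/Q = 1/Comp + 1/Corr - 1."""
    product = completeness * correctness
    return product / (completeness + correctness - product)


# Usage with made-up counts (not data from the paper).
comp, corr, qual, oa = building_metrics(tp=80, fp=10, fn=20, tn=90)
```

Under these assumed definitions, quality is fully determined by the other two measures. The values 0.9232, 0.9349, and 0.8674 listed for SCNs3 on the training set are consistent with that identity.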

3.3. MSCNs Training

3.4. Comparisons of MSCNs and Random Forest Classifier

3.5. Comparisons of Building Extraction Using Different Parameters

3.6. Comparisons of the Proposed Method and State-of-the-Art Methods

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Dataset | Metric | SLIC | ERS | SEEDS | preSLIC | LSC | 6D-SLIC |
|---|---|---|---|---|---|---|---|
| (1) | | 0.7487 | 0.7976 | 0.7161 | 0.8539 | 0.9039 | 0.9076 |
| | | 0.0412 | 0.0640 | 0.0378 | 0.0450 | 0.0385 | 0.0231 |
| (2) | | 0.7152 | 0.6419 | 0.7070 | 0.5769 | 0.8443 | 0.9286 |
| | | 0.1038 | 0.1213 | 0.1027 | 0.1407 | 0.0654 | 0.0443 |
| (3) | | 0.7323 | 0.8597 | 0.8608 | 0.8669 | 0.8912 | 0.9629 |
| | | 0.0681 | 0.0415 | 0.0522 | 0.0539 | 0.0625 | 0.0311 |
| (4) | | 0.7323 | 0.7918 | 0.8810 | 0.8410 | 0.9313 | 0.9795 |
| | | 0.0712 | 0.0304 | 0.0413 | 0.0497 | 0.0395 | 0.0325 |

| Model | Dataset | | | | OA |
|---|---|---|---|---|---|
| SCNs3 | Training | 0.9232 | 0.9349 | 0.8674 | 0.9295 |
| | Test | 0.8824 | 0.9230 | 0.8219 | 0.9044 |
| SCNs5 | Training | 0.9440 | 0.9584 | 0.9069 | 0.9515 |
| | Test | 0.9088 | 0.9385 | 0.8577 | 0.9246 |
| SCNs7 | Training | 0.9530 | 0.9686 | 0.9244 | 0.9610 |
| | Test | 0.9226 | 0.9553 | 0.8844 | 0.9397 |
| MSCNs | Training | 0.9670 | 0.9796 | 0.9479 | 0.9735 |
| | Test | 0.9584 | 0.9689 | 0.9298 | 0.9638 |
| MSCNs(layer+) | Training | 0.9672 | 0.9798 | 0.9483 | 0.9736 |
| | Test | 0.9594 | 0.9693 | 0.9311 | 0.9645 |

| Feature | Parameters | Description |
|---|---|---|
| Color histogram | quantization_level = 8 | Level of quantization applied to each image. |
| | color_space = “lab” | Image is converted into the Lab color space. |
| Bag of SIFT | vocab_size = 50 | Vocabulary size is set to 50. |
| | dimension = 128 | Descriptor dimension is set to 128. |
| | smooth_sigma = 1 | Sigma for Gaussian filtering is set to 1. |
| | color_space = “grayscale” | Image is converted into grayscale. |
| HOG | vocab_size = 50 | Vocabulary size is set to 50. |
| | cell_size = 8 | Cell size is set to 8. |
| | smooth_sigma = 1 | Sigma for Gaussian filtering is set to 1. |
| | color_space = “rgb” | RGB color space is used. |

| Dataset | Metric | SLIC | ERS | SEEDS | preSLIC | LSC | Ours |
|---|---|---|---|---|---|---|---|
| Dataset1 | | 0.8833 | 0.8933 | 0.8803 | 0.9113 | 0.9153 | 0.9421 |
| | | 0.8927 | 0.9027 | 0.9127 | 0.8977 | 0.9143 | 0.9650 |
| | | 0.7986 | 0.8148 | 0.8119 | 0.8256 | 0.8430 | 0.9109 |
| Dataset2 | | 0.8994 | 0.9094 | 0.8964 | 0.9304 | 0.9315 | 0.9583 |
| | | 0.8907 | 0.9107 | 0.8804 | 0.8960 | 0.9220 | 0.9675 |
| | | 0.8001 | 0.8349 | 0.7991 | 0.8397 | 0.8635 | 0.9285 |
| Dataset3 | | 0.8104 | 0.8204 | 0.8077 | 0.8414 | 0.8425 | 0.8890 |
| | | 0.8213 | 0.8413 | 0.8111 | 0.8266 | 0.8526 | 0.9286 |
| | | 0.6889 | 0.7105 | 0.6798 | 0.7152 | 0.7354 | 0.8321 |
| Dataset4 | | 0.8317 | 0.8417 | 0.8280 | 0.8667 | 0.8628 | 0.9016 |
| | | 0.8446 | 0.8476 | 0.8448 | 0.8493 | 0.8754 | 0.9101 |
| | | 0.7213 | 0.7311 | 0.7187 | 0.7512 | 0.7684 | 0.8279 |

| Dataset | Metric | SLIC | ERS | SEEDS | preSLIC | LSC | Ours |
|---|---|---|---|---|---|---|---|
| Dataset1 | | 0.9233 | 0.9243 | 0.8943 | 0.9223 | 0.9233 | 0.9611 |
| | | 0.8969 | 0.9167 | 0.9237 | 0.9119 | 0.9273 | 0.9656 |
| | | 0.8347 | 0.8527 | 0.8328 | 0.8468 | 0.8609 | 0.9293 |
| Dataset2 | | 0.9194 | 0.9364 | 0.9165 | 0.9514 | 0.9495 | 0.9683 |
| | | 0.8929 | 0.9227 | 0.8944 | 0.9102 | 0.9330 | 0.9679 |
| | | 0.8281 | 0.8683 | 0.8270 | 0.8698 | 0.8889 | 0.9382 |
| Dataset3 | | 0.8334 | 0.8474 | 0.8278 | 0.8624 | 0.8605 | 0.9190 |
| | | 0.8393 | 0.8533 | 0.8251 | 0.8408 | 0.8636 | 0.9406 |
| | | 0.7187 | 0.7396 | 0.7042 | 0.7413 | 0.7575 | 0.8740 |
| Dataset4 | | 0.8577 | 0.8687 | 0.8421 | 0.8897 | 0.8838 | 0.9416 |
| | | 0.8756 | 0.8696 | 0.8638 | 0.8675 | 0.9045 | 0.9321 |
| | | 0.7645 | 0.7685 | 0.7434 | 0.7833 | 0.8084 | 0.8876 |

| Dataset | Metric | Dai | FCN | U-Net | Ours |
|---|---|---|---|---|---|
| Dataset1 | | 0.7931 | 0.9306 | 0.9523 | 0.9611 |
| | | 0.9301 | 0.8593 | 0.9547 | 0.9656 |
| | | 0.7485 | 0.8075 | 0.9112 | 0.9293 |
| Dataset2 | | 0.7971 | 0.9484 | 0.9566 | 0.9683 |
| | | 0.9505 | 0.9533 | 0.9587 | 0.9679 |
| | | 0.7653 | 0.9063 | 0.9187 | 0.9382 |
| Dataset3 | | 0.7471 | 0.8684 | 0.8836 | 0.9190 |
| | | 0.8805 | 0.8833 | 0.9005 | 0.9406 |
| | | 0.6783 | 0.7790 | 0.8050 | 0.8740 |
| Dataset4 | | 0.7431 | 0.8506 | 0.8793 | 0.9416 |
| | | 0.8601 | 0.7893 | 0.8965 | 0.9321 |
| | | 0.6630 | 0.6932 | 0.7983 | 0.8876 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


He, H.; Zhou, J.; Chen, M.; Chen, T.; Li, D.; Cheng, P. Building Extraction from UAV Images Jointly Using 6D-SLIC and Multiscale Siamese Convolutional Networks. Remote Sens. 2019, 11, 1040. https://doi.org/10.3390/rs11091040
