TUM-MLS-2016: An Annotated Mobile LiDAR Dataset of the TUM City Campus for Semantic Point Cloud Interpretation in Urban Areas

Abstract

1. Introduction

2. Benchmark Datasets from MLS Point Clouds for Semantic Interpretation

- The Oakland 3D dataset [7] is one of the earliest publicly accessible MLS datasets for semantic labeling. It was acquired with a side-looking SICK LMS sensor in push-broom mode around the Carnegie Mellon University campus in Oakland, Pittsburgh, PA. The dataset is split by default into training, validation, and test parts, with about 1.6 million points in total. All points were labeled with 44 object classes, but only 5 of these classes are used for evaluation.

- The Sydney Urban Objects dataset [14] contains a variety of common urban road objects. It was collected in the CBD of Sydney, Australia, with a Velodyne HDL-64E LiDAR sensor and consists of 631 individual scans. All points were labeled with four object classes: vehicles, pedestrians, traffic signs, and trees. It was designed as an evaluation dataset for matching and classification algorithms, with large variability in viewpoint and occlusion.

- The iQmulus dataset [15] is another early MLS dataset, published for the IQmulus and TerraMobilita Contest. It was acquired in the 6th district of Paris by the Stereopolis II system with a Riegl LMS-Q120i LiDAR sensor. The entire dataset contains more than 300 million points, labeled with 22 object classes. However, only a 200 m long subset, comprising 12 million points of 8 classes, is publicly available for evaluation.

- Paris-Lille-3D [9] is a recently published MLS dataset for both semantic labeling and instance segmentation. It was acquired in the streets of Paris and Lille by an MLS system with a Velodyne HDL-32E LiDAR sensor. The entire dataset contains more than 140 million points, covering approximately 2 km of roadways. All points were labeled with 50 object classes. For the public benchmark, labels of 9 classes are provided. In addition to point-wise labels, individual objects such as cars and trees are segmented as instances for evaluation.

- The SemanticKITTI dataset [16] is one of the newest publicly accessible MLS datasets for semantic segmentation. It was created by annotating the well-known KITTI dataset [17]. It contains about 4.5 billion points, covering 40 km of roadways, and is provided as a sequence of scans. The points of each scan were labeled with 25 classes for evaluation.

- Toronto-3D [10] is a recent MLS dataset for semantic labeling. It was acquired on Avenue Road in Toronto, Canada, by a vehicle-mounted MLS system with a 32-line LiDAR sensor. It contains approximately 78.3 million points, covering approximately 1 km of roadways. All points were labeled with 7 object classes plus 1 class for unclassified points. The dataset is split by default into four parts, each covering about 250 m of road. For evaluation, any one part can in principle be used as test data and the remaining parts as training data, or vice versa.

- The Daimler urban segmentation dataset [11] is not an MLS dataset but is still related, as it provides 3D information: it consists of 5000 rectified stereo image pairs, 500 of which come with pixel-level semantic annotations in five classes. Dense disparity maps computed using semi-global matching are provided as a reference.

- Audi’s recent A2D2 dataset [12] is provided for research in the context of autonomous driving. It was acquired in three cities in southern Germany: Gaimersheim, Ingolstadt, and Munich. In total, six cameras and five Velodyne VLP-16 LiDAR sensors were used. 41,277 images have semantic and instance segmentation labels for 38 categories. The point cloud annotation is generated by projecting the points onto the 38,481 semantically labeled images using the calibrated relative position and orientation of the sensors.

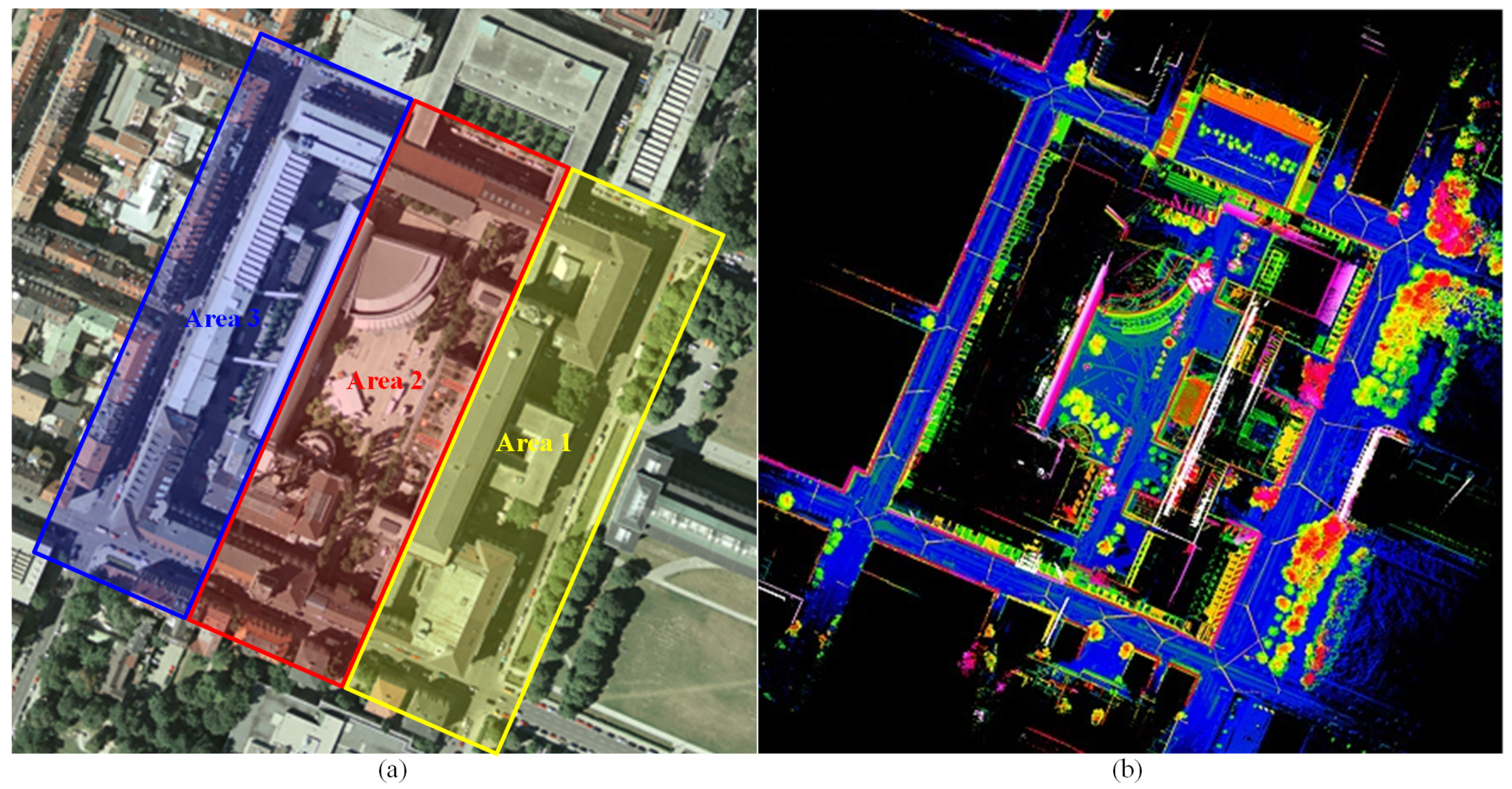

3. TUM-City-Campus MLS Dataset

3.1. Data Acquisition, Preparation, and Annotation

3.2. Benchmark for Semantic Labeling

3.3. Annotated Data for Instance Segmentation

3.4. Annotated Data for Single 360° Laser Scans

4. Evaluation

4.1. Baselines of Semantic Labeling

- PointNet [23]: PointNet is a neural network that processes point clouds directly and respects the permutation invariance of the input points. It provides a unified architecture for applications ranging from object classification to part segmentation and scene semantic parsing.

- PointNet++ [24]: PointNet learns global features from raw point clouds with MLPs. PointNet++ applies PointNet to the local neighborhood of each point to capture local features and uses a hierarchical approach to capture both local and global features.

- Detrended geometric features and graph-based optimization (DEGO) [25]: This is a conventional method based on handcrafted features. It uses eigenvalue-based geometric features [5] with a detrended feature enhancement strategy and a random forest classifier. Graph-structured optimization is applied as post-processing to refine the initial labels.

- Hierarchical deep feature learning (HDL) [13]: This is a deep feature learning method based on the original PointNet++ [24], in which hierarchical data augmentation is used to create multi-scale point sets as input. Point sets subdivided at various scales contain different levels of contextual information. Their features are concatenated into a multi-scale deep feature vector, which is then classified by a random forest. The joint manifold-based embedding (JME) and global graph-based optimization (GGO) used in [13] are not included here.
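To make the eigenvalue-based geometric features used by the DEGO baseline more concrete, the following is a minimal sketch of the commonly used linearity, planarity, and sphericity features computed from the covariance matrix of a local point neighborhood. It is an illustration under stated assumptions, not the implementation of [25]: the function name `eigen_features` and the synthetic test patches are hypothetical, and the detrending step and the random forest classifier are not shown.

```python
import numpy as np


def eigen_features(neighborhood):
    """Linearity, planarity and sphericity of an (N, 3) point neighborhood.

    Hypothetical helper for illustration; detrending is NOT included.
    """
    pts = np.asarray(neighborhood, dtype=float)
    centered = pts - pts.mean(axis=0)
    cov = centered.T @ centered / len(pts)  # 3x3 covariance matrix
    # eigvalsh returns the eigenvalues of a symmetric matrix in ascending
    # order; clip tiny negative values caused by round-off.
    l3, l2, l1 = np.clip(np.linalg.eigvalsh(cov), 0.0, None)
    if l1 == 0.0:
        raise ValueError("degenerate neighborhood: all points coincide")
    return {
        "linearity": (l1 - l2) / l1,
        "planarity": (l2 - l3) / l1,
        "sphericity": l3 / l1,
    }


# Synthetic neighborhoods (assumed data, not taken from the dataset).
rng = np.random.default_rng(0)
plane = np.column_stack(
    [rng.uniform(-1, 1, 500), rng.uniform(-1, 1, 500), np.zeros(500)]
)
line = np.column_stack([np.linspace(0, 1, 100), np.zeros(100), np.zeros(100)])

plane_features = eigen_features(plane)
line_features = eigen_features(line)
```

By construction the three features sum to one, so a planar patch such as a facade yields a planarity close to one, while a pole-like neighborhood yields a linearity close to one.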

4.2. Evaluation Metrics

5. Experiments and Results

5.1. Training Settings

5.2. Results and Discussion

6. Conclusions

- The creation of benchmark datasets is essential for assessing the performance of newly developed algorithms and methods. The analysis of our proposed large-scale annotated dataset has shown its potential for evaluating semantic interpretation in complex urban scenarios.

- Experiments have validated the feasibility and quality of the dataset for semantic labeling. The comparison of methods following different strategies also reveals the importance of considering scale in deep learning-based feature descriptions.

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Weinmann, M.; Schmidt, A.; Mallet, C.; Hinz, S.; Rottensteiner, F.; Jutzi, B. Contextual classification of point cloud data by exploiting individual 3D neighbourhoods. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 2, 271–278.

- Yang, B.; Dong, Z.; Zhao, G.; Dai, W. Hierarchical extraction of urban objects from mobile laser scanning data. ISPRS J. Photogramm. Remote Sens. 2015, 99, 45–57.

- Yu, Y.; Li, J.; Guan, H.; Jia, F.; Wang, C. Learning hierarchical features for automated extraction of road markings from 3-D mobile LiDAR point clouds. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 709–726.

- Gehrung, J.; Hebel, M.; Arens, M.; Stilla, U. An approach to extract moving objects from MLS data using a volumetric background representation. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 4, 107.

- Weinmann, M.; Jutzi, B.; Hinz, S.; Mallet, C. Semantic point cloud interpretation based on optimal neighborhoods, relevant features and efficient classifiers. ISPRS J. Photogramm. Remote Sens. 2015, 105, 286–304.

- Xie, Y.; Tian, J.; Zhu, X. Linking Points With Labels in 3D: A Review of Point Cloud Semantic Segmentation. IEEE Geosci. Remote Sens. Mag. 2020.

- Munoz, D.; Bagnell, J.A.; Vandapel, N.; Hebert, M. Contextual classification with functional Max-Margin Markov networks. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 975–982.

- Hackel, T.; Savinov, N.; Ladicky, L.; Wegner, J.D.; Schindler, K.; Pollefeys, M. Semantic3D.net: A new large-scale point cloud classification benchmark. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, IV-1-W1, 91–98.

- Roynard, X.; Deschaud, J.E.; Goulette, F. Paris-Lille-3D: A large and high-quality ground-truth urban point cloud dataset for automatic segmentation and classification. Int. J. Robot. Res. 2018, 37, 545–557.

- Tan, W.; Qin, N.; Ma, L.; Li, Y.; Du, J.; Cai, G.; Yang, K.; Li, J. Toronto-3D: A Large-scale Mobile LiDAR Dataset for Semantic Segmentation of Urban Roadways. arXiv 2020, arXiv:2003.08284.

- Scharwächter, T.; Enzweiler, M.; Franke, U.; Roth, S. Efficient multi-cue scene segmentation. In German Conference on Pattern Recognition; Springer: Berlin, Germany, 2013; pp. 435–445.

- Geyer, J.; Kassahun, Y.; Mahmudi, M.; Ricou, X.; Durgesh, R.; Chung, A.S.; Hauswald, L.; Pham, V.H.; Mühlegg, M.; Dorn, S.; et al. A2D2: Audi Autonomous Driving Dataset. arXiv 2020, arXiv:2004.06320.

- Huang, R.; Xu, Y.; Hong, D.; Yao, W.; Ghamisi, P.; Stilla, U. Deep point embedding for urban classification using ALS point clouds: A new perspective from local to global. ISPRS J. Photogramm. Remote Sens. 2020, 163, 62–81.

- De Deuge, M.; Quadros, A.; Hung, C.; Douillard, B. Unsupervised feature learning for classification of outdoor 3D scans. In Australasian Conference on Robotics and Automation; University of New South Wales: Kensington, Australia, 2013; Volume 2, p. 1.

- Vallet, B.; Brédif, M.; Serna, A.; Marcotegui, B.; Paparoditis, N. TerraMobilita/iQmulus urban point cloud analysis benchmark. Comput. Graph. 2015, 49, 126–133.

- Behley, J.; Garbade, M.; Milioto, A.; Quenzel, J.; Behnke, S.; Stachniss, C.; Gall, J. SemanticKITTI: A dataset for semantic scene understanding of LiDAR sequences. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 9297–9307.

- Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets Robotics: The KITTI Dataset. Int. J. Robot. Res. (IJRR) 2013, 32, 1231–1237.

- TUM City Campus - MLS Test Dataset. Available online: https://www.pf.bgu.tum.de/en/pub/tst.html (accessed on 8 June 2020).

- Diehm, A.L.; Gehrung, J.; Hebel, M.; Arens, M. Extrinsic self-calibration of an operational mobile LiDAR system. In Laser Radar Technology and Applications XXV; Turner, M.D., Kamerman, G.W., Eds.; International Society for Optics and Photonics, SPIE: Paris, France, 2020; Volume 11410, pp. 46–61.

- Borgmann, B.; Schatz, V.; Kieritz, H.; Scherer-Klöckling, C.; Hebel, M.; Arens, M. Data Processing and Recording Using a Versatile Multi-sensor Vehicle. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, 4, 21–28.

- Hackel, T.; Wegner, J.D.; Schindler, K. Fast semantic segmentation of 3D point clouds with strongly varying density. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 3, 177–184.

- Xu, Y.; Hoegner, L.; Tuttas, S.; Stilla, U. A voxel- and graph-based strategy for segmenting man-made infrastructures using perceptual grouping laws: Comparison and evaluation. Photogramm. Eng. Remote Sens. 2018.

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. Pointnet: Deep learning on point sets for 3D classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. Pointnet++: Deep hierarchical feature learning on point sets in a metric space. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5099–5108.

- Xu, Y.; Ye, Z.; Yao, W.; Huang, R.; Tong, X.; Hoegner, L.; Stilla, U. Classification of LiDAR Point Clouds Using Supervoxel-Based Detrended Feature and Perception-Weighted Graphical Model. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 1–17.

- Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The pascal visual object classes (VOC) challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Li, W.; Wang, F.D.; Xia, G.S. A geometry-attentional network for ALS point cloud classification. ISPRS J. Photogramm. Remote Sens. 2020, 164, 26–40.

| Dataset | Year | Size (km) | # Points | # Classes | Sensor |
|---|---|---|---|---|---|
| Oakland 3D [7] | 2009 | 1.5 | 1.6 M | 44 | SICK LMS |
| Sydney Urban Objects [14] | 2013 | - | - | 4 | Velodyne HDL-64E |
| iQmulus [15] | 2015 | 0.20 | 12 M | 22 | Riegl LMS-Q120i |
| Paris-Lille-3D [9] | 2018 | 1.94 | 143.1 M | 50 | Velodyne HDL-32E |
| SemanticKITTI [16] | 2019 | 39.2 | 4.5 B | 28 | Velodyne HDL-64E |
| Toronto-3D [10] | 2020 | 1.00 | 78.3 M | 8 | Velodyne HDL-32 |
| Daimler urban segmentation [11] | 2013 | - | - | 5 | Stereo optical sensor |
| A2D2 [12] | 2020 | - | - | 38 | Five Velodyne VLP-16 |
| TUM-City-Campus MLS | 2016 | 0.97 | 41 M/1.7 B | 8 | Dual Velodyne HDL-64E |

| Classes | Label Index | Color Code | Content |
|---|---|---|---|
| Man-made terrain | 1 | #0000FF | Roads and impervious ground. |
| Natural terrain | 2 | #006B93 | Grass and bare land. |
| High vegetation | 3 | #00DA24 | Trees. |
| Low vegetation | 4 | #47FF00 | Bushes and flower beds. |
| Buildings | 5 | #B6FF00 | Building facades and roofs. |
| Hardscape | 6 | #FF6C00 | Walls, fences, light poles. |
| Scanning artifacts | 7 | #D96D25 | Power cables and artificial objects. |
| Vehicles | 8 | #FF0000 | Parked cars and buses. |
| Unclassified | 0 | #7F7F7F | Noise, outliers, moving vehicles, pedestrians, and unidentified objects. |
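The class table above can be turned into a small lookup for coloring labeled points. The sketch below is only an illustration: the label indices, class names, and color codes are taken from the table, while the names `CLASSES`, `hex_to_rgb`, and `colorize` are hypothetical and the file format in which the labels are stored is not assumed.

```python
# Label index -> (class name, hex color code), copied from the class table.
CLASSES = {
    0: ("Unclassified", "#7F7F7F"),
    1: ("Man-made terrain", "#0000FF"),
    2: ("Natural terrain", "#006B93"),
    3: ("High vegetation", "#00DA24"),
    4: ("Low vegetation", "#47FF00"),
    5: ("Buildings", "#B6FF00"),
    6: ("Hardscape", "#FF6C00"),
    7: ("Scanning artifacts", "#D96D25"),
    8: ("Vehicles", "#FF0000"),
}


def hex_to_rgb(code):
    """Convert a '#RRGGBB' string into an (R, G, B) tuple of 0-255 integers."""
    code = code.lstrip("#")
    return tuple(int(code[i:i + 2], 16) for i in (0, 2, 4))


def colorize(labels):
    """Map a sequence of label indices to RGB tuples for visualization."""
    return [hex_to_rgb(CLASSES[label][1]) for label in labels]


# Hypothetical per-point labels: two vehicle points and one unclassified point.
colors = colorize([8, 8, 0])
```

A label outside the range 0 to 8 raises a `KeyError` here, which makes corrupted or unexpected labels visible early instead of silently assigning them a color.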

| Method | PointNet [23] | | | PointNet++ [24] | | | DEGO [25] | | | HDL [13] | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Class | | | | | | | | | | | | |
| Buildings | 0.871 | 0.915 | 0.764 | 0.922 | 0.910 | 0.792 | 0.937 | 0.970 | 0.911 | 0.891 | 0.872 | 0.788 |
| Vehicles | 0.464 | 0.569 | 0.761 | 0.708 | 0.926 | 0.788 | 0.697 | 0.544 | 0.440 | 0.263 | 0.374 | 0.183 |
| Man-made terrain | 0.878 | 0.591 | 0.546 | 0.923 | 0.459 | 0.442 | 0.896 | 0.709 | 0.655 | 0.753 | 0.733 | 0.591 |
| Natural terrain | 0.146 | 0.406 | 0.459 | 0.149 | 0.651 | 0.336 | 0.169 | 0.514 | 0.146 | 0.525 | 0.174 | 0.150 |
| High vegetation | 0.643 | 0.570 | 0.446 | 0.725 | 0.695 | 0.435 | 0.768 | 0.908 | 0.713 | 0.919 | 0.922 | 0.853 |
| Low vegetation | 0.155 | 0.015 | 0.443 | 0.458 | 0.151 | 0.432 | 0.000 | 0.000 | 0.000 | 0.370 | 0.195 | 0.146 |
| Hardscape | 0.035 | 0.020 | 0.764 | 0.364 | 0.163 | 0.792 | 0.833 | 0.011 | 0.011 | 0.296 | 0.443 | 0.215 |
| Scanning artifacts | 0.144 | 0.219 | 0.761 | 0.169 | 0.384 | 0.788 | 0.945 | 0.081 | 0.081 | 0.715 | 0.943 | 0.685 |
| MEAN | 0.417 | 0.413 | 0.618 | 0.552 | 0.542 | 0.601 | 0.656 | 0.467 | 0.370 | 0.592 | 0.582 | 0.452 |
| OA | 0.758 | | | 0.773 | | | 0.866 | | | 0.842 | | |
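The results table reports three values per method and class, plus an overall accuracy (OA) per method. The labels of the three per-class values are not given here, so the following sketch rests on an assumption: that they are precision, recall (in either order), and intersection over union (IoU). Under that assumption, all four quantities can be computed from a confusion matrix as shown. The function names and the toy confusion matrix are hypothetical.

```python
import numpy as np


def per_class_metrics(conf):
    """Per-class precision, recall, IoU and the overall accuracy (OA).

    conf[i, j] is the number of points of reference class i predicted as j.
    """
    conf = np.asarray(conf, dtype=float)
    tp = np.diag(conf)
    fp = conf.sum(axis=0) - tp  # predicted as the class, reference differs
    fn = conf.sum(axis=1) - tp  # reference is the class, prediction differs

    def safe_div(num, den):
        # Classes with an empty denominator get a score of zero.
        return np.divide(num, den, out=np.zeros_like(num), where=den > 0)

    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    iou = safe_div(tp, tp + fp + fn)
    oa = tp.sum() / conf.sum()
    return precision, recall, iou, oa


def iou_from_precision_recall(p, r):
    """IoU expressed through precision and recall: 1 / (1/p + 1/r - 1)."""
    return 1.0 / (1.0 / p + 1.0 / r - 1.0)


# Toy two-class confusion matrix (assumed numbers, not from the experiments).
toy_conf = [[50, 10], [5, 35]]
precision, recall, iou, oa = per_class_metrics(toy_conf)
```

As a plausibility check of the assumption, `iou_from_precision_recall(0.937, 0.970)` evaluates to roughly 0.911, which agrees with the third DEGO value in the Buildings row. The same relation holds for the HDL values in that row, but not for every entry of the table.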

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhu, J.; Gehrung, J.; Huang, R.; Borgmann, B.; Sun, Z.; Hoegner, L.; Hebel, M.; Xu, Y.; Stilla, U. TUM-MLS-2016: An Annotated Mobile LiDAR Dataset of the TUM City Campus for Semantic Point Cloud Interpretation in Urban Areas. Remote Sens. 2020, 12, 1875. https://doi.org/10.3390/rs12111875
