Global Registration of Terrestrial Laser Scanner Point Clouds Using Plane-to-Plane Correspondences

Abstract

1. Introduction

2. Related Work

3. Data and Method

3.1. Studied Area

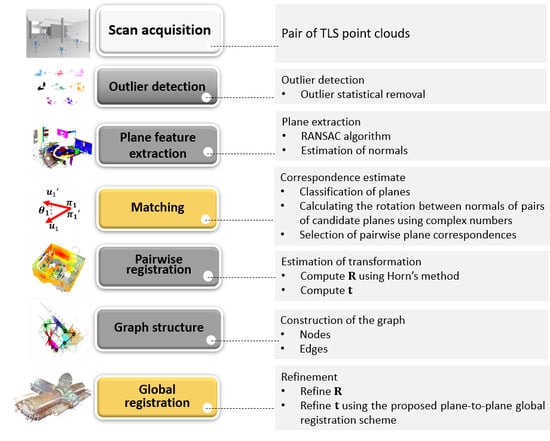

3.2. Method

3.2.1. Plane Segmentation with the RANSAC Algorithm

3.2.2. Estimation of Transformation Parameters from Plane Correspondences

3.2.3. Plane Matching with Complex Numbers

3.2.4. Proposed Global Plane-to-Plane Refinement Solution

4. Experiments and Results

4.1. Pre-Processing Data

4.2. Experimental Evaluation

4.2.1. Plane Matching Evaluation

4.2.2. Global Refinement Evaluation

4.3. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| ID | TLS Sensor | Area (m) | Average Overlap (%) | Mean Point Density (points/m²) | Mean Size (Million Points) | Number of Scans | Environment |
|---|---|---|---|---|---|---|---|
| Patio Batel | Faro LS 880 | 150 × 170 | 45 | 12 | 1.5 | 9 | Urban (outdoor) |
| Lape | Faro LS 880 | 15 × 15 × 3 | 70 | 6 | 2.7 | 4 | Office (indoor) |
| Royal Exhibition Building | Faro Focus S120 | 70 × 30 × 15 | 70 | 11 | 4.5 | 16 (for each floor) | Building (indoor) |

| Dataset | K-4PCS: Mean Extracted Keypoints | K-4PCS: Mean SR (%) | K-4PCS: Mean MT (s) | K-4PCS: Mean RMSER (m) | Proposed Plane-Based Matching: Mean Corresponding Planes | Proposed Plane-Based Matching: Mean SR (%) | Proposed Plane-Based Matching: Mean MT (s) | Proposed Plane-Based Matching: Mean RMSER (m) |
|---|---|---|---|---|---|---|---|---|
| Patio Batel | 16.840 | 98.90 | 995 | 0.60 ± 0.31 | 19 | 84.4 | 200 | 0.38 ± 0.15 |
| Lape | 13.850 | 95.82 | 789 | 0.49 ± 0.24 | 9 | 75.5 | 101 | 0.25 ± 0.11 |
| Royal Building | 25.742 | 99.67 | 1045 | 0.28 ± 0.21 | 35 | 95.2 | 127 | 0.16 ± 0.07 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Pavan, N.L.; dos Santos, D.R.; Khoshelham, K. Global Registration of Terrestrial Laser Scanner Point Clouds Using Plane-to-Plane Correspondences. Remote Sens. 2020, 12, 1127. https://doi.org/10.3390/rs12071127


Pavan, Nadisson Luis, Daniel Rodrigues dos Santos, and Kourosh Khoshelham. 2020. "Global Registration of Terrestrial Laser Scanner Point Clouds Using Plane-to-Plane Correspondences" Remote Sensing 12, no. 7: 1127. https://doi.org/10.3390/rs12071127