Automated 3D Reconstruction Using Optimized View-Planning Algorithms for Iterative Development of Structure-from-Motion Models

Abstract

1. Introduction

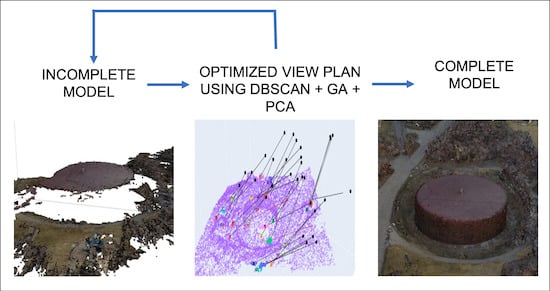

1.1. Iterative Modeling

1.2. Optimized View Planning

1.3. Novel Contributions

- An iterative strategy for UAV-based SfM that does not require a previous model to initialize the UAV mission

- A demonstration of automated iterative mapping of different terrains that converges to a specified orthomosaic resolution in simulated environments

- A field study that illustrates the advantages of iterative infrastructure inspection using UAVs

2. Methods

2.1. Iterative Modeling Overview

2.2. Selection of the Area to Be Mapped

2.3. Identification of Model Deficiencies

2.4. Planning of Next Best View

2.4.1. Optimization with Genetic Algorithm

2.4.2. View and Flight Planning

2.5. Convergence Criteria

2.6. Simulated and Field Studies

2.7. Selection of Equipment and Modeling Software

3. Results

3.1. Results from Simulated Environment

3.1.1. Quantitative Results

3.1.2. Qualitative Analysis

3.2. Results from Field Study

3.2.1. Quantitative Analysis

3.2.2. Qualitative Analysis

4. Discussion

4.1. Evaluation of Machine Learning Methods

Convergence Criteria in Field Study

4.2. Convergence Criteria in Complex Environments

4.3. Accuracy of Final Model

5. Conclusions

Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Camera Parameter | Value |
| --- | --- |
| Sensor | CMOS |
| Field of View | 77.7° |
| Image Size (pixels) | 4864 × 3648 |
| Horizontal Viewing Angle | 62.1° |
| Vertical Viewing Angle | 46.6° |
| 3-Axis Gimbal (tilt range) | −90° to +30° |
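The camera parameters above bound the orthomosaic resolution a flight can reach. As a minimal sketch, assuming a nadir view (gimbal at −90°), flat ground, and an ideal pinhole camera whose horizontal and vertical viewing angles span the full image width and height, the ground sampling distance (GSD) along an image axis with viewing angle θ and N pixels at altitude h is GSD = 2h·tan(θ/2)/N. The function names below are hypothetical illustrations, not the authors' flight-planning code, and a real lens with distortion will deviate somewhat from this idealization.

```python
import math
from typing import Tuple

# Values taken from the camera table above.
IMAGE_WIDTH_PX = 4864
IMAGE_HEIGHT_PX = 3648
HORIZONTAL_ANGLE_DEG = 62.1
VERTICAL_ANGLE_DEG = 46.6


def footprint_m(altitude_m: float, angle_deg: float) -> float:
    """Ground extent (m) covered along one image axis.

    Assumes a nadir view over flat ground and an ideal pinhole camera.
    """
    return 2.0 * altitude_m * math.tan(math.radians(angle_deg) / 2.0)


def ground_sampling_distance_cm(altitude_m: float) -> Tuple[float, float]:
    """Approximate ground size of one pixel (cm/px) along the horizontal
    and vertical image axes at the given altitude."""
    gsd_x = footprint_m(altitude_m, HORIZONTAL_ANGLE_DEG) / IMAGE_WIDTH_PX * 100.0
    gsd_y = footprint_m(altitude_m, VERTICAL_ANGLE_DEG) / IMAGE_HEIGHT_PX * 100.0
    return gsd_x, gsd_y


def altitude_for_gsd(target_gsd_cm: float) -> float:
    """Highest nadir altitude (m) at which both image axes still meet the
    target GSD. GSD grows linearly with altitude, so divide the target by
    the coarser axis's GSD per metre of altitude."""
    per_m_x = footprint_m(1.0, HORIZONTAL_ANGLE_DEG) / IMAGE_WIDTH_PX * 100.0
    per_m_y = footprint_m(1.0, VERTICAL_ANGLE_DEG) / IMAGE_HEIGHT_PX * 100.0
    return target_gsd_cm / max(per_m_x, per_m_y)


if __name__ == "__main__":
    for h in (30.0, 60.0, 100.0):
        gx, gy = ground_sampling_distance_cm(h)
        print(f"{h:5.0f} m: {gx:.2f} x {gy:.2f} cm/px")
    print(f"Max altitude for 1 cm/px: {altitude_for_gsd(1.0):.1f} m")
```

Inverting the relation, as in `altitude_for_gsd`, gives the highest altitude at which both image axes still meet a target resolution under the same flat-ground assumption; terrain relief or oblique gimbal angles would change the per-pixel footprint.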

| Parameter | Value |
| --- | --- |
| Software | Agisoft Metashape Professional |
| Software version | 1.6.2 |
| OS | Windows (64-bit) |
| RAM | 15.86 GB |
| CPU | Intel Core i7-6700HQ @ 2.60 GHz |
| GPU(s) | None |

| Parameter | Value |
| --- | --- |
| Software | Agisoft Metashape Professional |
| Software version | 1.6.1 |
| OS | Windows (64-bit) |
| RAM | 255.97 GB |
| CPU | Intel Xeon E5-2680 @ 2.80 GHz |
| GPU(s) | TITAN RTX |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Arce, S.; Vernon, C.A.; Hammond, J.; Newell, V.; Janson, J.; Franke, K.W.; Hedengren, J.D. Automated 3D Reconstruction Using Optimized View-Planning Algorithms for Iterative Development of Structure-from-Motion Models. Remote Sens. 2020, 12, 2169. https://doi.org/10.3390/rs12132169
