LiDAR-Aided Interior Orientation Parameters Refinement Strategy for Consumer-Grade Cameras Onboard UAV Remote Sensing Systems

Abstract

1. Introduction

2. Related Work

- Confirming the capability of LiDAR data to serve as a reliable source of control for IOP refinement in two respects: (i) verifying the stability of the LiDAR system and the quality of the reconstructed point clouds over time, and (ii) ensuring that LiDAR data, even over sites with predominantly horizontal surfaces or mild slopes, are sufficient to refine camera IOPs;

- Developing a strategy that can use LiDAR data over any type of terrain to accurately refine camera IOPs without requiring targets laid out in the surveyed area or any specific flight configuration, as long as sufficient overlap and side-lap among images are ensured.

3. Data Acquisition System Specifications and Dataset Description

3.1. Data Acquisition System

3.2. Dataset Description

4. Image and LiDAR-Based Point Cloud Generation

5. Bias Impact Analysis for Camera IOPs

5.1. Analytical Bias Impact Analysis

5.2. Experimental Bias Impact Analysis

6. Methodology for IOP Refinement and Validation

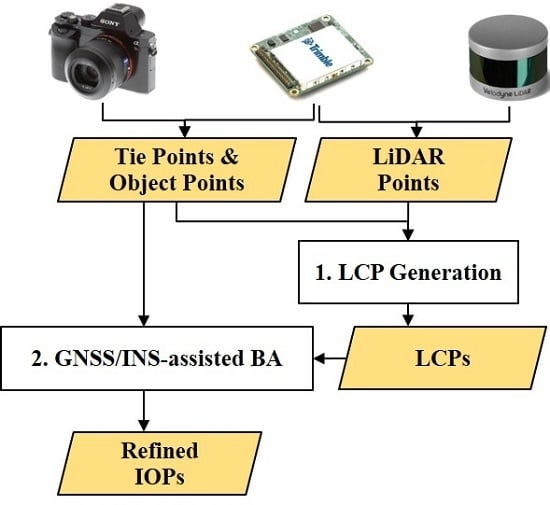

6.1. IOP Refinement

6.2. Camera IOP Accuracy Evaluation

6.3. Camera IOP Consistency Analysis

7. Experimental Results and Discussion

7.1. Accuracy of LiDAR and Image-Based Point Clouds

- Image-based point cloud: Similar to the strategy introduced in Section 6.2, the centers of the twelve checkerboard targets are manually identified in the visible images. Then, using the original camera IOPs and the refined EOPs derived from the GNSS/INS-assisted SfM procedure, the 3D coordinates of the target centers are estimated through multi-light-ray intersection. Finally, the differences between the estimated and RTK-GNSS coordinates of the targets are calculated, and their statistics, including the mean and standard deviation (STD), are reported.

- LiDAR-based point cloud: To evaluate the absolute accuracy of the LiDAR point cloud, the centers of the highly reflective checkerboard targets are first manually identified in the LiDAR point cloud using the intensity information; these are denoted as initial points. The initial points are expected to have an accuracy of ±3 to ±5 cm owing to the noise level of the LiDAR data, which is caused by (i) GNSS/INS trajectory errors, (ii) laser range and orientation measurement errors, and (iii) the nature of LiDAR pulse returns from highly reflective surfaces. Then, the strategy proposed in Section 6.1 is used to derive the best-fitting plane in the neighborhood of each initial point, and reliable Z coordinates are obtained by projecting the initial points onto the fitted planes. Finally, the accuracy of the LiDAR point cloud is assessed by evaluating the differences between the LiDAR-based and corresponding RTK-GNSS coordinates of the twelve target centers.
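
The multi-light-ray intersection used for the image-based targets can be illustrated with a small geometric sketch. It assumes each manual target measurement has already been turned into a ray in the mapping frame (a perspective center plus a direction derived from the IOPs and EOPs) and returns the point closest, in the least-squares sense, to all rays. This midpoint-style solution is a simplified stand-in for a collinearity-based least-squares intersection; the function name and the coordinates are hypothetical.

```python
import numpy as np


def intersect_rays(centers, directions):
    """Least-squares intersection of 3D rays.

    centers:    (n, 3) perspective-center coordinates
    directions: (n, 3) ray directions in the same frame (any length)
    Returns the point minimizing the sum of squared perpendicular
    distances to all rays; needs at least two non-parallel rays.
    """
    centers = np.asarray(centers, dtype=float)
    u = np.asarray(directions, dtype=float)
    u = u / np.linalg.norm(u, axis=1, keepdims=True)  # unit directions
    a = np.zeros((3, 3))
    b = np.zeros(3)
    for o, d in zip(centers, u):
        p = np.eye(3) - np.outer(d, d)  # projector orthogonal to the ray
        a += p
        b += p @ o
    # Normal equations: (sum P_i) X = sum P_i o_i
    return np.linalg.solve(a, b)


# Hypothetical check: three perspective centers at 41 m observing one target
target = np.array([10.0, 5.0, 0.0])
cams = np.array([[0.0, 0.0, 41.0], [6.0, 0.0, 41.0], [0.0, 6.0, 41.0]])
est = intersect_rays(cams, target - cams)
print(est)
```

With noise-free rays the estimate coincides with the target; with real measurements the residual distances to each ray indicate how well the rays agree.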
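
The LiDAR-based procedure can be sketched in the same way. This is a minimal illustration, not the Section 6.1 strategy itself: it assumes the plane is fitted by orthogonal regression (SVD of the centered neighborhood points), that the neighborhood is a horizontal radius around the initial point, and that "projecting onto the plane" keeps the initial X and Y and recomputes Z from the plane equation. The helper names, the 0.5 m radius, the use of the sample STD, and the synthetic data are hypothetical.

```python
import numpy as np


def fit_plane(points):
    """Orthogonal least-squares plane n . x = d through (m, 3) points."""
    pts = np.asarray(points, dtype=float)
    centroid = pts.mean(axis=0)
    # Right singular vector with the smallest singular value is the normal
    _, _, vt = np.linalg.svd(pts - centroid, full_matrices=False)
    normal = vt[-1]
    return normal, float(normal @ centroid)


def refine_z(initial_point, cloud, radius=0.5):
    """Keep X, Y of a picked point and take Z from the local best-fit plane.

    radius (m) is a hypothetical horizontal neighborhood size.
    """
    p = np.asarray(initial_point, dtype=float)
    cloud = np.asarray(cloud, dtype=float)
    near = np.linalg.norm(cloud[:, :2] - p[:2], axis=1) <= radius
    if near.sum() < 3:
        raise ValueError("need at least three neighbors to fit a plane")
    n, d = fit_plane(cloud[near])
    if abs(n[2]) < 1e-9:
        raise ValueError("vertical plane: Z cannot be recovered")
    z = (d - n[0] * p[0] - n[1] * p[1]) / n[2]  # solve plane equation for Z
    return np.array([p[0], p[1], z])


def difference_stats(estimated, reference):
    """Per-axis mean and sample STD (ddof=1, an assumption) of estimated - reference."""
    diff = np.asarray(estimated, dtype=float) - np.asarray(reference, dtype=float)
    return diff.mean(axis=0), diff.std(axis=0, ddof=1)


# Hypothetical target patch: gently sloping plane near Z = 150 m with 3 cm noise
rng = np.random.default_rng(0)
xy = rng.uniform(-1.0, 1.0, size=(2000, 2))
z = 150.0 + 0.02 * xy[:, 0] + 0.01 * xy[:, 1] + rng.normal(0.0, 0.03, 2000)
cloud = np.column_stack([xy, z])
refined = refine_z([0.1, -0.2, 150.05], cloud)
print(refined)
```

In this setting, `difference_stats` would be applied to the refined coordinates of all twelve targets and their RTK-GNSS counterparts to obtain per-axis mean and STD values.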

7.2. IOP Refinement Results

7.3. Accuracy Analysis of Refined IOPs

7.4. Impact of the Initial Camera Parameters on Refined IOPs

8. Conclusions and Recommendations for Future Work

- LiDAR data are verified to be a reliable source of control for IOP refinement for two reasons: (i) the LiDAR system calibration parameters were shown to be stable over time, and the derived point clouds were accurate based on a comparison with the RTK-GNSS measurements of the checkerboard targets; and (ii) the presented bias impact analysis showed that LiDAR data over any type of terrain cover are sufficient for IOP refinement.

- The proposed strategy eliminates the need for targets or a specific flight configuration, as long as sufficient overlap and side-lap are ensured among the images. Consequently, IOP refinement can be conducted more frequently, even for each individual data collection mission, to ensure good accuracy of the photogrammetric products when using off-the-shelf digital cameras.

- The IOP refinement results from the seven datasets over the two study sites showed small standard deviations for the estimated camera parameters as well as low correlations among those parameters (except for ). This indicates that the IOPs are estimated precisely. Furthermore, image-based point clouds generated with the refined IOPs showed good agreement with both the LiDAR point cloud and the RTK-GNSS measurements of the checkerboard targets, with an accuracy in the range of 3–5 cm at a 41 m flying height. This accuracy is within the expected range, given the errors in the direct georeferencing and in the LiDAR data. In addition, to validate the robustness of the proposed strategy, its sensitivity to the initial camera IOPs was investigated; the results revealed that different sets of initial camera parameters yield similar refined IOPs.

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Dataset Name | Date | Study Site | Camera Focus Settings | Flying Height (m) | Ground Speed (m/s) | Lateral Distance (m) | Overlap/Side-Lap (%) | Number of Images |
|---|---|---|---|---|---|---|---|---|
| A-1 | 20200304 | Site I | Auto focus | 41 | 4.0 | 6.0 | 83/89 | 209 |
| A-2 | 20200501 | Site I | Auto focus | 41 | 4.0 | 6.0 | 83/89 | 210 |
| B-1 | 20200304 | Site I | Manual focus (32 m) | 41 | 4.0 | 6.0 | 83/89 | 209 |
| B-2 | 20200501 | Site I | Manual focus (32 m) | 41 | 4.0 | 6.0 | 83/89 | 210 |
| C-1 | 20200317 | Site I | Manual focus (41 m) | 41 | 4.0 | 6.0 | 83/89 | 211 |
| C-2 | 20200501 | Site I | Manual focus (41 m) | 41 | 4.0 | 6.0 | 83/89 | 211 |
| D | 20200221 | Site II | Auto focus | 41 | 4.0 | 9.5 | 78/77 | 255 |

| IOPs | xp (pixel) | yp (pixel) | c (pixel) | K1 (10⁻¹⁰ pixel⁻²) | K2 (10⁻¹⁷ pixel⁻⁴) | P1 (10⁻⁷ pixel⁻¹) | P2 (10⁻⁸ pixel⁻¹) |
|---|---|---|---|---|---|---|---|
| Reference IOPs | 27.55 | −8.70 | 8025.11 | 8.01 | −5.23 | 1.48 | −6.92 |
| Biased IOPs (c) | 27.55 | −8.70 | 8005.11 | 8.01 | −5.23 | 1.48 | −6.92 |
| Biased IOPs (K1) | 27.55 | −8.70 | 8025.11 | 7.01 | −5.23 | 1.48 | −6.92 |
| Biased IOPs (P1) | 27.55 | −8.70 | 8025.11 | 8.01 | −5.23 | 5.48 | −6.92 |

| Bias Scenario | Statistics Criteria | ΔX (m) | ΔY (m) | ΔZ (m) |
|---|---|---|---|---|
| Bias in c | Mean | −0.01 | 0.01 | 0.10 |
| | STD | 0.00 | 0.00 | 0.01 |
| Bias in K1 | Mean | 0.00 | 0.00 | −0.03 |
| | STD | 0.05 | 0.05 | 0.03 |
| Bias in P1 | Mean | 0.00 | 0.01 | 0.01 |
| | STD | 0.02 | 0.02 | 0.05 |

| Dataset | Statistics Criteria | Image-Based vs. RTK-GNSS ΔX (m) | Image-Based vs. RTK-GNSS ΔY (m) | Image-Based vs. RTK-GNSS ΔZ (m) | LiDAR-Based vs. RTK-GNSS ΔX (m) | LiDAR-Based vs. RTK-GNSS ΔY (m) | LiDAR-Based vs. RTK-GNSS ΔZ (m) |
|---|---|---|---|---|---|---|---|
| A-1 | Mean | 0.02 | 0.02 | 0.09 | 0.03 | 0.01 | 0.03 |
| | STD | 0.02 | 0.04 | 0.03 | 0.02 | 0.03 | 0.01 |
| A-2 | Mean | 0.04 | 0.00 | 0.17 | −0.01 | 0.00 | 0.01 |
| | STD | 0.06 | 0.10 | 0.03 | 0.03 | 0.02 | 0.02 |
| B-1 | Mean | 0.00 | 0.00 | 0.08 | −0.02 | 0.01 | 0.03 |
| | STD | 0.02 | 0.03 | 0.02 | 0.03 | 0.05 | 0.02 |
| B-2 | Mean | −0.01 | 0.00 | 0.10 | −0.01 | 0.01 | 0.01 |
| | STD | 0.03 | 0.03 | 0.02 | 0.03 | 0.03 | 0.02 |
| C-1 | Mean | 0.00 | 0.01 | 0.06 | −0.01 | −0.02 | 0.03 |
| | STD | 0.04 | 0.04 | 0.02 | 0.04 | 0.03 | 0.02 |
| C-2 | Mean | −0.02 | 0.01 | 0.08 | −0.02 | 0.02 | 0.01 |
| | STD | 0.04 | 0.04 | 0.03 | 0.03 | 0.02 | 0.02 |
| D | Mean | 0.01 | −0.02 | 0.07 | −0.02 | 0.01 | −0.02 |
| | STD | 0.04 | 0.05 | 0.02 | 0.03 | 0.04 | 0.02 |

| Dataset | | c (pixel) | K1 (10⁻¹⁰ pixel⁻²) | K2 (10⁻¹⁷ pixel⁻⁴) | P1 (10⁻⁷ pixel⁻¹) | P2 (10⁻⁸ pixel⁻¹) |
|---|---|---|---|---|---|---|
| Original | | 8025.11 | 8.01 | −5.23 | 1.48 | −6.92 |
| A-1 | 0.38 | 8025.51 ± 0.69 | 8.33 ± 0.12 | −5.64 ± 0.05 | 1.15 ± 0.04 | −7.30 ± 0.43 |
| A-2 | 0.34 | 8026.13 ± 0.57 | 7.76 ± 0.09 | −5.54 ± 0.04 | 1.10 ± 0.03 | −7.84 ± 0.31 |
| B-1 | 0.25 | 8033.71 ± 0.44 | 8.53 ± 0.07 | −5.47 ± 0.03 | 1.21 ± 0.02 | −8.66 ± 0.27 |
| B-2 | 0.24 | 8034.45 ± 0.39 | 8.33 ± 0.06 | −5.43 ± 0.03 | 1.14 ± 0.02 | −8.67 ± 0.21 |
| C-1 | 0.28 | 8034.66 ± 0.38 | 8.58 ± 0.06 | −5.42 ± 0.03 | 1.06 ± 0.02 | −9.21 ± 0.23 |
| C-2 | 0.23 | 8036.33 ± 0.37 | 8.46 ± 0.06 | −5.46 ± 0.03 | 1.14 ± 0.02 | −7.27 ± 0.20 |
| D | 0.32 | 8030.31 ± 0.42 | 8.52 ± 0.08 | −5.72 ± 0.03 | 1.46 ± 0.02 | −8.32 ± 0.28 |

| Dataset | (m) | (m) | (m) | (m) |
|---|---|---|---|---|
| A-1 | 0.05 | −0.03 | 0.05 | 0.00 |
| A-2 | 0.05 | −0.02 | 0.01 | 0.00 |
| B-1 | 0.05 | −0.04 | 0.05 | 0.00 |
| B-2 | 0.05 | −0.02 | 0.03 | 0.00 |
| C-1 | 0.04 | −0.03 | 0.04 | 0.02 |
| C-2 | 0.05 | −0.02 | 0.02 | −0.01 |
| D | 0.02 | 0.00 | −0.03 | −0.02 |

| Dataset | Percentage of Variance | Principal Components |
|---|---|---|
| A-1 | 54.4% | (−0.199, 0.979, −0.034) |
| | 44.7% | (−0.980, −0.200, 0.022) |
| | 0.9% | (−0.029, 0.029, 0.999) |
| B-2 | 52.2% | (−0.736, 0.675, −0.039) |
| | 47.0% | (0.676, 0.736, −0.007) |
| | 0.8% | (−0.025, 0.032, 0.999) |
| C-2 | 52.1% | (−0.776, 0.630, −0.038) |
| | 47.1% | (0.630, 0.776, −0.008) |
| | 0.8% | (−0.025, 0.030, 0.999) |
| D | 58.4% | (−0.664, 0.748, 0.010) |
| | 41.4% | (0.748, 0.664, 0.006) |
| | 0.2% | (0.002, 0.011, 0.999) |
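
Percentages of variance and principal components of this kind can be obtained from an eigen-decomposition of the covariance matrix of 3D coordinates. The sketch below assumes the input is a set of point coordinates (for example, points over a study site); which data the reported PCA was applied to is not stated in this section, and the function name and synthetic site are hypothetical. Eigenvector signs are arbitrary, so a component may appear with all of its signs flipped.

```python
import numpy as np


def pca_variance(points):
    """Principal component analysis of (n, 3) coordinates.

    Returns (percent_variance, components), sorted by decreasing variance;
    components[i] is the unit eigenvector paired with percent_variance[i].
    """
    pts = np.asarray(points, dtype=float)
    cov = np.cov(pts, rowvar=False)  # 3 x 3 covariance; np.cov centers the data
    eigvals, eigvecs = np.linalg.eigh(cov)  # eigh returns ascending eigenvalues
    order = np.argsort(eigvals)[::-1]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    percent = 100.0 * eigvals / eigvals.sum()
    return percent, eigvecs.T


# Hypothetical, nearly flat site: 40 m x 30 m with sub-metre height variation
rng = np.random.default_rng(1)
xyz = np.column_stack([
    rng.uniform(0.0, 40.0, 5000),
    rng.uniform(0.0, 30.0, 5000),
    rng.normal(0.0, 0.5, 5000),
])
percent, comps = pca_variance(xyz)
for p, c in zip(percent, comps):
    print(f"{p:5.1f}%  {np.round(c, 3)}")
```

For such a nearly flat synthetic site, the third component is close to (0, 0, ±1) and carries well under 1% of the variance, which is the same pattern as in the table above.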

| Dataset | Statistics Criteria | ΔX (m) | ΔY (m) | ΔZ (m) |
|---|---|---|---|---|
| A-1 | Mean | 0.01 | 0.01 | 0.04 |
| | STD | 0.01 | 0.02 | 0.03 |
| A-2 | Mean | −0.01 | 0.01 | 0.01 |
| | STD | 0.01 | 0.02 | 0.02 |
| B-1 | Mean | 0.01 | −0.01 | 0.03 |
| | STD | 0.01 | 0.01 | 0.03 |
| B-2 | Mean | −0.01 | 0.00 | 0.02 |
| | STD | 0.01 | 0.02 | 0.02 |
| C-1 | Mean | 0.00 | 0.01 | 0.04 |
| | STD | 0.01 | 0.01 | 0.02 |
| C-2 | Mean | 0.00 | 0.01 | 0.01 |
| | STD | 0.02 | 0.02 | 0.03 |
| D | Mean | 0.00 | −0.01 | −0.03 |
| | STD | 0.01 | 0.02 | 0.01 |

| Initial IOPs | c (pixel) | K1 (10⁻¹⁰ pixel⁻²) | K2 (10⁻¹⁷ pixel⁻⁴) | P1 (10⁻⁷ pixel⁻¹) | P2 (10⁻⁸ pixel⁻¹) |
|---|---|---|---|---|---|
| IOP-1 | 8025.11 | 8.01 | −5.23 | 1.48 | −6.92 |
| IOP-2 | 8030.45 | 8.41 | −5.06 | 1.36 | −8.76 |

| Dataset | Initial IOPs | c (pixel) | K1 (10⁻¹⁰ pixel⁻²) | K2 (10⁻¹⁷ pixel⁻⁴) | P1 (10⁻⁷ pixel⁻¹) | P2 (10⁻⁸ pixel⁻¹) |
|---|---|---|---|---|---|---|
| A-1 | IOP-1 | 8025.51 | 8.33 | −5.64 | 1.15 | −7.30 |
| | IOP-2 | 8027.22 | 8.39 | −5.64 | 1.13 | −6.39 |
| A-2 | IOP-1 | 8026.13 | 7.76 | −5.54 | 1.10 | −7.84 |
| | IOP-2 | 8029.07 | 7.62 | −5.48 | 1.07 | −7.66 |
| B-1 | IOP-1 | 8033.71 | 8.53 | −5.47 | 1.21 | −8.66 |
| | IOP-2 | 8037.52 | 8.51 | −5.43 | 1.24 | −8.36 |
| B-2 | IOP-1 | 8034.45 | 8.33 | −5.43 | 1.14 | −8.67 |
| | IOP-2 | 8039.53 | 8.39 | −5.64 | 1.13 | −6.39 |
| C-1 | IOP-1 | 8034.66 | 8.58 | −5.42 | 1.06 | −9.21 |
| | IOP-2 | 8038.37 | 8.53 | −5.39 | 1.02 | −8.98 |
| C-2 | IOP-1 | 8036.33 | 8.46 | −5.46 | 1.14 | −7.27 |
| | IOP-2 | 8042.69 | 8.39 | −5.42 | 1.16 | −7.21 |
| D | IOP-1 | 8030.31 | 8.52 | −5.72 | 1.46 | −8.32 |
| | IOP-2 | 8033.09 | 8.51 | −5.71 | 1.45 | −8.39 |
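
A distortion comparison between two refined IOP sets, reported as RMSE and maximum differences in image coordinates, can be sketched as follows. This is a minimal illustration that assumes the conventional Brown model with two radial (K1, K2) and two decentering (P1, P2) terms, image coordinates in pixels relative to the principal point, the parameter scaling implied by the table units, and an assumed 7952 × 5304 pixel image format sampled every 50 pixels. The function names, image format, and grid spacing are hypothetical, so the printed numbers are not expected to reproduce the reported comparison exactly.

```python
import numpy as np


def brown_distortion(x, y, k1, k2, p1, p2):
    """Brown-model distortion (dx, dy) in pixels.

    x, y are image coordinates in pixels relative to the principal point.
    """
    r2 = x * x + y * y
    radial = k1 * r2 + k2 * r2 * r2  # radial term K1*r^2 + K2*r^4
    dx = x * radial + p1 * (r2 + 2 * x * x) + 2 * p2 * x * y
    dy = y * radial + 2 * p1 * x * y + p2 * (r2 + 2 * y * y)
    return dx, dy


def to_plain_units(iop):
    """Convert table values (K1 in 1e-10 px^-2, K2 in 1e-17 px^-4,
    P1 in 1e-7 px^-1, P2 in 1e-8 px^-1) to plain units."""
    k1, k2, p1, p2 = iop
    return k1 * 1e-10, k2 * 1e-17, p1 * 1e-7, p2 * 1e-8


def compare_distortion(iop_a, iop_b, width=7952, height=5304, step=50):
    """RMSE and maximum |difference| of dx and dy over a regular pixel grid.

    width, height (assumed image format) and step are hypothetical defaults.
    """
    xs = np.arange(-width / 2, width / 2 + 1, step)
    ys = np.arange(-height / 2, height / 2 + 1, step)
    x, y = np.meshgrid(xs, ys)
    dxa, dya = brown_distortion(x, y, *to_plain_units(iop_a))
    dxb, dyb = brown_distortion(x, y, *to_plain_units(iop_b))
    ddx, ddy = dxa - dxb, dya - dyb
    rmse = (float(np.sqrt(np.mean(ddx**2))), float(np.sqrt(np.mean(ddy**2))))
    maximum = (float(np.max(np.abs(ddx))), float(np.max(np.abs(ddy))))
    return rmse, maximum


# Refined (K1, K2, P1, P2) for dataset A-1 starting from IOP-1 and IOP-2 (listed above)
a1_iop1 = (8.33, -5.64, 1.15, -7.30)
a1_iop2 = (8.39, -5.64, 1.13, -6.39)
rmse, maximum = compare_distortion(a1_iop1, a1_iop2)
print(f"x: RMSE {rmse[0]:.2f} px, max {maximum[0]:.2f} px")
print(f"y: RMSE {rmse[1]:.2f} px, max {maximum[1]:.2f} px")
```

Sampling a dense grid over the whole frame means the maximum is governed by the image corners, where the radial terms dominate.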

| Dataset | Principal Distance Difference (pixel) | Impact on Z Coordinate (cm) | Distortion Impact on x Coordinate, RMSE (pixel) | Distortion Impact on x Coordinate, Maximum (pixel) | Distortion Impact on y Coordinate, RMSE (pixel) | Distortion Impact on y Coordinate, Maximum (pixel) |
|---|---|---|---|---|---|---|
| A-1 | 1.71 | −0.87 | 0.20 | 0.91 | 0.17 | 0.77 |
| A-2 | 2.94 | −1.50 | 0.15 | 0.36 | 0.09 | 0.26 |
| B-1 | 3.81 | −1.94 | 0.12 | 0.71 | 0.07 | 0.50 |
| B-2 | 5.08 | −2.59 | 0.08 | 0.57 | 0.06 | 0.42 |
| C-1 | 4.71 | −2.40 | 0.10 | 0.47 | 0.05 | 0.30 |
| C-2 | 6.36 | −3.24 | 0.05 | 0.38 | 0.04 | 0.26 |
| D | 2.78 | −1.42 | 0.05 | 0.26 | 0.02 | 0.16 |
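
The Z-coordinate impact of a principal distance difference follows a first-order relation. For near-nadir imagery whose perspective centers are fixed by direct georeferencing, the object distance recovered by intersection scales with the principal distance, so a change Δc shifts heights by approximately ΔZ ≈ −H·Δc/c, where H is the flying height (41 m here). The sketch below is a simplification that ignores terrain relief and off-nadir geometry; it uses the IOP-1 refined principal distances as c, and the helper name is hypothetical.

```python
def z_impact_cm(delta_c_px, c_px, flying_height_m=41.0):
    """First-order height change (cm) caused by a principal distance change.

    Assumes near-nadir imagery over flat terrain with fixed camera positions.
    """
    return -100.0 * flying_height_m * delta_c_px / c_px


# (dataset, principal distance difference from the table above, IOP-1 refined c), pixels
rows = [
    ("A-1", 1.71, 8025.51),
    ("A-2", 2.94, 8026.13),
    ("B-1", 3.81, 8033.71),
    ("B-2", 5.08, 8034.45),
    ("C-1", 4.71, 8034.66),
    ("C-2", 6.36, 8036.33),
    ("D", 2.78, 8030.31),
]
for name, dc, c in rows:
    print(f"{name}: {z_impact_cm(dc, c):.2f} cm")

# Principal distance underestimated by 20 pixels (8005.11 instead of 8025.11)
print(f"bias case: {z_impact_cm(-20.0, 8025.11) / 100.0:.2f} m")
```

Each printed value rounds to the reported Z-coordinate impact for that dataset, and the last line gives 0.10 m, in line with the 0.10 m mean shift reported in the bias impact analysis for the 20-pixel principal distance bias.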

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhou, T.; Hasheminasab, S.M.; Ravi, R.; Habib, A. LiDAR-Aided Interior Orientation Parameters Refinement Strategy for Consumer-Grade Cameras Onboard UAV Remote Sensing Systems. Remote Sens. 2020, 12, 2268. https://doi.org/10.3390/rs12142268
