Bridge Inspection Using Unmanned Aerial Vehicle Based on HG-SLAM: Hierarchical Graph-Based SLAM

Abstract

1. Introduction

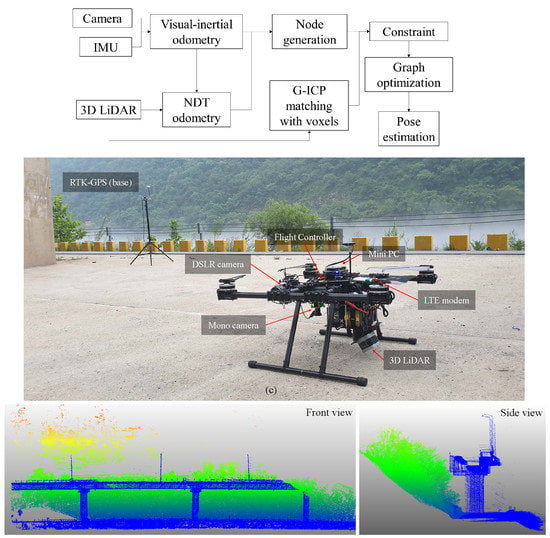

- To the best of our knowledge, this paper presents the first experimental validation of autonomous bridge inspection with a UAV that uses a graph-based SLAM method fusing data from multiple sensors: a camera, an inertial measurement unit (IMU), and a 3D LiDAR. It also proposes a methodology for each component of the framework needed to apply a UAV to actual bridge inspection.

- The proposed graph structure for SLAM offers two types of optimization using two different sensors on the aerial vehicle: local, based on visual-inertial odometry (VI-O) and the normal distributions transform (NDT), and global, based on generalized ICP (G-ICP). Node generation based on VI-O alone tends to diverge in some environments, whereas VI-O combined with NDT-based odometry generates nodes robustly through hierarchical optimization.

- The proposed method was tested on two different types of large-scale bridges presenting different flight scenarios (Figure 2). The results are compared with those of other state-of-the-art algorithms against ground-truth measurements of the bridges. A video of the experiments is available at https://youtu.be/K1BCIGGsxg8.
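The two-level structure described in the second contribution can be pictured with a small data-structure sketch. This is only an illustration under stated assumptions, not the authors' implementation: the class `TwoLevelGraph`, the 2D poses, the fallback rule, and the threshold `max_gap` are all hypothetical, and no graph optimization is performed. It shows local edges created from VI-O (with NDT odometry as a fallback when the two disagree or VI-O is unavailable) and global edges recorded separately, as G-ICP constraints would be.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Edge:
    src: int
    dst: int
    delta: tuple  # relative motion (dx, dy, dyaw) expressed in the src frame
    source: str   # "VI-O" or "NDT" for local edges, "G-ICP" for global edges


def compose(pose, delta):
    """Apply a relative motion, given in the pose's own frame, to a 2D pose."""
    x, y, yaw = pose
    dx, dy, dyaw = delta
    return (x + math.cos(yaw) * dx - math.sin(yaw) * dy,
            y + math.sin(yaw) * dx + math.cos(yaw) * dy,
            yaw + dyaw)


@dataclass
class TwoLevelGraph:
    nodes: list = field(default_factory=lambda: [(0.0, 0.0, 0.0)])
    local_edges: list = field(default_factory=list)
    global_edges: list = field(default_factory=list)

    def add_node(self, vio_delta, ndt_delta, max_gap=0.5):
        """Create the next node from VI-O, falling back to NDT odometry.

        max_gap is a hypothetical consistency threshold (same length unit as
        the deltas), not a value from the paper. vio_delta may be None when
        VI-O has lost tracking.
        """
        use_vio = vio_delta is not None and math.hypot(
            vio_delta[0] - ndt_delta[0], vio_delta[1] - ndt_delta[1]) <= max_gap
        delta, source = (vio_delta, "VI-O") if use_vio else (ndt_delta, "NDT")
        self.nodes.append(compose(self.nodes[-1], delta))
        edge = Edge(len(self.nodes) - 2, len(self.nodes) - 1, delta, source)
        self.local_edges.append(edge)
        return edge

    def add_global_edge(self, src, dst, gicp_delta):
        """Record a global constraint between two existing nodes."""
        edge = Edge(src, dst, gicp_delta, "G-ICP")
        self.global_edges.append(edge)
        return edge


graph = TwoLevelGraph()
graph.add_node((1.0, 0.0, 0.0), (1.0, 0.0, 0.0))  # sources agree: VI-O is used
graph.add_node((5.0, 0.0, 0.0), (1.0, 0.0, 0.0))  # VI-O jumps: NDT is used
graph.add_node(None, (1.0, 0.0, 0.0))             # VI-O lost: NDT is used
graph.add_global_edge(0, 3, (3.0, 0.0, 0.0))
print([edge.source for edge in graph.local_edges])
```

Keeping local and global edges in separate lists mirrors the local/global split in the text; a real system would pass both sets of constraints to a graph optimizer.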

2. Materials and Methods

2.1. Proposed Hierarchical Graph-Based SLAM

2.1.1. Graph Structure

2.1.2. Graph Optimization

2.2. High-Level Control Scheme

3. Field Experimental Results

3.1. Platform and Experimental Setup

3.2. Experimental Results

4. Discussion and Conclusions

- Transient strong winds below the sea-crossing bridge: Even when accurate position estimation is possible under a bridge, a sudden wind gust of over 10 m/s markedly increases the risk that the UAV will crash. Addressing this problem will require improving the aircraft hardware itself or developing a control algorithm that copes better with strong winds.

- Difficulty in precise synchronization of the obtained images and pose estimates: In the current system, the image and pose data are stored separately, and the operator synchronizes them manually afterwards, which leaves room for synchronization errors. In the future, the entire image acquisition system will therefore be improved and integrated into the Robot Operating System (ROS) to enable accurate synchronization.
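The synchronization problem in the second point amounts to pairing each image with the pose estimate closest to it in time. The sketch below illustrates one simple way to do this offline with nearest-timestamp matching. It is not the authors' system: the function name `match_nearest`, the tolerance `max_dt`, and the example timestamps are assumptions made for illustration.

```python
from bisect import bisect_left


def match_nearest(image_stamps, pose_stamps, max_dt=0.05):
    """Pair each image timestamp with the index of the nearest pose timestamp.

    pose_stamps must be sorted in ascending order. An image whose nearest
    pose is more than max_dt seconds away is left unmatched (None).
    max_dt is a hypothetical tolerance, not a value from the paper.
    """
    matches = []
    for t in image_stamps:
        i = bisect_left(pose_stamps, t)
        # Only the neighbours on either side of t can be the nearest stamp.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(pose_stamps)]
        best = min(candidates, key=lambda j: abs(pose_stamps[j] - t), default=None)
        if best is None or abs(pose_stamps[best] - t) > max_dt:
            matches.append(None)
        else:
            matches.append(best)
    return matches


pose_stamps = [0.00, 0.10, 0.20, 0.30]   # seconds, made-up values
image_stamps = [0.02, 0.16, 0.90]        # seconds, made-up values
print(match_nearest(image_stamps, pose_stamps))
```

Rejecting pairs whose time gap exceeds a tolerance makes missing pose data visible instead of silently attaching a stale pose to an image.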

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Param. | Value | Param. | Value |
|---|---|---|---|
| Dimensions | 1090 mm × 1090 mm | Height | 450 mm |
| Props. | 26.2 × 8.5 inches | Motor | T-Motor 135 kV |
| Flight time | 30–35 min | Wind resist. | 8–10 m/s |
| Battery | 12S 20A | Weight | 12 kg (w/o batt.) |

| Actual | Proposed Est. | Proposed Err. (%) | LOAM Est. | LOAM Err. (%) | LIO-Mapping Est. | LIO-Mapping Err. (%) |
|---|---|---|---|---|---|---|
| 50 | 49.89 | 0.22 | 49.62 | 0.76 | 49.39 | 1.22 |
| 50 | 49.75 | 0.50 | 49.35 | 1.30 | 49.41 | 1.18 |
| 14.5 | 14.27 | 1.58 | 14.23 | 1.86 | 14.17 | 2.27 |
| 11 | 10.90 | 0.91 | 10.88 | 1.09 | 10.67 | 3.00 |
| 230 | 227.2 | 1.21 | 234.34 | 1.88 | 233.79 | 1.65 |
| 23 | 22.7 | 1.30 | 23.49 | 2.13 | 23.43 | 1.87 |
| 20 | 20.32 | 1.60 | 21.96 | 9.80 | 21.59 | 7.95 |
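The Err. (%) columns in the comparison table above appear to be the absolute difference between the estimated and actual dimension, expressed as a percentage of the actual dimension. The sketch below assumes that definition and checks it against two rows; the function name `percent_error` is hypothetical.

```python
def percent_error(actual, estimate):
    """Absolute relative error of an estimate, in percent of the actual value."""
    return abs(estimate - actual) / actual * 100.0


# (actual, estimate) pairs copied from the first and last rows of the table:
# the proposed method for the 50 row, and LOAM for the 20 row.
rows = [(50.0, 49.89), (20.0, 21.96)]
for actual, estimate in rows:
    print(f"{actual:g} -> {estimate:g}: {percent_error(actual, estimate):.2f} %")
```

For these two rows the computed values match the listed 0.22 and 9.80. Other rows can differ in the last digit, presumably because the estimates in the table are themselves rounded.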

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Jung, S.; Choi, D.; Song, S.; Myung, H. Bridge Inspection Using Unmanned Aerial Vehicle Based on HG-SLAM: Hierarchical Graph-Based SLAM. Remote Sens. 2020, 12, 3022. https://doi.org/10.3390/rs12183022
