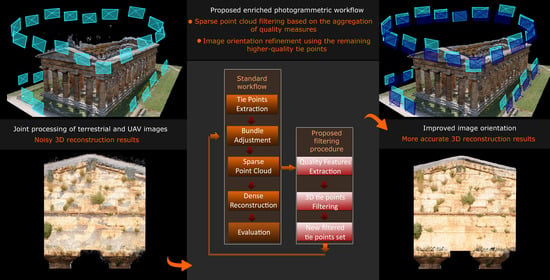

Figure 1.

The flowchart of the proposed pipeline. The standard photogrammetric workflow (left block) is enriched with the filtering procedure developed in Python (right block).

Figure 2.

Several image network configurations in the Modena Cathedral subset: terrestrial-convergent (a), terrestrial-parallel (b), unmanned aerial vehicle (UAV) (c) and terrestrial and UAV combined (d).

Figure 3.

Visualization of the a-posteriori standard deviations (σ) computed on the original (unfiltered) sparse point clouds: terrestrial-convergent (a), terrestrial-parallel (b), UAV (c) and terrestrial and UAV combined (d). σ values are displayed in the range [1–30 mm] in (a,b) and in the range [1–100 mm] in (c,d).

Figure 4.

A graphical representation of the considered quality features computed for each 3D tie point: (a) re-projection error; (b) multiplicity; (c) intersection angle; (d) a-posteriori standard deviation.
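The first three features in Figure 4 can be computed per tie point from its image measurements and the oriented cameras. The sketch below is a minimal illustration under stated assumptions: the re-projection error is taken as the mean image-space residual, and the intersection angle as the largest angle between any two rays from the camera centres to the point. The source does not define either quantity precisely, and all function names are hypothetical.

```python
import math
from itertools import combinations
from typing import Sequence, Tuple

Vec2 = Tuple[float, float]
Vec3 = Tuple[float, float, float]


def reprojection_error(observed: Sequence[Vec2], projected: Sequence[Vec2]) -> float:
    """Mean image-space distance (px) between measured and re-projected points (assumed definition)."""
    dists = [math.dist(o, p) for o, p in zip(observed, projected)]
    return sum(dists) / len(dists)


def multiplicity(observed: Sequence[Vec2]) -> int:
    """Number of images in which the tie point was measured."""
    return len(observed)


def intersection_angle(point: Vec3, centres: Sequence[Vec3]) -> float:
    """Largest angle (deg) between any two camera-centre-to-point rays (assumed definition)."""
    rays = [tuple(p - c for p, c in zip(point, centre)) for centre in centres]
    largest = 0.0
    for a, b in combinations(rays, 2):
        cos_angle = sum(x * y for x, y in zip(a, b)) / (math.hypot(*a) * math.hypot(*b))
        cos_angle = max(-1.0, min(1.0, cos_angle))  # clamp rounding noise before acos
        largest = max(largest, math.degrees(math.acos(cos_angle)))
    return largest


# Made-up example: a point 10 units in front of two cameras placed 10 units apart.
obs = [(100.0, 200.0), (310.0, 205.0)]
proj = [(103.0, 204.0), (310.0, 205.0)]
print(reprojection_error(obs, proj))  # mean of 5.0 and 0.0
print(multiplicity(obs))
print(intersection_angle((0.0, 0.0, 10.0), [(-5.0, 0.0, 0.0), (5.0, 0.0, 0.0)]))
```

Taking the maximum pairwise angle rewards points seen from well-separated viewpoints; a mean or median of the pairwise angles would be an equally plausible choice.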

Figure 5.

Example images from the Modena Cathedral dataset: (a,b) terrestrial images; (c) UAV image.

Figure 6.

An example of quantile-quantile (Q-Q) plots for the unfiltered (a) and filtered (b) a-posteriori standard deviation values (mm), with the related skewness and kurtosis values, for the Modena Cathedral dataset. The quantiles of the input sample (vertical axis) are plotted against the standard normal quantiles (horizontal axis). In the filtered case (b), the values lie closer to the straight line, indicating a distribution closer to normal.
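The skewness, kurtosis and Q-Q pairs in Figure 6 can be reproduced with a few lines of standard-library Python. This is a minimal sketch that assumes the moment-based estimators and excess kurtosis (0 for a normal distribution); the source does not state which estimators were used, so the reported values may follow a different convention. The σ sample below is made up.

```python
from statistics import NormalDist
from typing import List, Sequence, Tuple


def central_moment(x: Sequence[float], k: int) -> float:
    mean = sum(x) / len(x)
    return sum((v - mean) ** k for v in x) / len(x)


def skewness(x: Sequence[float]) -> float:
    """Moment-based skewness g1 = m3 / m2**1.5 (0 for symmetric data)."""
    return central_moment(x, 3) / central_moment(x, 2) ** 1.5


def excess_kurtosis(x: Sequence[float]) -> float:
    """Moment-based excess kurtosis g2 = m4 / m2**2 - 3 (0 for a normal distribution)."""
    return central_moment(x, 4) / central_moment(x, 2) ** 2 - 3.0


def qq_pairs(x: Sequence[float]) -> List[Tuple[float, float]]:
    """(standard normal quantile, sample quantile) pairs for a Q-Q plot."""
    n = len(x)
    normal = NormalDist()  # mean 0, standard deviation 1
    theoretical = [normal.inv_cdf((i - 0.5) / n) for i in range(1, n + 1)]
    return list(zip(theoretical, sorted(x)))


# Made-up a-posteriori sigma values (mm) with one large outlier, as in an unfiltered cloud.
sigmas = [2.1, 3.4, 4.0, 4.5, 5.2, 6.8, 48.0]
print(skewness(sigmas), excess_kurtosis(sigmas))
```

A long right tail of poorly determined points drives both statistics well above zero; removing such points pulls the Q-Q pairs back towards a straight line.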

Figure 7.

Selected areas for the plane fitting evaluation (a; Table 6) and for the cloud-to-cloud distance analysis (b; Table 7).

Figure 8.

Qualitative (visual) evaluation and comparison of the dense point clouds derived from the standard photogrammetric workflow (a,c) and after the proposed filtering method (b,d). Reduced noise and finer details are clearly visible in (b,d).

Figure 9.

Example images from the Nettuno temple dataset: terrestrial (a,b) and UAV (c) images.

Figure 10.

The five areas selected for the cloud-to-cloud distance analyses between the laser scanning ground truth and the two photogrammetric clouds.

Figure 11.

Qualitative evaluation and comparison of the dense point clouds derived from the standard photogrammetric workflow (a–c) and after the proposed filtering method (d–f).

Figure 12.

Example images from the WWI Fortification dataset: terrestrial (a,b) and UAV (c) images.

Figure 13.

The five areas selected for the cloud-to-cloud distance analyses and comparisons.

Figure 14.

Qualitative evaluation and comparison of the dense point clouds derived from the standard photogrammetric workflow (a–c) and after the proposed filtering method (d–f). Reduced noise and finer details are clearly visible in the results obtained with the proposed method.

Figure 15.

Some examples of the terrestrial and UAV-based subset of the Dortmund benchmark: (a) terrestrial image; (b) UAV-based image.

Figure 16.

The five areas selected for the cloud-to-cloud distance analyses between the laser scanning ground truth and the two photogrammetric clouds.

Figure 17.

Qualitative evaluation and comparisons of the dense point clouds derived from the standard photogrammetric workflow (a,c) and after the proposed filtering method (b,d).

Table 1.

Considered image networks and variation of object coordinate precisions (in mm). In all datasets, the z-axis points upward.

| Network | No. of Images | No. of 3D Tie Points | Average σx [mm] | Average σy [mm] | Average σz [mm] |
|---|---|---|---|---|---|
| 1a | 15 | ≃104 k | 4.36 | 2.04 | 0.78 |
| 1b | 12 | ≃70 k | 1.63 | 7.02 | 3.52 |
| 1c | 16 | ≃149 k | 17.65 | 32.43 | 40.89 |
| 1d | 43 | ≃440 k | 12.76 | 17.14 | 14.63 |
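The average per-axis precisions in Table 1 summarise a per-point a-posteriori covariance. In a bundle adjustment, these 3×3 covariances are usually obtained by scaling the inverse normal matrix by the a-posteriori variance factor. The sketch below assumes those matrices are already available and shows one plausible way to derive σx, σy, σz and a single scalar σ per point. The helper names and the choice of sqrt(trace) as the scalar σ are assumptions, not the authors' documented procedure.

```python
import math
from typing import Sequence, Tuple

Matrix3 = Sequence[Sequence[float]]  # 3x3 covariance of one tie point, in mm^2


def axis_sigmas(cov: Matrix3) -> Tuple[float, float, float]:
    """Per-axis standard deviations: square roots of the covariance diagonal."""
    return (math.sqrt(cov[0][0]), math.sqrt(cov[1][1]), math.sqrt(cov[2][2]))


def sigma_3d(cov: Matrix3) -> float:
    """Hypothetical scalar precision per point: sqrt(trace) = sqrt(sx^2 + sy^2 + sz^2)."""
    return math.sqrt(cov[0][0] + cov[1][1] + cov[2][2])


def average_axis_sigmas(covs: Sequence[Matrix3]) -> Tuple[float, float, float]:
    """Average sx, sy, sz over all tie points (one plausible reading of Table 1)."""
    per_point = [axis_sigmas(c) for c in covs]
    n = len(per_point)
    return (
        sum(s[0] for s in per_point) / n,
        sum(s[1] for s in per_point) / n,
        sum(s[2] for s in per_point) / n,
    )


# Made-up covariances (mm^2) for two tie points.
covs = [
    [[4.0, 0.0, 0.0], [0.0, 9.0, 0.0], [0.0, 0.0, 16.0]],
    [[16.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 4.0]],
]
print(average_axis_sigmas(covs))
print(sigma_3d(covs[0]))
```

Because the z-axis points upward in all datasets, comparing the three averages shows directly whether a network constrains depth or height worse than the other directions.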

Table 2.

Improvements (+) and worsening (−) of the median values of each quality feature (columns) when only one feature (rows) is used to filter the 3D tie points.

| Feature Used for Filtering | Re-Proj. Error | Multiplicity | Inters. Angle | A-Post. Std. Dev. |
|---|---|---|---|---|
| Re-Proj. Error | +52% | 0% | −24% | −47% |
| Multiplicity | −10% | +50% | +67% | +2% |
| Inters. Angle | +2% | +50% | +67% | +10% |
| A-Post. Std. Dev. | −8% | +33% | +35% | +11% |

Table 3.

Average median improvement of the quality parameters after applying different filtering thresholds.

| | Removed 3D Points | Re-Projection Error | Multiplicity | Inters. Angle | A-Post. St. Dev. |
|---|---|---|---|---|---|
| 1 | ~305 k (~75%) | +16% | +60% | +64% | +12% |
| 2 | ~289 k (~71%) | +16% | +50% | +61% | +15% |
| 3 | ~190 k (~47%) | +30% | +33% | +41% | +19% |
| 4 | ~167 k (~41%) | +32% | +33% | +34% | +16% |
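Tables 2 and 3 compare filtering with single features and with different threshold sets. The sketch below shows the general idea of threshold-based tie-point filtering. It assumes a point is kept only if it satisfies every criterion at once. The class names, the combination rule and all threshold values are hypothetical, since the actual thresholds and the exact way the features are combined are not given here.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class TiePoint:
    reproj_error: float  # px
    multiplicity: int    # number of images
    inter_angle: float   # deg
    sigma: float         # a-posteriori std. dev., mm


@dataclass
class Thresholds:  # hypothetical values, not the paper's
    max_reproj_error: float
    min_multiplicity: int
    min_inter_angle: float
    max_sigma: float


def passes(p: TiePoint, t: Thresholds) -> bool:
    """Keep a point only if every quality feature is within its threshold."""
    return (
        p.reproj_error <= t.max_reproj_error
        and p.multiplicity >= t.min_multiplicity
        and p.inter_angle >= t.min_inter_angle
        and p.sigma <= t.max_sigma
    )


def filter_tie_points(points: Sequence[TiePoint], t: Thresholds) -> Tuple[List[TiePoint], float]:
    """Return the kept points and the percentage of removed points."""
    kept = [p for p in points if passes(p, t)]
    removed_pct = 100.0 * (len(points) - len(kept)) / len(points)
    return kept, removed_pct


points = [
    TiePoint(0.6, 5, 35.0, 3.0),
    TiePoint(2.4, 2, 8.0, 40.0),
    TiePoint(0.9, 2, 12.0, 5.0),    # fails the multiplicity check
    TiePoint(1.1, 4, 30.0, 120.0),  # fails the re-projection and sigma checks
]
kept, removed = filter_tie_points(points, Thresholds(1.0, 3, 20.0, 10.0))
print(len(kept), removed)
```

Tightening any threshold raises the removed fraction. This mirrors the trade-off in Table 3, where removing more points improves multiplicity and intersection angle but not necessarily every metric.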

Table 4.

Median, mean and standard deviation values for the quality features computed on the original (not filtered) sparse point cloud (~405,000 3D tie points).

| | Re-Projection Error (px) | Multiplicity | Intersection Angle (deg) | A-Post. Std. Dev. (mm) |
|---|---|---|---|---|
| MEDIAN | 0.963 | 2 | 12.017 | 5.222 |
| MEAN | 1.454 | 3.344 | 16.806 | 54.519 |
| STD. DEV. | 1.446 | 2.762 | 16.742 | 244.976 |

Table 5.

Median, mean and standard deviation of the quality feature values computed on the filtered sparse point cloud (~280,000 3D tie points).

| | Re-Proj. Error (px) | Multiplicity | Inter. Angle (deg) | A-Post. Std. Dev. (mm) |
|---|---|---|---|---|
| MEDIAN | 0.827 (−14%) | 4 (+50%) | 31.048 (+61%) | 4.532 (−15%) |
| MEAN | 1.008 (−44%) | 5.274 (+37%) | 33.915 (+50%) | 6.879 (>>−100%) |
| STD. DEV. | 0.707 (−51%) | 3.564 (+22%) | 16.171 (−3%) | 7.395 (>>−100%) |

Table 6.

RMSEs (Root Mean Square Errors) of plane fitting on five sub-areas for the dense point clouds derived from the original and filtered results.

| Sub-Area | Original (mm) | Filtered (mm) | Variation |
|---|---|---|---|
| AREA 1 | 3.022 | 2.027 | (−33%) |
| AREA 2 | 5.198 | 2.370 | (−54%) |
| AREA 3 | 2.721 | 2.137 | (−21%) |
| AREA 4 | 52.805 | 7.878 | (−85%) |
| AREA 5 | 3.774 | 3.229 | (−14%) |
| Average Variation | | | (~−41%) |
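The plane-fitting RMSEs in Table 6 measure how far dense points on a nominally flat patch deviate from a best-fit plane. The sketch below assumes a total-least-squares plane through the centroid, found via SVD, and orthogonal point-to-plane residuals. The software actually used for the fit is not stated, so treat this as an illustration. It also recomputes the Variation column as the change relative to the original value. That convention reproduces Table 6, but not every variation column in the other tables.

```python
import numpy as np


def plane_fit_rmse(points: np.ndarray) -> float:
    """RMSE of orthogonal point-to-plane distances for a total-least-squares plane."""
    centred = points - points.mean(axis=0)
    # The right-singular vector with the smallest singular value is the plane normal.
    _, _, vt = np.linalg.svd(centred, full_matrices=False)
    distances = centred @ vt[-1]
    return float(np.sqrt(np.mean(distances ** 2)))


def relative_change(original: float, filtered: float) -> float:
    """Percentage change of the filtered value with respect to the original one."""
    return 100.0 * (filtered - original) / original


# Plane-fitting RMSEs (mm) from Table 6 as (original, filtered) pairs.
table6 = [(3.022, 2.027), (5.198, 2.370), (2.721, 2.137), (52.805, 7.878), (3.774, 3.229)]
changes = [relative_change(o, f) for o, f in table6]
print([round(c) for c in changes])  # [-33, -54, -21, -85, -14]
print(sum(changes) / len(changes))
```

Averaging the unrounded per-area values gives about −41.7%, consistent with the ~−41% reported. The fit uses orthogonal distances rather than vertical ones, so it also works for facade patches that are not horizontal.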

Table 7.

Cloud-to-cloud distance analyses between the laser scanning and the photogrammetric point clouds derived from the original and filtered results.

| Sub-Area | Original Mean (mm) | Original Std. Dev. (mm) | Filtered Mean (mm) | Filtered Std. Dev. (mm) | Variation |
|---|---|---|---|---|---|
| AREA 1 | 9.767 | 17.762 | 6.443 | 19.089 | (−40%) |
| AREA 2 | 13.327 | 31.877 | 10.452 | 33.685 | (−23%) |
| AREA 3 | 29.906 | 51.526 | 25.044 | 41.812 | (−17%) |
| AREA 4 | 37.972 | 81.564 | 32.390 | 73.520 | (−16%) |
| AREA 5 | 41.344 | 60.513 | 37.883 | 58.847 | (−7%) |
| Average Variation | | | | | (~−21%) |
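The cloud-to-cloud statistics in Tables 7, 11, 14 and 17 are means and standard deviations of point distances between a photogrammetric cloud and the reference cloud. The sketch below assumes the simplest variant: for each compared point, the Euclidean distance to its nearest reference point, summarised with the population standard deviation. Dedicated tools may instead use local surface models. The brute-force search is only for illustration. For clouds of realistic size, a spatial index such as a k-d tree (for example `scipy.spatial.cKDTree`) would be used instead.

```python
import numpy as np


def c2c_distances(compared: np.ndarray, reference: np.ndarray) -> np.ndarray:
    """Distance from each compared point to its nearest reference point (brute force)."""
    # Broadcasting builds an (n_compared, n_reference, 3) array: fine for small samples only.
    diff = compared[:, None, :] - reference[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=2)).min(axis=1)


def c2c_stats(compared: np.ndarray, reference: np.ndarray) -> tuple:
    """Mean and population standard deviation of the nearest-neighbour distances."""
    d = c2c_distances(compared, reference)
    return float(d.mean()), float(d.std())


# Made-up coordinates in mm: reference stands in for the laser scan.
reference = np.array([[0.0, 0.0, 0.0], [10.0, 0.0, 0.0], [0.0, 10.0, 0.0]])
photogrammetric = np.array([[0.0, 0.0, 1.0], [10.0, 0.0, 3.0]])
print(c2c_stats(photogrammetric, reference))  # (2.0, 1.0)
```

Because the distances are unsigned, a lower mean after filtering indicates that noisy points lying off the true surface were reduced.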

Table 8.

Median, mean and standard deviation values for quality feature values computed on the original (not filtered) sparse point cloud (~640,000 3D tie points).

| | Re-Projection Error (px) | Multiplicity | Intersection Angle (deg) | A-Post. Std. Dev. (mm) |
|---|---|---|---|---|
| MEDIAN | 1.008 | 3 | 11.710 | 6.95 |
| MEAN | 1.239 | 4.019 | 18.597 | 108.935 |
| STD. DEV. | 0.881 | 3.529 | 19.712 | 2931.512 |

Table 9.

Values of quality parameters computed on the filtered sparse point cloud (~187,000 3D tie points) and average variations of the metrics.

| | Re-Projection Error (px) | Multiplicity | Intersection Angle (deg) | A-Post. Std. Dev. (mm) |
|---|---|---|---|---|
| MEDIAN | 0.773 (−23%) | 5 (+40%) | 34.538 (+66%) | 4.565 (−34%) |
| MEAN | 0.899 (−27%) | 6.570 (+39%) | 38.413 (+52%) | 7.371 (>−100%) |
| STD. DEV. | 0.532 (−40%) | 4.094 (+14%) | 20.324 (+3%) | 34.378 (>>−100%) |

Table 10.

Check-point RMSEs in the original and filtered sparse point cloud and variation of the obtained values.

| | RMSExy (px) | RMSEx (mm) | RMSEy (mm) | RMSEz (mm) | RMSE (mm) |
|---|---|---|---|---|---|
| Original | 0.351 | 10.728 | 15.905 | 18.886 | 26.028 |
| Filtered | 0.319 | 8.235 | 5.326 | 11.102 | 16.332 |
| Variation | ~−10% | ~−30% | >−100% | ~−70% | ~−59% |

Table 11.

Cloud-to-cloud distance analyses on the original and filtered dense cloud and average variation of the mean values.

| Sub-Area | Original Mean (mm) | Original Std. Dev. (mm) | Filtered Mean (mm) | Filtered Std. Dev. (mm) | Mean Variation |
|---|---|---|---|---|---|
| AREA 1 | 59.394 | 92.244 | 52.529 | 86.107 | (~−10%) |
| AREA 2 | 59.358 | 90.843 | 26.768 | 32.289 | (~−54%) |
| AREA 3 | 49.3587 | 78.654 | 20.007 | 37.883 | (~−59%) |
| AREA 4 | 60.956 | 98.024 | 36.479 | 76.630 | (~−41%) |
| AREA 5 | 63.581 | 106.752 | 27.064 | 43.042 | (~−58%) |
| Average Variation | | | | | (~−44%) |

Table 12.

Median, mean and standard deviation of the quality feature values computed on the original (unfiltered) sparse point cloud (~1.2 million 3D tie points).

| | Re-Projection Error (px) | Multiplicity | Intersection Angle (deg) | A-Post. Std. Dev. (mm) |
|---|---|---|---|---|
| MEDIAN | 13.745 | 3 | 11.585 | 5.89 |
| MEAN | 14.181 | 3.237 | 17.361 | 285.38 |
| STD. DEV. | 11.229 | 1.712 | 17.506 | 409.94 |

Table 13.

Values of the quality parameters computed on the filtered sparse point cloud and average variation of the results.

| | Re-Projection Error (px) | Multiplicity | Intersection Angle (deg) | A-Post. Std. Dev. (mm) |
|---|---|---|---|---|
| MEDIAN | 3.822 (−72%) | 4 (+25%) | 26.913 (+57%) | 4.956 (~−19%) |
| MEAN | 5.008 (−65%) | 4.324 (+25%) | 29.991 (+42%) | 15.6908 (>−100%) |
| STD. DEV. | 3.665 (−67%) | 1.765 (+3%) | 15.326 (−12%) | 274.57 (~−49%) |

Table 14.

Cloud-to-cloud distance analysis on the original and filtered dense cloud and average variation of the mean values.

| Sub-Area | Original Mean (mm) | Original Std. Dev. (mm) | Filtered Mean (mm) | Filtered Std. Dev. (mm) | Mean Variation |
|---|---|---|---|---|---|
| AREA 1 | 63.694 | 35.645 | 20.548 | 17.854 | (~−67%) |
| AREA 2 | 49.796 | 22.006 | 18.229 | 18.801 | (~−64%) |
| AREA 3 | 100.720 | 52.869 | 52.584 | 46.421 | (~−48%) |
| AREA 4 | 123.237 | 24.683 | 61.367 | 17.192 | (~−50%) |
| AREA 5 | 52.432 | 33.733 | 40.717 | 18.122 | (~−21%) |
| Average Variation | | | | | (~−50%) |

Table 15.

Median, mean and standard deviation values for the quality parameter values computed on the original (not filtered) sparse point cloud (~315,000 3D tie points).

| | Re-Projection Error (px) | Multiplicity | Intersection Angle (deg) | A-Post. Std. Dev. (mm) |
|---|---|---|---|---|
| MEDIAN | 0.859 | 2 | 9.829 | 11.858 |
| MEAN | 1.035 | 3.409 | 14.989 | 41.612 |
| STD. DEV. | 0.775 | 2.458 | 14.833 | 239.027 |

Table 16.

Values of the quality parameters computed on the filtered sparse point cloud and average variation of the results.

| | Re-Projection Error (px) | Multiplicity | Intersection Angle (deg) | A-Post. Std. Dev. (mm) |
|---|---|---|---|---|
| MEDIAN | 0.656 (−24%) | 3 (+33%) | 11.201 (+12%) | 6.938 (−71%) |
| MEAN | 0.697 (−33%) | 3.478 (+2%) | 16.048 (+7%) | 21.270 (−96%) |
| STD. DEV. | 0.347 (−55%) | 2.406 (−2%) | 14.756 (−1%) | 159.97 (−49%) |

Table 17.

Results of the cloud-to-cloud distance analyses on the original and filtered dense clouds and average variation of the mean values.

| Sub-Area | Original Mean (mm) | Original Std. Dev. (mm) | Filtered Mean (mm) | Filtered Std. Dev. (mm) | Mean Variation |
|---|---|---|---|---|---|
| AREA 1 | 13.736 | 28.066 | 9.606 | 27.952 | (~−36%) |
| AREA 2 | 19.090 | 41.204 | 9.703 | 33.258 | (~−53%) |
| AREA 3 | 10.663 | 7.520 | 7.589 | 5.819 | (~−36%) |
| AREA 4 | 9.391 | 5.100 | 2.698 | 4.255 | (~−67%) |
| AREA 5 | 5.284 | 39.184 | 3.152 | 35.025 | (~−50%) |
| Average Variation | | | | | (~−48%) |

Table 18.

Checkpoint RMSEs in the original and filtered sparse point cloud and variation of the obtained values.

| | RMSExy (px) | RMSEx (mm) | RMSEy (mm) | RMSEz (mm) | RMSE (mm) |
|---|---|---|---|---|---|
| Original | 0.420 | 8.92 | 8.82 | 9.51 | 15.74 |
| Filtered | 0.338 | 6.17 | 4.60 | 8.12 | 11.18 |
| Variation | ~−20% | ~−31% | ~−48% | ~−15% | ~−30% |
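The check-point statistics in Table 18 combine per-axis errors into one 3D value. A minimal sketch, assuming each per-axis RMSE is the root mean square of the check-point residuals along that axis and the 3D RMSE is the square root of the sum of their squares. With the per-axis values of Table 18, this gives about 15.74 mm and 11.19 mm, matching the reported 15.74 mm and 11.18 mm to within rounding. The function names are illustrative.

```python
import math
from typing import Sequence


def rmse(residuals: Sequence[float]) -> float:
    """Root mean square of check-point residuals along one axis."""
    return math.sqrt(sum(r * r for r in residuals) / len(residuals))


def rmse_3d(rmse_x: float, rmse_y: float, rmse_z: float) -> float:
    """Combine per-axis RMSEs into a single 3D RMSE."""
    return math.sqrt(rmse_x ** 2 + rmse_y ** 2 + rmse_z ** 2)


# Per-axis check-point RMSEs (mm) from Table 18.
original = rmse_3d(8.92, 8.82, 9.51)
filtered = rmse_3d(6.17, 4.60, 8.12)
print(round(original, 2), round(filtered, 2))
print(100.0 * (filtered - original) / original)  # relative change, close to the ~-30% reported
```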

Table 19.

Summary of the average 3D reconstruction improvements in the four datasets considered, verified against the available ground truth data.

| Dataset | Plane Fitting | Cloud-to-Cloud Distance | Check-Point RMSE |
|---|---|---|---|
| Modena Cathedral | (~41%) | (~21%) | - |
| Nettuno temple | - | (~44%) | (~59%) |
| WWI Fortification | - | (~50%) | - |
| Dortmund Benchmark | - | (~48%) | (~30%) |