3.1. The Experimental Dataset

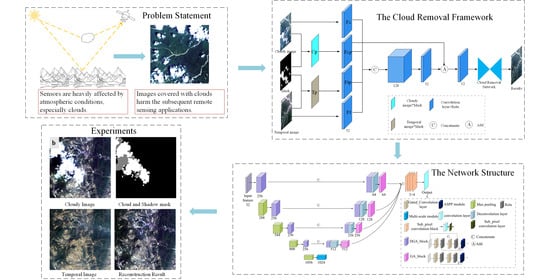

We selected the open-source WHU Cloud dataset (Supplementary Materials) [41] for our experiments because it covers complex and diverse scenes and landforms and is the only cloud dataset that provides temporal images for cloud repair. Owing to GPU memory limits, the six Landsat 8 images in the dataset were cropped into 256 × 256 patches with an overlap rate of 50%. Table 1 lists, for each image, the path and row, the numbers of simulated training, validation, and testing samples, and the number of real cloud samples used for testing.
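The patch cropping above can be sketched as follows. This is a minimal illustration, assuming each image is held as a (height, width, bands) NumPy array and that border pixels which cannot fill a complete patch are simply dropped; how the dataset actually handles image edges is not described here, and `tile_image` is a hypothetical helper. A 50% overlap on 256-pixel patches corresponds to a stride of 128 pixels.

```python
import numpy as np


def tile_image(image, patch=256, overlap=0.5):
    """Crop an (H, W, C) array into patch x patch tiles with the given overlap.

    Hypothetical helper: the stride is patch * (1 - overlap), so 50% overlap on
    256-pixel patches gives a stride of 128. Border pixels that cannot fill a
    complete patch are dropped (an assumption of this sketch).
    """
    stride = int(patch * (1 - overlap))
    height, width = image.shape[:2]
    tiles = [
        image[top:top + patch, left:left + patch]
        for top in range(0, height - patch + 1, stride)
        for left in range(0, width - patch + 1, stride)
    ]
    return np.stack(tiles)


scene = np.zeros((1024, 768, 3), dtype=np.float32)
patches = tile_image(scene)  # patches.shape == (35, 256, 256, 3)
```

With this stride, a dimension of length L yields ⌊(L − 256)/128⌋ + 1 patches, so a 1024 × 768 array gives 7 × 5 = 35 patches.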

The six images were pre-processed with radiometric and atmospheric correction in the ENVI software. Note that our algorithm requires no real training samples, which avoids the heavy demand for large training sets typical of deep-learning models. Instead, all training samples were simulated automatically on clean pixels, without any manual work. They serve effectively the same role as real samples, because each provides a cloud mask over pixels whose true values are known. The simulated samples are used for quantitative training and testing, while the real samples (clouds and cloud shadows), which lack ground truth because the pixels beneath the clouds are never observed, are used for qualitative testing.
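As an illustration of how a training pair can be simulated on clean pixels, the sketch below hides part of a cloud-free patch under a synthetic mask and keeps the original patch as ground truth. The cloud shapes (a union of random ellipses), the zero fill value, and the helper name `simulate_sample` are assumptions made for this example, not the procedure used to build the dataset.

```python
import numpy as np


def simulate_sample(clean, rng, n_blobs=3, max_radius=60):
    """Return (cloudy, mask, target) built from a cloud-free (H, W, C) patch.

    Assumptions for illustration: simulated clouds are a union of random
    ellipses, and pixels under the simulated cloud are zero-filled.
    """
    h, w = clean.shape[:2]
    yy, xx = np.mgrid[0:h, 0:w]
    mask = np.zeros((h, w), dtype=bool)
    for _ in range(n_blobs):
        cy, cx = rng.integers(0, h), rng.integers(0, w)
        ry, rx = rng.integers(10, max_radius, size=2)
        mask |= ((yy - cy) / ry) ** 2 + ((xx - cx) / rx) ** 2 <= 1.0
    cloudy = clean.copy()
    cloudy[mask] = 0.0  # hide the pixels "beneath" the simulated cloud
    return cloudy, mask, clean  # the clean patch is the known ground truth


rng = np.random.default_rng(0)
clean = rng.random((256, 256, 4)).astype(np.float32)
cloudy, mask, target = simulate_sample(clean, rng)
```

Because the masked pixels are known from the clean patch, every simulated sample comes with exact ground truth for both training and quantitative testing.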

Adaptive moment estimation (Adam) was used as the optimizer, with the learning rate set to 10⁻⁴. Our model and each of the compared deep-learning methods were trained for 500 epochs. The weights in Equation (10) were set empirically to λc = 5, λt = 0.5, and λp = 0.06. The algorithm was implemented in the Keras framework on Windows 10 with an NVIDIA 1080 Ti GPU (11 GB).
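For reference, one Adam update with the learning rate used here can be written as below. This is the textbook form of the algorithm; β1 = 0.9, β2 = 0.999, and ε = 10⁻⁷ are assumed default values (this section does not state them), and framework implementations such as Keras may place ε and the bias correction slightly differently.

```python
import numpy as np


def adam_step(param, grad, m, v, t, lr=1e-4, beta1=0.9, beta2=0.999, eps=1e-7):
    """One textbook Adam update; returns (new_param, new_m, new_v). t counts from 1."""
    m = beta1 * m + (1 - beta1) * grad       # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad ** 2  # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)             # bias correction for zero initialization
    v_hat = v / (1 - beta2 ** t)
    new_param = param - lr * m_hat / (np.sqrt(v_hat) + eps)
    return new_param, m, v


w = np.array([0.5, -0.5])
g = np.array([2.0, -3.0])
w1, m1, v1 = adam_step(w, g, np.zeros_like(w), np.zeros_like(w), t=1)
```

On the first step the bias-corrected moments reduce to g and g², so each parameter moves by roughly the learning rate (10⁻⁴) against the sign of its gradient, regardless of the gradient's magnitude.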

3.2. Cloud Removal Results

We compared our proposed STGCN method with the non-local low-rank tensor completion method (NL-LRTC) [16], the recent spatial-temporal-spectral CNN-based cloud removal algorithm (STSCNN) [36], and the very recent temporal cloud removal network (CRN) [41]. A partial convolution-based inpainting technique for irregular holes (Pconv) [48] was also included in the quantitative and visual comparisons. Except for NL-LRTC, all of these methods are mainstream deep-learning methods.

The following representative indicators were used to evaluate the reconstruction results: the structural similarity index measure (SSIM), peak signal-to-noise ratio (PSNR), spectral angle mapper (SAM), and correlation coefficient (CC), with PSNR regarded as the main indicator. Because the pixels beneath real clouds cannot be observed, the removal of real clouds was assessed qualitatively (i.e., by visual inspection).
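The PSNR, SAM, and CC indicators can be computed as in the sketch below. It assumes reflectance arrays shaped (height, width, bands) and scaled to [0, 1] (hence `data_range=1.0`), reports SAM as the mean per-pixel angle in degrees, and computes CC as the Pearson correlation over all pixels and bands; the exact conventions behind Tables 2 and 3 may differ. SSIM is omitted for brevity; scikit-image provides it as `skimage.metrics.structural_similarity`.

```python
import numpy as np


def psnr(ref, est, data_range=1.0):
    """Peak signal-to-noise ratio in dB; data_range is the maximum possible value."""
    mse = np.mean((ref - est) ** 2)
    return 10.0 * np.log10(data_range ** 2 / mse)


def sam(ref, est, eps=1e-12):
    """Mean spectral angle (degrees) between per-pixel spectra; bands on the last axis."""
    dot = np.sum(ref * est, axis=-1)
    norms = np.linalg.norm(ref, axis=-1) * np.linalg.norm(est, axis=-1)
    cos = np.clip(dot / (norms + eps), -1.0, 1.0)
    return float(np.degrees(np.mean(np.arccos(cos))))


def cc(ref, est):
    """Pearson correlation coefficient over all pixels and bands."""
    return float(np.corrcoef(ref.ravel(), est.ravel())[0, 1])


rng = np.random.default_rng(1)
ref = rng.random((64, 64, 4))
est = np.clip(ref + rng.normal(0.0, 0.01, ref.shape), 0.0, 1.0)
scores = psnr(ref, est), sam(ref, est), cc(ref, est)
```

SAM depends only on the direction of each spectrum, so it is insensitive to a uniform brightness scaling, whereas PSNR penalizes any change in pixel values.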

Table 2 shows the quantitative evaluation results of the different methods on the whole WHU Cloud dataset, and Table 3 gives the results on each image separately. As Tables 2 and 3 show, STGCN outperformed the other algorithms on all indicators. Pconv, which was applied to the cloudy images alone, performed the worst because it lacks the complementary information provided by temporal images. Although the conventional NL-LRTC achieved the second-best scores on some indicators, the reconstructed samples in Figure 5 show that it was unstable in some scenes, especially those lacking texture. STSCNN is built on an older CNN structure, which gave it the worst performance among the temporal-based methods. CRN uses a popular FCN structure similar to ours, yet it performed worse, mainly because it cannot discriminate between valid and invalid pixels during feature extraction, whereas we use gated convolution for this purpose. Two further factors contributed to CRN's inferior performance. First, its up-sampling by nearest-neighbor interpolation blurred some details. Second, its plain MSE loss constrains only the similarity of pixel values. In contrast, our sub-pixel convolution and our combination of pixel-level, feature-level, and total variation losses contributed to our much better performance. In terms of efficiency, our method requires slightly more computation time, which is reasonable because our model is more complex than methods built on plain convolutions.

Figure 5 shows 12 scenes cropped from the six images, with large differences in color, texture, and background that challenge any cloud removal algorithm.

Figure 5a,b come from data I. In Figure 5a, the regions reconstructed by Pconv and STSCNN were clearly worse: Pconv introduced incorrect textures, and STSCNN handled color consistency poorly. The other three methods performed satisfactorily in both texture repair and spectral preservation, even though the cloud-free temporal image differed greatly from the cloudy image. In the seaside scene (Figure 5b), however, NL-LRTC performed the worst and essentially failed, because it relies only on the low-rankness of the texture tensor, while the sea surface is smooth. Pconv was the second-worst performer. Although our results were not perfect, they exceeded those of all the other methods.

Figure 5c,d come from data II and show a city and farmland, respectively. In Figure 5c, none of the repair results were very good because the temporal image was blurred or hazy. In contrast, in Figure 5d, despite a poor color match, the temporal image was clear, which allowed STSCNN, CRN, and our method to obtain satisfactory results. The conventional NL-LRTC again performed the worst. From these examples, we conclude that the advanced CNN-based methods can compensate for color calibration differences but are heavily affected by blurred temporal images, which suggests how suitable historical images should be selected for cloud removal.

Figure 5e,f come from data III and cover forests and rivers, where large, randomly simulated clouds made reconstruction difficult. In Figure 5e, STSCNN, CRN, and STGCN produced satisfactory results despite the color bias of the temporal image, and in Figure 5f, CRN and STGCN performed the best. Our method performed the best in both cases. The results for Figure 5g,h, which come from data IV and cover mountains, and the conclusions drawn from them resemble those for Figure 5e,f.

Figure 5i,j, from data V, form an extremely difficult case because both the temporal and the cloudy images are of low quality. The three temporal CNN-based networks obtained much better results than the conventional method and the single-image method. The reconstruction in Figure 5i was relatively worse than that in Figure 5j, which further supports the conclusion drawn from Figure 5c,d: the blurred temporal image in Figure 5i heavily affected the reconstruction, whereas the color bias in Figure 5j did not appear to be harmful.

Figure 5k,l, from data VI, cover bare land and again show that our method performed slightly better than the other two temporal CNN-based methods, while the remaining two methods performed the worst.

The performance of the different methods in removing real clouds was judged by visual inspection; Figure 6 shows examples. First, clouds and cloud shadows were detected with a cloud detection method called CDN [41]. Then, the cloud and cloud-shadow mask was expanded by two pixels so that it covered the entire cloud area and damaged pixels were kept out of network training. Finally, the CNN-based methods were trained with the remaining clean pixels.
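The two-pixel mask expansion can be sketched as a binary dilation. The sketch assumes a square (8-connected) neighborhood, so a single masked pixel grows into a 5 × 5 block; the neighborhood actually used in the experiments is not stated. `dilate_mask` is a hypothetical helper written with NumPy only.

```python
import numpy as np


def dilate_mask(mask, pixels=2):
    """Grow a boolean (H, W) mask by `pixels` in every direction.

    Uses a square (8-connected) neighborhood: every pixel within `pixels`
    rows and columns of a masked pixel becomes masked.
    """
    h, w = mask.shape
    padded = np.pad(mask, pixels, mode="constant", constant_values=False)
    grown = np.zeros((h, w), dtype=bool)
    for dy in range(2 * pixels + 1):
        for dx in range(2 * pixels + 1):
            grown |= padded[dy:dy + h, dx:dx + w]  # OR of all shifted copies
    return grown


cloud = np.zeros((9, 9), dtype=bool)
cloud[4, 4] = True
clean_for_training = ~dilate_mask(cloud)  # pixels still usable for training
```

If SciPy is available, `scipy.ndimage.binary_dilation(mask, structure=np.ones((3, 3)), iterations=2)` should give the same result for this neighborhood.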

In Figure 6a, Pconv failed completely, the STSCNN results showed obvious color inconsistency, and the NL-LRTC results showed apparent texture bias. CRN produced much better results than these three methods; however, close inspection shows that it blurred the repaired regions, largely because it ignores the difference between cloud and clean pixels. Our method exhibited the best repair performance in all aspects: color consistency, texture consistency, and detail preservation. In Figure 6b, the results of NL-LRTC, CRN, and our method were visually satisfactory, although NL-LRTC showed some color bias because it was overly influenced by the temporal image. In Figure 6c, the same problem appeared in the NL-LRTC result. Our method performed the best, as the repaired region of the second-best method, CRN, was obviously blurred.

3.3. Effects of Components

Our cloud removal method, STGCN, features several structures and blocks that were not used in previous CNN-based cloud removal studies. In this section, we demonstrate and quantify the contribution of each of these structures to the performance of our method.

3.3.1. Gated Convolution

Conventional convolution treats all pixels equally when extracting features. Gated convolution distinguishes valid from invalid pixels during cloud repair and updates the cloud mask automatically through learning.
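A minimal NumPy sketch of a gated convolution layer is given below, following the common formulation from free-form image inpainting (Yu et al.): one convolution produces features, a second produces a gate that a sigmoid squashes into a soft, learnable validity mask, and the two are multiplied element-wise. The ReLU feature activation, the absence of bias terms, and the naive `conv2d_same` helper are simplifying assumptions; STGCN's actual layer configuration is not given in this section.

```python
import numpy as np


def conv2d_same(x, kernel):
    """Naive 'same'-padded 2-D convolution (cross-correlation).

    x has shape (H, W, C_in); kernel has shape (k, k, C_in, C_out) with k odd.
    """
    k = kernel.shape[0]
    p = k // 2
    h, w, _ = x.shape
    xp = np.pad(x, ((p, p), (p, p), (0, 0)))  # zero padding keeps H and W
    out = np.zeros((h, w, kernel.shape[3]))
    for i in range(k):
        for j in range(k):
            # contract input channels at kernel offset (i, j)
            out += xp[i:i + h, j:j + w, :] @ kernel[i, j]
    return out


def gated_conv(x, w_feat, w_gate):
    """ReLU(features) * sigmoid(gate): the gate acts as a learned soft mask."""
    feature = conv2d_same(x, w_feat)
    gate = 1.0 / (1.0 + np.exp(-conv2d_same(x, w_gate)))  # values in (0, 1)
    return np.maximum(feature, 0.0) * gate


rng = np.random.default_rng(0)
x = rng.random((16, 16, 4))                # a 4-band input patch
w_feat = rng.normal(0, 0.1, (3, 3, 4, 8))  # feature kernel: 4 -> 8 channels
w_gate = rng.normal(0, 0.1, (3, 3, 4, 8))  # gate kernel: 4 -> 8 channels
y = gated_conv(x, w_feat, w_gate)          # y.shape == (16, 16, 8)
```

Where the learned gate approaches 0 (for example, over cloudy pixels), the layer suppresses those responses, so the mask is effectively updated through learning rather than fixed in advance.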

Table 4 shows the difference between the two on data I, where STGCN_CC denotes STGCN with its gated convolution layers replaced by common convolution layers. After the gated convolutions were introduced, all indicator scores improved, especially mPSNR, which increased by 2.3 dB. This substantial PSNR gain suggests that the gated convolutions repaired details and textures that common convolutions blurred.
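To put the 2.3 dB gain in perspective, PSNR is logarithmic in the mean squared error, so a fixed PSNR difference corresponds to a fixed MSE ratio. For a single image with the same peak value MAX (the subscripts CC for common convolution and GC for gated convolution are introduced here for illustration):

```latex
\mathrm{PSNR} = 10\log_{10}\frac{\mathrm{MAX}^2}{\mathrm{MSE}}, \qquad
\Delta\mathrm{PSNR} = 10\log_{10}\frac{\mathrm{MSE}_{\mathrm{CC}}}{\mathrm{MSE}_{\mathrm{GC}}} = 2.3\ \mathrm{dB}
\;\Longrightarrow\;
\frac{\mathrm{MSE}_{\mathrm{GC}}}{\mathrm{MSE}_{\mathrm{CC}}} = 10^{-0.23} \approx 0.59
```

That is, roughly a 41% reduction in MSE. Because mPSNR averages PSNR over many patches, this conversion is only indicative for the dataset-level scores.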

3.3.2. Sub-Pixel Convolution

A fully convolutional network typically applies up-sampling several times to enlarge compact feature maps back to the size of the input image. Previous studies implemented this with nearest-neighbor interpolation, bilinear interpolation, or deconvolution. In this paper, we instead use sub-pixel convolution, also called pixel shuffle, to rescale the features.
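Pixel shuffle itself is a fixed rearrangement: a convolution first produces C·r² channels, and the shuffle moves each group of r² channels into an r × r spatial block, enlarging the feature map by a factor of r without interpolation. The NumPy sketch below, for a single (height, width, channels) feature map, is intended to follow the channel ordering of TensorFlow's `tf.nn.depth_to_space` in NHWC layout; the preceding convolution is omitted.

```python
import numpy as np


def pixel_shuffle(x, r):
    """Rearrange an (H, W, C * r * r) feature map into (H * r, W * r, C)."""
    h, w, c = x.shape
    if c % (r * r) != 0:
        raise ValueError("channel count must be divisible by r * r")
    out_c = c // (r * r)
    x = x.reshape(h, w, r, r, out_c)  # channel index -> (row offset, col offset, channel)
    x = x.transpose(0, 2, 1, 3, 4)    # (h, row offset, w, col offset, channel)
    return x.reshape(h * r, w * r, out_c)


features = np.arange(1, 13).reshape(1, 1, 12)
up = pixel_shuffle(features, 2)  # up has shape (2, 2, 3)
```

Because every output value is copied from a learned channel rather than interpolated from neighbors, the network itself decides how fine detail is distributed within each r × r block.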

Table 5 shows the strong effect of sub-pixel convolution: the mPSNR of STGCN was 2.4 dB higher than that of STGCN with nearest-neighbor interpolation (NI) and 1.2 dB higher than that with up-convolution (UC). The effectiveness of sub-pixel convolution may be related to the nature of the target: the fractal structure of clouds calls for a finer tool to depict the details of their outlines.

3.3.3. Joint Loss Function

Although MSE loss, a pixel-level similarity constraint, is commonly used for CNN-based image segmentation and natural-image inpainting in computer vision, it is far from sufficient for restoring remote sensing images. This paper introduces a joint loss function that targets not only pixel-level reconstruction accuracy but also feature-level consistency and the color and texture consistency of the regions neighboring cloud outlines.
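The joint loss can be sketched as a weighted sum of a pixel-level MSE term, a feature-level (perceptual) term, and a total variation (TV) term. Mapping the weights λc = 5, λp = 0.06, and λt = 0.5 from Section 3.1 onto these three terms is an assumption based on their subscripts, since Equation (10) is not reproduced here. `toy_features` is a hypothetical stand-in for the pretrained network that would supply perceptual features, and the sketch applies each term to the whole patch rather than only to cloud regions or their outlines.

```python
import numpy as np


def total_variation(img):
    """Anisotropic TV: mean absolute difference between vertical and horizontal neighbors."""
    dy = np.abs(np.diff(img, axis=0)).mean()
    dx = np.abs(np.diff(img, axis=1)).mean()
    return dx + dy


def joint_loss(pred, target, feature_fn, lam_c=5.0, lam_p=0.06, lam_t=0.5):
    """Weighted sum of pixel-level, feature-level, and TV terms (weight mapping assumed)."""
    pixel = np.mean((pred - target) ** 2)                               # pixel-level MSE
    perceptual = np.mean((feature_fn(pred) - feature_fn(target)) ** 2)  # feature-level term
    tv = total_variation(pred)                                          # local smoothness term
    return lam_c * pixel + lam_p * perceptual + lam_t * tv


def toy_features(img):
    """Hypothetical feature extractor: horizontal intensity gradients."""
    return np.diff(img, axis=1)


target = np.full((32, 32, 4), 0.5)
pred = target + 0.1
loss = joint_loss(pred, target, toy_features)
```

In this toy case the prediction is a uniformly brightened copy of the target, so only the pixel-level term is non-zero (5 × 0.01 = 0.05); the perceptual and TV terms respond instead to structural and local-contrast differences.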

Table 6 shows the effectiveness of the perceptual loss, which imposes the feature-level constraint, and the TV loss, which enforces color consistency. With empirically tuned weights, adding the new loss components one at a time progressively increased mPSNR, by up to 3.4 dB in total.

3.3.4. Multi-Scale Module

Table 7 shows the effect of the multi-scale module, which expands the receptive field and fuses multi-scale features. Introducing it slightly increased mPSNR while leaving the other indicators almost unchanged, indicating that the module helps but matters less than the three components above.

3.3.5. Addition Layer

Addition layers were implemented in both of our main building blocks, GCB_A in the encoder and GCB_B in the decoder (Figure 7). GCB_A is based on a ResNet-like block with a short skip connection, and GCB_B is based on a series of plain convolution layers. Adding the extra shortcuts to these bases improved the mPSNR score by 0.7 dB (Table 8). As a variant of the skip connection, the addition layer appears to be effective for cloud removal: frequent short connections widen the communication channels between layers at different depths and can improve the performance of a cloud removal network, which resembles findings in close-range image segmentation and object detection.
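The difference between a plain stack of layers and the same stack with an addition layer can be sketched as follows, with layers represented as plain Python callables. This is a hypothetical illustration; where exactly the shortcuts sit inside GCB_A and GCB_B is shown in Figure 7 and not reproduced here. The addition requires the block's input and output shapes to match; otherwise a projection (for example, a 1 × 1 convolution) would be needed on the shortcut.

```python
import numpy as np


def plain_block(x, layers):
    """Apply the layers in sequence (the 'base' without extra shortcuts)."""
    for layer in layers:
        x = layer(x)
    return x


def block_with_addition(x, layers):
    """Same layers plus an addition layer that sums the block input and output."""
    out = plain_block(x, layers)
    if out.shape != x.shape:
        raise ValueError("shortcut addition needs matching shapes")
    return x + out


def relu(t):
    return np.maximum(t, 0.0)


def dead_layer(t):
    """A layer that has learned nothing useful: it outputs zeros."""
    return np.zeros_like(t)


x = np.array([[1.0, -2.0], [3.0, 0.5]])
without_shortcut = plain_block(x, [relu, dead_layer])
with_shortcut = block_with_addition(x, [relu, dead_layer])
```

Even when the layers output zeros, the shortcut still passes the input through unchanged, which is one way to see why short connections ease the flow of information (and gradients) between layers at different depths.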