1. Introduction

The speech signal, which plays a significant role in daily communication and information exchange, is one of the most vital physiological signals of the human body. Therefore, numerous attempts have been made to investigate speech signal detection technology. The microphone, recognized as one of the most widely used air conduction speech signal detection technologies, has greatly improved the efficiency of human communication [1]. This device can be used wherever speech acquisition is required and has been widely adopted in everyday life. A traditional microphone converts the varying pressure that the acoustic wave exerts on a vibrating diaphragm into a detectable electrical signal. However, the microphone is easily disturbed by background noise and has a short detection range, which limits the development of air conduction speech detection technology to a certain extent [2].

Different from the traditional microphone, the throat microphone is a contact speech detector that can record clean speech even in the presence of strong background noise [3]. It is a transducer applied to the skin surrounding the larynx to pick up speech signals transmitted through the skin, and hence, it is relatively unaffected by environmental distortions. Another representative non-air conduction detector is the bone conduction microphone [4]. This device obtains the speech signal by picking up the vibration of the vocal cords that is transmitted to the skull. Although these devices can obtain high-quality speech signals, they need to be in close contact with the skin of the human subject, which restricts the activities of the user and may even cause discomfort or skin irritation. For non-contact speech signal detection, laser Doppler speech detection technology was proposed [5]. This optical speech detection technology performs well in long-distance speech detection [6]. Nevertheless, it is susceptible to environmental influences such as temperature. The respective shortcomings of the above speech detection technologies limit their applications for human speech detection.

In recent years, a new non-contact vital sign detection technology, biomedical radar, has gradually gained attention in fields such as medical monitoring and military applications [7,8,9]. The biomedical radar uses electromagnetic waves as the detection medium. When the electromagnetic waves reach the human body, their phase and frequency are modulated by the tiny movements on the body surface caused by the physiological activity of the human body. Human physiological signals can be obtained after demodulation. In 1971, Caro used continuous wave radar to monitor human respiration for the first time [10], and since then, researchers have begun to apply it to the monitoring of human vital signs. Building on this work, the use of radar for speech signal detection has attracted the attention of many researchers.

In [11], a millimeter-wave (MMW) Doppler radar with grating structures was first proposed to detect speech signals. The operating principle was investigated based on the wave propagation theory and equations of the electromagnetic wave. An electromagnetic wave (EMW) radar sensor was developed in 1994 [12]. It was then named the glottal electromagnetic microwave sensor (GEMS) and used to measure the motions of the vocal organs during speech, such as the vocal cords, trachea, and throat [13,14,15]. In [14], the speech phonation mechanism was discussed and a vocal tract excitation model was presented. In 2005, Holzrichter verified through a special set of experiments that the vibration source of the vocal organs detected by the EM radar sensor is mainly the vocal fold [16]. However, the GEMS also needs to be placed close to the mouth or the throat.

In 2010, a 925 MHz speech radar system with a coherent homodyne demodulator was presented for extracting speech information from the vocal vibration signal of a human subject [17]. The results showed that the measured speech radar signals had excellent consistency with the acoustic signals, which validated the speech detection capability of the proposed radar system. In [18], a novel 35.5 GHz millimeter-wave radar sensor with a superheterodyne receiver and high operating frequency was presented to detect speech signals. Based on this radar, this group enhanced the radar speech signals with a Wiener filter method based on the wavelet entropy and bispectrum algorithm, which accurately estimates and updates the noise spectrum over whole signal segments [19,20]. Moreover, they proposed a 94 GHz MMW radar system to detect human speech in free space [21,22] and utilized it to detect the vibration signal from human vocal folds [23]. However, the experiments only demonstrated the similarity of the vocal fold vibration frequency detected by the radar, microphone, and vibration measurement sensor. In our previous work, we extracted the time-varying vocal fold vibration frequency of tonal and non-tonal languages. The low relative errors showed a high consistency between the radar-detected time-varying vocal fold vibration and the acoustic fundamental frequency [24]. The variational mode decomposition (VMD) was used to obtain the time-varying vocal fold vibration [25]. However, the recovery of speech from the vocal fold vibration frequency detected by radar needs to be further explored.

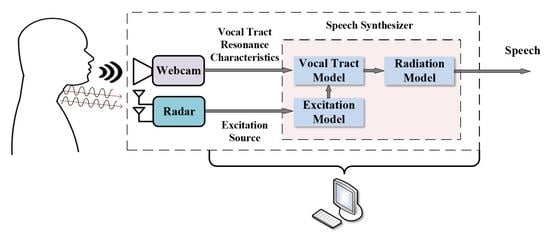

In this paper, a non-contact speech recovery technology based on a 24 GHz portable auditory radar and webcam is proposed: a formant speech synthesizer model is selected to recover speech, using the vocal fold vibration signal obtained by the continuous-wave auditory radar as the sound source excitation and the fitted formant frequency obtained from a webcam as the vocal tract resonance characteristics. We propose a method of extracting the formant frequency from visual kinetic features of the lips during pronunciation utilizing the least squares support vector machine (LSSVM). The basic detection, speech synthesis theory, and overall system description are presented in Section 2, followed by the introduction of the radar system and experimental setup in Section 3. The results and discussion are presented in Section 4. Finally, the conclusion is drawn in Section 5.

3. Experimental Setup

The photographs of the 24 GHz auditory radar from the front side and the right-hand side are illustrated in Figure 6. Figure 6a shows a pair of 4 × 4 antenna arrays designed to enhance directivity with an antenna directivity of 19.8 dBi. The antenna arrays included an RF front-end fabricated and integrated on a Rogers RT/duroid 5880 microwave substrate, thereby reducing the overall size of the device to 11.9 cm × 4.4 cm. The baseband board was integrated into the substrate, which supported the RF board as given in Figure 6b. A power interface was placed in the microcontroller unit (MCU) board, which cascaded the baseband board to power the entire probe.

To ensure high sensitivity in detecting the vocal fold vibration frequency, the system used a 24 GHz continuous wave as the transmitted waveform, which provided µm-scale motion detection sensitivity [33]. The key parameters of the auditory radar are shown in Table 1. The radio frequency signal was transmitted through the transmitting antenna and also acted as the local oscillator (LO) for the mixer of the receiving chain. At the receiver, the received echo signal was first amplified by two-stage low-noise amplifiers (LNAs). Instead of an existing 24 GHz integrated mixer chip, a cost-effective six-port structure was used here. The output of the six-port down-converter was a differential quadrature signal, which was amplified by two differential amplifiers to produce a baseband I/Q signal. The received RF gain and baseband gain were 34 dB and 26 dB, respectively. The baseband signal was fed to a 3.5 mm audio jack, which could be easily connected to the audio interface of a laptop or smartphone to process the signal in real time.
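To illustrate why a 24 GHz carrier can resolve µm-scale motion from the baseband I/Q signal, the following minimal sketch simulates an ideal I/Q pair and recovers the displacement by arctangent demodulation. It rests on several assumptions that are not taken from the paper: ideal I/Q channels without DC offset or imbalance, arctangent demodulation as the recovery method, and a hypothetical 10 µm, 180 Hz vibration. All function and variable names are illustrative.

```python
import numpy as np

C = 299_792_458.0   # speed of light, m/s
F_CARRIER = 24e9    # carrier frequency from the text, Hz
FS = 44_100         # sampling rate from the text, Hz


def simulate_iq(displacement_m, wavelength_m, phase_offset=0.3):
    """Ideal baseband I/Q for a moving target (assumed signal model).

    The round trip doubles the path, so the phase is 4*pi*x/lambda.
    phase_offset stands in for the unknown constant path phase.
    """
    phase = 4.0 * np.pi * displacement_m / wavelength_m + phase_offset
    return np.cos(phase), np.sin(phase)


def demodulate_displacement(i_sig, q_sig, wavelength_m):
    """Arctangent demodulation; returns zero-mean displacement in metres."""
    phase = np.unwrap(np.arctan2(q_sig, i_sig))
    displacement = phase * wavelength_m / (4.0 * np.pi)
    return displacement - displacement.mean()


wavelength = C / F_CARRIER                          # about 12.5 mm
t = np.arange(4410) / FS                            # 0.1 s of samples
true_disp = 10e-6 * np.sin(2 * np.pi * 180.0 * t)   # hypothetical vibration

i_sig, q_sig = simulate_iq(true_disp, wavelength)
recovered = demodulate_displacement(i_sig, q_sig, wavelength)

# Dominant vibration frequency of the recovered displacement
spectrum = np.abs(np.fft.rfft(recovered))
freqs = np.fft.rfftfreq(len(recovered), 1.0 / FS)
peak_hz = freqs[np.argmax(spectrum)]
```

In this idealized model, a 10 µm displacement shifts the baseband phase by about 4π × 10 µm / 12.5 mm ≈ 0.01 rad. A real six-port receiver would additionally need DC offset and I/Q imbalance compensation before this step.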

Ten volunteers, including 4 females and 6 males (from 22 to 28 years old) without any phonation disorders, were selected for the experiments. The details of those volunteers are given in Table 2. The experimental setup is demonstrated in Figure 7. The volunteer was asked to sit on a chair, and the antennas of the 24 GHz auditory radar faced the throat of the volunteer at a distance of 50 cm. The radar data, sampled at a frequency of 44,100 Hz, were then transmitted over a wired link to a laptop and stored on it. At the same time, a cellphone (iPhone 6s) with an embedded webcam and microphone was placed 80 cm away from the volunteer to capture videos of the lips and the acoustic signal for comparison with the auditory radar.

To guarantee high-quality signals, the volunteer was required to remain seated and read specified characters or words in a quiet indoor laboratory environment during the experiment. In this paper, 8 English letters, including “A”, “B”, “C”, “D”, “E”, “I”, “O”, and “U”, and two words, “boy” and “hello”, were selected, and each letter and word was recorded 10 times by both the radar and webcam. The experimental data were processed in MATLAB and Praat (“doing phonetics by computer”). Praat is a cross-platform, multi-functional phonetics software package for analyzing, labeling, processing, and synthesizing digital voice signals and for generating various language and text reports.

4. Results and Discussion

4.1. Vocal Fold Vibration Extraction

First, the auditory radar was used to detect the vibration frequency of the vocal folds, which was verified as the fundamental frequency of the speech signals [24]. The radar-detected time-domain signal was decomposed by the VMD after filtering and segmentation as in our previous work [24]. For comparison, the microphone-detected fundamental frequency was extracted with Praat, which is known as one of the most accurate tools for this purpose in speech signal processing.

Figure 8a presents the comparison between the auditory radar-detected time-varying vocal fold vibration and the acoustic fundamental frequency values of the character “A”. The vocal cord vibration frequency detected by the radar was about 180 Hz, and the trend of the envelope was consistent with the acoustic fundamental frequency values. The comparative result of the word “boy” is shown in Figure 8b. Similarly, the radar-detected frequency closely matched the microphone-detected one. The observed fluctuation of instantaneous frequencies indicated the frequency deviation of the diphthong in this word. Here, we define the deviation of the radar-detected frequency from the acoustic fundamental frequency values as the relative error:

$$\delta = \frac{1}{N}\sum_{n=1}^{N}\frac{\left|f_{r}(t_n) - f_{v}(t_n)\right|}{f_{v}(t_n)} \times 100\%$$

where $f_{r}(t_n)$ means the radar-detected vibration frequency and $f_{v}(t_n)$ is the acoustic fundamental frequency at the moment $t_n$, $n = 1, 2, \ldots, N$. The relative errors of the fundamental frequency are shown in Table 3. From this table, we find that the relative errors of the characters and words tested were below 10%. Compared with our previous work in [24], the number of participants in the experiments increased from two to 10, and the results were similar. The low relative errors showed a high consistency between the radar-detected vibration and the acoustic fundamental frequency. In addition, the durations of these English characters and words are given in the table to illustrate the difference between characters and words.
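The relative error defined above can be computed from two frequency tracks sampled at the same time instants. The sketch below assumes the error is averaged over all instants and normalized by the acoustic value; the function name and the sample values are hypothetical and are not measurements from the paper.

```python
import numpy as np


def mean_relative_error(radar_hz, acoustic_hz):
    """Mean relative error in percent between two equally sampled tracks.

    Assumption: each instant is normalized by the acoustic fundamental
    frequency, then the per-instant errors are averaged.
    """
    radar_hz = np.asarray(radar_hz, dtype=float)
    acoustic_hz = np.asarray(acoustic_hz, dtype=float)
    if radar_hz.shape != acoustic_hz.shape:
        raise ValueError("tracks must have the same number of samples")
    return float(np.mean(np.abs(radar_hz - acoustic_hz) / acoustic_hz) * 100.0)


# Hypothetical tracks around 180 Hz, for illustration only
radar_track = [178.0, 181.0, 185.0, 179.0]
acoustic_track = [180.0, 180.0, 182.0, 181.0]
error_percent = mean_relative_error(radar_track, acoustic_track)
```

For these illustrative tracks the result is roughly 1.1%, well below the 10% bound reported for the tested characters and words.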

4.2. Formant Frequency Fitting

Videos of the 10 subjects reading characters and words were segmented into silent and voiced segments according to the audio signal. A simple relationship between the three components of the red-green-blue (RGB) color space can be used to separate the lip region from the skin region [34]. The R (red) component and the B (blue) component were each subtracted from the G (green) component, and the two differences were then added:

$$f = (G - R) + (G - B)$$

where $R$, $G$, and $B$ are the R, G, and B components in the RGB image. As a result, $f$ had a good performance in distinguishing lip and skin.
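The color combination described above can be applied per pixel with array arithmetic. The following sketch is an illustration only: it reads the description literally (R and B each subtracted from G, then summed), and the threshold value, the direction of the comparison, and the toy pixel values are assumptions rather than settings from the paper.

```python
import numpy as np


def lip_skin_map(rgb):
    """Per-pixel f = (G - R) + (G - B) for an H x W x 3 RGB image.

    The image is cast to float first so that uint8 subtraction cannot wrap.
    """
    rgb = np.asarray(rgb, dtype=np.float64)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    return (g - r) + (g - b)


def binarize(f_map, threshold):
    """Mark pixels below the threshold as lip.

    Assumption: lips are redder and less green than skin, so their f is lower.
    """
    return f_map < threshold


# Toy 1 x 2 image: one skin-like pixel and one lip-like pixel (hypothetical)
image = np.array([[[200, 150, 130], [180, 70, 90]]], dtype=np.uint8)
f_map = lip_skin_map(image)
lip_mask = binarize(f_map, threshold=-80.0)
```

In practice the threshold would have to be tuned to the camera and lighting, and small isolated regions would still need to be removed before contour extraction.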

Then, lip movement images obtained from the voiced segments of the videos were binarized, and isolated noise points were removed to extract the contour of the outer lip. Video image processing can obtain visual information such as color, brightness, and outline from the image of each frame. In addition to visual information, motion information can also be extracted from the difference between consecutive frames. Four sets of lip motion features during phonation were arranged in the time order of the video frames to form a set of feature sequences. This set of feature sequences preserved the chronological relationship of the frames, as well as the global motion relationship of the video frames. Sixty percent of these features were used as the input samples, and the corresponding four microphone-detected formants were used as the output samples in LSSVM training. The remaining 40% of these lip movement features were used as the input testing samples, and the output was the fitted formants.

The performance and convergence of the LSSVM model depended strongly on the input and the parameters. When establishing the LSSVM prediction model, the regularization parameter and the kernel parameter had a great influence on the performance of the model. If the value of the regularization parameter was small, the penalty on the sample data was small, which led to larger training errors and strengthened the generalization ability of the algorithm. If the value of the regularization parameter was large, the corresponding weight was small, and the generalization ability of the algorithm was poorer. When using a Gaussian kernel function, a small value of the kernel parameter would cause over-learning of the sample data, and too large a value would cause under-learning of the sample data. In this paper, these two parameters were determined by cross-validation and experience.
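To make the role of the two parameters concrete, the sketch below implements the standard LSSVM regression formulation, in which training reduces to solving one linear system, with a Gaussian kernel and a 60/40 split. The data are synthetic, a single formant is fitted at a time, and the values of `GAMMA` and `SIGMA` are placeholders; none of these are the paper's features or settings.

```python
import numpy as np


def rbf_kernel(a, b, sigma):
    """Gaussian kernel matrix; the 2*sigma**2 scaling is one common convention."""
    sq_dist = (
        np.sum(a ** 2, axis=1)[:, None]
        + np.sum(b ** 2, axis=1)[None, :]
        - 2.0 * a @ b.T
    )
    return np.exp(-np.maximum(sq_dist, 0.0) / (2.0 * sigma ** 2))


def lssvm_fit(x_train, y_train, gamma, sigma):
    """Solve [[0, 1^T], [1, K + I/gamma]] [bias; alphas] = [0; y]."""
    n = x_train.shape[0]
    system = np.zeros((n + 1, n + 1))
    system[0, 1:] = 1.0
    system[1:, 0] = 1.0
    system[1:, 1:] = rbf_kernel(x_train, x_train, sigma) + np.eye(n) / gamma
    rhs = np.concatenate(([0.0], y_train))
    solution = np.linalg.solve(system, rhs)
    return solution[0], solution[1:]


def lssvm_predict(x_train, bias, alphas, x_new, sigma):
    """Evaluate the fitted model at new feature vectors."""
    return rbf_kernel(x_new, x_train, sigma) @ alphas + bias


GAMMA, SIGMA = 100.0, 1.0  # placeholder values, not the paper's settings

# Synthetic stand-in: 4 lip features per frame mapped to one formant (Hz)
rng = np.random.default_rng(0)
features = rng.uniform(0.0, 1.0, size=(50, 4))
formant_hz = 500.0 + 300.0 * features[:, 0] - 200.0 * features[:, 1]

n_train = (len(features) * 6) // 10  # 60% for training, 40% for testing
x_train, y_train = features[:n_train], formant_hz[:n_train]
x_test = features[n_train:]

bias, alphas = lssvm_fit(x_train, y_train, GAMMA, SIGMA)
train_fit = lssvm_predict(x_train, bias, alphas, x_train, SIGMA)
fitted_formant = lssvm_predict(x_train, bias, alphas, x_test, SIGMA)
```

In this formulation each training residual equals the corresponding `alphas` entry divided by `GAMMA`, which is why a small regularization parameter tolerates larger training errors, while a small `SIGMA` makes the kernel narrow and prone to over-learning.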

Four sets of formants fitted by the LSSVM were compared with the acoustic formants extracted by the speech signal processing software Praat, and the results for the letter “A” and the word “boy” are presented in Figure 9. Figure 9 indicates that although some fitted values were not very close to the acoustic ones, the trend of the fitted formants was consistent with the trend of the formant frequency of the original speech when the word was pronounced. Figure 9a shows the fitted formants of “A”, and since it was a monophthong without any changes of tone and pitch, the fitting effect was slightly better than that of the word “boy” illustrated in Figure 9b. The acoustic formants of “boy” extracted by Praat had some discontinuities, and the LSSVM fitting results could not follow these drastic transitions.

There are various reasons for the disagreement appearing in the comparisons of Figure 9. Although Praat is a classic and widely used tool for extracting formant frequencies in speech signal processing, it has inherent errors. As presented in Figure 9, the acoustic formants extracted by Praat had some discontinuities, and higher-order formants may have been mistaken for lower-order formants, which showed the inaccuracy in extracting formants. Hence, the trained model may have had large errors locally when fitting the formants of the testing data. However, for a specific sound, the fitted formant frequencies were within a reasonable range. These errors would not have much effect on the final speech synthesis and the recognition of the synthesized sound. Furthermore, since the formant was not completely determined by the shape of the lips, the formant fitting was relatively inaccurate due to the lack of information. The effect of fitting needed to be judged by the effect of speech synthesis.

It can be seen from Table 4 that there was a certain error between the frequency of the fitted formant and the frequency of the formant extracted from the original speech. The error of the first formant of “E” in the table is significantly larger than the others because when the tester was pronouncing the English letter “E”, the first formant frequency was about 200–300 Hz, which was much lower than the first formant frequency of other letters. Compared with other letters, the denominator (the acoustic formant frequency) was smaller when calculating the relative error, and therefore, the relative error of the first formant of “E” was larger than the others. The formant frequency was within a reasonable range, and the fitting effects needed to be evaluated by the speech synthesis results.
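A short worked example shows how strongly the denominator affects the relative error. The 50 Hz absolute error and the 700 Hz comparison formant are hypothetical values chosen for illustration; only the 200–300 Hz range for “E” comes from the text.

```latex
\frac{50\ \text{Hz}}{250\ \text{Hz}} \times 100\% = 20\%,
\qquad
\frac{50\ \text{Hz}}{700\ \text{Hz}} \times 100\% \approx 7.1\%
```

The same absolute deviation therefore yields a relative error almost three times larger for a first formant near 250 Hz than for one near 700 Hz.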

4.3. Speech Synthesis

Since the speech recovery technology introduced in this paper was based on the time-varying vocal fold vibration frequency obtained by the auditory radar as the excitation source of speech synthesis, we chose the extracted time-varying vocal fold vibration period as the pitch period excitation, white noise as the consonant excitation, and the fitted formant frequency extracted by the webcam as the vocal tract resonance characteristics to synthesize speech [26]. Rabiner proposed adding a high-frequency peak as compensation when using the formant parameters for speech synthesis [35]. Here, the fourth formant was selected as a fixed value with a center frequency of 3500 Hz and a bandwidth of 100 Hz. For the bandwidths of the other three formants, we also took fixed values. From the resonance frequency and bandwidth, the second-order filter coefficients could be calculated. To make the synthesized sound more natural, we calculated the energy of each frame of speech to adjust the amplitude of the synthesized speech so that the energy of each frame of synthesized speech was the same as the frame energy of the original speech. In the overlapping part between frames, a linear proportional overlap addition method was used. Several audio files of the synthesized speech are presented in the Supplementary Materials.
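The step from a resonance frequency and bandwidth to second-order filter coefficients can be sketched with the two-pole digital resonator commonly used in formant synthesizers. This is an assumed convention, since the paper does not state its exact coefficient formulas. Only the 3500 Hz center frequency, the 100 Hz bandwidth, and the 44,100 Hz sampling rate come from the text; the function names and the target energy are illustrative.

```python
import numpy as np

FS = 44_100  # sampling rate from the text, Hz


def resonator_coefficients(center_hz, bandwidth_hz, fs=FS):
    """Coefficients of y[n] = a*x[n] + b*y[n-1] + c*y[n-2] (assumed form)."""
    period = 1.0 / fs
    c = -np.exp(-2.0 * np.pi * bandwidth_hz * period)
    b = 2.0 * np.exp(-np.pi * bandwidth_hz * period) * np.cos(
        2.0 * np.pi * center_hz * period
    )
    a = 1.0 - b - c  # normalizes the gain at 0 Hz to one
    return a, b, c


def apply_resonator(x, coeffs):
    """Run the second-order recursion over the input samples."""
    a, b, c = coeffs
    y = np.zeros(len(x))
    y1 = y2 = 0.0
    for n, sample in enumerate(x):
        y[n] = a * sample + b * y1 + c * y2
        y2, y1 = y1, y[n]
    return y


def match_frame_energy(synth_frame, target_energy):
    """Scale a synthesized frame so that its energy equals target_energy."""
    energy = np.sum(synth_frame ** 2)
    if energy == 0.0:
        return synth_frame
    return synth_frame * np.sqrt(target_energy / energy)


# Fourth formant from the text: 3500 Hz center, 100 Hz bandwidth
coeffs = resonator_coefficients(3500.0, 100.0)
impulse = np.zeros(4410)
impulse[0] = 1.0
response = apply_resonator(impulse, coeffs)

# Locate the resonance peak of the filter from its impulse response
freqs = np.fft.rfftfreq(len(response), 1.0 / FS)
peak_hz = freqs[np.argmax(np.abs(np.fft.rfft(response)))]

# Match a frame to a hypothetical original-speech frame energy
scaled = match_frame_energy(response[:1024], target_energy=2.0)
```

Cascading one such resonator per formant and driving the cascade with the radar-derived pitch pulses (or white noise for consonants) gives the basic structure of a formant synthesizer. The overlap-add between frames is omitted here.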

Figure 10 shows the detection results of the microphone system and the radar system for the English character “A”, which were compared with the synthesized speech. Figure 10a–c presents the time-domain waveform of the microphone, the time-domain waveform of the radar after filtering and segmentation, and the time-domain waveform of the synthesized speech, respectively. Figure 10d–f depicts the spectrograms of the signals detected by the microphone, the radar, and the synthesized speech, respectively. As shown in Figure 10a–c, compared to the microphone-detected and radar-detected signals, the synthesized speech signals lost a part of the high-frequency components, which could also be seen in Figure 10d–f. The fundamental frequency in Figure 10d–f is similar, while the high-frequency components and energy distributions are different. As illustrated, there are multiple frequencies in both Figure 10d,f, and the energy was mainly distributed at about 200–400 Hz, 2000–2200 Hz, and 3000–3800 Hz. The similar distribution of the energy in Figure 10d,f showed the effect of the fitted formants. Unlike Figure 10d, Figure 10e has only a few low-frequency components, which made the radar-detected signals impossible to recognize as speech. Although the synthesized speech could be recognized successfully as English letters, the change of the timbre of the synthesized speech was not obvious, and the speech sounded a little unnatural, which may be ascribed to the difference between the high-frequency components of the microphone-detected result in Figure 10d and the synthesized speech in Figure 10f.

Figure 11 depicts the detection results of the microphone system and the radar system for the English word “boy”, which were compared with the synthesized speech. Like Figure 10, Figure 11a–c shows the loss of high-frequency components in the time-domain synthesized speech waveform. Figure 11d–f illustrates that in the spectrograms, the microphone-detected, radar-detected, and synthesized speech were consistent in their distribution patterns. The similar distribution change of the energy in Figure 11d,f showed a high consistency between acoustic and fitted formants. The increase and decrease of frequencies presented by the distribution patterns indicated the frequency deviation of the diphthong in this word. Furthermore, there were differences between Figure 11d,f in high frequencies.

The mean opinion score (MOS) is a subjective measurement and probably the most widely adopted and the simplest procedure for evaluating speech quality [36]. In this paper, MOS tests were conducted in which the 10 volunteers were asked to assess and compare the synthesized speech and the original speech. The full score was five points according to the following criteria: 1: unqualified; 2: qualified; 3: medium; 4: good; 5: excellent. The assessor was required to listen to the synthesized speech every five minutes to avoid psychological cues caused by continuous testing. The evaluation results are shown in Figure 12, and the effect of the synthesized speech obtained by the speech restoration system of this paper was satisfactory.

5. Conclusions

In our former work, the vibration of the vocal folds was extracted by the continuous-wave radar based on VMD and was shown to be consistent with the fundamental frequency of the original speech. However, little has been done to recognize and recover the original speech directly from the vibration of the vocal folds. Therefore, in this article, we proposed a non-contact speech recovery technology based on a 24 GHz portable auditory radar and webcam. It uses the vocal fold vibration signal obtained by the continuous-wave auditory radar as the sound source excitation and the fitted formant frequency obtained by the webcam as the vocal tract resonance characteristics to recover speech through the formant speech synthesizer model. The LSSVM fitting model was built on the mapping between the motion characteristics of the mouth shape and the formant frequency during pronunciation, with the lip motion characteristics as the input training and testing samples and the first four formants as the output training and testing samples. The fitted formants were compared with the formant frequencies of the original speech and were found to be similar within a reasonable range. Then, speech synthesis was conducted based on the formant speech synthesizer. Experiments and results were presented using the radar, webcam, and microphone. The MOS evaluation results of the proposed technology showed a relatively high consistency between acoustic and synthesized speech, which enables potential applications in robust speech recognition, restoration, and surveillance.