3.3.1. Classic D–S Fusion Algorithm

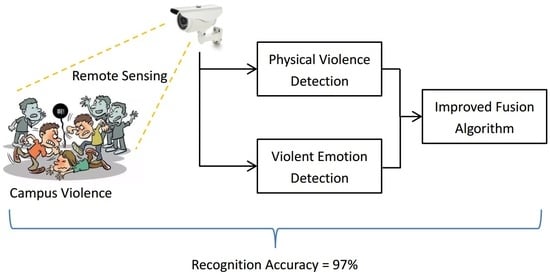

Since the video-based physical violence detection procedure and the audio-based bullying emotion detection procedure were executed separately, the two results had to be combined. Each detector has two possible results, i.e., true and false, so there are four possible combinations:

- (1) physical violence = true and bullying emotion = true: this is a typical campus violence scene, and is exactly what the authors want to detect;
- (2) physical violence = true and bullying emotion = false: this can be a playing or sport scene with physical confrontation. According to the authors’ observations, campus violence events are usually accompanied by bullying emotions, so this case is classified as non-violence in this paper;
- (3) physical violence = false and bullying emotion = true: this can be an argument or a criticism scene. In this paper, the authors focus on physical violence, so they also classify this case as non-violence;
- (4) physical violence = false and bullying emotion = false: this is a typical non-violent scene.

The fusion result is based on these classification criteria. Moreover, note that the actual outputs of the physical violence recognition and the bullying emotion recognition are not definite results such as true (1) or false (0), but two probabilities. If the output probabilities were simply mapped to 1 or 0 and the two outputs combined with a simple AND operation, much of the information provided by the classifiers would be lost. Therefore, a fusion algorithm was necessary.
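The information loss mentioned above can be illustrated with a minimal sketch. The function name `hard_and`, the 0.5 threshold, and the probability pairs are hypothetical and are not taken from the paper.

```python
def hard_and(p_violence: float, p_bullying: float, threshold: float = 0.5) -> bool:
    """Threshold each probability to 0/1, then AND the two binary decisions."""
    return (p_violence >= threshold) and (p_bullying >= threshold)


# Hypothetical classifier outputs: (video probability, audio probability).
confident = (0.95, 0.90)   # both classifiers are very sure
borderline = (0.51, 0.52)  # both classifiers are barely above the threshold
near_miss = (0.99, 0.49)   # video is almost certain, audio just below threshold

for pair in (confident, borderline, near_miss):
    print(pair, "->", hard_and(*pair))
```

The confident and borderline pairs receive the same decision, and the near-miss pair is rejected although the video evidence is very strong. A fusion rule that operates on the probabilities themselves can distinguish these cases.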

Dempster–Shafer (D–S) theory is a kind of uncertain evidential reasoning theory. Even if the prior probability is unknown, the D–S theory can still perform fuzzy reasoning. Assume that $A$ is a proposition and $\Theta$ is the recognition frame of $A$, i.e., $\Theta$ contains all the possible hypotheses of $A$, and $2^{\Theta}$ contains all the subsets of $\Theta$. In a subset of $\Theta$, if all the elements are mutually exclusive and finite, then only one element is the correct hypothesis of $A$. The hypothesis is supported by several pieces of evidence, and this evidence has certain credibilities as well as uncertainties and insufficiencies. Therefore, D–S defines basic probability assignment (BPA) functions marked as $m$. The BPA maps the set $2^{\Theta}$ into the interval $[0,1]$, i.e.,

$$m: 2^{\Theta} \rightarrow [0,1].$$

The BPA function $m$ satisfies

$$m(\varnothing) = 0, \qquad \sum_{a \subseteq \Theta} m(a) = 1,$$

where $a$ is a possible hypothesis of $A$.
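The BPA conditions can be checked mechanically. The sketch below assumes the standard conditions (no mass on the empty set, masses in [0,1], total mass 1) and represents subsets of the frame as `frozenset` objects. The helper names, the two-hypothesis frame, and the mass values are hypothetical.

```python
from itertools import combinations
import math


def power_set(frame):
    """All subsets of the frame as frozensets, i.e., the set 2^Theta."""
    items = list(frame)
    return [frozenset(c) for r in range(len(items) + 1)
            for c in combinations(items, r)]


def is_valid_bpa(m, frame, tol=1e-9):
    """Check the standard BPA conditions for a dict {frozenset: mass}."""
    frame = frozenset(frame)
    if m.get(frozenset(), 0.0) != 0.0:      # the empty set carries no mass
        return False
    for subset, mass in m.items():
        if not subset <= frame:             # focal elements are subsets of Theta
            return False
        if not 0.0 <= mass <= 1.0:          # masses lie in [0, 1]
            return False
    return math.isclose(sum(m.values()), 1.0, abs_tol=tol)  # masses sum to 1


# Hypothetical frame and masses, for illustration only.
frame = {"violence", "non-violence"}
m_video = {
    frozenset({"violence"}): 0.7,
    frozenset({"non-violence"}): 0.2,
    frozenset(frame): 0.1,  # mass on the whole frame expresses "cannot tell"
}
print(len(power_set(frame)), is_valid_bpa(m_video, frame))
```

Unlike a probability distribution, a BPA may place mass on a set of several hypotheses, which is how the theory expresses ignorance.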

Once the recognition frame $\Theta$ is determined, mark the BPA function of the $i$-th piece of evidence as $m_i$. When making a decision, multiple pieces of evidence are usually taken into consideration. In the classic D–S theory, the fusion function is given as,

$$m(A) = \frac{1}{1-k} \sum_{A_1 \cap A_2 \cap \cdots \cap A_n = A} \; \prod_{i=1}^{n} m_i(A_i), \qquad A \neq \varnothing,$$

where

$$k = \sum_{A_1 \cap A_2 \cap \cdots \cap A_n = \varnothing} \; \prod_{i=1}^{n} m_i(A_i)$$

represents the conflict level between evidence. $k$ is the conflict factor. When $k = 0$, there is no conflict between the evidence; when $k > 0$, there is conflict between the evidence, and the conflict level increases with the value of $k$; when $k = 1$, the evidence is in complete conflict, the normalization factor $1 - k$ vanishes, and the classic D–S theory is no longer applicable.
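As an illustration of the classic rule, the following is a minimal sketch of the textbook two-source Dempster combination. It is not the authors' implementation. The common two-hypothesis frame and all mass values are hypothetical.

```python
from itertools import product


def dempster_combine(m1, m2):
    """Combine two BPAs (dict: frozenset -> mass) with Dempster's rule.

    Returns the fused BPA and the conflict factor k.
    """
    fused = {}
    k = 0.0
    for (b, mass_b), (c, mass_c) in product(m1.items(), m2.items()):
        inter = b & c
        if inter:
            # Agreeing mass goes to the intersection of the two focal elements.
            fused[inter] = fused.get(inter, 0.0) + mass_b * mass_c
        else:
            # Mass on an empty intersection is conflict.
            k += mass_b * mass_c
    if not fused or k >= 1.0:
        raise ValueError("complete conflict (k = 1): the classic rule is undefined")
    return {a: v / (1.0 - k) for a, v in fused.items()}, k


# Hypothetical frame and masses, for illustration only.
V = frozenset({"violence"})
N = frozenset({"non-violence"})
THETA = V | N  # the whole frame: "cannot tell"

m_video = {V: 0.8, N: 0.1, THETA: 0.1}
m_audio = {V: 0.6, N: 0.3, THETA: 0.1}

fused, k = dempster_combine(m_video, m_audio)
print(f"k = {k:.2f}")
for subset, mass in fused.items():
    print(sorted(subset), round(mass, 4))
```

With these numbers the conflicting products are 0.8 × 0.3 and 0.1 × 0.6, so k = 0.30, and the remaining mass is rescaled by 1/(1 − k).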

The classic D–S theory has some limitations:

- (1) If there is serious conflict between the evidence, then the fusion result is unsatisfactory;
- (2) It is difficult to identify the degree of fuzziness;
- (3) The fusion result is greatly influenced by the value of the probability distribution function.

In this work, there are four possible combinations of video samples and audio samples, i.e., violent video and bullying audio, violent video and non-bullying audio, non-violent video and bullying audio, and non-violent video and non-bullying audio. Among these four combinations, the combination of violent video and bullying audio is considered to be a violent scene, the combination of violent video and non-bullying audio is considered to be a scene of playing or competitive games, the combination of non-violent video and bullying audio is considered to be a criticism scene, and the combination of non-violent video and non-bullying audio is considered to be a daily-life scene. The first scene is considered as violence and marked as positive, and the remaining three are considered as non-violence and marked as negative. In this situation, there exist strong conflicts between video evidence and audio evidence, so the classic D–S theory needs to be improved.

Quite a few researchers have worked on improving the classic D–S fusion theory [17]. The improvements of the D–S fusion theory can be divided into two types: improvements on the BPA functions and improvements on the fusion rules.

This paper improves the classic D–S fusion algorithm from the two abovementioned aspects, and compares the fusion results to decide the better one.

3.3.2. Improvement on BPA Functions

Firstly, the authors improved the BPA functions. Classic D–S fusion theory has the problem of veto power when a BPA function becomes 0. To solve this problem, BPA functions cannot be 0. Therefore, the authors redefined BPA functions in the form of exponential functions,

where $n_i$ is the level of support of evidence $i$ to the hypothesis, and $r$ is a regulatory factor which depends on the level of support. We normalized $N_i$ as,

According to (10), $N_i > 0$, so the BPA functions cannot be 0. Therefore, the problem of veto power is solved. When fusing the recognition results, not all of the evidence is credible, so this paper introduces a correlation coefficient to represent the confidence level of the evidence. On the recognition frame, the correlation coefficient between two pieces of evidence can be expressed as,

where $n_i$ represents the support level of evidence $i$ on a recognition result in the recognition frame. According to (12), if the support level of either of the two pieces of evidence is 0, then the correlation coefficient is 0, and this means that the correlation between the two pieces of evidence is weak. If the support levels of two pieces of evidence upon one recognition result are the same, then their correlation coefficient is 1, and this means that the correlation between the two pieces of evidence is strong. Given $n$ pieces of evidence, their correlation coefficient matrix is,

$$\begin{pmatrix} 1 & A_{12} & \cdots & A_{1n} \\ A_{21} & 1 & \cdots & A_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ A_{n1} & A_{n2} & \cdots & 1 \end{pmatrix}$$

$A_{ij} \in [0,1]$ is the correlation coefficient of evidence $i$ and $j$, $A_{ij} = A_{ji}$, and $A_{ij} = 1$ when $i = j$. Define the absolute correlation degree as,

We used the absolute correlation degree as the weight of the support level of a piece of evidence on a recognition result, and recalculated the support level as,

Finally, we fused the recognition results with the new evidence.
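The veto problem that motivates this subsection can be reproduced with a small sketch. It uses the special case in which every focal element is a single hypothesis, where the classic rule reduces to a normalized element-wise product. The function `floor_and_renormalize` is a hypothetical stand-in that merely keeps every mass strictly positive. It is not the exponential BPA redefinition used by the authors. The hypotheses and mass values are also hypothetical.

```python
def combine_singletons(m1, m2):
    """Classic D-S rule when every focal element is a single hypothesis:
    the fused mass is the normalized element-wise product."""
    joint = {h: m1.get(h, 0.0) * m2.get(h, 0.0) for h in set(m1) | set(m2)}
    agreement = sum(joint.values())  # equals 1 - k
    if agreement == 0.0:
        raise ValueError("complete conflict: the classic rule is undefined")
    return {h: v / agreement for h, v in joint.items()}


def floor_and_renormalize(m, eps=1e-3):
    """Hypothetical fix (NOT the paper's formula): keep every mass >= eps."""
    floored = {h: max(v, eps) for h, v in m.items()}
    total = sum(floored.values())
    return {h: v / total for h, v in floored.items()}


# Two strongly conflicting sources; each gives one hypothesis a mass of exactly 0.
m1 = {"a": 0.99, "b": 0.01, "c": 0.0}
m2 = {"a": 0.0, "b": 0.01, "c": 0.99}

vetoed = combine_singletons(m1, m2)
softened = combine_singletons(floor_and_renormalize(m1), floor_and_renormalize(m2))

print("classic:", vetoed)
print("floored:", {h: round(v, 3) for h, v in sorted(softened.items())})
```

In the classic result, hypothesis "b" receives all the mass although both sources rate it at only 0.01, because each source vetoes the other's preferred hypothesis with a zero. Once no mass is exactly 0, "a" and "c" keep non-zero fused mass.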

3.3.3. Improvement on Fusion Rules

Although the above-mentioned improvement can solve the problem of veto power, the computational cost is increased. Therefore, the authors considered improving the fusion rules.

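The Yager fusion rule is an improvement of the classic D–S fusion theory. The Yager fusion rule introduces an unknown proposition $m(X)$ to solve the problem of evidence conflict. The improvement is given as,

$$\begin{cases} m(\varnothing) = 0, \\ m(A) = \sum\limits_{B \cap C = A} m_1(B)\, m_2(C), & A \neq \varnothing,\ A \neq X, \\ m(X) = \sum\limits_{B \cap C = X} m_1(B)\, m_2(C) + k, \end{cases}$$

where $A$, $B$, and $C$ are recognition results and $m(A)$ means the probability of result $A$, $X$ is an unknown proposition, and $k$ is a conflict factor which is defined as,

$$k = \sum_{B \cap C = \varnothing} m_1(B)\, m_2(C).$$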
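The Yager rule can be sketched in a few lines. The code below follows the textbook two-source form, in which the conflicting mass $k$ is added to the unknown proposition $X$ (taken here to be the whole frame) instead of being normalized away. The frame and mass values are hypothetical, and the sketch is not the authors' implementation.

```python
from itertools import product


def yager_combine(m1, m2, frame):
    """Yager's rule for two BPAs (dict: frozenset -> mass).

    The conflicting mass k is assigned to the whole frame X ("unknown").
    Returns the fused BPA and k.
    """
    unknown = frozenset(frame)
    fused = {}
    k = 0.0
    for (b, mass_b), (c, mass_c) in product(m1.items(), m2.items()):
        inter = b & c
        if inter:
            fused[inter] = fused.get(inter, 0.0) + mass_b * mass_c
        else:
            k += mass_b * mass_c
    # No rescaling by 1 / (1 - k): the conflict becomes ignorance instead.
    fused[unknown] = fused.get(unknown, 0.0) + k
    return fused, k


# Hypothetical frame and masses, for illustration only.
V = frozenset({"violence"})
N = frozenset({"non-violence"})
X = V | N

m_video = {V: 0.8, N: 0.1, X: 0.1}
m_audio = {V: 0.6, N: 0.3, X: 0.1}

fused, k = yager_combine(m_video, m_audio, X)
for subset, mass in fused.items():
    print(sorted(subset), round(mass, 4))
```

Because the conflict is moved to $X$ rather than redistributed, the masses on the specific results stay unscaled and the mass on the unknown proposition grows with $k$, which is the added uncertainty discussed in the following paragraph.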

Although the Yager algorithm can decrease the credibility of evidence with significant conflicts, it brings in more uncertainty. Therefore, some researchers [18] improved the Yager fusion algorithm. Mark evidence as $m_1, m_2, \ldots, m_n$, and recognition result sets as $F_1, F_2, \ldots, F_n$. The conflict factor $k$ is defined as,

The improved Yager fusion rule introduces a new concept named evidence credibility, which is calculated as,

where $n$ is the number of pieces of evidence. Calculate the average support level of evidence on recognition result $A$ as,

The improved Yager fusion algorithm introduces the concepts of evidence credibility and average support level, based on which a new fusion rule is proposed as,

This paper further improves this fusion rule by assigning evidence conflict levels according to the average support levels on the recognition results. The proposed fusion rule is given as,

where $k$ represents the conflict between evidence. This fusion rule can be rewritten as,

where $y(A)$ is the average trust level on $A$. The calculation of the average trust level is given below.

Firstly, define the energy function of a recognition result as,

where $|A|$ represents the number of possible recognition results.

Assume that $m_1$ and $m_2$ are two independent pieces of evidence on the recognition frame $\Theta$, and $A_i$ and $B_j$ ($i, j = 1, 2, \ldots, n$) are their focal elements. Mark their non-empty intersection as $C_k$ ($k = 1, 2, \ldots, n$) and the union of the recognition frames of two completely conflicting pieces of evidence as $F$, and let $F_i$ represent a certain recognition result in $F$. Then, the energy function of conflicting focal elements is,

The average trust level is calculated as follows:

Step 1. Perform basic evidence fusion as,

If there is significant conflict between two pieces of evidence, it is difficult to judge which piece of evidence is correct without a third piece of evidence. Therefore, the authors assigned this conflict to the intersection of the two pieces of evidence, given as,

Step 2. Two pieces of evidence are related if the intersection of their recognition frames is not empty. Then, calculate the related conflict sum of the two pieces of evidence as,

Step 3. Assign the conflict according to the conflict energy of the related focal elements as,

Step 4. Calculate the average trust level as,

Section 4 compares the existing algorithm with the improved algorithms.