1. Introduction

In recent years, target detection technology based on deep learning has made great breakthroughs in detection performance, gradually replacing traditional methods, and is widely used in autonomous driving [

1,

2], face recognition [

3,

4], remote sensing object detection [

5,

6], pose detection [

7,

8], and many other fields. Among them, the target detection algorithm application of deep learning in synthetic aperture radar (SAR) ship detection has received extensive attention. Object detection methods are generally divided into two-stage detection and single-stage detection. The two-stage detection first generates a preselected box through the proposal region network, and then the detection network realizes the classification and regression of the preselected box, so it has a high target recognition accuracy, but the detection speed is slow, see R-CNN series [

9,

10,

11]. Single-stage detection can directly get the detection results through the detection network, so the inference speed is faster. Typical examples are YOLO series [

12,

13,

14,

15], Retinanet [

16], and SSD [

17]. Among them, YOLOv4 proposed in 2020 can achieve a good balance between detection speed and accuracy in the process of practical application and has become one of the most widely used target detection algorithms.

Although the above methods can achieve good results when applied to SAR ship detection, due to the unique imaging mechanism of SAR images, these images are more susceptible to the influence of the atmosphere, background clutter, and illumination differences, with fewer feature details and unclear target feature information. At the same time, ship targets in SAR images are dense, and various ships are very small and blurred, and even submerged in extremely complex backgrounds, so the target detection algorithm based on deep learning misses detection and false detection in practical applications. In fact, the effective acquisition of target feature information is the key to all target detection, not only SAR ship detection. Therefore, it is necessary to construct an algorithm that can greatly enhance the expression of feature information.

Aiming at the problem of SAR target information ambiguity, some scholars [

18,

19] proposed optimizing the SAR image acquisition process and using the information-rich polarization method to enhance the identifiability of SAR target features. The echo intensity of the same target under different polarization methods is different, and the obtained target characteristic information is also different. The polarization method with better performance can obtain more target information. Therefore, using a reasonable polarization method in SAR can reduce the existence of interference data in the image and enhance the presentation of effective information, thereby improving the target detection performance. This is indeed a good approach. However, in the field of ship detection, the most important thing is to build an excellent target detection model, which can obtain ideal target feature information from complex image data, thereby greatly improving the effect of ship detection. At present, to obtain powerful features of image context information, the improvement of target detection algorithms mainly includes using basic neural networks that can extract richer features, fusing multiscale features, and weighting feature information. Liu et al. [

20] proposed the composite backbone network, which assembled multiple identical backbones through composite connections between adjacent backbones to form a deeper backbone network for feature extraction, which improved the detection performance of the network. Liu et al. [

21] proposed a path aggregation network (PANet), which fused high-level semantic information and low-level location information to obtain more feature details, thereby enhancing the feature information transmission capability of the network feature fusion module. In order to further improve the small target detection performance, Xu et al. [

22] increased the number of network detection layers from three to four to obtain more feature information. Yuan et al. [

23] introduced a receptive field block into the network to increase the receptive field and retain detailed feature information, generate feature maps with local context information, and improve the accuracy of the detection. Gao et al. [

24] added a channel attention module (CAM) attention mechanism to the bidirectional feature pyramid network module to help the network focus on more interesting targets and improve the effectiveness of feature fusion. The above works have improved the performance of detection algorithms from different perspectives. In general, the above methods improve the network detection performance by enhancing the effective expression of image feature information.

Through the above analysis, we know that there are generally two ways to enhance feature information: a new backbone network and an optimized feature fusion network. A backbone network with a reasonable architecture and excellent application effect can indeed greatly improve the model detection performance. However, how to design a suitable network is a difficult problem. In response to this problem, this paper proposes a new feature extraction convolutional transform feature extraction network (CTFENet), which is mainly composed of convolutional transform feature extraction (CTFE) modules. The design concept of the CTFE module comes from the Swin Transformer block [

25]. The Swin Transformer is mainly composed of a Swin Transformer block, which has excellent global feature capture ability, and has achieved state-of-the-art results in image classification, object detection and instance segmentation tasks. These experimental results confirm the superiority of the Swin Transformer block structure. At present, most people directly introduce the Swin Transformer to improve network performance [

26,

27,

28], and the experimental results further prove the effectiveness of the Swin Transformer block architecture. However, few have used convolutional networks to build architecturally similar modules. Therefore, we gradually analyze the architectural composition of the Swin Transformer block to propose the CTFE block.

Introducing modules to enhance the representation of feature information in the network can indeed improve the performance of the model. For example, channel and spatial attention mechanisms are introduced in the feature fusion module. In addition to the introduction of the module, it is also a good idea to optimize the convolution extraction block in the feature fusion module. Therefore, we propose the information hybrid convolutional block(IHCB) module as a convolutional feature extraction block to enhance information exchange and enhance the integrity of information transmission and feature uniqueness.

In fact, in addition to improving the network model, improving the bounding box regression decoding formula can also enhance the effective expression of feature information, thereby greatly improving network performance, and this method does not have such problems as network complexity and model design, its implementation method is simple, and it has great results.

In view of the above ideas, this paper proposes a new feature information efficient representation network (FIERNet), conducts comparative experiments on various real complex situations and different detection algorithms, and finally confirms the effectiveness of the proposed algorithm. The main contributions of this work are listed as follows.

This work analyzes the architecture of the Swin Transformer block, proposes the CTFE module, and then constructs the CTFENet. It further improves the feature extraction capability of the backbone network, enhances the breadth and accuracy of model feature details, weakens the interference of similar information and background information, and greatly reduces the occurrence of missed detection and false detection, thereby improving the accuracy of model detection.

We propose the efficient feature information fusion (EFIF) module. Specifically, first of all, this module uses IHCB to realize the mixing of spatial dimension and channel dimension, strengthen the exchange of information, and further ensure the richness and integrity of feature information. Second, the EFIF module cleverly uses the channel and spatial attention mechanism to filter invalid information hierarchically and strengthen the expression of semantic information and location information, thereby improving the detection accuracy and generalization performance of the network.

For SAR ship detection, this paper introduces and improves a new method of boundary regression decoding equation, and proposes a new decoding formula to enhance the decoding effect.

We incrementally give the optimal combination of network modules, resulting in FIERNet. This paper demonstrates the effectiveness of the method through test results on SSDD, SAR-ship datasets, and large-scale SAR ship images.

The remainder of this paper is organized as follows.

Section 2 reviews related work. In

Section 3, we describe the proposed model and discuss key design decisions. We report the results of the experimental evaluation in

Section 4 and conclude in

Section 5.

3. Feature Information Efficient Representation Network (FIERNet)

This paper proposes a target detection algorithm suitable for SAR ships. The algorithm is generally composed of a CTFENet, SPPNet, EFIF module, prediction module, and the BBRD method. The algorithm loss function consists of a regression loss function, confidence loss function, and classification loss function. Among them, the CIoU [

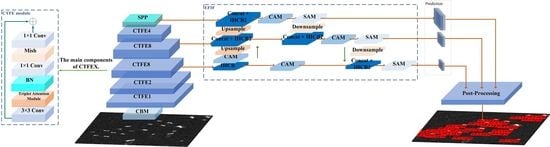

32] loss function is used as the regression loss function, and the cross-entropy loss function is used as the confidence loss function and the classification loss function, respectively. Experiments show that the detection effect of the network on different datasets is excellent in complex environments. The network structure is shown in

Figure 5.

3.1. Backbone Network

3.1.1. Convolution Transformer Feature Extraction Network (CTFENet)

The Swin Transformer model is an improved model based on the Transformer recently proposed by Microsoft. It achieves better results in vision tasks through the Swin Transformer module and can be applied to various vision tasks. Meanwhile, extensive experiments are also conducted to demonstrate the superiority of the Swin Transformer block architecture. The Swin Transformer module is mainly composed of windowed multihead self-attention (W-MSA), shifted windowed multihead self-attention (SW-MSA), layer normalization, and a multilayer perceptron (MLP). Among them, the W-MSA models the input image locally according to a fixed window, the SW-MSA realizes the interactive connection of adjacent window information, and the MLP is composed of a fully connected layer and a GELU activation function.

Inspired by the Swin Transformer block, this paper proposes a convolutional feature extraction module suitable for SAR ship target detection: the convolutional transformer feature extraction (CTFE). This module consists of a 3 × 3 convolution, triplet attention, the Mish activation function, an MLP, and a residual structure. To realize the information integration of different feature channels, the network depth and complexity are normalized. This paper uses 1 × 1 convolutions to build MLPs instead of fully connected layers, because the fully connected layer cannot realize the increase or decrease of the dimension of the feature channel. The overall structure of the module is shown in

Figure 6.

This module can extract richer target feature details, resulting in better detection results. From the later experimental results, this module achieves the original intention of this paper. Specifically, the extraction operation for the input image information of this module is mainly composed of the following steps.

- (1)

Use a 3 × 3 convolution to extract more image information and better global features, and pass this extracted information into the module. At the same time, the input channel is dimensionally reduced to further reduce the network parameters of the model and increase the practicability of the network.

- (2)

Use triplet attention to achieve cross-dimensional information interaction, and weight the corresponding feature information to highlight important image feature details and enhance the network’s recognition ability.

- (3)

Batch-normalize the output of the attention module to make the distribution of the output data more stable, accelerate the learning speed of the model, and alleviate the problem of gradient disappearance.

- (4)

Using two 1 × 1 convolution and Mish activation functions (), realize the dimensionality reduction and increase the number of channels in the feature map of the entire module, enhance the information interaction between channels, and improve the nonlinear expression ability of the model.

The instructions for using the 3 × 3 convolution and triplet attention are as follows: Use the 3 × 3 convolution kernel as a sliding window to convolve the input image to extract feature information. Then, the triplet attention is used to realize cross-dimensional information interaction and enhance the richness of feature information. The description of the batch normalization and activation function is as follows: the module abandons a large number of batch normalization and activation function combination operations between traditional convolution blocks, and only performs separate corresponding operations between 1 × 1 convolutions, and the follow-up experiments show that the method is effective. That is, the higher detection efficiency is achieved with a more streamlined architecture. This module innovatively integrates Swin’s architecture and convolution method, and thus proposes a convolution module with a better extraction effect, which has a good effect on SAR ship detection.

In this paper, a CTFE Network (CTFENet) is constructed based on the CTFE module, and the network is used as the extraction backbone of SAR ship target features to obtain more detailed feature information. Specifically, CTFENet consists of five modules: CTFE1, CTFE2, CTFE8, CTFE8, and CTFE4. At the same time, in order to further enhance the learning ability of the network and retain more feature details, this paper refers to the practice of YOLOv4, and introduces a cross stage partial (CSP) [

33] connection structure for each module. Taking CTFE2 as an example, we use the CSP structure to divide the module into two parts. The main part continues to stack the CTFE, and another part is directly mapped and merged with the main part to form a larger residual edge. As shown in

Figure 7. This method can not only solve the problem of gradient disappearance and enhance the learning ability of the model, but also reduce the network parameters and reduce the cost of model training.

3.1.2. Spatial Pyramid Pooling (SPP) Network

SPPNet [

34] uses four different scales of maximum pooling to process the feature map. The size of the pooling kernel of the maximum pooling is 1 × 1, 5 × 5, 9 × 9, and 13 × 13, and the 1 × 1 pooling kernel operation can be regarded as no processing, as shown in

Figure 8. In this paper, SPPNet is placed in the last layer of the backbone extraction network as a pooling layer, and multiple pooling windows are used to process the feature information to separate the feature information of the significant upper and lower layers, thereby greatly increasing the network receptive field.

3.2. Effective Feature Information Fusion (EFIF) Module

3.2.1. Information Hybrid Convolutional Block (IHCB)

To better realize the mixing of spatial and channel dimensions during convolution and further ensure the richness and integrity of feature information, this paper proposes an information hybrid convolutional block (IHCB). This module consists of a 1 × 1 convolution, LeakyRelu activation function, batch normalization, and ConvMixer [

35]. Among them, the ConvMixer consists of a depthwise separable convolution, GELU activation function, residual structure, and batch normalization. The IHCB is shown in

Figure 9. This paper applies the IHCB and IHCB-2 modules, respectively, where the number 2 represents the “X” in the figure.

3.2.2. EFIF Module

The path aggregation network (PANet) module is often used for feature fusion, superimposing feature information, and improving network performance. However, when the module performs feature fusion, it does not perform weighting processing on different regions in the feature map, that is, it is considered that the contributions of different feature map regions to the final prediction of the network are the same. This is unreasonable because, in real-life scenarios, the objects to be detected often have rich and complex contextual information. The operation of the direct feature fusion of the PANet module leads to the repeated superposition of a large amount of irrelevant information, which affects the network’s judgment of the main feature information of the target to be detected, resulting in missed detection and false detection.

The special semantic and location information of images is the basis for network recognition and localization. In a deep convolutional neural network, the shallow features contain location information, which is universal and conforms to the general characteristics of the target to be detected. The deep features contain rich semantic information, are more abstract and complex, have the uniqueness of the target to be detected, and are more suitable for adding attention mechanisms to enhance the expression of target semantic information and suppress irrelevant information.

In view of the above analysis, this paper proposes an effective feature information fusion (EFIF) module. This module uses IHCB as a feature acquisition convolution block, and at the same time comprehensively considers the influence of overlapping feature information of each feature layer of the network, cleverly uses the channel attention and spatial attention mechanism to filter invalid information hierarchically, and strengthens the expression of semantic information and location information.

Firstly, the channel attention module is introduced into the semantic information path with high-level features, which explicitly models the interdependence between channels, determines the content that needs to be focused on the feature map of each layer, and assists in completing the target recognition task. Secondly, a spatial attention module is introduced before each head network, the spatial attention matrix is extracted based on the preserved spatial position information, and the extracted matrix is used on the corresponding feature map of the semantic information path to determine the need to focus on a position to assist in the completion of target positioning tasks. The module structure is shown in

Figure 10. We describe the detailed operation below.

Given different input feature maps

,

, and

,

is a high-level feature map, which contains the most semantic information, which helps the network to identify the target to be detected. First, we perform IHCB on

to obtain the feature map

. We use CAM to perform the first information weighting on many feature channels in

to obtain

. This operation focuses on “what” is meaningful, given an input image, that is, the weighted processing of important semantic information. Secondly,

is upsampled and

is superimposed on the channel dimension, the superimposed feature map is subjected to IHCB2, and then upsampling is performed again to obtain

. Finally, the information of

and

is superimposed, and then the information is extracted to obtain

after IHCB2.

aggregates feature information from different feature layers, resulting in rich and complex semantic information and location information. Then, we add the CBAM attention mechanism to

, weight the channel and space, respectively, pay attention to the meaningful features in the channel and space, and obtain the network output feature information

. The rest of the

and

acquisition process is similar to

. In short, the EFIF module output is computed as:

3.3. Prediction Module

The prediction module mainly consists of a 3 × 3 convolution, batch normalization, LeakyReLU, and 1 × 1 convolution. This module processes the multiscale feature information output by the EFIF module, and obtains three output feature layers with different scales, respectively. Three kinds of anchors are set on each feature layer, and K-means clustering is used to obtain anchors according to different datasets and image input sizes. The smallest feature layer has the largest receptive field, applies larger anchors, and detects larger objects. The medium feature layer has a medium receptive field, applies medium-sized anchors, and detects medium-sized objects. Larger feature layers have smaller receptive fields, apply smaller anchors, and detect smaller objects. The specific dimensions of the prior boxes on the three prediction feature layers are shown in

Table 1.

3.4. Postprocessing—BBRD Method

The bounding box regression is to obtain the final prediction box. The decoding process of the predicted value can further improve the performance of the object detection. In

Figure 11, the purple box represents the real box, and when the prediction box is not positioned, i.e.,

, even if the target in the real box is a dog, when the dog is identified by the classifier, it can still not be detected. If we fine-tune the prediction box to adjust the frame closer to the real frame of the target, we can improve the positioning accuracy, and thus improve the detection performance. Joseph Redmon et al. then proposed a border regression method based on previous work, which is also the main method introduced in the rest of this section.

Object detection edges are generally represented by a four-dimensional vector

, representing the central point coordinates and width and height of the edges, respectively. In

Figure 11, the red box

O, represents the original prediction box and the purple box

G represents the real box of the target. The bounding box regression refers to finding a relationship, so that the predicted candidate box

O can get a regression bounding box

S that is closer to the real box

G through mapping.

Given , we search for the mapping relation such that and .

The main steps of finding the mapping relationship are the border center point translation and wide height scaling; the formula can be given as:

(1) Central point translation

Where is the predictive value of the network output, is the upper left coordinate of the grid cell at the center point of the candidate target box , is the sigmoid function, and . The control offset is within the range (0, 1), and the final obtained is the parameter value of the final predicted box. Because the value domain of the sigmoid function is an open interval, the or cannot take the boundary value, therefore or . When the central point coordinates of the regression box need to be offset to the critical point, and the adjustment range is unable to take the boundary value, it is difficult to predict the corresponding information, which consequently affects the target detection performance.

Therefore, we introduce a method [

36] to improve the center point translation of the original formulation, which we formulate as

Although the above method can solve the problem of and simultaneously, the penalty term added to the central point translation formula is relatively too large, resulting in a too large frame offset range and unsatisfactory actual effect. In this case, the present paper improves the formula penalty term to reduce the order of magnitude and further obtain a precise offset range.

The central point formula presented in this paper is as follows:

In this formula, the

is taken as

. It is evident from the formula that the coordinate offset of the center point of the candidate target box is multiplied by

, and minus

, changing from the original value domain

to

, making it easier to predict the center point in the candidate target box of the grid boundary, and at the same time, we avoid the value domain being so large as to cause an excessive candidate box offset, which affects the detection performance. The bounding box regression decoding (BBRD) process is shown in

Figure 12.

4. Experimental Results and Analysis

4.1. Dataset and Experimental Conditions

We apply FIERNet to the SAR Ship Detection Dataset (SSDD) [

37] and SAR-ship [

38] dataset to test the detection performance of the network.

SSDD dataset: SSDD is the first publicly available dataset for SAR ship target detection. It consists of 1160 images containing 2456 ship targets with a resolution of 1–15 m. The dataset is divided into many different scenes, including simple scenes with clean backgrounds, complex scenes with obvious noise, dense scenes with complex environments, and near-coastal buildings disturbing scenes. However, the results obtained on such a dataset are more credible. We set the ratio of training and test sets to 8:2.

SAR-ship dataset: The SAR-ship dataset is composed of 102 Gaofen-3 and 108 Sentinel-1 sliced images, the image size is 256 × 256, the total number of images is 43,819, and the number of ships is 59,535, which are annotated in the Pascal VOC format. The ship slice pictures in this dataset have complex environments and changeable scenes, and most of the ships are fused with the background, making them difficult to detect. The ship target has fewer feature details and weak feature information, which is beneficial to reflect the powerful feature information acquisition capability of the network proposed in this paper. We divide the dataset into a training set and test set with an 8:2 distribution ratio.

The hardware and software platform parameters implemented in this algorithm are shown in

Table 2.

In the early stage of the model training built in this paper, the model hyperparameters need to be initialized [

39]. The model hyperparameter initialization based on SSDD is shown in

Table 3. The model hyperparameter initialization for the SAR-ship dataset was similar to that of the SSDD dataset. It is only because the scales of the two datasets are different that some parameters of FIERNet on the SAR-ship dataset are different. The input size in the SAR-ship dataset was 256 × 256, the training steps were 100, and the batch size was 16.

4.2. Experimental Evaluation Metrics

In order to evaluate the effectiveness and performance of FIERNet more scientifically, this paper selected performance indicators such as Precision (P), Recall (R), F1 score, Average Precision (AP) and mean Average Precision (mAP) for testing and verification.

The formulas for calculating P and R are as follows.

where

TP and

FP represent the number of correctly classified positive samples and the number of misclassified positive samples, respectively.

FN is the number of missed samples.

The

F1 score is often used as the final measure for multiclassification problems, i.e., the harmonic mean of precision and recall. The larger the

F1 value, the better the model performance, and the smaller the value, the worse the model performance. The formula for calculating the

F1 score for each detection category is as follows.

The formula for calculating

AP is

Taking the mean value of

AP of all categories to get

mAP, its calculation formula is as follows.

The log-average miss rate (LAMR) represents the missed detection rate of the test set in the dataset. The larger the LAMR, the more missed targets are represented, whereas the smaller the LAMR, the stronger the model detection performance.

4.3. Ablation Experiments

In this section, ablation experiments are performed on the methods proposed in this paper, and the advantages and disadvantages of each method and their impact on the performance of the algorithm are discussed in detail. This experiment used the general dataset SSDD for validation. The FIERNet proposed in this paper was designed based on the architecture of YOLOv4. Therefore, in the following experiments, we used YOLOv4 as the benchmark module for experimental comparison.

As shown in

Table 4, the performance metrics change when the different modules combine, but not all module combinations can bring about a performance improvement. For example, the Recall of the combined module using the CTFENet + BBRD was decreased by 1.65% compared to the performance of the BBRD module alone, the reason being that each improvement technique is not completely independent and even though some techniques are effective when used alone, they are not effective in combination. Therefore, here we gave an incremental order of optimal network performance for various performance metrics [

36]: benchmark module + EFIF module + CTFENet + BBRD(FIERNet).

Benchmark module + BBRD(FIERNet-B): First, we considered optimizing the postprocessing method of the network model to improve the localization accuracy of detection boxes, because in general, improving the BBRD method only affects the network decoding process, and has little or no impact on the number of network parameters and inference time. We optimized the original boundary regression decoding formula and the mAP, Recall, Precision, and F1 increased by 0.79%, 0.37%, 0.41%, and 1.00%, respectively, and the LAMR decreased by 2.00%.

Benchmark module + BBRD + CTFENet (FIERNet-BC): Next, since it is difficult to continue to improve the model performance without changing the network structure, we proposed a new backbone network CTFENet to strengthen the feature extraction effect and bring about an effective improvement in performance. Among them, the mAP, Precision, and LAMR had the best effect. The mAP and Precision increased by 1.28% and 1.76%, respectively, and the LAMR decreased by 2.00%.

Benchmark module + BBRD + CTFENet + EFIF (FIERNet): The transmission and fusion module of feature information is an indispensable part of the network model, which has a great impact on the performance of the model. Therefore, this paper proposed the EFIF module as the feature transmission and fusion module of FIERNet to reduce missed and false detection and enhance network performance. After experimental verification, the performance of this module met our expectations, and the addition of EFIF could improve the Recall by 4.77% and the LAMR by 4.00%.

Finally, compared with the benchmark model, FIERN was proposed; its mAP, Recall, Precision, and F1 were improved by 2.96%, 3.49%, 3.12%, and 4.00%, respectively, and the LAMR was reduced by 8.00%.

In

Figure 13, we selected ship target images in different situations for a heatmap visualization. The brighter the color of an area in the heatmap, the more interested the model is in that area, and the more likely the target is. For ship targets that are difficult to detect and easy to miss, FIERNet can accurately obtain the rich feature details of small ship targets and give correct judgments. However, it is difficult for YOLOv4 to capture the feature information of small targets, so the detection effect is not ideal, as shown in the blue and green boxes in

Figure 13. In the detection of near-shore ship targets, it is prone to misdetection, that is, a nonship target is mistaken for a ship target. FIERNet can effectively weaken background information, focus on effective information, give clear ship characteristics, and finally obtain ideal detection performance. YOLOv4 is easily disturbed by background information, so it gives wrong judgments, as shown in the red box in

Figure 13.

4.4. Experimental Analysis of BBRD Decoding Formula

In order to facilitate the experimental analysis, this paper calls the original BBRD method BBRD-1, the method introduced from [

36] is called BBRD-2, and the method proposed in this paper is called BBRD-N.

The bounding box regression decoding is related to the presentation of the network output information and is one of the key steps of the target detection algorithm. Therefore, we proposed a new decoding formula to enhance the decoding effect. As shown in

Table 5, the bounding box decoding formula proposed here achieves good results, confirming the effectiveness of reducing the order of magnitude of the penalty terms. At the same time, BBRD-2 also achieved good results, indicating that the improvement of BBRD is necessary.

Figure 14 shows the experimental results for different a and N. It can be seen from

Figure 14 that the improvement of the penalty item is necessary, and the overall performance indicators after the improvement are improved to varying degrees. This paper selected 11 different values for verification. It can be seen from

Figure 14a that when a is 1.03, 1.04, and 1.05, the overall performance index is the best. Therefore, based on these three values, we selected 13 different N values for the experiments, as shown in

Figure 14b–d. We evaluated these three curves as a whole, and we found that when a = 1.04 and N = 12, the mAP (94.14), Recall (87.89) and Precision (98.16) achieved their maximum value. Therefore, this paper selected a = 1.04 and N = 12 to construct the BBRD-N formula for bounding box regression decoding. In addition, it can be seen from

Figure 14b–d that when N = 2, no matter what the value of a is, the maximum value of the current performance index cannot be reached. This verifies the idea proposed in this paper when optimizing the BBRD-2 formula: the penalty term is too large, which leads to reduced model performance.

4.5. Analysis of Experimental Results Based on SSDD Dataset

The comparison of evaluation indicators between FIERNet and multiple models is shown in

Table 6. It is obvious that the method proposed in this paper is excellent, and the model achieves amazing detection results. Our method obtains the best mAP (94.14%), Recall (87.89%), and F1 score (93%). Specifically, the mAP of FIERNet is 22.24% higher than Faster RCNN, 2.97% higher than YOLOv4, and 5.22% higher than YOLOX [

40]. The Recall of FIERNet is 14.68% higher than SSD512 and 11.59% higher than SAR-ShipNet [

41]. In addition, compared to other methods, the F1 of FIERNet is at least 4% higher. To sum up, the performance of FIERNet is amazing, and it also demonstrates the effectiveness and applicability of the method proposed in this paper.

Figure 15 shows the PR and F1 curves for different models. The PR curve represents the relationship between precision and recall, and the area enclosed by it and the coordinate axis is the mAP value of each model. The F1 curve is an average of precision and recall, which represents the overall performance of the model. It can be intuitively seen from the figure that the F1 and mAP values of FIERNet are higher, which strongly demonstrates the effectiveness and superiority of the model.

Figure 16 shows the visual detection results of FIERNet, CenterNet [

42], and YOLOX. It can be seen that FIERNet has excellent application effects for ship detection in complex environments. For example, CenterNet and YOLOX experience false detections, mistaking ship-like targets for ships, as shown in the yellow boxes. Ships in SAR images belong to the category of small targets, occupying fewer pixels, and are easily affected by background factors, making it difficult to detect targets. During the detection process of CenterNet and YOLOX, missed detection occurred, as shown in the green box in

Figure 16. However, FIERNet can obtain the contextual information of the target from the complex background, and then detect the ship target. FIERNet also has performance that is not inferior to other algorithms for dense target detection. The above conclusions prove that FIERNet can be applied to SAR ship detection in various scenarios.

To further dissect the FIERNet detection process, we show the feature information changes of the FIERNet network during the ship detection process in

Figure 17. From

Figure 17b we can clearly see that all three detection heads of FIERNet capture the main feature information that is beneficial for ship detection. Even the eigenhead (16 × 16), which is mainly used for large target detection, obtains obvious target information, marking the approximate location of the ship target. Combining the other two detection heads with more explicit ship feature information, we can finally get

Figure 17c. Comparing

Figure 17a, we find that FIERNet detected all the targets, and we compared the position and size of the detection box and the real box to find that they were basically the same. This verifies the validity and soundness of the method proposed in this paper.

4.6. Analysis of Experimental Results Based on SAR-Ship Dataset

The SAR-ship dataset is large in scale, the ship target environment is complex, there are many negative samples, the target is dense, and the detection is extremely difficult. However, such a dataset can better reflect the superiority of the network and the credibility of the experimental results. Therefore, this paper applied FIERNet to this dataset for testing.

The input size of the image affects the detection accuracy of the network model. Many researchers [

16,

17,

44] have demonstrated that the larger the size of the input image, the better the detection effect of the network model on the target. Therefore, it was reasonable for us to use FIERNet with an input size of 256 in

Table 7 to compare with other advanced object detection algorithms that used larger input sizes. Such comparative experiments are challenging and better demonstrate the effectiveness of FIERNet. As shown in

Table 7, the overall performance of FIERNet on large-scale datasets is better than that of other advanced target detection algorithms, and all performance indicators have reached an ideal state. Overall, FIERNet’s mAP is 1.81~16.82% higher, its Recall is 8.05~22.61% higher, and its F1 is 6~14% higher. This shows that FIERNet has good generalization performance and applicability. It is not limited to a single dataset, but also has the potential for generalization to more complex datasets.

To further highlight the strong detection performance of FIERNet, we deliberately selected multiple sets of hard-to-detect images to visualize the detection effect. The ship target pointed by the yellow arrow in

Figure 18 is integrated with the surrounding background, and the feature information is difficult to extract and easy to miss. However, FIERNet can extract unique ship features with a powerful network model, and then accurately detect ship targets. Due to the small size of SAR targets and too many similar targets, it is easy to lose ship feature information during the network detection process, resulting in false detection. For such problems, FIERNet still has good performance, as shown in the blue box in

Figure 18. FIERNet has excellent detection performance for hard-to-detect and easy-to-misdetect objects, so it should also have good detection results for ship objects in other situations. To test this idea, we continuously detected nearshore building disturbances and dense target images, as shown in the second row of

Figure 18. We can see that FIERNet still performs well with a strong object detection ability.

4.7. Verification Based on Complex and Large-Scene SAR Images

In this section, we used large-scale complex SAR ship images from two different scenes from the LS-SSDD-v1.0 dataset [

45] to verify the practicability and generalization of FIERNet. The picture scenes were: Campeche and Singapore Strait, with resolutions of 25,629 × 16,742 and 25,650 × 16,768, respectively. In this verification link, in order to further evaluate the generalization and soundness of FIERNet, instead of using the LS-SSDD-v1.0 dataset as the training set for training, we used the FIERNet trained on the SSDD dataset with a resolution of 512 × 512 as the test model. Due to limited computer performance, it could not support the testing of large-scale SAR images. Thus, each large-scale SAR image was divided into 600 subimages of 800 × 800 size. We separately fed the subimages of each SAR image into the FIERNet network to detect the performance.

Compared with other advanced target detection methods, the performance indicators of FIERNet on large-scale SAR images were still excellent. For example, the mAP of FIERNet was 4.69% and 7.5% higher than that of YOLOv4, the Recall was 6.65% and 8.79% higher than that of YOLOVX, and the F1 indicator was also much higher than that of the other methods, as shown in

Table 8. This also proves that FIERNet has strong generalization performance and soundness, and has the potential for large-scale dataset promotion.

As can be seen from

Figure 19, these two images have very complex backgrounds, large scales, and a high detection difficulty. However, under such conditions, FIERNet can still detect many small ship targets and give correct detection results, with few missed detection and false detection. This is shown by the enlarged area of the blue box in

Figure 19.

5. Conclusions

Aiming at the problems of fuzzy feature information, complex background, and difficulty in distinguishing ship targets in SAR images, a deep learning-based detection method for ship SAR images in the marine environment was proposed. We constructed a feature information efficient representation network (FIERNet) to achieve an efficient representation of the target information to be detected.

Firstly, this paper proposed CTFENet as a feature extraction network to extract broader and richer feature details. The network was built on the CTFE module, a module that implemented the Swin Transformer module architecture using convolutions. The CTFE module mainly consisted of a 3 × 3 convolution, triplet attention, Mish activation function, MLP, and a residual structure.

Secondly, the EFIF module was used to enhance the effective fusion and transfer of feature information. First, IHCB was used to realize the mixing of spatial and channel dimensions, strengthen the exchange of information in different dimensions, and further enrich the feature information of the target. Then, we used the channel and spatial attention mechanism to filter invalid information hierarchically and strengthen the expression of semantic information and location information.

Thirdly, the new BBRD method was used to optimize the postprocessing process of the network, strengthen the decoding effect, and further clarify the position of the prediction frame, thereby enhancing the performance of the target detection.

The FIERNet method proposed by the above methods could obtain powerful feature information, thereby greatly improving the network performance. In this paper, we successively used SSDD, SAR-ship datasets, and large-scale SAR ship images to demonstrate the superiority and applicability of the FIERNet method. In the future, we will explore lightweight processing of the network, hoping to achieve excellent accuracy and detection speed at the same time. For example, applying a Ghost [

46] convolution instead of the 3 × 3 convolution, appropriately reducing the number of backbone network layers, pruning the channels’ branch operations, using the Focal-EIOU [

47] regression loss function, etc.