1. Introduction

The slant total electron content (STEC) is the total number of electrons in a column of unit cross-section (1 m²) along the path between a radio transmitter and a receiver (unit: TECU, where 1 TECU = 10¹⁶ electrons/m²). Ionospheric TEC is significant for, among others, Global Navigation Satellite System (GNSS) and GPS signal propagation and applications; 1 TECU corresponds to a 0.163 m range delay at the L1 frequency [1]. As a result, TEC prediction is of great significance for satellite–ground radio wave propagation in applications such as satellite navigation [2], precise point positioning (PPP) [3,4,5] and time-frequency transmission [6,7]. Therefore, developing accurate global models to predict the spatiotemporal variations in TEC is crucial [8].
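The range delay quoted above follows from the first-order ionospheric term, delay = 40.3 · TEC / f², with TEC in electrons/m² and f in Hz. The minimal sketch below evaluates it for 1 TECU at the GPS L1 frequency (1575.42 MHz); the function name is illustrative only.

```python
# Minimal sketch: first-order ionospheric group delay, delay = 40.3 * TEC / f**2,
# with TEC in electrons/m^2, f in Hz and the delay in metres.
TECU = 1e16          # 1 TECU = 10^16 electrons/m^2
F_L1_HZ = 1575.42e6  # GPS L1 carrier frequency


def iono_range_delay_m(tec_tecu: float, freq_hz: float = F_L1_HZ) -> float:
    """Return the first-order ionospheric range delay (m) for a TEC given in TECU."""
    return 40.3 * tec_tecu * TECU / freq_hz**2


if __name__ == "__main__":
    print(f"{iono_range_delay_m(1.0):.3f} m per TECU on L1")
```

It returns about 0.16 m per TECU, in line with the value quoted above, and the delay scales linearly with TEC, so a 20 TECU path delays L1 by roughly 3 m.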

Since 1998, the Ionosphere Associate Analysis Centers (IAACs) of the International GNSS Service (IGS) have generated reliable global ionospheric maps (GIMs) from globally distributed GNSS observations [9]. These vertical TEC maps (called TEC maps for simplicity) reproduce the spatial, temporal and seasonal variations of the global ionosphere [10]. In addition, TEC maps are important data sources for analyzing ionospheric anomalies [11] and ionospheric responses to storms [12], and they have been widely used in practical applications [13]. However, the final IGS GIM product is released with a latency of approximately 11 days, and the rapid IGS GIM product with a delay of one day [14,15]. Prediction is therefore needed to compensate for this lack of timeliness.

To meet the data requirements of ionospheric research and of applications such as satellite navigation, PPP and time-frequency transmission, many approaches have been developed to forecast global TEC. For example, the Center for Orbit Determination in Europe (CODE) proposed a global TEC prediction model based on extrapolation of the spherical harmonic (SH) expansion in a solar-geomagnetic reference frame [16]. The Polytechnic University of Catalonia (UPC) developed a global TEC prediction model that applies the discrete cosine transform (DCT) to the TEC maps and then uses linear regression to forecast the time evolution of each DCT coefficient [17]. The Space Weather Application Center Ionosphere (SWACI) forecasts European and global TEC maps 1 h in advance using the Neustrelitz TEC model, which approximates typical TEC variations as a function of location, time and solar activity level with only a few coefficients [18].
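The DCT-plus-regression idea can be sketched as follows. This is a simplified illustration, not the UPC implementation: the number of retained coefficients (`n_keep`), the straight-line fit over the whole history and the one-step horizon are assumptions, and the function names are hypothetical. An orthonormal DCT-II matrix is built explicitly so that the example needs only NumPy.

```python
import numpy as np


def dct_matrix(n: int) -> np.ndarray:
    """Orthonormal DCT-II matrix C: forward X = C @ x, inverse x = C.T @ X."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    c[0, :] = np.sqrt(1.0 / n)
    return c


def forecast_next_map(maps: np.ndarray, n_keep: int = 10) -> np.ndarray:
    """Forecast the next (H, W) map from a (T, H, W) history (T >= 2).

    Each map is transformed with a 2D DCT, only the n_keep x n_keep
    lowest-order coefficients are kept, a straight line is fitted to the
    time series of every kept coefficient, and each line is extrapolated
    one step ahead before the inverse 2D DCT.
    """
    t, h, w = maps.shape
    ch, cw = dct_matrix(h), dct_matrix(w)
    coeffs = (ch @ maps @ cw.T)[:, :n_keep, :n_keep]          # 2D DCT of every map
    flat = coeffs.reshape(t, -1)                              # one column per coefficient
    slope, intercept = np.polyfit(np.arange(t), flat, deg=1)  # least-squares line per column
    next_coeffs = np.zeros((h, w))
    next_coeffs[:n_keep, :n_keep] = (slope * t + intercept).reshape(n_keep, n_keep)
    return ch.T @ next_coeffs @ cw                            # inverse 2D DCT
```

Truncating to low-order coefficients keeps the number of regressions small and smooths the forecast map; the trade-off is that sharp structures are lost.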

In recent years, there have been two main approaches to predicting global TEC maps. The first predicts the SH coefficients and then expands the predicted coefficients to construct the global TEC maps. Specifically, Wang et al. [19] proposed an adaptive autoregressive model that predicts the SH coefficients for 1-day global TEC map forecasts. Liu et al. [20] forecast global TEC maps 1 and 2 h in advance by using a long short-term memory (LSTM) network to forecast the SH coefficients. Tang et al. [21] used the Prophet model to predict the SH coefficients and generate global ionospheric TEC maps 2 days in advance.
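In the first approach, map forecasting reduces to forecasting a set of scalar coefficient time series. The sketch below fits an ordinary least-squares autoregressive AR(p) model to each coefficient series and iterates it forward. It is a plain, non-adaptive AR model rather than the adaptive scheme of Wang et al. [19]; the order `p`, the horizon and the function names are illustrative assumptions, and the expansion of the forecast coefficients back into maps is omitted.

```python
import numpy as np


def fit_ar(series: np.ndarray, p: int) -> np.ndarray:
    """Least-squares AR(p) fit of x[t] = a1*x[t-1] + ... + ap*x[t-p] + c.

    Returns the array [a1, ..., ap, c]."""
    lags = np.array([series[t - p:t][::-1] for t in range(p, len(series))])
    design = np.column_stack([lags, np.ones(len(lags))])
    coef, *_ = np.linalg.lstsq(design, series[p:], rcond=None)
    return coef


def forecast_ar(series: np.ndarray, p: int, steps: int) -> np.ndarray:
    """Fit AR(p) to one series and iterate it `steps` epochs ahead."""
    coef = fit_ar(series, p)
    history = list(series)
    out = []
    for _ in range(steps):
        recent = np.array(history[-p:][::-1])  # x[t-1], ..., x[t-p]
        nxt = float(recent @ coef[:p] + coef[p])
        out.append(nxt)
        history.append(nxt)  # feed the forecast back in for the next step
    return np.array(out)


def forecast_coefficients(coeff_series: np.ndarray, p: int = 4, steps: int = 12) -> np.ndarray:
    """(T, K) history of K SH coefficients -> (steps, K) forecast, one AR model per coefficient."""
    return np.column_stack(
        [forecast_ar(coeff_series[:, k], p, steps) for k in range(coeff_series.shape[1])]
    )
```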

The second approach directly outputs the predicted global TEC maps from the TEC maps of a preceding period. For example, Lee et al. [22] forecast global TEC maps using conditional generative adversarial networks (GANs); daily IGS TEC maps and 1-day difference maps are the inputs to their model, and the output is the TEC maps of the following day. Lin et al. [23] developed a spatiotemporal network that forecasts the TEC maps of the next day from those of the previous three days. Chen et al. [24] established several LSTM-based algorithms for TEC map forecasting, in which the TEC values of the past 48 h are the input and the TEC values of the next 48 h are the output. Xia et al. [25] developed the ED-ConvLSTM model, which combines a ConvLSTM network with convolutional neural networks (CNNs). Taking the 24 global TEC maps of the past day as input, ED-ConvLSTM can predict global TEC maps 1 to 7 days in advance.

In fact, forecasting the global TEC maps could be described as an image time sequence prediction problem, where the elements of the series are 2D images (global TEC maps) rather than traditional numbers or words (can be embedded into 1D matrices). Due to its unique and effective attention mechanism, which can draw global dependencies between input and output [

26], the transformer model has shown great potential in natural language processing (NLP) fields since it was first proposed in 2017. However, its applications to computer vision (CV) remained limited until Dosovitskiy et al. [

27] proposed Vision Transformer (ViT) in 2020. They successfully proved that CNNs are not necessary for vision, and a pure transformer applied directly to sequences of image patches can perform very well in image classification tasks [

27]. Inspired by Dosovitskiy et al. [

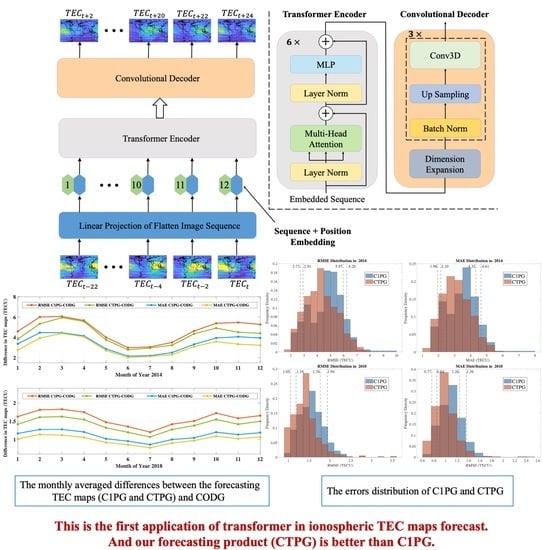

27], in this paper, we proposed a conv-attentional image time sequence transformer (CAiTST), a transformer-based image time sequence forecast model equipped with convolutional networks and attentional mechanism, and we successfully used it to forecast the global TEC maps. The input of CAiTST is one day’s 12 TEC maps (time resolution is 2 h), and the output is the next day’s 12 TEC maps. Then, we evaluate the forecasting products of CAiTST (named CTPG) in high and low solar activity and compare them with the predicted products by the 1-day CODE prediction model (named C1PG).
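The patch-based input of ViT can be illustrated as follows: an image is cut into non-overlapping patches, and each patch is flattened and linearly projected to a token. In this minimal NumPy sketch, the patch size of 8, the zero padding of a 71 × 73 map to 72 × 80, and the random projection weights are assumptions for illustration only; they are not necessarily how CAiTST embeds TEC maps.

```python
import numpy as np


def patchify(img: np.ndarray, patch: int) -> np.ndarray:
    """Split an (H, W) image into non-overlapping patch x patch blocks.

    The bottom/right edges are zero-padded so that H and W become multiples
    of `patch`. Returns (num_patches, patch * patch), patches in row-major order."""
    h, w = img.shape
    padded = np.pad(img, ((0, -h % patch), (0, -w % patch)))
    hp, wp = padded.shape[0] // patch, padded.shape[1] // patch
    blocks = padded.reshape(hp, patch, wp, patch).swapaxes(1, 2)  # (hp, wp, patch, patch)
    return blocks.reshape(hp * wp, patch * patch)


def patch_embed(img: np.ndarray, patch: int, weight: np.ndarray, bias: np.ndarray) -> np.ndarray:
    """Linearly project each flattened patch to a D-dimensional token."""
    return patchify(img, patch) @ weight + bias


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    tec_map = rng.random((71, 73))             # stand-in for one TEC map
    w = rng.normal(scale=0.02, size=(64, 32))  # hypothetical projection, D = 32
    tokens = patch_embed(tec_map, 8, w, np.zeros(32))
    print(tokens.shape)                        # (90, 32): 9 x 10 patches
```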

The paper is organized as follows. The data are described in Section 2. The method is explained in Section 3. The experimental results are presented in Section 4. Section 5 contains the discussion. Finally, conclusions are given in Section 6.

2. Data

The CODE final TEC maps (named CODG), obtained from NASA's Crustal Dynamics Data Information System (CDDIS) website (https://cddis.nasa.gov/archive/gnss/products/ionex/, accessed on 20 December 2021), are used as reference data to train and evaluate the model. The maps span longitudes from 180°W to 180°E with a resolution of 5° and latitudes from 87.5°S to 87.5°N with a resolution of 2.5°, so each global TEC map consists of 73 × 71 grid points. The TEC maps from 2005 to 2017 (excluding 2014) constitute the training and validation sets, of which 90% are used to train the model and 10% to validate it. The TEC maps of 2014 (a high solar activity year) and 2018 (a low solar activity year) are used for testing.
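The grid dimensions follow directly from the ranges and resolutions: 360°/5° + 1 = 73 longitudes and 175°/2.5° + 1 = 71 latitudes. The sketch below rebuilds the grid and the year-based data split with NumPy. The north-to-south latitude ordering follows the usual IONEX convention, and the random shuffling with a fixed seed for the 90/10 split is an assumption, since the text does not state how samples were assigned; the helper name is hypothetical.

```python
import numpy as np

# Global grid of the CODE TEC maps: 5 deg in longitude, 2.5 deg in latitude.
lons = np.linspace(-180.0, 180.0, 73)  # 180 W ... 180 E
lats = np.linspace(87.5, -87.5, 71)    # 87.5 N ... 87.5 S (north-to-south order assumed)

train_val_years = [y for y in range(2005, 2018) if y != 2014]
test_years = [2014, 2018]              # high and low solar activity


def split_train_val(n_samples: int, val_frac: float = 0.1, seed: int = 0):
    """Randomly split sample indices into train (90%) and validation (10%) sets.

    The random shuffle is an assumption; the text only states the 90/10 ratio."""
    idx = np.random.default_rng(seed).permutation(n_samples)
    n_val = int(round(n_samples * val_frac))
    return idx[n_val:], idx[:n_val]
```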

Furthermore, because TEC varies with a periodic diurnal cycle [28], the TEC maps are organized into two-day spatiotemporal sequences: the TEC maps of the first day are the historical observations used to predict the TEC maps of the second day. As the time resolution of the CODE TEC maps is 2 h, each sequence contains 24 TEC maps. Therefore, the input and the output of the model each have dimension (12, 71, 73, 1), where 12 is the number of TEC maps per day, 71 and 73 are the latitude and longitude dimensions of a TEC map, and 1 is the number of channels.
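The pairing of one day's maps with the next day's maps can be sketched as below. Whether consecutive samples overlap (day pairs d → d + 1 for every d) or are non-overlapping two-day blocks is not stated above, so both are offered through a `stride` parameter; the function name and the (n_days, 12, 71, 73) input layout are assumptions.

```python
import numpy as np

MAPS_PER_DAY = 12  # 2 h time resolution


def make_samples(daily_maps: np.ndarray, stride: int = 1):
    """Pair each day's maps (input) with the next day's maps (target).

    daily_maps: (n_days, 12, 71, 73) array of consecutive days.
    stride=1 uses overlapping day pairs (d, d+1) for every d; stride=2 uses
    non-overlapping two-day blocks. Which one the paper uses is an assumption.
    Returns X, Y, each of shape (n_samples, 12, 71, 73, 1)."""
    if daily_maps.shape[1] != MAPS_PER_DAY:
        raise ValueError("expected 12 two-hourly maps per day")
    maps = daily_maps[..., np.newaxis]  # add the single channel axis
    starts = np.arange(0, maps.shape[0] - 1, stride)
    return maps[starts], maps[starts + 1]
```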

5. Discussion

In this paper, forecasting TEC maps is treated as an image time sequence prediction problem. Each TEC map contains not only the TEC value at each grid point but also rich spatial information (longitude and latitude), and the chronological sequence of TEC maps carries temporal information. A forecasting model for TEC map sequences therefore needs to process both spatial and temporal features. Because the transformer is well suited to sequence prediction owing to its self-attention mechanism, we developed a transformer-based TEC map sequence prediction model named CAiTST. By dividing an image into patches and linearly projecting them, a transformer can also replace traditional convolution operations for image processing. Inspired by this, each 2D TEC map is linearly projected into a 1D embedding so that a transformer can serve as the encoder of the sequence of 12 TEC maps in a day, and a 3D CNN is added as the decoder. To accelerate the training of CAiTST, layer normalization (LN) and batch normalization (BN) are used in the encoder and decoder, respectively. Training, validation and testing of the model are all based on the CODE final TEC map products (CODG). The data from 2005 to 2017 (excluding 2014) constitute the training set (90%) and validation set (10%), and the test set consists of data from a high solar activity year (2014) and a low solar activity year (2018). In the test stage, the root mean square error (RMSE) and mean absolute error (MAE) are used as evaluation metrics. Comparison with the CODE one-day prediction product (C1PG) demonstrates the advantage of the CAiTST model.
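The encoder idea described above (one token per TEC map, attention across the 12 time steps) can be sketched in NumPy as follows. This is not the authors' implementation: the embedding size, the single attention head, the random weights, the parameter-free layer normalization and the residual connection are assumptions chosen to keep the example short, and the 3D CNN decoder is omitted.

```python
import numpy as np


def layer_norm(x: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    """Normalize each token to zero mean / unit variance (no learned scale or shift)."""
    mu = x.mean(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(x.var(axis=-1, keepdims=True) + eps)


def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    e = np.exp(z - z.max(axis=axis, keepdims=True))  # subtract max for stability
    return e / e.sum(axis=axis, keepdims=True)


def encode_day(maps, w_embed, pos, wq, wk, wv):
    """maps: (12, 71, 73, 1) -> tokens (12, D) and attention weights (12, 12).

    Each whole map is flattened (71 * 73 values) and linearly projected to one
    D-dimensional token, so one day becomes a sequence of 12 tokens; single-head
    scaled dot-product self-attention then mixes information across time steps."""
    tokens = maps.reshape(maps.shape[0], -1) @ w_embed + pos  # (12, D)
    h = layer_norm(tokens)
    q, k, v = h @ wq, h @ wk, h @ wv
    attn = softmax(q @ k.T / np.sqrt(q.shape[-1]))           # (12, 12), rows sum to 1
    return tokens + attn @ v, attn                            # residual connection


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    d = 16                                  # hypothetical embedding size
    day = rng.random((12, 71, 73, 1))       # stand-in for one day of TEC maps
    params = [rng.normal(scale=0.01, size=(71 * 73, d)), rng.normal(size=(12, d))]
    params += [rng.normal(scale=d ** -0.5, size=(d, d)) for _ in range(3)]
    out, attn = encode_day(day, *params)
    print(out.shape, attn.shape)            # (12, 16) (12, 12)
```

Row i of `attn` shows how strongly the token for time step i draws on each of the 12 maps of the input day.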

The results show that CTPG (the TEC map products predicted by CAiTST) performs better than C1PG in both 2014 and 2018. Compared with C1PG, the RMSE of CTPG with respect to CODG decreased by 8.9% and 10.2% in 2014 and 2018, respectively, and the MAE decreased by 9.3% and 10.8%. The results also show that prediction performance depends on the season: both predicted products perform better in summer than in spring and winter. In addition, the analysis of latitudinal behavior shows that the errors of both predicted products are larger at low latitudes than at middle and high latitudes, owing to low-latitude anomalies such as the equatorial ionization anomaly (EIA) and the high background electron density. Furthermore, the geographic distribution of the errors shows that the EIA region is the most difficult to predict. This may be explained by the limited accuracy with which IGS TEC maps represent well-known ionospheric structures such as the EIA [10]. Because GNSS stations are sparsely distributed over the oceans, C1PG shows obvious errors in marine areas, whereas CTPG is not significantly affected there. This suggests that our model is less affected by the lower accuracy of the IGS TEC maps in the southern hemisphere compared with the northern hemisphere [42].
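The error statistics and percentage decreases discussed above can be computed with helpers like the following. This sketch assumes the errors are pooled over all grid points and epochs with no latitude weighting; the paper's exact averaging (for example, daily statistics averaged over the year) is not specified here, and the function names are illustrative.

```python
import numpy as np


def rmse(pred: np.ndarray, ref: np.ndarray) -> float:
    """Root mean square error pooled over all grid points and epochs."""
    return float(np.sqrt(np.mean((pred - ref) ** 2)))


def mae(pred: np.ndarray, ref: np.ndarray) -> float:
    """Mean absolute error pooled over all grid points and epochs."""
    return float(np.mean(np.abs(pred - ref)))


def percent_decrease(baseline_err: float, model_err: float) -> float:
    """Relative decrease of model_err with respect to baseline_err, in percent."""
    return 100.0 * (baseline_err - model_err) / baseline_err
```

For instance, `percent_decrease(rmse(c1pg, codg), rmse(ctpg, codg))` would give the RMSE reduction of CTPG relative to C1PG for whatever maps are passed in.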

It is worth noting that most TEC prediction studies use past TEC values, which are highly correlated with future TEC values [43], as the input of the forecasting model.

Table 6 shows the annual mean RMSE and MAE values between the 12 TEC maps of the past day and the 12 TEC maps of the following day during high and low solar activity periods. We compare against a copy of the previous day's 12 TEC maps, rather than an average of the TEC maps over the past two or three days, because the previous day's maps are exactly the input of our model. According to Table 2 and Table 6, in 2014 the RMSE values between the predicted TEC maps (C1PG and CTPG) and CODG are 1.57 and 1.41 TECU, respectively, whereas the RMSE between the CODG maps of the previous day and those of the following day is 1.61 TECU. In addition, over the whole test dataset, the RMSE between the predicted and future TEC maps is 11.8% lower than that between past and future TEC maps, and the MAE is 8.9% lower. This indicates that CAiTST has indeed learned the relationship between past and future TEC maps rather than simply reproducing its input. Furthermore, to demonstrate the effectiveness of a prediction model, we suggest that future TEC prediction studies whose models take past TEC values as input also include the copy or average of the past TEC values as a baseline in the evaluation.
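The suggested persistence check can be sketched as follows: the model forecast and a copy of the previous day's maps are both scored against the next day's observed maps. The function name and the pooled RMSE are illustrative assumptions, not the evaluation code used for Table 6.

```python
import numpy as np


def rmse(a: np.ndarray, b: np.ndarray) -> float:
    return float(np.sqrt(np.mean((a - b) ** 2)))


def persistence_check(forecast: np.ndarray, past_day: np.ndarray, next_day: np.ndarray):
    """Compare a forecast with the 'copy the previous day' baseline.

    All inputs are (12, 71, 73) arrays: the model forecast for the next day,
    the observed maps of the previous day (the model input), and the observed
    maps of the next day. Returns (model RMSE, persistence RMSE, % improvement)."""
    err_model = rmse(forecast, next_day)
    err_persist = rmse(past_day, next_day)  # baseline: tomorrow = today
    return err_model, err_persist, 100.0 * (err_persist - err_model) / err_persist
```

A positive improvement means the model adds skill beyond repeating its own input; a value near zero or negative would indicate that it has learned little more than persistence.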

We also evaluated the performance of CAiTST on different TEC map sources. Although CAiTST is trained on CODG, in 2014 it performs best on IGSG and worst on ESAG. In 2018, it performs best on CODG and worst on JPLG. These results suggest that our model can be applied to forecasting TEC maps from different sources.

The performance of CAiTST during a geomagnetic storm was also investigated. The results show that when a storm occurs, CAiTST fails to capture the complex and rapid changes of TEC, especially in the EIA region. This is mainly because the model takes only past TEC maps as input, so no information about storm conditions is available to it. Because the network input consists of TEC maps, it is difficult to feed additional scalar parameters at the same time, which has prevented us from including indices such as Dst or Kp to represent storm information.

Therefore, a focus of future work is how to feed both TEC maps and individual parameters, including storm information, into the transformer model simultaneously; only then can the model be expected to work properly during storm periods. Moreover, the performance degradation of the model in the EIA region requires further research, and developing a regional prediction model may alleviate it. Other deep learning models, such as the gated recurrent unit (GRU) [36] and deep generative models [44], may address these challenges and will be explored in our follow-up work.
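As an illustration of one simple option that is not evaluated in this paper, scalar drivers can be broadcast over the grid as additional constant-valued input channels, turning a (12, 71, 73, 1) input into (12, 71, 73, 1 + K). The sketch assumes the indices have already been resampled to the 2 h map epochs and normalized; the function name is hypothetical.

```python
import numpy as np


def add_index_channels(maps: np.ndarray, indices: np.ndarray) -> np.ndarray:
    """Append scalar drivers as constant-valued channels.

    maps: (T, H, W, C) TEC map sequence, e.g. (12, 71, 73, 1).
    indices: (T, K) scalar values per map epoch (e.g. Kp and Dst), assumed to be
    already resampled to the 2 h map epochs and normalized.
    Returns (T, H, W, C + K), with each index repeated over the whole grid."""
    t, h, w, _ = maps.shape
    if indices.shape[0] != t:
        raise ValueError("need one row of indices per map epoch")
    planes = np.broadcast_to(indices[:, None, None, :], (t, h, w, indices.shape[1]))
    return np.concatenate([maps, planes], axis=-1)
```

This keeps the image-shaped input that the encoder expects, at the cost of spending most of the extra channel capacity on repeated values.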