Rapid Photogrammetry with a 360-Degree Camera for Tunnel Mapping

Abstract

1. Introduction

2. Materials and Methods

2.1. Test Site

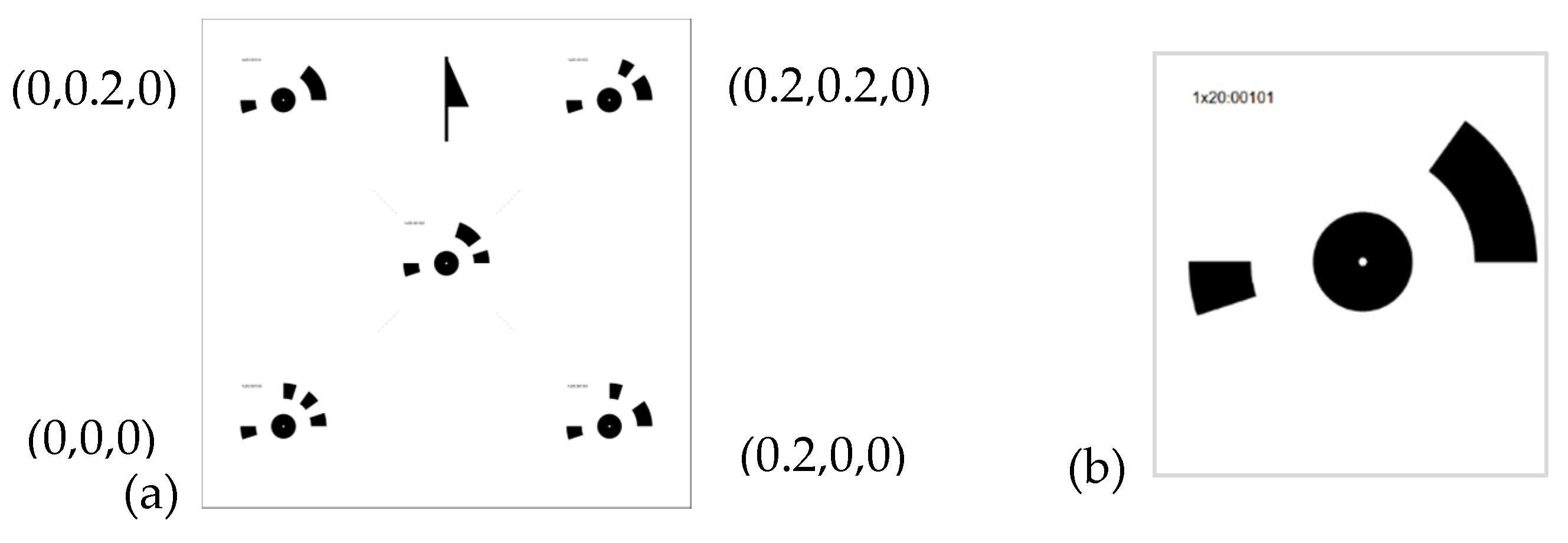

2.2. Control Points and Control Distances

2.3. Image Acquisition with a 360-Degree Camera

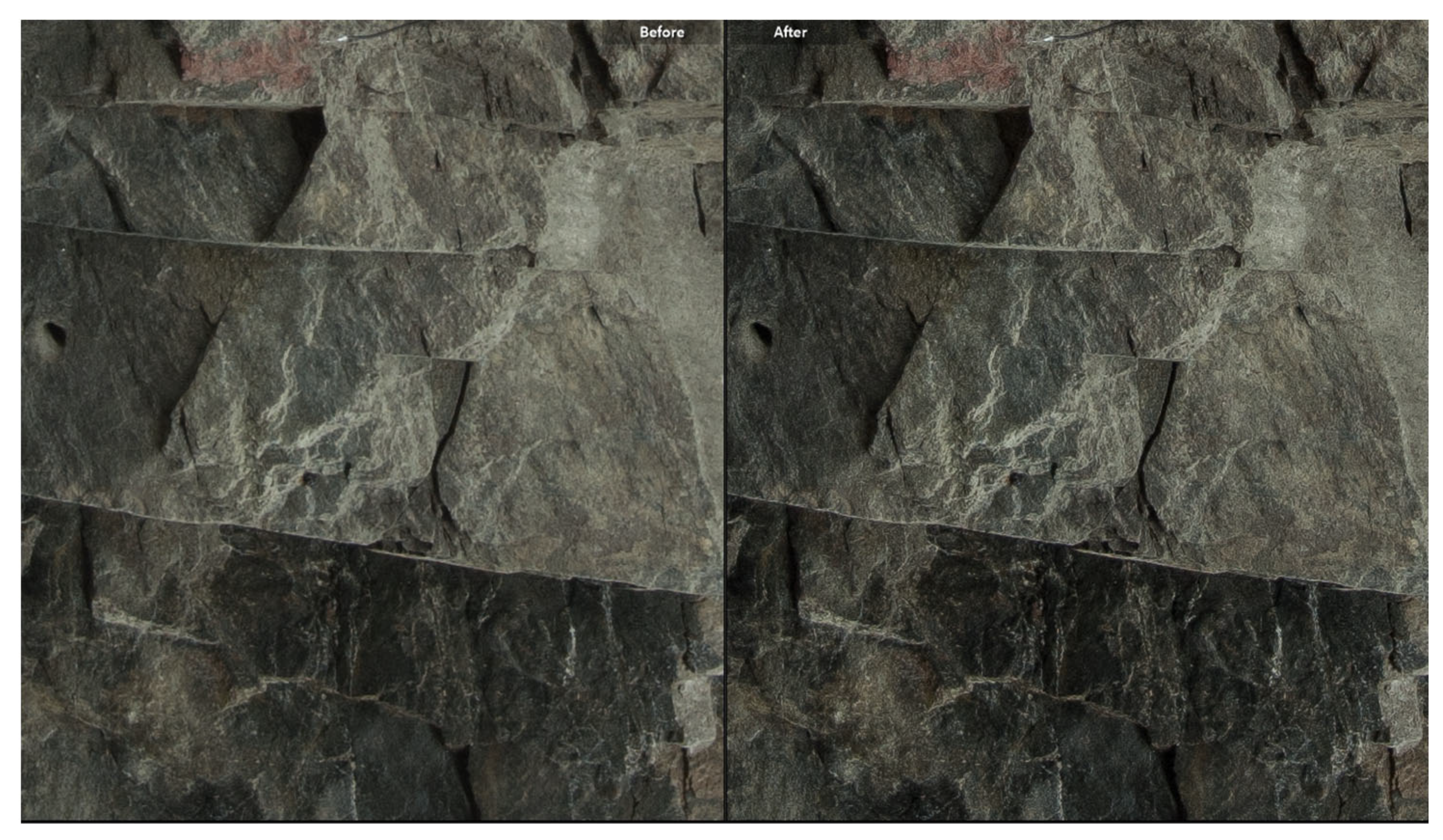

2.4. Photogrammetric Reconstruction

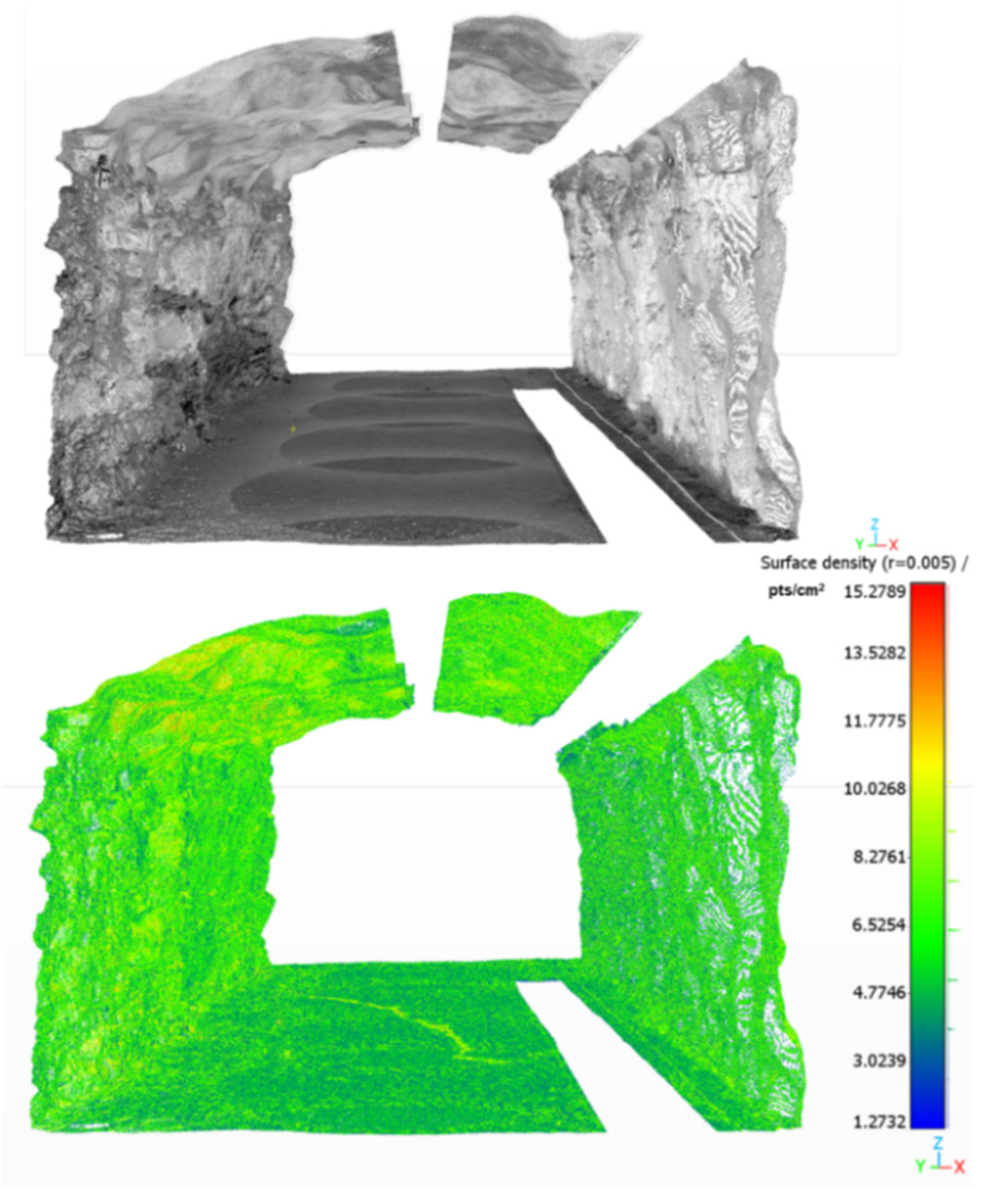

2.5. Reference Models

2.6. Point Cloud Data Analysis

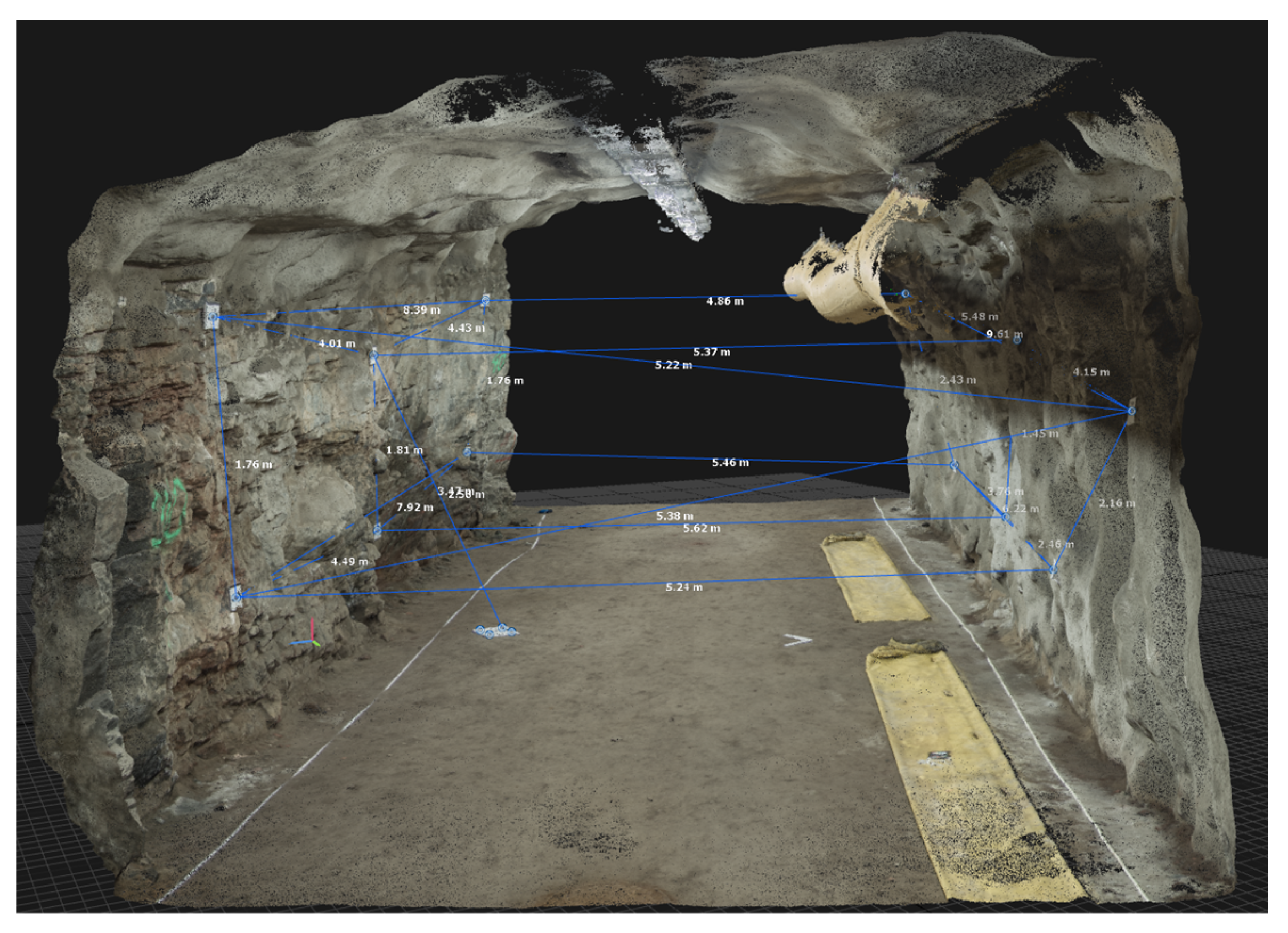

2.7. Remote Rock Mass Measurements

3. Results and Discussion

3.1. Image Acquisition Time and Photogrammetric Reconstruction

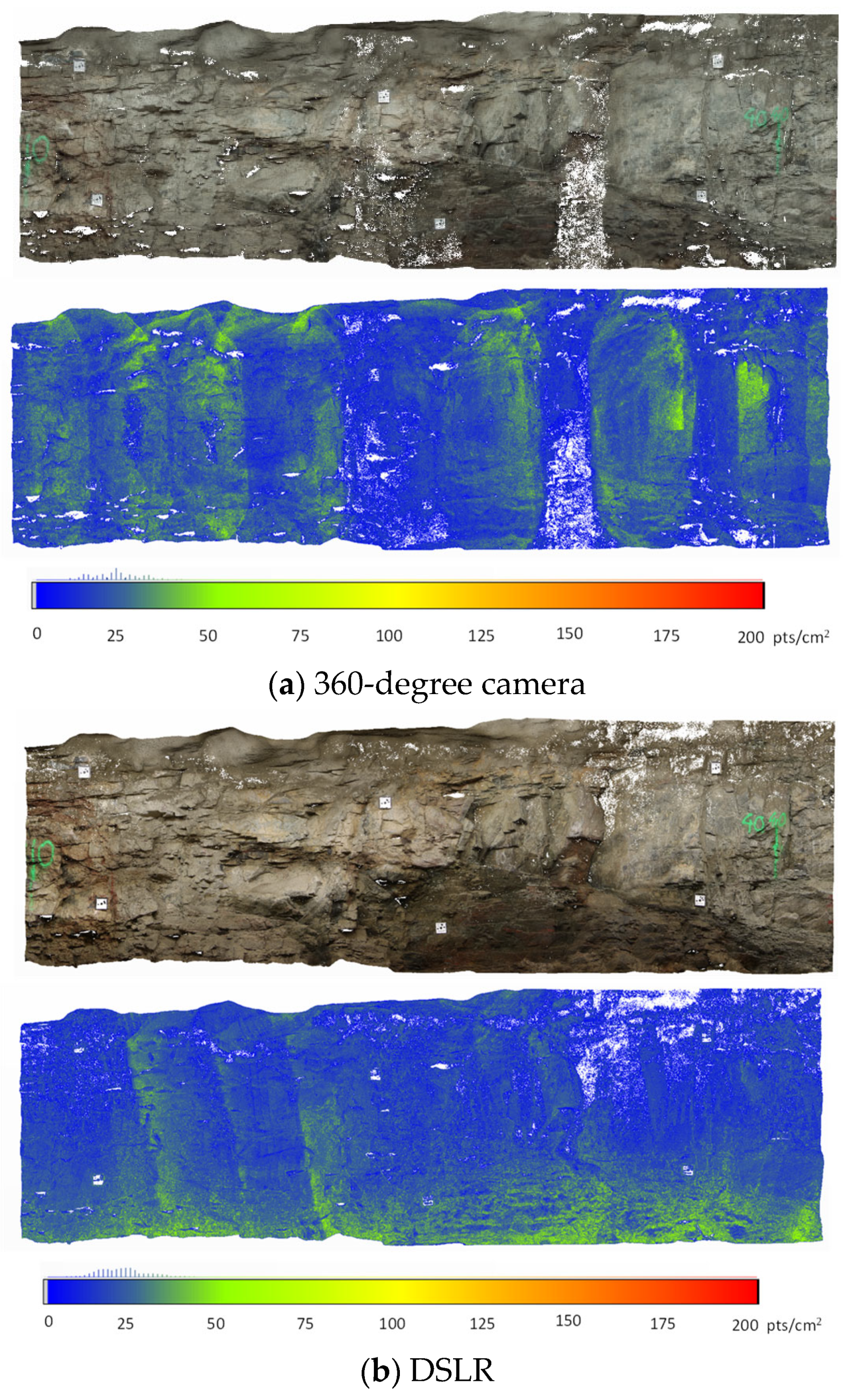

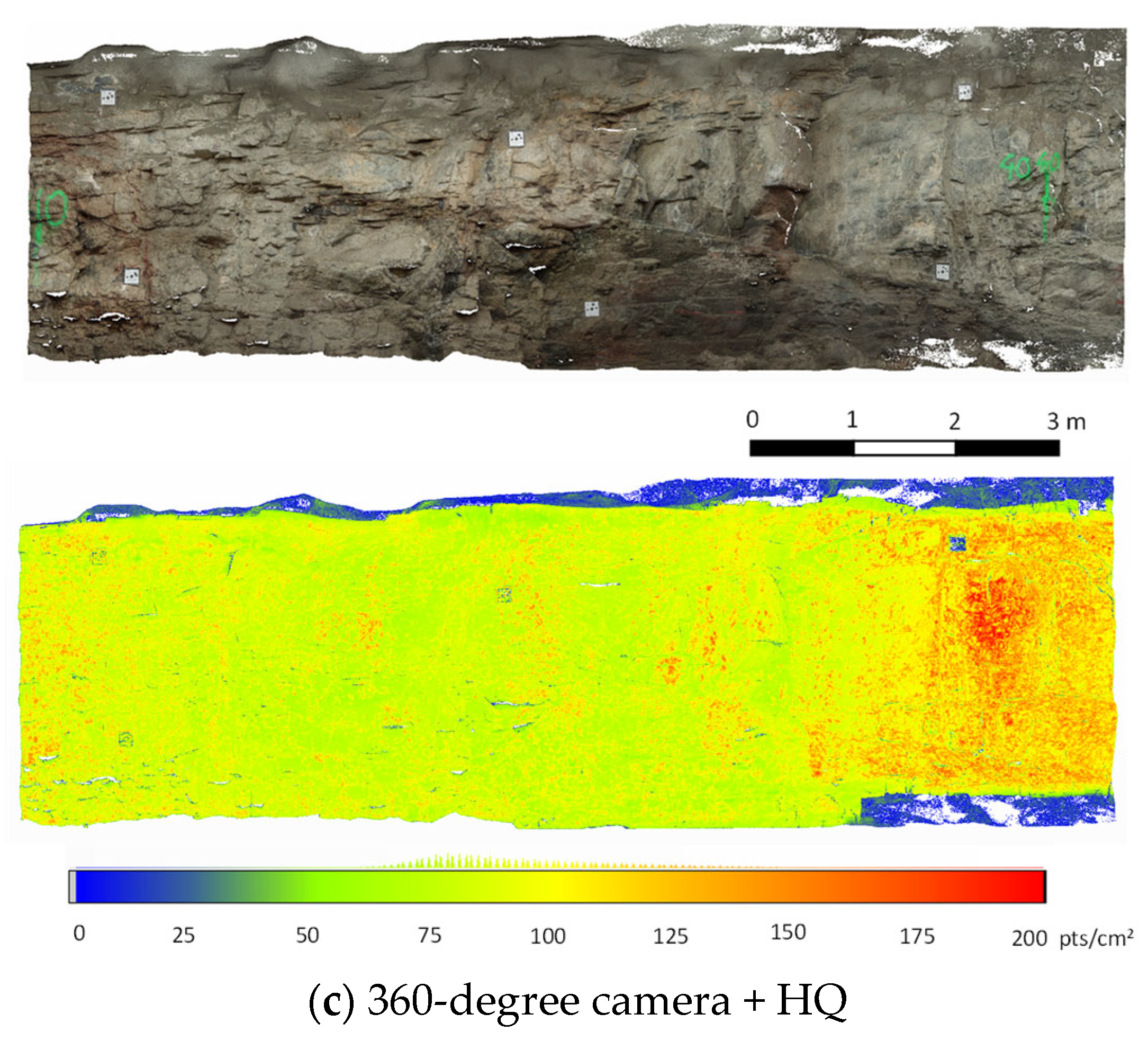

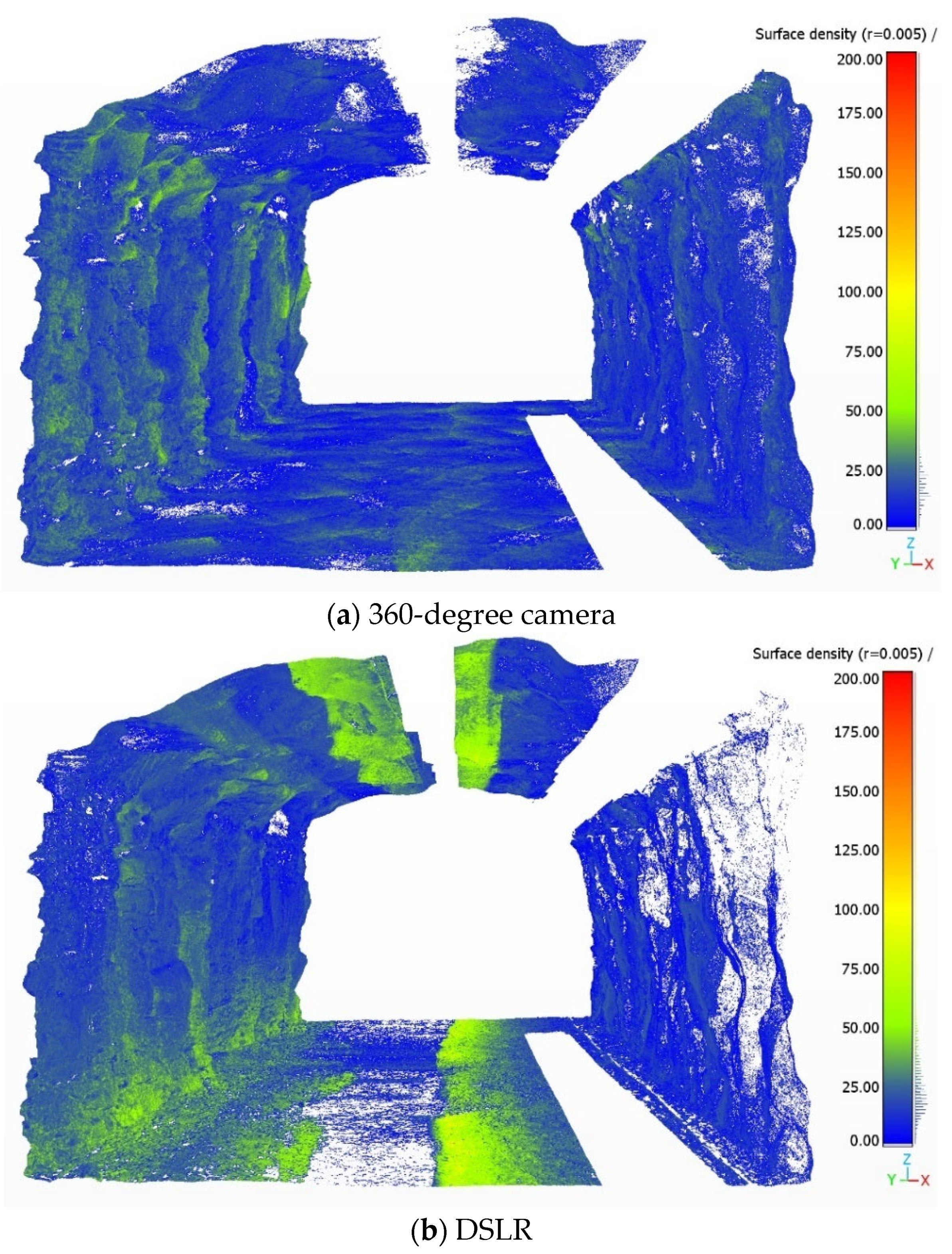

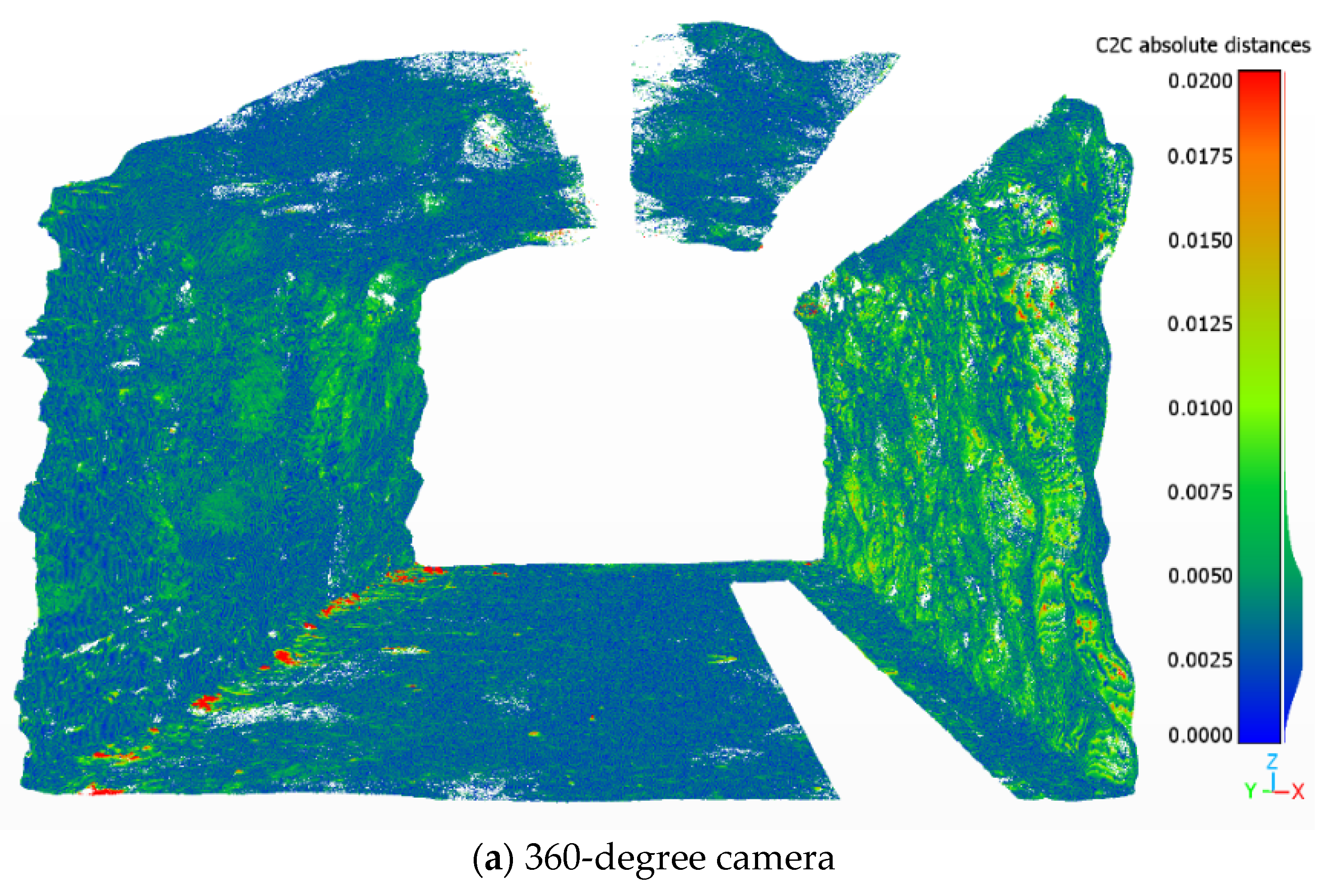

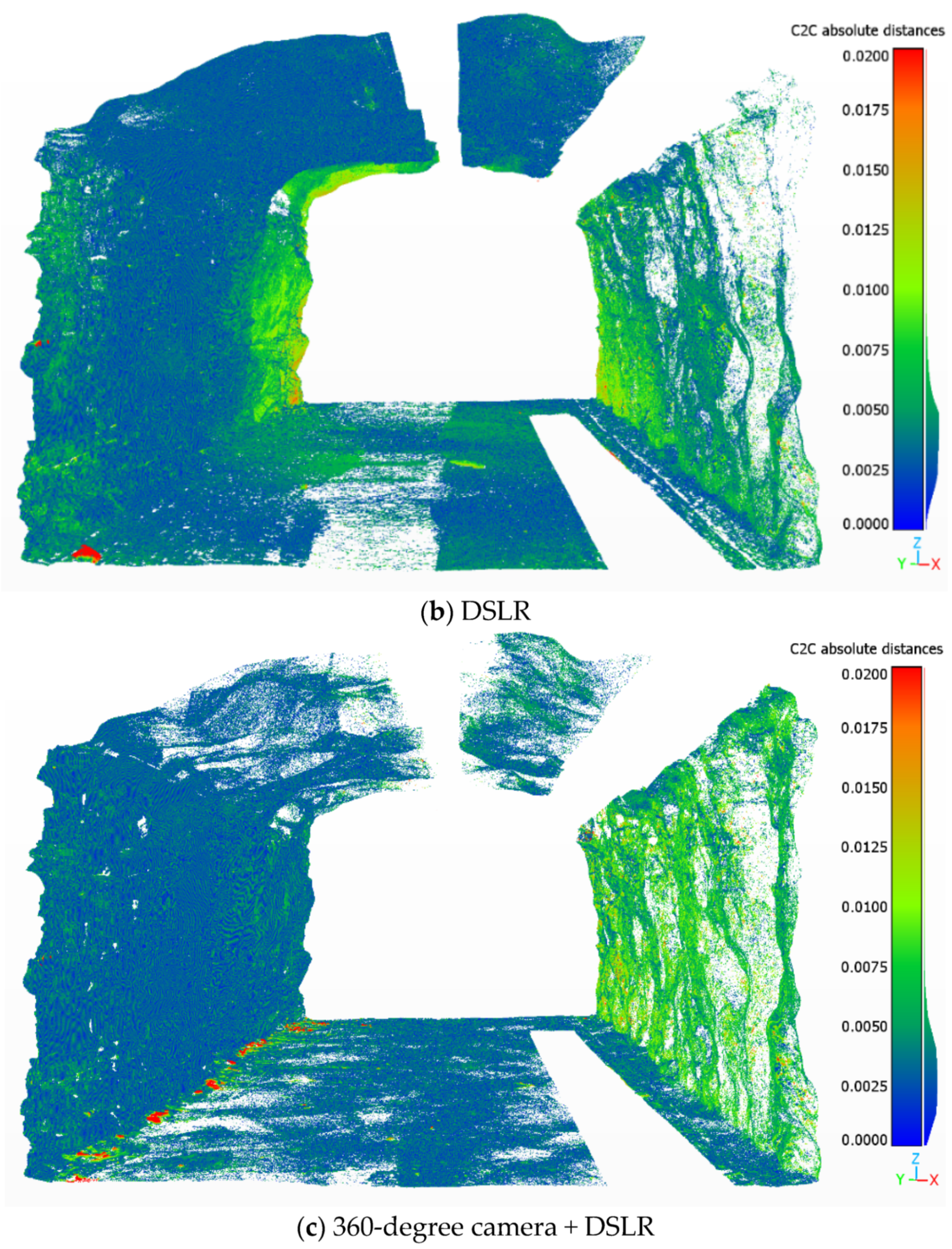

3.2. Control Distance Error and Comparison with a Reference Model

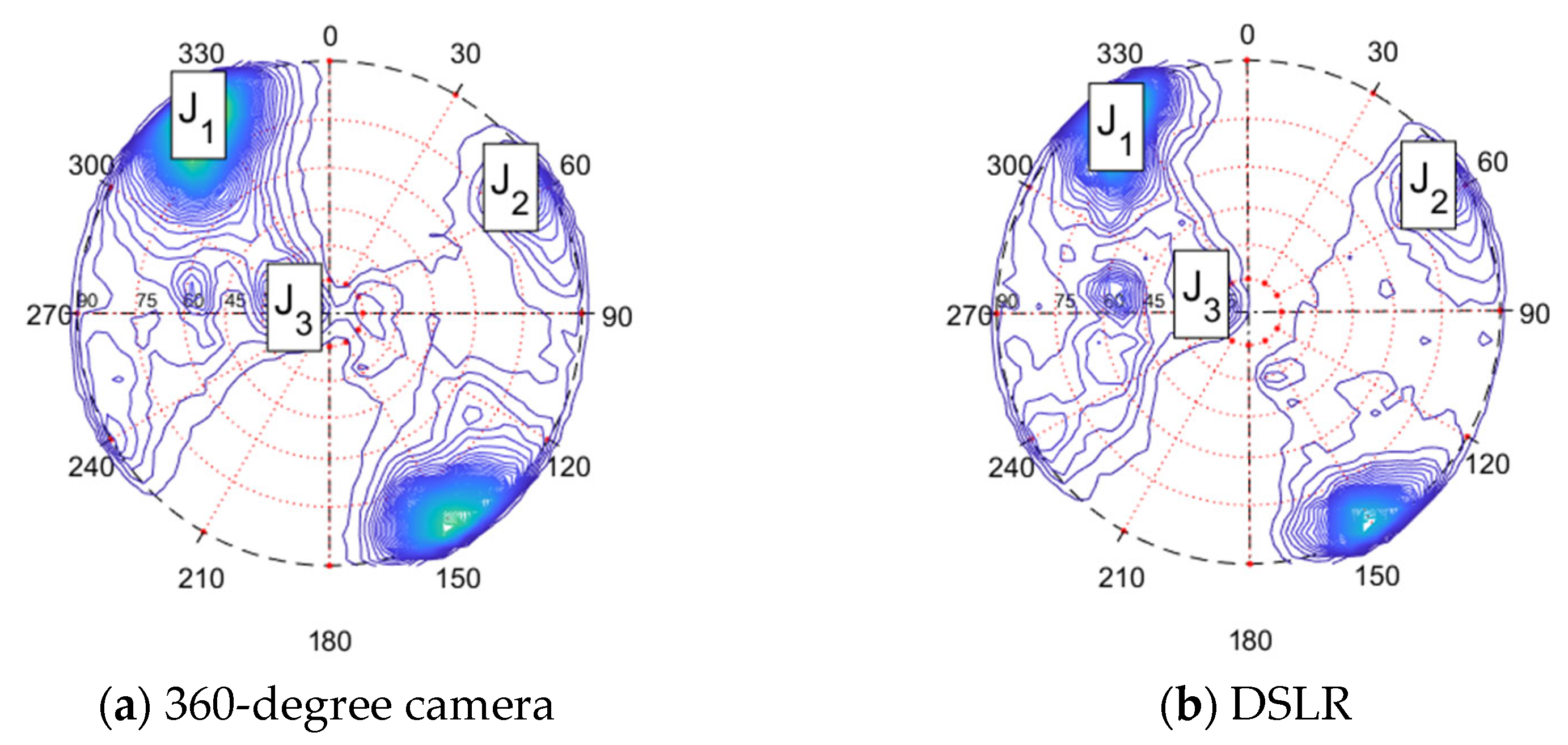

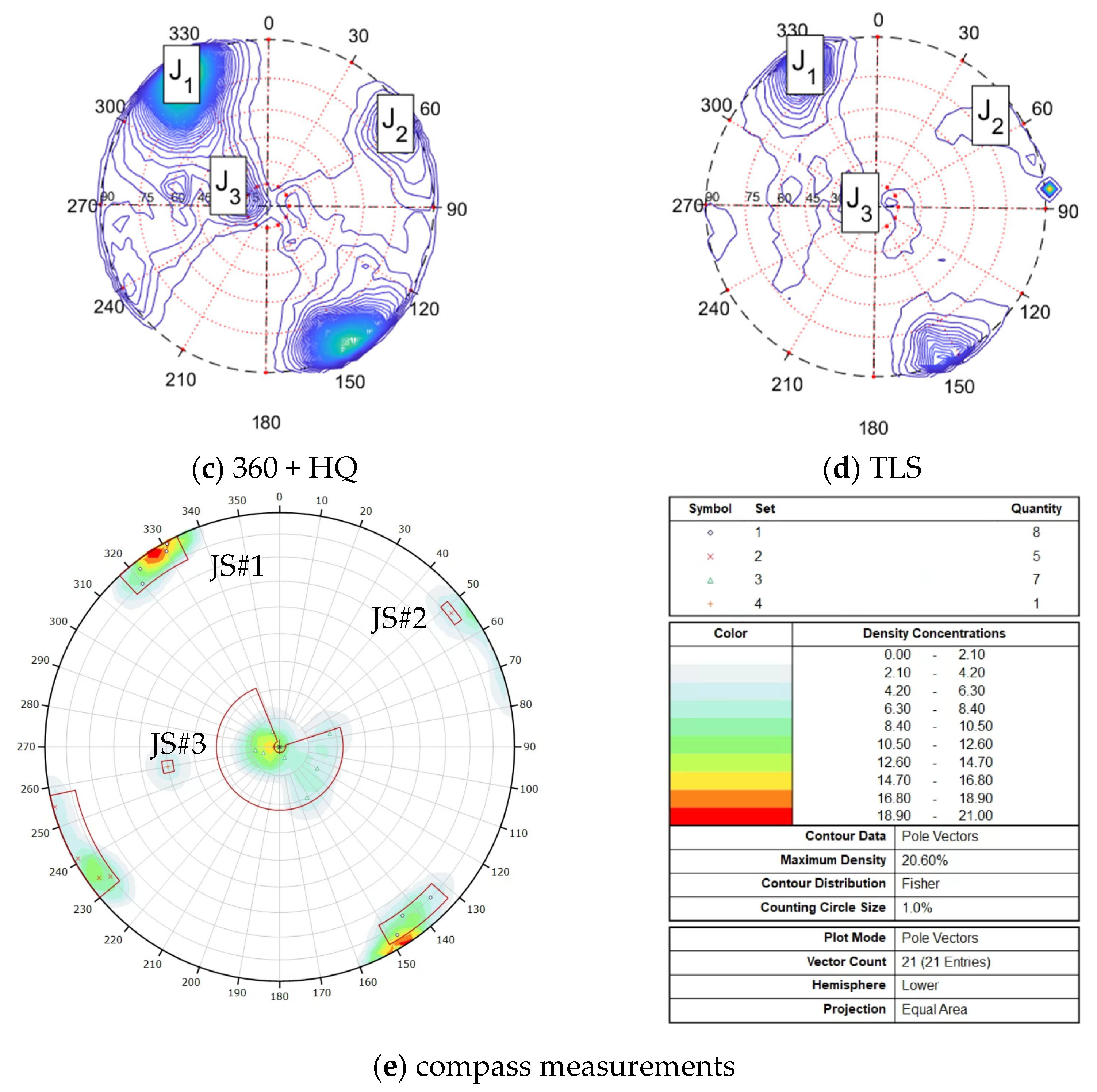

3.3. Rock Mass Characterization

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Setting Group | Parameter | Value |
|---|---|---|
| Basic delighting | Highlights | −100 |
| Basic delighting | Shadows | 100 |
| Sharpening | Amount | 60 |
| Sharpening | Radius | 0.5 |
| Sharpening | Detail | 100 |

| Alignment Parameter | Value |
|---|---|
| Image overlap | Low |
| Max features per Mpx | 10,000 |
| Max features per image | 40,000 |
| Detector sensitivity | Medium |
| Preselector features | 10,000 |
| Image downscale factor | 1 |
| Maximal feature reprojection error [pixels] | 1.00 |
| Use camera positions | True |
| Lens distortion model | K + Brown4 with tangential2 |

| Photogrammetric Method | 360 | DSLR | 360 + HQ |
|---|---|---|---|
| Number of images | 162 | 111 | 162 + 13 |
| Capture time | 10 min | 34 min | 10 + 4 min |
| Alignment time | 2 min 19 s | 1 min 17 s | 2 min 59 s |
| Mesh reconstruction and texturing time | 22 min 23 s | 49 min 04 s | 32 min 29 s |
| Overall processing time | 24 min 42 s | 50 min 21 s | 35 min 28 s |
| Surface point density | 20.7 pts/cm² | 33.2 pts/cm² | 87.4 pts/cm² |
| Surface point density (mapping wall) | 24.5 pts/cm² | 24.2 pts/cm² | 96.9 pts/cm² |
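
The timing rows above can be cross-checked with a few lines of Python. This is a minimal sketch, assuming that the overall processing time is the sum of the alignment time and the mesh reconstruction and texturing time (which is consistent with the tabulated values). The capture speed-up ratio is a derived quantity for illustration and is not reported in the table; the helper names `to_seconds` and `fmt` are hypothetical.

```python
# Durations transcribed from the comparison table, converted to seconds.
def to_seconds(minutes, seconds=0):
    return 60 * minutes + seconds

methods = {
    "360": {
        "capture": to_seconds(10),
        "alignment": to_seconds(2, 19),
        "meshing": to_seconds(22, 23),
    },
    "DSLR": {
        "capture": to_seconds(34),
        "alignment": to_seconds(1, 17),
        "meshing": to_seconds(49, 4),
    },
    "360 + HQ": {
        "capture": to_seconds(10 + 4),
        "alignment": to_seconds(2, 59),
        "meshing": to_seconds(32, 29),
    },
}

def fmt(total_seconds):
    """Format seconds in the 'M min SS s' style used in the table."""
    minutes, seconds = divmod(total_seconds, 60)
    return f"{minutes} min {seconds:02d} s"

# Assumption: overall processing = alignment + mesh reconstruction/texturing.
processing = {
    name: t["alignment"] + t["meshing"] for name, t in methods.items()
}

# Derived for illustration only: how much faster the 360 capture is than DSLR.
capture_speedup = methods["DSLR"]["capture"] / methods["360"]["capture"]

for name, total in processing.items():
    print(f"{name}: overall processing {fmt(total)}")
print(f"DSLR / 360 capture time ratio: {capture_speedup:.1f}")
```

The recomputed sums can be compared directly with the "Overall processing time" row.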

| Measured Distance (m) | 360 | DSLR | 360 + HQ | TLS |
|---|---|---|---|---|
| 1.7594 | 1.7543 | 1.7561 | 1.7564 | 1.7574 |
| 5.3671 | 5.3690 | 5.3683 | 5.3703 | 5.3625 |
| 1.8094 | 1.8092 | 1.8072 | 1.8081 | 1.8025 |
| 1.7548 | 1.7623 | 1.7584 | 1.7596 | 1.7572 |
| 5.4670 | 5.4804 | 5.4643 | 5.4676 | 5.4545 |
| 1.4461 | 1.4500 | 1.4510 | 1.4515 | 1.4511 |
| 2.1470 | 2.1548 | 2.1579 | 2.1584 | 2.1542 |
| 5.3876 | 5.3816 | 5.3846 | 5.3861 | 5.3717 |
| 3.9992 | 4.0065 | 4.0074 | 4.0085 | 4.0157 |
| 4.4164 | 4.4340 | 4.4306 | 4.4322 | 4.4143 |
| 3.4650 | 3.4784 | 3.4716 | 3.4728 | 3.4637 |
| 4.4803 | 4.4914 | 4.4893 | 4.4908 | 4.4829 |
| 5.2344 | 5.2333 | 5.2361 | 5.2375 | 5.2215 |
| 5.2127 | 5.2169 | 5.2224 | 5.2237 | 5.2091 |
| 4.1423 | 4.1417 | 4.1479 | 4.1491 | 4.1431 |
| 2.4581 | 2.4639 | 2.4644 | 2.4652 | 2.4649 |
| 3.7464 | 3.7560 | 3.7576 | 3.7591 | 3.7548 |
| 5.6238 | 5.6257 | 5.6195 | 5.6205 | 5.6041 |
| 4.8475 | 4.8626 | 4.8561 | 4.8592 | 4.8421 |
| 2.4336 | 2.4353 | 2.4309 | 2.4322 | 2.4281 |
| 5.4793 | 5.4755 | 5.4757 | 5.4781 | 5.4773 |
| 9.6027 | 9.6078 | 9.6140 | 9.6176 | 9.6092 |
| 6.2157 | 6.2196 | 6.2217 | 6.2240 | 6.2155 |
| 7.9028 | 7.9295 | 7.9215 | 7.9243 | 7.9174 |
| 8.3790 | 8.3942 | 8.3925 | 8.3951 | 8.3924 |
| RMSE (m) | 0.0099 | 0.0082 | 0.0095 | 0.0090 |
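
The RMSE row can be approximately reproduced from the tabulated distances. The sketch below assumes that the RMSE is taken over the differences between each model-derived distance and the measured distance in the first column; the table itself does not state this. Only the 360 column is shown, and because the inputs are rounded to four decimals, the recomputed value may differ from the tabulated 0.0099 m in the last digit.

```python
import math

# First column of the table: measured control distances (m).
measured = [
    1.7594, 5.3671, 1.8094, 1.7548, 5.4670, 1.4461, 2.1470, 5.3876, 3.9992,
    4.4164, 3.4650, 4.4803, 5.2344, 5.2127, 4.1423, 2.4581, 3.7464, 5.6238,
    4.8475, 2.4336, 5.4793, 9.6027, 6.2157, 7.9028, 8.3790,
]

# Second column: the same distances measured on the 360-camera model (m).
model_360 = [
    1.7543, 5.3690, 1.8092, 1.7623, 5.4804, 1.4500, 2.1548, 5.3816, 4.0065,
    4.4340, 3.4784, 4.4914, 5.2333, 5.2169, 4.1417, 2.4639, 3.7560, 5.6257,
    4.8626, 2.4353, 5.4755, 9.6078, 6.2196, 7.9295, 8.3942,
]

def rmse(reference, estimate):
    """Root-mean-square error between paired distance measurements."""
    if len(reference) != len(estimate):
        raise ValueError("both lists must have the same length")
    squared_errors = [(e - r) ** 2 for r, e in zip(reference, estimate)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))

rmse_360 = rmse(measured, model_360)
print(f"RMSE (360 vs. measured): {rmse_360:.4f} m")
```

The same function can be applied to the DSLR, 360 + HQ, and TLS columns by swapping in the corresponding list.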

| Photogrammetric Method | 360 | DSLR | 360 + HQ |
|---|---|---|---|
| Cloud-to-Cloud distance (RMS) (m) | 0.0046 | 0.0050 | 0.0043 |
| C2C (mean of a fitted Gaussian distribution) (m) | 0.0040 ± 0.0048 | 0.0043 ± 0.0052 | 0.0038 ± 0.0042 |
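
For readers unfamiliar with the cloud-to-cloud (C2C) metric, the following minimal sketch illustrates one common definition, assumed here: for every point in the compared cloud, take the distance to its nearest neighbour in the reference cloud, then summarize those distances as an RMS value. The two point clouds are hypothetical toy data, not values from this study, and the brute-force search is for illustration only; real point clouds with millions of points need a spatial index such as an octree or k-d tree.

```python
import math

def nearest_distance(point, reference_cloud):
    """Distance from one point to its nearest neighbour in the reference."""
    return min(math.dist(point, q) for q in reference_cloud)

def c2c_rms(compared_cloud, reference_cloud):
    """RMS of nearest-neighbour distances (brute force, O(n * m))."""
    distances = [nearest_distance(p, reference_cloud) for p in compared_cloud]
    return math.sqrt(sum(d * d for d in distances) / len(distances))

# Hypothetical toy clouds (metres): a flat 1 m square and a slightly
# perturbed copy with offsets of 3, 4, 5 and 0 mm along z.
reference = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (1.0, 1.0, 0.0)]
compared = [(0.0, 0.0, 0.003), (1.0, 0.0, -0.004), (0.0, 1.0, 0.005), (1.0, 1.0, 0.0)]

rms = c2c_rms(compared, reference)
print(f"C2C RMS: {rms:.4f} m")
```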

| Method | Joint Set | Dip Direction (°) | Abs. Diff. (°) | Dip (°) | Abs. Diff. (°) |
|---|---|---|---|---|---|
| 360 | 1 | 327.5 | 2.3 | 85.8 | 3.2 |
| 360 | 2 | 55.9 | 3.0 | 83.4 | 4.5 |
| 360 | 3 | 281.1 | 30.5 | 13.8 | 9.1 |
| DSLR | 1 | 325.9 | 0.7 | 83.4 | 5.6 |
| DSLR | 2 | 55.9 | 3.0 | 83.4 | 4.5 |
| DSLR | 3 | 293.4 | 18.2 | 20.5 | 15.8 |
| 360 + HQ | 1 | 327.5 | 2.3 | 85.8 | 3.2 |
| 360 + HQ | 2 | 55.9 | 3.0 | 83.4 | 4.5 |
| 360 + HQ | 3 | 281.0 | 30.6 | 7.5 | 2.8 |
| TLS | 1 | 324.0 | 1.2 | 87.4 | 1.6 |
| TLS | 2 | 56.7 | 2.2 | 87.6 | 0.3 |
| TLS | 3 | 287.7 | 23.9 | 8.6 | 3.9 |
| Geological compass | 1 | 325.16 | | 89.00 | |
| Geological compass | 2 | 58.90 | | 87.87 | |
| Geological compass | 3 | 311.59 | | 4.74 | |
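
The absolute differences in the table are consistent with taking the geological compass readings as the reference. The sketch below recomputes them for the 360 rows under that assumption. Because dip direction is a circular quantity, the hypothetical helper `angular_abs_diff` wraps differences at 360°; none of the tabulated pairs actually crosses north, so the wrap-around is a general precaution rather than something the source describes.

```python
def angular_abs_diff(a_deg, b_deg):
    """Smallest absolute difference between two azimuths, in degrees."""
    d = abs(a_deg - b_deg) % 360.0
    return min(d, 360.0 - d)

# (dip direction, dip) per joint set, transcribed from the table.
compass = {1: (325.16, 89.00), 2: (58.90, 87.87), 3: (311.59, 4.74)}
model_360 = {1: (327.5, 85.8), 2: (55.9, 83.4), 3: (281.1, 13.8)}

diffs = {}
for joint_set, (dd_ref, dip_ref) in compass.items():
    dd, dip = model_360[joint_set]
    diffs[joint_set] = (
        round(angular_abs_diff(dd, dd_ref), 1),  # dip direction difference
        round(abs(dip - dip_ref), 1),            # dip difference
    )

for joint_set, (dd_diff, dip_diff) in diffs.items():
    print(f"Joint set {joint_set}: dip direction {dd_diff}°, dip {dip_diff}°")
```

With a plain subtraction, a pair such as 359° and 1° would report a 358° difference; the wrapped version reports 2°.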

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Janiszewski, M.; Torkan, M.; Uotinen, L.; Rinne, M. Rapid Photogrammetry with a 360-Degree Camera for Tunnel Mapping. Remote Sens. 2022, 14, 5494. https://doi.org/10.3390/rs14215494