1. Introduction

Land cover mapping plays an instrumental role in depicting the Earth’s surface characteristics, essential for environmental monitoring and management [1, 2]. The dynamic interplay of policy shifts and natural events continuously alters land cover, necessitating regular updates to maintain the credibility of these maps [3, 4].

Historically, land cover mapping relied heavily on manual delineation, a process fraught with inefficiencies [5]. The integration of computers, geographic information systems, and remote sensing technologies has revolutionized this field, leading to the development of automated methods, with machine learning emerging as a crucial component [6]. Deep learning, a more recent advancement in this domain, has surpassed traditional machine learning methods in classification accuracy [7]. However, deep learning’s effectiveness is contingent on the availability of large annotated datasets, which are costly and labor-intensive to produce [8]. For instance, in the U.S., the land cover map of the Chesapeake Bay watershed was produced with costly labels (USD 1.3 million over 10 months) [9]. The current trend in land cover mapping is to use existing land cover products as training labels [10, 11], a practice supported by the availability of various global-scale products, including FROM-GLC30 [12], Globaland30 [13], and ESA WorldCover [14], among others. However, these products are limited by their resolution, typically ranging from 10 m to 30 m, and are influenced by their data sources, classification techniques, and scales.

There is now a growing demand for high-resolution (HR) land cover mapping [15]. Some studies have attempted to improve the resolution of these labels using techniques like up-sampling [16] (e.g., the nearest neighbor method [17]), but this approach often introduces significant noise, compromising the quality of the resulting maps [18]. In response, researchers have explored label super-resolution (SR) using deep learning, aiming to generate HR land cover maps from low-resolution (LR) labels. For instance, Malkin et al. [19] introduced an innovative SR loss function along with a fully convolutional neural network (FCN) that effectively generates a 1-m resolution land cover map. Nevertheless, even with LR labels available, HR labels remain indispensable to this approach. Li et al. [20] developed an SR loss function that utilizes 30-m LR labels to produce 1-m resolution maps, further advancing this field. However, these methods face challenges due to the inherent inaccuracies in LR labels and the noise introduced by the resolution disparity, both of which affect the final mapping quality.
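To make the up-sampling issue concrete, the following is a minimal sketch of nearest neighbor up-sampling of a label grid, not the implementation used in the cited studies. The function name `upsample_nearest`, the class codes, and the factor of 3 are hypothetical and chosen for illustration (going from 30 m to 1 m would correspond to a factor of 30). Every HR pixel simply inherits the label of the LR cell that contains it, so blocky boundaries and any LR labeling errors are carried over unchanged.

```python
def upsample_nearest(labels, factor):
    """Repeat every label cell `factor` times along rows and columns."""
    if factor < 1:
        raise ValueError("factor must be a positive integer")
    upsampled = []
    for row in labels:
        # Widen the row: each LR cell becomes `factor` identical HR cells.
        wide_row = [cell for cell in row for _ in range(factor)]
        # Stack `factor` independent copies of the widened row.
        upsampled.extend(list(wide_row) for _ in range(factor))
    return upsampled


# Hypothetical 2x2 block of LR labels (1 = water, 2 = forest, 3 = cropland).
lr_labels = [[1, 2],
             [3, 2]]
hr_labels = upsample_nearest(lr_labels, 3)  # 6x6 grid of blocky HR labels
```

No new information is created in this step: the 6x6 output contains exactly the same four decisions as the 2x2 input, which is why label SR methods try to infer sub-cell structure from the HR imagery instead.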

Global land cover datasets also exhibit inconsistencies in accuracy and spatial congruity, influenced by varying classification techniques, data collection timelines, and sensor types. For instance, the ESRI 2020 Global Land Use Land Cover dataset asserts an accuracy of 85%, while ESA reports an accuracy of about 75% [14, 21]. In China, however, the overall accuracy of the ESRI product is 64%, whereas that of the ESA product is around 67% [22]. Furthermore, classification accuracy can differ significantly even within the same dataset: in the FROM-GLC 30 dataset, the user accuracy for grassland and shrubland is merely 38.13% and 39.04%, respectively [12]. Consequently, the reliability of directly using these publicly available global land cover datasets for land cover mapping remains open to debate.

Compared to global products, certain publicly accessible national-scale land cover products display a better level of accuracy. For instance, in the U.S., the National Land Cover Database (NLCD), with a resolution of 30 m, shows an overall accuracy of approximately 83.1% [23] and has become fundamental to various land cover applications within the U.S. [24]. However, this dataset is confined to the U.S. Owing to temporal, spatial, and thematic discrepancies, models trained on national-scale data encounter limitations when directly applied to different regions [25]. To address such challenges, domain shift methodologies are commonly employed, such as maximum mean discrepancy (MMD) [26] and generative adversarial networks (GANs) [27]. Among them, the Instance-Batch Normalization Network (IBN-Net) [28], a network focused on domain shift, effectively mitigates the effects of seasonal land cover changes, lighting effects, and internal grade variations on domain shift [29].
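As an illustration of how MMD quantifies domain shift, the sketch below computes the biased empirical estimate of the squared MMD between two sets of feature vectors. It is a minimal example under stated assumptions rather than the formulation of the cited work: the Gaussian kernel, the `bandwidth` parameter, the function names, and the toy feature values are all hypothetical choices made here.

```python
import math


def gaussian_kernel(a, b, bandwidth):
    """Gaussian (RBF) kernel between two equal-length feature vectors."""
    sq_dist = sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return math.exp(-sq_dist / (2.0 * bandwidth ** 2))


def mmd_squared(source, target, bandwidth=1.0):
    """Biased estimate: E[k(s, s')] + E[k(t, t')] - 2 E[k(s, t)]."""
    def mean_kernel(xs, ys):
        total = sum(gaussian_kernel(x, y, bandwidth) for x in xs for y in ys)
        return total / (len(xs) * len(ys))

    return (mean_kernel(source, source)
            + mean_kernel(target, target)
            - 2.0 * mean_kernel(source, target))


# Hypothetical 2-D features from a source region and a shifted target region.
source_feats = [[0.0, 0.1], [0.2, 0.0], [0.1, 0.2]]
shifted_feats = [[2.0, 2.1], [2.2, 1.9], [1.9, 2.0]]

same = mmd_squared(source_feats, source_feats)      # identical sets give 0
shifted = mmd_squared(source_feats, shifted_feats)  # grows with the shift
```

When MMD is used for adaptation, a term of this kind is typically added to the training loss so that features from the two domains are pulled toward the same distribution.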

The challenge of achieving precise, high-resolution land cover mapping is exacerbated by the limited availability of detailed labels. In this context, using pre-existing LR land cover products to support HR land cover mapping is a challenging yet promising direction. Malkin et al. [30] used epitomic representations and a label SR algorithm to create HR land cover maps of the northern U.S. using LR data from the southern U.S. However, this methodology addressed domain shift only within the U.S. Research on domain shift has laid the groundwork for integrating data from different regions, yet the combination of label SR and domain shift remains relatively unexplored. This synergy could potentially overcome regional variations in precision and the limitations of LR labels in deep learning.

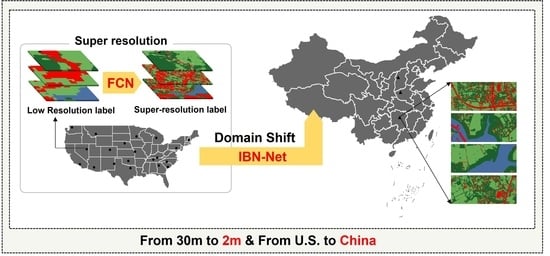

To address these challenges, this study introduces a novel approach for enhancing land cover mapping accuracy without matched labels. The methodology integrates FCN for label SR and IBN-Net for land cover mapping, capitalizing on HR Google Earth imagery and LR land cover products from the source domain. As a result, it yields finely detailed 2-m resolution land cover maps for the target domain without matching labels. The overall procedure of this study is depicted in Figure 1.

4. Discussion

4.1. Comparison of Global Products

We present a subset of test regions within the target domain, encompassing diverse landscapes, such as rivers, buildings, forests, and farmlands. This presentation aims to illustrate the effectiveness of our proposed methodology in land cover mapping and to enable a comparative analysis with established global land cover mapping products, specifically, ESA WorldCover, FROM_GLC100, ESRI_LULC, and GLC_FCS. Upon examination of Figure 12, it becomes evident that our method consistently demonstrates robust and precise mapping capabilities across varied environments while enhancing resolution. Particularly noteworthy is the clear delineation of dense concentrations of buildings, along with improved fidelity in larger land cover categories, such as forests and croplands. Additionally, finer structures are accurately delineated, highlighting the significant advancements resulting from the combined use of label super-resolution and domain adaptation.

In the Introduction, we noted that various factors cause the accuracy of global land cover products to vary across regions. In our target domain (China) in particular, many land cover products exhibit lower accuracies, an assertion also validated in Section 3.1. Comparing the results of the proposed method with global land cover products shows that, when the accuracy and resolution of global products fail to meet the need for finer land cover information, using higher-accuracy products from other regions as reference labels can facilitate the generation of superior land cover maps.

4.2. Exogenous Label Noise Testing

The outcomes of our experiments highlight the potential for enhanced performance through the utilization of exogenous labels in model training. However, it is crucial to acknowledge that not all exogenous labels yield such benefits. Variations in image texture characteristics arise due to diverse factors, such as vegetation types, architectural styles, lighting conditions, and remote sensing image acquisition across different regions. Moreover, the texture features learned by deep learning models significantly impact their applicability within target areas. Consequently, under comparable levels of accuracy, outcomes derived from endogenous labels consistently outperform those from exogenous labels. Therefore, only exogenous labels attaining a certain level of accuracy with low noise have promise as training labels for domain shift.

In this section, to further investigate the impact of label noise and accuracy on domain shift with exogenous labels, different levels and types of noise were introduced to SR US_NLCD labels to assess the performance of the UNIC. Specifically, the noise was added at proportions ranging from 0.2% to 1.6%, relative to the TP of SR NLCD_US, and this noise augmentation was repeated across eight trials. The locations for introducing simulated label noise were randomly selected. For each class, the corresponding confusion matrix column, as outlined in Section 3.1, was used to derive the likelihood of misclassification into each of the other categories. These probabilities determined how noise was injected into each class. For instance, pixels initially classified as impervious surfaces were more likely to be mislabeled as low vegetation than as other classes.
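The noise injection procedure described above can be sketched as follows. This is an illustrative reconstruction under stated assumptions, not the code used in the experiments: the function names, the 3-class confusion matrix, and the label vector are hypothetical; `confusion[r][c]` is assumed to count pixels of reference class `r` that were mapped to class `c`; and, for simplicity, the noise proportion is taken relative to the total number of pixels rather than to the TP count.

```python
import random


def flip_distribution(confusion, cls):
    """Candidate classes and weights taken from column `cls`, diagonal excluded."""
    candidates = [r for r in range(len(confusion)) if r != cls]
    weights = [confusion[r][cls] for r in candidates]
    if sum(weights) == 0:
        raise ValueError(f"class {cls} has no off-diagonal confusion")
    return candidates, weights


def inject_label_noise(labels, confusion, proportion, seed=0):
    """Relabel a random `proportion` of pixels using confusion-based odds."""
    rng = random.Random(seed)  # fixed seed keeps the trial reproducible
    noisy = list(labels)
    n_noisy = round(proportion * len(noisy))
    # Noise locations are drawn uniformly at random, without replacement.
    for idx in rng.sample(range(len(noisy)), n_noisy):
        candidates, weights = flip_distribution(confusion, noisy[idx])
        noisy[idx] = rng.choices(candidates, weights=weights, k=1)[0]
    return noisy


# Hypothetical 3-class confusion matrix and flattened label map.
confusion = [[90, 4, 8],
             [7, 85, 2],
             [3, 11, 90]]
labels = [0, 1, 2] * 1000
noisy_labels = inject_label_noise(labels, confusion, proportion=0.016)
changed = sum(a != b for a, b in zip(labels, noisy_labels))
```

Because the diagonal entry is excluded, every selected pixel receives a different class, so the number of changed pixels equals the requested noise budget, and classes that are confused more often in the matrix are chosen more often as the wrong label.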

Figure 13 illustrates the evaluation metrics of SR labels following noise augmentation, along with the land cover mapping achieved by models trained using exogenous labels with varying degrees of noise. As the proportion of noise increases, both the accuracy of the SR labels and the mapping performance of UNIC decline to varying degrees. Once the noise exceeds 0.42%, the mapping outcomes of models trained with exogenous labels begin to fall behind those of models trained with endogenous labels (CEIC). At a noise level of 0.58%, which falls within the range indicated by the gray region, visually acceptable results can still be achieved, although all metrics of the proposed method are lower than those of CEIC. When the noise level exceeds 0.66%, the degradation in mapping performance surpasses even that of the exogenous training labels. Under such circumstances, coarse exogenous training labels are insufficient for effective land cover mapping within the target domain.

In conclusion, label noise exerts an influence on the domain shift of deep learning models. The proposed method with exogenous training labels with a low degree of noise can achieve commendable outcomes. When the label noise reaches a certain threshold, it becomes more beneficial to opt for endogenous labels with lower accuracy, as opposed to selecting higher-precision exogenous labels.

4.3. Research Constraints and Opportunities

While this study has yielded significant findings, it is imperative to acknowledge its limitations. The primary constraint lies in the imbalance of the training labels, wherein the distribution of different label categories within the dataset may be uneven. This imbalance has the potential to undermine the model’s performance on certain categories, thereby compromising the accuracy and reliability of the research outcomes. Despite efforts to diversify the sampled scenarios during selection, the issue of class imbalance remains inevitable. Addressing this challenge necessitates targeted adjustments to the dataset or the implementation of other strategies to balance category distributions, thereby enhancing the model’s generalization capability and overall performance. On another note, the methodology proposed in this paper primarily emphasizes a novel approach, with multi-class land cover mapping merely serving as a medium for illustrating this method. For tasks involving semantic segmentation or classification in other domains, the applicability of this method remains pertinent, for instance, for extraction of individual land features, such as forests, water bodies, or buildings. In such tasks targeting specific object classifications, concerns regarding sample balance become less pertinent.

While the domains selected for this study encompass only China and the United States, the methods proposed herein are not confined to these regions. Similarly, the applicability of exogenous labels for domain shift extends beyond NLCD. Regarding the target domain chosen for this study, although numerous research teams have previously generated high-resolution land cover maps for local regions within this area, the accuracy and utility of these maps, produced by individual teams, warrant careful consideration when compared to NLCD, a nationally authoritative land cover product. In this context, results generated using existing credible land cover products carry higher credibility. The primary objective of this research is to address the shortage of matching fine labels, which incur high annotation costs. However, it is worth noting that existing fine, manually annotated labels can also help mitigate the lack of labels in the target domain, thus achieving better performance in handling domain shift.

In terms of models, this study adopts simple models and makes only minor modifications to baseline models (FCN, U-Net, ResNet-18); its focus is instead on a novel perspective. Specifically, its contribution is non-algorithmic: by selecting higher-precision labels from a remote region, it addresses the issue of missing training labels in semantic segmentation. Particularly for land cover mapping tasks in which label production is costly, the proposed method offers a new way to improve mapping effectiveness. The models chosen in this paper may not be the most effective ones; they serve merely as a means to validate the feasibility of the proposed approach. However, if simple models can achieve the desired results and prove the feasibility of the proposed method, further improvements to the models or the selection of better models in the future may yield additional benefits.

5. Conclusions

For land cover mapping, achieving highly precise semantic labels through manual interpretation is typically limited to small geographic areas. Consequently, models trained with such labels often lack generalizability when applied to diverse global regions. While global land cover products cover larger spatial scales, they often suffer from varying degrees of label noise. This article presents a novel HR land cover mapping approach utilizing deep learning, thereby eliminating the requirement for precisely matched labels. The methodology involves utilizing FCN for semantic label SR, followed by the application of IBN-Net for land cover mapping using SR labels.

A comprehensive series of experiments conducted on Google Earth images and NLCD datasets demonstrates the pronounced superiority of the proposed method. The outcomes of the land cover mapping experiments reveal that, compared to endogenous labels characterized by higher levels of noise, employing exogenous NLCD labels leads to superior mapping performance within the target domain. This augmentation results in an average OA increase of 2.55%, achieving 85.48%. The diversity between the source and target domains presents challenges for domain shift, while the noise and imprecision of labels significantly impact the SR of labels and interfere with the accuracy of land cover mapping results. This necessitates a choice between utilizing local labels with lower precision or non-local labels with higher precision. Indeed, in the absence of internally generated labels with high precision and the availability of externally annotated data with a certain level of accuracy, the utilization of external annotations proves to be a more advantageous approach for enhancing the accuracy of HR land cover mapping. This helps mitigate the impact of the object appearance gap on mapping to some extent, thereby paving the way for the integration of semantic segmentation models incorporating unmatched labels.