3.2. Image Processing

The 3D reconstruction software, Agisoft PhotoScan 1.0.1, was able to perform image alignment and 3D scene reconstruction for all imagery datasets. Geo-referencing was based on camera location information, derived from GNSS and IMU data. Orthoimage, DEM and DTM computation succeeded for all imagery datasets. Resulting orthoimage ground resolution was at the level of input image ground resolution. As dense point cloud reconstruction is a very hardware-demanding task, the imagery used for DEM and DTM generation was downscaled by a factor of two to save processing time. Although DEM and DTM were exported with the corresponding orthophoto’s ground resolution, the underlying dense point cloud was built with less detail than theoretically possible.

As the produced DTMs are based on the interpolation of previously classified ground points, this method is generally prone to misclassification in dense crop stands and under canopy closure. In these situations, only a small number of ground points are visible at all, which weakens the reliability of the interpolation results. Moreover, some of the classified points may not represent the “real” ground, leading to an underestimation of crop heights. In a homogeneous field, a correction factor could compensate for this underestimation. In an inhomogeneous field, the correction factor would no longer be constant. To avoid these problems, it is recommended to produce DTMs at the sowing stage, when no classification and no interpolation of large gaps are needed.

Geo-referencing accuracy was assessed with the help of 24 Ground Control Points (GCPs), which were installed permanently and measured with RTK-GNSS equipment. Heavy rainfall in July silted up many of the GCPs, and others were destroyed by intensive mechanical weed control between the corn strips. Unfortunately, the GCPs were not renewed before the flight missions at Z39 and Z58. As a consequence, imagery from these stages lacks accurate GCP information, and accuracy assessment was performed on Z32 imagery only.

Table 3.

Resulting root mean squared errors (m) (RMSE) at ground control point (GCP) locations for indirectly (GCP-based) and directly (GNSS- and IMU-based) geo-referenced imagery at Z32 for all image ground resolutions.

| Geo-Reference | Coordinate Component | 0.02 m·px−1 | 0.04 m·px−1 | 0.06 m·px−1 | 0.08 m·px−1 | 0.10 m·px−1 |
|---|---|---|---|---|---|---|
| GCPs | Horizontal | 0.058 | 0.063 | 0.084 | 0.089 | 0.082 |
| GCPs | Vertical | 0.068 | 0.059 | 0.051 | 0.046 | 0.075 |
| GNSS & IMU | Horizontal | 0.430 | 0.375 | 0.399 | 0.409 | 0.376 |
| GNSS & IMU | Vertical | 0.303 | 0.273 | 0.283 | 0.320 | 0.379 |

In addition to direct (GNSS- and IMU-based) geo-referencing, indirect (GCP-based) geo-referencing was conducted on Z32 imagery for enhanced CSM quality assessment.

Table 3 lists the root mean squared errors obtained by comparing measured and computed GCP coordinates for both methods and all image ground resolutions at Z32. As expected, indirectly geo-referenced imagery showed smaller residuals than directly geo-referenced imagery. Horizontal RMSEs for indirectly geo-referenced imagery ranged from 0.058 to 0.089 m, whereas vertical RMSEs ranged from 0.046 to 0.075 m. In contrast, horizontal RMSEs for directly geo-referenced imagery ranged from 0.375 to 0.430 m, whereas vertical RMSEs ranged from 0.273 to 0.379 m. The accuracies of both methods are in accordance with the findings of Turner et al. [55] and Ruiz et al. [56], although vertical accuracy was slightly better than expected. GCP-based accuracy assessment for directly geo-referenced imagery at Z39 and Z58 was not performed. Nevertheless, comparison of identifiable field boundaries with those of Z32 did not show excessive horizontal errors at any resolution.
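The horizontal and vertical RMSE values reported above can be illustrated with a minimal sketch. It assumes that the horizontal error of a GCP is the 2D Euclidean distance between measured and computed x/y coordinates; the section does not state the exact definition, and the function name and sample coordinates are hypothetical.

```python
import math

def rmse_components(measured, computed):
    """Return (horizontal, vertical) RMSE in the units of the inputs.

    measured, computed: equal-length lists of (x, y, z) tuples.
    Assumption: horizontal error is the 2D Euclidean distance in x/y.
    """
    if len(measured) != len(computed) or not measured:
        raise ValueError("need two non-empty coordinate lists of equal length")
    sq_h = 0.0
    sq_v = 0.0
    for (xm, ym, zm), (xc, yc, zc) in zip(measured, computed):
        sq_h += (xc - xm) ** 2 + (yc - ym) ** 2  # squared horizontal residual
        sq_v += (zc - zm) ** 2                   # squared vertical residual
    n = len(measured)
    return math.sqrt(sq_h / n), math.sqrt(sq_v / n)

# Made-up coordinates in metres, for illustration only (not study data).
measured = [(0.0, 0.0, 10.0), (10.0, 0.0, 10.0), (0.0, 10.0, 10.0)]
computed = [(0.3, 0.4, 10.3), (10.3, 0.4, 9.7), (0.3, 10.4, 10.3)]
rmse_h, rmse_v = rmse_components(measured, computed)
print(f"horizontal RMSE: {rmse_h:.3f} m, vertical RMSE: {rmse_v:.3f} m")
```

Reporting the two components separately, as in Table 3, keeps a vertical bias visible that would otherwise be hidden in a single 3D error figure.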

The developed R-routine managed to calculate CSMs, VIs and all threshold variants for every imagery dataset. CSM quality was assessed by comparison of mean plot heights at Z32, derived from accurate and indirectly geo-referenced imagery, with those derived from less accurate and directly geo-referenced imagery.

Table 4 shows the resulting root mean squared errors for plot height comparisons, ranging from 0.024 m for the highest resolution imagery to 0.008 m for the lowest resolution imagery. Given a difference of 0.20 m between the highest and lowest mean plot height at Z32, direct geo-referencing has little influence on mean plot height computation. Unfortunately, independent reference measurements, e.g., manual height measurements, 3D laser scanning datasets or CSMs derived with other SfM software packages, were not available to assess absolute CSM accuracy. Therefore, subsequent analyses and results are valid for this dataset only.

Table 4.

Resulting root mean squared errors (m) (RMSE) of comparing mean plot heights calculated from indirectly (GCP-based) and directly (GNSS- and IMU-based) geo-referenced imagery at Z32 for all image ground resolutions.

| Value | Coordinate Component | 0.02 m·px−1 | 0.04 m·px−1 | 0.06 m·px−1 | 0.08 m·px−1 | 0.10 m·px−1 |
|---|---|---|---|---|---|---|
| Plot Height | Vertical | 0.024 | 0.010 | 0.009 | 0.010 | 0.008 |

Horizontal alignment errors of directly geo-referenced imagery strongly influence the results of automatic feature extraction. To account for misalignment, the polygonal shapefile, containing this field trial’s plot information, was realigned individually for all imagery at all growth stages and image ground resolutions.

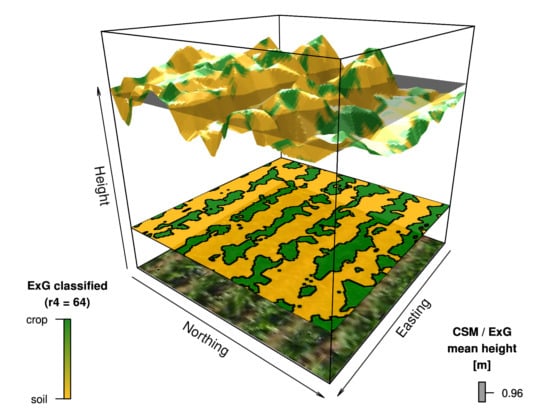

The computed original Ridler thresholds r3 were regarded as suitable for automatic separation of crop and soil, as were most of the threshold variants r2 and r4. In contrast, threshold variants r1 and r5 resulted in crop overestimation at threshold r1 and underestimation at threshold r5 (see, e.g., Figure 3). However, mean plot heights Hirstv and crop coverage factors Cirstv were computed for all strategies at every threshold level r1−5 for subsequent comparison of prediction performance.
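For orientation, the iterative selection scheme commonly attributed to Ridler and Calvard can be sketched as follows. This is a minimal illustration, not the R-routine used in the study: the function name, the convergence tolerance and the sample vegetation index values are assumptions, and the derivation of the threshold variants r1, r2, r4 and r5 is not reproduced here.

```python
def ridler_threshold(values, tol=1e-6, max_iter=100):
    """Iterative selection threshold for a list of pixel values.

    Starts at the global mean and repeatedly moves the threshold to the
    midpoint between the mean of the lower and the mean of the upper class.
    """
    values = list(values)
    if not values:
        raise ValueError("values must not be empty")
    t = sum(values) / len(values)  # initial guess: global mean
    for _ in range(max_iter):
        low = [v for v in values if v <= t]
        high = [v for v in values if v > t]
        if not low or not high:
            break  # all pixels fall into one class, nothing to separate
        new_t = 0.5 * (sum(low) / len(low) + sum(high) / len(high))
        converged = abs(new_t - t) < tol
        t = new_t
        if converged:
            break
    return t

# Made-up vegetation index values: five soil-like and three crop-like pixels.
vi = [0.02, 0.03, 0.05, 0.04, 0.06, 0.41, 0.39, 0.40]
threshold = ridler_threshold(vi)
# Assumption: pixels above the threshold are treated as crop.
crop_mask = [v > threshold for v in vi]
print(round(threshold, 3), crop_mask)
```

Shifting such a threshold downwards labels more pixels as crop and shifting it upwards labels fewer, which matches the over- and underestimation reported for r1 and r5.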

3.3. Modeling Strategy

All results of the applied corn grain yield prediction strategies are summarized in Table 5, whereas Figure 5 visualizes the most important findings. Strategy S3 was evaluated for collinearity of its predictor variables, mean crop height and crop coverage. Critical collinearity was not found at any crop growth stage. As all strategies were built on data from one growing period, leave-one-out cross-validation was conducted to evaluate each model’s predictive quality. Table 6 shows the resulting root mean squared errors of prediction (RMSEP), ranging from 0.67 to 1.28 t·ha−1 (8.8% to 16.9%).
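The leave-one-out procedure behind the RMSEP values can be sketched for a single-predictor model as follows. This is a minimal sketch under stated assumptions: it uses a simple linear regression of yield on mean plot height, expresses the relative RMSEP as a percentage of the mean observed yield, and uses hypothetical function names and made-up sample values.

```python
import math

def fit_line(x, y):
    """Least squares fit of y = b0 + b1 * x; returns (b0, b1)."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("predictor has no variance")
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b1 = sxy / sxx
    return my - b1 * mx, b1

def loocv_rmsep(x, y):
    """Leave-one-out RMSEP: each plot is predicted by a model fitted without it."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need at least three paired observations")
    sq_err = 0.0
    for i in range(len(x)):
        b0, b1 = fit_line(x[:i] + x[i + 1:], y[:i] + y[i + 1:])
        sq_err += (y[i] - (b0 + b1 * x[i])) ** 2
    return math.sqrt(sq_err / len(x))

# Made-up plot heights (m) and yields (t/ha), for illustration only.
heights = [0.8, 1.0, 1.2, 1.4, 1.6]
yields = [6.0, 6.9, 7.6, 8.7, 9.1]
rmsep = loocv_rmsep(heights, yields)
rmsep_pct = 100.0 * rmsep / (sum(yields) / len(yields))
print(f"RMSEP: {rmsep:.2f} t/ha ({rmsep_pct:.1f}%)")
```

Because every prediction comes from a model that never saw the predicted plot, the RMSEP is a more cautious measure of predictive quality than the residual error of a single fit to all plots.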

Crop growth stage Z32 was omitted from Figure 5, as none of the strategies resulted in determination coefficients R2 higher than 0.56. As crops were still small and stems were only beginning to elongate, the crops’ leaves were not yet overlapping. Lacking canopy closure, the prediction models had to rely on information at the leaf level. Therefore, imagery with the highest resolutions of 0.02 and 0.04 m·px−1 performed best and showed significant R2 values. In contrast, lower resolution datasets did not provide much detail, resulting in low R2 values. Strategy S3 was generally able to significantly improve the prediction accuracies of strategies S1 and S2 for all VIs by adding the crop coverage factor as the second predictor variable. Although the highest resolution imagery of 0.02 m·px−1 performed best at this stage, even higher resolutions may be more appropriate for CSM and, thus, mean plot height generation. With maximum R2 values of 0.56, and considering additional environmental impacts on crop growth during the growing season, none of the applied strategies was assessed to be reliable for early-season corn grain yield prediction.
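The effect of adding crop coverage as a second predictor can be illustrated with a small ordinary least squares sketch. The function name, variable names and sample values are hypothetical and not taken from the study, and the original analysis was carried out in an R-routine that is not reproduced here.

```python
def r_squared_two_predictors(height, coverage, grain_yield):
    """R2 of an OLS fit: yield = b0 + b1 * height + b2 * coverage."""
    n = len(grain_yield)
    if not (len(height) == len(coverage) == n) or n < 4:
        raise ValueError("need three equal-length lists with at least 4 plots")
    mh = sum(height) / n
    mc = sum(coverage) / n
    my = sum(grain_yield) / n
    # Centered sums of squares and cross products.
    shh = sum((h - mh) ** 2 for h in height)
    scc = sum((c - mc) ** 2 for c in coverage)
    shc = sum((h - mh) * (c - mc) for h, c in zip(height, coverage))
    shy = sum((h - mh) * (y - my) for h, y in zip(height, grain_yield))
    scy = sum((c - mc) * (y - my) for c, y in zip(coverage, grain_yield))
    det = shh * scc - shc ** 2
    if det == 0:
        raise ValueError("predictors are perfectly collinear")
    # Solve the 2x2 normal equations for the two slopes.
    b1 = (scc * shy - shc * scy) / det
    b2 = (shh * scy - shc * shy) / det
    ss_res = sum(
        (y - my - b1 * (h - mh) - b2 * (c - mc)) ** 2
        for h, c, y in zip(height, coverage, grain_yield)
    )
    ss_tot = sum((y - my) ** 2 for y in grain_yield)
    if ss_tot == 0:
        raise ValueError("yield has no variance")
    return 1.0 - ss_res / ss_tot

# Made-up plot values, for illustration only.
heights = [0.8, 1.0, 1.2, 1.4, 1.6]         # mean plot height (m)
coverages = [0.50, 0.70, 0.55, 0.80, 0.75]  # crop coverage factor (0..1)
yields = [6.0, 6.9, 7.6, 8.7, 9.1]          # grain yield (t/ha)
r2 = r_squared_two_predictors(heights, coverages, yields)
print(f"R2 = {r2:.2f}")
```

The determinant check also hints at why collinearity matters: when height and coverage are strongly correlated, the determinant approaches zero and the two slopes become unstable.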

Figure 5.

Resulting determination coefficients R2 of modeling strategies S1−3 for all VIs and aerial image ground resolutions at crop growth stages Z39 and Z58. Grey values represent R2 values for strategy S1, whereas black values represent strategy S2 at Ridler threshold r3 and colored values represent strategy S3 at Ridler threshold r3, respectively. In addition to the R2 values of strategies S2 and S3 at Ridler threshold r3, minimum and maximum R2 values of the four remaining threshold variants are indicated as range bars for every aerial image ground resolution individually.

Table 5.

The resulting determination coefficients R2 of the prediction of corn grain yield by applying strategies S1−3 for all combinations of VIs, aerial image ground resolutions, crop growth stages and computed Ridler thresholds. Significance codes for predictor variable crop height are represented as * in superscript, whereas significance codes for predictor variable crop coverage factor are represented as * in subscript (appearing only in strategy S3).

Column headers give the vegetation index followed by the ground resolution (m·px−1).

| Z | Sx | rx | ExG 0.02 | ExG 0.04 | ExG 0.06 | ExG 0.08 | ExG 0.10 | VIg 0.02 | VIg 0.04 | VIg 0.06 | VIg 0.08 | VIg 0.10 | PPRb 0.02 | PPRb 0.04 | PPRb 0.06 | PPRb 0.08 | PPRb 0.10 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Z32 | S1 | | 0.48*** | 0.26*** | 0.12** | 0.05 | 0.09* | 0.48*** | 0.26*** | 0.12** | 0.05 | 0.09* | 0.48*** | 0.26*** | 0.12** | 0.05 | 0.09* |
| Z32 | S2 | r1 | 0.54*** | 0.27*** | 0.12** | 0.05 | 0.09* | 0.53*** | 0.25*** | 0.11** | 0.05 | 0.08* | 0.46*** | 0.25*** | 0.11** | 0.05 | 0.09* |
| Z32 | S2 | r2 | 0.55*** | 0.24*** | 0.11** | 0.04 | 0.08* | 0.55*** | 0.21*** | 0.09* | 0.03 | 0.07* | 0.47*** | 0.21*** | 0.08 | 0.03 | 0.08* |
| Z32 | S2 | r3 | 0.55*** | 0.23*** | 0.08* | 0.03 | 0.08* | 0.46*** | 0.16*** | 0.05 | 0.02 | 0.05 | 0.46*** | 0.18*** | 0.05 | 0.02 | 0.07* |
| Z32 | S2 | r4 | 0.53*** | 0.20*** | 0.06* | 0.02 | 0.06* | 0.36*** | 0.11** | 0.02 | 0.01 | 0.04 | 0.42*** | 0.14** | 0.03 | 0.01 | 0.04 |
| Z32 | S2 | r5 | 0.51*** | 0.17*** | 0.04 | 0.01 | 0.05 | 0.25*** | 0.09* | 0.01 | 0.01 | 0.05 | 0.37*** | 0.09* | 0.02 | 0.00 | 0.03 |
| Z32 | S3 | r1 | 0.54*** | 0.30* | 0.21* | 0.20** | 0.21** | 0.53*** | 0.25* | 0.13 | 0.07 | 0.10 | | | 0.19* | 0.13* | 0.18* |
| Z32 | S3 | r2 | 0.55*** | | 0.32*** | 0.31*** | 0.33*** | 0.56*** | | 0.23** | 0.19*** | 0.21** | | | 0.27*** | 0.23*** | 0.27*** |
| Z32 | S3 | r3 | 0.55*** | | 0.35*** | 0.34*** | 0.38*** | | | 0.32*** | 0.31*** | 0.34*** | | | 0.33*** | 0.30*** | 0.33*** |
| Z32 | S3 | r4 | 0.55*** | | 0.33*** | 0.32*** | 0.38*** | | | 0.31*** | 0.32*** | 0.37*** | | | 0.32*** | 0.30*** | 0.33*** |
| Z32 | S3 | r5 | 0.52*** | | 0.29*** | 0.29*** | 0.34*** | | | 0.28*** | 0.29*** | 0.36*** | | | 0.30*** | 0.29*** | 0.32*** |
| Z39 | S1 | | 0.59*** | 0.68*** | 0.68*** | 0.63*** | 0.59*** | 0.59*** | 0.68*** | 0.68*** | 0.63*** | 0.59*** | 0.59*** | 0.68*** | 0.68*** | 0.63*** | 0.59*** |
| Z39 | S2 | r1 | 0.60*** | 0.69*** | 0.68*** | 0.62*** | 0.58*** | 0.59*** | 0.68*** | 0.68*** | 0.62*** | 0.59*** | 0.57*** | 0.69*** | 0.68*** | 0.62*** | 0.58*** |
| Z39 | S2 | r2 | 0.62*** | 0.70*** | 0.68*** | 0.62*** | 0.58*** | 0.61*** | 0.70*** | 0.68*** | 0.62*** | 0.58*** | 0.59*** | 0.70*** | 0.68*** | 0.62*** | 0.58*** |
| Z39 | S2 | r3 | 0.63*** | 0.71*** | 0.68*** | 0.62*** | 0.58*** | 0.63*** | 0.70*** | 0.68*** | 0.62*** | 0.58*** | 0.60*** | 0.70*** | 0.68*** | 0.62*** | 0.58*** |
| Z39 | S2 | r4 | 0.64*** | 0.71*** | 0.68*** | 0.62*** | 0.58*** | 0.63*** | 0.70*** | 0.67*** | 0.61*** | 0.57*** | 0.61*** | 0.70*** | 0.68*** | 0.62*** | 0.58*** |
| Z39 | S2 | r5 | 0.64*** | 0.71*** | 0.68*** | 0.62*** | 0.58*** | 0.63*** | 0.70*** | 0.66*** | 0.60*** | 0.56*** | 0.62*** | 0.70*** | 0.68*** | 0.62*** | 0.58*** |
| Z39 | S3 | r1 | 0.60*** | 0.69*** | 0.69*** | 0.62*** | 0.58*** | 0.59*** | 0.68*** | 0.68*** | 0.63*** | 0.60*** | 0.59*** | 0.69*** | 0.68*** | 0.63*** | 0.60*** |
| Z39 | S3 | r2 | | 0.71*** | 0.70*** | 0.64*** | 0.60*** | 0.62*** | 0.70*** | 0.68*** | 0.63*** | 0.59*** | 0.59*** | 0.71*** | 0.69*** | 0.62*** | 0.58*** |
| Z39 | S3 | r3 | | 0.72*** | | | | 0.63*** | 0.70*** | 0.68*** | 0.62*** | 0.59*** | 0.60*** | 0.71*** | 0.69*** | 0.63*** | 0.59*** |
| Z39 | S3 | r4 | | | | | | 0.64*** | 0.71*** | 0.68*** | 0.62*** | 0.59*** | 0.61*** | 0.72*** | 0.70*** | 0.63*** | 0.59*** |
| Z39 | S3 | r5 | | | | | | 0.64*** | 0.71*** | 0.68*** | 0.62*** | 0.58*** | 0.62*** | 0.71*** | | 0.64*** | 0.60*** |
| Z58 | S1 | | 0.62*** | 0.68*** | 0.64*** | 0.64*** | 0.67*** | 0.62*** | 0.68*** | 0.64*** | 0.64*** | 0.67*** | 0.62*** | 0.68*** | 0.64*** | 0.64*** | 0.67*** |
| Z58 | S2 | r1 | 0.59*** | 0.68*** | 0.65*** | 0.64*** | 0.67*** | 0.64*** | 0.68*** | 0.64*** | 0.64*** | 0.68*** | 0.59*** | 0.69*** | 0.65*** | 0.65*** | 0.68*** |
| Z58 | S2 | r2 | 0.55*** | 0.69*** | 0.65*** | 0.65*** | 0.67*** | 0.64*** | 0.68*** | 0.64*** | 0.64*** | 0.67*** | 0.56*** | 0.69*** | 0.65*** | 0.65*** | 0.68*** |
| Z58 | S2 | r3 | 0.52*** | 0.69*** | 0.65*** | 0.65*** | 0.68*** | 0.64*** | 0.68*** | 0.64*** | 0.64*** | 0.67*** | 0.53*** | 0.69*** | 0.65*** | 0.65*** | 0.68*** |
| Z58 | S2 | r4 | 0.49*** | 0.69*** | 0.65*** | 0.65*** | 0.67*** | 0.63*** | 0.68*** | 0.64*** | 0.64*** | 0.67*** | 0.52*** | 0.69*** | 0.65*** | 0.65*** | 0.68*** |
| Z58 | S2 | r5 | 0.46*** | 0.69*** | 0.65*** | 0.65*** | 0.66*** | 0.62*** | 0.68*** | 0.63*** | 0.63*** | 0.66*** | 0.52*** | 0.69*** | 0.65*** | 0.65*** | 0.68*** |
| Z58 | S3 | r1 | 0.60*** | 0.69*** | 0.65*** | 0.64*** | 0.67*** | 0.64*** | 0.68*** | 0.65*** | 0.64*** | 0.68*** | 0.59*** | 0.69*** | 0.65*** | 0.65*** | 0.69*** |
| Z58 | S3 | r2 | 0.55*** | 0.69*** | 0.65*** | 0.65*** | 0.68*** | 0.65*** | 0.68*** | 0.65*** | 0.64*** | 0.67*** | 0.56*** | 0.69*** | 0.65*** | 0.66*** | 0.69*** |
| Z58 | S3 | r3 | 0.53*** | 0.69*** | 0.65*** | 0.65*** | 0.68*** | 0.65*** | 0.69*** | 0.65*** | 0.64*** | 0.67*** | 0.53*** | 0.69*** | 0.65*** | 0.65*** | 0.69*** |
| Z58 | S3 | r4 | | 0.69*** | 0.65*** | 0.66*** | 0.69*** | | 0.69*** | 0.66*** | 0.65*** | 0.67*** | 0.52*** | 0.69*** | 0.65*** | 0.65*** | 0.68*** |
| Z58 | S3 | r5 | | 0.69*** | 0.66*** | 0.67*** | 0.68*** | | | | 0.65*** | 0.67*** | 0.52*** | 0.69*** | 0.65*** | 0.65*** | 0.68*** |

Z39 was identified as the crop growth stage with the best prediction performance. Figure 5 points out the most interesting findings. Generally, all VIs performed well, although the best results were achieved using ExG. High and intermediate ground resolutions of 0.04 and 0.06 m·px−1 showed R2 values of up to 0.74 for strategy S3. However, strategy S3 improved results for ExG only; VIg and PPRb did not show significant improvements. Strategy S2 outperformed strategy S1 at resolutions of 0.02 and 0.04 m·px−1, whereas at intermediate and low ground resolutions, strategies S1 and S2 did not differ in prediction accuracy. Coarse VI layer information and the beginning of canopy closure seemed to level out the differences between simple plot mean height computation and the classification-based one. Unexpectedly, the highest resolution of 0.02 m·px−1 performed worse than the high/intermediate resolutions. Although strategies S2 and S3 significantly improved prediction using ExG, the highest resolution strategies appeared to be prone to higher noise and a scale effect, as this level of resolution leads to analysis in between leaf and canopy level. As a consequence, CSM and classification results may be biased.
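The difference between simple and classification-based plot mean height, and the crop coverage factor used as the second predictor, can be sketched for one plot as follows. This sketch rests on assumptions inferred from the text: S1 is taken to average all CSM pixels of a plot, S2 only the pixels classified as crop, and coverage is taken as the share of crop pixels. Function and variable names are hypothetical, and treating pixels above the threshold as crop may not hold for every VI.

```python
def plot_predictors(csm_heights, vi_values, threshold):
    """Return (mean_height_all, mean_height_crop, crop_coverage) for one plot.

    csm_heights: CSM height per pixel (m); vi_values: VI value per pixel.
    Assumption: a pixel counts as crop when its VI value exceeds the threshold.
    """
    if len(csm_heights) != len(vi_values) or not csm_heights:
        raise ValueError("need two non-empty pixel lists of equal length")
    crop_heights = [h for h, v in zip(csm_heights, vi_values) if v > threshold]
    mean_all = sum(csm_heights) / len(csm_heights)     # simple plot mean
    coverage = len(crop_heights) / len(csm_heights)    # share of crop pixels
    # Classification-based mean; 0.0 when no pixel is classified as crop.
    mean_crop = sum(crop_heights) / len(crop_heights) if crop_heights else 0.0
    return mean_all, mean_crop, coverage

# Made-up pixel values for a single plot, for illustration only.
heights = [0.0, 0.1, 1.2, 1.0, 0.0, 1.4]
vi = [0.02, 0.05, 0.40, 0.38, 0.03, 0.45]
mean_all, mean_crop, coverage = plot_predictors(heights, vi, threshold=0.2)
print(mean_all, mean_crop, coverage)
```

With open soil between rows the two means differ strongly, whereas under canopy closure nearly every pixel is crop and the two converge, which is consistent with S1 and S2 performing alike at later stages and coarser resolutions.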

Table 6.

The resulting root mean squared errors of prediction (RMSEP) of the leave-one-out cross-validation for evaluation of the predictive quality of applying strategies S1−3 for all combinations of VIs, aerial image ground resolutions, crop growth stages and computed Ridler thresholds.

Column headers give the vegetation index followed by the ground resolution (m·px−1).

| Z | Sx | rx | ExG 0.02 | ExG 0.04 | ExG 0.06 | ExG 0.08 | ExG 0.10 | VIg 0.02 | VIg 0.04 | VIg 0.06 | VIg 0.08 | VIg 0.10 | PPRb 0.02 | PPRb 0.04 | PPRb 0.06 | PPRb 0.08 | PPRb 0.10 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Z32 | S1 | | 0.93 | 1.11 | 1.20 | 1.25 | 1.21 | 0.93 | 1.11 | 1.20 | 1.25 | 1.21 | 0.93 | 1.11 | 1.20 | 1.25 | 1.21 |
| Z32 | S2 | r1 | 0.88 | 1.11 | 1.21 | 1.25 | 1.21 | 0.89 | 1.13 | 1.21 | 1.25 | 1.22 | 0.94 | 1.12 | 1.21 | 1.25 | 1.21 |
| Z32 | S2 | r2 | 0.86 | 1.13 | 1.21 | 1.25 | 1.22 | 0.87 | 1.15 | 1.22 | 1.26 | 1.23 | 0.93 | 1.14 | 1.22 | 1.25 | 1.22 |
| Z32 | S2 | r3 | 0.87 | 1.14 | 1.23 | 1.26 | 1.22 | 0.95 | 1.17 | 1.24 | 1.26 | 1.24 | 0.94 | 1.16 | 1.24 | 1.26 | 1.23 |
| Z32 | S2 | r4 | 0.88 | 1.15 | 1.24 | 1.26 | 1.23 | 1.03 | 1.20 | 1.26 | 1.27 | 1.24 | 0.98 | 1.19 | 1.26 | 1.27 | 1.24 |
| Z32 | S2 | r5 | 0.90 | 1.17 | 1.25 | 1.27 | 1.24 | 1.11 | 1.22 | 1.27 | 1.27 | 1.24 | 1.02 | 1.21 | 1.27 | 1.28 | 1.26 |
| Z32 | S3 | r1 | 0.90 | 1.10 | 1.16 | 1.17 | 1.15 | 0.90 | 1.14 | 1.23 | 1.26 | 1.23 | 0.91 | 1.10 | 1.19 | 1.23 | 1.19 |
| Z32 | S3 | r2 | 0.88 | 1.04 | 1.07 | 1.08 | 1.06 | 0.87 | 1.08 | 1.14 | 1.16 | 1.15 | 0.91 | 1.07 | 1.13 | 1.16 | 1.13 |
| Z32 | S3 | r3 | 0.88 | 1.02 | 1.05 | 1.05 | 1.02 | 0.90 | 1.03 | 1.07 | 1.08 | 1.05 | 0.91 | 1.04 | 1.08 | 1.10 | 1.07 |
| Z32 | S3 | r4 | 0.88 | 1.03 | 1.06 | 1.07 | 1.03 | 0.95 | 1.05 | 1.08 | 1.07 | 1.03 | 0.93 | 1.04 | 1.08 | 1.09 | 1.07 |
| Z32 | S3 | r5 | 0.90 | 1.07 | 1.10 | 1.09 | 1.06 | 1.00 | 1.08 | 1.10 | 1.09 | 1.04 | 0.96 | 1.07 | 1.09 | 1.10 | 1.07 |
| Z39 | S1 | | 0.83 | 0.73 | 0.74 | 0.79 | 0.83 | 0.83 | 0.73 | 0.74 | 0.79 | 0.83 | 0.83 | 0.73 | 0.74 | 0.79 | 0.83 |
| Z39 | S2 | r1 | 0.83 | 0.71 | 0.73 | 0.80 | 0.83 | 0.83 | 0.73 | 0.74 | 0.80 | 0.83 | 0.85 | 0.72 | 0.74 | 0.80 | 0.83 |
| Z39 | S2 | r2 | 0.80 | 0.70 | 0.73 | 0.80 | 0.83 | 0.81 | 0.70 | 0.74 | 0.80 | 0.83 | 0.83 | 0.71 | 0.74 | 0.80 | 0.83 |
| Z39 | S2 | r3 | 0.79 | 0.70 | 0.73 | 0.80 | 0.83 | 0.79 | 0.70 | 0.74 | 0.80 | 0.84 | 0.81 | 0.70 | 0.74 | 0.80 | 0.83 |
| Z39 | S2 | r4 | 0.78 | 0.69 | 0.73 | 0.80 | 0.84 | 0.78 | 0.70 | 0.75 | 0.81 | 0.85 | 0.81 | 0.70 | 0.74 | 0.80 | 0.83 |
| Z39 | S2 | r5 | 0.77 | 0.69 | 0.74 | 0.80 | 0.84 | 0.78 | 0.70 | 0.76 | 0.82 | 0.86 | 0.80 | 0.70 | 0.74 | 0.80 | 0.83 |
| Z39 | S3 | r1 | 0.84 | 0.72 | 0.74 | 0.81 | 0.85 | 0.84 | 0.73 | 0.74 | 0.80 | 0.83 | 0.84 | 0.73 | 0.75 | 0.80 | 0.83 |
| Z39 | S3 | r2 | 0.78 | 0.71 | 0.73 | 0.79 | 0.83 | 0.81 | 0.71 | 0.75 | 0.81 | 0.84 | 0.84 | 0.70 | 0.74 | 0.81 | 0.85 |
| Z39 | S3 | r3 | 0.75 | 0.69 | 0.70 | 0.76 | 0.79 | 0.80 | 0.71 | 0.76 | 0.81 | 0.84 | 0.83 | 0.69 | 0.73 | 0.80 | 0.84 |
| Z39 | S3 | r4 | 0.73 | 0.68 | 0.68 | 0.73 | 0.76 | 0.79 | 0.70 | 0.75 | 0.81 | 0.84 | 0.81 | 0.69 | 0.73 | 0.80 | 0.83 |
| Z39 | S3 | r5 | 0.71 | 0.67 | 0.68 | 0.72 | 0.74 | 0.78 | 0.70 | 0.75 | 0.81 | 0.85 | 0.81 | 0.70 | 0.73 | 0.79 | 0.83 |
| Z58 | S1 | | 0.82 | 0.72 | 0.77 | 0.77 | 0.74 | 0.82 | 0.72 | 0.77 | 0.77 | 0.74 | 0.82 | 0.72 | 0.77 | 0.77 | 0.74 |
| Z58 | S2 | r1 | 0.85 | 0.72 | 0.76 | 0.76 | 0.73 | 0.79 | 0.72 | 0.77 | 0.77 | 0.73 | 0.85 | 0.71 | 0.76 | 0.76 | 0.73 |
| Z58 | S2 | r2 | 0.89 | 0.71 | 0.76 | 0.76 | 0.73 | 0.79 | 0.72 | 0.77 | 0.77 | 0.73 | 0.89 | 0.71 | 0.76 | 0.76 | 0.73 |
| Z58 | S2 | r3 | 0.93 | 0.71 | 0.76 | 0.76 | 0.73 | 0.80 | 0.72 | 0.77 | 0.77 | 0.74 | 0.91 | 0.71 | 0.76 | 0.76 | 0.73 |
| Z58 | S2 | r4 | 0.96 | 0.71 | 0.76 | 0.76 | 0.73 | 0.80 | 0.72 | 0.78 | 0.77 | 0.74 | 0.93 | 0.71 | 0.76 | 0.76 | 0.73 |
| Z58 | S2 | r5 | 0.98 | 0.71 | 0.76 | 0.76 | 0.74 | 0.82 | 0.72 | 0.78 | 0.77 | 0.75 | 0.93 | 0.72 | 0.76 | 0.76 | 0.73 |
| Z58 | S3 | r1 | 0.85 | 0.72 | 0.77 | 0.77 | 0.74 | 0.80 | 0.73 | 0.78 | 0.78 | 0.74 | 0.86 | 0.72 | 0.77 | 0.76 | 0.72 |
| Z58 | S3 | r2 | 0.90 | 0.72 | 0.77 | 0.77 | 0.74 | 0.80 | 0.73 | 0.78 | 0.78 | 0.74 | 0.90 | 0.72 | 0.77 | 0.76 | 0.72 |
| Z58 | S3 | r3 | 0.94 | 0.72 | 0.77 | 0.76 | 0.73 | 0.79 | 0.72 | 0.77 | 0.78 | 0.74 | 0.92 | 0.72 | 0.77 | 0.76 | 0.73 |
| Z58 | S3 | r4 | 0.93 | 0.72 | 0.77 | 0.75 | 0.73 | 0.78 | 0.71 | 0.76 | 0.77 | 0.74 | 0.93 | 0.72 | 0.77 | 0.76 | 0.73 |
| Z58 | S3 | r5 | 0.92 | 0.72 | 0.76 | 0.75 | 0.73 | 0.78 | 0.71 | 0.76 | 0.76 | 0.74 | 0.93 | 0.72 | 0.77 | 0.77 | 0.73 |

At Z58, results were strongly influenced by canopy closure. Hence, neither strategy S2 nor strategy S3 was able to significantly improve the corn grain yield prediction performance of strategy S1. Moreover, the highest resolution strategies showed patterns similar to those at Z39. Except when using VIg, imagery at a ground resolution of 0.02 m·px−1 seemed to be subject to CSM bias and misclassification, as at Z39. All other resolutions performed comparatively well, independent of the applied strategy and VI. Although these resolutions did not reach the maximum R2 values of Z39, they were still considered suitable for prediction.

Figure 6.

Spatial illustration of plot-wise distribution of harvested corn grain yield (top), corn grain yield predicted by strategy S3 at crop growth stage Z39, with ExG at Ridler threshold r4 and an aerial image ground resolution of 0.04 m·px−1 (middle) and the resulting prediction error of this strategy (bottom). For this strategy, the total root mean squared error of prediction (RMSEP) equals 0.68 t·ha−1 (8.8%).

Table 7 summarizes the key findings. The most suitable resolution and modeling strategy depend on the crop growth stage. Due to the row-based cultivation of corn and missing canopy closure, early growth stages require very high resolution imagery for accurate CSM computation and classification-based separation of crop and soil. Therefore, strategies S2 and S3 result in higher R2 values than strategy S1 (R2 ≤ 0.56). With ongoing crop development and beginning canopy closure, high resolution imagery and crop/soil classification become less and less important. The highest resolution imagery showed a significant reduction of prediction accuracy at mid-season growth stages. All other imagery resolutions performed almost equally well (approximately 0.60 ≤ R2 ≤ 0.70) for all strategies S1–3 within these stages. The best prediction results were achieved by applying strategy S2 and especially strategy S3 at Z39 (R2 ≤ 0.74). Although strategy S3 performed well at this specific growth stage, the influence of the crop coverage factor Cirstv on the prediction results of this multiple linear regression strategy warrants further investigation.

Table 7.

Overview of the best performing parameters for early- to mid-season corn grain yield prediction at different crop growth stages. So far, the increase in prediction performance in strategy S3 appears to be driven by an unknown factor. Therefore, strategy S3 is listed in brackets.

| | Growth Stage Z32 | Growth Stage Z39 | Growth Stage Z58 |
|---|---|---|---|
| Ground Resolution | highest/high | high/intermediate | high/intermediate/low |
| Vegetation Index | ExG | ExG | VIg |
| Prediction Strategy | S2 / (S3) | S2 / (S3) | S1 / S2 / (S3) |

These findings indicate that corn grain yield is best predicted at the mid-season crop growth stages Z39 and Z58, which is in accordance with the findings of Yin et al. [37]. Nevertheless, none of the strategies showed results comparable to the best predictions of Yin et al. [37]. Depending on the growth stage and crop rotation system, Yin et al. [37] reported significant determination coefficients of 0.25 ≤ R2 ≤ 0.89, with low R2 values occurring at early-season growth stages only.

Figure 6 visualizes the plot-wise prediction results of strategy S3 at Z39 and compares them to the harvested corn grain yield. Using ExG at Ridler threshold r4 and an aerial image ground resolution of 0.04 m·px−1, the total RMSEP equals 0.68 t·ha−1 (8.8%). Although this strategy performed best, the ANOVA of the field trial’s input factors did not show a significant influence of sowing density on corn grain yield. As strategy S3 utilizes the computed crop coverage Cirstv as the estimator for sowing/stand density, the increase in prediction performance seems to be driven by another factor that is correlated with Cirstv. Other combinations of strategy S3 and VIg/PPRb did not show improved results compared to strategy S2.