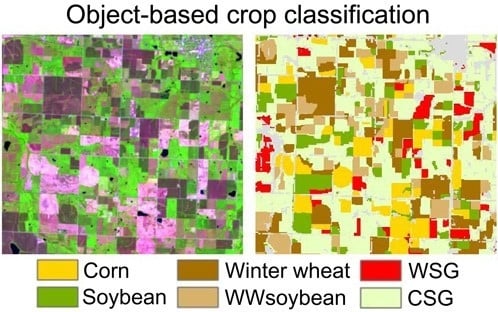

Object-Based Crop Classification with Landsat-MODIS Enhanced Time-Series Data

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area and Data Set

| Number | Date | DOY | Number | Date | DOY |
|---|---|---|---|---|---|
| 1 | 26 February 2006 | 057 | 14 | 7 July 2007 | 188 |
| 2 | 6 March 2007 | 065 | 15 | 20 July 2007M | 201 |
| 3 | 14 March 2006 | 073 | 16 | 5 August 2006 | 217 |
| 4 | 2 April 2007 | 092 | 17 | 8 August 2007 | 220 |
| 5 | 7 April 2007M | 097 | 18 | 21 August 2008 | 234 |
| 6 | 18 April 2007 | 108 | 19 | 6 September 2006 | 249 |
| 7 | 23 April 2007M | 113 | 20 | 14 September 2007M | 257 |
| 8 | 9 May 2007M | 129 | 21 | 22 September 2007M | 265 |
| 9 | 20 May 2007 | 140 | 22 | 29 September 2008 | 273 |
| 10 | 25 May 2007M | 145 | 23 | 8 October 2006 | 281 |
| 11 | 2 June 2006 | 153 | 24 | 24 October 2006 | 297 |
| 12 | 10 June 2007M | 161 | 25 | 30 October 2008 | 304 |
| 13 | 21 June 2007 | 172 | 26 | 9 November 2006 | 313 |
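The DOY values in the table follow directly from the calendar dates, including the leap year 2008. The sketch below is illustrative only and is not part of the original study: the helper name `day_of_year` is hypothetical, it assumes that the trailing "M" on some dates is a marker carried by the table rather than part of the date, and it assumes an English locale for month names.

```python
from datetime import datetime


def day_of_year(date_text: str) -> int:
    """Return the 1-based day of year for a date such as '26 February 2006'."""
    # Assumption: a trailing 'M' is a table marker, not part of the date itself.
    cleaned = date_text.strip().rstrip("M").strip()
    # '%B' parses the full month name (English locale assumed).
    return datetime.strptime(cleaned, "%d %B %Y").timetuple().tm_yday


if __name__ == "__main__":
    samples = ["26 February 2006", "7 April 2007M", "21 August 2008", "9 November 2006"]
    for text in samples:
        print(f"{text}: DOY {day_of_year(text):03d}")
```

Running the helper over every date in the table is a quick way to catch transcription errors in either column.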

| Crop Type | Training | Validation |
|---|---|---|
| Corn | 155 | 83 |
| Soybean | 131 | 70 |
| Winter wheat | 134 | 72 |
| WWSoybean | 121 | 64 |
| WSG | 126 | 66 |
| CSG | 155 | 83 |
| Total | 822 | 438 |

2.2. Feature Analysis and Selection

2.3. OBIA Segmentation and Quality Assessment

2.4. Decision Tree Classification

3. Results

3.1. Feature Analysis and Selection

| Feature | Tolerance | F Value | Wilks' Lambda |
|---|---|---|---|
| NDVI TSI 4 | 0.234 | 23.636 | 0.005 |
| NDVI 01 | 0.873 | 23.108 | 0.005 |
| NDVI 08 | 0.516 | 18.644 | 0.004 |
| NDVI 06 | 0.072 | 17.219 | 0.004 |
| NDVI 20 | 0.040 | 10.918 | 0.004 |
| NDVI 26 | 0.607 | 10.828 | 0.004 |
| NDVI TSI 3 | 0.291 | 9.992 | 0.004 |
| NDVI TSI 5 | 0.036 | 9.757 | 0.004 |
| NDVI 14 | 0.212 | 6.904 | 0.004 |
| NDVI 05 | 0.064 | 5.557 | 0.004 |
| NDVI 09 | 0.077 | 3.831 | 0.004 |
| NDVI 19 | 0.100 | 3.754 | 0.004 |
| NDVI 13 | 0.038 | 3.428 | 0.004 |
| NDVI 12 | 0.141 | 3.248 | 0.004 |
| NDVI 24 | 0.219 | 3.248 | 0.004 |
| NDVI 17 | 0.308 | 2.687 | 0.004 |
| NDVI 25 | 0.082 | 2.649 | 0.004 |
| NDVI 07 | 0.042 | 2.609 | 0.004 |
| NDVI 22 | 0.258 | 2.607 | 0.004 |
| NDVI 11 | 0.234 | 2.215 | 0.004 |
| NDVI 16 | 0.322 | 2.164 | 0.004 |
| NDVI 10 | 0.206 | 2.140 | 0.004 |
| NDVI TSI 2 | 0.118 | 2.033 | 0.004 |
| NDVI 21 | 0.114 | 1.919 | 0.004 |
| NDVI 23 | 0.307 | 1.698 | 0.004 |
| NDVI 15 | 0.109 | 1.243 | 0.004 |
| NDVI TSI 1 | 0.019 | 1.111 | 0.004 |
| NDVI 02 | 0.038 | 1.111 | 0.004 |
| NDVI 03 | 0.125 | 0.964 | 0.004 |
| NDVI 18 | 0.181 | 0.822 | 0.004 |
| NDVI 04 | 0.122 | 0.627 | 0.004 |

3.2. Image Segmentation and Quality Assessment

3.3. Crop Classification Using Object-Based Metrics

| Class (rows: classified; columns: reference) | Corn | Soybean | WW | WWsoy | WSG | CSG | UA (%) |
|---|---|---|---|---|---|---|---|
| Corn | 70 | 8 | 0 | 0 | 1 | 0 | 88.61 |
| Soybean | 9 | 59 | 0 | 0 | 2 | 0 | 84.29 |
| WW | 0 | 0 | 68 | 7 | 0 | 0 | 90.67 |
| WWsoy | 0 | 0 | 2 | 56 | 0 | 0 | 96.55 |
| WSG | 4 | 2 | 0 | 0 | 63 | 1 | 90.00 |
| CSG | 0 | 1 | 2 | 1 | 0 | 82 | 95.35 |
| PA (%) | 84.34 | 84.29 | 94.44 | 87.50 | 95.45 | 98.80 | |
| OA (%) | 90.87 | | | | | | |
| Kappa (×100) | 89.02 | | | | | | |
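The user's accuracy (UA), producer's accuracy (PA), overall accuracy (OA) and Kappa values in the confusion matrix can be recomputed from the cell counts. The following is a minimal sketch using the standard definitions, not the authors' own code; the function name `accuracy_metrics` is hypothetical, and the row and column orientation (rows as classified classes, columns as reference classes) is inferred from the fact that the UA values match the row totals.

```python
# Rows are classified classes, columns are reference classes, in the order
# Corn, Soybean, WW, WWsoy, WSG, CSG (counts copied from the confusion matrix).
CONFUSION = [
    [70, 8, 0, 0, 1, 0],
    [9, 59, 0, 0, 2, 0],
    [0, 0, 68, 7, 0, 0],
    [0, 0, 2, 56, 0, 0],
    [4, 2, 0, 0, 63, 1],
    [0, 1, 2, 1, 0, 82],
]


def accuracy_metrics(cm):
    """Return (UA list, PA list, OA, kappa) as fractions for a square matrix."""
    n = sum(sum(row) for row in cm)
    row_totals = [sum(row) for row in cm]          # objects assigned to each class
    col_totals = [sum(col) for col in zip(*cm)]    # reference objects per class
    diagonal = [cm[i][i] for i in range(len(cm))]  # correctly classified objects

    ua = [d / r for d, r in zip(diagonal, row_totals)]
    pa = [d / c for d, c in zip(diagonal, col_totals)]
    oa = sum(diagonal) / n
    # Chance agreement expected from the marginal totals.
    pe = sum(r * c for r, c in zip(row_totals, col_totals)) / n ** 2
    kappa = (oa - pe) / (1 - pe)
    return ua, pa, oa, kappa


if __name__ == "__main__":
    ua, pa, oa, kappa = accuracy_metrics(CONFUSION)
    print("UA (%):", [round(100 * v, 2) for v in ua])
    print("PA (%):", [round(100 * v, 2) for v in pa])
    print("OA (%):", round(100 * oa, 2))
    print("Kappa (x100):", round(100 * kappa, 2))
```

The printed values should agree with the tabulated ones up to rounding, which also confirms that the 438 validation objects are all accounted for in the matrix.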

4. Discussion

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Li, Q.; Wang, C.; Zhang, B.; Lu, L. Object-Based Crop Classification with Landsat-MODIS Enhanced Time-Series Data. Remote Sens. 2015, 7, 16091-16107. https://doi.org/10.3390/rs71215820
