4.1. Comparison between the Proposed Method and the Wishart Supervised Classification

Classification results of the proposed method with the L-band image are shown in Figure 4a. The study area is a highly urbanized city (San Francisco, CA, USA). Urban structures, including buildings in different orientations and roads, are identified reasonably well. Green cover in urban land (e.g., parks) is clearly delineated. Ocean surfaces also show clear tonal differences from far range to near range.

Figure 4.

Classification results of proposed method and Wishart supervised method on L-band data; (a) proposed method; (b) Wishart supervised method.

As a comparison, the commonly applied Wishart supervised classification [11] was also performed with the L-band image. The Wishart supervised classification (Figure 4b) is greener than that of the proposed method, revealing an apparent overestimation of green cover. Correspondingly, urban structures are severely underestimated. The near ocean is misclassified as bare land (pink area in the upper right), while the far ocean is confused with lake and near ocean on the left and with grass near the bridge in the upper left corner. Of the two results in Figure 4a,b, the proposed method yields an overall distribution of land surfaces that is closer to the original image.

Using the validation points in Table 1, the accuracies of the two classifications in Figure 4 are also compared with a confusion matrix approach (Table 4 and Table 5).

Table 4.

Confusion Matrix of the Proposed Method (L-band).

| Classified Data (rows) / Reference Data (columns) | BL | OB | NB | FO | DF | TS | LK | MO | NO | GS | RD | SD | UA (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| BL | 758 | 0 | 0 | 0 | 0 | 0 | 4 | 0 | 0 | 64 | 4 | 1 | 91.22 |
| OB | 0 | 1290 | 11 | 0 | 0 | 9 | 0 | 0 | 0 | 0 | 3 | 0 | 98.25 |
| NB | 0 | 10 | 1408 | 0 | 15 | 225 | 0 | 0 | 0 | 0 | 52 | 0 | 82.34 |
| FO | 0 | 0 | 0 | 2150 | 0 | 0 | 5 | 71 | 0 | 11 | 0 | 0 | 96.11 |
| DF | 0 | 0 | 19 | 0 | 838 | 47 | 0 | 0 | 0 | 3 | 13 | 5 | 90.59 |
| TS | 0 | 2 | 124 | 0 | 22 | 785 | 0 | 0 | 0 | 0 | 33 | 0 | 81.26 |
| LK | 1 | 0 | 0 | 4 | 0 | 0 | 209 | 10 | 0 | 3 | 1 | 0 | 91.67 |
| MO | 0 | 0 | 0 | 50 | 0 | 0 | 41 | 1867 | 1 | 0 | 0 | 0 | 95.30 |
| NO | 5 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2105 | 0 | 0 | 0 | 99.76 |
| GS | 90 | 0 | 0 | 0 | 1 | 0 | 9 | 0 | 0 | 1340 | 99 | 24 | 85.73 |
| RD | 35 | 0 | 22 | 0 | 8 | 62 | 0 | 0 | 0 | 119 | 1343 | 38 | 82.54 |
| SD | 4 | 0 | 0 | 0 | 0 | 0 | 3 | 0 | 0 | 21 | 16 | 564 | 92.76 |
| PA (%) | 84.88 | 99.08 | 88.89 | 97.55 | 94.80 | 69.59 | 77.12 | 95.84 | 99.95 | 85.84 | 85.87 | 89.24 | - |

OA (%): 91.17; kappa: 0.90.

Table 5.

Confusion Matrix of the Wishart Supervised Classification (L-band).

| Classified Data (rows) / Reference Data (columns) | BL | OB | NB | FO | DF | TS | LK | MO | NO | GS | RD | SD | UA (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| BL | 11 | 0 | 0 | 0 | 0 | 0 | 0 | 14 | 810 | 12 | 6 | 0 | 1.29 |
| OB | 0 | 720 | 39 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 94.74 |
| NB | 0 | 552 | 664 | 0 | 0 | 365 | 0 | 0 | 0 | 0 | 2 | 0 | 41.95 |
| FO | 0 | 0 | 0 | 1620 | 0 | 0 | 23 | 944 | 0 | 9 | 0 | 0 | 62.40 |
| DF | 1 | 4 | 215 | 0 | 554 | 203 | 0 | 0 | 0 | 1 | 180 | 0 | 47.84 |
| TS | 0 | 26 | 660 | 0 | 253 | 509 | 0 | 0 | 0 | 0 | 17 | 0 | 34.74 |
| LK | 0 | 0 | 0 | 537 | 0 | 0 | 181 | 982 | 2 | 17 | 0 | 0 | 10.53 |
| MO | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | N/A |
| NO | 85 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1294 | 0 | 0 | 0 | 93.84 |
| GS | 395 | 0 | 0 | 47 | 0 | 1 | 38 | 8 | 0 | 648 | 402 | 2 | 42.05 |
| RD | 243 | 0 | 6 | 0 | 77 | 48 | 0 | 0 | 0 | 293 | 579 | 71 | 43.96 |
| SD | 158 | 0 | 0 | 0 | 0 | 1 | 29 | 0 | 0 | 581 | 378 | 559 | 32.77 |
| PA (%) | 1.23 | 55.30 | 41.92 | 73.50 | 62.67 | 45.12 | 66.79 | 0.00 | 61.44 | 41.51 | 37.02 | 88.45 | - |

OA (%): 45.65; kappa: 0.41.

The overall accuracy (OA) of the proposed method was 91.17%, much higher than that of the Wishart supervised classification (45.65%). The kappa value of the proposed method was 0.90, also much higher than the 0.41 of the Wishart supervised classification. Furthermore, the producer’s (PA) and user’s (UA) accuracies of the proposed method were higher than those of the Wishart supervised classification for all classes. As an example, the UA and PA of bare land (BL) obtained by the Wishart supervised classifier were 1.29% and 1.23%, respectively. As indicated by the confusion matrix, bare land was frequently confused with near ocean, grass and road. The proposed method greatly alleviated this confusion, improving the UA and PA to 91.22% and 84.88%, respectively. For non-orthogonal buildings (NB), the Wishart supervised classifier heavily confused the class with dense forest (DF) and trees (TS), yielding a UA and PA of 41.95% and 41.92%, respectively. The proposed method largely remedied the confusion and increased the UA and PA to 82.34% and 88.89%. Similar results were obtained for classifications with C- and P-band data. The results indicate a substantial improvement in the classification of urban land with the proposed method.
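The accuracy figures quoted above follow from the confusion matrix by the standard definitions: OA is the diagonal sum over the total, UA divides each diagonal entry by its row sum, PA divides it by its column sum, and kappa corrects OA for chance agreement. The sketch below recomputes them for Table 4. The function name `accuracy_metrics` and the list layout are illustrative choices, not part of the original processing chain.

```python
# Confusion matrix of Table 4 (rows: classified data, columns: reference data).
CLASSES = ["BL", "OB", "NB", "FO", "DF", "TS", "LK", "MO", "NO", "GS", "RD", "SD"]
TABLE_4 = [
    [758, 0, 0, 0, 0, 0, 4, 0, 0, 64, 4, 1],
    [0, 1290, 11, 0, 0, 9, 0, 0, 0, 0, 3, 0],
    [0, 10, 1408, 0, 15, 225, 0, 0, 0, 0, 52, 0],
    [0, 0, 0, 2150, 0, 0, 5, 71, 0, 11, 0, 0],
    [0, 0, 19, 0, 838, 47, 0, 0, 0, 3, 13, 5],
    [0, 2, 124, 0, 22, 785, 0, 0, 0, 0, 33, 0],
    [1, 0, 0, 4, 0, 0, 209, 10, 0, 3, 1, 0],
    [0, 0, 0, 50, 0, 0, 41, 1867, 1, 0, 0, 0],
    [5, 0, 0, 0, 0, 0, 0, 0, 2105, 0, 0, 0],
    [90, 0, 0, 0, 1, 0, 9, 0, 0, 1340, 99, 24],
    [35, 0, 22, 0, 8, 62, 0, 0, 0, 119, 1343, 38],
    [4, 0, 0, 0, 0, 0, 3, 0, 0, 21, 16, 564],
]


def accuracy_metrics(matrix):
    """Return (OA, kappa, UA list, PA list) as fractions in [0, 1]."""
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    row_sums = [sum(row) for row in matrix]
    col_sums = [sum(matrix[i][j] for i in range(n)) for j in range(n)]
    diag = [matrix[i][i] for i in range(n)]

    oa = sum(diag) / total
    # Expected chance agreement from the row and column marginals.
    pe = sum(r * c for r, c in zip(row_sums, col_sums)) / total ** 2
    kappa = (oa - pe) / (1 - pe)

    # An empty row or column has no defined accuracy (compare the N/A in Table 5).
    ua = [d / r if r else None for d, r in zip(diag, row_sums)]
    pa = [d / c if c else None for d, c in zip(diag, col_sums)]
    return oa, kappa, ua, pa


oa, kappa, ua, pa = accuracy_metrics(TABLE_4)
print(f"OA = {oa * 100:.2f}%, kappa = {kappa:.2f}")
for name, u, p in zip(CLASSES, ua, pa):
    print(f"{name}: UA = {u * 100:.2f}%, PA = {p * 100:.2f}%")
```

Applying the same function to the matrix of Table 5 should give the Wishart figures in the same way; the `None` branch covers the MO row, which contains no classified pixels.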

4.2. Contribution of Polarimetric and TF Features

The contribution of each feature type was assessed by running the C5.0 decision tree classification with one type of feature (polarimetric or TF) at a time. The resulting overall accuracies and kappa values are compared with those of the all-feature classification proposed in this study (Table 6).

Classification with full features reached the highest accuracies. With polarimetric features alone (POL-only), the overall accuracy for each band was about 3 to 6 percentage points lower than that of the full-feature classification, and the kappa coefficients also decreased. With TF information alone (TF-only), the overall accuracies were reduced far more, by approximately 14 percentage points in the C-band, 13 in the L-band and 17 in the P-band, and the kappa coefficients also decreased significantly. Therefore, polarimetric features contributed more to POLSAR image classification than TF features.

Table 6.

Accuracies for classification with full features (proposed), polarimetric features (POL-only) and TF features (TF-only) of the three images.

| Features | C-Band OA (%) | C-Band Kappa | L-Band OA (%) | L-Band Kappa | P-Band OA (%) | P-Band Kappa |
|---|---|---|---|---|---|---|
| Proposed | 90.45 | 0.89 | 91.17 | 0.90 | 84.91 | 0.83 |
| POL-only | 84.74 | 0.83 | 88.29 | 0.87 | 80.85 | 0.79 |
| TF-only | 76.49 | 0.74 | 78.30 | 0.76 | 67.64 | 0.64 |
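The accuracy losses discussed for Table 6 are simple differences in percentage points between the full-feature OA and the single-type OA of the same band. A minimal sketch of that arithmetic follows; the dictionary layout and the helper name `oa_drop` are illustrative only.

```python
# Overall accuracies (%) from Table 6, keyed by band.
TABLE_6 = {
    "C": {"Proposed": 90.45, "POL-only": 84.74, "TF-only": 76.49},
    "L": {"Proposed": 91.17, "POL-only": 88.29, "TF-only": 78.30},
    "P": {"Proposed": 84.91, "POL-only": 80.85, "TF-only": 67.64},
}


def oa_drop(table, variant):
    """OA loss, in percentage points, of `variant` relative to the full feature set."""
    return {band: round(row["Proposed"] - row[variant], 2) for band, row in table.items()}


print(oa_drop(TABLE_6, "POL-only"))  # {'C': 5.71, 'L': 2.88, 'P': 4.06}
print(oa_drop(TABLE_6, "TF-only"))   # {'C': 13.96, 'L': 12.87, 'P': 17.27}
```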

To investigate the contribution of TF and polarimetric features to the accuracies of different classes, their producer’s (PA) and user’s (UA) accuracies with the L-band image are listed in Table 7.

In comparison with our classification using full features, the PAs and UAs of most ground objects decreased when POL- or TF-only information was used. This indicates that both TF and polarimetric information are important in the proposed method. The POL-only method reduced both the PA and UA of DF (dense forest), TS (trees) and LK (lake) by more than 5 percentage points, indicating that TF information is required for accurately classifying these ground objects. The TF-only method also considerably decreased the PA and UA of ground objects. The decline in both PA and UA of bare land and lake exceeded 20 percentage points. Therefore, polarimetric information is important for accurately classifying bare land, lake and middle ocean areas.

Table 7.

PA and UA of POL-only and TF-only method on L-band.

| Class | PA (%) Proposed | PA (%) POL-Only | PA (%) TF-Only | UA (%) Proposed | UA (%) POL-Only | UA (%) TF-Only |
|---|---|---|---|---|---|---|
| bare land (BL) | 84.88 | 85.89 | 48.49 | 91.22 | 89.60 | 68.62 |
| orthogonal building (OB) | 99.08 | 99.08 | 94.39 | 98.25 | 97.95 | 90.30 |
| non-orthogonal building (NB) | 88.89 | 84.91 | 76.39 | 82.34 | 77.03 | 72.80 |
| far ocean (FO) | 97.55 | 95.55 | 89.07 | 96.11 | 93.89 | 83.32 |
| dense forest (DF) | 94.80 | 87.22 | 91.29 | 90.59 | 77.41 | 79.82 |
| trees (TS) | 69.59 | 57.54 | 59.49 | 81.26 | 73.09 | 71.23 |
| lake (LK) | 77.12 | 59.04 | 54.61 | 91.67 | 84.21 | 66.37 |
| middle ocean (MO) | 95.84 | 95.23 | 77.41 | 95.30 | 93.78 | 79.45 |
| near ocean (NO) | 99.95 | 99.95 | 99.53 | 99.76 | 99.91 | 96.90 |
| grass (GS) | 85.84 | 85.14 | 67.01 | 85.73 | 84.17 | 60.05 |
| road (RD) | 85.87 | 80.95 | 64.58 | 82.54 | 79.97 | 66.71 |
| shadow (SD) | 89.24 | 87.18 | 74.05 | 92.76 | 92.76 | 81.53 |
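The class-level statements about Table 7 can be checked by listing the classes whose PA and UA both fall by more than a chosen number of percentage points when one feature type is removed. The sketch below does this for the L-band values; the tuple layout and the helper name `classes_with_drop` are assumptions made for illustration.

```python
# Table 7, L-band. Each tuple is
# (PA proposed, PA POL-only, PA TF-only, UA proposed, UA POL-only, UA TF-only).
TABLE_7 = {
    "BL": (84.88, 85.89, 48.49, 91.22, 89.60, 68.62),
    "OB": (99.08, 99.08, 94.39, 98.25, 97.95, 90.30),
    "NB": (88.89, 84.91, 76.39, 82.34, 77.03, 72.80),
    "FO": (97.55, 95.55, 89.07, 96.11, 93.89, 83.32),
    "DF": (94.80, 87.22, 91.29, 90.59, 77.41, 79.82),
    "TS": (69.59, 57.54, 59.49, 81.26, 73.09, 71.23),
    "LK": (77.12, 59.04, 54.61, 91.67, 84.21, 66.37),
    "MO": (95.84, 95.23, 77.41, 95.30, 93.78, 79.45),
    "NO": (99.95, 99.95, 99.53, 99.76, 99.91, 96.90),
    "GS": (85.84, 85.14, 67.01, 85.73, 84.17, 60.05),
    "RD": (85.87, 80.95, 64.58, 82.54, 79.97, 66.71),
    "SD": (89.24, 87.18, 74.05, 92.76, 92.76, 81.53),
}


def classes_with_drop(table, variant, threshold):
    """Classes whose PA and UA both drop by more than `threshold` points."""
    offset = {"POL-only": 1, "TF-only": 2}[variant]
    hits = []
    for name, row in table.items():
        pa_drop = row[0] - row[offset]
        ua_drop = row[3] - row[3 + offset]
        if pa_drop > threshold and ua_drop > threshold:
            hits.append(name)
    return hits


print(classes_with_drop(TABLE_7, "POL-only", 5))  # ['DF', 'TS', 'LK']
print(classes_with_drop(TABLE_7, "TF-only", 20))  # ['BL', 'LK']
```

Requiring both PA and UA to exceed the threshold is a deliberately strict reading; relaxing it to either measure would add further classes such as grass and road for the TF-only case.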

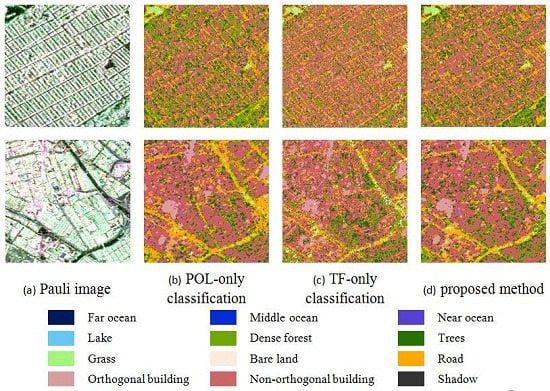

Figure 5 shows the results of POL-only and TF-only classifications on L-band data. In the absence of TF information (Figure 5a), more misclassifications were observed than in the proposed full-feature classification in Figure 4a. For example, near the bridge in the upper left corner, the far ocean was misclassified as bare land. In the absence of polarimetric information (Figure 5b), some green areas in urban land were misclassified as buildings. Two subsets of the image (marked by the red and blue squares in Figure 5) were selected to show the effects of polarimetric and TF information in more detail. In these subsets, the original image and the three classification results are visually compared (Figure 6).

Figure 5.

Classification results of POL-only and TF-only on L-band data. (a) POL-only; (b) TF-only.

Figure 6.

Comparison of classification results in the two subsets marked in Figure 5a. (a–d) show the red-squared subset: (a) Pauli image; (b) POL-only classification; (c) TF-only classification; (d) proposed method. (e–h) show the blue-squared subset: (e) Pauli image; (f) POL-only classification; (g) TF-only classification; (h) proposed method.

As displayed in Figure 6a, the red-squared subset is a typical dense residential area with regularly oriented, densely packed buildings. Compared with the full-feature classification (Figure 6d), removing TF information (Figure 6b) resulted in buildings being misclassified as dense forest. The importance of TF information in separating dense forest from non-orthogonal buildings has also been reported in previous studies [42]. According to Google Earth, the blue-squared subset is a newly developed commercial and light industrial area. It has a mixed cover of buildings, parking lots and open spaces with dense road networks (e.g., highways) (Figure 6e). For road classification, the TF-only classification results in coarse clusters (Figure 6g), while the POL-only classification (Figure 6f) is noisy. It is the combination of TF and polarimetric features that produces a reasonable classification result in Figure 6h. This observation is consistent with the road classification accuracies in Table 7.

4.3. Contribution of C5.0 Decision Tree Algorithm

To evaluate the contribution of the C5.0 decision tree algorithm in the proposed method, the algorithm was replaced by various alternative classifiers [19] in L-band: the QUEST decision tree, a neural network (NN), and SVMs with different kernel functions, namely radial basis function (SVM-RBF) and polynomial (SVM-POLY) [19]. The OA and kappa values of the classification results are listed in Table 8.

From the table, the highest accuracies and kappa coefficients in each band were obtained by the proposed method. This indicates that the C5.0 decision tree classifier adopted in the proposed method is more effective than the other tested classifiers. Moreover, the Wishart supervised classifier yielded the lowest classification accuracy, while the classifier with multiple features achieved a relatively high accuracy, revealing that accurate classification requires the integration of multiple features. Finally, regardless of classifier, P-band data were classified with the lowest accuracy. This behavior may be caused by the long wavelength of the P-band. Ground features in most urban areas are difficult to distinguish due to the complex scattering mechanisms of signals at longer wavelengths.

Table 8.

Classification Accuracy of Different Classifiers.

| Classifier | OA (%) | Kappa |
|---|---|---|
| Proposed | 91.17 | 0.90 |
| QUEST | 71.85 | 0.69 |
| NN | 86.00 | 0.84 |
| SVM-RBF | 88.81 | 0.88 |
| SVM-POLY | 88.41 | 0.87 |
| Wishart | 45.65 | 0.41 |

The QUEST decision tree is designed to reduce the processing time required for large decision tree analyses. Compared with QUEST, the rules of the C5.0 decision tree are more complex, but C5.0 allows a split into more than two subgroups and can split repeatedly. SVM is computationally expensive. A neural network has a strong nonlinear fitting ability, but it is difficult to extract clear classification rules from it. The C5.0 decision tree performs better at feature space optimization and feature selection, especially when the feature set is large [24].

4.4. Contribution of Multi-Frequency Dataset

Radar signals at different wavelengths exhibit different sensitivities to ground features [43,44]. Thus, combining multiple bands might be helpful for ground imaging. Here, POLSAR data of three frequencies are combined and input to the C5.0 decision tree. The results of this test are shown in Figure 7 and Table 9.
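One plausible way to combine the three frequencies before classification is to concatenate, pixel by pixel, the feature vectors extracted from each band, so that the classifier sees a single longer vector per pixel. The text does not describe the exact mechanism used, so the sketch below is an assumption; the function name `stack_bands` and the toy feature values are hypothetical.

```python
def stack_bands(*band_features):
    """Concatenate per-pixel feature vectors from several co-registered bands.

    Each argument is a list with one feature vector per pixel; all bands
    must describe the same pixels in the same order.
    """
    n_pixels = len(band_features[0])
    if any(len(band) != n_pixels for band in band_features):
        raise ValueError("all bands must cover the same pixels")
    stacked = []
    for i in range(n_pixels):
        row = []
        for band in band_features:
            row.extend(band[i])
        stacked.append(row)
    return stacked


# Toy example: two pixels, with 2 C-band, 2 L-band and 1 P-band feature each.
c_band = [[0.61, 0.12], [0.35, 0.40]]
l_band = [[0.72, 0.08], [0.22, 0.51]]
p_band = [[0.90], [0.15]]
print(stack_bands(c_band, l_band, p_band))
# [[0.61, 0.12, 0.72, 0.08, 0.9], [0.35, 0.4, 0.22, 0.51, 0.15]]
```

Stacking presumes that the three images are co-registered to a common pixel grid; any band subset (for example C+L) is obtained by passing only those bands.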

Figure 7.

Classification results of adding C- and P-band data to L-band data.

Compared with the other results, simultaneous use of C-, L- and P-band data further reduces the number of confused pixels between classes. For example, misclassification is diminished near the bridge in Figure 7, and the distribution of vegetation and buildings is more comparable to the high-resolution image on Google Earth.

Table 9.

Accuracy of Multi-Frequency Dataset.

| Band Selection | OA (%) | Kappa |
|---|---|---|
| C | 90.45 | 0.89 |
| L | 91.17 | 0.90 |
| P | 84.91 | 0.83 |
| C+L | 95.56 | 0.95 |
| C+P | 94.78 | 0.94 |
| L+P | 94.89 | 0.94 |
| C+L+P | 96.39 | 0.96 |

In Table 9, combining any two bands markedly increased the accuracies compared to any single-frequency classification. Using all of the C-, L- and P-band data reached the highest OA (96.39%) and kappa coefficient (0.96). To show more clearly how single bands and band combinations affect the classification accuracy of different ground objects, the PA and UA of typical classes are provided in Figure 8.

Figure 8.

PA and UA histogram of Multi-Frequency Dataset.

In Figure 8a, the PA of trees in the C-band was higher than that in the L-band, while the PA of orthogonal buildings in the C-band was lower. Comparing the scattering mechanisms at different frequencies, the C-band return comes primarily from volume scattering in the vegetation canopy, whereas L-band scattering is stronger for the ground as well as for double bounce in urban areas. The L-band classification performs better in distinguishing among forest, trees, and buildings. At higher frequencies, POLSAR data are less sensitive to azimuth slope variations because short-wavelength electromagnetic waves are more sensitive to small scatterers and penetrate less. The P-band, which has the longest wavelength of the three, lacks this advantage, which may explain the poorest performance of the P-band classification.

Classification with multi-frequency datasets performed better than with single bands. For instance, with the combination of C- and L-band datasets, the PA of each class increased compared with that of a single band. The PA and UA of trees, grass, and non-orthogonal buildings were enhanced to a large degree. Because waves at different wavelengths are sensitive to different scatterers, combining datasets from different bands exploits this complementarity and improves classification accuracy. Overall, the C- and L-band POLSAR data are more suitable than P-band data for single-band classification, and multi-band classification performs much better than any single-band classification.

4.5. Stable Features in POLSAR Image Classification

When all POLSAR features are included, the proposed method reaches high classification accuracy. In practice, however, it is time consuming and inefficient to extract such a large set of features from POLSAR imagery. With a reduced set of features, the complexity of the C5.0 decision tree can be effectively decreased and its applicability improved. To test the feasibility of feature reduction, all features (100%) involved in the proposed method were sorted by their predictor importance (calculated by the C5.0 decision tree algorithm). The top-ranking 50%, 40%, 30%, 20% and 10% of features were then selected and classified with the C5.0 approach. The accuracies are compared in Table 10.

Table 10.

Overall Accuracies of classification with reduced features.

| Features used | C-Band | L-Band | P-Band |
|---|---|---|---|
| 100% | 90.45% | 91.17% | 84.91% |
| 50% | 90.10% | 91.00% | 84.42% |
| 40% | 89.79% | 90.66% | 84.95% |
| 30% | 89.39% | 90.17% | 84.72% |
| 20% | 85.59% | 88.55% | 84.78% |
| 10% | 79.64% | 85.65% | 81.87% |

For the images at all three frequencies, the overall accuracies were similar when using 100%, the top 50%, 40%, and 30% of features. Accuracies changed slightly when the features used in classification dropped to 20%. When only 10% of features were used, however, there was a relatively large decrease in accuracy. Therefore, the top-ranking 20% of features are a reasonable set of input features for classification.
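The reduction procedure amounts to ranking features by importance, keeping a top fraction, and checking how much accuracy is lost relative to the full set. The sketch below illustrates both steps. The importance scores passed to `top_fraction` are hypothetical placeholders (the real scores come from the C5.0 software), while the accuracies are those of Table 10; both helper names are illustrative.

```python
import math


def top_fraction(importance, fraction):
    """Names of the top `fraction` of features, ranked by importance score."""
    ranked = sorted(importance, key=importance.get, reverse=True)
    keep = max(1, math.ceil(len(ranked) * fraction))
    return ranked[:keep]


# Overall accuracies (%) from Table 10: fraction of features -> (C, L, P).
TABLE_10 = {
    1.0: (90.45, 91.17, 84.91),
    0.5: (90.10, 91.00, 84.42),
    0.4: (89.79, 90.66, 84.95),
    0.3: (89.39, 90.17, 84.72),
    0.2: (85.59, 88.55, 84.78),
    0.1: (79.64, 85.65, 81.87),
}


def max_drop(fraction):
    """Largest OA loss over the three bands, in percentage points."""
    full = TABLE_10[1.0]
    return max(f - r for f, r in zip(full, TABLE_10[fraction]))


# Hypothetical importance scores, for illustration only.
scores = {"f1": 0.50, "f2": 0.30, "f3": 0.10, "f4": 0.06, "f5": 0.04}
print(top_fraction(scores, 0.4))  # ['f1', 'f2']

for fraction in (0.5, 0.4, 0.3, 0.2, 0.1):
    print(f"top {fraction:.0%}: worst-band loss {max_drop(fraction):.2f} points")
```

With the Table 10 values, the worst-band loss stays near 1 point down to 30% of the features, reaches 4.86 points at 20% (C-band) and 10.81 points at 10%.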

Table 11 lists the top 20% of features used in the proposed method for the C-, L- and P-bands in descending order of their predictor importance scores. For images at different frequencies, a different set of features was included in each rank. Four features were always selected: three polarimetric features, namely the entropy from the H/A/Alpha decomposition, the Shannon entropy, and the T11 coherency matrix element, which describes single scattering from a flat surface (or odd scattering); and one TF feature, the intensity of coherence of HH. These four features are highlighted in bold in Table 11.

Using these four features as inputs, the accuracies of the proposed method and the Wishart supervised classification method are compared in Table 12. For all frequencies, the overall accuracies of the proposed method were roughly 26 to 34 percentage points higher than those of the Wishart supervised method. For the C-band image, the accuracy was even higher than that obtained with the top 10% of features listed in Table 10. Interestingly, with only four features, classification of the C-band image reached the highest accuracy, while the L-band image had the best results when more features were used (as shown in Table 10). The P-band image had the lowest accuracies for most combinations of features, which could be related to noise introduced by the more complex interaction between longer-wavelength signals and heterogeneous urban surfaces.

Table 11.

Top 20% of features in the proposed method of C-, L- and P-band.

| C-Band | L-Band | P-Band |
|---|---|---|
| **Shannon Entropy** | VanZyl_Vol | **Shannon Entropy** |
| (TF) Δalpha | **(TF) intensity of coherence of HH** | **(TF) intensity of coherence of HH** |
| **(TF) intensity of coherence of HH** | **Shannon Entropy** | Neum2_Pha |
| **T11** | Yam3_Vol | **Entropy** |
| ConformityCoefficient | SERD | Krog_H |
| Krog_D | Freeman_Vol | (TF) Intensity interferogram of HH |
| Yam3_Vol | Depolarization index | DERD |
| PedestalHeight | Yam4_Vol | Holm2_T22 |
| (TF) Intensity interferogram of HH | Yam4_Dbl | Huynen_T22 |
| Yam3_Dbl | Cloude_T33 | Anisotropy |
| Yam3_Odd | Freeman2_Vol | **T11** |
| **Entropy** | Touzi_tau2 | Conformity Coefficient |
| Holm1_T22 | Freeman_Dbl | Barnes2_T11 |
| Huynen_T22 | Krog_S | Huynen_T33 |
| Anisotropy | **T11** | Touzi_tau1 |
| Cloude_T11 | Touzi_alpha1 | (TF) ΔYam4_Odd |
| Touzi_tau1 | **Entropy** | alpha3 |
| VanZyl_Vol | alpha2 | (TF) amplitude coherence of HV |
| (TF) Intensity interferogram of HV | VanZyl_Odd | Yam4_Hlx |
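The four stable features can be recovered from Table 11 as the intersection of the three per-band lists. The sketch below does this after normalizing spacing and letter case, since the same feature is spelled slightly differently across columns; the helper names `normalize` and `common_features` are illustrative.

```python
def normalize(name):
    """Lowercase and drop whitespace so spelling variants compare equal."""
    return "".join(name.split()).lower()


# Top 20% of features per band, in rank order, as listed in Table 11.
TOP_20 = {
    "C": ["Shannon Entropy", "(TF)Δalpha", "(TF)intensity of coherence of HH",
          "T11", "ConformityCoefficient", "Krog_D", "Yam3_Vol", "PedestalHeight",
          "(TF)Intensity interferogram of HH", "Yam3_Dbl", "Yam3_Odd", "Entropy",
          "Holm1_T22", "Huynen_T22", "Anisotropy", "Cloude_T11", "Touzi_tau1",
          "VanZyl_Vol", "(TF) Intensity interferogram of HV"],
    "L": ["VanZyl_Vol", "(TF)intensity of coherence of HH", "Shannon Entropy",
          "Yam3_Vol", "SERD", "Freeman_Vol", "Depolarization index", "Yam4_Vol",
          "Yam4_Dbl", "Cloude_T33", "Freeman2_Vol", "Touzi_tau2", "Freeman_Dbl",
          "Krog_S", "T11", "Touzi_alpha1", "Entropy", "alpha2", "VanZyl_Odd"],
    "P": ["Shannon Entropy", "(TF)intensity of coherence of HH", "Neum2_Pha",
          "Entropy", "Krog_H", "(TF) Intensity interferogram of HH", "DERD",
          "Holm2_T22", "Huynen_T22", "Anisotropy", "T11", "Conformity Coefficient",
          "Barnes2_T11", "Huynen_T33", "Touzi_tau1", "(TF)ΔYam4_Odd", "alpha3",
          "(TF) amplitude coherence of HV", "Yam4_Hlx"],
}


def common_features(ranked):
    """Normalized names of the features present in every band's list."""
    sets = [{normalize(name) for name in names} for names in ranked.values()]
    return sorted(set.intersection(*sets))


print(common_features(TOP_20))
# ['(tf)intensityofcoherenceofhh', 'entropy', 'shannonentropy', 't11']
```

Exact name matching after normalization keeps `Cloude_T11` and `Barnes2_T11` distinct from the `T11` coherency matrix element.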

Table 12.

Overall Accuracy of Wishart supervised method and proposed method using only 4 features.

| Method | C-Band | L-Band | P-Band |
|---|---|---|---|
| Wishart supervised | 56.44% | 45.65% | 43.20% |
| Proposed method | 82.22% | 79.38% | 73.20% |