Automated Ortho-Rectification of UAV-Based Hyperspectral Data over an Agricultural Field Using Frame RGB Imagery

Abstract

1. Introduction

- Process the frame imagery with minimal ground control to produce a geometrically accurate orthophoto, hereafter denoted as the RGB-based orthophoto.

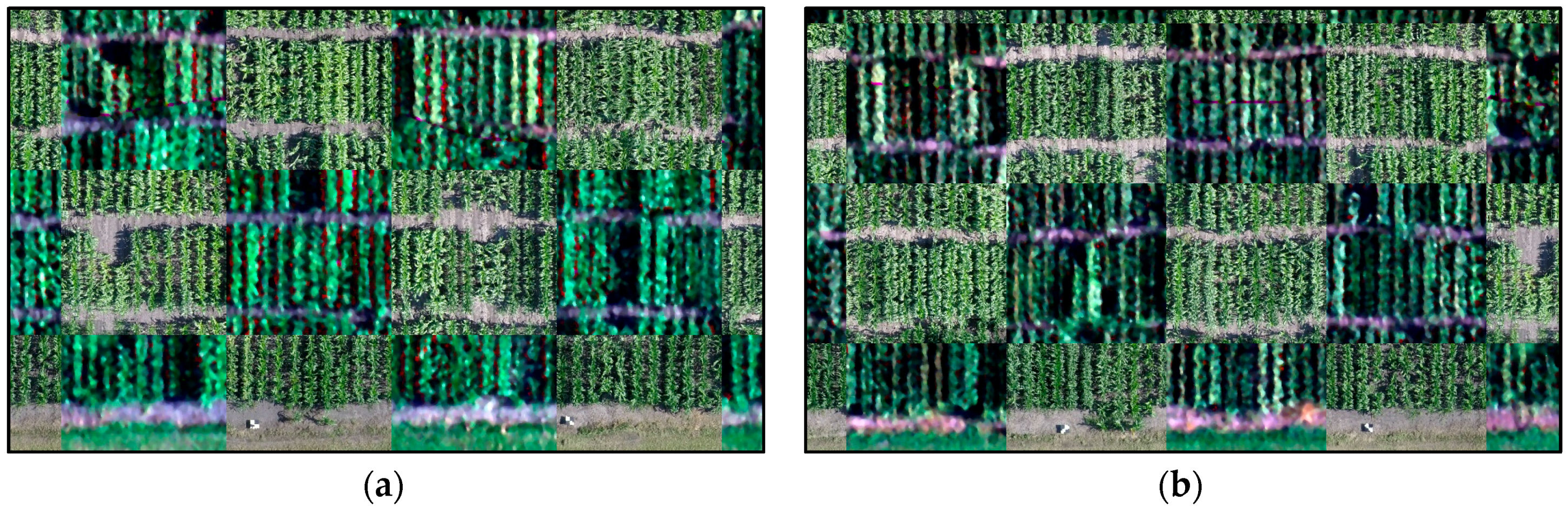

- Use the low-quality navigation data to ortho-rectify the hyperspectral data. Because these navigation data come from a consumer-grade GNSS/INS unit, residual errors in them are expected to degrade the ortho-rectification; the resulting scenes are therefore denoted as partially-rectified hyperspectral orthophotos.

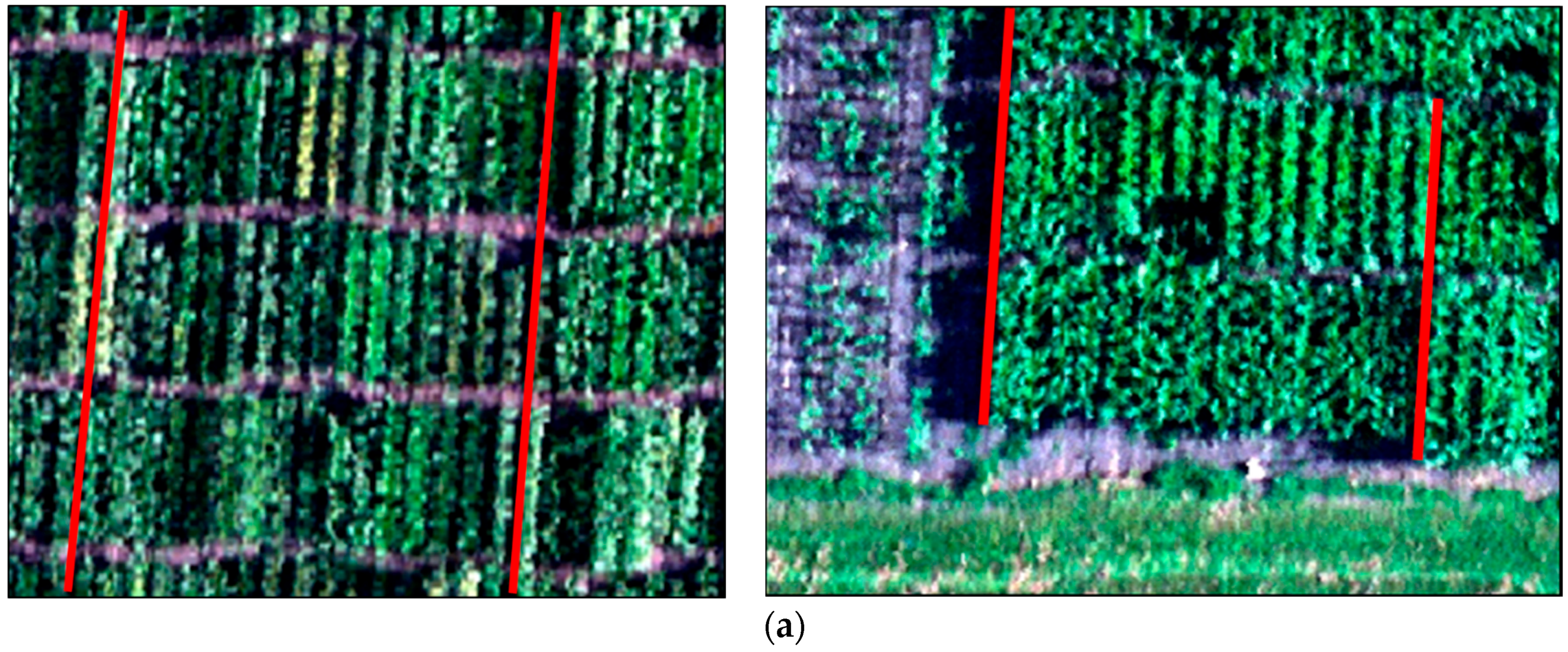

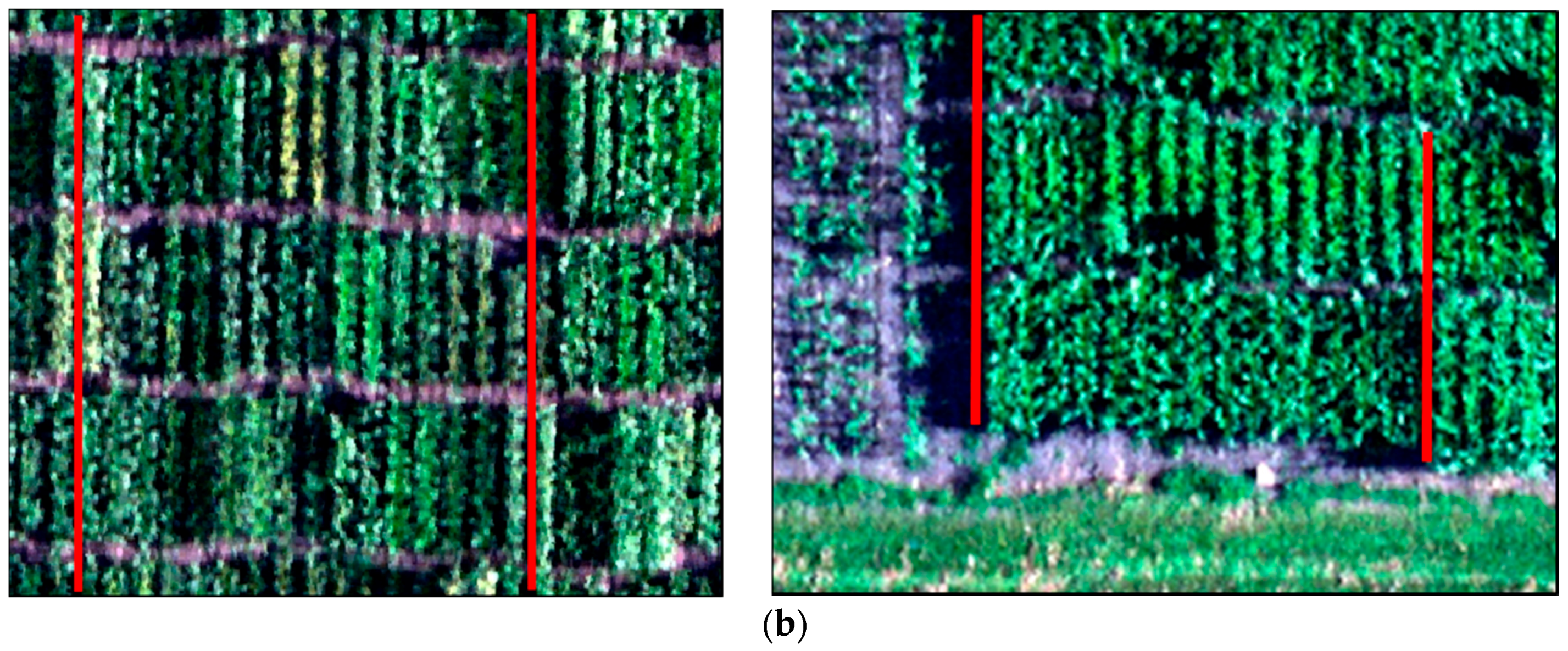

- Develop a matching strategy that uses the available navigation data to identify conjugate features between the partially-rectified hyperspectral and RGB-based orthophotos, while minimizing mismatches arising from the repetitive patterns of a mechanized agricultural field.

- Use the matched features to derive the parameters of a transformation function that models the impact of residual errors in the navigation data on the partially-rectified hyperspectral orthophoto.

- Incorporate the transformation parameters in a resampling procedure that transforms the partially-rectified hyperspectral orthophoto into the reference frame of the RGB-based orthophoto.

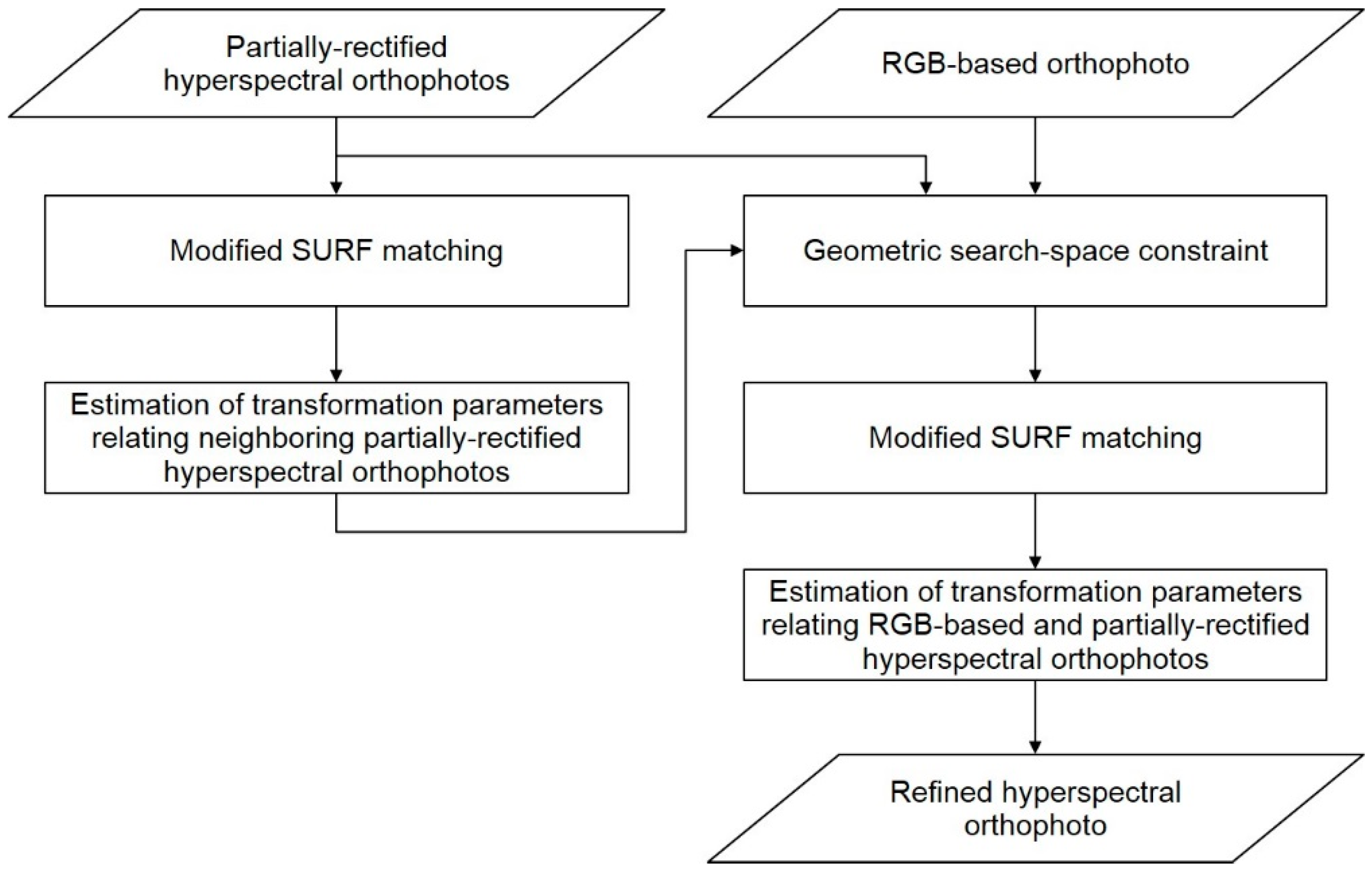
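The matching and transformation-estimation steps in the list above can be sketched as follows. This is a minimal illustration, not the authors' implementation, and it rests on several assumptions: keypoints and descriptors have already been extracted (for example by a SURF-type detector, which is not implemented here) and expressed in the ground coordinates of each orthophoto; the navigation-induced displacement is bounded by a hypothetical `search_radius` (a few metres, given the ±5 m accuracy of the partially-rectified orthophotos); and the residual-error model is assumed to be a 2D affine transformation. The function names `constrained_match` and `fit_affine` are hypothetical, and the final resampling step is not shown.

```python
import numpy as np


def constrained_match(hyp_xy, hyp_desc, rgb_xy, rgb_desc, search_radius, ratio=0.8):
    """Match each hyperspectral keypoint to an RGB keypoint, considering only RGB
    candidates within `search_radius` (ground units) of the hyperspectral
    keypoint's map position. Returns a list of (hyp_index, rgb_index) pairs."""
    matches = []
    for i, (xy, desc) in enumerate(zip(hyp_xy, hyp_desc)):
        # Spatial constraint: the navigation-based position bounds the search.
        near = np.flatnonzero(np.linalg.norm(rgb_xy - xy, axis=1) <= search_radius)
        if near.size == 0:
            continue
        dist = np.linalg.norm(rgb_desc[near] - desc, axis=1)
        order = np.argsort(dist)
        # Ratio test applied among the spatially admissible candidates only.
        if near.size > 1 and dist[order[0]] >= ratio * dist[order[1]]:
            continue
        matches.append((i, int(near[order[0]])))
    return matches


def fit_affine(src, dst):
    """Least-squares 2D affine transform with dst ~ A @ src + t (assumed model).
    Returns the 2x3 matrix [A | t] and the planimetric RMSE of the fit."""
    design = np.hstack([src, np.ones((len(src), 1))])       # n x 3
    params, *_ = np.linalg.lstsq(design, dst, rcond=None)   # 3 x 2
    residuals = design @ params - dst
    rmse = float(np.sqrt(np.mean(np.sum(residuals**2, axis=1))))
    return params.T, rmse


# Synthetic demo: RGB keypoints in a 100 m x 100 m field and a hyperspectral
# copy distorted by a small rotation/scale and a few metres of shift.
rng = np.random.default_rng(0)
rgb_xy = rng.uniform(0.0, 100.0, size=(200, 2))
rgb_desc = rng.normal(size=(200, 64))
A_true = np.array([[1.001, -0.01], [0.01, 0.999]])
t_true = np.array([3.0, -2.0])
hyp_xy = (rgb_xy - t_true) @ np.linalg.inv(A_true).T   # so that rgb = A hyp + t
hyp_desc = rgb_desc + rng.normal(scale=0.05, size=rgb_desc.shape)

matches = constrained_match(hyp_xy, hyp_desc, rgb_xy, rgb_desc, search_radius=8.0)
h_idx, r_idx = map(list, zip(*matches))
T, rmse = fit_affine(hyp_xy[h_idx], rgb_xy[r_idx])
print(len(matches), T.round(4), rmse)
```

Restricting candidates to a neighbourhood of the navigation-predicted position is what keeps a ratio test usable in a field of near-identical crop rows: distant look-alike features never compete with the true match.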

2. Methodology

2.1. Speeded-Up Robust Feature Algorithm

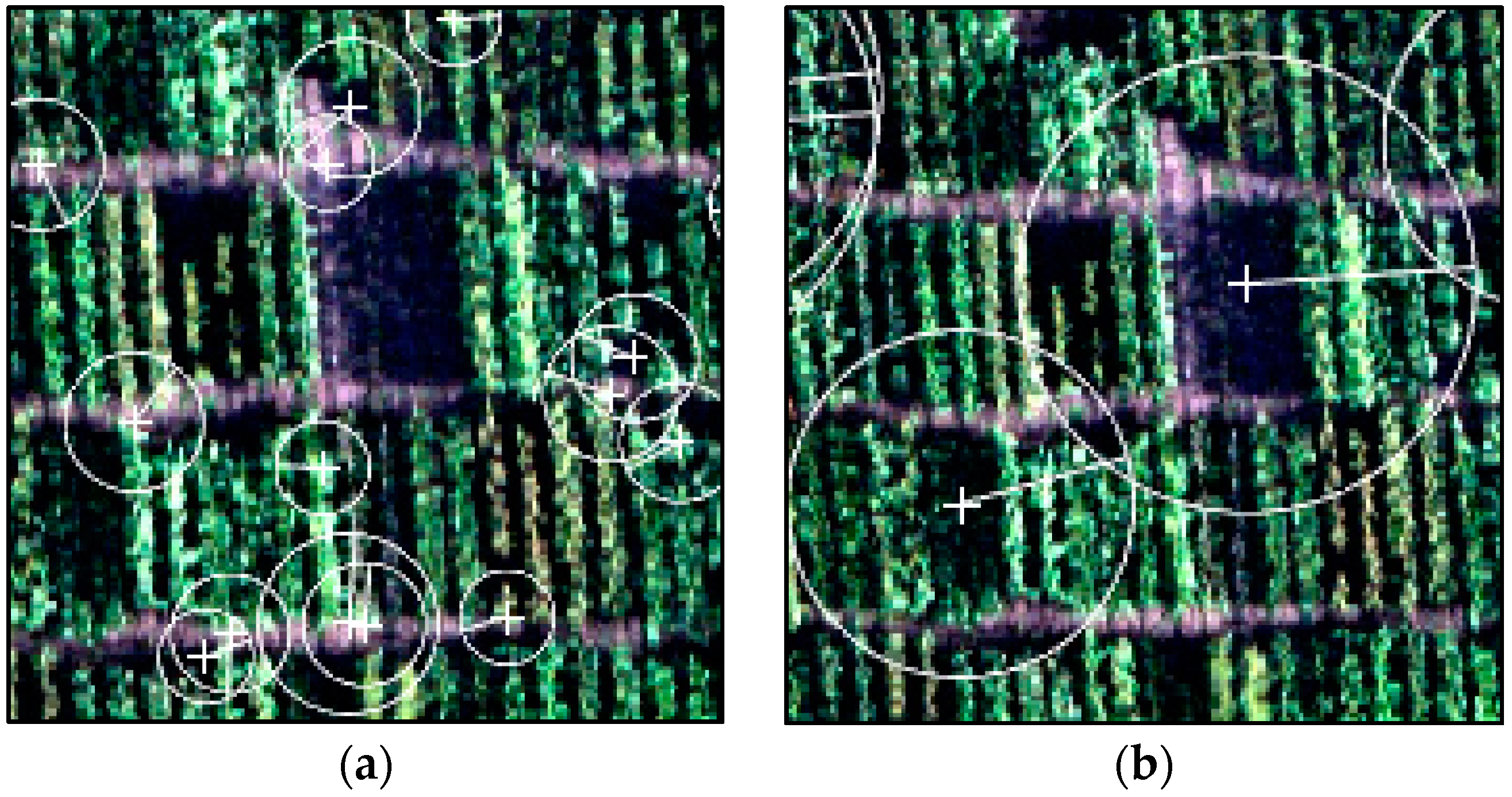

2.2. Modified SURF Algorithm

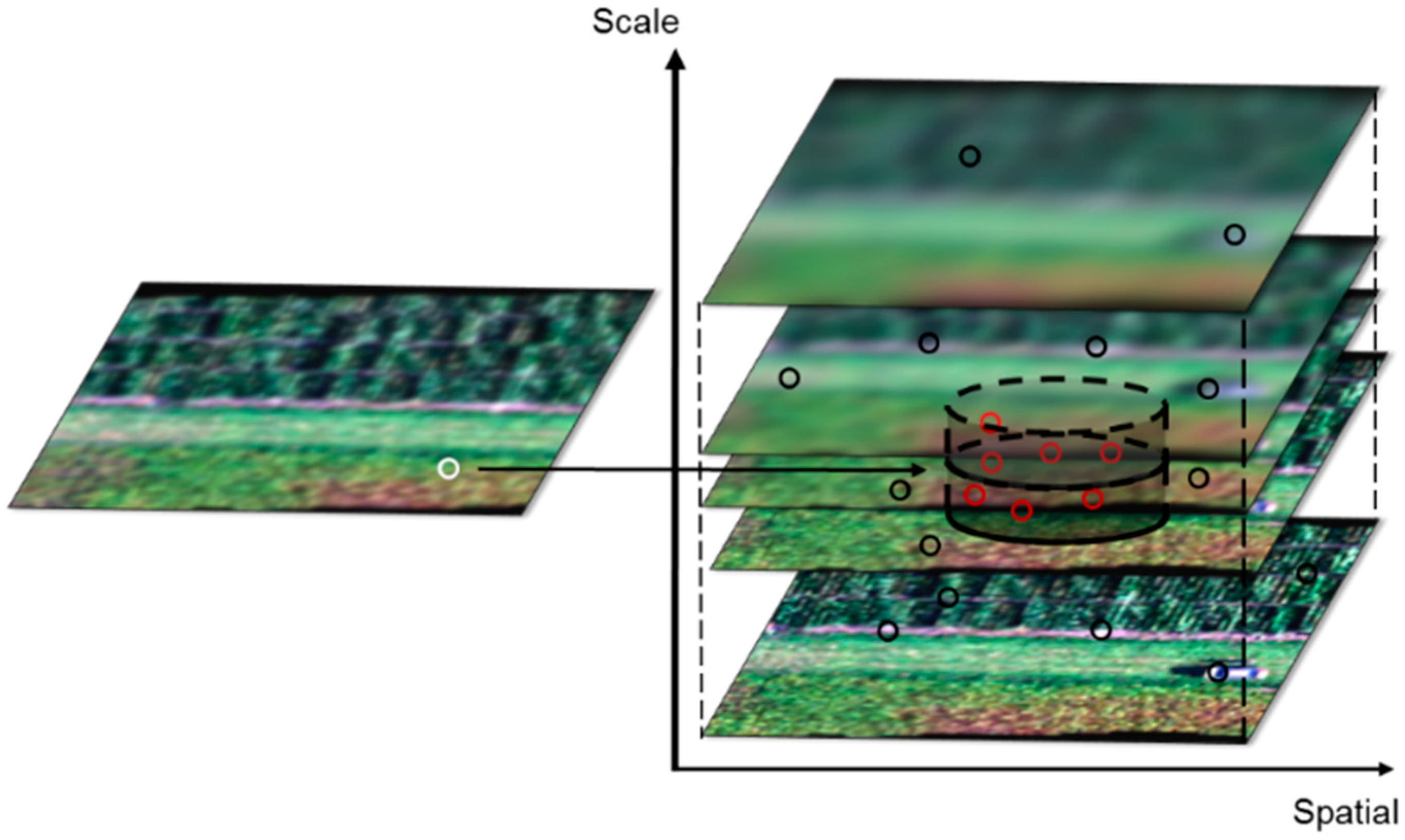

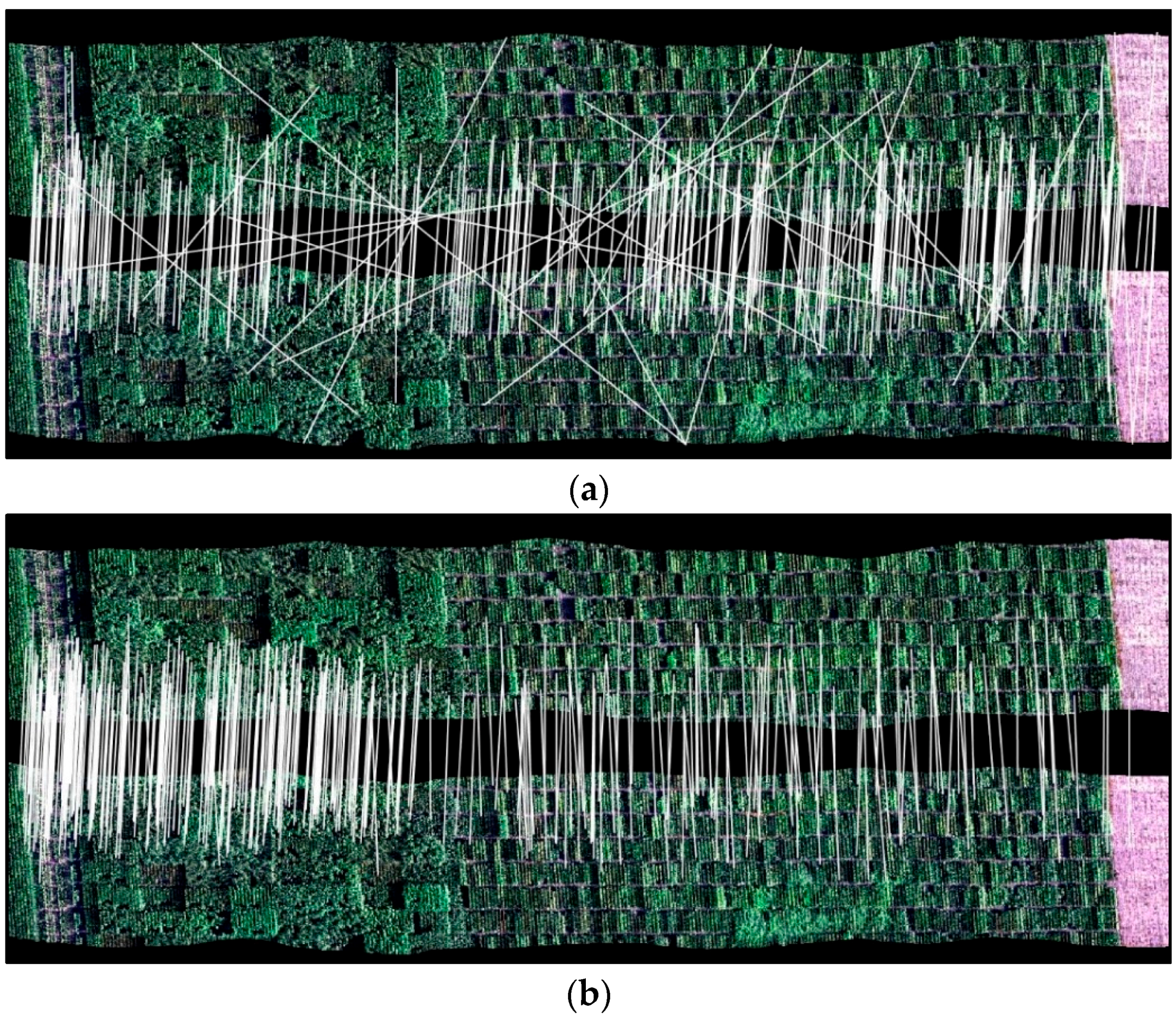

2.3. Spatial Search Space Consideration in the Matching Process

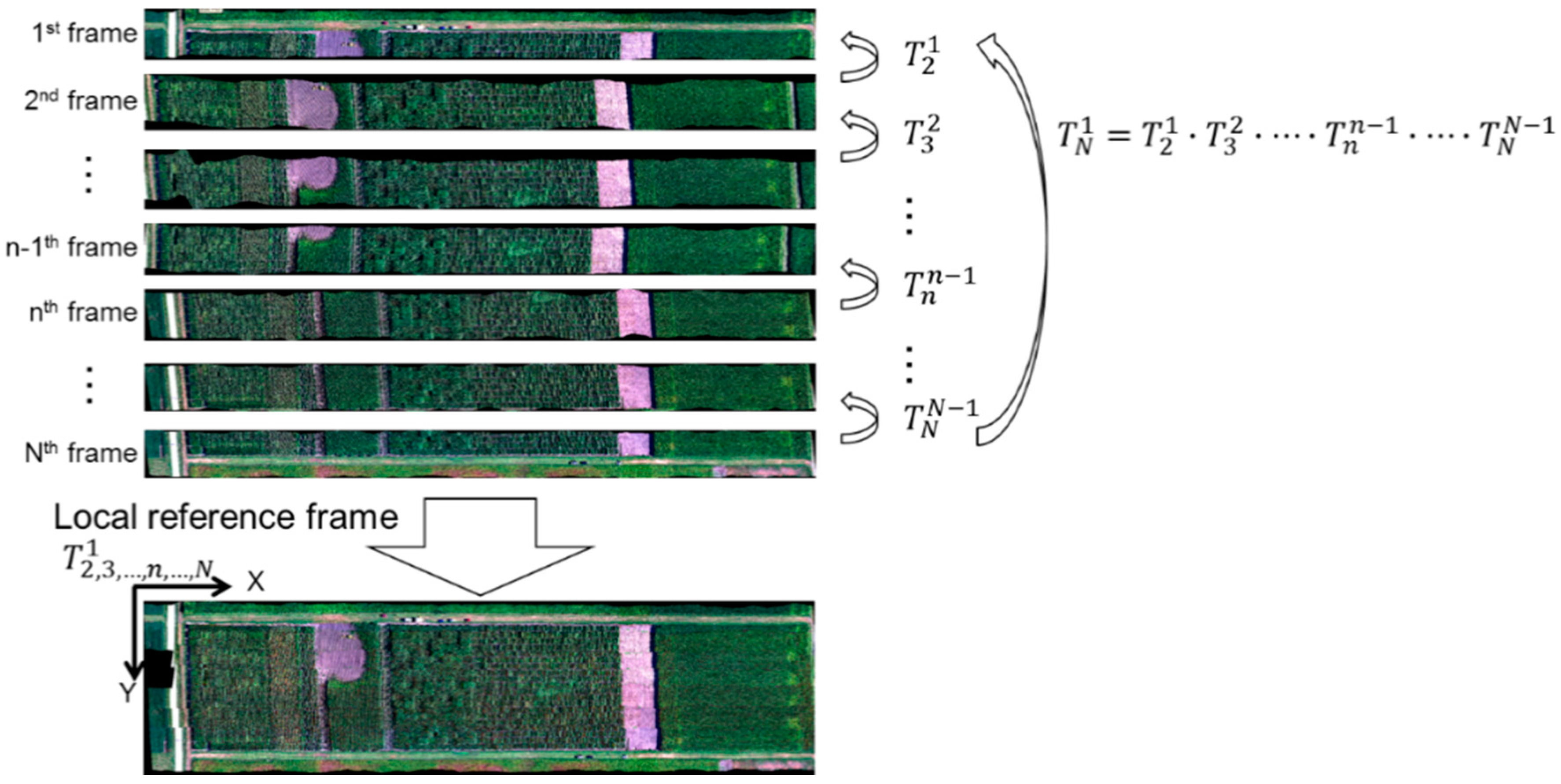

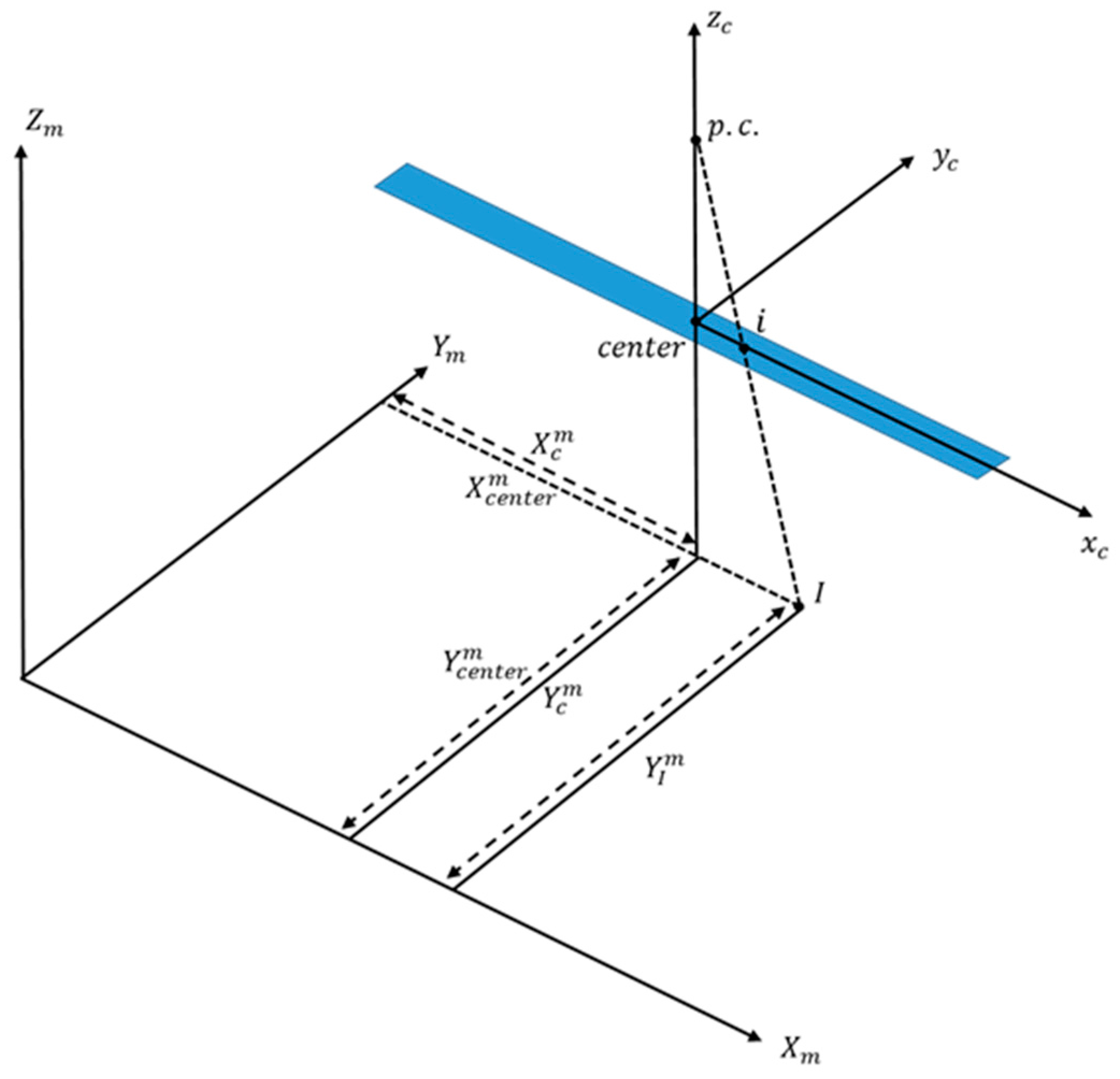

2.3.1. Approximate Evaluation of the Geometric Transformation Relating Partially-Rectified Hyperspectral Orthophotos

2.3.2. Spatial-Search Space Constrained Identification of Tie Points among the RGB-Based and Partially-Rectified Hyperspectral Orthophotos

2.4. Registration of the RGB and Partially-Rectified Hyperspectral Orthophotos

3. Experimental Results

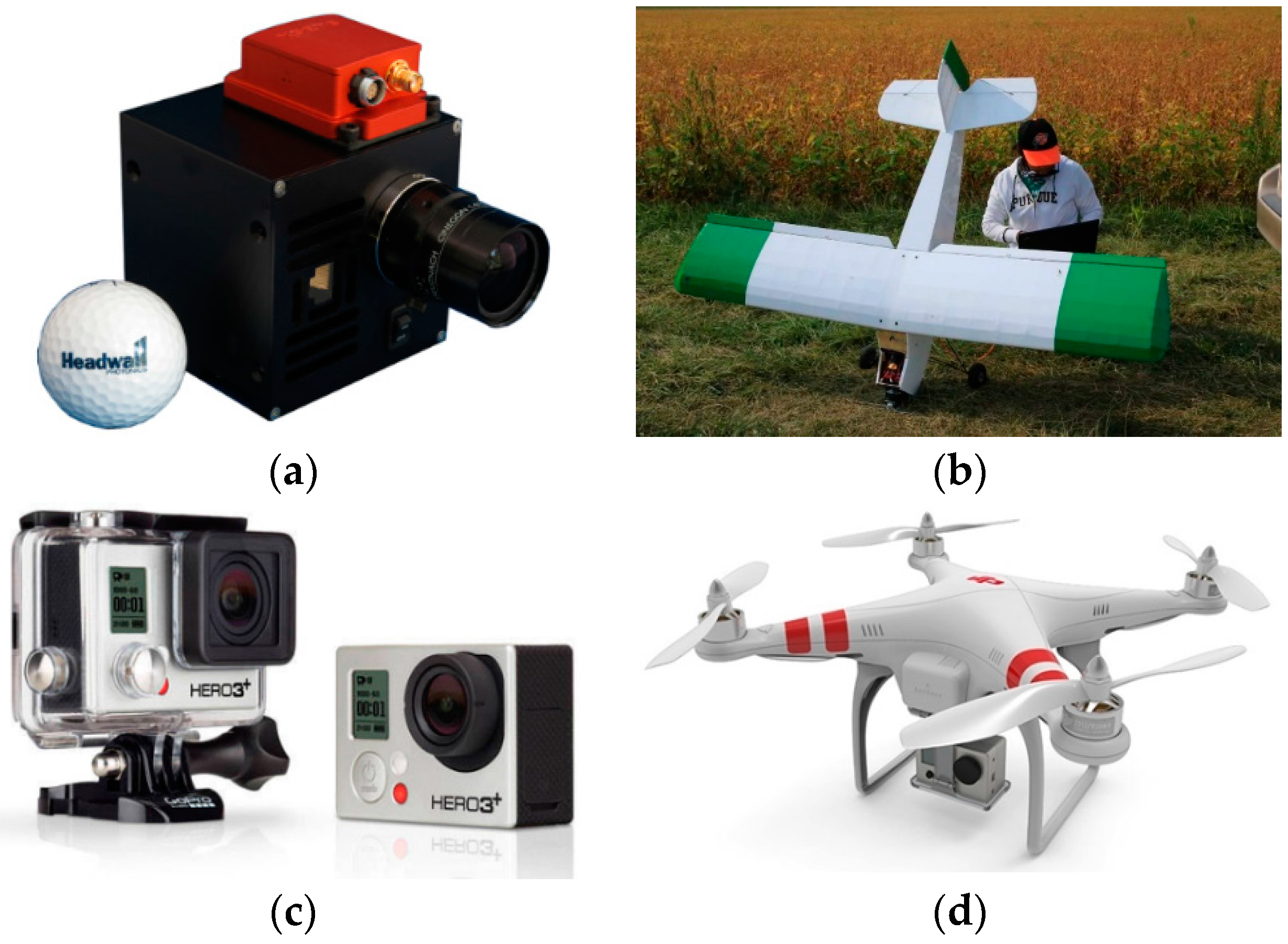

3.1. Test Site and Dataset Description

- A Structure from Motion (SfM) approach [37] is applied to derive the Exterior Orientation Parameters (EOPs) of the captured images and a sparse point cloud representing the field relative to an arbitrarily defined reference frame.

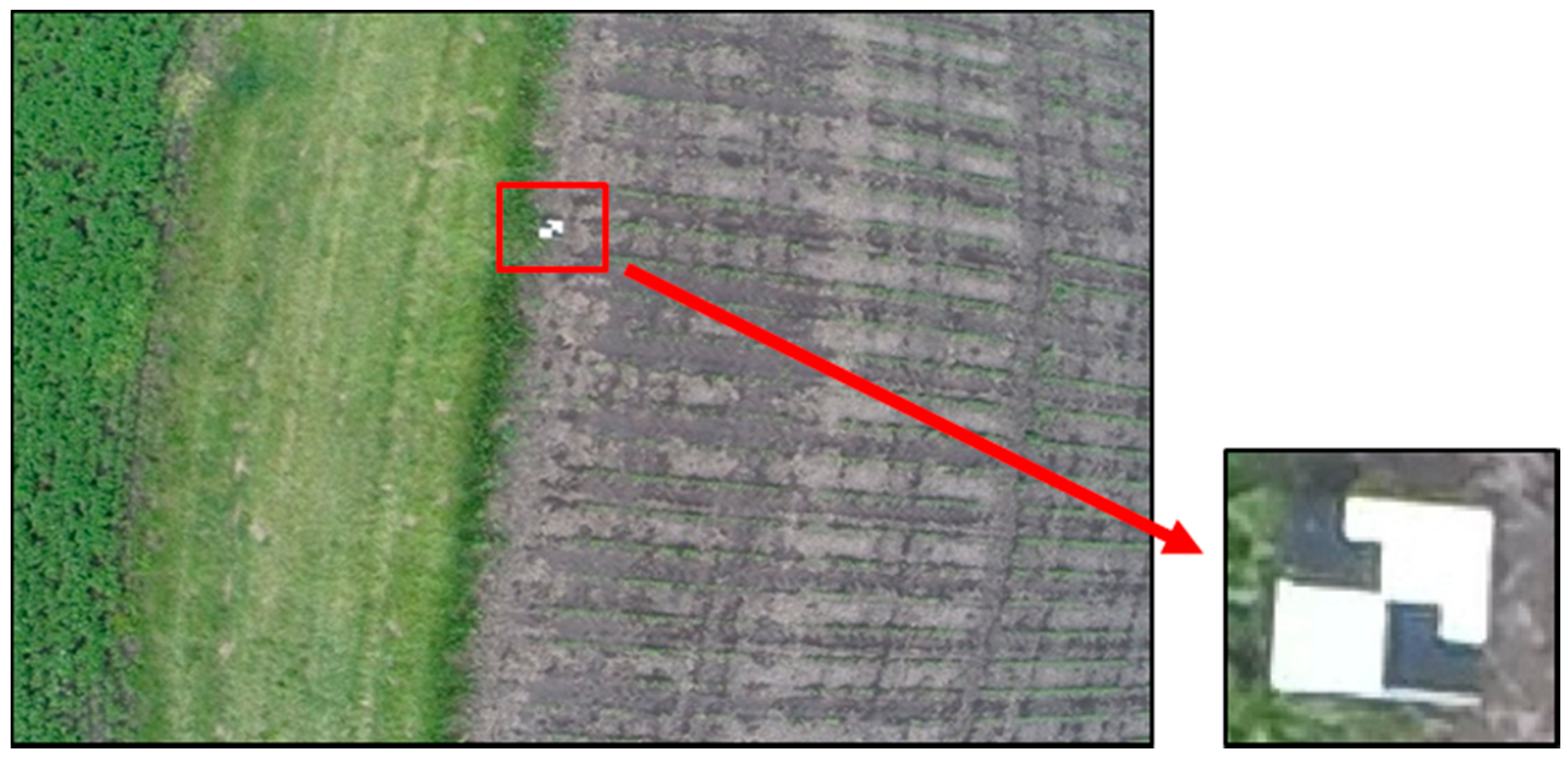

- An absolute orientation process is then applied to transform the derived point cloud and estimated EOPs to a global mapping reference frame. Ten signalized GCPs, whose coordinates were measured through an RTK GPS survey, are used to estimate the absolute orientation parameters for the SfM-based point cloud and EOPs (Figure 9 shows a close-up image of one of the targets). Then, a global bundle adjustment refines the EOPs of the images and the sparse point-cloud coordinates relative to the GCPs' reference frame.

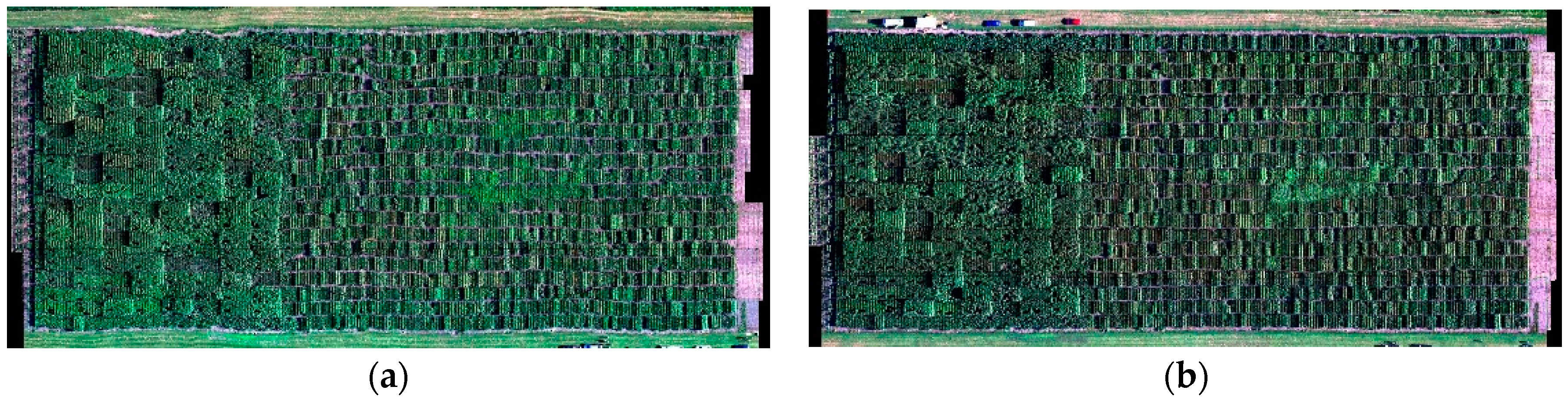

- A DEM is interpolated from the sparse point cloud. The bundle-adjusted EOPs, the camera Interior Orientation Parameters (IOPs), and the DEM are then used to produce an RGB-based orthophoto mosaic of the entire test field (Figure 10). Note that the color variation between the north and south portions of the orthophoto is caused by illumination differences between the data acquisition epochs. The RGB-based orthophoto has a 4-cm GSD, and the Root Mean Square Errors (RMSE) in X, Y, and Z at 20 check points are 2 cm, 3 cm, and 6 cm, respectively.
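The absolute orientation and check-point evaluation described in the list above can be sketched as follows. The article does not state how the absolute orientation parameters are computed; this sketch assumes a 7-parameter 3D similarity transformation solved with Umeyama's closed-form least-squares method as a stand-in, and it does not include the subsequent bundle adjustment. The names `similarity_3d` and `per_axis_rmse`, the synthetic coordinates, and the 1 cm survey noise are all hypothetical; only the 10 GCP / 20 check point split mirrors the text.

```python
import numpy as np


def similarity_3d(src, dst):
    """Closed-form 7-parameter similarity (Umeyama, 1991): dst ~ s * R @ src + t.
    src, dst: (n, 3) arrays of corresponding points, n >= 3, not collinear."""
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    xs, xd = src - mu_s, dst - mu_d
    cov = xd.T @ xs / len(src)                    # 3x3 cross-covariance
    U, S, Vt = np.linalg.svd(cov)
    D = np.eye(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:  # enforce a proper rotation
        D[2, 2] = -1.0
    R = U @ D @ Vt
    s = np.trace(np.diag(S) @ D) / np.mean(np.sum(xs**2, axis=1))
    t = mu_d - s * R @ mu_s
    return s, R, t


def per_axis_rmse(estimated, reference):
    """RMSE of X, Y and Z separately (the form reported for the check points)."""
    return np.sqrt(np.mean((estimated - reference) ** 2, axis=0))


# Synthetic demo: SfM points in an arbitrary frame, mapped to a "global" frame.
rng = np.random.default_rng(1)
Q, _ = np.linalg.qr(rng.normal(size=(3, 3)))
if np.linalg.det(Q) < 0:
    Q[:, 0] *= -1.0                               # make it a rotation (det = +1)
s_true, R_true, t_true = 25.0, Q, np.array([1000.0, 2000.0, 200.0])

sfm_pts = rng.uniform(-2.0, 2.0, size=(30, 3))    # arbitrary SfM frame
map_pts = s_true * sfm_pts @ R_true.T + t_true    # true global coordinates
gcp_sfm, chk_sfm = sfm_pts[:10], sfm_pts[10:]     # 10 GCPs, 20 check points
gcp_map = map_pts[:10] + rng.normal(scale=0.01, size=(10, 3))  # assumed 1 cm noise

s_est, R_est, t_est = similarity_3d(gcp_sfm, gcp_map)
chk_est = s_est * chk_sfm @ R_est.T + t_est
rmse_xyz = per_axis_rmse(chk_est, map_pts[10:])
print(s_est, rmse_xyz)
```

Reporting RMSE per axis, rather than a single 3D value, is what allows statements such as the separate X, Y, and Z accuracies quoted above.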

3.2. Results and Analysis

- Although the transformation parameters estimated from manually-identified features yield a better quality of fit than those estimated from automatically-identified features, the proposed approach improves the quality of the partially-rectified hyperspectral orthophotos without manual intervention: it reduces the RMSE from as much as roughly 5 m before refinement to about 0.6 m after refinement. Note that this quality-of-fit measure is biased in favor of the manually-based transformation parameters, since the right halves of Table 3 and Table 4 use the manually-identified features both to estimate the transformation parameters and to evaluate the quality of fit.

- The proposed approach tends to perform better when the original direct geo-referencing information is relatively accurate; that is, passes with a smaller RMSE before refinement tend to have a smaller RMSE after refinement.

- The number of automatically-identified tie points does not have a significant impact on the quality of the hyperspectral orthophoto refinement: the RMSE among the tie points computed with the estimated transformation parameters takes similar values regardless of the number of identified tie points.
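As a rough check of the first observation above, the per-pass values can be averaged directly. The sketch below transcribes the seven per-pass values from each of the two RMSE tables in this article (the dictionary keys are illustrative labels, not the article's table numbers) and computes the mean of each column, rounded to centimetres.

```python
from statistics import mean

# Per-pass values (Passes 1-7) transcribed from the two RMSE tables in this
# article. All values are RMSEs in metres.
tables = {
    "first_table": {
        "before": [4.54, 5.09, 4.57, 4.27, 3.54, 5.00, 2.75],
        "after": [0.69, 0.67, 0.46, 0.63, 0.35, 0.99, 0.55],
        "manual_params": [0.26, 0.29, 0.21, 0.33, 0.31, 0.28, 0.29],
        "auto_params": [0.84, 0.67, 0.36, 0.72, 0.63, 0.96, 0.49],
    },
    "second_table": {
        "before": [3.15, 4.51, 2.08, 3.70, 1.80, 4.15, 1.42],
        "after": [0.70, 0.66, 0.51, 0.70, 0.58, 0.72, 0.62],
        "manual_params": [0.19, 0.19, 0.19, 0.18, 0.15, 0.22, 0.20],
        "auto_params": [0.78, 0.78, 0.37, 0.77, 0.35, 0.70, 0.38],
    },
}

summary = {}
for name, columns in tables.items():
    # The refinement lowers the RMSE for every individual pass.
    assert all(a < b for a, b in zip(columns["after"], columns["before"]))
    summary[name] = {col: round(mean(vals), 2) for col, vals in columns.items()}
    print(name, summary[name])
```

Averaged over the seven passes, the RMSE drops from about 4.25 m to 0.62 m in the first RMSE table and from about 2.97 m to 0.64 m in the second. The manual-parameter columns average 0.28 m and 0.19 m, versus 0.67 m and 0.59 m for the automatically-derived parameters, which matches the ordering described in the first observation.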

4. Conclusions and Recommendations for Future Work

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Candiago, S.; Remondino, F.; De Giglio, M.; Dubbini, M.; Gattelli, M. Evaluating Multispectral Images and Vegetation Indices for Precision Farming Applications from UAV Images. Remote Sens. 2015, 7, 4026–4047.

- Fiorani, F.; Schurr, U. Future scenarios for plant phenotyping. Annu. Rev. Plant Biol. 2013, 64, 267–291.

- Busemeyer, L.; Mentrup, D.; Möller, K.; Wunder, E.; Alheit, K.; Hahn, V.; Maurer, H.P.; Reif, J.C.; Würschum, T.; Müller, J.; et al. BreedVision—A Multi-Sensor Platform for Non-Destructive Field-Based Phenotyping in Plant Breeding. Sensors 2013, 13, 2830–2847.

- Tao, V.; Li, J. Advances in Mobile Mapping Technology; ISPRS Book Series No. 4; Taylor & Francis: London, UK, 2007.

- Habib, A.; Xiong, W.; He, F.; Yang, H.; Crawford, M. Improving Orthorectification of UAV-based Push-broom Scanner Imagery using Derived Orthophotos from Frame Cameras. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016.

- Zhang, C.; Kovacs, J.M. The Application of Small Unmanned Aerial Systems for Precision Agriculture: A Review. Precis. Agric. 2012, 13, 693–712.

- Honkavaara, E.; Saari, H.; Kaivosoja, J.; Pölönen, I.; Hakala, T.; Litkey, P.; Mäkynen, J.; Pesonen, L. Processing and Assessment of Spectrometric, Stereoscopic Imagery Collected using a Lightweight UAV Spectral Camera for Precision Agriculture. Remote Sens. 2013, 5, 5006–5039.

- Hernandez, A.; Murcia, H.; Copot, C.; De Keyser, R. Towards the Development of a Smart Flying Sensor: Illustration in the Field of Precision Agriculture. Sensors 2015, 15, 16688–16709.

- Araus, J.L.; Cairns, J.E. Field High-throughput Phenotyping: The New Crop Breeding Frontier. Trends Plant Sci. 2014, 19, 52–61.

- Aasen, H.; Burkart, A.; Bolten, A.; Bareth, G. Generating 3D Hyperspectral Information with Lightweight UAV Snapshot Cameras for Vegetation Monitoring: From Camera Calibration to Quality Assurance. ISPRS J. Photogramm. Remote Sens. 2015, 108, 245–259.

- Burkart, A.; Aasen, H.; Alonso, L.; Menz, G.; Bareth, G.; Rascher, U. Angular Dependency of Hyperspectral Measurements over Wheat Characterized by a Novel UAV based Goniometer. Remote Sens. 2015, 7, 725–746.

- Deery, D.; Jimenez-Berni, J.; Jones, H.; Sirault, X.; Furbank, R. Proximal Remote Sensing Buggies and Potential Applications for Field based Phenotyping. Agronomy 2014, 4, 349–379.

- Lawrence, K.C.; Park, B.; Windham, W.R.; Mao, C. Calibration of a Pushbroom Hyperspectral Imaging System for Agricultural Inspection. Trans. Am. Soc. Agric. Eng. 2003, 46, 513–522.

- Inoue, Y.; Sakaiya, E.; Zhu, Y.; Takahashi, W. Diagnostic Mapping of Canopy Nitrogen Content in Rice based on Hyperspectral Measurements. Remote Sens. Environ. 2012, 126, 210–221.

- Mitchell, J.J.; Glenn, N.F.; Sankey, T.T.; Derryberry, D.R.; Germino, M.J. Remote Sensing of Sagebrush Canopy Nitrogen. Remote Sens. Environ. 2012, 124, 217–223.

- Berni, J.A.; Zarco-Tejada, P.J.; Suárez, L.; Fereres, E. Thermal and Narrowband Multispectral Remote Sensing for Vegetation Monitoring from an Unmanned Aerial Vehicle. IEEE Trans. Geosci. Remote Sens. 2009, 47, 722–738.

- Habib, A.F.; Morgan, M.; Lee, Y. Bundle Adjustment with Self-Calibration using Straight Lines. Photogramm. Rec. 2002, 17, 635–650.

- Remondino, F.; Barazzetti, L.; Nex, F.; Scaioni, M.; Sarazzi, D. UAV Photogrammetry for Mapping and 3D Modeling—Current Status and Future Perspectives. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2011, 38, 25–31.

- Suomalainen, J.; Anders, N.; Iqbal, S.; Roerink, G.; Franke, J.; Wenting, P.; Hünniger, D.; Bartholomeus, H.; Becker, R.; Kooistra, L. A Lightweight Hyperspectral Mapping System and Photogrammetric Processing Chain for Unmanned Aerial Vehicles. Remote Sens. 2014, 6, 11013–11030.

- Ramirez-Paredes, J.-P.; Lary, D.J.; Gans, N.R. Low-altitude Terrestrial Spectroscopy from a Pushbroom Sensor. J. Field Robot. 2016, 33, 837–852.

- Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Bay, H.; Ess, A.; Tuytelaars, T.; Van Gool, L. Speeded-Up Robust Features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359.

- Harris, C.; Stephens, M. A Combined Corner and Edge Detector. In Proceedings of the Alvey Vision Conference, Manchester, UK, 31 August–2 September 1988; pp. 147–152.

- Sedaghat, A.; Mokhtarzade, M.; Ebadi, H. Uniform Robust Scale-invariant Feature Matching for Optical Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2011, 49, 4516–4527.

- Han, Y.; Choi, J.; Byun, Y.; Kim, Y. Parameter Optimization for the Extraction of Matching Points between High-resolution Multisensor Images in Urban Areas. IEEE Trans. Geosci. Remote Sens. 2014, 52, 5612–5621.

- Huo, C.; Pan, C.; Huo, L.; Zhou, Z. Multilevel SIFT Matching for Large-size VHR Image Registration. IEEE Geosci. Remote Sens. Lett. 2012, 9, 171–175.

- Wang, L.; Niu, Z.; Wu, C.; Xie, R.; Huang, H. A Robust Multisource Image Automatic Registration System based on the SIFT Descriptor. Int. J. Remote Sens. 2012, 33, 3850–3869.

- Yu, L.; Zhang, D.; Holden, E.J. A Fast and Fully Automatic Registration Approach based on Point Features for Multi-source Remote-sensing Images. Comput. Geosci. 2008, 34, 838–848.

- Fan, B.; Huo, C.; Pan, C.; Kong, Q. Registration of Optical and SAR Satellite Images by Exploring the Spatial Relationship of the Improved SIFT. IEEE Geosci. Remote Sens. Lett. 2013, 10, 657–661.

- Han, Y.; Byun, Y.; Choi, J.; Han, D.; Kim, Y. Automatic Registration of High-resolution Images using Local Properties of Features. Photogramm. Eng. Remote Sens. 2012, 78, 211–221.

- Kang, Z.Z.; Jia, F.M.; Zhang, L.Q. A Robust Image Matching Method based on Optimized BaySAC. Photogramm. Eng. Remote Sens. 2012, 80, 1041–1052.

- Han, Y.; Byun, Y. Automatic and Accurate Registration of VHR Optical and SAR Images using a Quadtree Structure. Int. J. Remote Sens. 2015, 36, 2277–2295.

- Long, T.; Jiao, W.; He, G.; Zhang, Z. A Fast and Reliable Matching Method for Automated Georeferencing of Remotely-Sensed Imagery. Remote Sens. 2016, 8, 56.

- Liu, F.; Bi, F.; Chen, L.; Shi, H.; Liu, W. Feature-area Optimization: A Novel SAR Image Registration Method. IEEE Geosci. Remote Sens. Lett. 2016, 13, 242–246.

- Meer, P.; Mintz, D.; Rosenfeld, A. Robust Regression Methods for Computer Vision: A Review. Int. J. Comput. Vis. 1991, 6, 59–70.

- He, F.; Habib, A. Target-based and Feature-based Calibration of Low-cost Digital Cameras with Large Field-of-view. In Proceedings of the ASPRS Annual Conference, Tampa, FL, USA, 4–8 April 2015.

- He, F.; Habib, A. Linear Approach for Initial Recovery of the Exterior Orientation Parameters of Randomly Captured Images by Low-cost Mobile Mapping System. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, 1, 149–154.

| | RGB Frame Camera | Hyperspectral Push-Broom Scanner |
|---|---|---|
| Acquisition date | 25 July 2015 | 4 August 2015; 21 August 2015 |
| Focal length | 3 mm | 17 mm |
| Spatial resolution | 1.5 cm (frame image); 4 cm (generated orthophoto) | 5 cm (partially-rectified orthophoto) |
| Geometric accuracy (orthophoto) | ±0.04 m | ±5 m |
| Spectral resolution | 3 bands (RGB) | 278 bands, each 2.2 nm wide |

| Flight Lines | Number of Extracted Tie Point Pairs (4 August 2015) | Number of Extracted Tie Point Pairs (21 August 2015) |
|---|---|---|
| Pass 1–2 | 203 | 126 |
| Pass 2–3 | 303 | 350 |
| Pass 3–4 | 202 | 269 |
| Pass 4–5 | 89 | 210 |
| Pass 5–6 | 306 | 341 |
| Pass 6–7 | 295 | 351 |

Columns 2–4: RMSE for the automatically-extracted features using the transformation parameters estimated by the proposed procedure. Columns 5–7: RMSE for the manually-extracted features using the transformation parameters estimated by the manual and proposed procedures.

| Flight Line | Number of Features (Automatic) | RMSE Before (m) | RMSE After (m) | Number of Features (Manual) | RMSE, Parameters from Manual Measurements (m) | RMSE, Parameters from Automatically-Extracted Features (m) |
|---|---|---|---|---|---|---|
| Pass 1 | 25 points | 4.54 | 0.69 | 14 points and 25 lines | 0.26 | 0.84 |
| Pass 2 | 122 points | 5.09 | 0.67 | 27 lines | 0.29 | 0.67 |
| Pass 3 | 95 points | 4.57 | 0.46 | 14 points and 22 lines | 0.21 | 0.36 |
| Pass 4 | 94 points | 4.27 | 0.63 | 26 lines | 0.33 | 0.72 |
| Pass 5 | 173 points | 3.54 | 0.35 | 14 points and 33 lines | 0.31 | 0.63 |
| Pass 6 | 68 points | 5.00 | 0.99 | 24 lines | 0.28 | 0.96 |
| Pass 7 | 178 points | 2.75 | 0.55 | 28 lines | 0.29 | 0.49 |

Columns 2–4: RMSE for the automatically-extracted features using the transformation parameters estimated by the proposed procedure. Columns 5–7: RMSE for the manually-extracted features using the transformation parameters estimated by the manual and proposed procedures.

| Flight Line | Number of Features (Automatic) | RMSE Before (m) | RMSE After (m) | Number of Features (Manual) | RMSE, Parameters from Manual Measurements (m) | RMSE, Parameters from Automatically-Extracted Features (m) |
|---|---|---|---|---|---|---|
| Pass 1 | 26 points | 3.15 | 0.70 | 14 points and 25 lines | 0.19 | 0.78 |
| Pass 2 | 86 points | 4.51 | 0.66 | 26 lines | 0.19 | 0.78 |
| Pass 3 | 64 points | 2.08 | 0.51 | 14 points and 26 lines | 0.19 | 0.37 |
| Pass 4 | 64 points | 3.70 | 0.70 | 27 lines | 0.18 | 0.77 |
| Pass 5 | 117 points | 1.80 | 0.58 | 26 lines | 0.15 | 0.35 |
| Pass 6 | 58 points | 4.15 | 0.72 | 26 lines | 0.22 | 0.70 |
| Pass 7 | 101 points | 1.42 | 0.62 | 26 lines | 0.20 | 0.38 |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Habib, A.; Han, Y.; Xiong, W.; He, F.; Zhang, Z.; Crawford, M. Automated Ortho-Rectification of UAV-Based Hyperspectral Data over an Agricultural Field Using Frame RGB Imagery. Remote Sens. 2016, 8, 796. https://doi.org/10.3390/rs8100796

