One-Class Classification of Airborne LiDAR Data in Urban Areas Using a Presence and Background Learning Algorithm

Abstract

1. Introduction

2. Materials and Methods

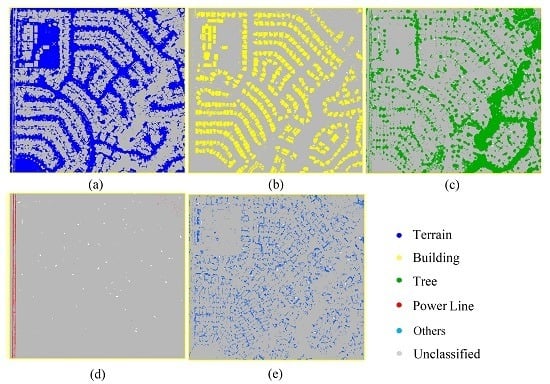

2.1. Dataset

2.2. Features of Interest

2.2.1. Height-Based Features

2.2.2. Eigen-Based Features

2.2.3. Echo-Based Features

2.2.4. Multi-Scale Features

2.3. Classification Schemes

2.3.1. PBL-BP Classification

2.3.2. Other One-Class Classification Methods

2.3.3. SVM Classification

2.4. Accuracy Assessment

3. Results

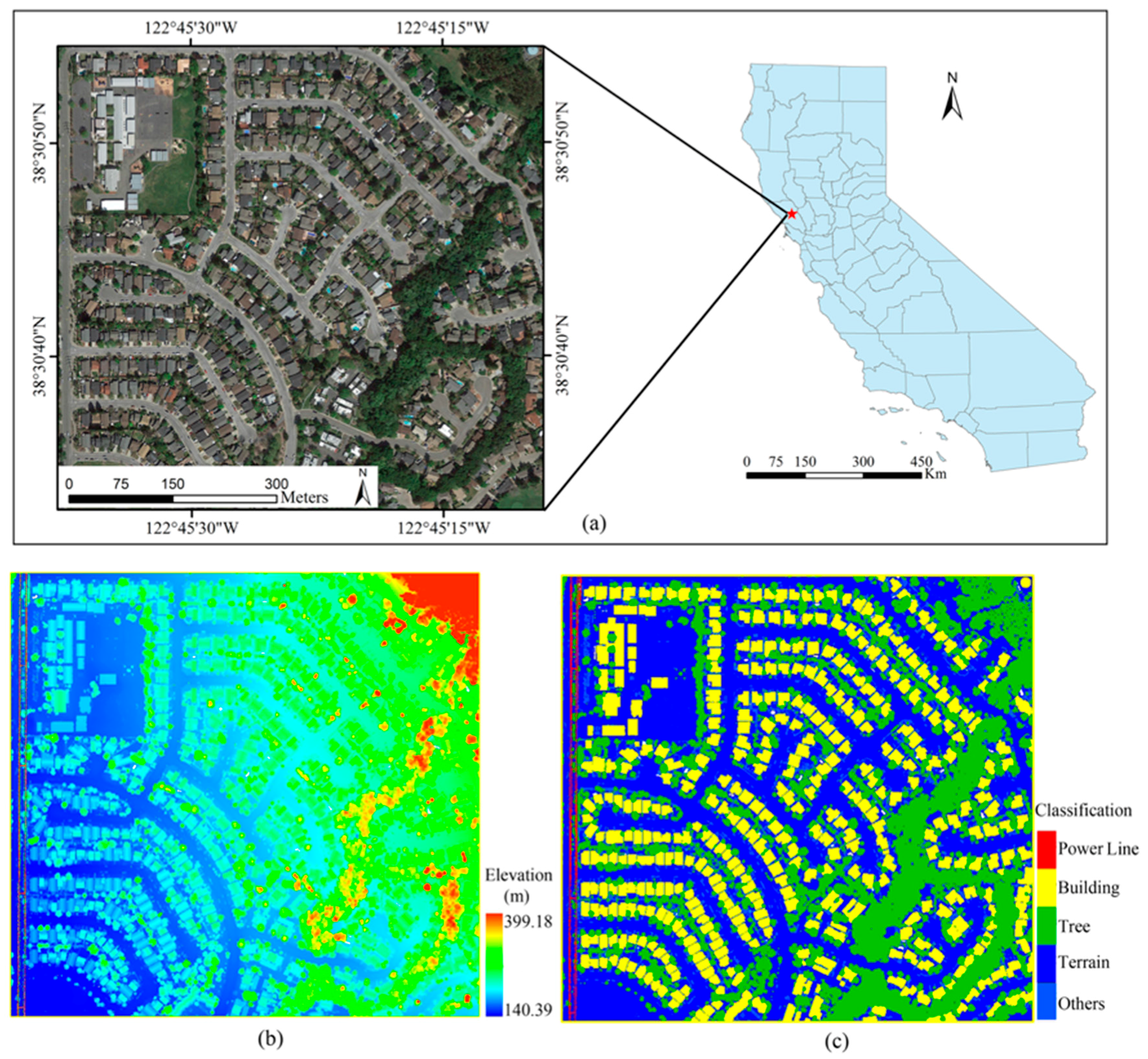

3.1. One-Class Classification Results

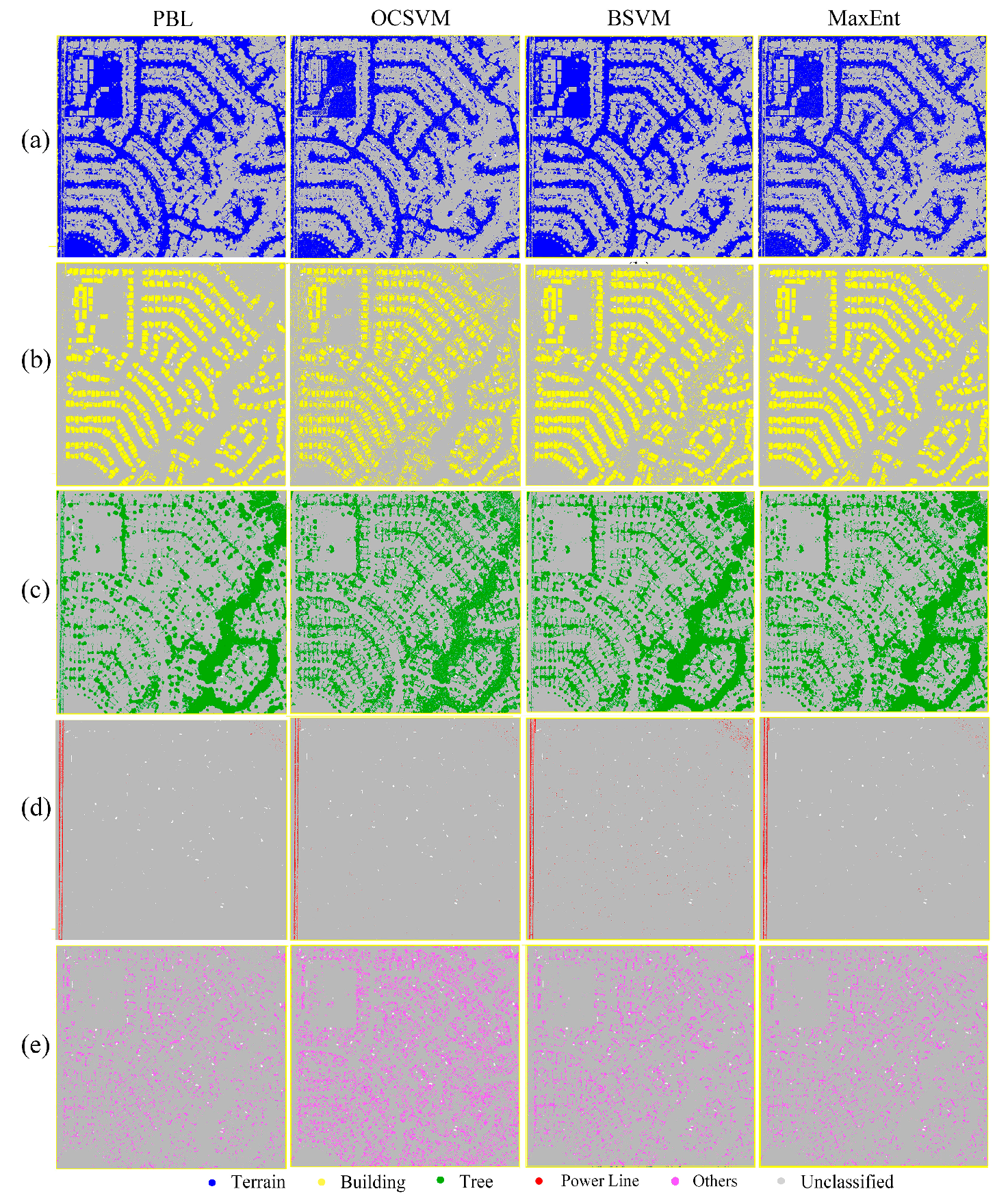

3.2. Multi-Class Classification Results

4. Discussion

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

| Classes | Performance Metrics | PBL-BP | OCSVM | BSVM | MaxEnt |
|---|---|---|---|---|---|
| Terrain | UA (%) | 98.11 | 97.03 | 95.80 | 100.00 |
| Terrain | PA (%) | 98.04 | 71.48 | 99.57 | 96.29 |
| Terrain | F-score | 0.98 | 0.82 | 0.97 | 0.98 |
| Building | UA (%) | 94.03 | 70.56 | 82.94 | 86.17 |
| Building | PA (%) | 90.63 | 79.72 | 96.79 | 98.57 |
| Building | F-score | 0.92 | 0.74 | 0.89 | 0.91 |
| Tree | UA (%) | 96.01 | 66.26 | 82.92 | 87.56 |
| Tree | PA (%) | 92.49 | 74.59 | 98.09 | 97.38 |
| Tree | F-score | 0.94 | 0.70 | 0.89 | 0.92 |
| Power Line | UA (%) | 95.89 | 91.03 | 65.10 | 90.50 |
| Power Line | PA (%) | 95.94 | 88.93 | 99.66 | 98.67 |
| Power Line | F-score | 0.96 | 0.89 | 0.78 | 0.94 |
| Others | UA (%) | 91.73 | 16.18 | 45.49 | 86.48 |
| Others | PA (%) | 92.00 | 48.81 | 82.89 | 98.22 |
| Others | F-score | 0.92 | 0.24 | 0.58 | 0.91 |
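The F-score rows in the table above are consistent, to within about 0.01, with the harmonic mean of the user's accuracy (UA) and producer's accuracy (PA). The following is a minimal sketch under that assumption; the helper name `f_score` is hypothetical, and the definition is inferred from the tabulated values rather than stated in this section. Because the published scores were presumably computed from unrounded accuracies, recomputing them from the rounded percentages may differ in the second decimal place.

```python
def f_score(ua_percent: float, pa_percent: float) -> float:
    """Harmonic mean of UA and PA, returned as a fraction in [0, 1].

    Assumption: the F-score is the standard F1 measure, with UA playing
    the role of precision and PA the role of recall.
    """
    ua = ua_percent / 100.0
    pa = pa_percent / 100.0
    if ua + pa == 0:
        # Both accuracies are zero; define the score as zero.
        return 0.0
    return 2 * ua * pa / (ua + pa)


# Terrain class, PBL-BP column (UA = 98.11 %, PA = 98.04 %).
terrain_pbl_bp = f_score(98.11, 98.04)
print(f"{terrain_pbl_bp:.2f}")  # prints 0.98
```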

| Reference (rows) vs. Prediction (columns) | Terrain | Building | Tree | Power Line | Others |
|---|---|---|---|---|---|
| Terrain | 2,301,098 | 37 | 792 | 0 | 1142 |
| Building | 1396 | 917,961 | 49,115 | 7 | 3024 |
| Tree | 5987 | 31,905 | 1,018,900 | 458 | 19,167 |
| Power Line | 0 | 129 | 697 | 10,873 | 0 |
| Others | 12,428 | 2795 | 10,582 | 3 | 226,807 |
| PA (%) | 99.91 | 94.49 | 94.66 | 92.94 | 89.78 |
| UA (%) | 99.15 | 96.34 | 94.33 | 95.87 | 90.67 |
| OA (%) | 96.97 | | | | |
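The PA, UA, and OA rows of the confusion matrix above can be recomputed from the point counts. The sketch below assumes the usual definitions: PA is the diagonal count divided by the reference (row) total, UA is the diagonal count divided by the prediction (column) total, and OA is the sum of the diagonal divided by the grand total. The function name `accuracy_metrics` is hypothetical. The results agree with the tabulated percentages to within about 0.01 percentage points.

```python
classes = ["Terrain", "Building", "Tree", "Power Line", "Others"]

# Rows are reference classes, columns are predicted classes
# (point counts copied from the confusion matrix above).
confusion = [
    [2301098, 37, 792, 0, 1142],
    [1396, 917961, 49115, 7, 3024],
    [5987, 31905, 1018900, 458, 19167],
    [0, 129, 697, 10873, 0],
    [12428, 2795, 10582, 3, 226807],
]


def accuracy_metrics(matrix):
    """Return (PA list, UA list, OA) in percent for a square confusion matrix."""
    n = len(matrix)
    row_totals = [sum(row) for row in matrix]                      # reference totals
    col_totals = [sum(matrix[i][j] for i in range(n)) for j in range(n)]  # prediction totals
    correct = [matrix[i][i] for i in range(n)]                     # diagonal counts
    pa = [100.0 * correct[i] / row_totals[i] for i in range(n)]
    ua = [100.0 * correct[i] / col_totals[i] for i in range(n)]
    oa = 100.0 * sum(correct) / sum(row_totals)
    return pa, ua, oa


pa, ua, oa = accuracy_metrics(confusion)
for name, p, u in zip(classes, pa, ua):
    print(f"{name:<10} PA = {p:6.2f}%  UA = {u:6.2f}%")
print(f"OA = {oa:.2f}%")
```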

| Reference (rows) vs. Prediction (columns) | Terrain | Building | Tree | Power Line | Others |
|---|---|---|---|---|---|
| Terrain | 2,241,724 | 0 | 5 | 0 | 61,340 |
| Building | 0 | 935,627 | 27,062 | 89 | 8725 |
| Tree | 11 | 31,467 | 1,008,098 | 2002 | 34,839 |
| Power Line | 0 | 0 | 71 | 11,628 | 0 |
| Others | 11,044 | 2319 | 2435 | 0 | 236,817 |
| PA (%) | 97.34 | 96.31 | 93.65 | 99.39 | 93.75 |
| UA (%) | 99.51 | 96.51 | 97.15 | 84.75 | 69.30 |
| OA (%) | 96.07 | | | | |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ao, Z.; Su, Y.; Li, W.; Guo, Q.; Zhang, J. One-Class Classification of Airborne LiDAR Data in Urban Areas Using a Presence and Background Learning Algorithm. Remote Sens. 2017, 9, 1001. https://doi.org/10.3390/rs9101001
