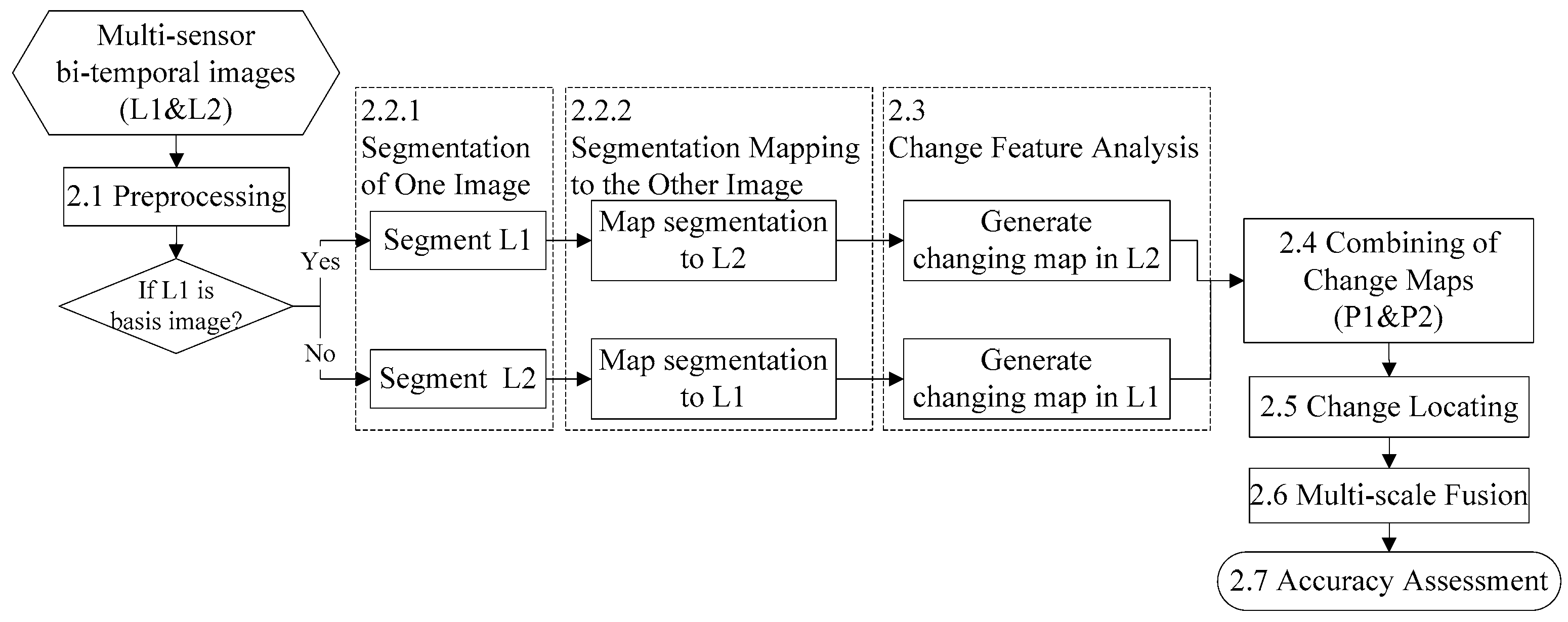

3.1. The First Study Area

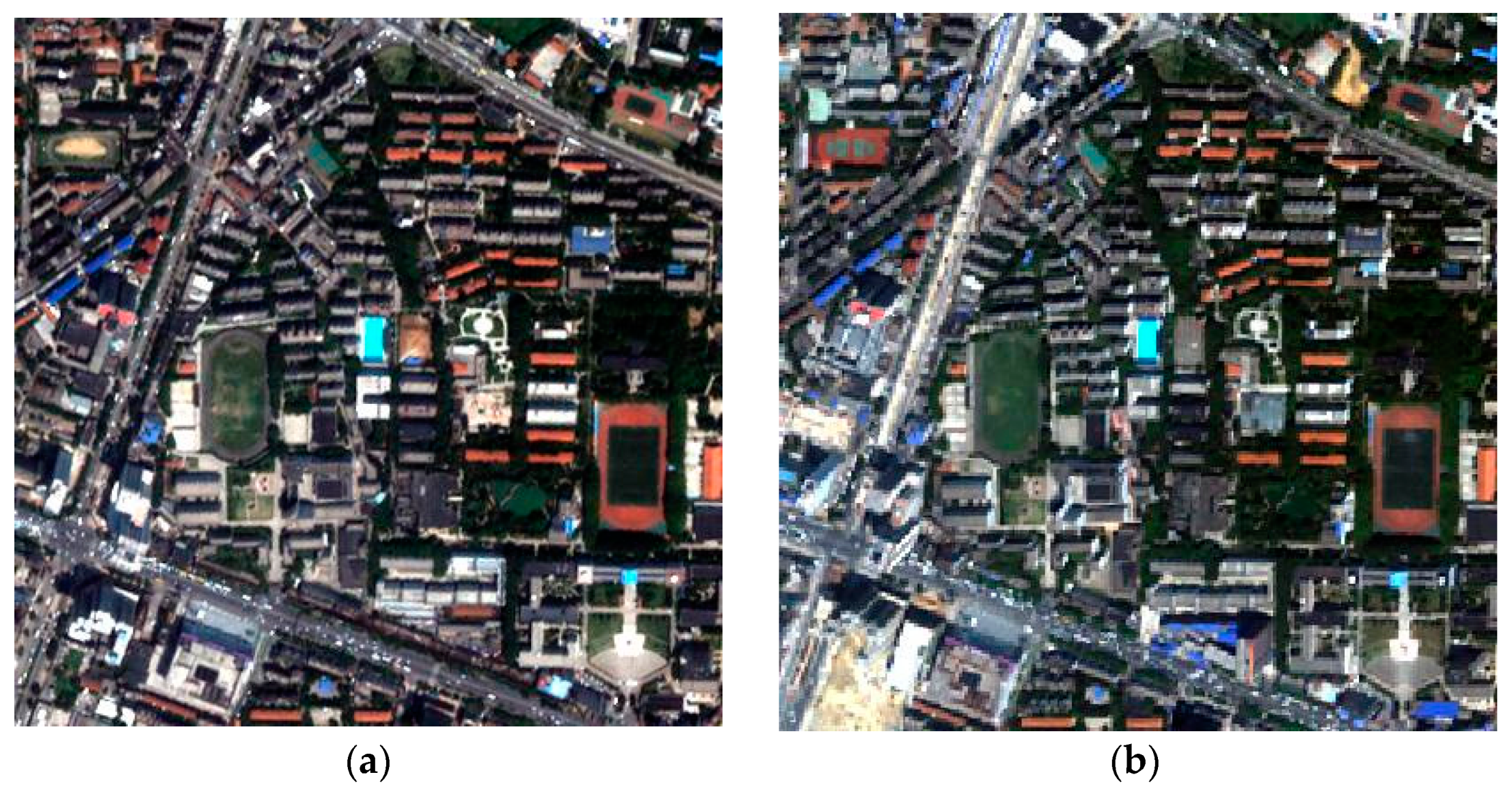

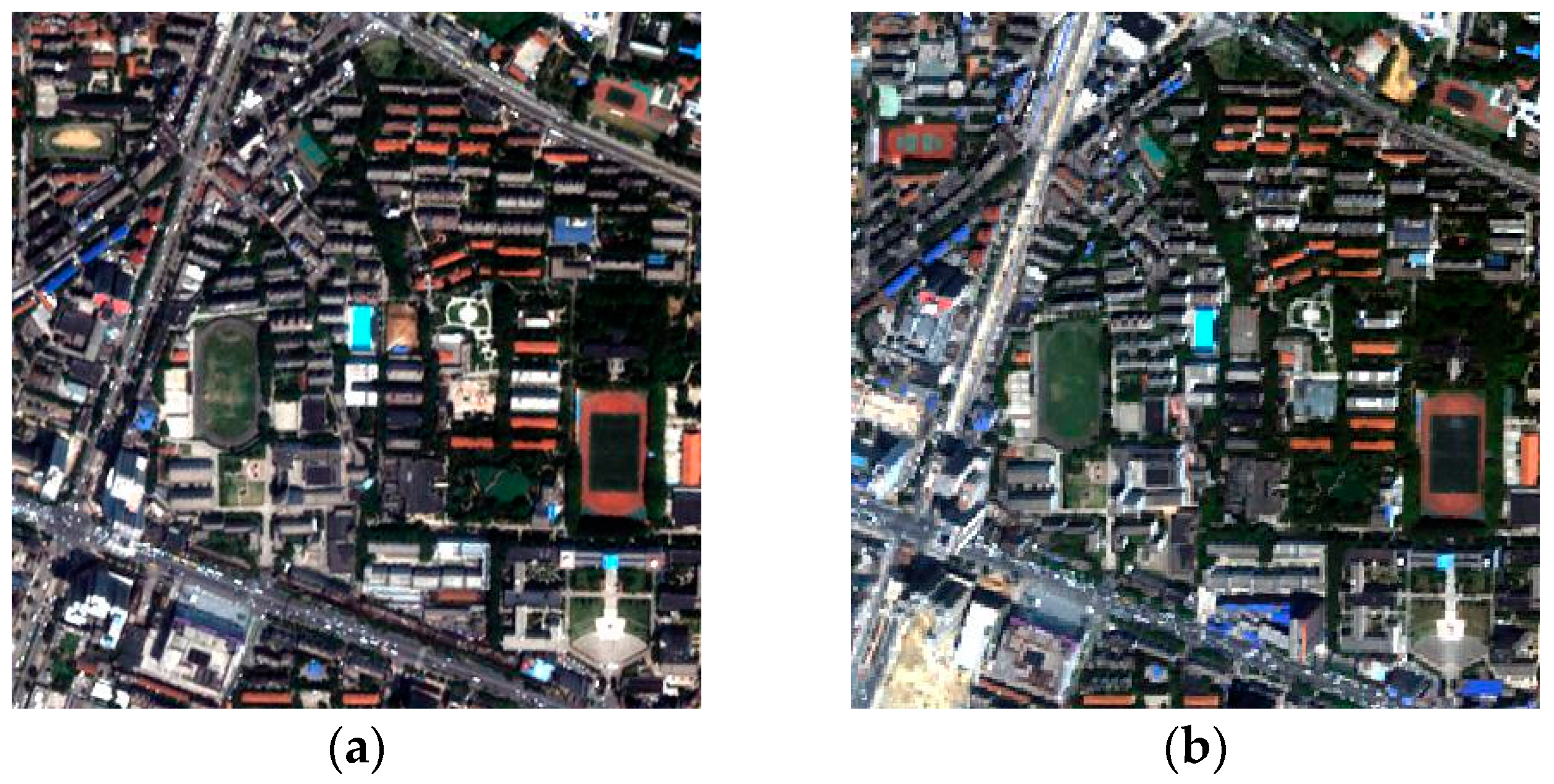

The first study area covers the campus of Wuhan University in Hubei province, China. The bi-temporal images were acquired by the QuickBird satellite in April 2005 (L1) and the IKONOS satellite in July 2009 (L2). To preserve the spectral information, the MS images were used in the experiments. Although both images have four bands, their spectral and spatial characteristics differ because they were acquired by different sensors (Table 1). Either L1 or L2 can be used as the basis image in the image resampling preprocessing.

1. L1 as the basis image

With L1 as the basis image, L2 was interpolated to the spatial resolution of L1. Figure 3 shows the bi-temporal images after the interpolation, which are both 400 × 400 pixels. In order to avoid the effects of vegetation phenology and solar elevation, the vegetation and shadow were extracted and masked out.

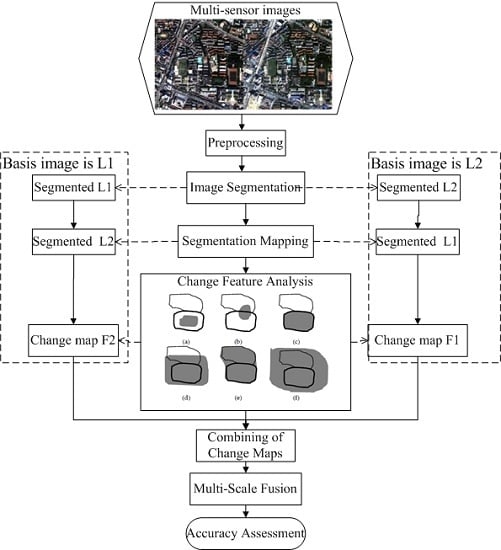

By mapping the segmentation of L1 to L2, a change map was generated by calculating the change probability (Equation (7)) for each object. The other change map was obtained by exchanging the order of the two images. The characteristics of the combined change map varied with the ratio used to combine these two maps.
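The ratio-based combination can be illustrated with a short sketch. Equation (8) is not reproduced in this section, so the code assumes a simple convex combination, P = w · P2 + (1 − w) · P1. The function name `combine_change_maps`, the weight `w`, and the toy values are hypothetical and only serve the illustration.

```python
def combine_change_maps(p1, p2, w):
    """Blend two change-probability maps given as lists of rows.

    Assumption: the convex combination w * p2 + (1 - w) * p1 stands in for
    Equation (8), which is not shown in this section.
    """
    if not 0.0 <= w <= 1.0:
        raise ValueError("w must lie in [0, 1]")
    return [
        [w * b + (1.0 - w) * a for a, b in zip(row1, row2)]
        for row1, row2 in zip(p1, p2)
    ]


# Toy 2 x 2 maps; the values are illustrative, not taken from the experiments.
p1 = [[0.2, 0.8], [0.4, 0.0]]
p2 = [[0.6, 1.0], [0.0, 0.4]]
combined = combine_change_maps(p1, p2, w=0.75)
```

Under this assumption, `w = 0` returns the first map unchanged and `w = 1` returns the second, so sweeping `w` reproduces the kind of ratio comparison described in the text.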

To determine the change locations, it is crucial to discriminate the changed from the unchanged areas in the combined change map. The two threshold selection techniques and k-means clustering (k = 2), introduced in Section 2.5, were used to analyze the combined change map. The results of the three methods are shown in Table 2. In this table, the left, middle, and right parts show the false alarms, missed alarms, and overall errors, respectively, of the three methods with different combining ratios of the change maps. The overall errors of the three methods are similar. The k-means clustering (k = 2) obtains the smallest number of errors, and the threshold selection by clustering gray levels of boundary method performs slightly better than Otsu’s thresholding method. Moreover, as the combination ratio of the change maps increases, the overall errors of each method decrease. This is because, in Equation (8), P2 and P1 represent the change probabilities of L2 and L1, which were mapped from the segmentations of L1 and L2, respectively. As L2 was interpolated to the spatial resolution of L1, the segmentation of L1 was more accurate than the segmentation of L2. Therefore, a larger weight of P2 leads to a higher accuracy of the change feature analysis.
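As a rough illustration of two of the three change locating methods, the sketch below binarizes a list of change probabilities with Otsu's threshold and with one-dimensional k-means (k = 2). It is a minimal standard-library version written for this example, not the authors' MATLAB implementation. The function names and sample values are hypothetical, and the boundary gray-level clustering method is not covered.

```python
def otsu_threshold(values):
    """Otsu's threshold: pick the cut that maximises the between-class variance."""
    data = sorted(values)
    n = len(data)
    total = sum(data)
    best_t, best_var = data[0], -1.0
    cum = 0.0
    for i in range(1, n):
        cum += data[i - 1]          # cum is always the sum of data[:i]
        if data[i] == data[i - 1]:
            continue                # a cut must separate distinct values
        w0, w1 = i / n, (n - i) / n
        m0, m1 = cum / i, (total - cum) / (n - i)
        var = w0 * w1 * (m0 - m1) ** 2
        if var > best_var:
            best_var, best_t = var, (data[i - 1] + data[i]) / 2.0
    return best_t


def kmeans_two_threshold(values, iters=100):
    """1-D k-means with k = 2; returns the midpoint between the two centres."""
    lo, hi = min(values), max(values)
    if lo == hi:
        return lo
    for _ in range(iters):
        mid = (lo + hi) / 2.0       # nearest-centre assignment is a cut at mid
        low = [v for v in values if v <= mid]
        high = [v for v in values if v > mid]
        new_lo, new_hi = sum(low) / len(low), sum(high) / len(high)
        if new_lo == lo and new_hi == hi:
            break                   # centres stopped moving
        lo, hi = new_lo, new_hi
    return (lo + hi) / 2.0


# Illustrative change probabilities, not values from Table 2.
probs = [0.05, 0.10, 0.15, 0.20, 0.80, 0.90]
t_otsu = otsu_threshold(probs)
t_kmeans = kmeans_two_threshold(probs)
labels_otsu = [int(p > t_otsu) for p in probs]
labels_kmeans = [int(p > t_kmeans) for p in probs]
```

On well-separated values such as these, both methods place the cut in the gap between the two groups and yield the same labels. Differences of the kind reported in the text would only appear on less clearly bimodal data.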

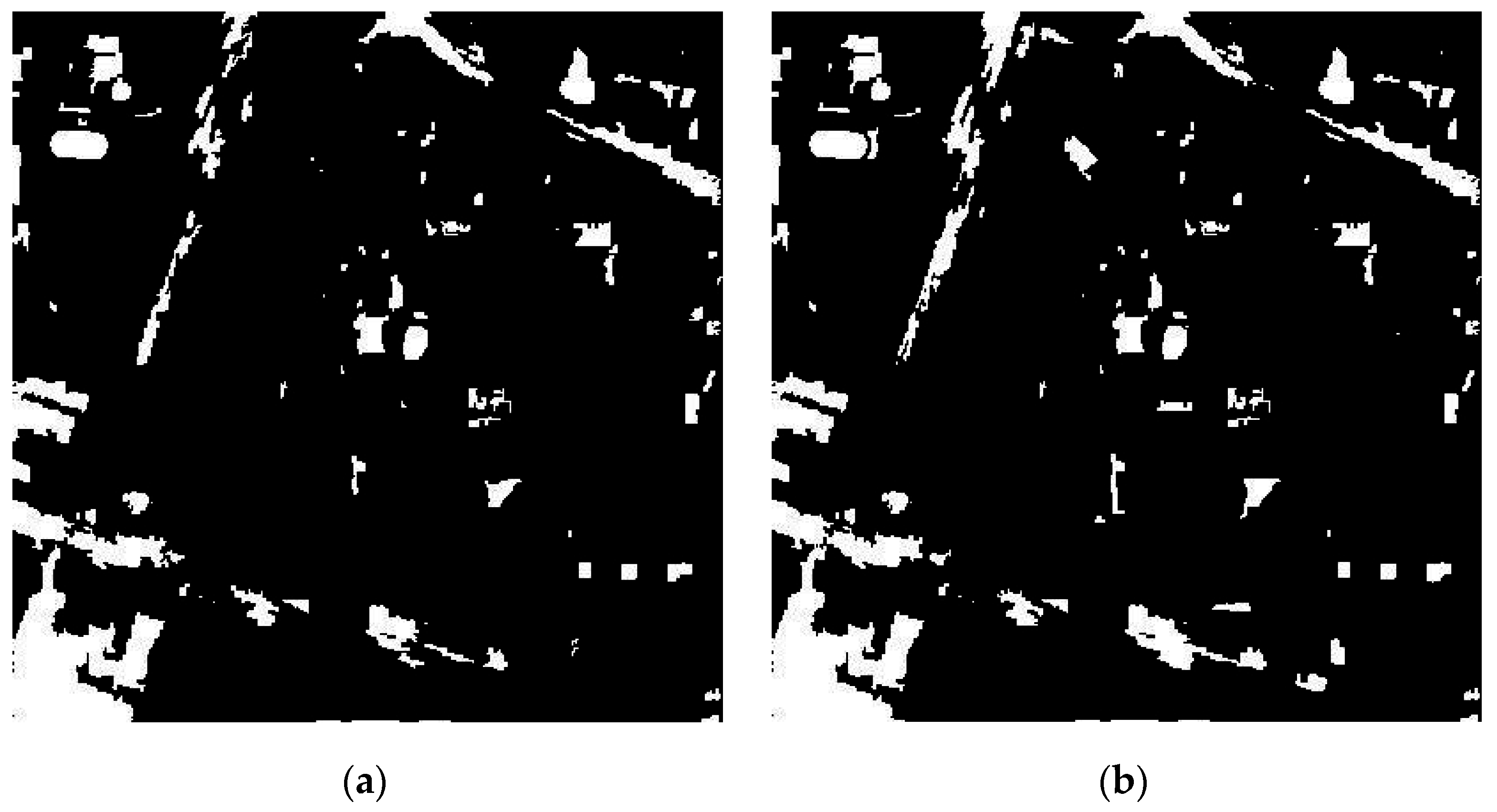

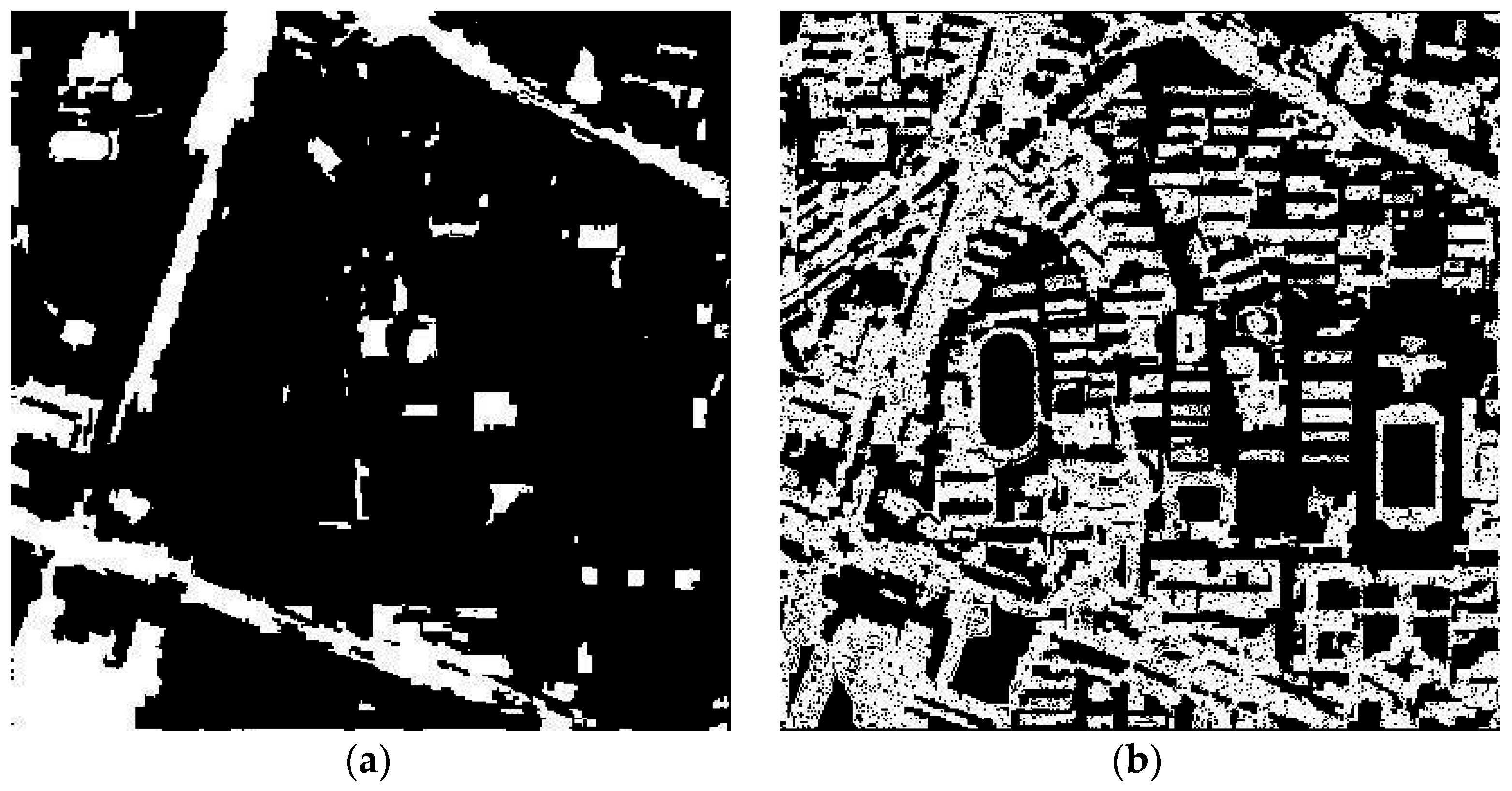

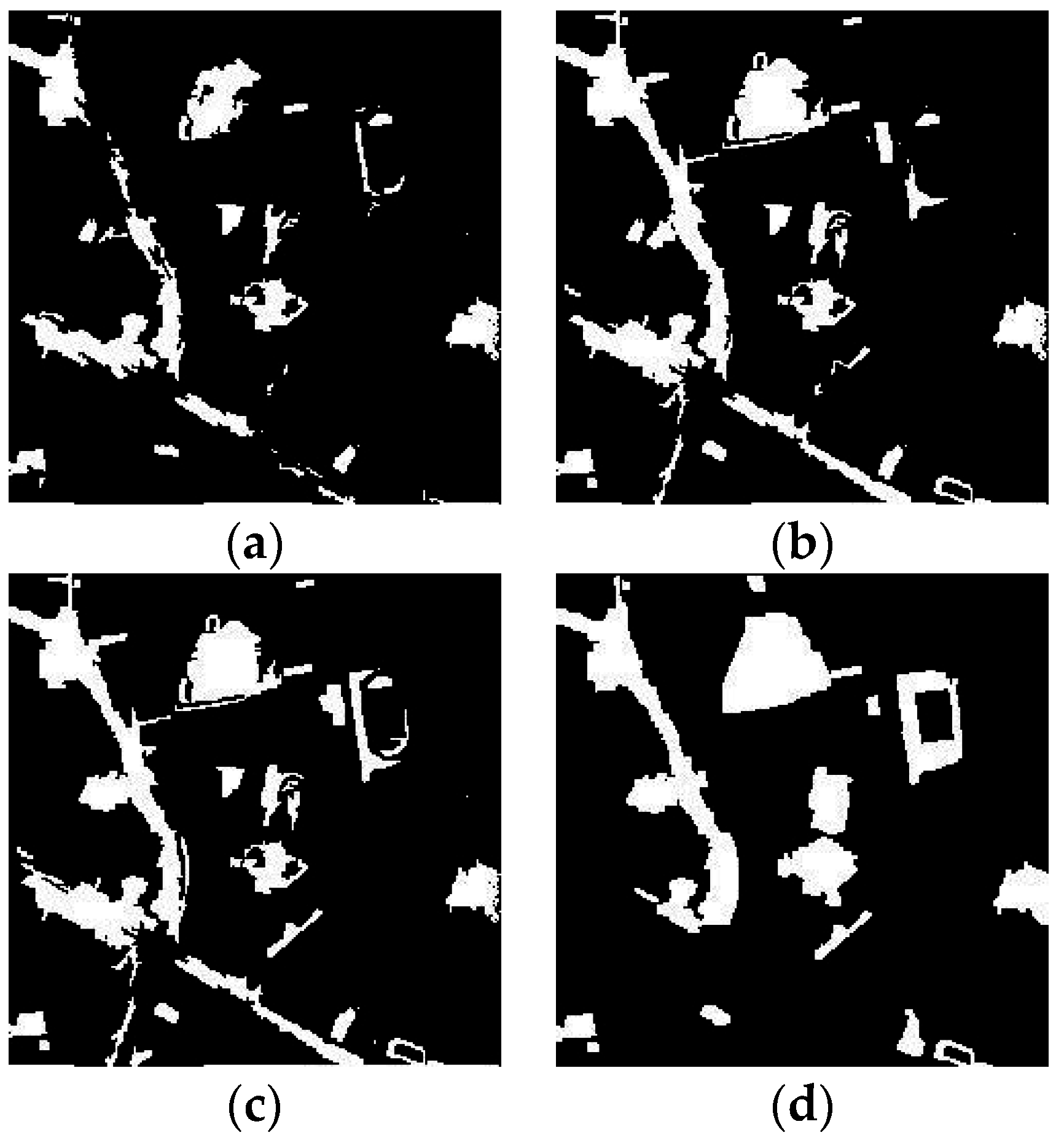

The results are visually compared in Figure 4, in which the white and black regions represent the changed and unchanged areas, respectively. The results of the three methods are similar, but k-means clustering (k = 2) produces slightly more false alarms than the other two methods and fewer missed alarms, especially in the road areas.
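The error measures used in the tables can be made concrete with a small sketch. It assumes the usual convention that a false alarm is a pixel detected as changed but unchanged in the reference, a missed alarm is the reverse, and the overall error is their sum. The section does not define these terms explicitly, so this convention, the function name `count_errors`, and the toy maps are assumptions.

```python
def count_errors(detected, reference):
    """Return (false_alarms, missed_alarms, overall_errors) for two binary maps.

    Assumption: overall errors = false alarms + missed alarms.
    """
    false_alarms = missed_alarms = 0
    for det_row, ref_row in zip(detected, reference):
        for d, r in zip(det_row, ref_row):
            if d and not r:
                false_alarms += 1    # detected as changed, unchanged in reference
            elif r and not d:
                missed_alarms += 1   # changed in reference, not detected
    return false_alarms, missed_alarms, false_alarms + missed_alarms


# Toy maps (1 = changed, 0 = unchanged); not data from the experiments.
detected = [[1, 0, 1],
            [0, 0, 1]]
reference = [[1, 1, 0],
             [0, 0, 1]]
fa, ma, oe = count_errors(detected, reference)
```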

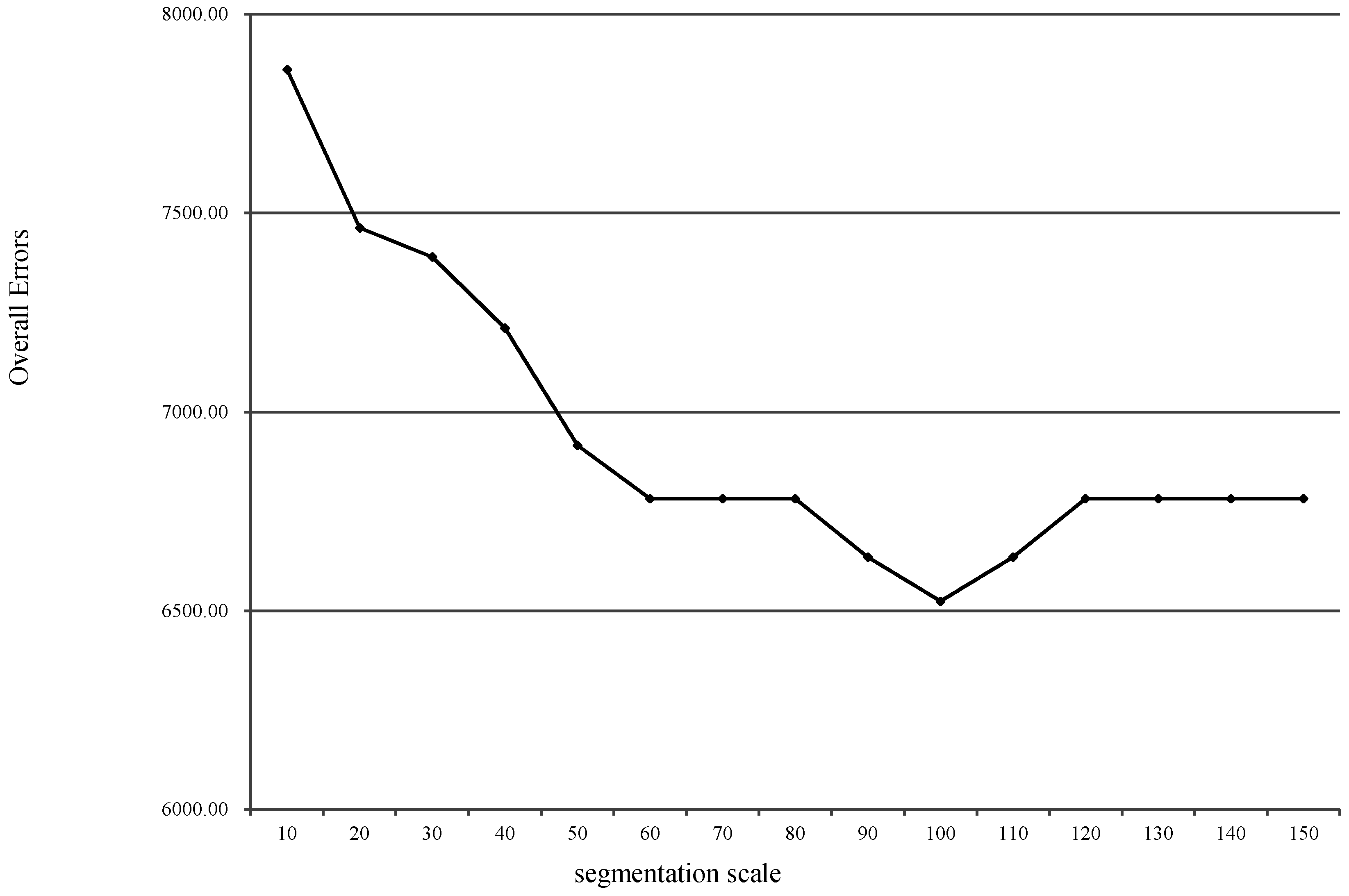

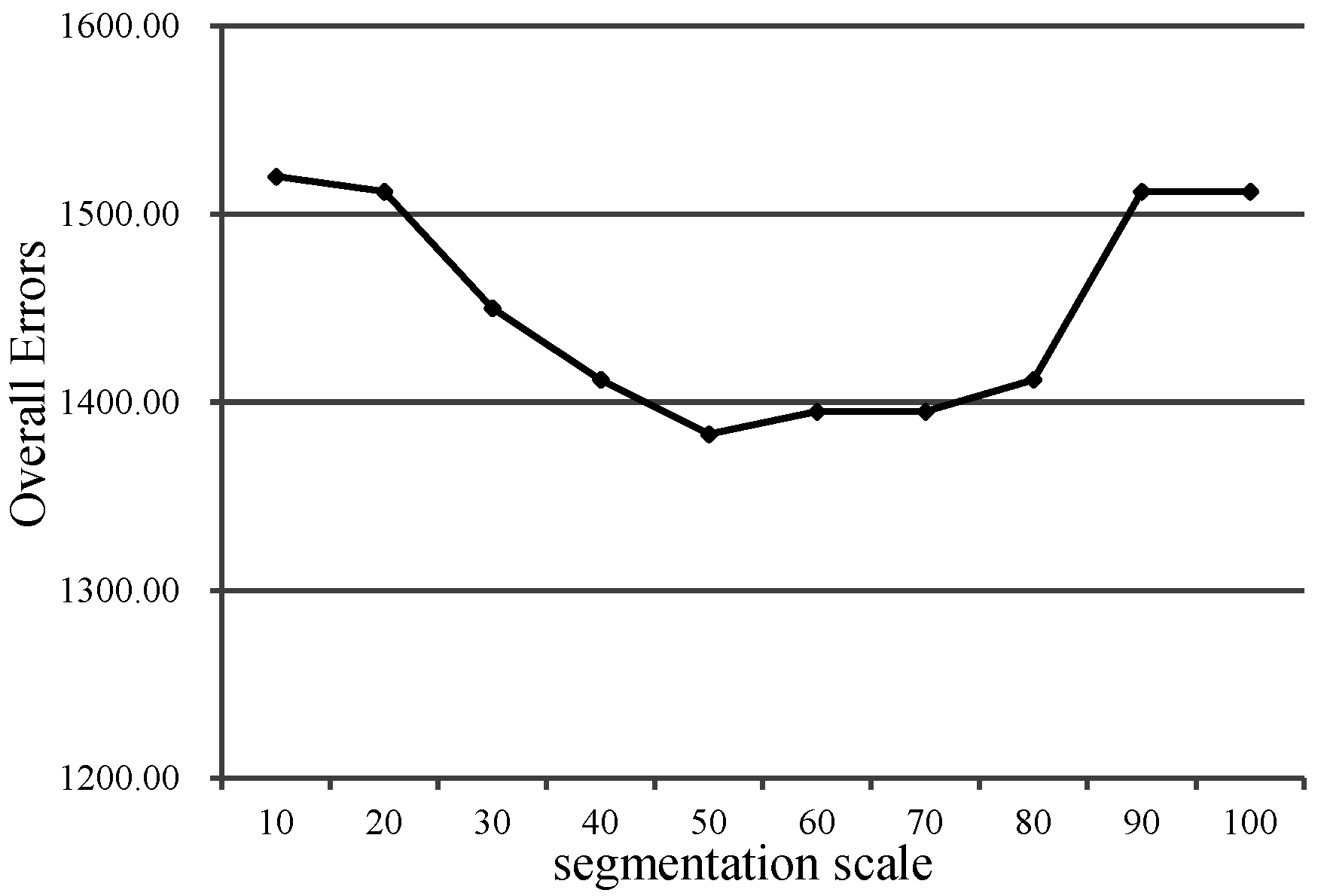

According to the spatial resolution and the objects’ sizes in the bi-temporal images after preprocessing, the scale interval and step size were set to [10, 150] and 10, respectively. The results of the change feature analysis differ with the segmentation scale (Figure 5), and the optimal scale is around 100. Considering the multi-resolution characteristics of ground objects, multi-scale fusion is applied in the proposed method and is realized by voting among the single-scale binary change maps.

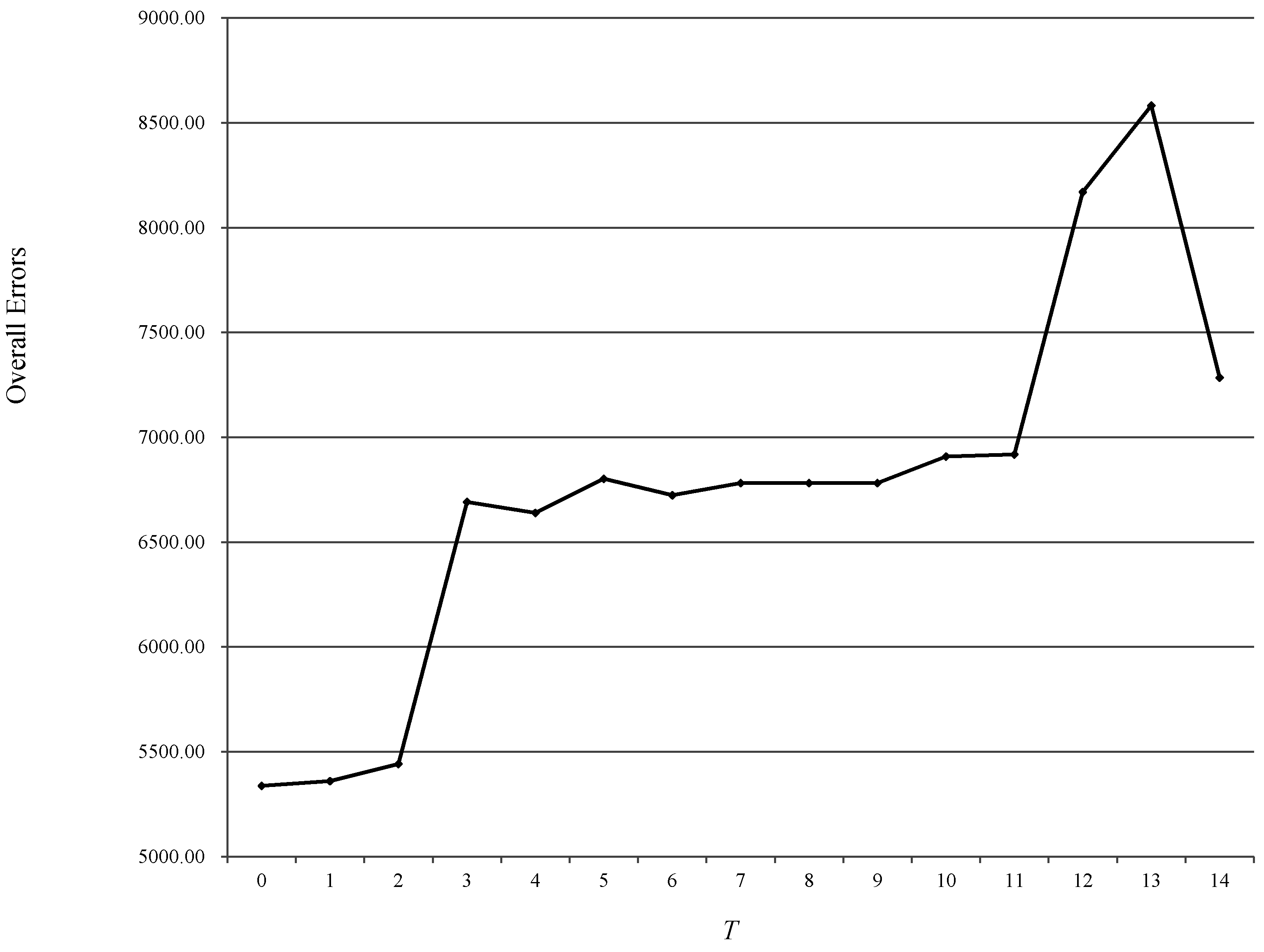

Figure 6 shows the accuracy of the k-means clustering (k = 2) after the multi-scale fusion. The overall errors are the lowest when Tf in Equation (13) is 0, which means that the optimal multi-scale fusion is the sum of the changes in all of the single-scale change detection maps.
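The voting-based fusion can be sketched as follows. Equation (13) is not shown in this section, so the code assumes that a pixel is labelled as changed when the number of single-scale maps voting "changed" is strictly greater than Tf. With Tf = 0 this reduces to the union of all single-scale changes, which matches the description above. The function name `fuse_by_voting` and the toy maps are hypothetical.

```python
def fuse_by_voting(binary_maps, t_f=0):
    """Fuse single-scale binary change maps by per-pixel voting.

    Assumption: a pixel is 'changed' when its vote count exceeds t_f
    (strict inequality), standing in for Equation (13).
    """
    rows, cols = len(binary_maps[0]), len(binary_maps[0][0])
    fused = []
    for r in range(rows):
        fused_row = []
        for c in range(cols):
            votes = sum(1 for m in binary_maps if m[r][c])
            fused_row.append(1 if votes > t_f else 0)
        fused.append(fused_row)
    return fused


# Segmentation scales for the interval [10, 150] with a step size of 10.
scales = list(range(10, 151, 10))

# Three toy single-scale maps (1 = changed); not data from the experiments.
maps = [
    [[1, 0], [0, 0]],
    [[1, 1], [0, 0]],
    [[1, 0], [0, 0]],
]
union = fuse_by_voting(maps, t_f=0)      # changed if any scale votes "changed"
stricter = fuse_by_voting(maps, t_f=1)   # changed only with at least two votes
```

A lower Tf keeps more detected changes, which tends to trade additional false alarms for fewer missed alarms.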

The accuracies of both the single-scale and multi-scale versions of the proposed method are shown in Table 3. As the multi-scale fusion integrates all the single-scale change maps, there are more false alarms but fewer missed alarms than for the optimal single-scale method. In terms of overall errors, the multi-scale version is more accurate.

Moreover, to validate the effectiveness of the proposed change detection method for multi-sensor MS imagery, it was compared with the method proposed in [35]. In Figure 7, the white and black regions represent the changed and unchanged areas, respectively. It can be seen that the proposed method effectively decreases the false alarms and suppresses the salt-and-pepper noise in the changed areas. As there are great differences in the visual results, the quantitative assessment and comparison are omitted. The time costs of the two methods were both less than two minutes using MATLAB software (MathWorks, Natick, MA, USA) on a personal computer with a 1.80 GHz CPU and 8.00 GB of RAM.

2. L2 as the basis image

In this experiment, L2 was used as the basis image in the preprocessing. Having a higher spatial resolution, L1 was degraded to the same resolution as L2.

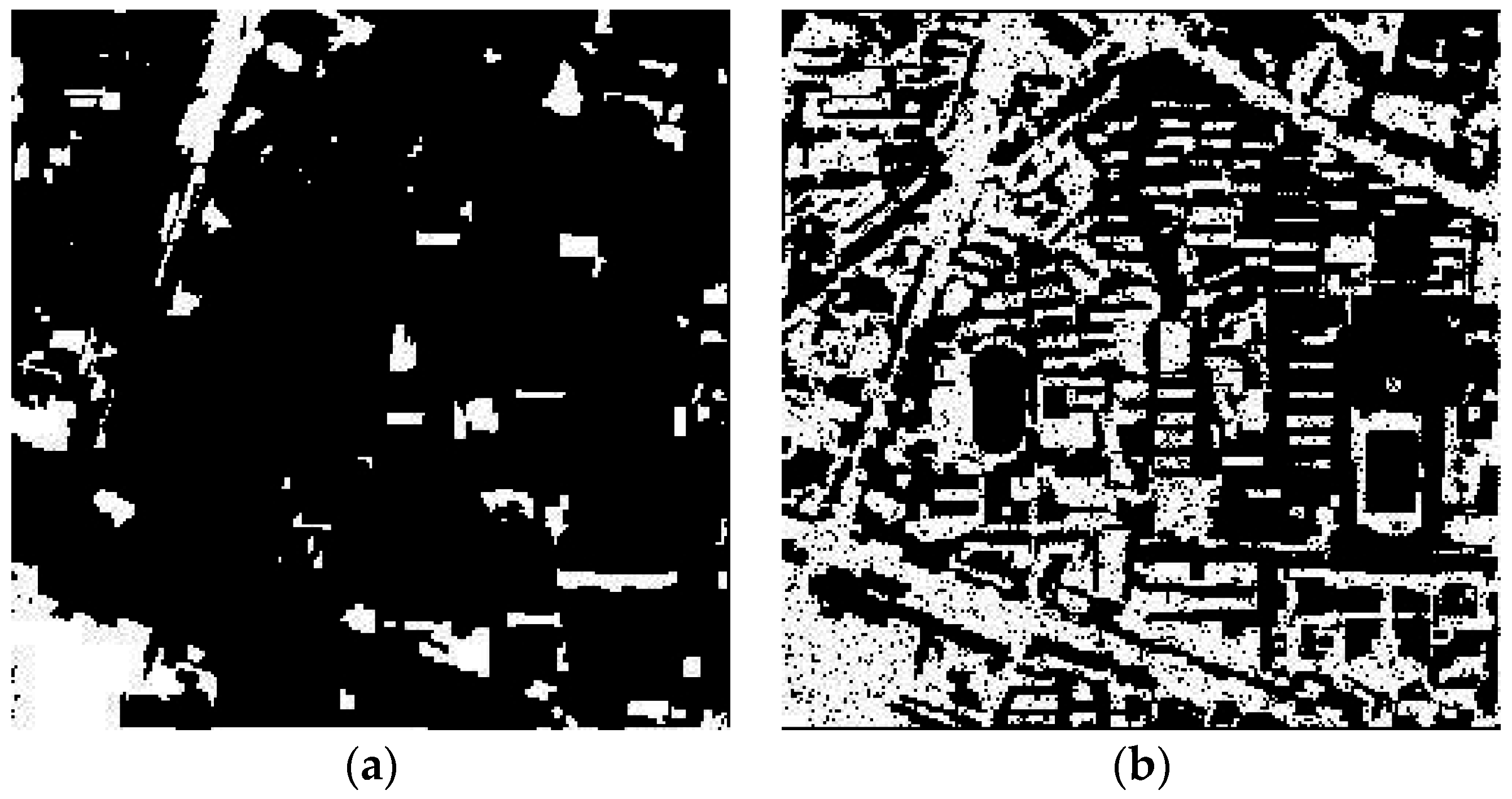

Figure 8 shows the bi-temporal images after the down-sampling, which are both 240 × 240 pixels. The vegetation and shadow were again masked out.
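The resampling step can be illustrated with a minimal sketch that maps an image grid to a target size, for example from 400 × 400 to 240 × 240 pixels. The section does not state which interpolation kernel was used, so nearest-neighbour sampling is an assumption chosen for brevity, and the function name `resample_nearest` is hypothetical.

```python
def resample_nearest(image, out_rows, out_cols):
    """Resample a 2-D grid (list of rows) to out_rows x out_cols.

    Assumption: nearest-neighbour sampling at output pixel centres; the
    kernel actually used in the preprocessing is not specified here.
    """
    in_rows, in_cols = len(image), len(image[0])
    row_idx = [min(in_rows - 1, int((r + 0.5) * in_rows / out_rows))
               for r in range(out_rows)]
    col_idx = [min(in_cols - 1, int((c + 0.5) * in_cols / out_cols))
               for c in range(out_cols)]
    return [[image[i][j] for j in col_idx] for i in row_idx]


# Down-sample a synthetic 400 x 400 band to 240 x 240 pixels.
band = [[(r + c) % 256 for c in range(400)] for r in range(400)]
degraded = resample_nearest(band, 240, 240)

# Up-sampling a 2 x 2 grid to 4 x 4 replicates each pixel into a 2 x 2 block.
upsampled = resample_nearest([[1, 2], [3, 4]], 4, 4)
```

The same function covers both directions of the preprocessing: interpolating the coarser image up to the finer grid, or degrading the finer image to the coarser grid.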

In the analysis of the combined change map, the two threshold selection methods and k-means clustering (k = 2) were again used. The results are shown in Table 4. In this table, the left, middle, and right parts show the false alarms, missed alarms, and overall errors, respectively, of the three methods with an increasing ratio of P2. The overall errors of the three methods are again similar. The k-means clustering (k = 2) obtains the fewest errors, and the threshold selection by clustering gray levels of boundary method performs slightly better than Otsu’s thresholding method.

Figure 9 shows a visual comparison of the results, in which the white and black regions represent the changed and unchanged areas, respectively. The results of the three methods are again similar, and the k-means clustering (k = 2) obtains slightly fewer missed alarms than the two threshold selection methods, as in the experiment with L1 as the basis image.

However, it is worth noting that the overall errors increase as the combination ratio of P1 decreases. This is probably because the down-sampling of L1 resulted in the loss of some valuable image information. As a result, the change map of P1, which was generated by the change feature analysis of L1 mapped from the segmentation of L2, was more accurate than the other change map. Therefore, a larger weight of P1 in the combined change map leads to a higher accuracy. From the results of these experiments, we can conclude that the accuracy of the change analysis is improved by increasing the weight of the change map that is generated by mapping the segmentation of the basis image.

According to the spatial resolution and the objects’ sizes in the bi-temporal images after preprocessing, the scale interval and step size were set to [10, 100] and 10, respectively. Figure 10 shows the results of the proposed single-scale method using different segmentation scales. The optimal scale is 50. As can be seen in Figure 6, the overall errors are the lowest when Tf in Equation (13) is 0. In addition, Table 5 shows the improvement of the multi-scale fusion with Tf equal to 0, which was realized by k-means clustering (k = 2).

In Figure 11, the proposed method is compared with the method proposed in [35]. The white and black regions represent the changed and unchanged areas, respectively. It can be seen that the proposed method is better able to detect the changes in an urban area with multi-sensor MS images. It suppresses the missed alarms in the changed areas and decreases the false alarms. As there is a significant difference in the visual results, the quantitative assessment and comparison are omitted. The time costs of the two methods were both about one minute using MATLAB software (MathWorks, Natick, MA, USA) on a personal computer with a 1.80 GHz CPU and 8.00 GB of RAM.

A comparison of the two sets of experiments in the first study area shows that the accuracy is higher with L2 as the basis image. This is probably due to the lower spatial resolution of the basis image.

3.2. The Second Study Area

To further verify the proposed method, it was also applied to images of another area in the south of Wuhan, Hubei province, China. The bi-temporal images were acquired by QuickBird in April 2002 (L1) and by IKONOS in July 2009 (L2). L2, with the lower resolution, was regarded as the basis image in the preprocessing, and L1 was degraded by down-sampling. The images after preprocessing, with a size of 240 × 240 pixels, are shown in Figure 12. The vegetation and shadow were again masked out to avoid the effects of vegetation phenology and solar elevation.

As the spatial resolutions were the same and the ground objects of the urban area were similar to those of the first study area, the segmentation scale was again set to 50. The results of the two threshold selection methods and k-means clustering (k = 2) are compared in Table 6. In this table, the left, middle, and right parts show the false alarms, missed alarms, and overall errors, respectively, of the three methods with a decreasing ratio of P1. The accuracies of the three change locating methods are again similar. K-means clustering (k = 2) performs the best, and the threshold selection by clustering gray levels of boundary method performs slightly better than Otsu’s thresholding method, as in the first study area. As with the results in the first study area, the accuracy of the proposed method is improved by increasing the weight of P1, which is generated by mapping the segmentation of the basis image L2. Therefore, it can be concluded that the accuracy of the proposed method increases when the change map mapped from the segmentation of the basis image is given the larger weight.

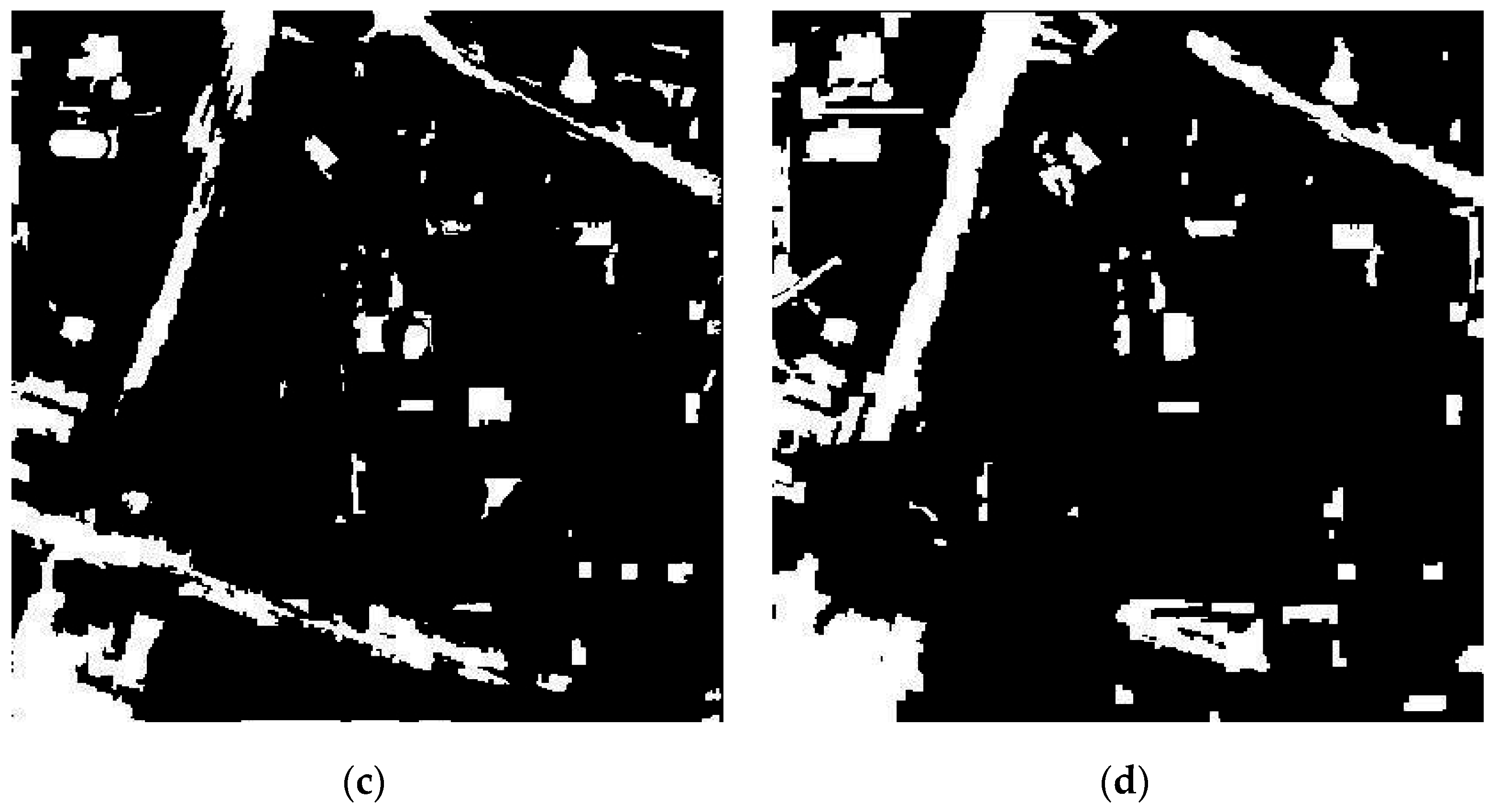

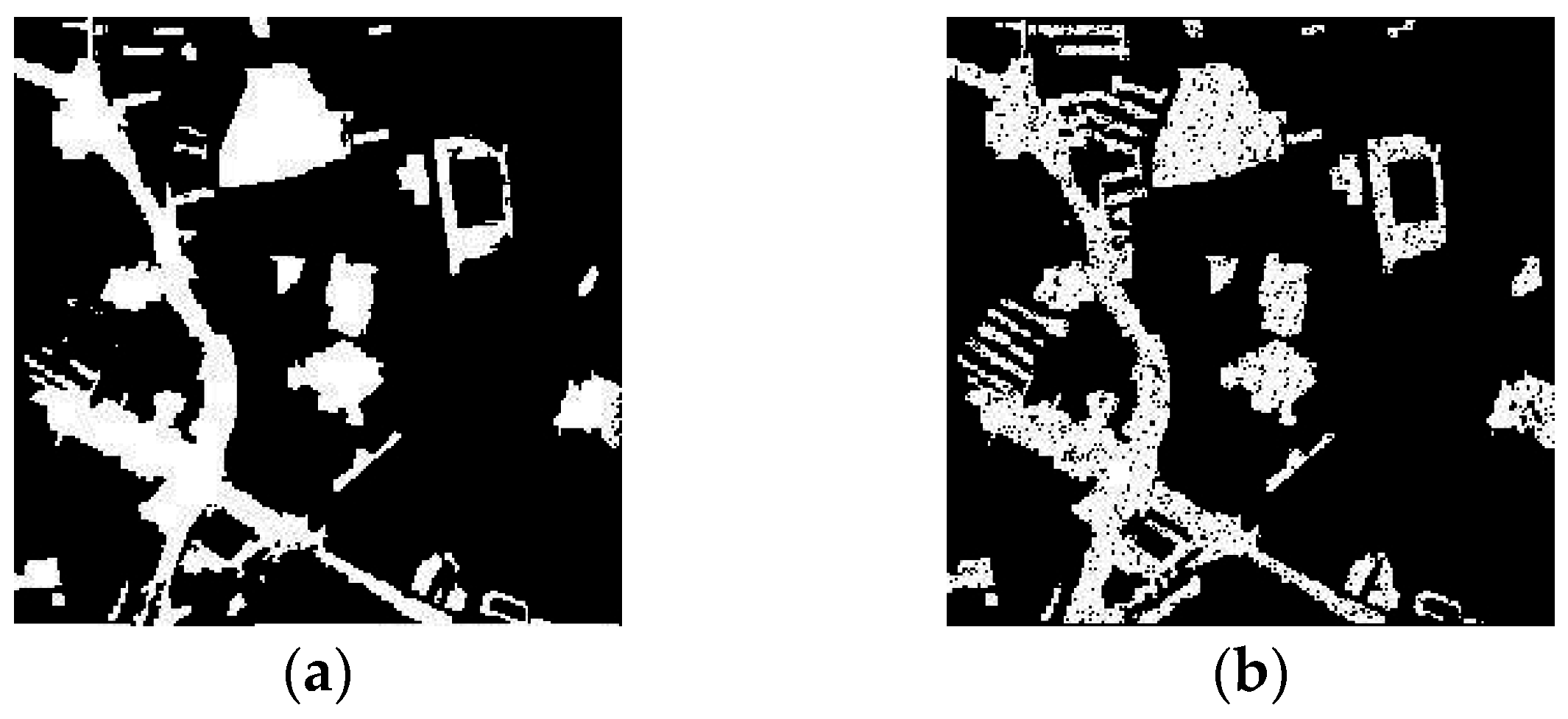

The binary change maps of the three methods are shown in Figure 13, in which the white and black regions represent the changed and unchanged areas, respectively. Compared with the reference image, the results of the three methods are similar, and the k-means clustering (k = 2) obtains the fewest missed alarms.

As can be seen in Figure 6, the overall errors after the multi-scale fusion are the lowest when Tf in Equation (13) is 0. Table 7 shows the improvement of the multi-scale fusion with Tf equal to 0, which was realized by k-means clustering (k = 2). It can be concluded that the proposed multi-scale method suppresses the missed alarms and keeps the false alarms to an acceptable level.

In Figure 14, the white and black regions represent the changed and unchanged areas, respectively. Compared with the method proposed in [35], the proposed method is shown to be effective in detecting changes in an urban area using multi-sensor MS images. It can effectively decrease the missed alarms in the changed areas while removing the false alarms. As there is a great difference in the visual results, the quantitative assessment and comparison are omitted. The time costs of the two methods were both about one minute using MATLAB software (MathWorks, Natick, MA, USA) on a personal computer with a 1.80 GHz CPU and 8.00 GB of RAM.