What is the Point? Evaluating the Structure, Color, and Semantic Traits of Computer Vision Point Clouds of Vegetation

Abstract

1. Introduction

1.1. Research Objectives and Approach

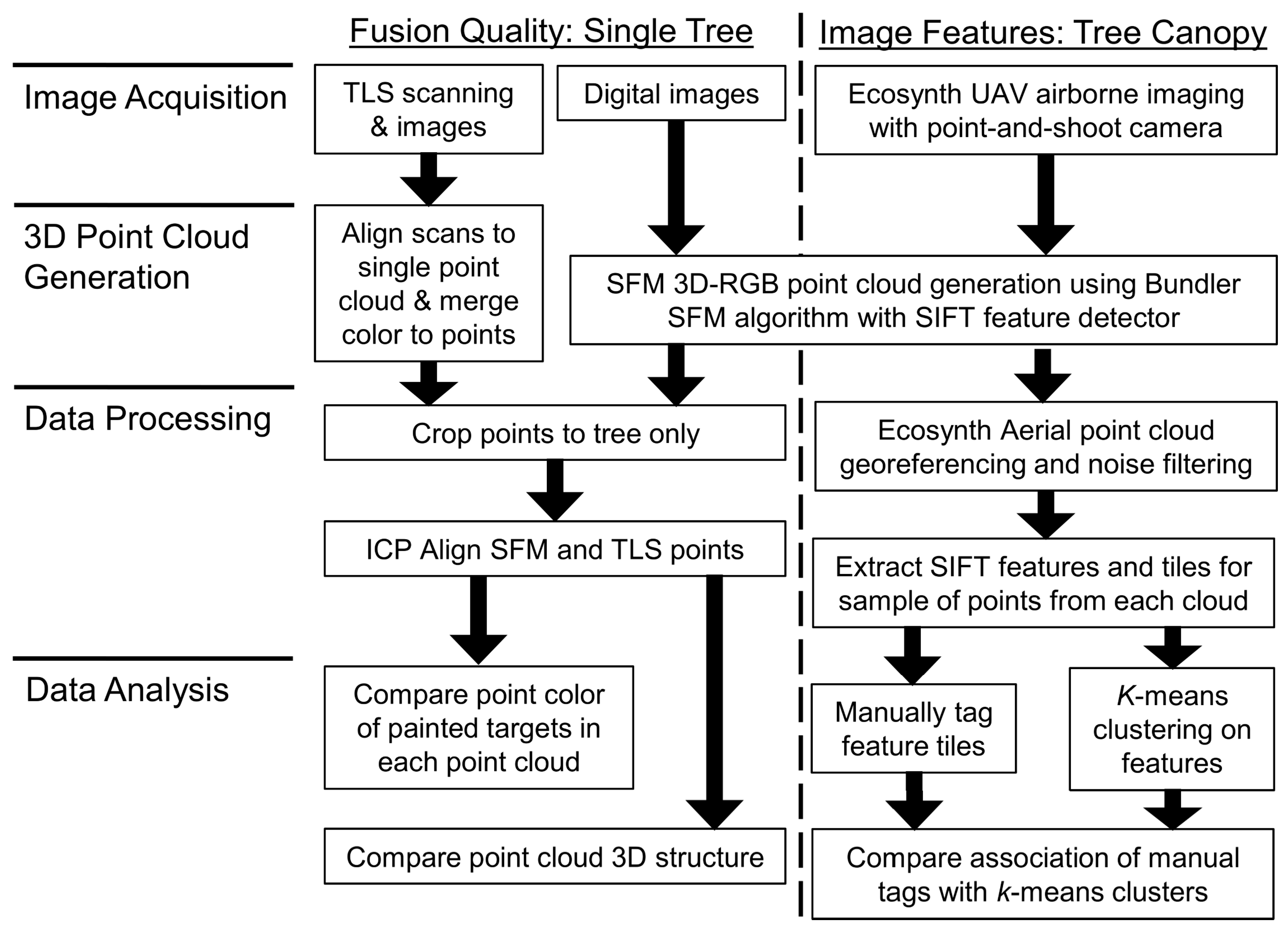

2. Materials and Methods

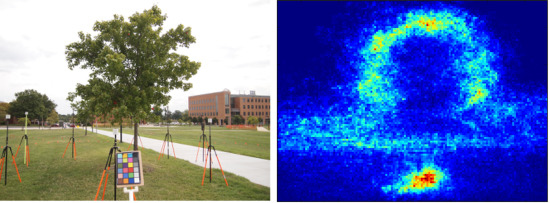

2.1. Evaluating SFM 3D-RGB Fusion Quality

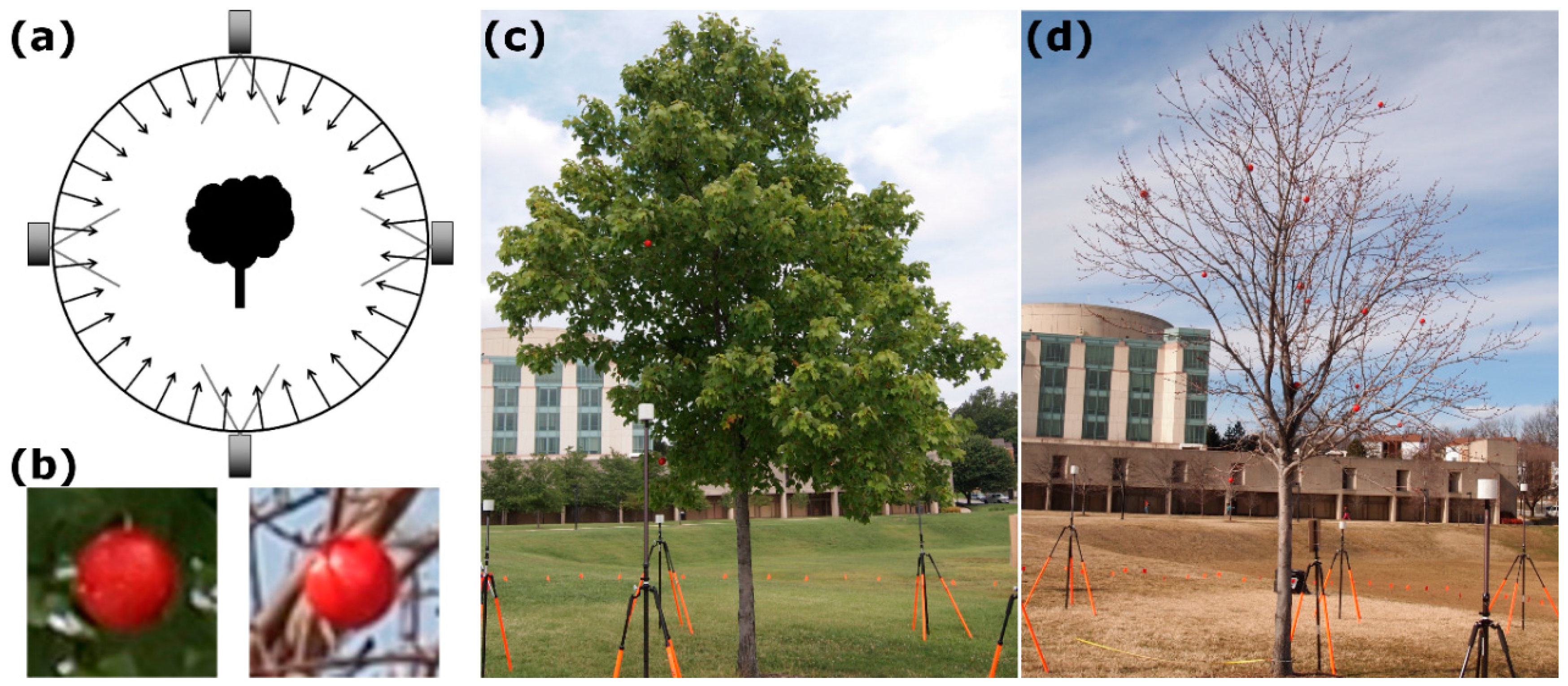

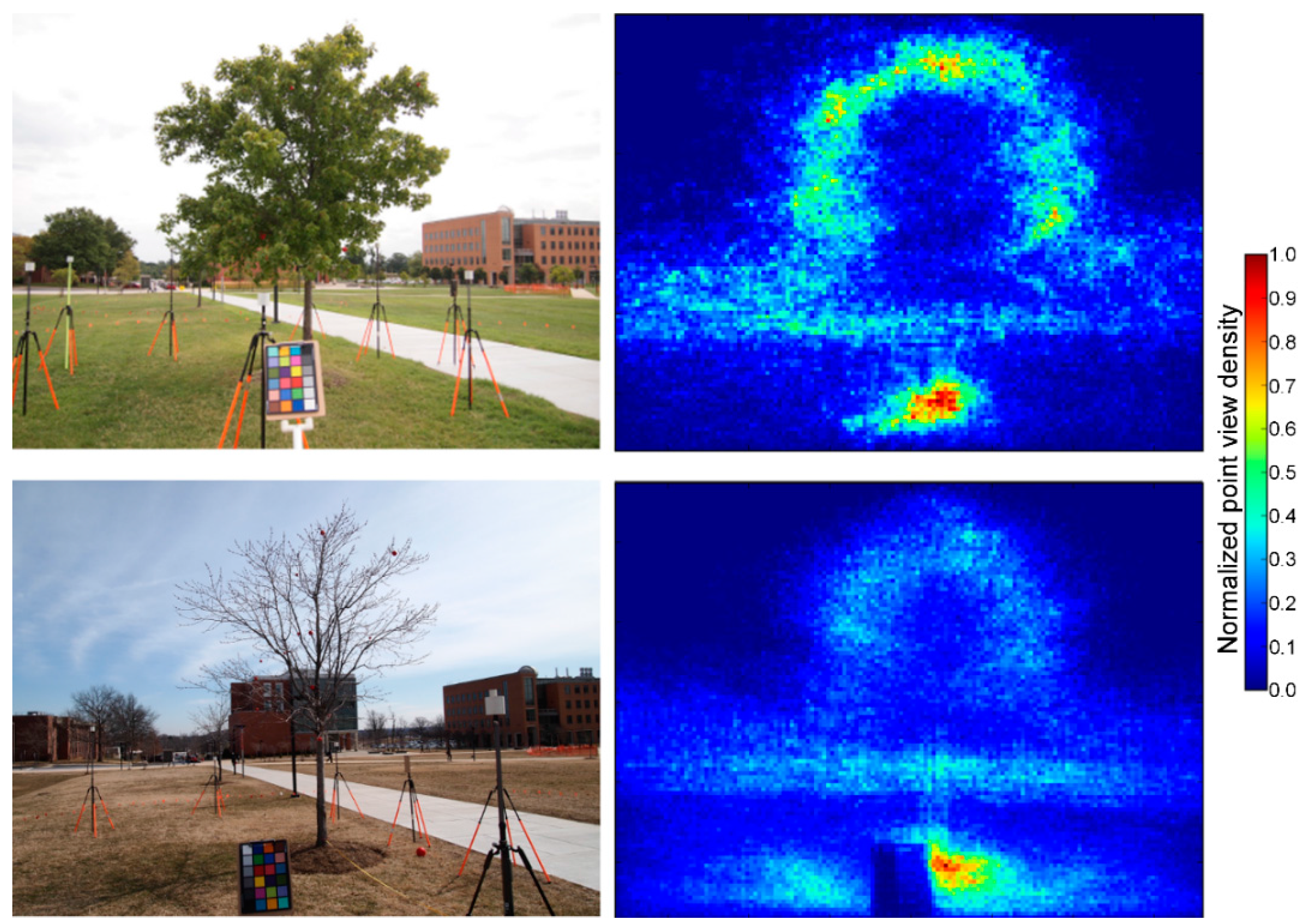

2.1.1. Ground-Based Scanning of a Single Tree

2.1.2. SFM 3D-RGB Point Clouds from Digital Images

2.1.3. TLS Data Processing

2.1.4. Extracting Points at Painted Targets

2.1.5. Evaluation of 3D-RGB Fusion Quality

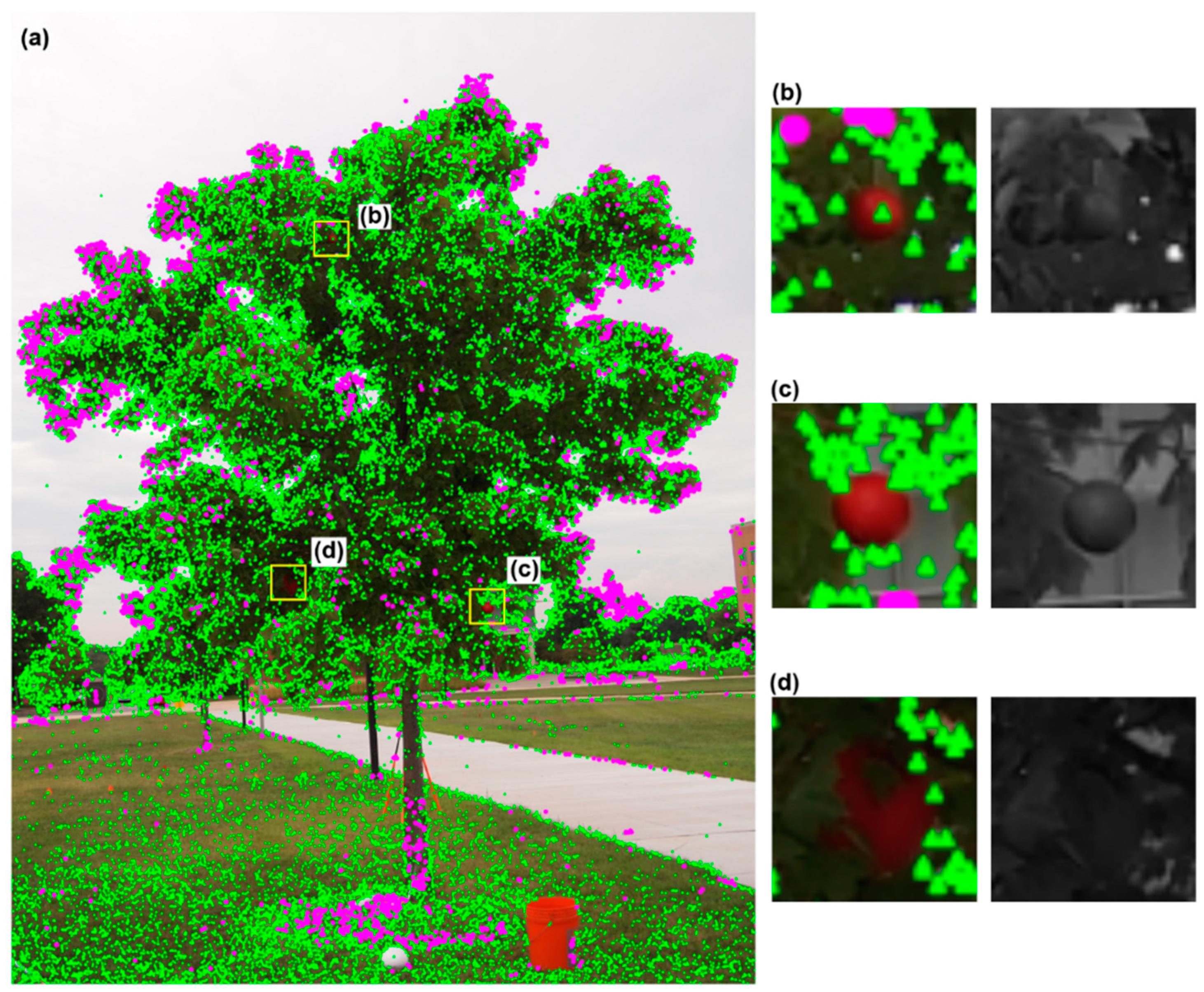

2.2. Evaluation of Image Features from Tree Canopy Point Clouds

2.2.1. UAV Canopy Aerial Imagery

2.2.2. SFM 3D-RGB Point Clouds from Digital Images

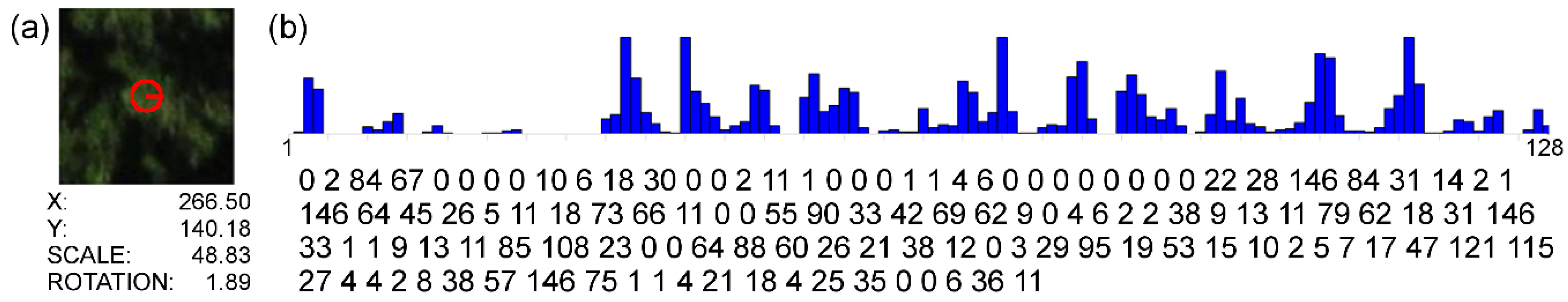

2.2.3. Extracting Image Features for SFM Point Clouds

2.2.4. Classification and Clustering of Image Features

3. Results

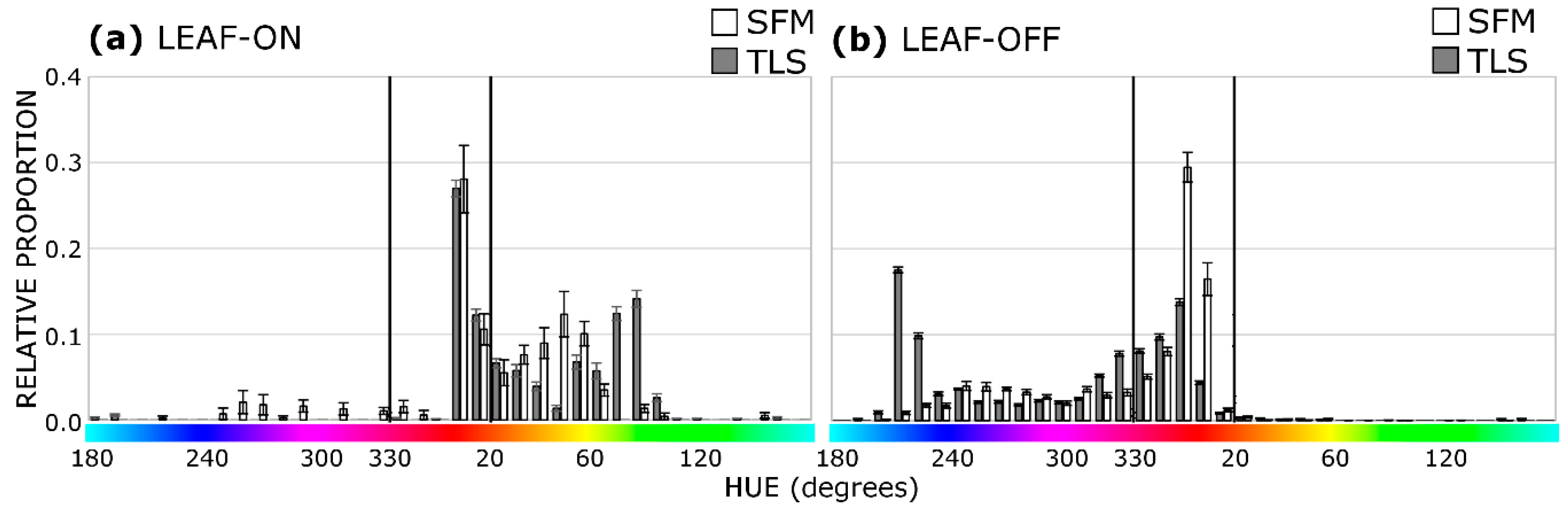

3.1. Evaluation of SFM 3D-RGB Fusion Quality

3.1.1. 3D-RGB Fusion Location and Color Accuracy

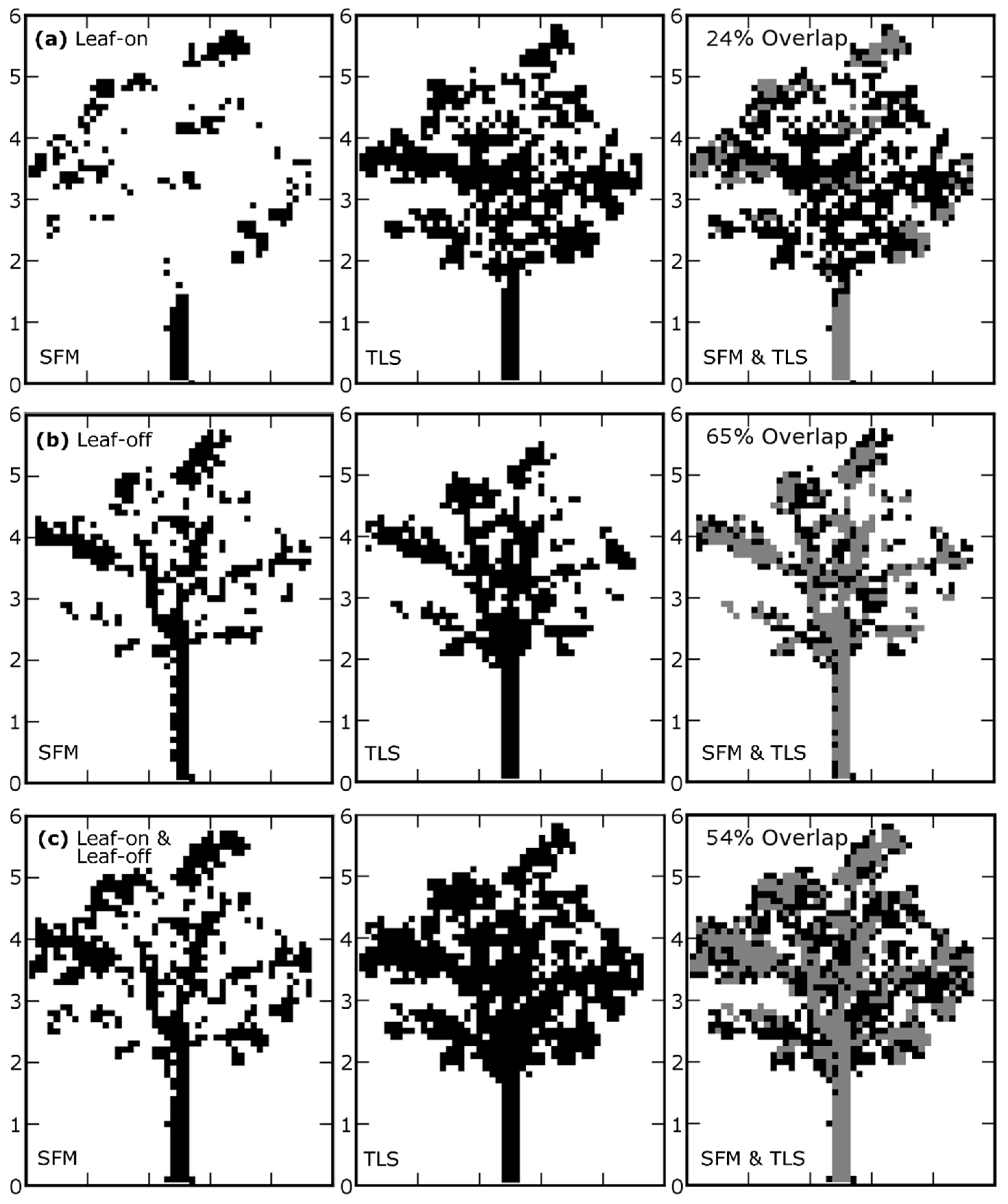

3.1.2. 3D Structure Quality

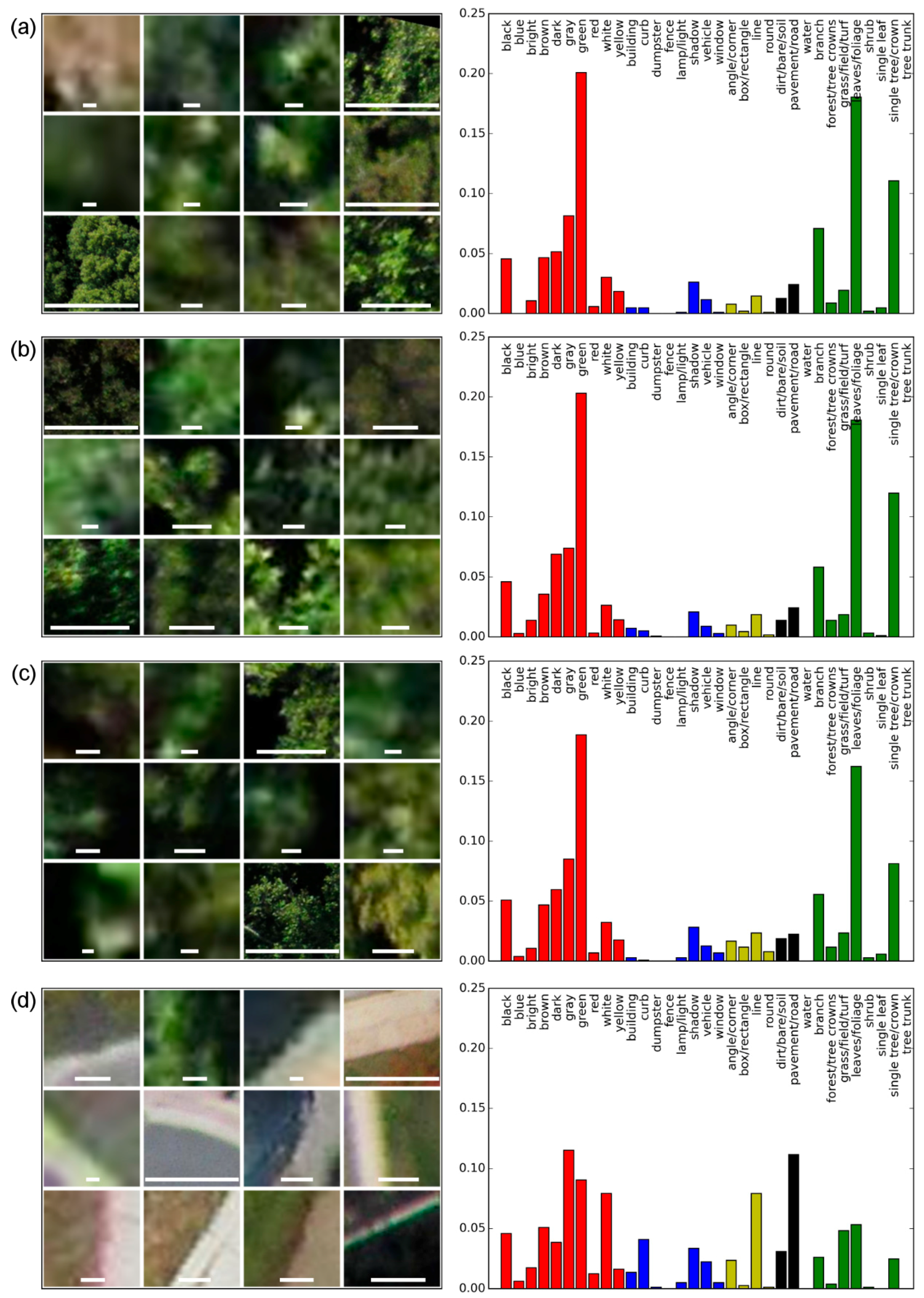

3.2. Evaluation of Image Features from Tree Canopy Point Clouds

4. Discussion

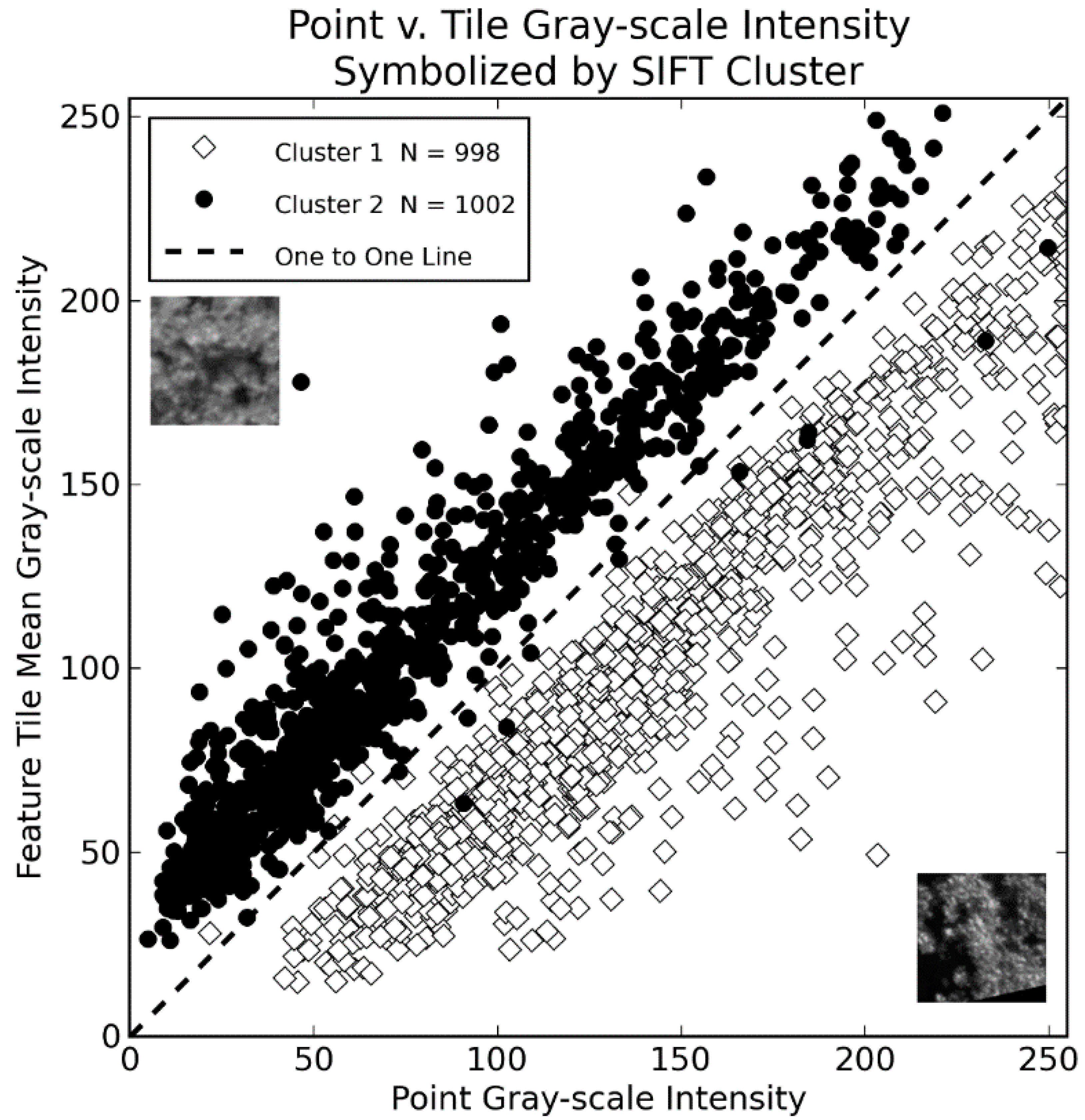

4.1. Point as an Image Sample

4.2. Point as a Feature Descriptor

4.3. Point as a 3D-RGB Point

4.4. SFM and TLS See Vegetation Differently

5. Conclusions

Supplementary Materials

Acknowledgments

Author Contributions

Conflicts of Interest


(a) Leaf-on

| SFM Leaf-on \ TLS Leaf-on | Red | Not red | Sum |
|---|---|---|---|
| Red | 1 | 2 | 3 |
| Not red | 4 | 4 | 8 |
| Sum | 5 | 6 | 11 |

SFM Accuracy = 3/11 = 27%; TLS Accuracy = 5/11 = 45%; Overall Agreement = 5/11 = 45%; Kappa = −0.14

(b) Leaf-off

| SFM Leaf-off \ TLS Leaf-off | Red | Not red | Sum |
|---|---|---|---|
| Red | 4 | 9 | 13 |
| Not red | 0 | 1 | 1 |
| Sum | 4 | 10 | 14 |

SFM Accuracy = 13/14 = 93%; TLS Accuracy = 4/14 = 29%; Overall Agreement = 5/14 = 36%; Kappa = 0.06
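The overall agreement and Kappa values in the SFM versus TLS table can be reproduced from the four cell counts of each panel. The sketch below assumes that the reported Kappa is Cohen's kappa computed in the standard way from the row and column totals; the function name `agreement_stats` and the variable names are illustrative and do not come from the article.

```python
def agreement_stats(matrix):
    """Return (overall agreement, Cohen's kappa) for a square confusion matrix.

    Rows are SFM classes and columns are TLS classes, in the same class
    order (Red, Not red). Assumes the standard Cohen's kappa definition.
    """
    total = sum(sum(row) for row in matrix)
    # Observed agreement: share of targets on the diagonal.
    observed = sum(matrix[i][i] for i in range(len(matrix))) / total
    # Chance agreement from the row and column totals.
    row_sums = [sum(row) for row in matrix]
    col_sums = [sum(col) for col in zip(*matrix)]
    expected = sum(r * c for r, c in zip(row_sums, col_sums)) / total**2
    kappa = (observed - expected) / (1 - expected)
    return observed, kappa


# Cell counts copied from the table: rows = SFM, columns = TLS.
leaf_on = [[1, 2], [4, 4]]
leaf_off = [[4, 9], [0, 1]]

for name, counts in (("leaf-on", leaf_on), ("leaf-off", leaf_off)):
    agreement, kappa = agreement_stats(counts)
    print(f"{name}: agreement = {agreement:.0%}, kappa = {kappa:.2f}")
```

Under these assumptions the computed values match the table: 45% agreement with kappa of about −0.14 for leaf-on, and 36% agreement with kappa of about 0.06 for leaf-off. Both kappa values are close to zero, so the two methods agree on target color roughly as often as chance alone would predict.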

| | Image Sample | Numeric Feature Descriptor | 3D Coordinate and RGB Color |
|---|---|---|---|
| Description | A portion of the original image, determined by the feature detector. | Numeric vector around a group of pixels, determined by the feature detector. | XYZ coordinates and RGB color with variable scale/size corresponding to part of the scene. |
| Quality | SIFT locates points that are brighter or darker than the surroundings. | Individually, a bright or dark spot, but not necessarily a distinct canopy object. | RGB color accurately describes object color if the object can be observed by the detector. |
| Implications | Sampling of a scene is determined by the feature detector. | Descriptors may be related to landscape context, but more information is needed to get to specific objects. | Fusion does not suffer occlusion effects as when different sensors are used, but will have omission errors due to feature detector. |
| Future Research | Examine how sampling of vegetation or parts of vegetation varies with different detectors. | Evaluate the use of multi-stage description of features including with color, scale, and other detectors. | Apply SFM 3D-RGB fusion to improve understanding of canopy color and structure, e.g., examining 3D dynamics of canopy phenology. |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Dandois, J.P.; Baker, M.; Olano, M.; Parker, G.G.; Ellis, E.C. What is the Point? Evaluating the Structure, Color, and Semantic Traits of Computer Vision Point Clouds of Vegetation. Remote Sens. 2017, 9, 355. https://doi.org/10.3390/rs9040355