Optimizing the Processing of UAV-Based Thermal Imagery

Abstract

1. Introduction

1) Image pre-processing: removing blurry images and converting all images to 16-bit TIFF files with a common dynamic range, so that a given temperature corresponds to the same digital number (DN) in every image.

2) Image alignment, with initial estimates of the image positions derived from the on-board GPS log file and the time stamp of each image.

3) Spatial co-registration to RGB (or other) orthophotos, performed by manually adding ground control points (GCPs) of known position, obtained either from real-time kinematic (RTK) GPS or from the processed RGB imagery.
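The first step above relies on a single, fixed linear mapping between temperature and DN that is shared by all images. The sketch below illustrates this with NumPy, assuming the frames are already available as temperature arrays in °C. The range `T_MIN`/`T_MAX` is a hypothetical choice, and `blur_score` (variance of a Laplacian) is a common sharpness heuristic swapped in for illustration, not necessarily the criterion used to discard blurry images in this study.

```python
import numpy as np

# Hypothetical temperature range (°C) shared by every image of a flight.
T_MIN, T_MAX = -10.0, 70.0
DN_MAX = 2**16 - 1  # largest 16-bit value


def temperature_to_dn(temp_c: np.ndarray) -> np.ndarray:
    """Map temperatures to 16-bit DNs using one fixed linear scale."""
    scaled = (np.clip(temp_c, T_MIN, T_MAX) - T_MIN) / (T_MAX - T_MIN)
    return np.round(scaled * DN_MAX).astype(np.uint16)


def dn_to_temperature(dn: np.ndarray) -> np.ndarray:
    """Inverse mapping; exact to within half a DN step."""
    return T_MIN + dn.astype(np.float64) / DN_MAX * (T_MAX - T_MIN)


def blur_score(img: np.ndarray) -> float:
    """Variance of a 4-neighbour Laplacian over interior pixels.

    Low values mean little high-frequency detail, i.e. a likely blurry frame.
    """
    f = np.asarray(img, dtype=np.float64)
    lap = (f[:-2, 1:-1] + f[2:, 1:-1] + f[1:-1, :-2] + f[1:-1, 2:]
           - 4.0 * f[1:-1, 1:-1])
    return float(lap.var())
```

Because the scale is fixed rather than stretched per image, 30 °C receives the same DN in a cool frame and in a warm frame; a per-image minimum/maximum stretch would break this correspondence.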
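The second step needs a position for every image, while the GPS log and the camera record at different instants. A minimal sketch, assuming a time-sorted log and a known constant offset between the camera and GPS clocks (`clock_offset`, hypothetical), linearly interpolates the log at each image time stamp. The `Fix` record and `position_at` function are illustrative names, not part of any specific flight software.

```python
from bisect import bisect_left
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass
class Fix:
    """One GPS log record (time in seconds on the GPS clock)."""
    t: float
    lat: float
    lon: float
    alt: float


def position_at(log: Sequence[Fix], t_image: float,
                clock_offset: float = 0.0) -> Tuple[float, float, float]:
    """Linearly interpolate a time-sorted GPS log at an image time stamp.

    clock_offset (hypothetical) converts camera time to GPS time:
    t_gps = t_image + clock_offset. Times outside the log are clamped.
    """
    t = t_image + clock_offset
    times = [f.t for f in log]
    if t <= times[0]:
        f = log[0]
        return (f.lat, f.lon, f.alt)
    if t >= times[-1]:
        f = log[-1]
        return (f.lat, f.lon, f.alt)
    i = bisect_left(times, t)        # first record with time >= t
    a, b = log[i - 1], log[i]
    w = (t - a.t) / (b.t - a.t)      # interpolation weight in (0, 1]
    return (a.lat + w * (b.lat - a.lat),
            a.lon + w * (b.lon - a.lon),
            a.alt + w * (b.alt - a.alt))


if __name__ == "__main__":
    log = [Fix(0.0, 50.9990, 3.7000, 40.0), Fix(1.0, 50.9991, 3.7002, 40.5)]
    print(position_at(log, t_image=0.25))
```

Linear interpolation is adequate when the log rate is high relative to the platform's speed; an uncorrected clock offset of Δt shifts every estimate along the flight line by roughly the ground speed times Δt.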

2. Materials and Methods

2.1. Background on the Influence of Meteorological Conditions on Thermal Data Collection and Processing

2.2. Flights and System Set-Up

2.2.1. Flight System

2.2.2. Agricultural Dataset

2.2.3. Afforestation Dataset

2.3. Data Processing

2.3.1. Suggested Improvements

a. Camera Pre-Calibration

b. Temperature Correction

c. Improved Estimation of Image Position

2.3.2. Data Processing

a. Data Processing of RGB Imagery

b. Thermal Data Pre-Processing

c. Alignment Procedures

d. Comparison of Different Alignment Approaches

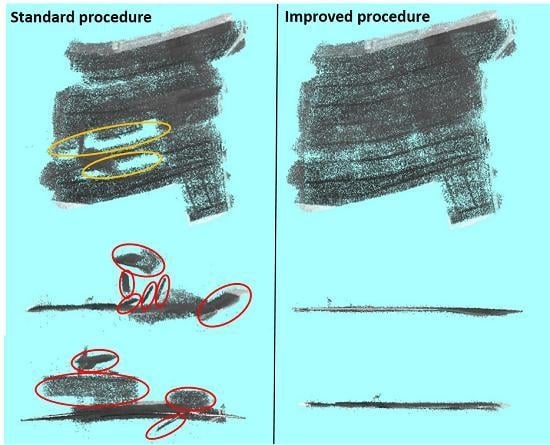

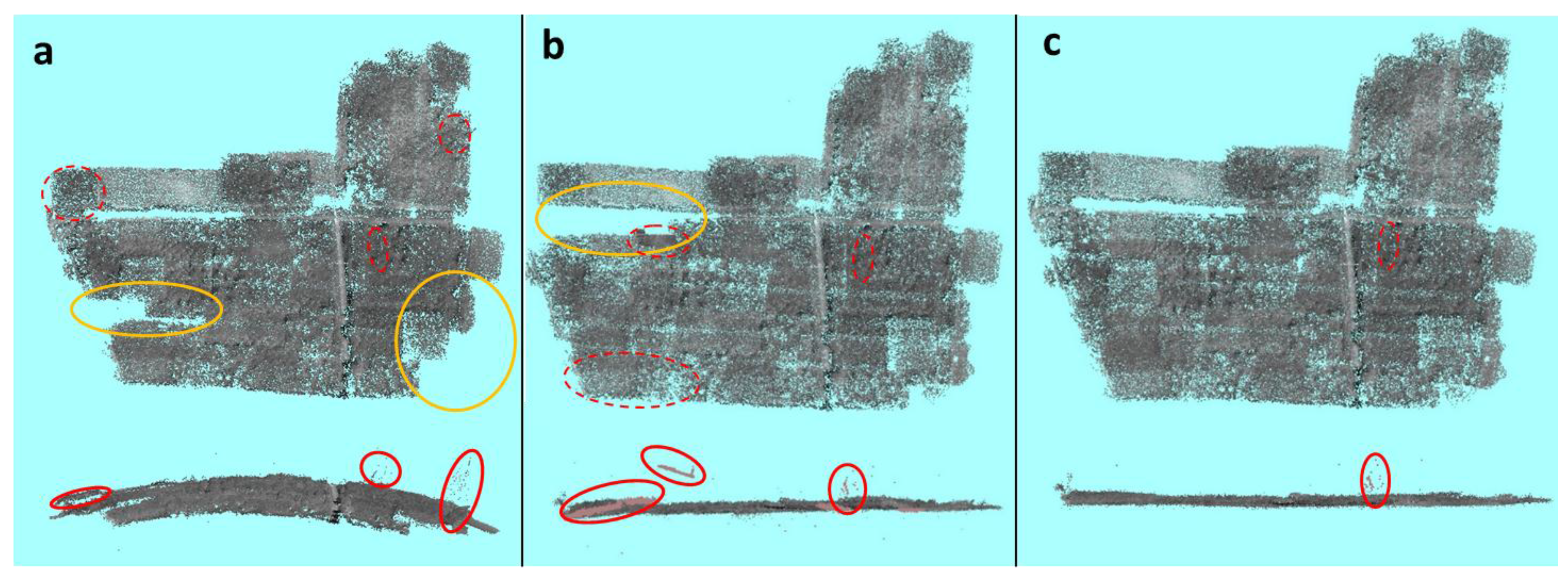

- A bowl effect, a systematic deviation in image alignment that bends a flat surface into the shape of a bowl in both the X- and Y-directions;

- Alignment gaps, i.e., areas where several consecutive images were not aligned, leaving regions without any points;

- Incorrectly aligned images, which are detectable as obvious deviations in the point cloud.
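The criteria above are defined in terms of the image set and the resulting point cloud. As a hypothetical illustration only, not the procedure used in this study, two of them could be quantified: alignment gaps as runs of consecutive unaligned images in acquisition order, and the bowl effect as the curvature terms of a quadratic surface fitted by least squares to points from an area that should be flat. The function names and the `min_run` threshold are assumptions.

```python
import numpy as np


def alignment_gaps(aligned, min_run=3):
    """Return (first, last) indices of runs of >= min_run consecutive unaligned images.

    `aligned` is a sequence of booleans in acquisition order (True = aligned).
    """
    gaps, start = [], None
    for i, ok in enumerate(list(aligned) + [True]):  # sentinel closes a trailing run
        if not ok and start is None:
            start = i
        elif ok and start is not None:
            if i - start >= min_run:
                gaps.append((start, i - 1))
            start = None
    return gaps


def bowl_coefficients(x, y, z):
    """Fit z = c0 + c1*x + c2*y + c3*x**2 + c4*y**2 + c5*x*y by least squares.

    Returns (c3, c4). For points from a truly flat area both should be close to
    zero; same-signed, clearly non-zero values suggest a bowl-shaped distortion.
    """
    x, y, z = (np.asarray(v, dtype=np.float64) for v in (x, y, z))
    A = np.column_stack([np.ones_like(x), x, y, x**2, y**2, x * y])
    coef, *_ = np.linalg.lstsq(A, z, rcond=None)
    return float(coef[3]), float(coef[4])
```

A tilted but flat surface is absorbed by the linear terms, so only genuine curvature shows up in `c3` and `c4`.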

e. Further Processing

3. Results

3.1. Agricultural Dataset

3.2. Afforestation Dataset

4. Discussion

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Flight | Time Start | Time End | Tair (°C) | VPD (kPa) | Wind Speed (m s⁻¹) | Net Radiation (W m⁻²) |
|---|---|---|---|---|---|---|
| Agricultural Dataset (25 August 2016) | | | | | | |
| Flight 1 | 13:11 | 13:22 | 30.7 ± 0.5 | 2.31 ± 0.13 | 1.5 ± 0.6 | 608 ± 8 |
| Flight 2 | 13:32 | 13:45 | 30.9 ± 0.3 | 2.29 ± 0.08 | 1.1 ± 0.5 | 602 ± 9 |
| Flight 3 | 13:53 | 13:57 | 31.1 ± 0.3 | 2.41 ± 0.12 | 1.4 ± 0.8 | 598 ± 17 |
| Afforestation Dataset (24 August 2016) | | | | | | |
| Flight 1 | 12:28 | 12:41 | 28.7 ± 0.2 | 1.88 ± 0.05 | 1.4 ± 0.6 | 595 ± 5 |
| Flight 2 | 13:08 | 13:20 | 29.4 ± 0.3 | 2.28 ± 0.08 | 1.6 ± 0.8 | 627 ± 9 |

| | Principal Point cx | Principal Point cy | Affinity B1 | Skew B2 | Radial k1 | Radial k2 | Radial k3 | Tangential P1 | Tangential P2 |
|---|---|---|---|---|---|---|---|---|---|
| Parameter estimate | −7.38 | 10.71 | 0.94 | 1.12 | −0.19 | 0.95 | −4.27 | 0.003745 | −0.00611 |
| Standard deviation | 1.16 | 1.01 | 0.13 | 0.15 | 0.03 | 0.38 | 1.56 | 0.00059 | 0.00053 |
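The parameters in the table above describe a Brown-type lens distortion model. The sketch below shows how they would enter the projection of a camera-frame point to pixel coordinates, assuming the convention of Agisoft PhotoScan/Metashape frame cameras (cx, cy as offsets from the image centre, B1 as affinity and B2 as skew, P1 paired with r² + 2x²). That convention is an assumption, since packages differ in how they order P1/P2, and the focal length and image size in the usage lines are hypothetical because the table does not list them.

```python
from dataclasses import dataclass


@dataclass
class CameraModel:
    """Frame camera with Brown-type distortion (assumed PhotoScan/Metashape form)."""
    f: float          # focal length in pixels (hypothetical; not listed in the table)
    width: int        # image width in pixels (hypothetical)
    height: int       # image height in pixels (hypothetical)
    cx: float = 0.0   # principal point offset from the image centre (pixels)
    cy: float = 0.0
    b1: float = 0.0   # affinity
    b2: float = 0.0   # skew (non-orthogonality)
    k1: float = 0.0   # radial distortion
    k2: float = 0.0
    k3: float = 0.0
    p1: float = 0.0   # tangential (decentring) distortion
    p2: float = 0.0

    def project(self, X: float, Y: float, Z: float) -> tuple:
        """Project a point given in camera coordinates (Z > 0) to pixel (u, v)."""
        x, y = X / Z, Y / Z
        r2 = x * x + y * y
        radial = 1.0 + self.k1 * r2 + self.k2 * r2**2 + self.k3 * r2**3
        xd = x * radial + self.p1 * (r2 + 2 * x * x) + 2 * self.p2 * x * y
        yd = y * radial + self.p2 * (r2 + 2 * y * y) + 2 * self.p1 * x * y
        u = 0.5 * self.width + self.cx + xd * self.f + xd * self.b1 + yd * self.b2
        v = 0.5 * self.height + self.cy + yd * self.f
        return u, v


if __name__ == "__main__":
    # Estimates from the table; f, width and height are hypothetical.
    cam = CameraModel(f=800.0, width=640, height=512,
                      cx=-7.38, cy=10.71, b1=0.94, b2=1.12,
                      k1=-0.19, k2=0.95, k3=-4.27, p1=0.003745, p2=-0.00611)
    print(cam.project(0.1, 0.05, 1.0))
```

Setting all coefficients to zero reduces the model to an ideal pinhole camera, which is a convenient check when comparing calibrated and uncalibrated processing.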

| Variable | Initial Estimate of Image Position | Calibrated? | % Aligned | # Not Aligned | # of Points (10³) (% of Total) | Bowl Effect | Gaps? | Incorrectly Aligned Images? |
|---|---|---|---|---|---|---|---|---|
| Ts | None | No | 96 | 48 | 202.6 (88.7) | Y | Large | No |
| Ts_c | None | No | 98 | 31 | 207.8 (90.9) | Y | No | One large section |
| Ts | None | Yes | 91 | 114 | 194.3 (85.0) | Y | Large | No |
| Ts_c | None | Yes | 93 | 100 | 196.9 (86.2) | Y | Large | No |
| Ts | GPS log | No | 93 | 96 | 195.4 (85.5) | N | Large | Several sections |
| Ts_c | GPS log | No | 94 | 75 | 202.5 (88.6) | N | No | Several sections |
| Ts | GPS log | Yes | 98 | 30 | 209.1 (91.5) | N | No | Several sections |
| Ts_c | GPS log | Yes | 98 | 29 | 208.0 (91.0) | (Y) | No | Several sections |
| Ts | RGB-based | No | 99 | 13 | 210.5 (92.1) | N | No | One small section |
| Ts_c | RGB-based | No | 99 | 13 | 212.0 (92.8) | N | No | One small section |
| Ts | RGB-based | Yes | 94 | 87 | 196.2 (85.9) | N | Large | Several sections |
| Ts_c | RGB-based | Yes | 99 | 14 | 210.9 (92.3) | N | No | No |

| Variable | Initial Estimate of Image Position | Calibrated? | % Aligned | # Not Aligned | # of Points (10³) (% of Total) | Bowl Effect | Gaps? | Incorrectly Aligned Images? |
|---|---|---|---|---|---|---|---|---|
| Ts | None | No | 86.9 | 137 | 190.3 (80.5) | Y | Several | Few sections |
| Ts_c | None | No | 94.2 | 61 | 205.8 (87.1) | (Y) | Three | Few sections |
| Ts | None | Yes | 94.2 | 61 | 205.0 (86.7) | Y | Three | Few sections |
| Ts_c | None | Yes | 94.2 | 61 | 206.2 (87.2) | (Y) | Two | Few sections |
| Ts | Mission File | No | 99.8 | 2 | 219.0 (92.7) | N | One | Several sections |
| Ts_c | Mission File | No | 99.8 | 2 | 218.9 (92.6) | N | One | Several sections |
| Ts | Mission File | Yes | 99.8 | 2 | 218.7 (92.5) | N | One | Several sections |
| Ts_c | Mission File | Yes | 99.9 | 1 | 219.0 (92.7) | N | One | Several sections |
| Ts | RGB-based | No | 99.5 | 5 | 218.5 (92.4) | N | No | One small, one large section |
| Ts_c | RGB-based | No | 99.9 | 1 | 218.9 (92.6) | N | No | One small section |
| Ts | RGB-based | Yes | 99.5 | 5 | 218.3 (92.4) | N | No | One small section |
| Ts_c | RGB-based | Yes | 99.6 | 4 | 218.6 (92.5) | N | No | One small section |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Maes, W.H.; Huete, A.R.; Steppe, K. Optimizing the Processing of UAV-Based Thermal Imagery. Remote Sens. 2017, 9, 476. https://doi.org/10.3390/rs9050476
