Detection of Tropical Overshooting Cloud Tops Using Himawari-8 Imagery

Abstract

1. Introduction

2. Data

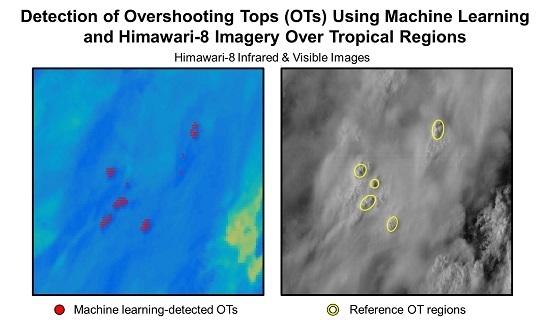

2.1. Himawari-8 Visible and Infrared Imagery

2.2. MODIS Visible Imagery

2.3. Tropopause Temperature from the Numerical Weather Prediction Model

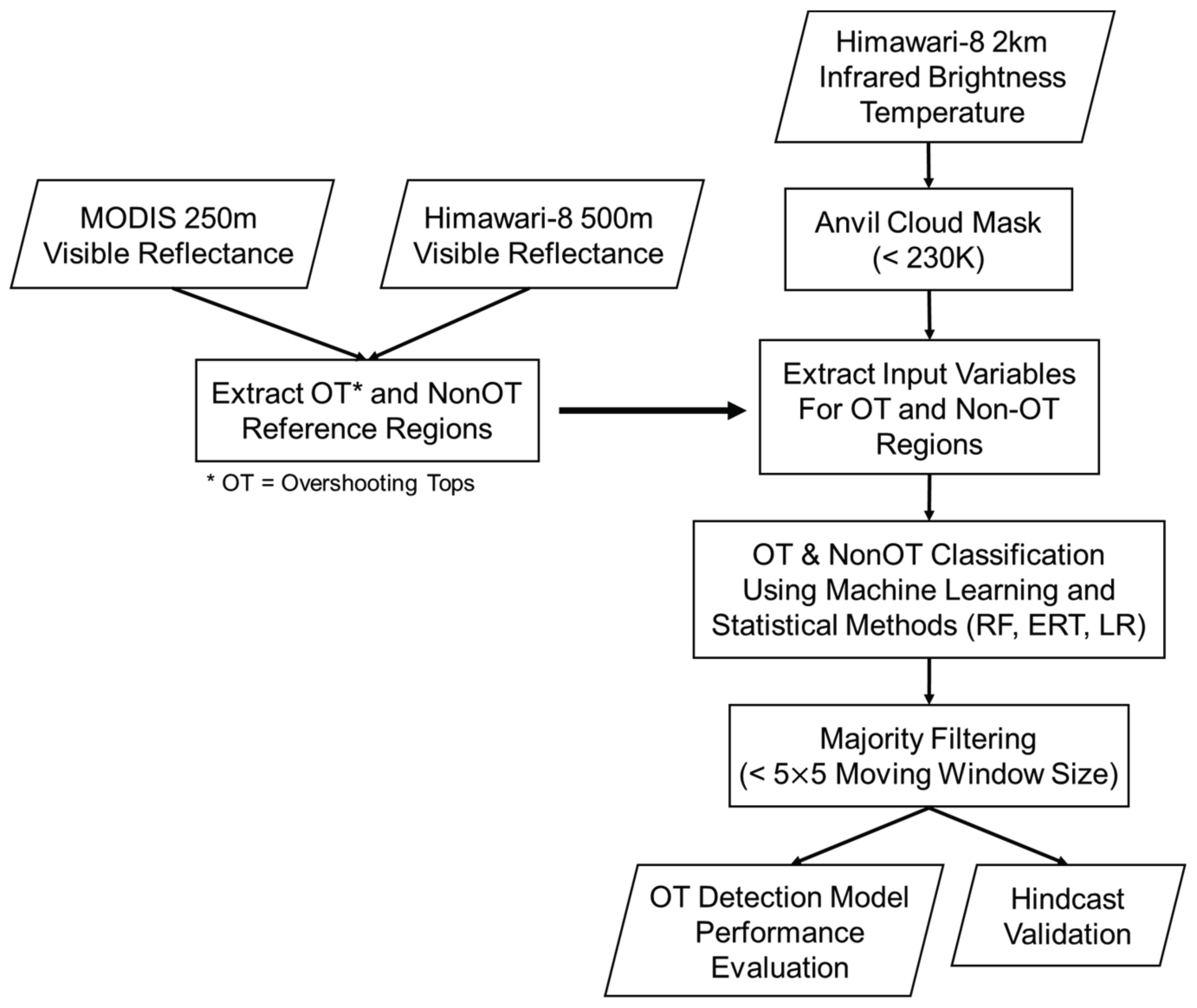

3. Methods

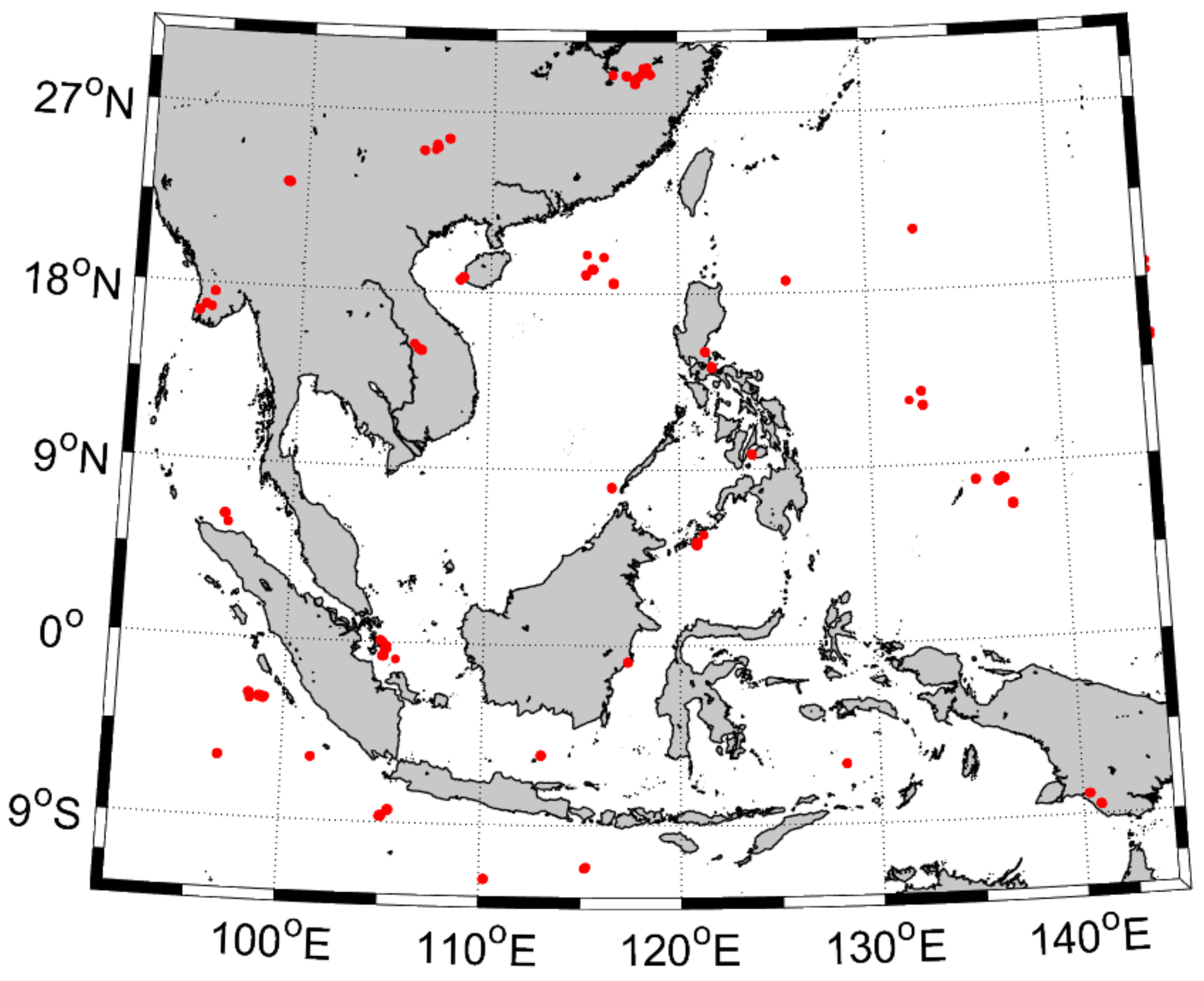

3.1. Construction of Overshooting Top and Non-Overshooting Top Reference Datasets

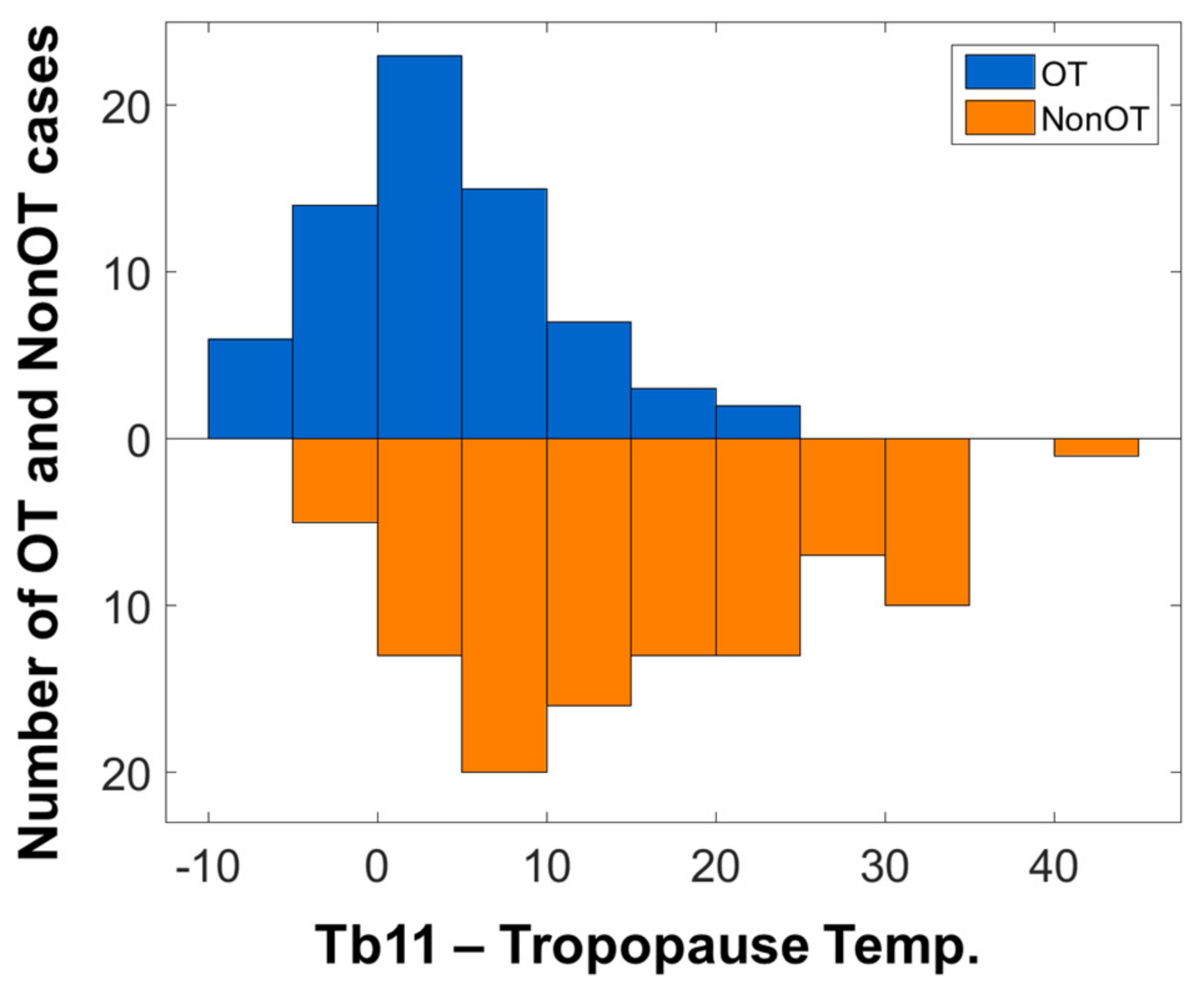

3.2. Input Variables and Training, Test, and Validation Dataset for Classification of Overshooting Tops

3.3. Machine Learning Approaches for the Development of Overshooting Top Classification Models

3.3.1. Tree-Based Ensemble Models: Random Forest and Extremely Randomized Trees

3.3.2. Logistic Regression

4. Results and Discussion

4.1. Model Performances

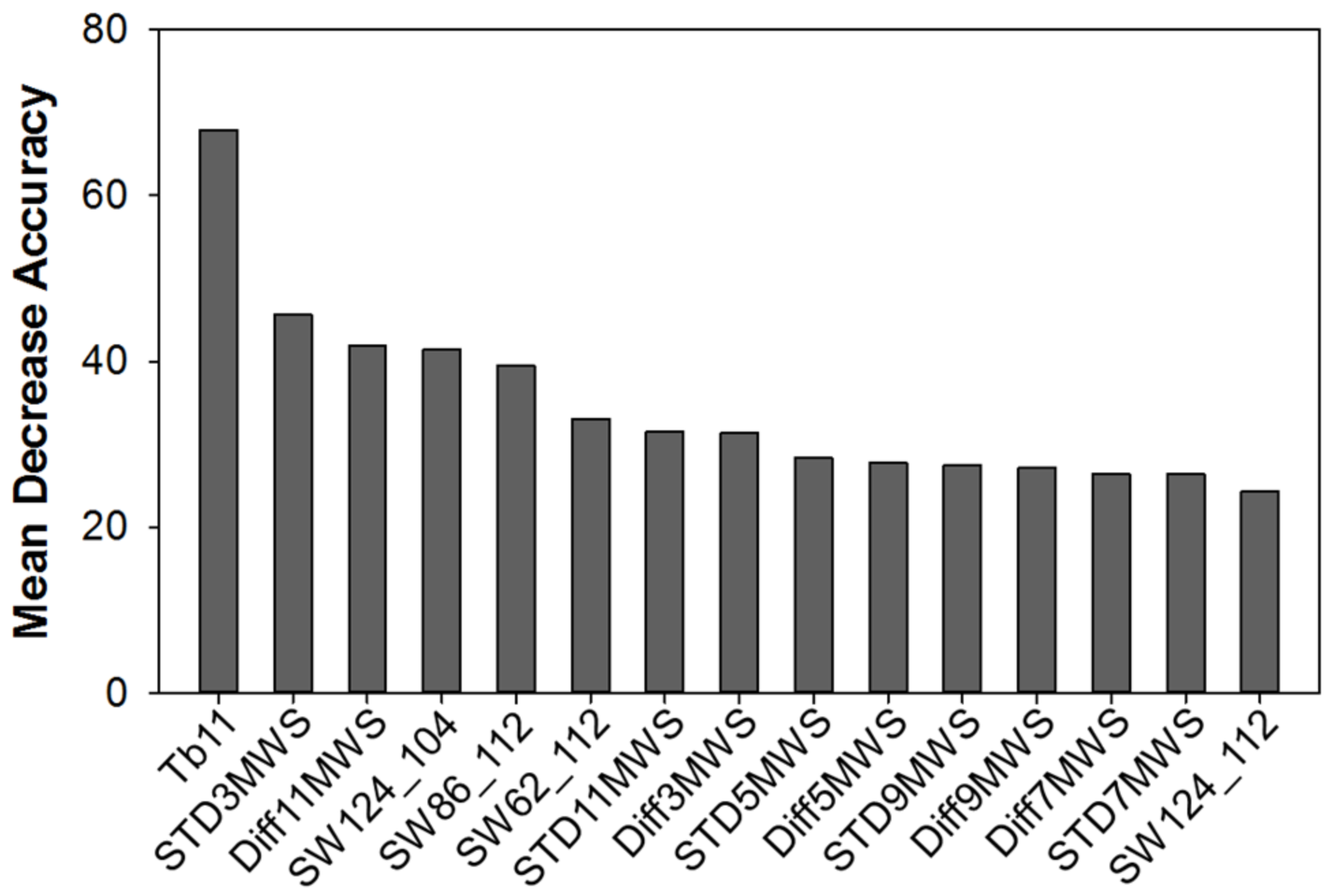

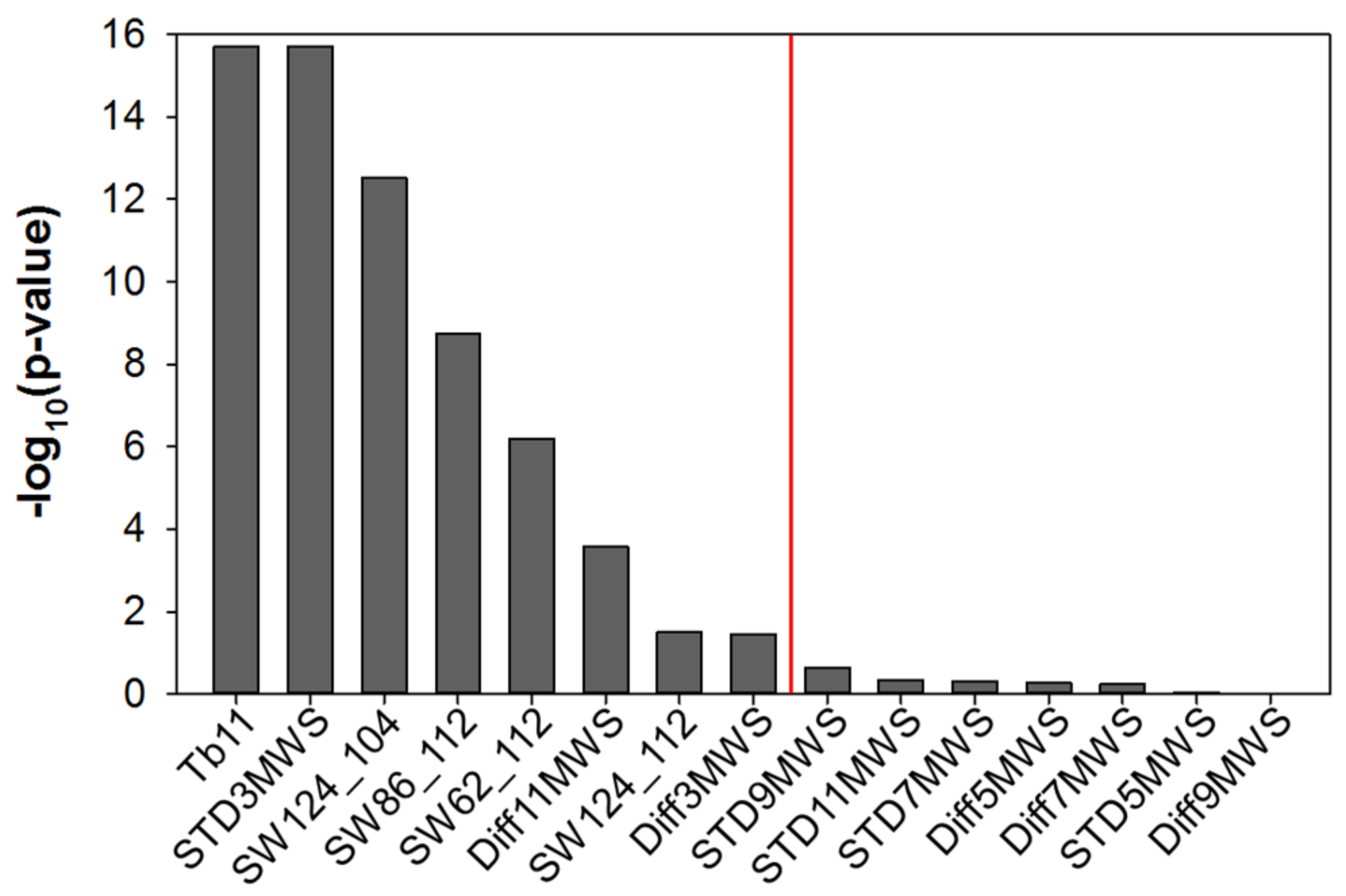

4.2. Contribution of Input Variables for Overshooting Top Detection

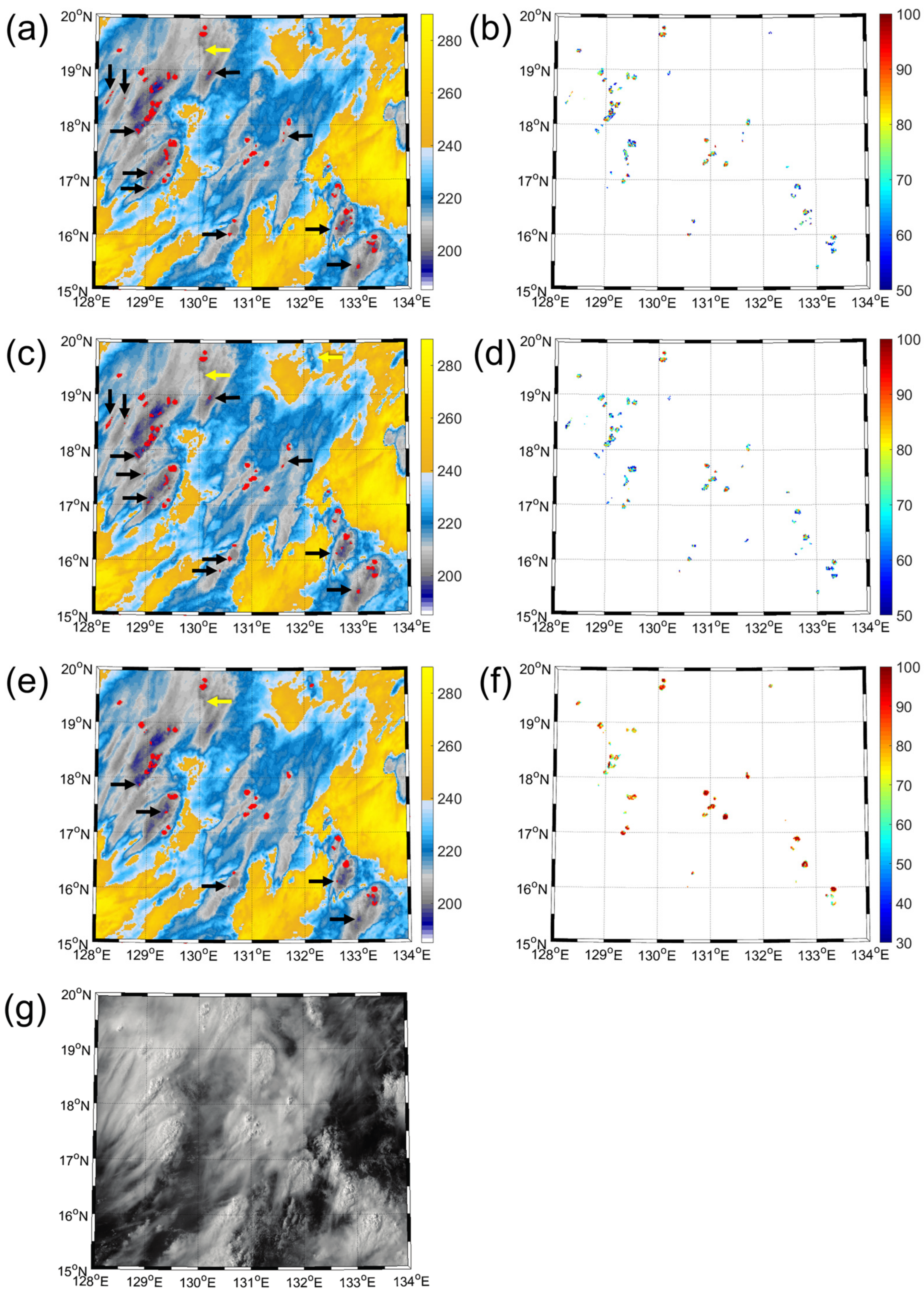

4.3. Qualitative Evaluation of Overshooting Top Detection Models

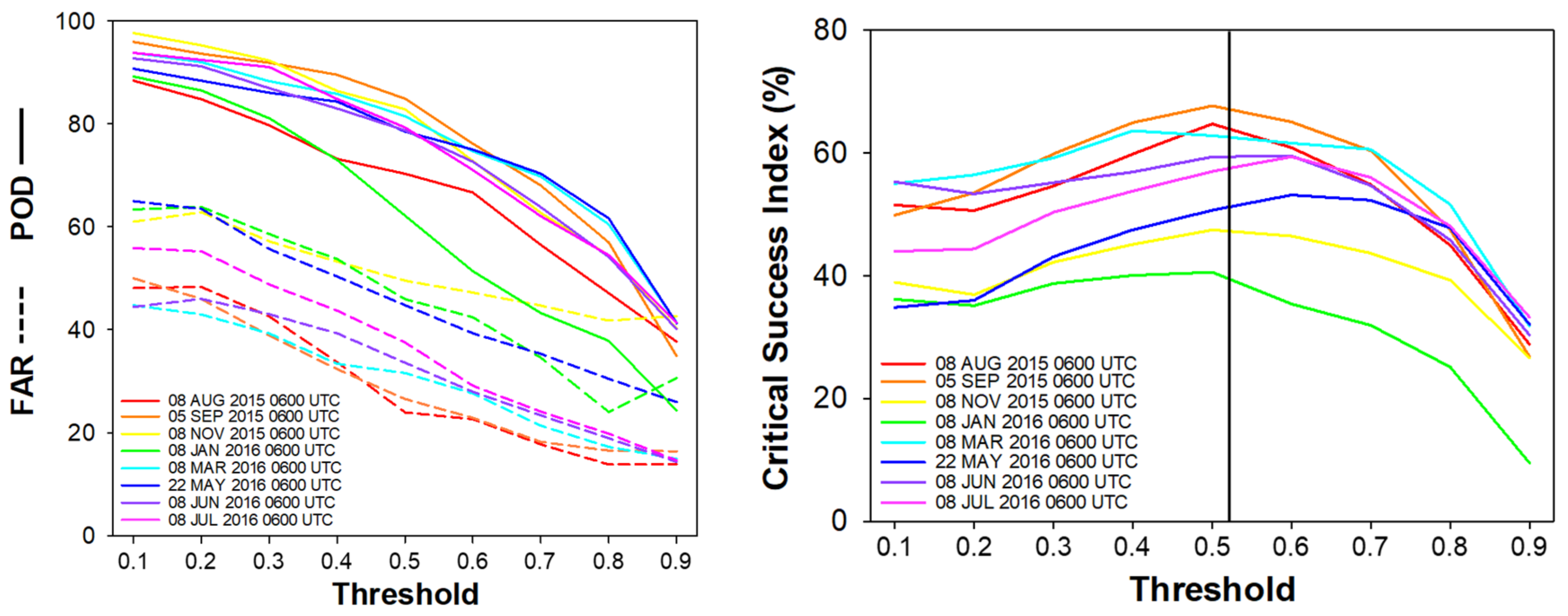

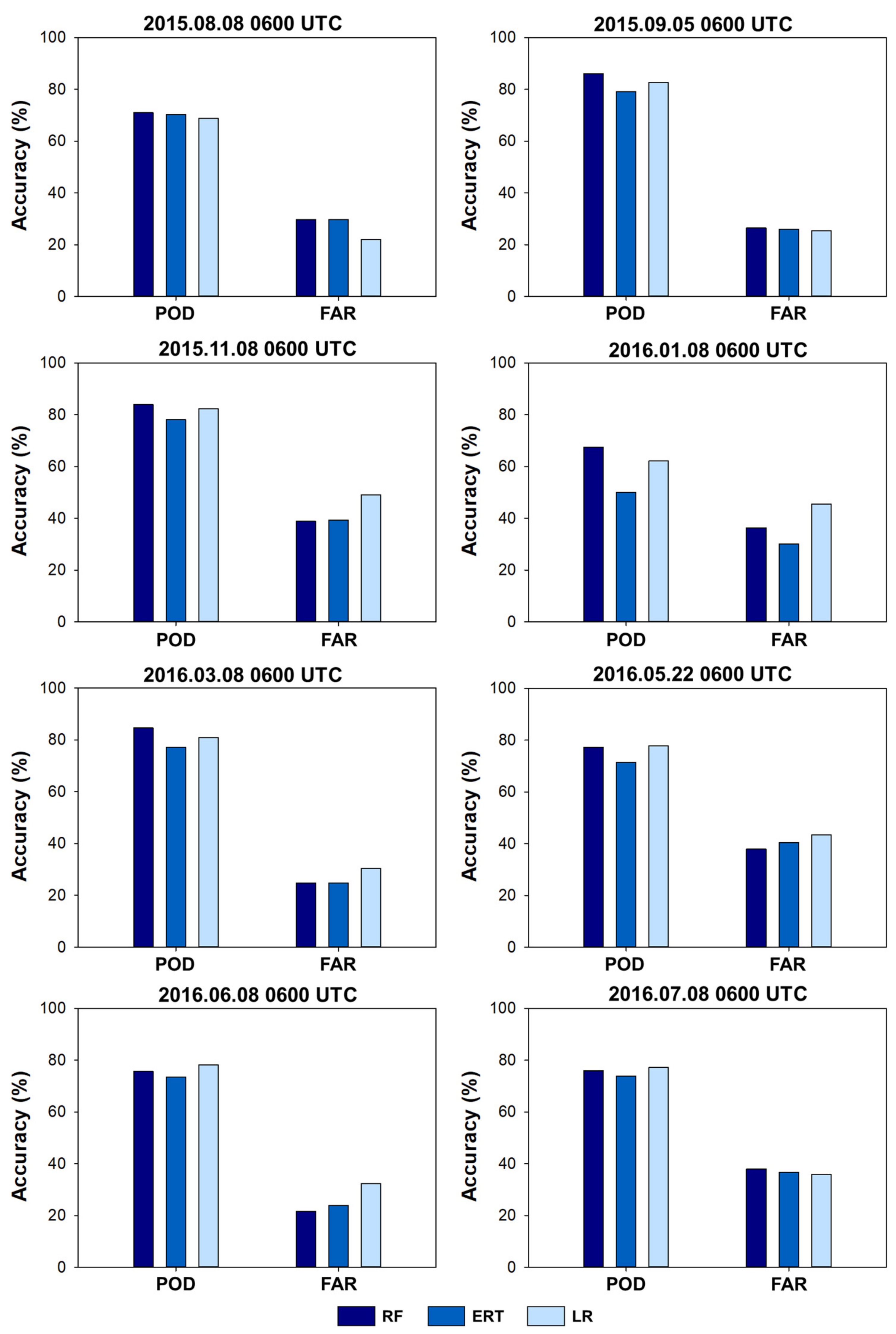

4.4. Quantitative Evaluation of Overshooting Top Detection Models

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Date | Time (UTC) for MODIS | Time (UTC) for Himawari-8 |
|---|---|---|
| 1 August 2015 | 05:10, 05:15 | 05:10 |
| 15 August 2015 | 07:00, 07:05 | 07:00 |
| 1 September 2015 | 06:10 | 06:10 |
| 15 September 2015 | 03:05 | 03:00 |
| 1 October 2015 | 06:20 | 06:20 |
| 15 October 2015 | 03:15 | 03:20 |
| 1 November 2015 | 05:35 | 05:40 |
| 1 December 2015 | 07:25 | 07:30 |
| 1 January 2016 | 06:40 | 06:40 |
| 15 January 2016 | 06:50 | 06:50 |
| 1 February 2016 | 05:55 | 05:50 |
| 15 February 2016 | 06:10 | 06:10 |
| 1 March 2016 | 05:25 | 05:20 |
| 15 March 2016 | 05:40 | 05:40 |
| 1 April 2016 | 06:20 | 06:20 |
| 15 April 2016 | 04:55 | 04:50 |
| 1 May 2016 | 06:35, 06:40 | 06:40 |
| 15 May 2016 | 06:50 | 06:50 |
| 1 June 2016 | 05:55, 06:00 | 06:00 |
| 15 June 2016 | 06:10 | 06:10 |
| 1 July 2016 | 04:25, 04:30 | 04:30 |
| 15 July 2016 | 04:40 | 04:40 |
| 1 August 2016 | 05:20, 05:25 | 05:20 |
| 15 August 2016 | 05:40 | 05:40 |

| Satellite/Sensor | Input Variable (15 in Total) | Abbreviation | Period | Spatial Resolution |
|---|---|---|---|---|
| Himawari-8/AHI | Brightness temperature at 11.2 µm (IR) | Tb11 | 1st and 15th day of each month from August 2015 to August 2016 | 2 km |
| | Standard deviation (STD) of Tb11 in a 3 × 3 moving window size (MWS) | STD3MWS | | |
| | STD of Tb11 in a 5 × 5 MWS | STD5MWS | | |
| | STD of Tb11 in a 7 × 7 MWS | STD7MWS | | |
| | STD of Tb11 in a 9 × 9 MWS | STD9MWS | | |
| | STD of Tb11 in an 11 × 11 MWS | STD11MWS | | |
| | Difference between the center pixel of a 3 × 3 MWS and its boundary pixels | Diff3MWS | | |
| | Difference between the center pixel of a 5 × 5 MWS and its boundary pixels | Diff5MWS | | |
| | Difference between the center pixel of a 7 × 7 MWS and its boundary pixels | Diff7MWS | | |
| | Difference between the center pixel of a 9 × 9 MWS and its boundary pixels | Diff9MWS | | |
| | Difference between the center pixel of an 11 × 11 MWS and its boundary pixels | Diff11MWS | | |
| | 6.2–11.2 µm split-window (SW) difference | SW62_112 | | |
| | 8.6–11.2 µm SW difference | SW86_112 | | |
| | 12.4–10.4 µm SW difference | SW124_104 | | |
| | 12.4–11.2 µm SW difference | SW124_112 | | |
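
The neighbourhood variables in the table above can be derived from a Tb11 grid with a few array operations. The following is a minimal sketch under stated assumptions, not the authors' code: it assumes that DiffNMWS is the center pixel minus the mean of the window's outermost ring of pixels (so a cold overshooting top yields a negative value), uses the population standard deviation (ddof=0), pads image edges by reflection, and takes each split-window difference as the first band minus the second. The function names (`window_std`, `center_minus_boundary`, `ot_features`) and the argument names `tb062`, `tb086`, `tb104`, `tb112`, `tb124` are hypothetical. `sliding_window_view` requires NumPy 1.20 or later.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def _windows(tb: np.ndarray, size: int) -> np.ndarray:
    """Return a (H, W, size, size) view of the size x size window centered on each pixel.

    Edges are padded by reflection (an assumption; edge handling is not specified).
    """
    if size < 3 or size % 2 == 0:
        raise ValueError("window size must be an odd integer >= 3")
    r = size // 2
    return sliding_window_view(np.pad(tb, r, mode="reflect"), (size, size))


def window_std(tb: np.ndarray, size: int) -> np.ndarray:
    """STD of Tb11 within each size x size moving window (population STD, ddof=0)."""
    return _windows(tb, size).std(axis=(-2, -1))


def center_minus_boundary(tb: np.ndarray, size: int) -> np.ndarray:
    """Center pixel minus the mean of the window's boundary (outermost ring) pixels.

    Assumed definition of DiffNMWS: negative where the center is colder than its surroundings.
    """
    windows = _windows(tb, size)
    ring = np.ones((size, size), dtype=bool)
    ring[1:-1, 1:-1] = False  # keep only the outermost ring of the window
    boundary_mean = windows[..., ring].mean(axis=-1)
    return tb - boundary_mean


def ot_features(tb062, tb086, tb104, tb112, tb124) -> dict:
    """Assemble the 15 input variables from brightness temperatures (K) on a common grid."""
    feats = {"Tb11": tb112}
    for size in (3, 5, 7, 9, 11):
        feats[f"STD{size}MWS"] = window_std(tb112, size)
        feats[f"Diff{size}MWS"] = center_minus_boundary(tb112, size)
    feats["SW62_112"] = tb062 - tb112
    feats["SW86_112"] = tb086 - tb112
    feats["SW124_104"] = tb124 - tb104
    feats["SW124_112"] = tb124 - tb112
    return feats


# Synthetic example: a uniform 220 K anvil with one 190 K pixel standing in for an overshooting top.
tb = np.full((20, 20), 220.0)
tb[10, 10] = 190.0
features = ot_features(tb + 5.0, tb + 2.0, tb, tb, tb - 1.0)
print(sorted(features))
```

Boolean indexing copies every window's ring, so for full-disk scenes a chunked or convolution-based implementation would use far less memory; the version above favours readability.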

| Date | Time (UTC) for Himawari-8 |
|---|---|
| 8 August 2015 | 06:00 |
| 5 September 2015 | 06:00 |
| 8 November 2015 | 06:00 |
| 8 January 2016 | 06:00 |
| 8 March 2016 | 06:00 |
| 22 May 2016 | 06:00 |
| 8 June 2016 | 06:00 |
| 8 July 2016 | 06:00 |

| Classified as / Reference | OT | non-OT | Sum | User’s Accuracy |
|---|---|---|---|---|
| OT | 173 | 29 | 202 | 85.64% |
| non-OT | 42 | 398 | 440 | 90.45% |
| Sum | 215 | 427 | 642 | |
| Producer’s accuracy | 80.47% | 93.21% | | |
| Overall accuracy | 88.94% | | | |
| Kappa coefficient | 0.75 | | | |

| Classified as / Reference | OT | non-OT | Sum | User’s Accuracy |
|---|---|---|---|---|
| OT | 175 | 24 | 199 | 87.94% |
| non-OT | 40 | 403 | 443 | 90.97% |
| Sum | 215 | 427 | 642 | |
| Producer’s accuracy | 81.40% | 94.38% | | |
| Overall accuracy | 90.03% | | | |
| Kappa coefficient | 0.77 | | | |

| Classified as / Reference | OT | non-OT | Sum | User’s Accuracy |
|---|---|---|---|---|
| OT | 154 | 44 | 198 | 77.78% |
| non-OT | 61 | 383 | 444 | 86.26% |
| Sum | 215 | 427 | 642 | |
| Producer’s accuracy | 71.63% | 89.70% | | |
| Overall accuracy | 83.64% | | | |
| Kappa coefficient | 0.63 | | | |
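
The accuracy measures in the three confusion matrices above follow the usual definitions: user's accuracy divides each diagonal count by its row (classified) total, producer's accuracy divides it by its column (reference) total, overall accuracy is the diagonal sum over all samples, and Cohen's kappa corrects overall accuracy for the agreement expected by chance from the row and column totals. The sketch below (the function name `accuracy_metrics` is hypothetical) recomputes them; applied to the first matrix it reproduces the tabulated 85.64%, 90.45%, 80.47%, 93.21%, 88.94%, and 0.75, and the other two matrices give kappa values of 0.77 and 0.63.

```python
import numpy as np


def accuracy_metrics(cm):
    """Accuracy measures for a confusion matrix laid out as in the tables above.

    cm[i][j] = number of samples classified as class i whose reference class is j
    (rows = "Classified as", columns = "Reference").
    Returns (user's accuracy, producer's accuracy, overall accuracy, kappa).
    """
    cm = np.asarray(cm, dtype=float)
    n = cm.sum()
    row_tot = cm.sum(axis=1)  # totals per classified class
    col_tot = cm.sum(axis=0)  # totals per reference class
    diag = np.diag(cm)        # correctly classified counts

    users = diag / row_tot
    producers = diag / col_tot
    overall = diag.sum() / n
    chance = (row_tot * col_tot).sum() / n**2  # expected agreement by chance
    kappa = (overall - chance) / (1.0 - chance)
    return users, producers, overall, kappa


# First confusion matrix above; rows and columns are ordered OT, non-OT.
ua, pa, oa, kappa = accuracy_metrics([[173, 29], [42, 398]])
print(np.round(ua * 100, 2), np.round(pa * 100, 2), round(oa * 100, 2), round(kappa, 2))
```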

| Date | Metric | RF | ERT | LR |
|---|---|---|---|---|
| 8 August 2015, 06:00 UTC | POD | 71.01% | 70.29% | 68.84% |
| | FAR | 29.68% | 29.76% | 21.99% |
| 5 September 2015, 06:00 UTC | POD | 86.05% | 79.07% | 82.72% |
| | FAR | 26.47% | 26.02% | 25.37% |
| 8 November 2015, 06:00 UTC | POD | 84.02% | 78.11% | 82.29% |
| | FAR | 38.84% | 39.33% | 49.07% |
| 8 January 2016, 06:00 UTC | POD | 67.57% | 50.05% | 62.16% |
| | FAR | 36.36% | 30.05% | 45.54% |
| 8 March 2016, 06:00 UTC | POD | 84.57% | 77.16% | 80.86% |
| | FAR | 24.81% | 24.79% | 30.43% |
| 22 May 2016, 06:00 UTC | POD | 77.33% | 71.51% | 77.91% |
| | FAR | 37.97% | 40.42% | 43.39% |
| 8 June 2016, 06:00 UTC | POD | 75.68% | 73.56% | 78.16% |
| | FAR | 21.66% | 23.96% | 32.39% |
| 8 July 2016, 06:00 UTC | POD | 75.86% | 73.79% | 77.26% |
| | FAR | 38.05% | 36.66% | 35.84% |
| Average | POD | 77.76% | 71.69% | 76.27% |
| | FAR | 31.73% | 31.38% | 35.50% |
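
The table reports POD and FAR without stating their formulas. The sketch below assumes the standard contingency-table definitions, POD = hits / (hits + misses) and FAR = false alarms / (hits + false alarms), and that the Average row is the arithmetic mean of the eight per-date scores; that assumption is consistent with the table, since averaging the RF column gives 77.76% POD and 31.73% FAR. The function name `pod_far` and the counts in the usage line are hypothetical, not values from the paper.

```python
from statistics import mean


def pod_far(hits: int, misses: int, false_alarms: int) -> tuple[float, float]:
    """Probability of detection and false alarm ratio from detection counts.

    hits: reference OTs that were detected; misses: reference OTs not detected;
    false_alarms: detections with no matching reference OT.
    Returns NaN for a score whose denominator is zero.
    """
    pod = hits / (hits + misses) if hits + misses else float("nan")
    far = false_alarms / (hits + false_alarms) if hits + false_alarms else float("nan")
    return pod, far


# Hypothetical counts for a single scene (not from the paper).
pod, far = pod_far(hits=80, misses=20, false_alarms=30)

# Per-date RF scores (%) copied from the table above.
rf_pod = [71.01, 86.05, 84.02, 67.57, 84.57, 77.33, 75.68, 75.86]
rf_far = [29.68, 26.47, 38.84, 36.36, 24.81, 37.97, 21.66, 38.05]
print(f"RF average POD {mean(rf_pod):.2f}%, FAR {mean(rf_far):.2f}%")
```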

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Kim, M.; Im, J.; Park, H.; Park, S.; Lee, M.-I.; Ahn, M.-H. Detection of Tropical Overshooting Cloud Tops Using Himawari-8 Imagery. Remote Sens. 2017, 9, 685. https://doi.org/10.3390/rs9070685
