On the Objectivity of the Objective Function—Problems with Unsupervised Segmentation Evaluation Based on Global Score and a Possible Remedy

Abstract

1. Introduction

2. Materials and Methods

3. Results

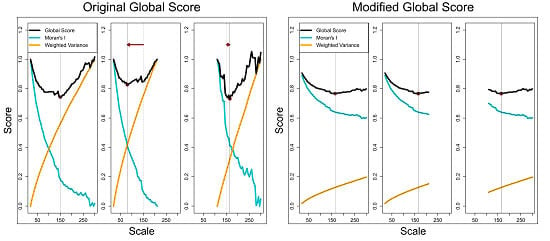

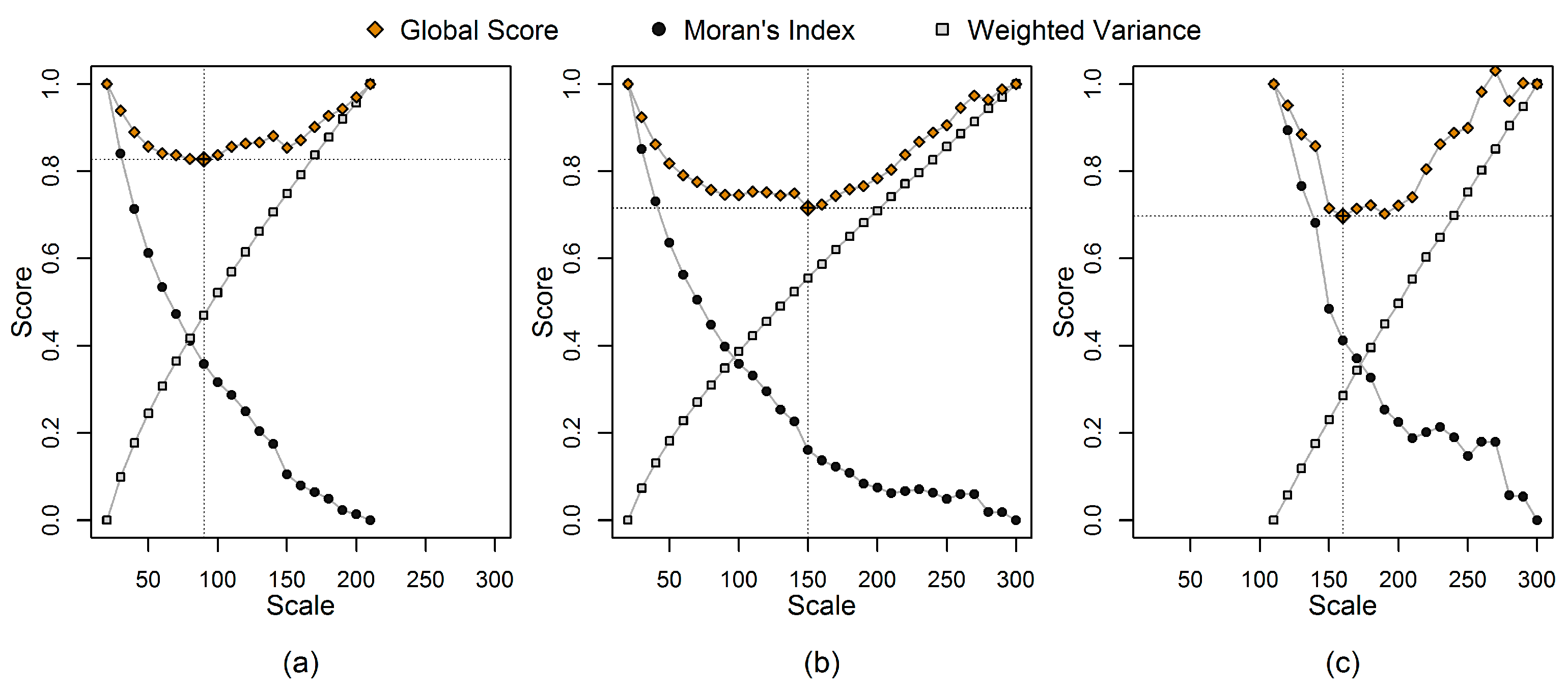

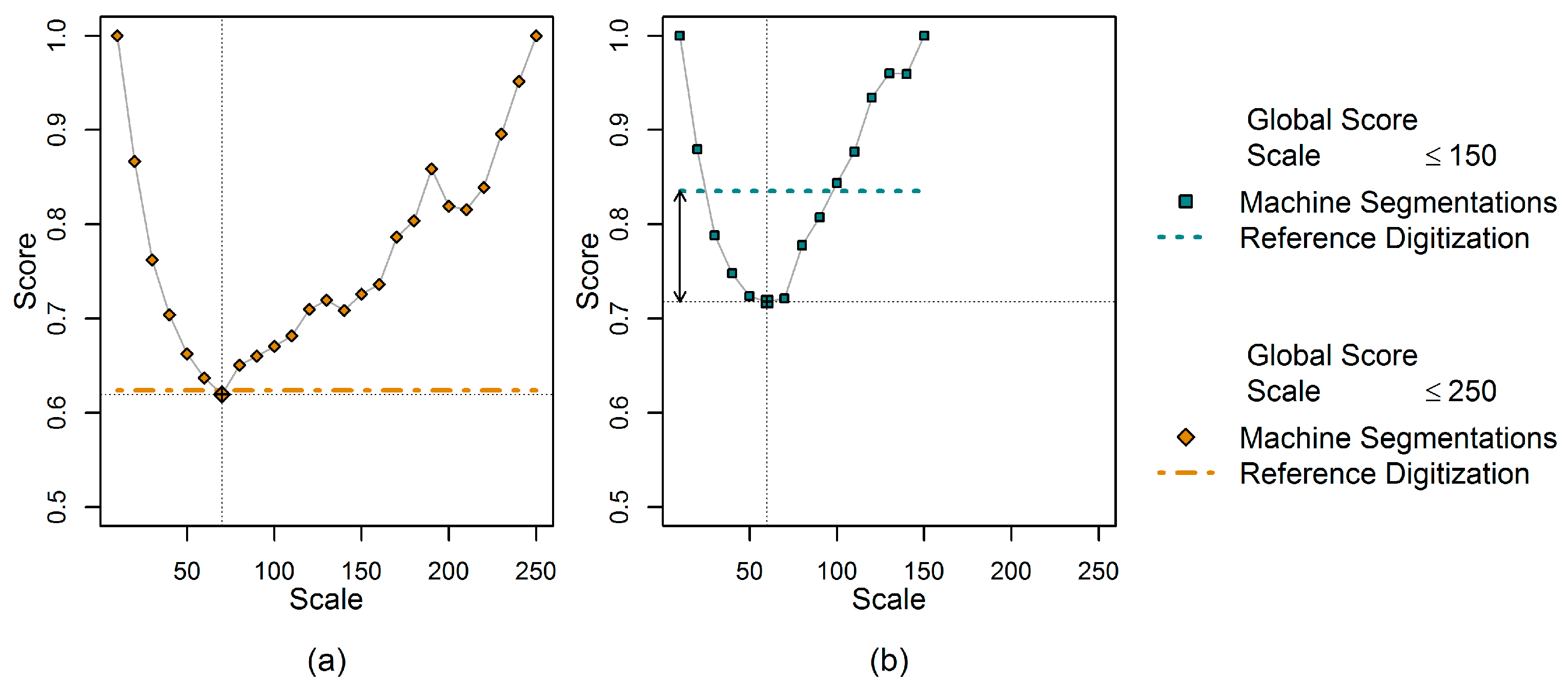

3.1. Illustration of the Sensitivity of GS to the User-Defined Range of Tested Segmentations

- the absolute values of GS change,

- the optimum (minimum) value of GS is shifted,

- the relative ranking of acceptable candidate solutions is altered.
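The three effects listed above can be reproduced with a small numerical sketch. It assumes the commonly used formulation in which GS is the sum of the min-max-normalized area-weighted variance and the min-max-normalized Moran's I, with the minimum and maximum taken over the set of tested segmentations. The scale values, the variance and Moran's I numbers, and all function names are invented for illustration and are not taken from the article's data.

```python
# Hypothetical illustration: GS depends on which candidate segmentations
# are included, because min-max normalization uses the extremes of that set.

def min_max(values):
    """Rescale values to [0, 1] using the min and max of this list only."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

def global_score(wvar, morans_i):
    """Assumed GS: normalized weighted variance plus normalized Moran's I."""
    return [v + i for v, i in zip(min_max(wvar), min_max(morans_i))]

def best_scale(scales, wvar, morans_i):
    """Return the scale with the lowest GS, together with all GS values."""
    gs = global_score(wvar, morans_i)
    return scales[gs.index(min(gs))], gs

# Invented example values (not from the article).
scales   = [10, 20, 30, 40, 50, 60, 70, 80]
wvar     = [5, 8, 12, 18, 26, 40, 60, 90]            # grows with scale
morans_i = [0.80, 0.60, 0.45, 0.35, 0.28, 0.24, 0.22, 0.21]  # shrinks with scale

# Case 1: the user tests the full range of scales.
full_best, gs_full = best_scale(scales, wvar, morans_i)

# Case 2: the user tests only the first five scales (10 to 50).
n = 5
sub_best, gs_sub = best_scale(scales[:n], wvar[:n], morans_i[:n])

print("optimum with full range:   ", full_best)
print("optimum with reduced range:", sub_best)
print("GS at scale 30, full vs reduced:", round(gs_full[2], 3), round(gs_sub[2], 3))
```

With these made-up numbers, the minimum of GS moves from scale 50 to scale 30 when the tested range is cut to the first five scales, the GS value of the unchanged segmentation at scale 30 changes, and scales 30 and 40 swap their relative order. The segmentations themselves are identical in both cases; only the set used for normalization differs.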

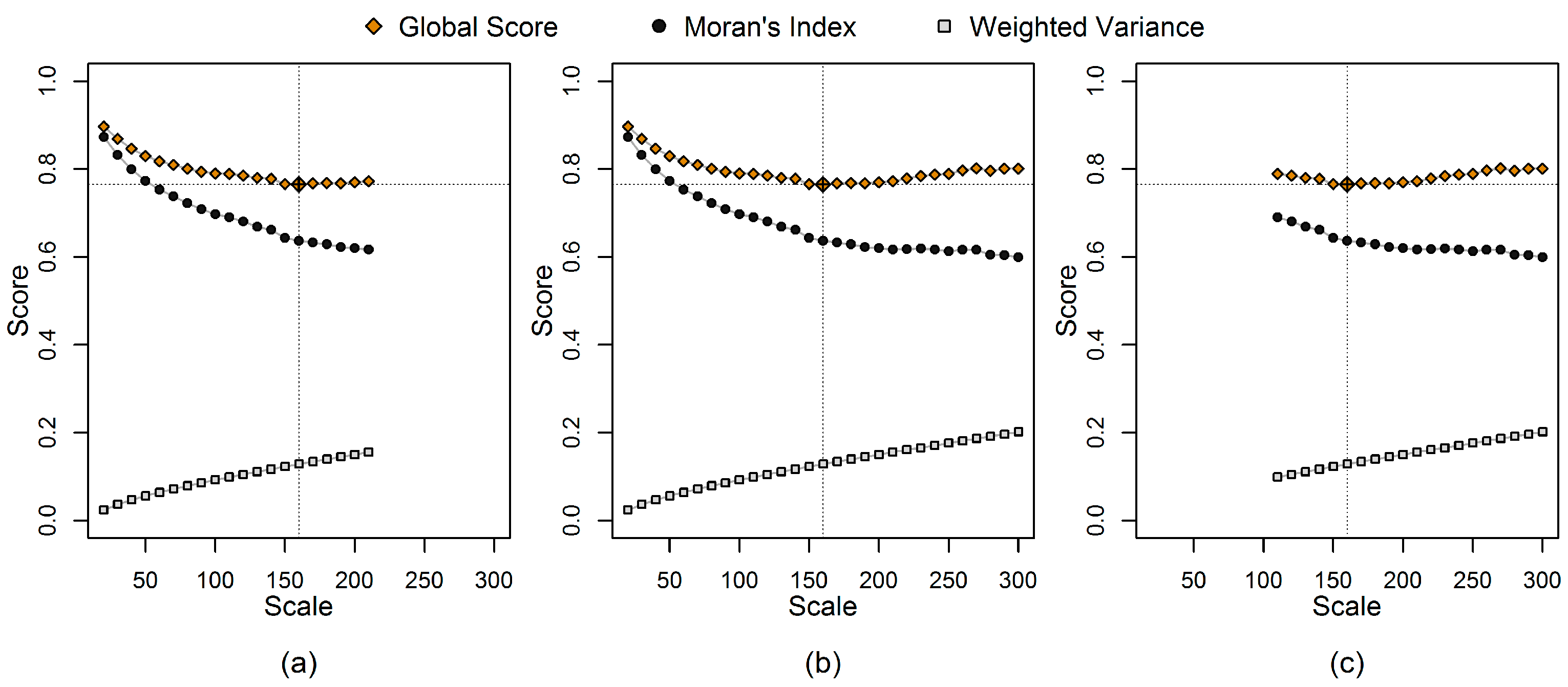

3.2. Illustration of an Alternative Normalization Scheme

4. Discussion

5. Conclusions

Supplementary Materials

Acknowledgments

Author Contributions

Conflicts of Interest

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Böck, S.; Immitzer, M.; Atzberger, C. On the Objectivity of the Objective Function—Problems with Unsupervised Segmentation Evaluation Based on Global Score and a Possible Remedy. Remote Sens. 2017, 9, 769. https://doi.org/10.3390/rs9080769
