1. Introduction

In recent years, population-based search algorithms have been applied to a diverse range of theoretical and practical problems. These metaheuristic search algorithms are well suited to optimization problems with a very large number of variables. Owing to the nature of such multi-variable problems, it is almost impossible to find an exact solution set for them within polynomially bounded computation time. Therefore, swarm-based algorithms are preferred for finding a near-optimal solution within a reasonable time. Some of the most well-known stochastic optimization methods are Ant Colony Optimization (ACO) [1], Artificial Bee Colony (ABC) algorithms [2], Biogeography-Based Optimization (BBO) [3], Genetic Algorithms (GA) [4], Particle Swarm Optimization (PSO) [5], the Cuckoo Search (CS) algorithm [6] and the Bees Algorithm (BA) [7].

The ACO algorithm mimics the behaviour of real ants seeking food. Real ants generally wander arbitrarily to find food and, once it is found, return to their colony while laying down a pheromone trail. Other ants that encounter such a path will follow and reinforce it, or in some cases revise it. Some theoretical and practical applications of ACO can be found in [8,9]. Some improvements to the search mechanism of ACO using derivative-free optimization methods can be found in [10].

The ABC is another global optimization algorithm, derived from the behaviour of honey bees while searching for food. ABC was first proposed in [2] for solving numerical optimization problems. The ABC has a colony model comprising three groups of bees: employed, onlooker and scout. The employed bees are responsible for looking for new, nutrient-rich food sources in the vicinity of the food sources that they have visited before [11]. Onlookers monitor the employed bees’ dancing and choose food sources based on the dances. Finally, the task of finding new food sources at random is given to the scout bees. The performance of ABC versus GA and PSO is evaluated on a set of multi-variable optimization problems in [12]. In [13], the parameters of a feedforward neural network for classifying magnetic resonance (MR) brain images are optimized by a variant of ABC called scaled chaotic ABC (SCABC). The performance of the SCABC algorithm is also compared against ABC, GA, and simulated annealing [14] in [13]. In [15], the ABC algorithm is used to select threshold values for image segmentation, where it outperformed the GA and PSO algorithms. An example of applying ABC to increase the throughput of wireless sensor networks is given in [16]. Regarding more recent variants of ABC, a velocity-based ABC (VBAC) algorithm is proposed in [17], where the onlooker bees apply the PSO search strategy to select the candidate solutions. An ABC algorithm based on information learning (ILABC) is proposed in [18], where a clustering partition is used to form subpopulations at each iteration to emphasize the exploitation process. A hybrid artificial bee colony (HABC) is proposed in [19], where the population size changes dynamically according to a life-cycle model for the bees. In this method, PSO-based comprehensive learning and a conjugate gradient descent search method are used for the exploitation of the search domain. A Migratory Multi-swarm Artificial Bee Colony (MiMSABC) is proposed in [20], where the bees are initially divided equally into groups, each with a different perturbation mode. As the algorithm proceeds, the subpopulation of each group may grow or shrink depending on the performance of that group. A comprehensive survey of the many types of bee-inspired optimization algorithms, whether inspired by the behaviour and mating of queen bees or by the foraging and communication of other bees, is presented in [21].
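The onlooker step described above can be illustrated with a short sketch. It assumes a minimization problem with non-negative costs and uses the quality measure 1 / (1 + cost), a common convention in ABC implementations; the function name `onlooker_choices` and its parameters are hypothetical and are not taken from the cited works.

```python
import random

def onlooker_choices(costs, n_onlookers, rng=random):
    """Assign each onlooker to a food source, favouring better sources.

    costs: non-negative cost of each food source (lower is better).
    Returns a list of source indices, one per onlooker.
    """
    # Assumed quality measure: 1 / (1 + cost), so a cost of 0 gives quality 1.
    quality = [1.0 / (1.0 + c) for c in costs]
    # Roulette-wheel selection: probability proportional to quality.
    return rng.choices(range(len(costs)), weights=quality, k=n_onlookers)
```

With this rule, a food source of much lower cost attracts most of the onlookers, while poorer sources still receive occasional visits.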

The BBO algorithm, inspired by biogeography, was introduced by Dan Simon in 2008 [3]. In BBO, a solution is represented as an island hosting a number of species, i.e., independent input variables. The migration probability of a species is a function of the Habitat Suitability Index (HSI) of an island, i.e., the fitness of a solution. After the migration process, as in other evolutionary algorithms, a mutation operation is applied to each input variable with some probability. A recent survey of the BBO algorithm can be found in [22].
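As a rough illustration of the migration and mutation steps, the following sketch performs one BBO generation for a minimization problem. It assumes a linear migration model in which better islands emigrate more and immigrate less; the function `bbo_step`, its parameters and the uniform-reset mutation are illustrative choices, not the formulation of [3].

```python
import random

def bbo_step(population, cost, bounds, p_mutate=0.05, rng=random):
    """One BBO generation (minimization); all names are illustrative."""
    n = len(population)
    # Rank islands so that the best (lowest cost, highest HSI) comes first.
    ranked = sorted(population, key=cost)
    # Assumed linear model: emigration falls and immigration rises with rank.
    emigration = [(n - k) / n for k in range(n)]   # best island -> 1.0
    immigration = [1.0 - mu for mu in emigration]  # best island -> 0.0
    lo, hi = bounds
    new_population = []
    for k, island in enumerate(ranked):
        child = list(island)
        for d in range(len(child)):
            if rng.random() < immigration[k]:
                # Source island chosen with probability proportional to emigration.
                source = rng.choices(ranked, weights=emigration, k=1)[0]
                child[d] = source[d]
            if rng.random() < p_mutate:
                # Mutation: reset the variable uniformly within the bounds.
                child[d] = rng.uniform(lo, hi)
        new_population.append(child)
    return new_population
```

Because the best island has an immigration rate of zero in this model, it is altered only by mutation, which acts as a simple form of elitism when `p_mutate` is small.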

The GA and PSO are two other well-known optimization algorithms, inspired by two different biological phenomena. The former works according to the evolutionary ideas of natural selection and genetics, and the latter imitates the “social behaviour of bird flocking or fish schooling” [23]. The two techniques have been used in a vast number of applications. As an example, in [24], GA is successfully used for calibrating the walking controller of a humanoid robot. Also, the performances of ACO, PSO, and GA on the radio frequency magnetron sputtering process are compared in [25]. A recent variant of the GA known as the “Quantum Genetic Algorithm” is reviewed in [26]. Regarding the PSO variants, a superior solution guided PSO (SSG-PSO) is proposed in [27], where a set of superior solutions is constructed for particles to learn from. In this variant, a mutation operation and gradient-based or derivative-free local search methods are employed. A scatter learning PSO algorithm (SLPSOA) is proposed in [28], where a pool of high-quality solutions is constructed as the exemplar pool. Each particle then selects an exemplar using a roulette wheel to update its position. An enhanced comprehensive learning particle swarm optimization (ECLPSO) is proposed in [29], where particles’ velocities have an additional perturbation term and use different exemplars for each dimension. A recent comprehensive survey on the PSO and its applications can be found in [30].

The CS, another metaheuristic search algorithm, was introduced by Yang and Deb in 2009 [6]. This algorithm imitates the “obligate brood parasitic behaviour” of some cuckoos, which lay their eggs in the nests of other birds [31]. A solution is represented by an egg, which can be discovered by the host bird with a specific likelihood. The host bird can then follow one of two policies: toss the egg out, or abandon the nest and build a new one. As such, the highest quality nests are carried over through the generations, so that the best solutions are kept throughout the process. A review of the CS algorithm and its applications can be found in [32]. A conceptual comparison of the CS to ABC and PSO can be found in [33].

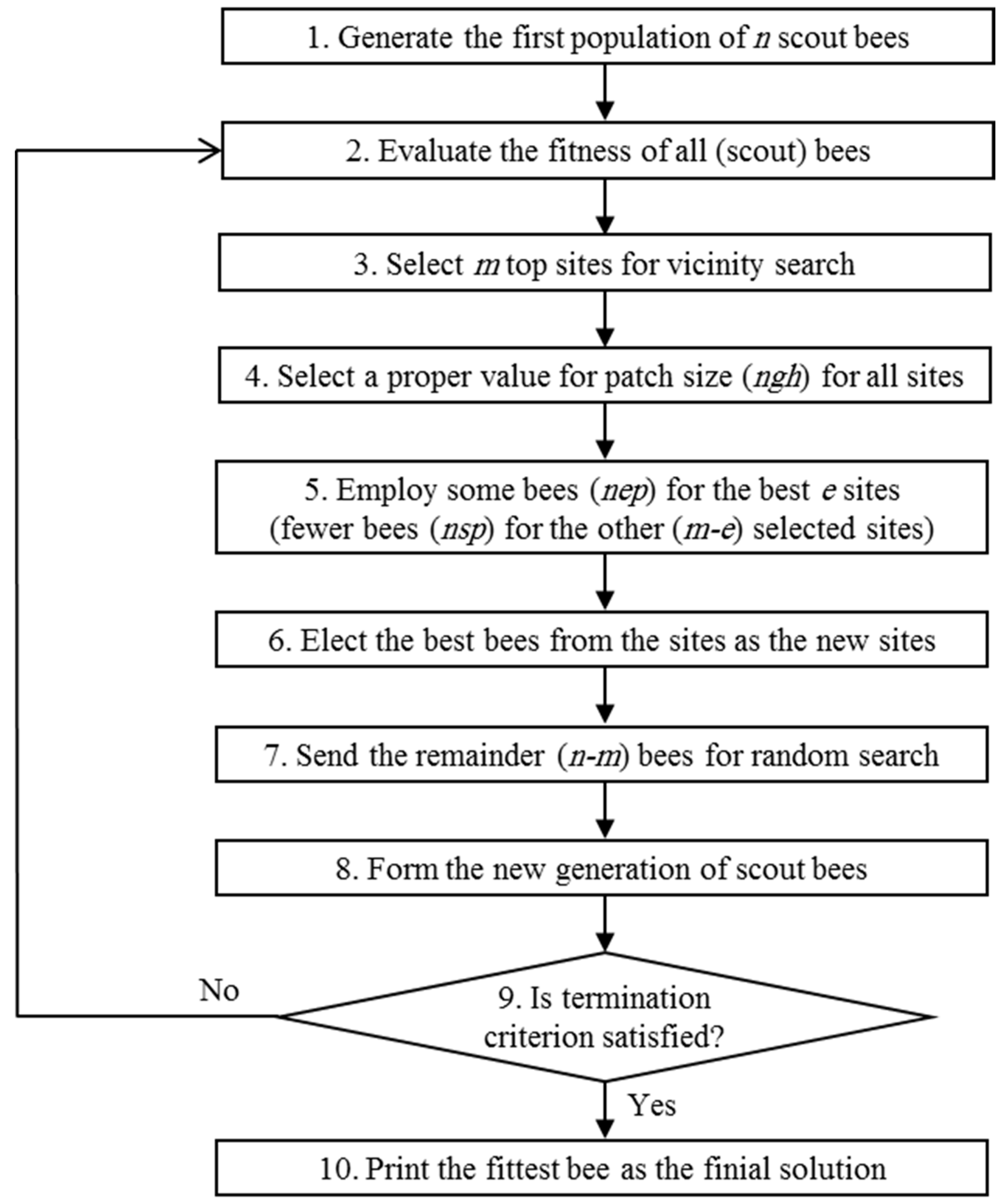

The BA is another optimization metaheuristic derived from the food-seeking behaviour of honey bees in nature. A detailed description of BA can be found in Section 2. A comprehensive survey and comparison of BA to ABC and PSO is presented in [34]. The basic BA and its other versions have been widely used in various problems, including manufacturing cell formation [35], integer-valued optimization for designing a gearbox [36], printed-circuit board (PCB) assembly configuration [37,38], machine job scheduling [39], adjusting the membership functions of a fuzzy control system [40], neural network training [41], data clustering [42], an automated quiz creator system [43], and channel estimation within an MC-CDMA (Multi-Carrier Code Division Multiple Access) communication system [44].

In the literature, various versions of BA, such as the enhanced BA, the standard BA and the modified BA, were introduced after the basic BA. In [45], a fairly detailed review of many different versions of BA is presented. The enhanced BA has higher computational complexity because of its fuzzy subsystem, which selects potentially better sites or patches (i.e., solutions) for better exploration, though it reduces the number of setting parameters. The standard BA increases accuracy and speed by applying two new procedures to the basic version, at the cost of higher complexity. In the modified BA, a collection of new operators is added to the basic BA, which results in a performance improvement and, consequently, considerably more complexity than all the other versions. For comparisons of the performance of the BA family with those of other population-based algorithms, such as GA, PSO or ACO, see [34,46,47]. It is worth mentioning that, based on these studies, the Bees Algorithm family outperforms all the other population-based algorithms in continuous-variable optimization problems. Because the benchmark set used in these studies is exactly the same as the one in this paper, the performance of the proposed grouped bees algorithm is compared only against other BA variants.

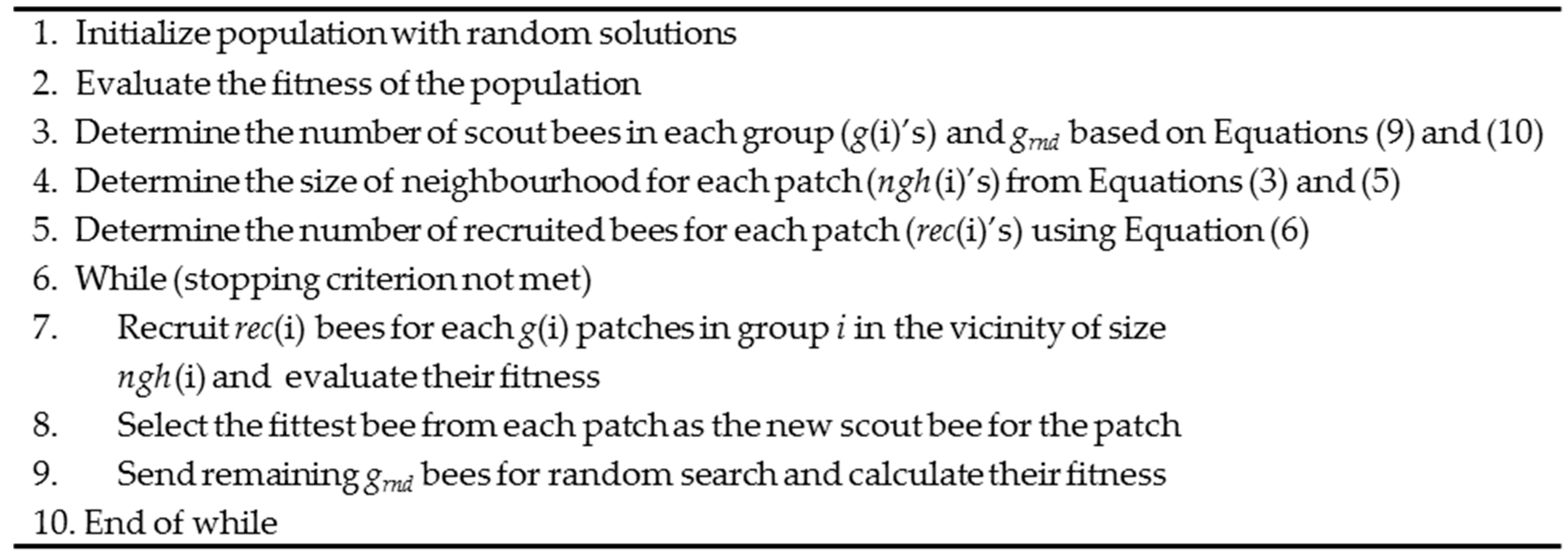

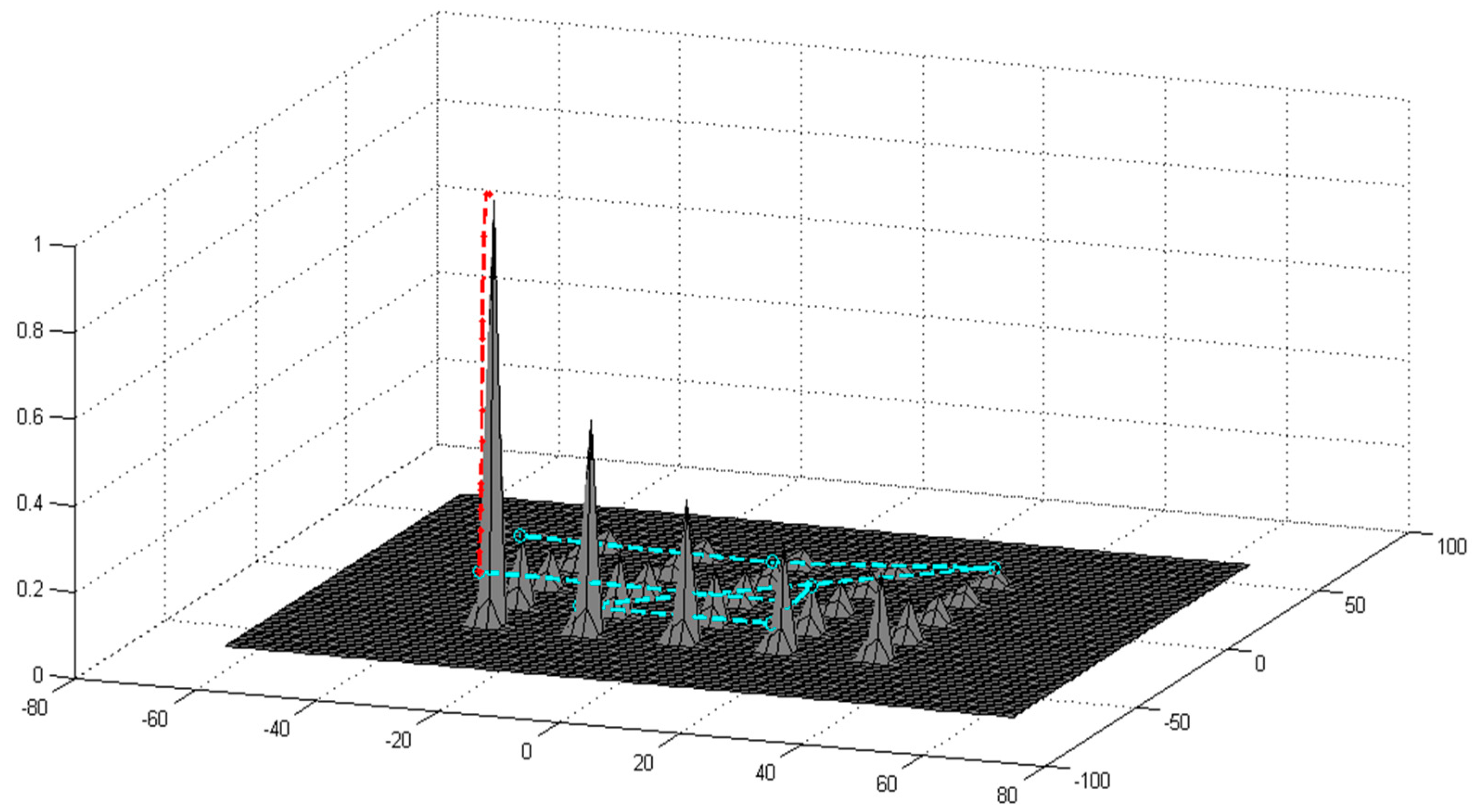

In this paper, a grouped version of the Bees Algorithm is introduced for multi-variable optimization problems. In this variant, bees are grouped into two or more search groups. In the first groups, more bees are recruited to search smaller but richer patches. In the last groups, more scout bees attempt to discover new patches within larger neighbourhoods. Well-defined formulas are used to set the parameters of the algorithm, which reduces the number of adjustable parameters that have to be set. The original behaviour of the Bees Algorithm is maintained without any additional system or extra operators. The remainder of this paper is organized as follows. The proposed Grouped Bees Algorithm (GBA) is elaborated in Section 2, where the core idea of the basic Bees Algorithm and its different modifications are also reviewed. In Section 3, the benchmark functions for evaluating the proposed algorithm and the performance of the algorithm on each of them are discussed. A general interpretation of the results in view of other BA variants and future research directions are given in Section 4. Finally, Section 5 summarizes the paper.
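To make the grouping idea concrete, the sketch below builds a per-group parameter schedule in which the neighbourhood radius grows and the number of recruited bees per patch shrinks with the group number. The interpolation rules, the function name `group_schedule` and all of its parameters are placeholders chosen for illustration only; they are not the formulas of the proposed GBA, which are given in Section 2.

```python
def group_schedule(n_groups, search_range, min_radius, max_recruits):
    """Illustrative per-group settings (placeholder formulas, not the paper's)."""
    groups = []
    for i in range(1, n_groups + 1):
        # t runs from 0 (first group) to 1 (last group).
        t = (i - 1) / (n_groups - 1) if n_groups > 1 else 0.0
        # Assumed: radius grows geometrically from min_radius to half the range.
        radius = min_radius * ((search_range / 2) / min_radius) ** t
        # Assumed: recruits per patch fall linearly, with at least one bee.
        recruits = max(1, round(max_recruits * (1 - t)))
        # Assumed: later groups scout more patches.
        patches = i
        groups.append(
            {"group": i, "patches": patches, "recruits": recruits, "radius": radius}
        )
    return groups
```

Under these assumptions, the first group concentrates many bees on a few small patches, while the last group spreads single scouts over more patches with wide neighbourhoods.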

4. Discussion

To summarize the above-mentioned experiments, GBA is not only substantially faster than the basic version of BA, but also more accurate than the standard BA and similar in accuracy to the complex modified BA. It is notable that the modified BA is not considered to be one of the main implementations of the Bees Algorithm [45]. The modified BA may be considered a hybrid kind of BA that employs various operators, including mutation, crossover, interpolation and extrapolation, whereas the GBA does not introduce any new operator to the basic BA and has significantly less conceptual and computational complexity than all the other variants built on top of the basic BA.

As mentioned, the performance of GA, PSO, and ACO on exactly the same benchmark functions used in this paper can be found in [34,46,47]. In addition to the mathematical functions discussed here, GBA has shown great performance in two other applications. It was used for calibrating the parameters of a scripted AI in a boxing simulation game [63], where the fuzzy AI could not defeat the scripted AI after the GBA-based calibration procedure. GBA was also used to tune the parameters of a hybrid controller for bipedal walking [64]. The parameters were adjusted so that a trajectory generator module, a subsystem of the hybrid controller, produced stable walking trajectories.

The notion of grouping is to organize all the particles hierarchically so that the degree of awareness of a group's search decreases as its group number increases. This is achieved by enlarging the search neighbourhood for later groups, which yields a spectrum of groups with different precisions in the exploration strategy. Although the basic BA and some of its variants suffer from discontinuity more than other metaheuristics such as GA and PSO, the concept of grouping can be generalized to other population-based algorithms. In GA, for example, the whole population could be divided into several groups by the same policy as in GBA, with a freedom factor assigned to each group. The freedom factor of a group would define the degree of freedom of a new child produced by the selected parents. In other words, it determines how far a new solution is allowed to move through the search space under a GA operator such as crossover or mutation. Clearly, as the group number increases, the degree of freedom rises. The same policy can be applied to PSO and other algorithms in order to implement the idea of grouping.
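One possible reading of the freedom factor for GA is sketched below as a Gaussian mutation whose step size grows with the group number. The linear rule `group / n_groups`, the function name `grouped_mutation` and the clipping to the bounds are assumptions made for illustration; the text above does not prescribe a specific formula.

```python
import random

def grouped_mutation(parent, group, n_groups, bounds, rng=random):
    """Gaussian mutation whose step grows with the group number (hypothetical)."""
    lo, hi = bounds
    # Assumed freedom factor: linear in the group number, in (0, 1].
    freedom = group / n_groups
    # The standard deviation scales with the freedom factor and the range.
    sigma = freedom * (hi - lo) / 2
    # Perturb every variable and clip the result to the search bounds.
    return [min(hi, max(lo, x + rng.gauss(0.0, sigma))) for x in parent]
```

Children produced in the first group therefore stay close to their parents, whereas those in the last group may land almost anywhere in the search space.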

A further extension of this work will focus on comparing and discussing the performance of GBA against the genetic algorithm and simulated annealing on discrete input spaces and combinatorial problems, such as the travelling salesman problem and the component placement problem, initially discussed in [14]. Moreover, the proposed GBA could be compared against a number of more recent advances in the metaheuristic optimization literature on high-dimensional problems.