Vacuum Thermoforming Process: An Approach to Modeling and Optimization Using Artificial Neural Networks

Abstract

1. Introduction

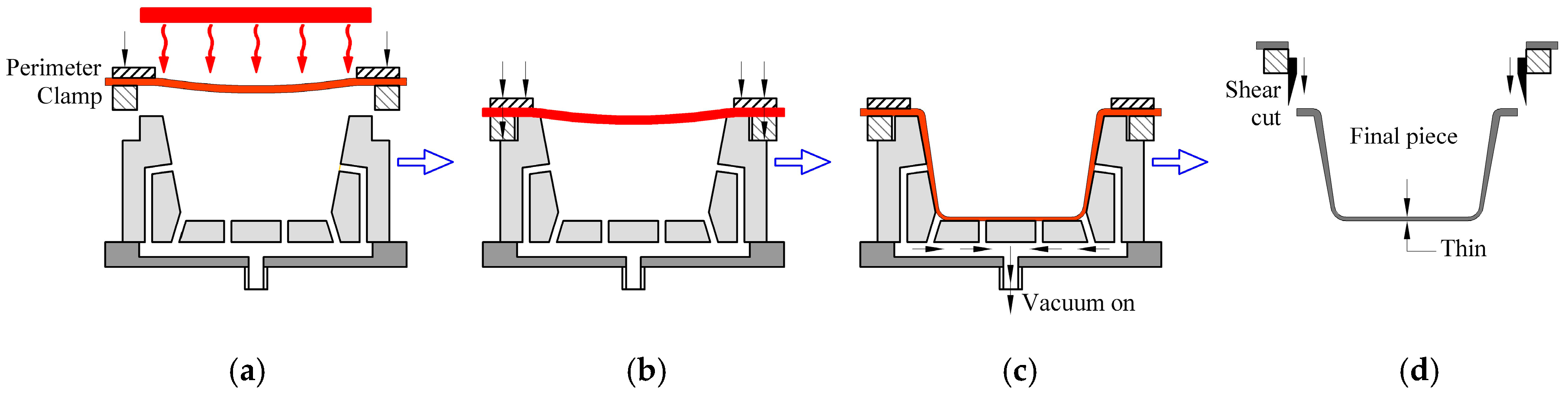

2. Experimental Work

2.1. Material, Equipment, and System

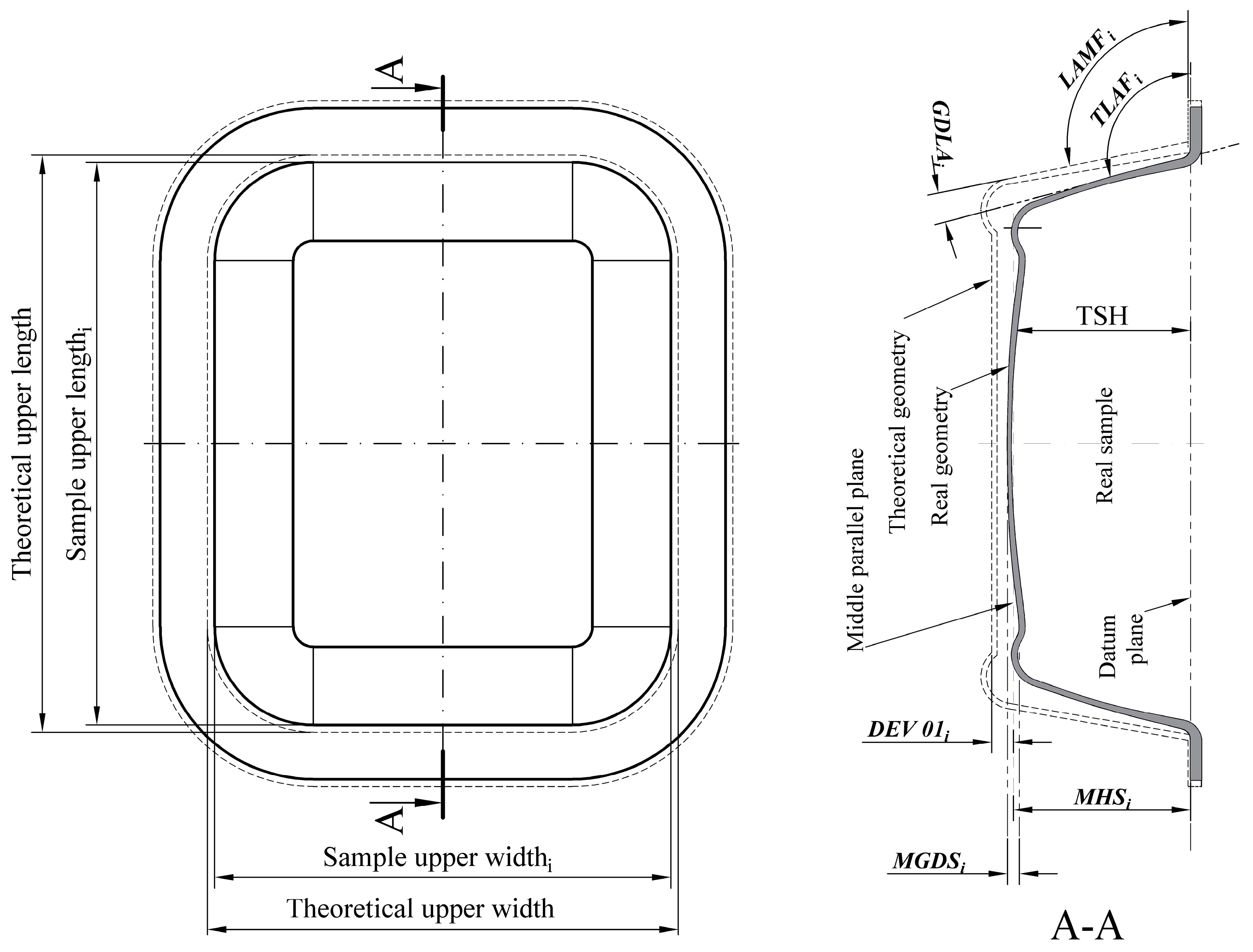

2.2. Parameters and Measurement Procedure

2.3. Experimental Study

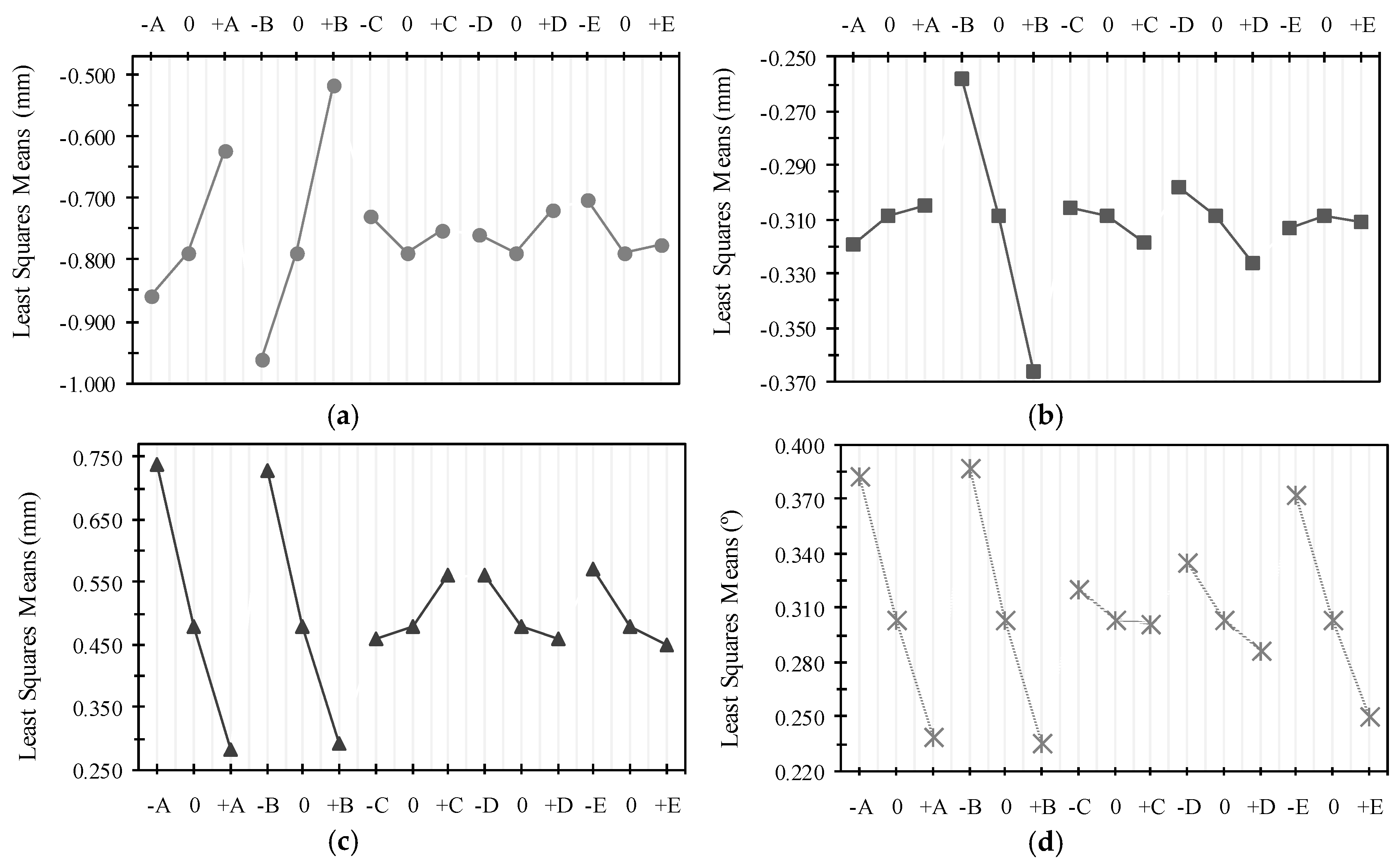

2.4. Analysis of Data

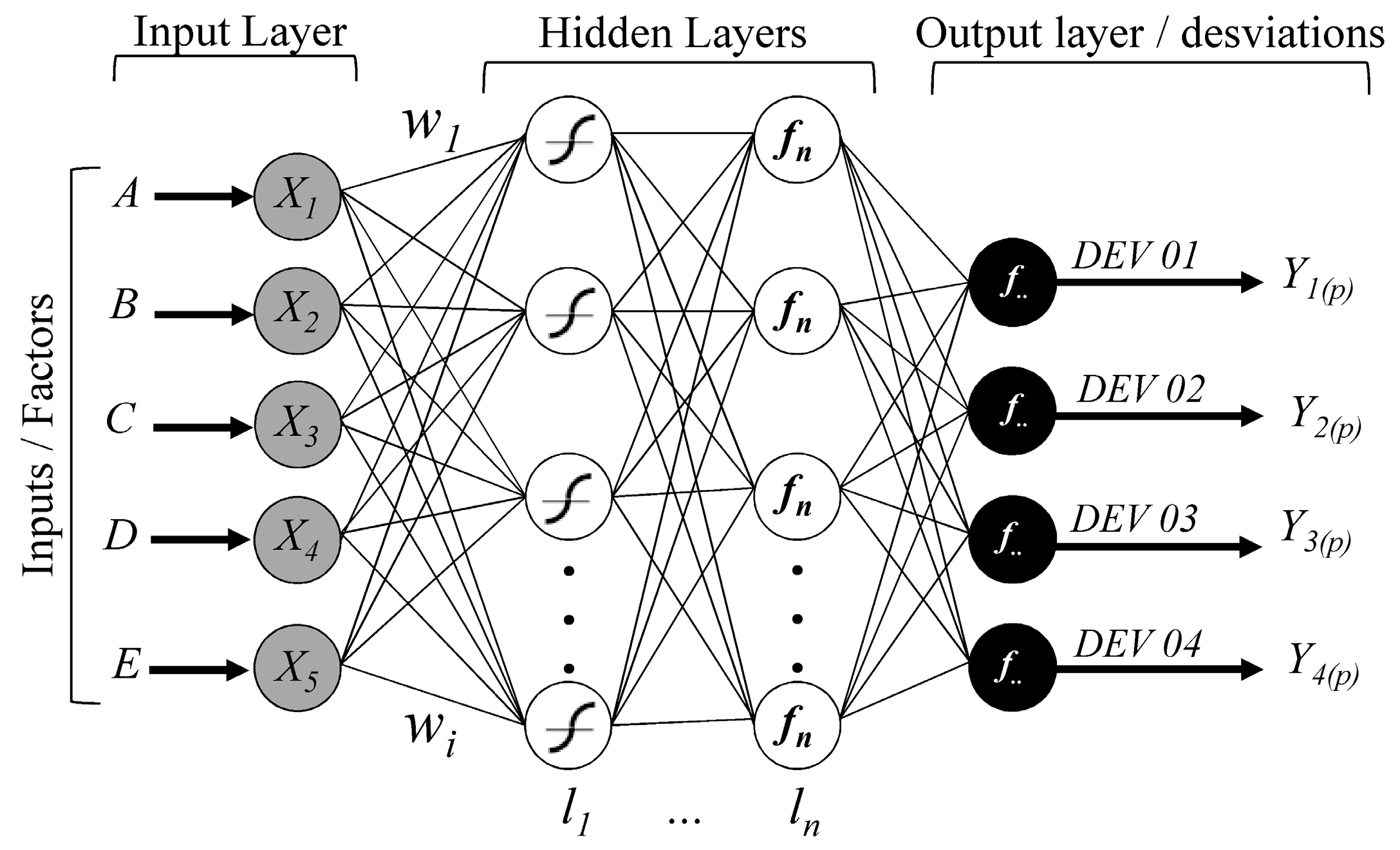

3. Development of Modeling and Optimization of Process Based on ANN Models

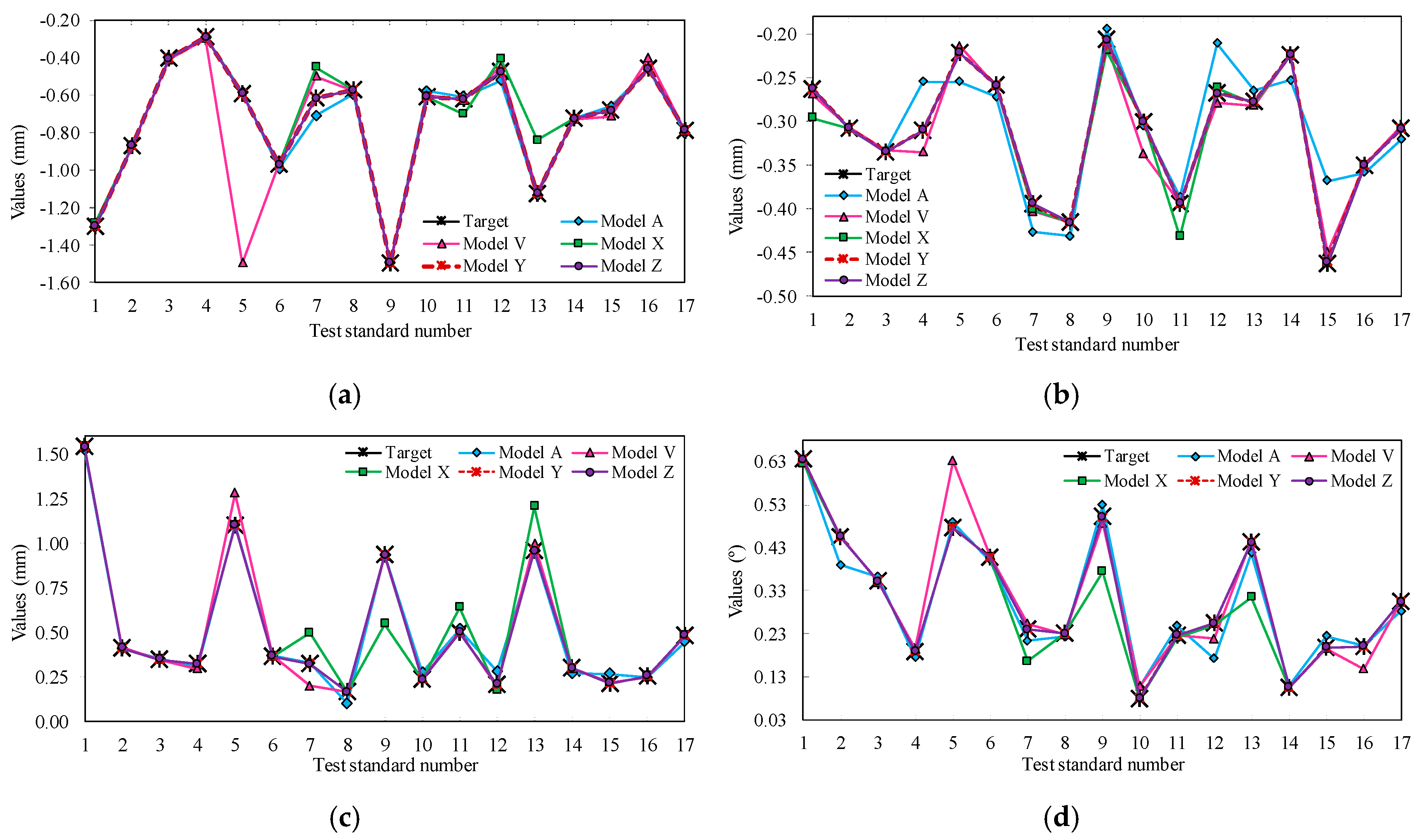

3.1. Modeling, Tests, and Selection of Artificial Neural Network Models

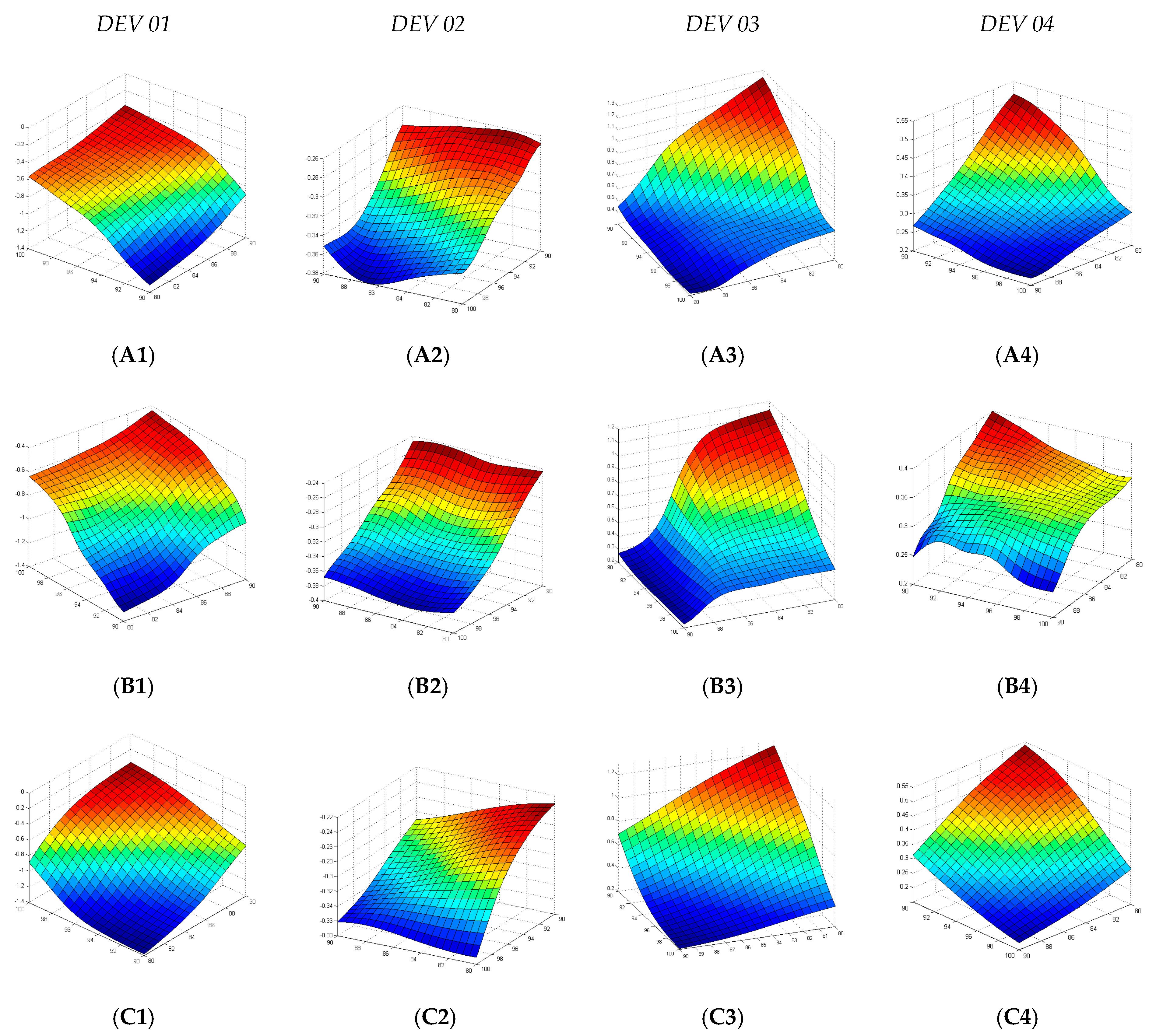

3.2. Modeling and Test of Multi-Criteria Optimization Algorithm Models

3.3. Confirmation Experiment

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Level | Factor A (s a) | Factor B (% a) | Factor C (bar and cm/s a) | Factor D (s a) | Factor E (mbar a) |
|---|---|---|---|---|---|
| 1 (−1) | 80 | 90 | 3.4 and 18.4 (100%) | 7.2 | 10 |
| 2 (+1) | 90 | 100 | 4.0 and 21.6 (85%) | 9.0 | 15 |

| Standard order test | DEV 01 (mm a), Mean b | DEV 01, AE e | DEV 01, S | DEV 02 (mm a), Mean b | DEV 02, AE e | DEV 02, S | DEV 03 (° a), Mean b | DEV 03, AE e | DEV 03, S | DEV 04 (mm a), Mean b | DEV 04, AE e | DEV 04, S |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | −1.300 | ±0.040 | 0.025 | −0.263 | ±0.039 | 0.024 | 1.542 c | ±0.104 | 0.065 | 0.635 | ±0.023 | 0.015 |
| 2 | −0.871 | ±0.461 | 0.290 | −0.308 | ±0.040 | 0.025 | 0.411 | ±0.222 | 0.139 | 0.455 | ±0.098 | 0.062 |
| 3 | −0.408 | ±0.192 | 0.121 | −0.335 | ±0.253 | 0.159 | 0.349 | ±0.160 | 0.100 | 0.351 | ±0.121 | 0.076 |
| 4 | −0.293 | ±0.327 | 0.206 | −0.310 | ±0.133 | 0.084 | 0.323 | ±0.134 | 0.084 | 0.188 | ±0.154 | 0.097 |
| 5 | −0.596 | ±0.129 | 0.081 | −0.222 | ±0.010 | 0.006 | 1.100 | ±0.123 | 0.077 | 0.476 | ±0.066 | 0.041 |
| 6 | −0.971 | ±0.145 | 0.091 | −0.259 | ±0.035 | 0.022 | 0.366 | ±0.201 | 0.126 | 0.407 | ±0.021 | 0.013 |
| 7 | −0.618 | ±0.131 | 0.082 | −0.395 | ±0.054 | 0.034 | 0.321 | ±0.470 | 0.296 | 0.239 | ±0.006 | 0.004 |
| 8 | −0.576 | ±0.467 | 0.293 | −0.416 | ±0.072 | 0.045 | 0.164 | ±0.200 | 0.125 | 0.230 | ±0.020 | 0.013 |
| 9 | −1.498 | ±0.270 | 0.170 | −0.207 | ±0.087 | 0.054 | 0.933 | ±0.132 | 0.083 | 0.501 | ±0.095 | 0.060 |
| 10 | −0.611 | ±0.283 | 0.178 | −0.301 | ±0.015 | 0.010 | 0.234 | ±0.152 | 0.096 | 0.078 | ±0.064 | 0.040 |
| 11 | −0.625 | ±0.428 | 0.269 | −0.394 | ±0.068 | 0.043 | 0.500 | ±0.450 | 0.283 | 0.227 | ±0.007 | 0.005 |
| 12 | −0.476 | ±0.226 | 0.142 | −0.268 | ±0.038 | 0.024 | 0.208 | ±0.069 | 0.043 | 0.253 | ±0.098 | 0.061 |
| 13 | −1.128 | ±0.241 | 0.152 | −0.278 | ±0.060 | 0.038 | 0.955 | ±0.364 | 0.229 | 0.442 | ±0.001 | 0.000 |
| 14 | −0.728 | ±0.483 | 0.303 | −0.224 | ±0.016 | 0.010 | 0.297 | ±0.101 | 0.063 | 0.105 | ±0.067 | 0.042 |
| 15 | −0.684 | ±0.200 | 0.126 | −0.463 | ±0.028 | 0.018 | 0.214 | ±0.042 | 0.027 | 0.198 | ±0.063 | 0.039 |
| 16 | −0.461 | ±0.449 | 0.282 | −0.350 | ±0.105 | 0.066 | 0.254 | ±0.031 | 0.020 | 0.200 | ±0.034 | 0.021 |
| 17 d | −0.789 | ±0.079 | 0.049 | −0.309 | ±0.019 | 0.012 | 0.481 | ±0.276 | 0.174 | 0.304 | ±0.045 | 0.029 |

| Factor | DEV 01, F(0) | DEV 01, p-Value | DEV 02, F(0) | DEV 02, p-Value | DEV 03, F(0) | DEV 03, p-Value | DEV 04, F(0) | DEV 04, p-Value |
|---|---|---|---|---|---|---|---|---|
| A | 10.2 a | 0.005 | 0.42 | 0.542 | 89.7 a | 0.000 | 77.72 a | 0.000 |
| B | 37.0 a | 0.000 | 22.5 a | 0.000 | 82.6 a | 0.000 | 86.23 a | 0.000 |
| C | 0.30 | 0.592 | 1.44 | 0.246 | 4.6 a | 0.046 | 8.93 a | 0.008 |
| D | 0.98 | 0.336 | 0.02 | 0.899 | 6.43 a | 0.021 | 56.03 a | 0.000 |
| E | 0.08 | 0.776 | 0.34 | 0.567 | 4.50 a | 0.049 | 1.36 | 0.259 |
| A*B | 1.92 | 0.184 | 3.91 | 0.065 | 52.1 a | 0.000 | 43.81 a | 0.000 |
| A*C | 4.86 a | 0.042 | 0.27 | 0.612 | 2.73 | 0.117 | 6.24 a | 0.023 |
| A*D | 6.13 a | 0.024 | 2.27 | 0.150 | 1.29 | 0.271 | 5.58 a | 0.030 |
| A*E | 1.87 | 0.189 | 0.29 | 0.596 | 2.63 | 0.123 | 2.04 | 0.171 |
| B*C | 5.66 a | 0.029 | 5.04 a | 0.038 | 0.01 | 0.943 | 0.42 | 0.525 |
| B*D | 0.05 | 0.833 | 0.12 | 0.739 | 6.98 a | 0.017 | 30.14 a | 0.000 |
| B*E | 0.63 | 0.438 | 0.89 | 0.359 | 0.08 | 0.783 | 2.45 | 0.136 |
| C*D | 0.03 | 0.867 | 0.14 | 0.709 | 1.81 | 0.196 | 1.54 | 0.232 |
| C*E | 3.02 | 0.100 | 1.12 | 0.305 | 2.23 | 0.154 | 29.55 a | 0.000 |
| D*E | 4.89 a | 0.041 | 1.38 | 0.257 | 0.37 | 0.550 | 0.25 | 0.817 |

| Model name | Model error (MAE) | Model error (MSE) | Processing time | No. of training data | No. of test data | ANN architecture | ANN training function | ANN transfer function (1st layer) | ANN transfer function (hidden layers) | ANN best epoch |
|---|---|---|---|---|---|---|---|---|---|---|
| Z | 0.0001 | 0.0000001 | 5.347 | 14 | 6 | 10-8-4 | ‘trainlm’; mu_max = 1 × 10^308 | ‘tansig’ | ‘tansig’ | 461 |
| Y | 0.0002 | 0.0000003 | 6.728 | 12 | 4 | 10-8-4 | ‘trainlm’; mu_max = 1 × 10^308 | ‘tansig’ | ‘tansig’ | 873 |
| X | 0.0301 | 0.0000163 | 8.004 | 11 | 3 | 10-8-4 | ‘trainlm’; mu_max = 1 × 10^308 | ‘tansig’ | ‘tansig’ | 832 |
| W | 0.0877 | 0.0720541 | 39.575 | 11 | 3 | 10-8-4 | ‘traingd’; η = 0.001; ρ = 0.001; τ = 0.001 | ‘tansig’ | ‘tansig’ | 10359 |
| V | 0.0303 | 0.0000795 | 6.192 | 11 | 3 | 10-8-4 | ‘trainlm’; mu_max = 1 × 10^308 | ‘tansig’ | ‘purelin’, ‘tansig’ | 685 |
| T | 0.0164 | 0.0000976 | 220.040 | 11 | 3 | 16-8-4 | ‘trainlm’; mu_max = 1 × 10^308 | ‘tansig’ | ‘purelin’, ‘tansig’ | 19855 |
| P | 0.0319 | 0.0000000 | 58.800 | 11 | 3 | 5-4-8-4 | ‘trainlm’; mu_max = 1 × 10^308 | ‘tansig’ | ‘purelin’, ‘tansig’, ‘purelin’ | 762 |
| O | 0.0085 | 0.0000105 | 64.461 | 11 | 3 | 8-8-8-4 | ‘trainlm’; mu_max = 1 × 10^308 | ‘tansig’ | ‘purelin’, ‘tansig’, ‘purelin’ | 4482 |
| M | 0.0320 | 0.0000620 | 140.268 | 11 | 3 | 16-8-8-4 | ‘trainlm’; mu_max = 1 × 10^308 | ‘tansig’ | ‘purelin’, ‘tansig’, ‘purelin’ | 7444 |
| K | 0.1529 | 0.1669912 | 74.772 | 11 | 3 | 24-12-8-4 | ‘traingd’; η = 0.001; ρ = 0.001; τ = 0.001 | ‘tansig’ | ‘purelin’, ‘tansig’, ‘purelin’ | 11882 |
| H | 0.0256 | 0.0000000 | 490.485 | 11 | 3 | 24-12-8-4 | ‘trainlm’; mu_max = 1 × 10^308 | ‘tansig’ | ‘purelin’, ‘tansig’, ‘purelin’ | 9340 |
| D | 0.1832 | 0.1938314 | 7.900 | 11 | 3 | 32-16-8-4 | ‘traingd’; η = 0.001; ρ = 0.001; τ = 0.001 | ‘tansig’ | ‘purelin’, ‘tansig’, ‘purelin’ | 1656 |
| A | 0.02135 | 0.0005825 | 205.544 | 11 | 3 | 32-16-8-4 | ‘trainlm’; mu_max = 1 × 10^308 | ‘tansig’ | ‘purelin’, ‘tansig’, ‘purelin’ | 3507 |
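To make the "ANN architecture" and transfer-function columns more concrete, the following is a minimal sketch of a forward pass through a 10-8-4 network with ‘tansig’ in every layer, as listed for model Z. It is not the authors' implementation: the five inputs (taken here to be the coded factors A to E), the four outputs (taken to be DEV 01 to DEV 04), the random untrained weights, and all function names are assumptions made for illustration. The only fact relied on is that the ‘tansig’ transfer function is mathematically equivalent to the hyperbolic tangent.

```python
import math
import random


def tansig(x):
    # 'tansig' is mathematically equivalent to tanh(x).
    return math.tanh(x)


def init_layer(n_in, n_out, rng):
    # Hypothetical initialisation: small random weights and biases (untrained).
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    biases = [rng.uniform(-0.5, 0.5) for _ in range(n_out)]
    return weights, biases


def forward(inputs, layers, activations):
    # Propagate the input vector through each layer in turn.
    signal = inputs
    for (weights, biases), act in zip(layers, activations):
        signal = [
            act(sum(w * s for w, s in zip(row, signal)) + b)
            for row, b in zip(weights, biases)
        ]
    return signal


rng = random.Random(0)
# Assumption: 5 inputs (factors A-E), then layers of 10, 8 and 4 neurons.
sizes = [5, 10, 8, 4]
layers = [init_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]
activations = [tansig, tansig, tansig]

# One hypothetical run in coded units (-1 = low level, +1 = high level).
coded_run = [-1.0, 1.0, -1.0, 1.0, 0.0]
outputs = forward(coded_run, layers, activations)
print(outputs)
```

Because every layer ends in a hyperbolic tangent, each of the four outputs lies strictly between −1 and 1, so targets would need to be scaled into that range before training under this reading of the table.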

| Optimization model | Factor | Domain lower bound (≤ Xi) | Domain upper bound (Xi ≤) | Discretization unit | Generated points |
|---|---|---|---|---|---|
| Variation “A” | A | 80 | 90 | 5 | 3 |
| | B | 90 | 100 | 5 | 3 |
| | C | 85 | 100 | 7.5 | 3 |
| | D | 7.2 | 9.0 | 0.9 | 3 |
| | E | 10 | 15 | 2.5 | 3 |
| | Total | | | | 243 |
| Variation “B” | A | 75 | 95 | 2.2 | 10 |
| | B | 85 | 105 | 2.5 | 9 |
| | C | 77.5 | 100 | 2.5 | 10 |
| | D | 6.3 | 9.9 | 0.9 | 5 |
| | E | 7.5 | 15 | 1.25 | 7 |
| | Total | | | | 31500 |
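The totals in the table above are consistent with a full grid over the five factors. The sketch below reproduces them under one assumption that the table does not state explicitly: the number of points per factor is taken as floor((upper − lower) / step) + 1, and the total is the product over the factors. The dictionary layout and function names are illustrative only.

```python
from decimal import Decimal
from math import prod

# (lower bound, upper bound, discretization unit), transcribed from the table.
GRIDS = {
    "A": {
        "A": ("80", "90", "5"),
        "B": ("90", "100", "5"),
        "C": ("85", "100", "7.5"),
        "D": ("7.2", "9.0", "0.9"),
        "E": ("10", "15", "2.5"),
    },
    "B": {
        "A": ("75", "95", "2.2"),
        "B": ("85", "105", "2.5"),
        "C": ("77.5", "100", "2.5"),
        "D": ("6.3", "9.9", "0.9"),
        "E": ("7.5", "15", "1.25"),
    },
}


def points_per_factor(lower, upper, step):
    # Decimal avoids binary floating-point surprises such as 3.6 / 0.9.
    lo, hi, st = Decimal(lower), Decimal(upper), Decimal(step)
    # Assumed counting rule: whole steps that fit in the domain, plus the start point.
    return int((hi - lo) / st) + 1


def grid_size(grid):
    # A full grid evaluates every combination of factor levels.
    return prod(points_per_factor(*spec) for spec in grid.values())


for name, grid in GRIDS.items():
    per_factor = {factor: points_per_factor(*spec) for factor, spec in grid.items()}
    print(f"Variation {name}: {per_factor} -> {grid_size(grid)} candidate points")
```

Under this counting rule, Variation “A” yields 3 points per factor (243 combinations) and Variation “B” yields 10, 9, 10, 5 and 7 points (31500 combinations), matching the table.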

| Solution | Factor A (s) | Factor B (%) | Factor C (%) | Factor D (s) | Factor E (mbar) | Oj(p) |
|---|---|---|---|---|---|---|
| 1st | 90 | 100 | 100 | 8.1 | 12.5 | 0.27 |
| 2nd | 90 | 100 | 92.5 | 7.2 | 12.5 | 0.27 |
| 3rd | 85 | 100 | 100 | 7.2 | 12.5 | 0.27 |
| 4th | 90 | 95 | 100 | 8.1 | 12.5 | 0.28 |
| 5th | 90 | 100 | 85 | 8.1 | 10 | 0.28 |
| 6th | 90 | 95 | 100 | 7.2 | 12.5 | 0.28 |
| 7th | 85 | 95 | 100 | 7.2 | 12.5 | 0.28 |
| 8th | 90 | 95 | 92.5 | 7.2 | 12.5 | 0.29 |
| 9th | 90 | 100 | 100 | 7.2 | 12.5 | 0.29 |
| 10th | 85 | 95 | 100 | 7.2 | 12.5 | 0.30 |

| Solution | Factor A (s) | Factor B (%) | Factor C (%) | Factor D (s) | Factor E (mbar) | Oj(p) |
|---|---|---|---|---|---|---|
| 1st | 92.6 | 90 | 100 | 7.2 | 12.5 | 0.24 |
| 2nd | 95 | 90 | 100 | 8.1 | 12.5 | 0.24 |
| 3rd | 95 | 87.5 | 100 | 7.2 | 12.5 | 0.24 |
| 4th | 95 | 90 | 100 | 7.2 | 12.5 | 0.24 |
| 5th | 95 | 87.5 | 100 | 6.3 | 10 | 0.24 |
| 6th | 95 | 90 | 96.25 | 8.1 | 12.5 | 0.24 |
| 7th | 95 | 87.5 | 96.25 | 6.3 | 10 | 0.24 |
| 8th | 92.6 | 90 | 96.25 | 7.2 | 12.5 | 0.24 |
| 9th | 92.6 | 87.5 | 100 | 7.2 | 12.5 | 0.24 |
| 10th | 95 | 87.5 | 100 | 8.1 | 12.5 | 0.24 |

| Response | Validation samples a, Mean | Validation samples a, 95% CI | Model type “A”, Predicted | Main experimental n° 04 b, Mean | Main experimental n° 04 b, 95% CI |
|---|---|---|---|---|---|
| DEV 01 | −0.255 | [−0.298, −0.213] | −0.294 | −0.293 | [−0.620, 0.034] |
| DEV 02 | −0.341 | [−0.419, −0.263] | −0.376 | −0.310 | [−0.444, −0.177] |
| DEV 03 | 0.193 | [0.156, 0.231] | 0.185 | 0.323 | [0.189, 0.456] |
| DEV 04 | 0.134 | [0.050, 0.218] | 0.188 | 0.188 | [0.034, 0.342] |
| Oj | 0.23 | [0.17, 0.30] | 0.27 | 0.31 | [0.39, 0.27] |

| Response | Validation samples a, Mean | Validation samples a, 95% CI | Model type “B”, Predicted | Main experimental n° 04 b, Mean | Main experimental n° 04 b, 95% CI |
|---|---|---|---|---|---|
| DEV 01 | −0.366 | [−0.480, −0.252] | −0.293 | −0.293 | [−0.620, 0.034] |
| DEV 02 | −0.246 | [−0.267, −0.225] | −0.242 | −0.310 | [−0.444, −0.177] |
| DEV 03 | 0.108 | [0.078, 0.139] | 0.182 | 0.323 | [0.189, 0.456] |
| DEV 04 | 0.136 | [0.068, 0.204] | 0.099 | 0.188 | [0.034, 0.342] |
| Oj | 0.25 | [0.17, 0.33] | 0.24 | 0.31 | [0.39, 0.27] |
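One way to read the two validation tables above is to ask whether each model-predicted value falls inside the 95% confidence interval of the validation samples. The sketch below does that with values transcribed from the tables. Treating the two confidence-interval figures as lower and upper bounds is an assumption, and the data layout and function names are illustrative only.

```python
# (CI lower, CI upper, model-predicted value) for the validation samples,
# transcribed from the tables for model types "A" and "B".
VALIDATION = {
    "A": {
        "DEV 01": (-0.298, -0.213, -0.294),
        "DEV 02": (-0.419, -0.263, -0.376),
        "DEV 03": (0.156, 0.231, 0.185),
        "DEV 04": (0.050, 0.218, 0.188),
        "Oj": (0.17, 0.30, 0.27),
    },
    "B": {
        "DEV 01": (-0.480, -0.252, -0.293),
        "DEV 02": (-0.267, -0.225, -0.242),
        "DEV 03": (0.078, 0.139, 0.182),
        "DEV 04": (0.068, 0.204, 0.099),
        "Oj": (0.17, 0.33, 0.24),
    },
}


def within_ci(lower, upper, predicted):
    # True when the prediction lies inside the closed interval [lower, upper].
    return lower <= predicted <= upper


def outside_ci(model):
    # Names of the responses whose prediction misses the validation interval.
    return [
        name
        for name, (lower, upper, predicted) in VALIDATION[model].items()
        if not within_ci(lower, upper, predicted)
    ]


for model in VALIDATION:
    print(f"Model type {model}: predictions outside the 95% CI -> {outside_ci(model)}")
```

With the tabulated values, every prediction of model type “A” lies inside its interval, while for model type “B” only the DEV 03 prediction (0.182) falls outside the validation interval [0.078, 0.139].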

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Leite, W.D.O.; Campos Rubio, J.C.; Mata Cabrera, F.; Carrasco, A.; Hanafi, I. Vacuum Thermoforming Process: An Approach to Modeling and Optimization Using Artificial Neural Networks. Polymers 2018, 10, 143. https://doi.org/10.3390/polym10020143