An Alternative Estimate of Potential Predictability on the Madden–Julian Oscillation Phase Space Using S2S Models

Abstract

1. Introduction

2. Data

2.1. Verification and Phase Space

2.2. Forecast Data

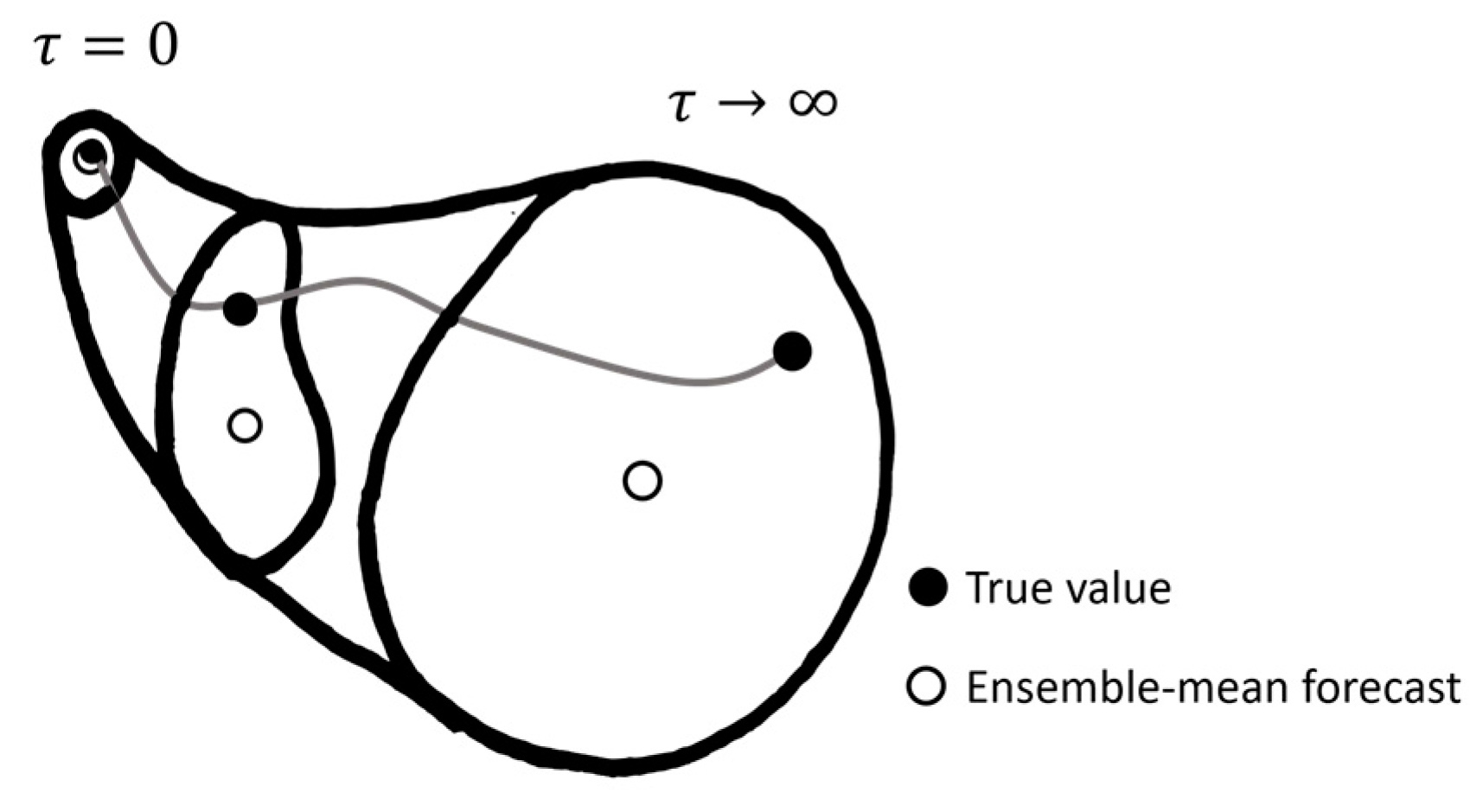

3. Alternative Method to Estimate Potential Predictability

4. Results

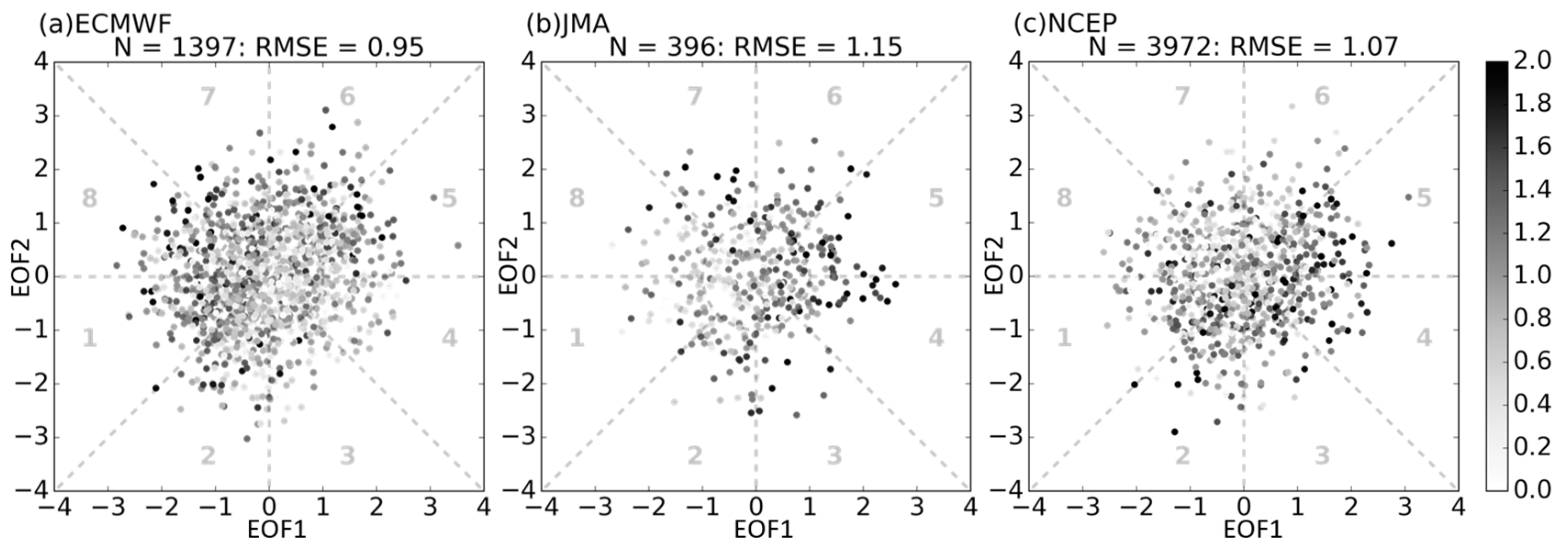

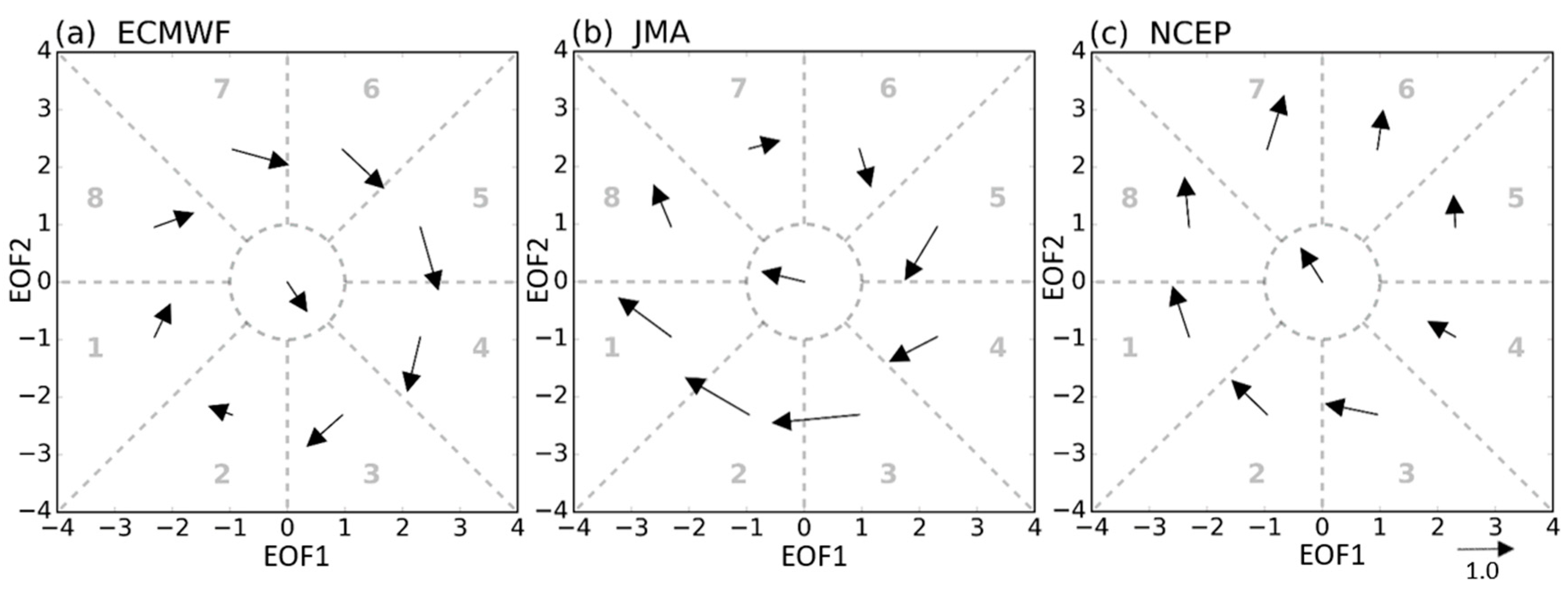

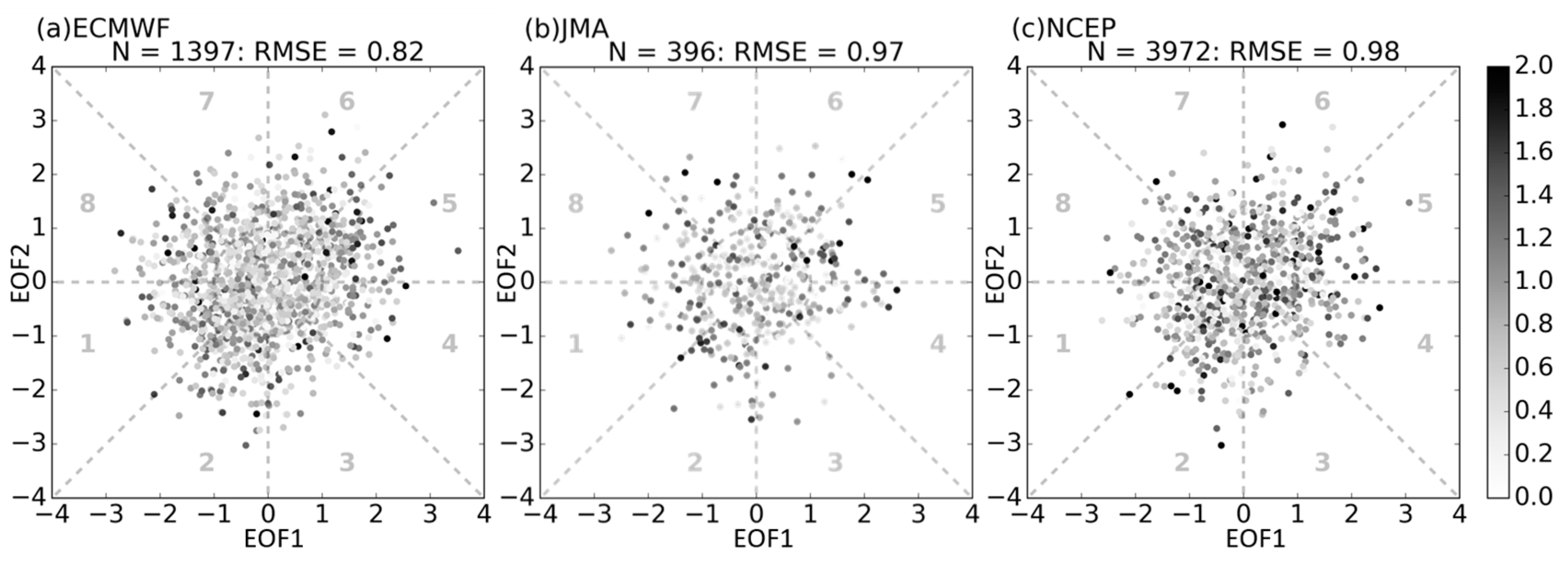

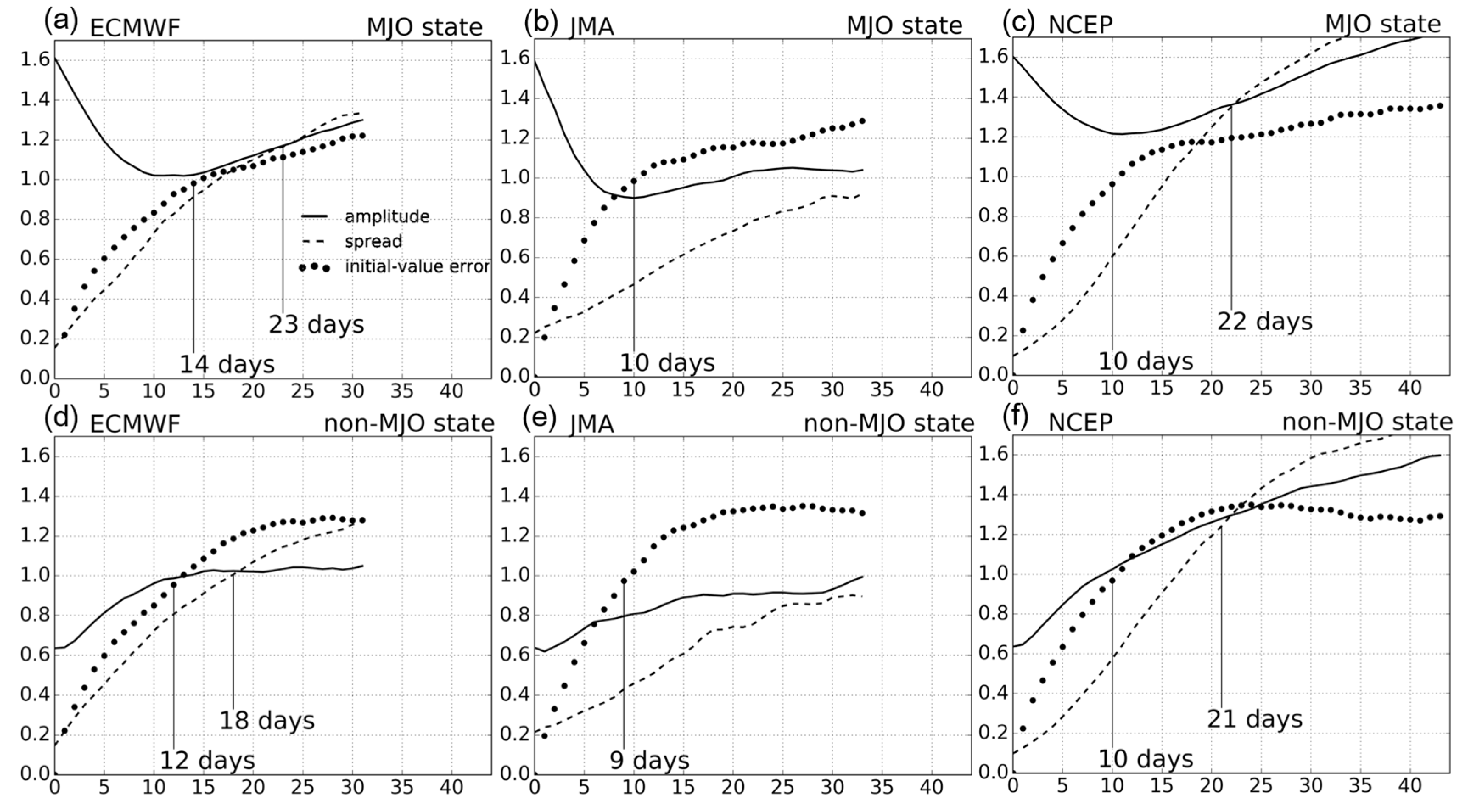

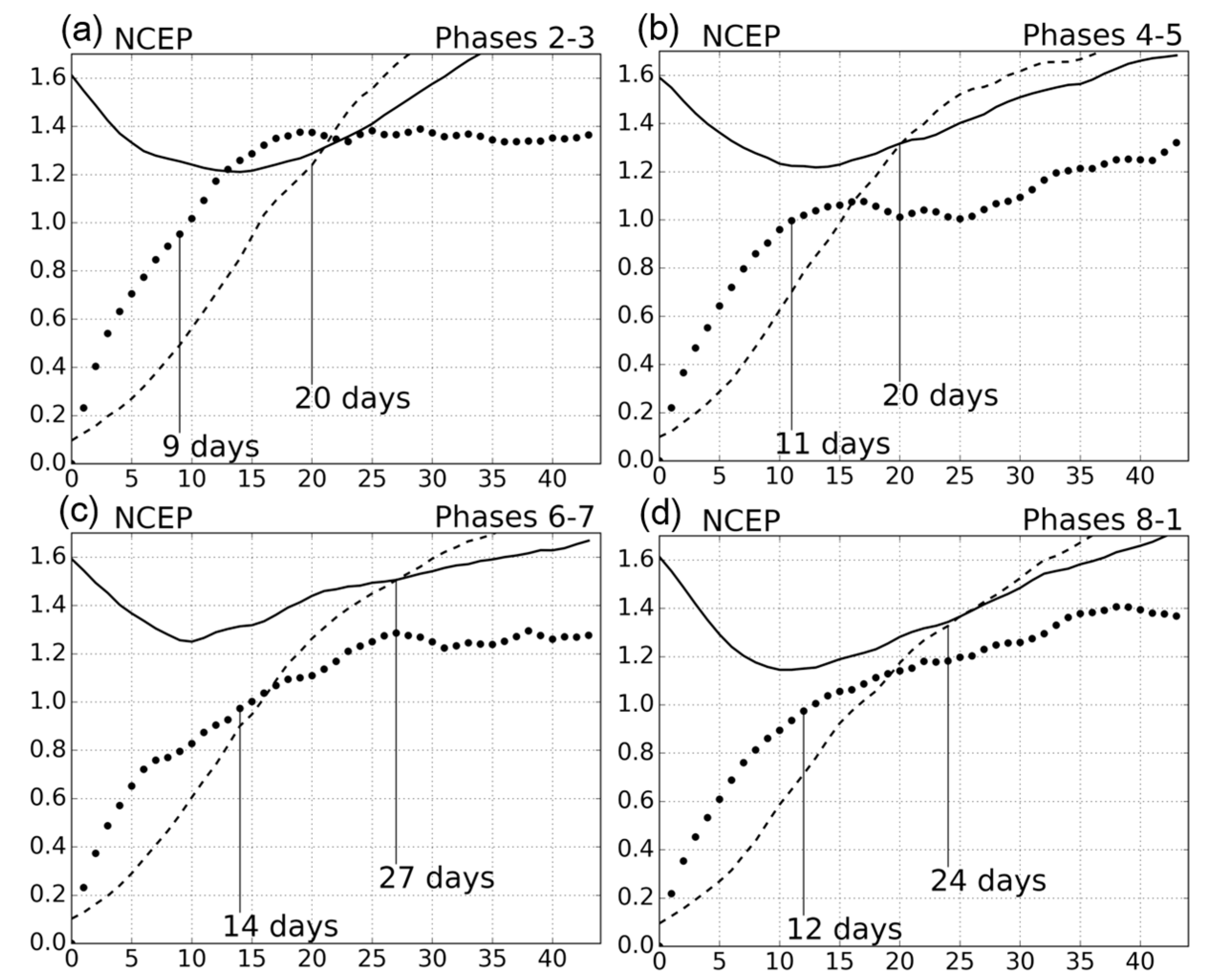

4.1. The Prediction Skill and Bias Correction

4.2. Prediction Limit and Its Comparison with a Conventional Method

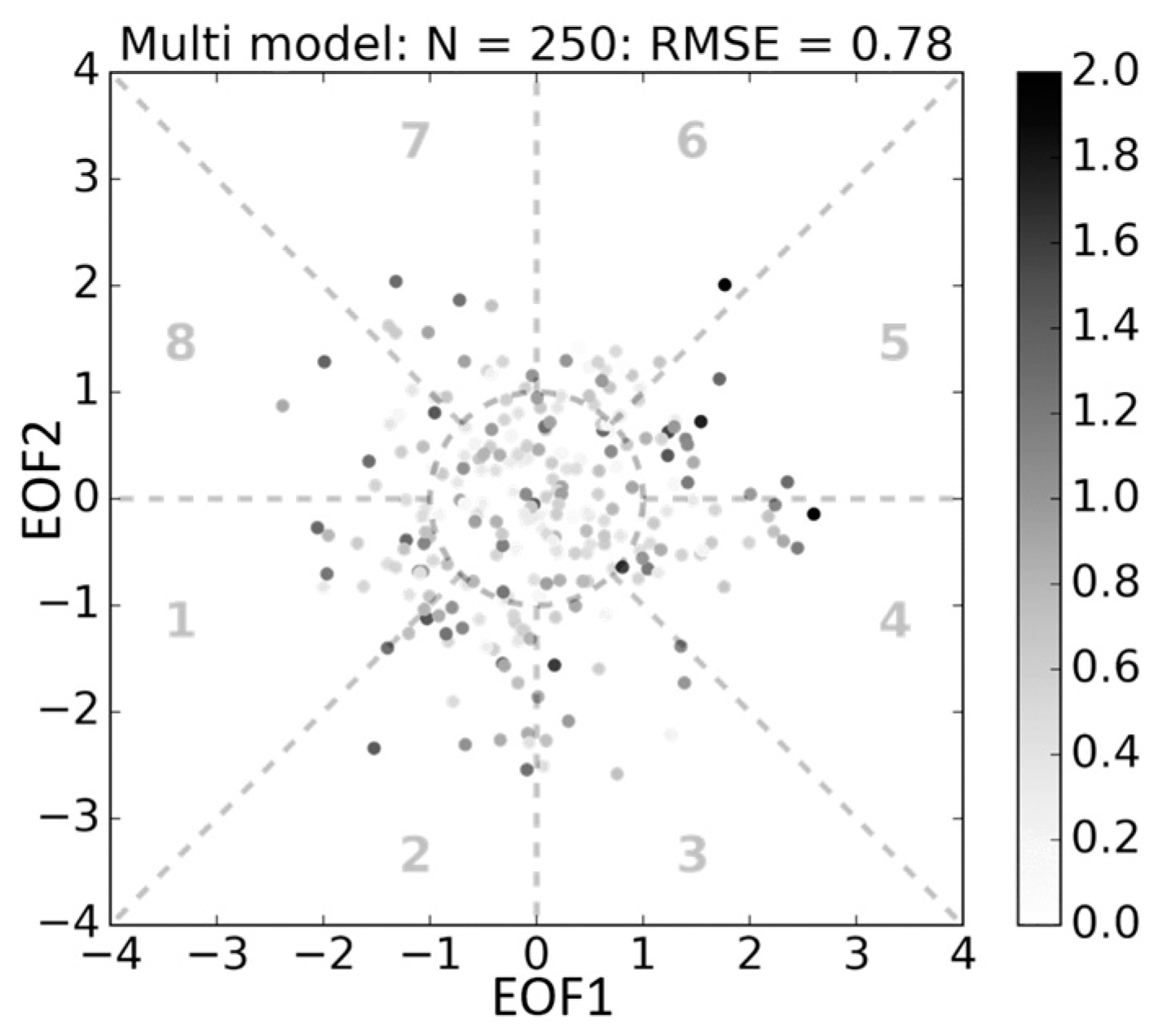

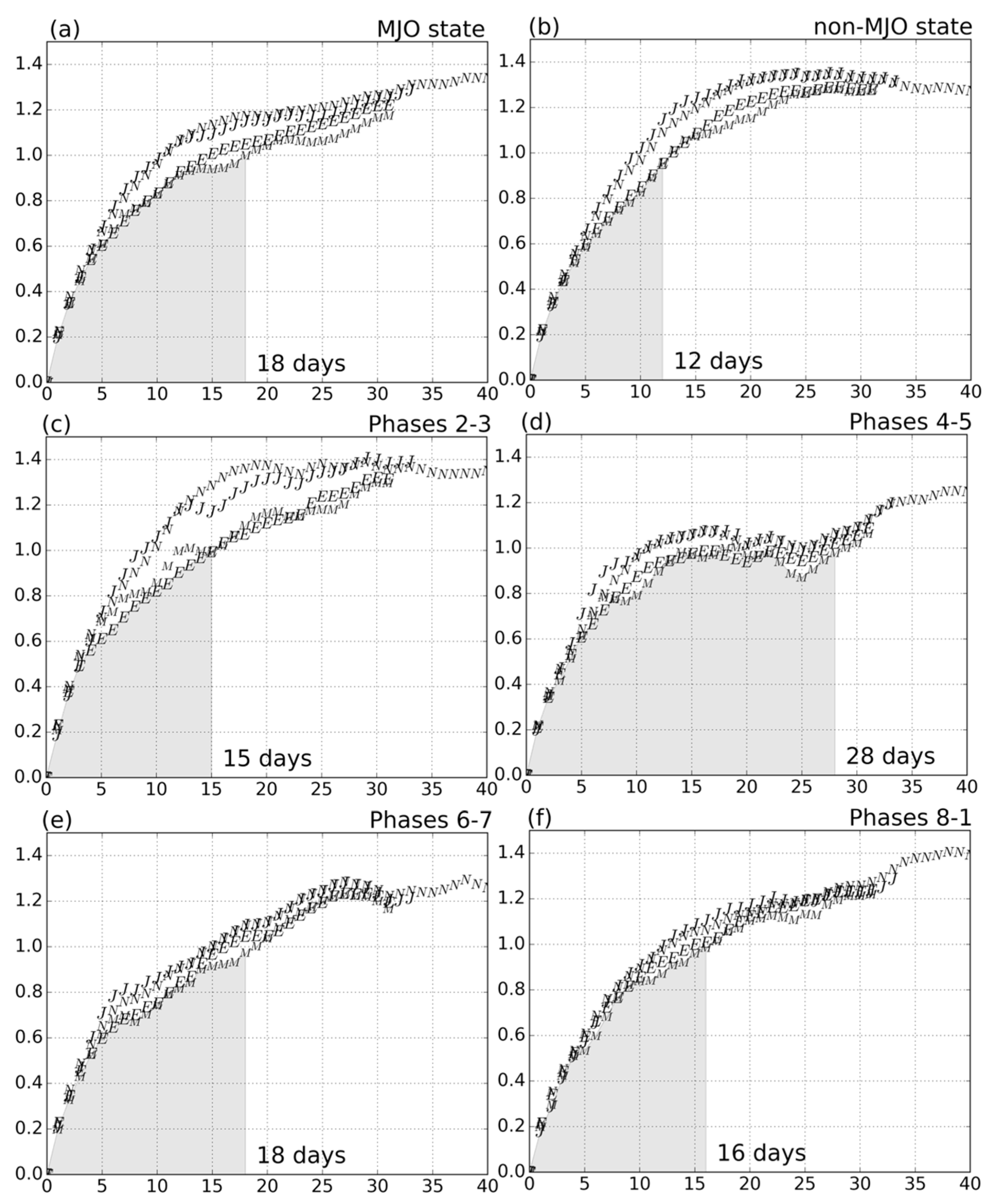

4.3. The Multimodel Ensemble Analysis

5. Concluding Remarks

Acknowledgments

Author Contributions

Conflicts of Interest


| Organization | ECMWF | JMA | NCEP |
|---|---|---|---|
| Forecast period | 32 days | 33 days | 44 days |
| Initial perturbation | Singular vector and ensemble data assimilation perturbation | Bred vector and lagged averaging method | Lagged averaging method |
| Ensemble size | 4 | 4 | 3 |
| Initial time | 00 UTC | 12 UTC | 00 UTC |
| Frequency | Twice a week | 3 times a month | Every day |
| Total number of forecasts | 1397 | 396 | 3972 |
| Horizontal resolution | TL639 (TL319 after day 10) | TL319 | T126 |
| Vertical layers | 91 | 60 | 64 |

| Month | Day | | |
|---|---|---|---|
| Jan. | 1 | 11 | 21 |
| Feb. | 1 | 11 | |
| Mar. | 10 | 21 | 31 |
| Apr. | 11 | 21 | 30 |
| May | 21 | | |
| Jun. | 11 | | |
| Jul. | 20 | | |
| Aug. | 10 | 20 | 31 |
| Sep. | 10 | 21 | |
| Oct. | 1 | | |
| Nov. | 30 | | |
| Dec. | 10 | 21 | |

| Phase Block | ECMWF | JMA | NCEP | Multimodel Ensemble |
|---|---|---|---|---|
| Non-MJO phase | 570 | 160 | 1661 | 107 |
| All MJO phase | 827 | 236 | 2311 | 143 |
| Phases 2–3 | 182 | 51 | 511 | 41 |
| Phases 4–5 | 218 | 64 | 638 | 43 |
| Phases 6–7 | 196 | 58 | 529 | 31 |
| Phases 8–1 | 231 | 63 | 633 | 28 |

| Initial State | Proposed Method | Conventional Method |
|---|---|---|
| Phases 2–3 | 15 | 17 |
| Phases 4–5 | 27 | 27 |
| Phases 6–7 | 16 | 25 |
| Phases 8–1 | 14 | 17 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ichikawa, Y.; Inatsu, M. An Alternative Estimate of Potential Predictability on the Madden–Julian Oscillation Phase Space Using S2S Models. Atmosphere 2017, 8, 150. https://doi.org/10.3390/atmos8080150
