Comparing CMAQ Forecasts with a Neural Network Forecast Model for PM2.5 in New York

Abstract

1. Introduction

Paper Structure

2. CMAQ Local and Regional Assessment

2.1. Datasets

2.1.1. Models

2.1.2. Ground-Based Observations

2.2. Methods

Assessing Accuracy of CMAQ Forecasting Models
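This section does not spell out the accuracy statistics. As a hedged illustration only, the sketch below computes three common paired statistics for a forecast series against co-located ground observations: mean bias, root-mean-square error (RMSE), and the Pearson correlation coefficient R. The helper name `forecast_scores`, the choice of metrics, and the assumption that both series are already time-aligned and gap-free are all illustrative assumptions. They are not a description of the exact procedure used in this study.

```python
import math
from typing import Dict, Sequence


def forecast_scores(forecast: Sequence[float], observed: Sequence[float]) -> Dict[str, float]:
    """Hypothetical helper: paired accuracy statistics for PM2.5 (ug/m^3).

    Assumes both series are aligned in time and contain no missing values.
    """
    if len(forecast) != len(observed) or len(forecast) < 2:
        raise ValueError("need two aligned series of equal length >= 2")
    n = len(forecast)
    errors = [f - o for f, o in zip(forecast, observed)]

    # Mean bias: positive values mean the forecast over-predicts.
    mean_bias = sum(errors) / n
    # Root-mean-square error of the paired differences.
    rmse = math.sqrt(sum(e * e for e in errors) / n)

    # Pearson correlation between forecast and observation.
    mf = sum(forecast) / n
    mo = sum(observed) / n
    cov = sum((f - mf) * (o - mo) for f, o in zip(forecast, observed))
    sf = math.sqrt(sum((f - mf) ** 2 for f in forecast))
    so = math.sqrt(sum((o - mo) ** 2 for o in observed))
    r = cov / (sf * so) if sf > 0 and so > 0 else float("nan")

    return {"mean_bias": mean_bias, "rmse": rmse, "r": r}
```

For example, `forecast_scores([12.0, 15.0, 9.0], [10.0, 14.0, 8.0])` gives a mean bias of 4/3 (the forecast runs high) and an RMSE of √2. In practice, hours with missing observations would need to be dropped from both series first.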

2.3. Results

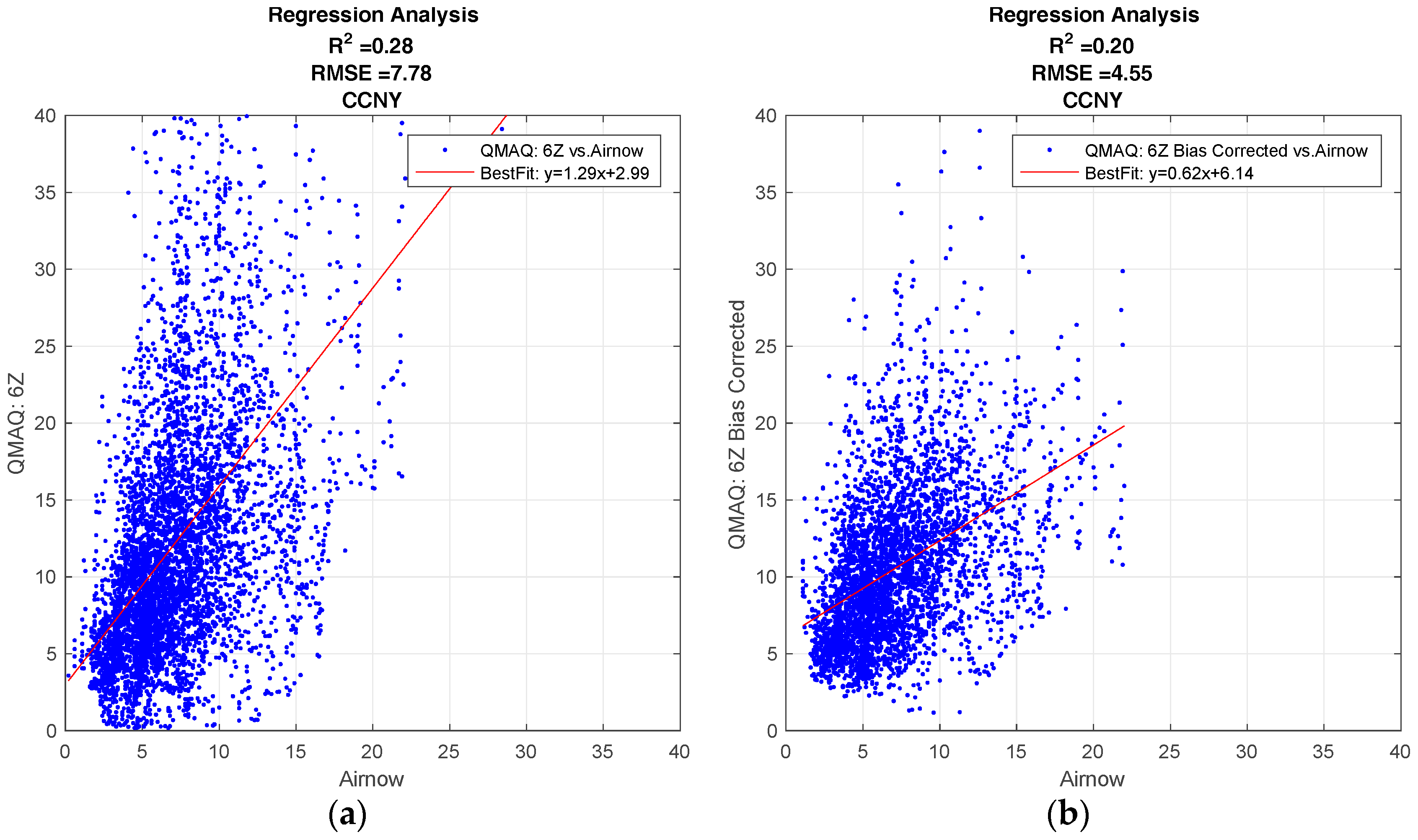

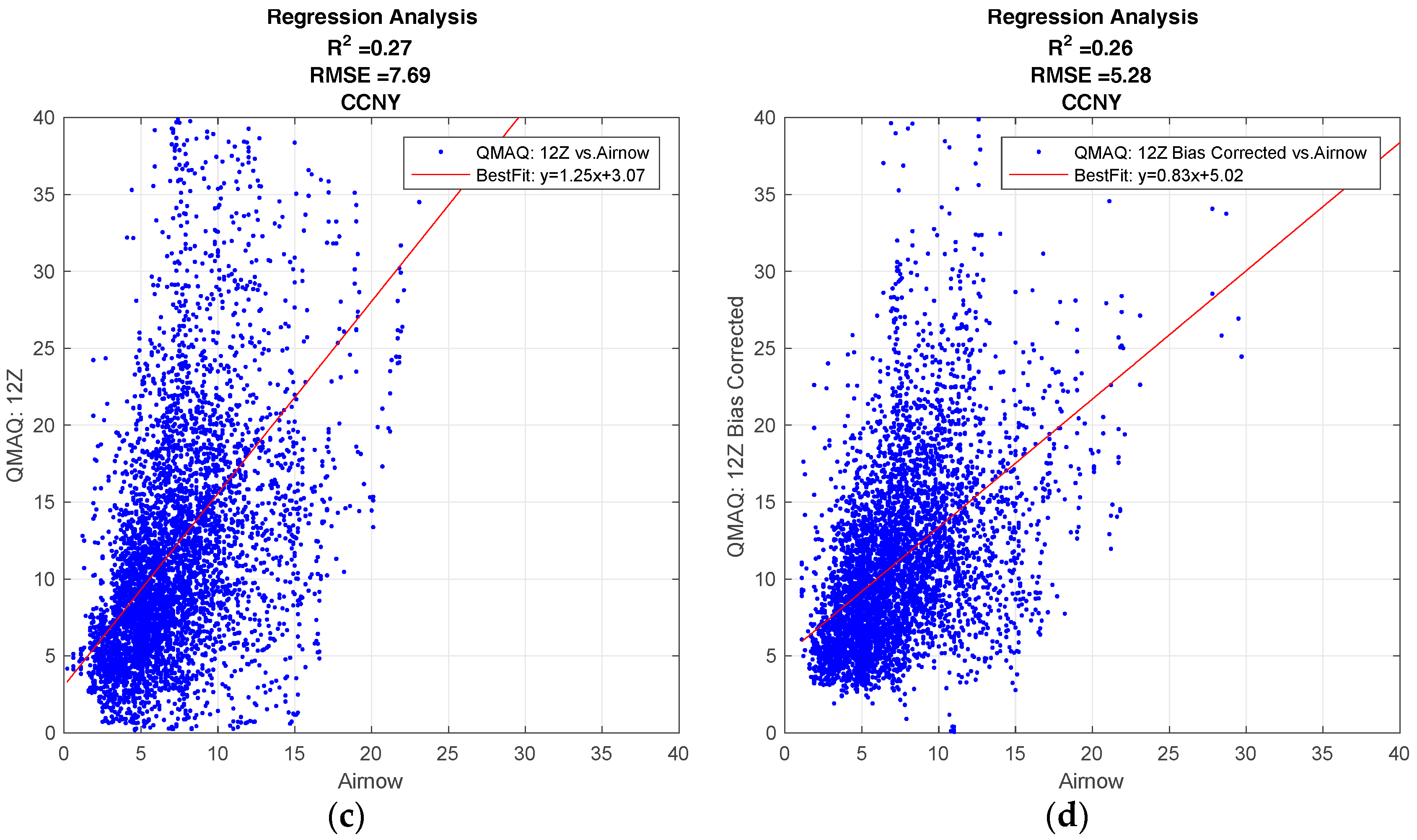

2.3.1. Effects of Bias and Release Time

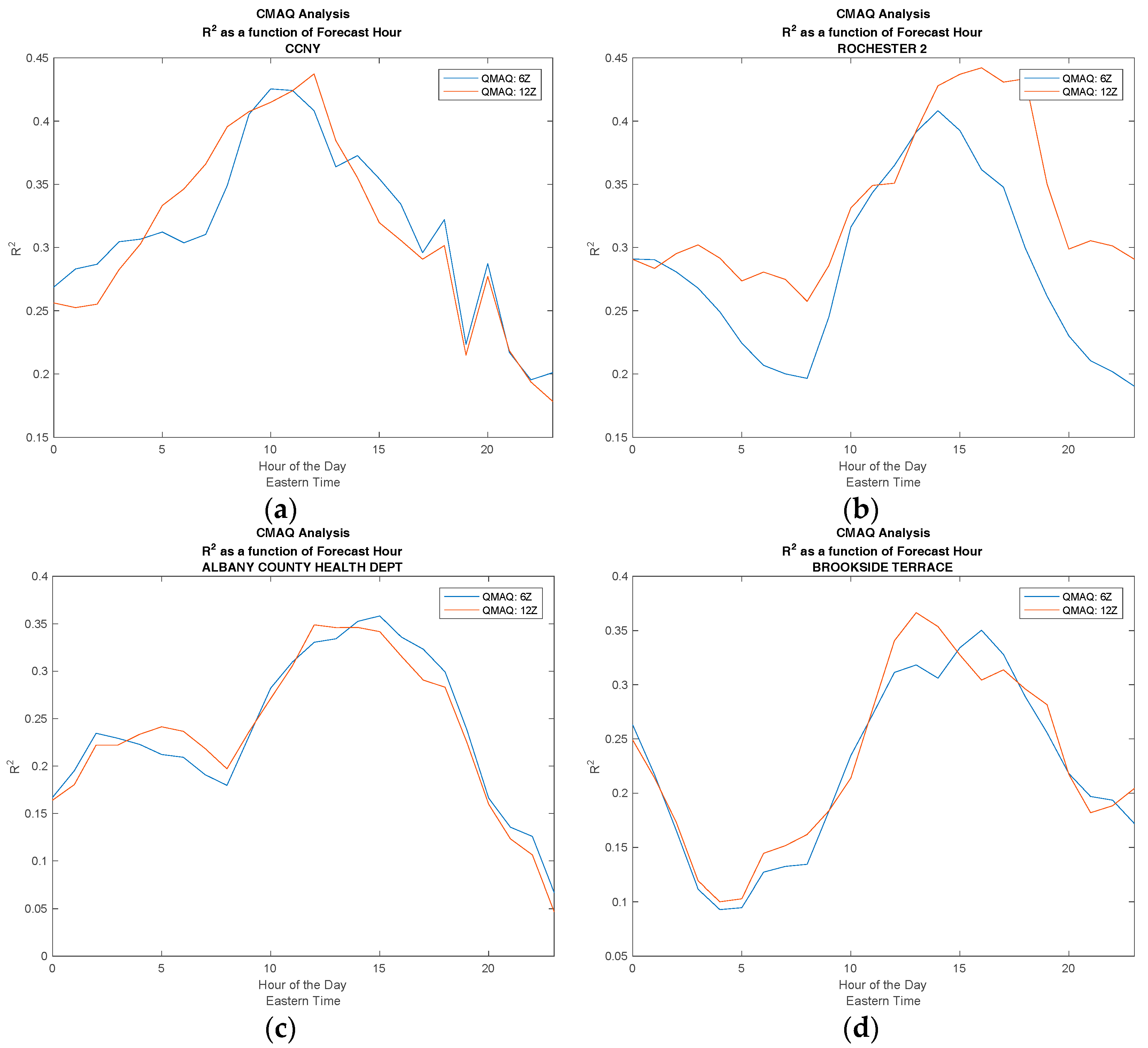

2.3.2. Differences between Urban and Non-Urban Locations
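Comparing urban and non-urban locations first requires splitting the stations by land type. The following is a minimal sketch using the NYSDEC station list from Appendix A. Grouping Suburban and Rural sites together as "non-urban" is an assumption, and `split_by_land_type` and `group_mean` are hypothetical helpers. The per-site score passed to `group_mean` could be any statistic, for example RMSE, which is also an assumption.

```python
from typing import Dict, List, Tuple

# NYSDEC stations and land types, transcribed from Appendix A.
NYSDEC_SITES: List[Tuple[str, str]] = [
    ("Albany County Health Dept", "Urban"),
    ("IS 74", "Suburban"),
    ("Brookside Terrace", "Suburban"),
    ("Rochester 2", "Urban"),
    ("CCNY", "Urban"),
    ("Maspeth Library", "Suburban"),
    ("Freshkills West", "Suburban"),
    ("Rockland County", "Rural"),
    ("Holtsville", "Suburban"),
    ("White Plains", "Suburban"),
]


def split_by_land_type(sites: List[Tuple[str, str]]) -> Dict[str, List[str]]:
    """Hypothetical helper: Urban sites vs. everything else (Suburban + Rural)."""
    groups: Dict[str, List[str]] = {"urban": [], "non-urban": []}
    for name, land_type in sites:
        key = "urban" if land_type == "Urban" else "non-urban"
        groups[key].append(name)
    return groups


def group_mean(scores: Dict[str, float], groups: Dict[str, List[str]]) -> Dict[str, float]:
    """Hypothetical helper: average a per-site score within each group.

    Sites with no entry in `scores` are skipped; groups with no scored sites are omitted.
    """
    means: Dict[str, float] = {}
    for label, names in groups.items():
        values = [scores[name] for name in names if name in scores]
        if values:
            means[label] = sum(values) / len(values)
    return means
```

With the Appendix A labels, `split_by_land_type(NYSDEC_SITES)` places three stations (Albany County Health Dept, Rochester 2, CCNY) in the urban group and the remaining seven in the non-urban group.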

3. Data Driven (Neural Network) Development

3.1. Datasets

3.1.1. Ground-Based Observations

3.1.2. Models

3.2. Methods

3.2.1. Development of the Neural Network

Input Selection Scenarios

| Role | Data source |
|---|---|
| Inputs | Field measurements |
| Inputs | NARR forecasts |
| Inputs | NARR observations |
| Targets | Field measurements |

Neural Network Training Approach
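The training procedure itself is not reproduced in this section. As a stand-in, the sketch below trains a generic one-hidden-layer feedforward regressor (tanh hidden units, linear output) with plain stochastic gradient descent on squared error, using only the Python standard library. The class name `TinyMLP`, the layer width, the learning rate, and the epoch count are hypothetical choices. This is not the network configuration, training algorithm, or toolbox used in the study.

```python
import math
import random
from typing import List, Sequence


class TinyMLP:
    """Hypothetical one-hidden-layer regressor: tanh hidden layer, linear output."""

    def __init__(self, n_in: int, n_hidden: int = 8, seed: int = 0) -> None:
        rng = random.Random(seed)
        scale = 1.0 / math.sqrt(n_in)
        self.w1 = [[rng.uniform(-scale, scale) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
        self.b2 = 0.0

    def _hidden(self, x: Sequence[float]) -> List[float]:
        # Hidden activations h_j = tanh(w1_j . x + b1_j).
        return [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
                for row, b in zip(self.w1, self.b1)]

    def predict(self, x: Sequence[float]) -> float:
        h = self._hidden(x)
        return sum(w * hj for w, hj in zip(self.w2, h)) + self.b2

    def fit(self, X: Sequence[Sequence[float]], y: Sequence[float],
            epochs: int = 200, lr: float = 0.05, seed: int = 0) -> List[float]:
        """SGD on 0.5 * squared error; returns the training MSE after each epoch."""
        if not X or len(X) != len(y):
            raise ValueError("X and y must be non-empty and of equal length")
        rng = random.Random(seed)
        order = list(range(len(X)))
        history: List[float] = []
        for _ in range(epochs):
            rng.shuffle(order)
            for i in order:
                x, target = X[i], y[i]
                h = self._hidden(x)
                err = sum(w * hj for w, hj in zip(self.w2, h)) + self.b2 - target
                for j, hj in enumerate(h):
                    # Gradient w.r.t. the pre-activation, using w2[j] before its update.
                    grad_a = err * self.w2[j] * (1.0 - hj * hj)
                    self.w2[j] -= lr * err * hj
                    self.b1[j] -= lr * grad_a
                    for k, xk in enumerate(x):
                        self.w1[j][k] -= lr * grad_a * xk
                self.b2 -= lr * err
            mse = sum((self.predict(x) - t) ** 2 for x, t in zip(X, y)) / len(X)
            history.append(mse)
        return history
```

Inputs such as meteorological forecast fields would normally be standardized before training, and a held-out period would be kept aside to check for overfitting. Both steps are omitted here to keep the sketch short.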

Neural Network Scenario Results

3.3. Results

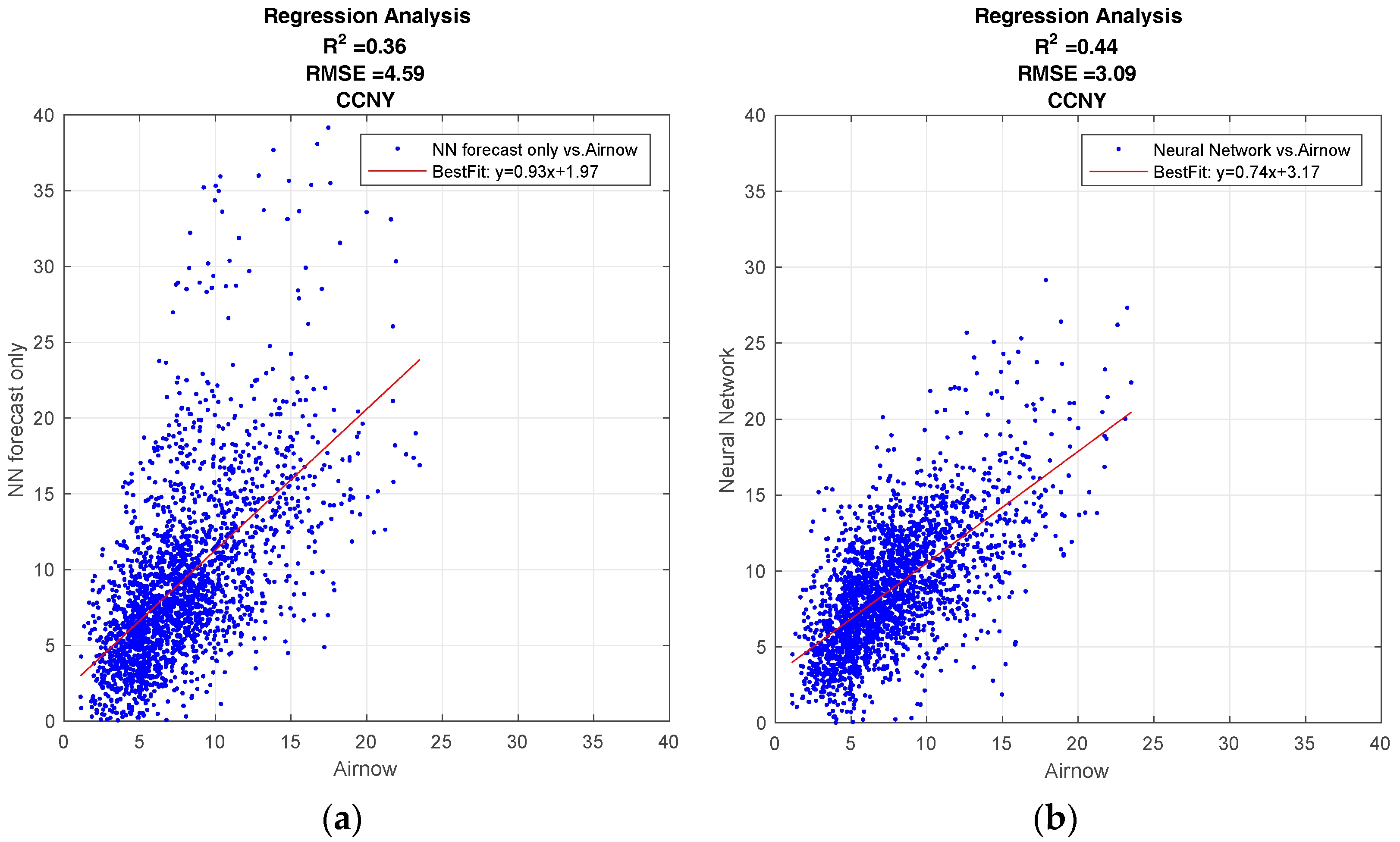

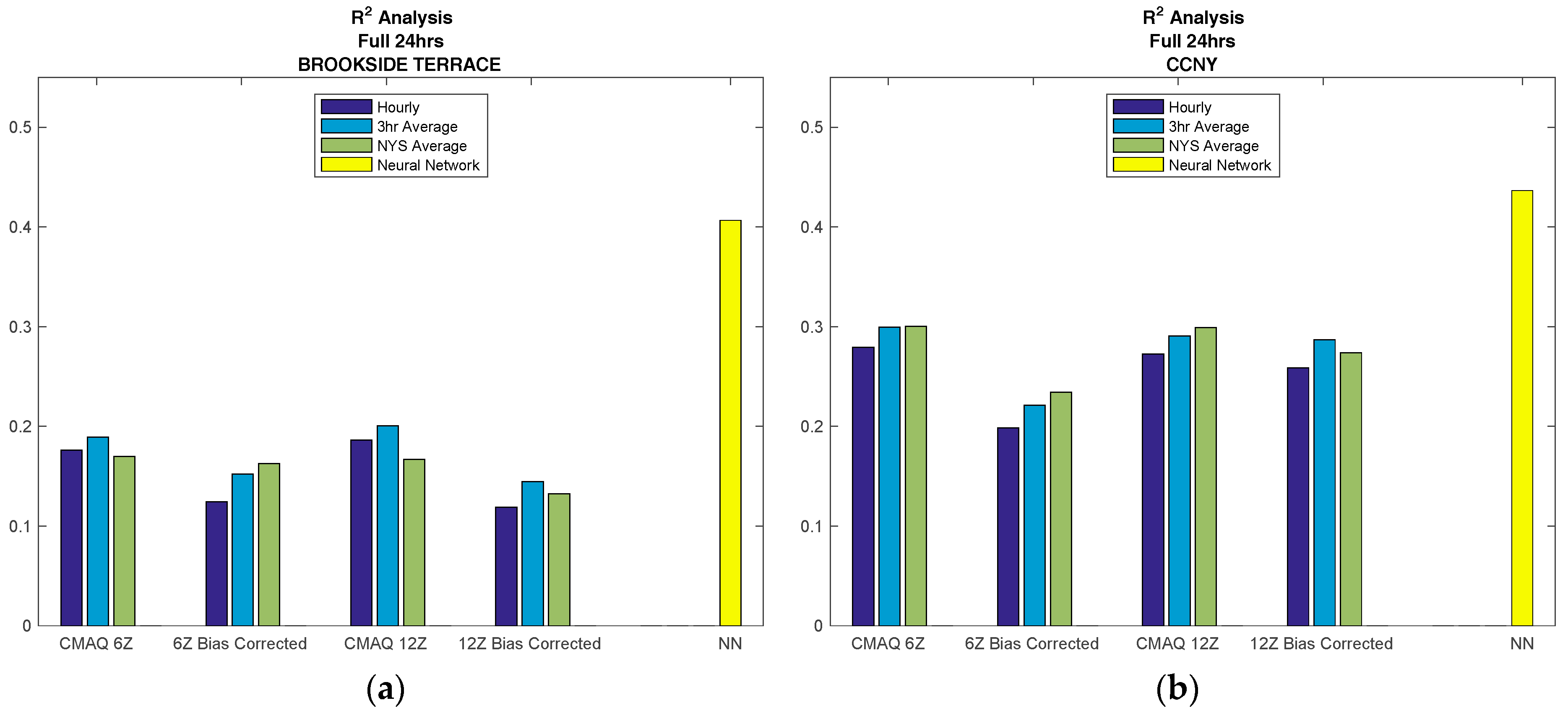

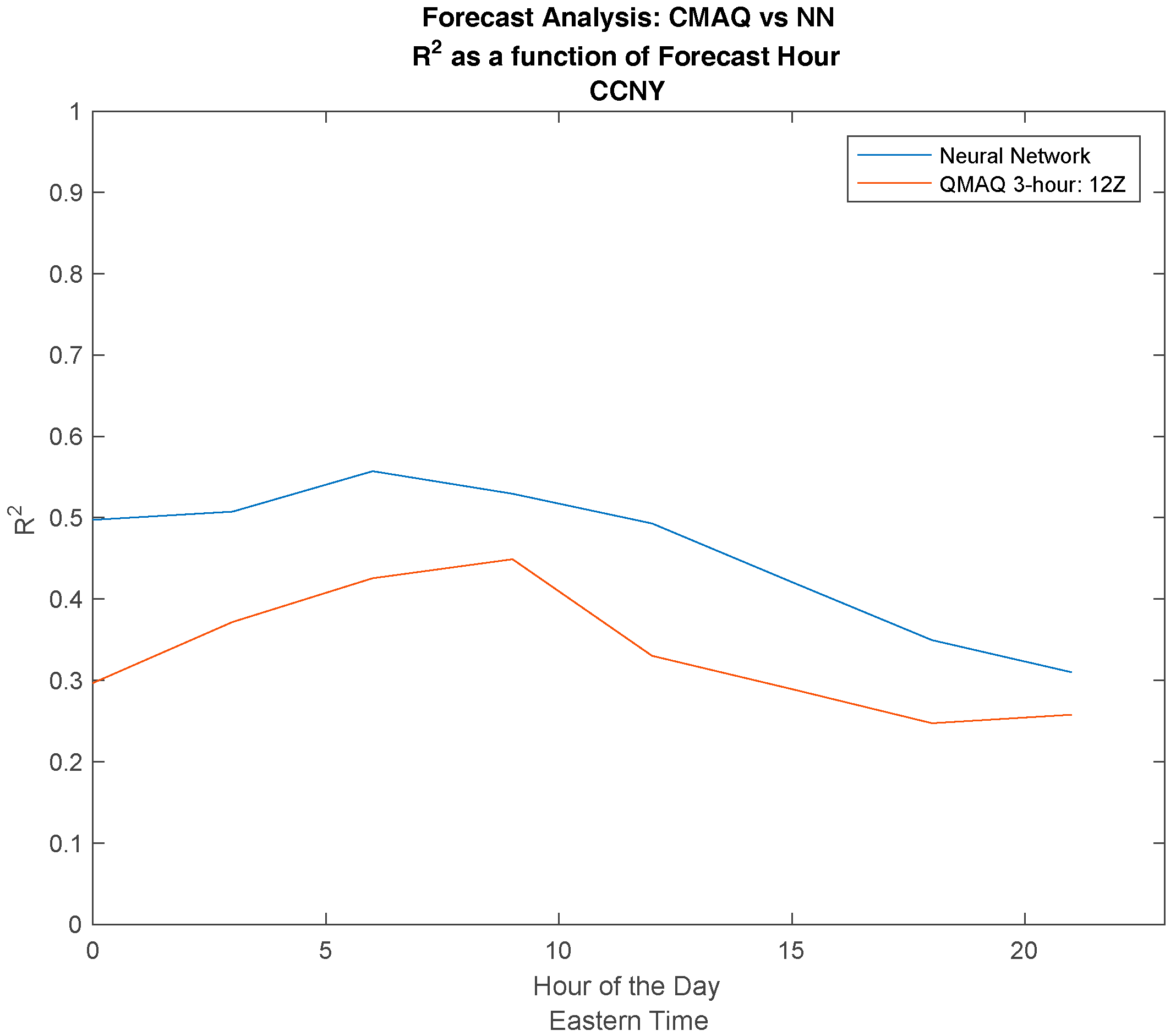

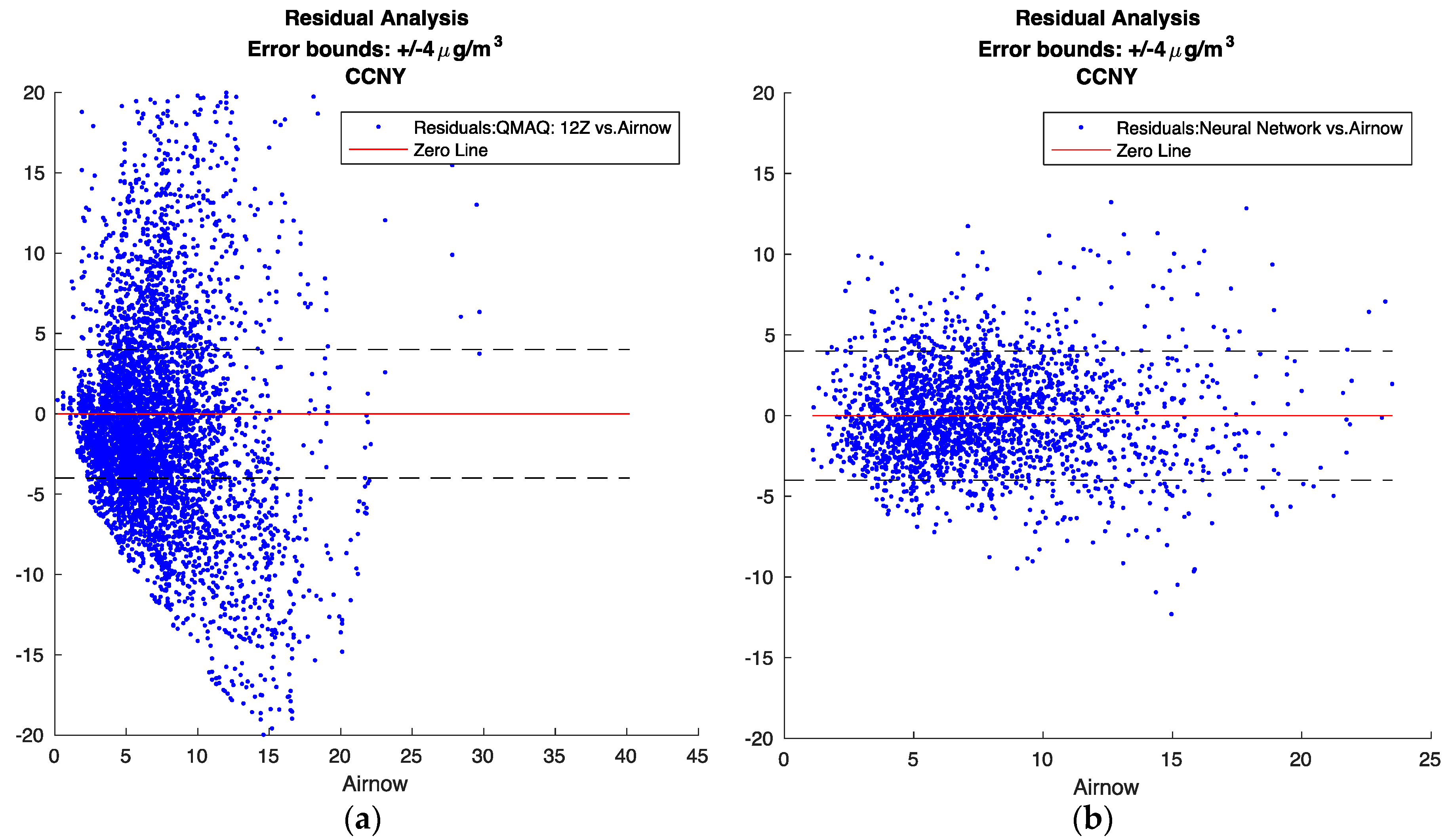

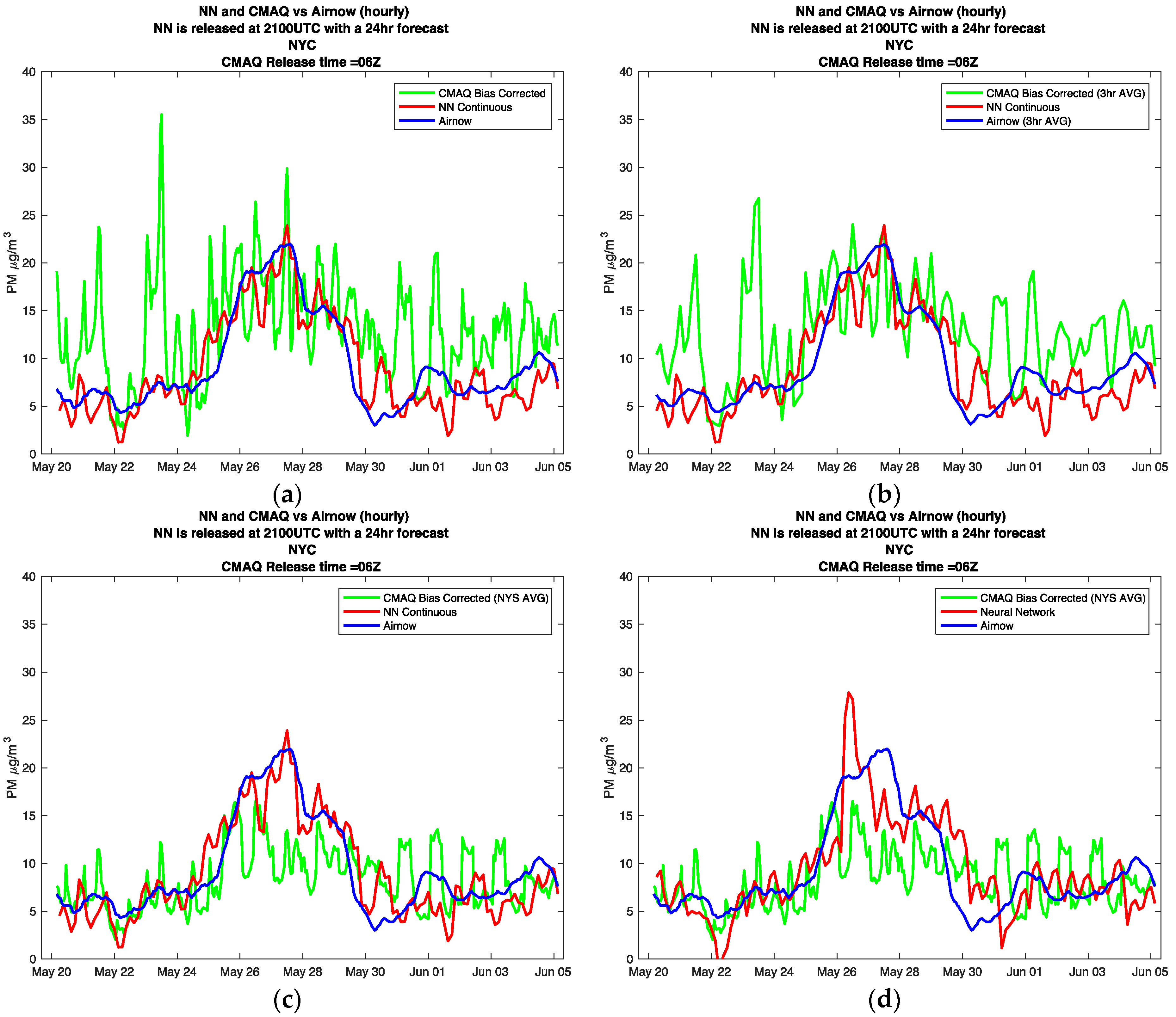

3.3.1. Neural Network and CMAQ Comparison

3.3.2. Heavy Pollution Transport Events

4. Conclusions

Future Work

Supplementary Materials

Acknowledgments

Author Contributions

Conflicts of Interest

Appendix A. Datasets

| Name | Abbreviation | Latitude (°) | Longitude (°) | Land Type |
|---|---|---|---|---|
| Amherst | AMHT | 42.99 | −78.77 | Suburban |
| CCNY | CCNY | 40.82 | −73.95 | Urban |
| Holtsville | HOLT | 40.83 | −73.06 | Suburban |
| IS 52 | IS52 | 40.82 | −73.90 | Suburban |
| Loudonville | LOUD | 42.68 | −73.76 | Urban |
| Queens College 2 | QC2 | 40.74 | −73.82 | Suburban |
| Rochester Pri 2 | RCH2 | 43.15 | −77.55 | Urban |
| Rockland County | RCKL | 41.18 | −74.03 | Rural |
| S. Wagner HS | WGHS | 40.60 | −74.13 | Urban |
| White Plains | WHPL | 41.05 | −73.76 | Suburban |

| NYSDEC ID | Station Name | Latitude (°) | Longitude (°) | Land Type |
|---|---|---|---|---|
| 360010005 | Albany County Health Dept | 42.6423 | −73.7546 | Urban |
| 360050112 | IS 74 | 40.8155 | −73.8855 | Suburban |
| 360291014 | Brookside Terrace | 42.9211 | −78.7653 | Suburban |
| 360551007 | Rochester 2 | 43.1462 | −77.5482 | Urban |
| 360610135 | CCNY | 40.8198 | −73.9483 | Urban |
| 360810120 | Maspeth Library | 40.7270 | −73.8931 | Suburban |
| 360850055 | Freshkills West | 40.5802 | −74.1983 | Suburban |
| 360870005 | Rockland County | 41.1821 | −74.0282 | Rural |
| 361030009 | Holtsville | 40.8280 | −73.0575 | Suburban |
| 361192004 | White Plains | 41.0519 | −73.7637 | Suburban |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lightstone, S.D.; Moshary, F.; Gross, B. Comparing CMAQ Forecasts with a Neural Network Forecast Model for PM2.5 in New York. Atmosphere 2017, 8, 161. https://doi.org/10.3390/atmos8090161