Comparison of Precipitation and Streamflow Correcting for Ensemble Streamflow Forecasts

Abstract

1. Introduction

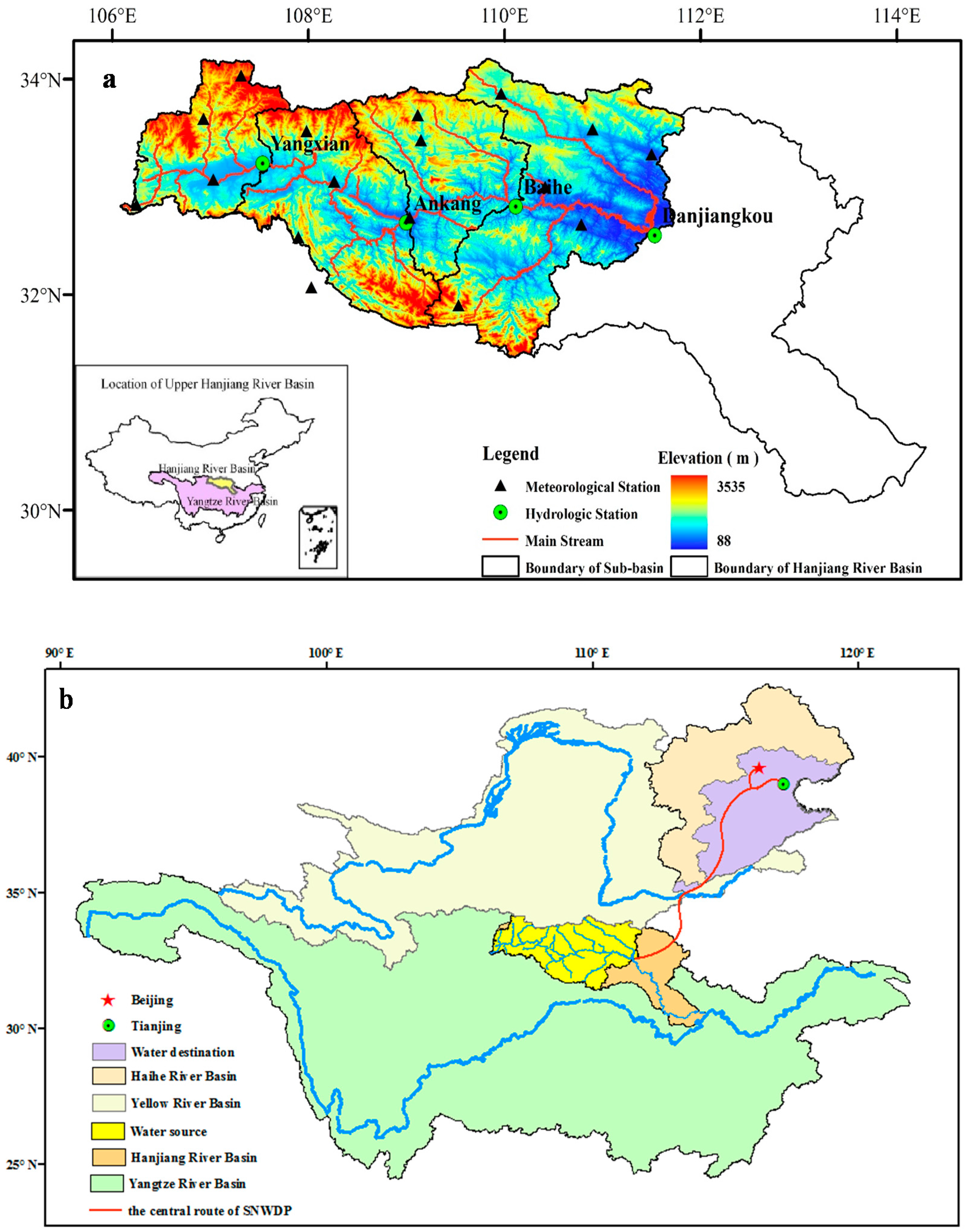

2. Study Catchment and Data

2.1. Study Catchment

2.2. Data

3. Method

3.1. Hydrological Model

3.2. Bias Correction Method

3.3. Description of HEPS Method

3.4. Forecast Verification

4. Results

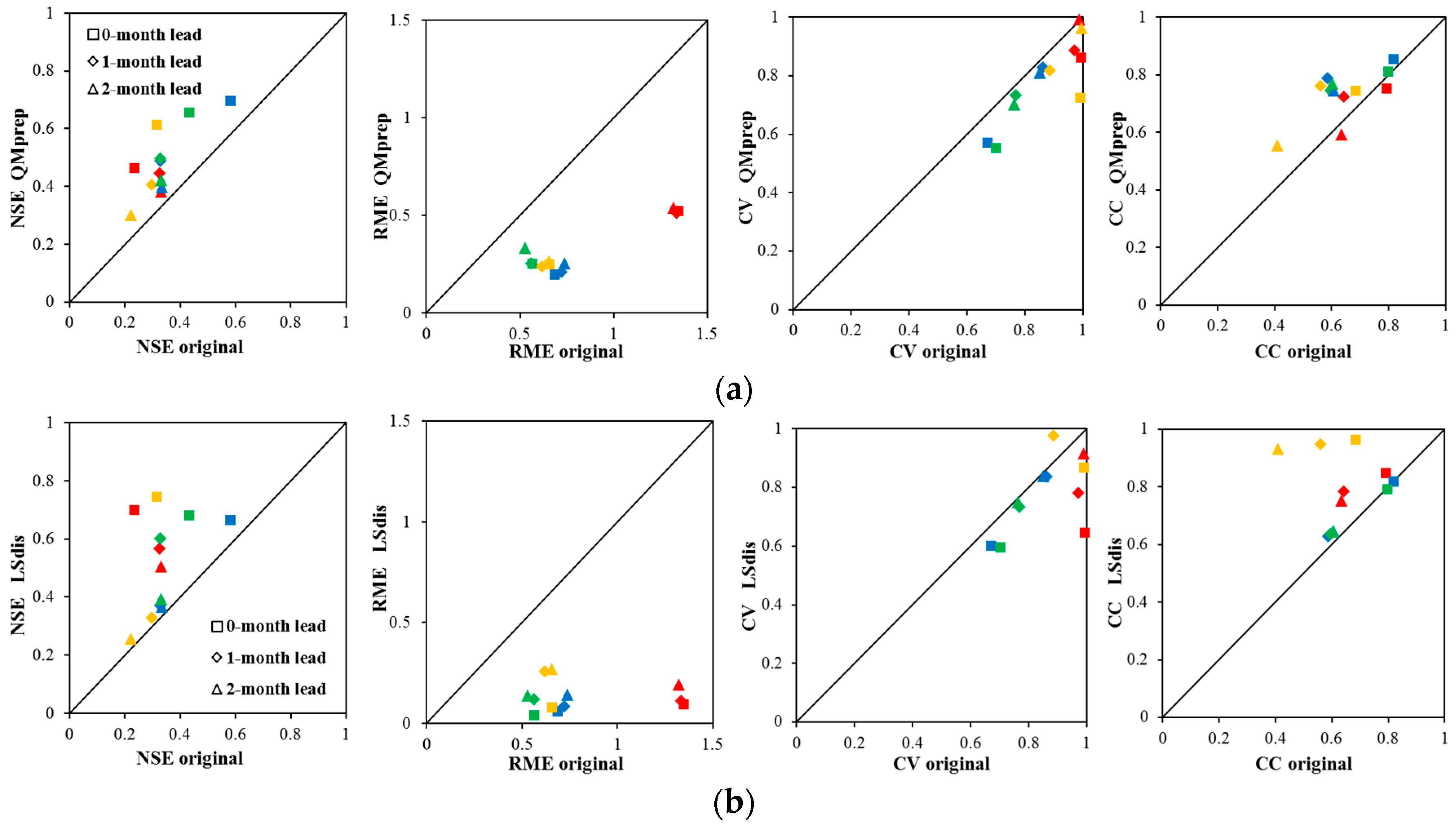

4.1. Forecast Accuracy

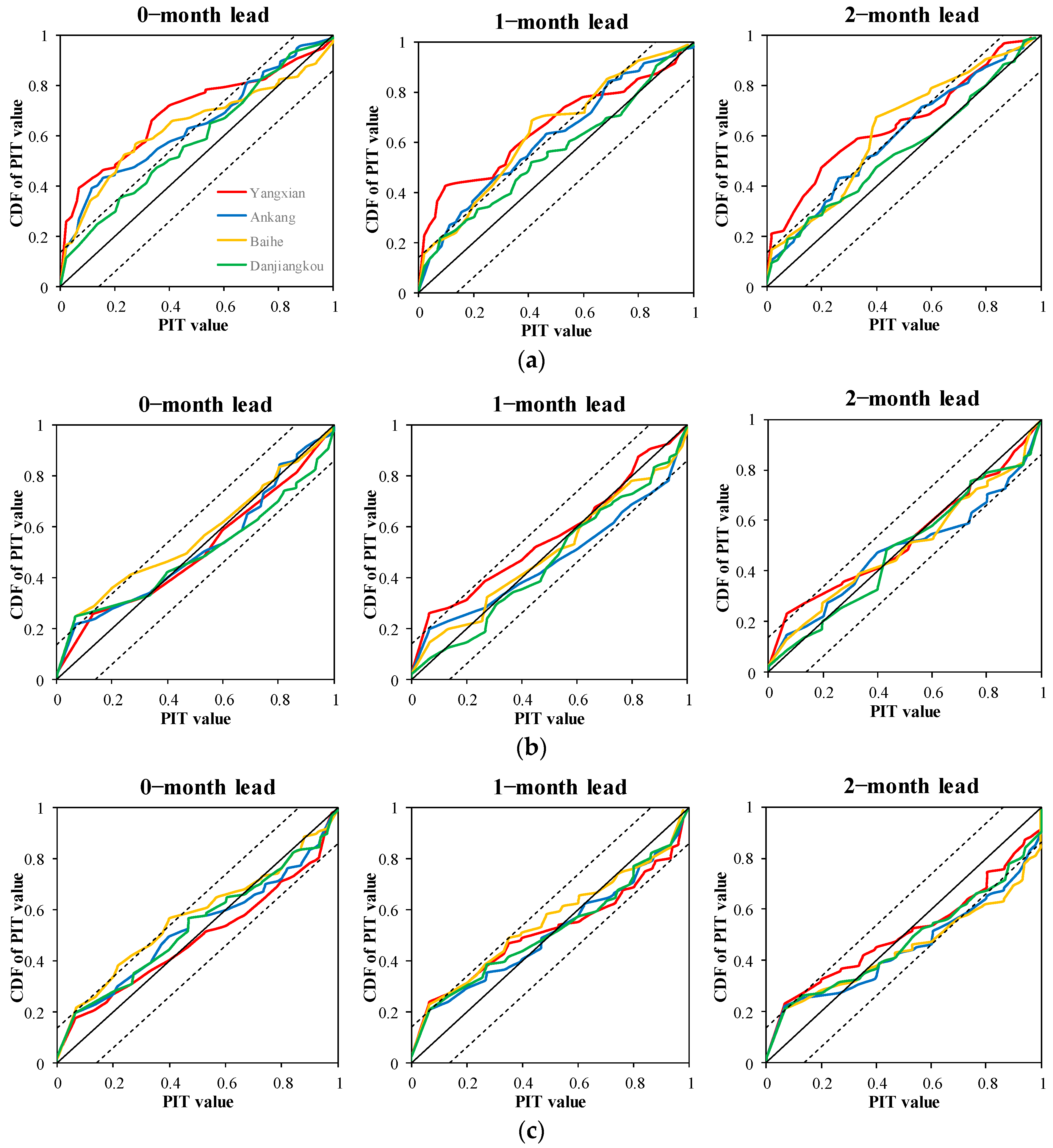

4.2. Forecast Reliability

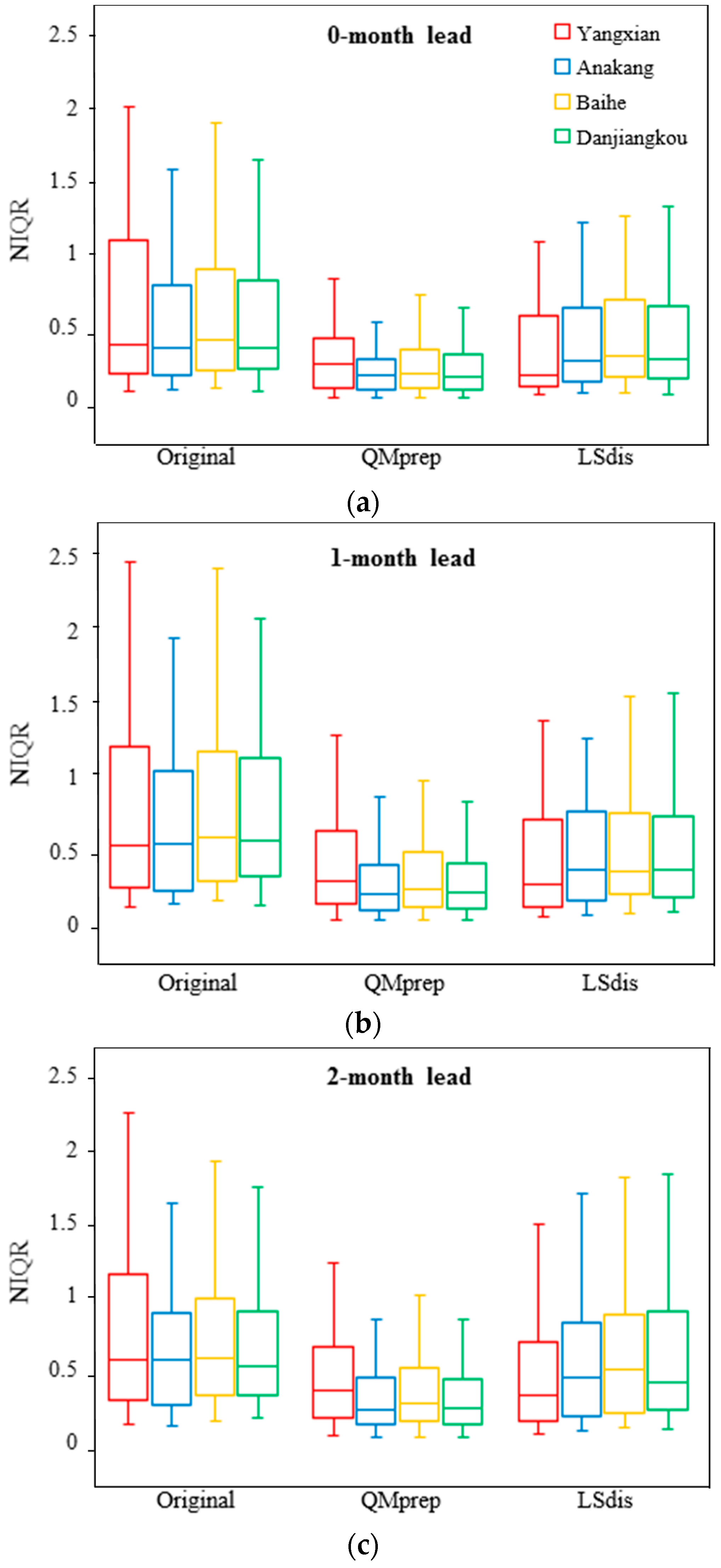

4.3. Forecast Sharpness

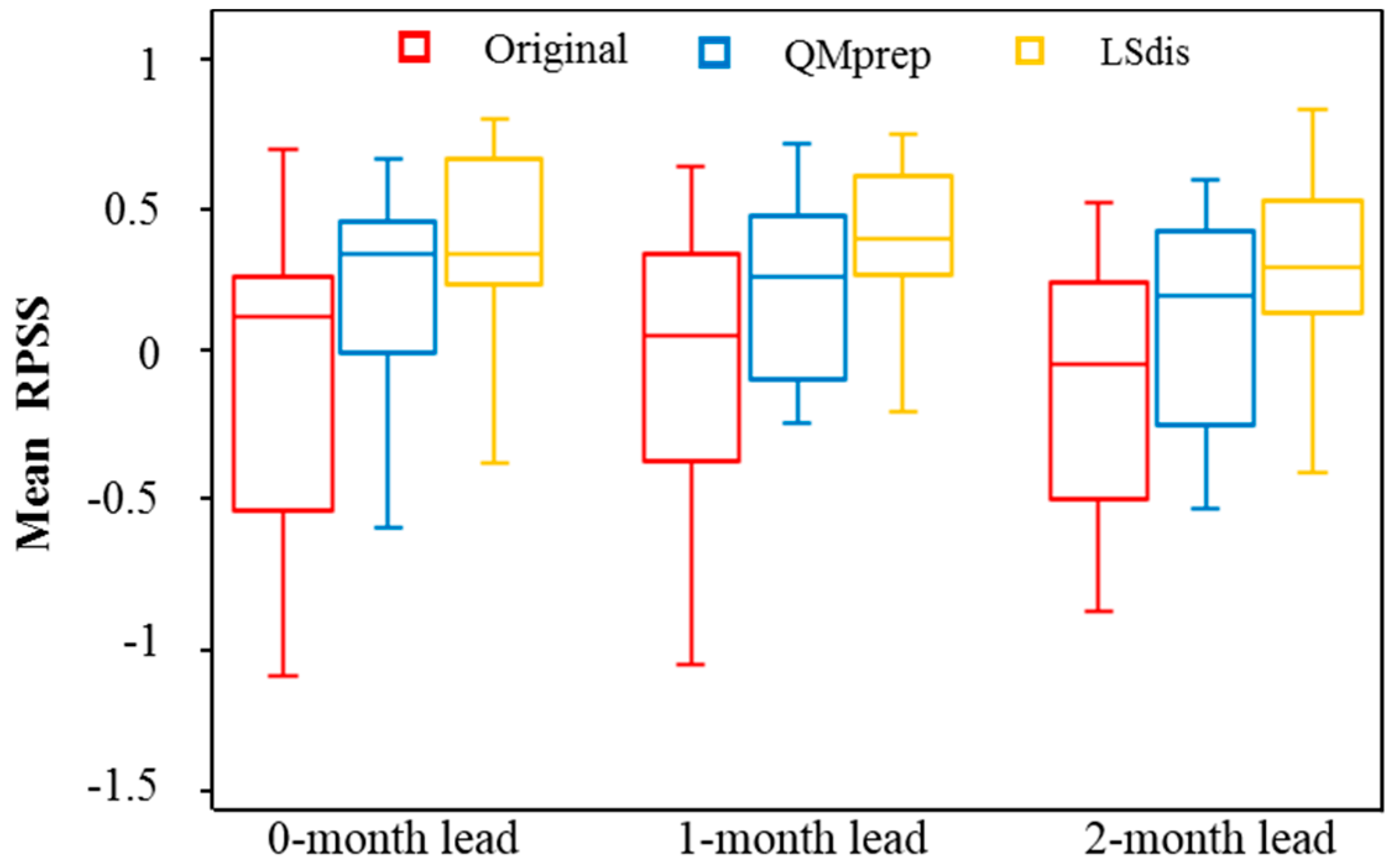

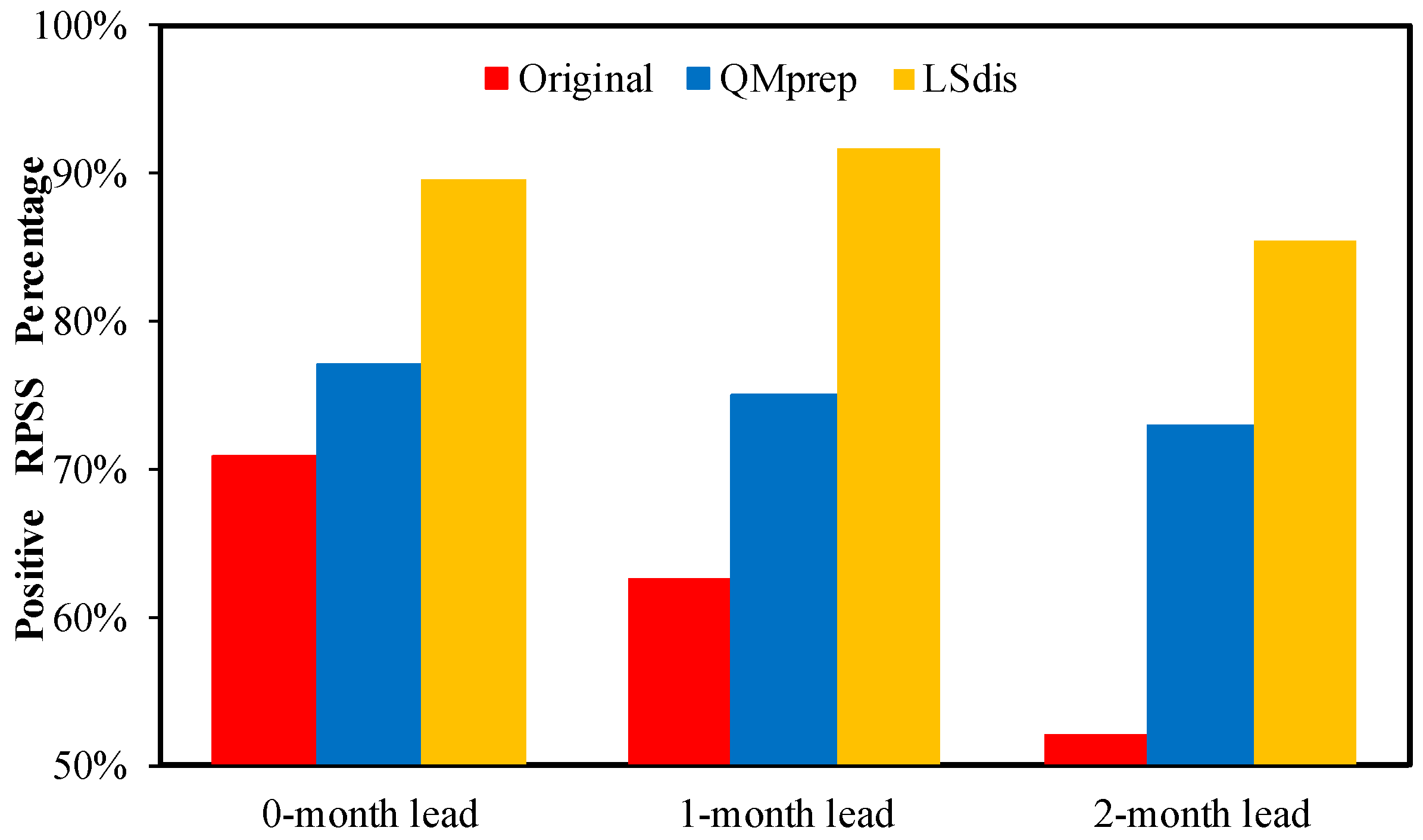

4.4. Forecast Overall Performance

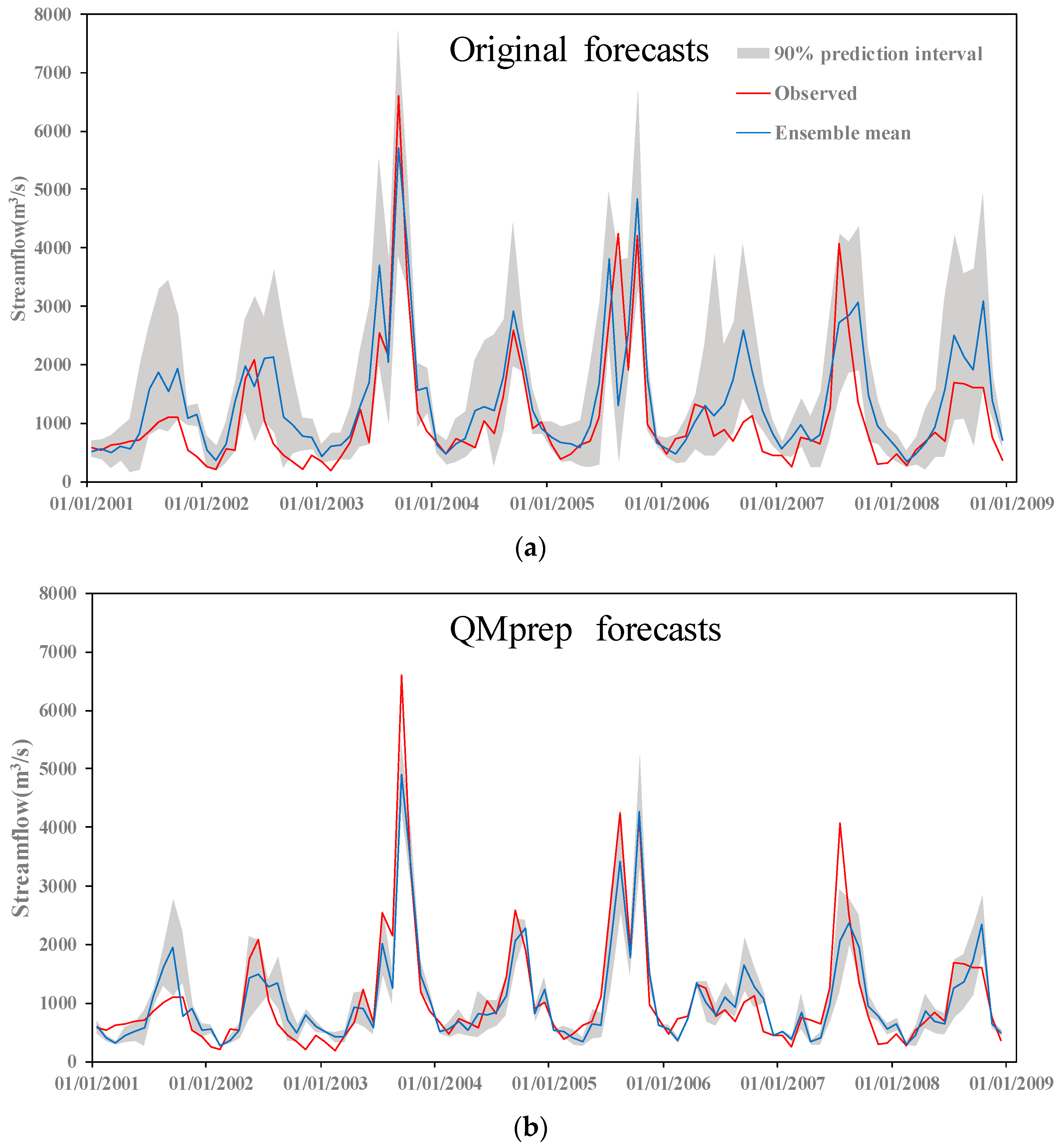

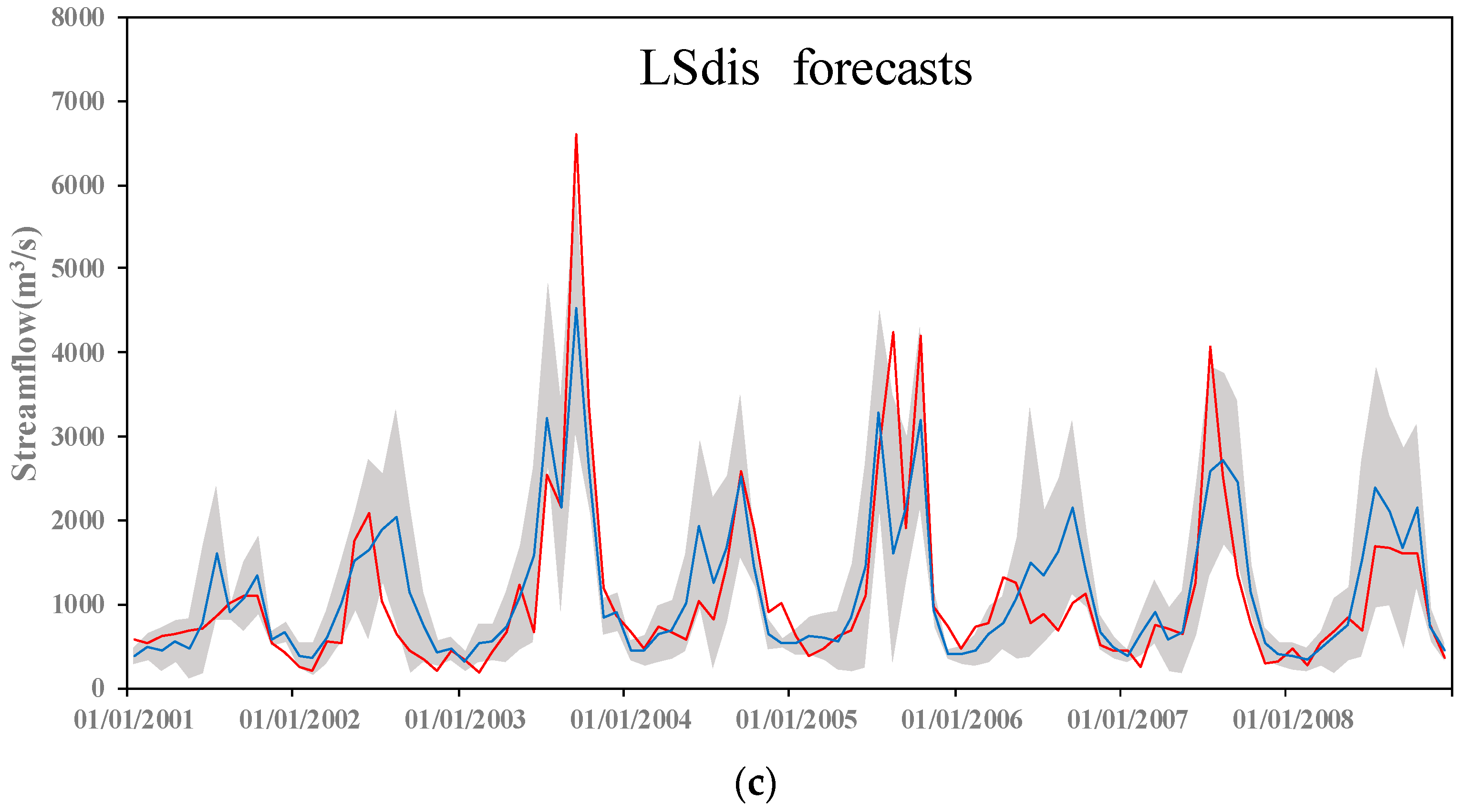

4.5. Forecast Hydrographs Illustration

5. Discussion

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Appendix A

Appendix A.1. Deterministic Verification Metrics

Appendix A.1.1. Nash–Sutcliffe Efficiency

Appendix A.1.2. Relative Mean Error

Appendix A.1.3. Coefficient of Variation of Root Mean Squared Error

Appendix A.1.4. Correlation Coefficient

Appendix A.2. Probabilistic Verification Metrics

Appendix A.2.1. Probability Integral Transform (PIT) Diagram

Appendix A.2.2. Normalized Interquartile Range

Appendix A.2.3. Mean Ranked Probability Skill Score

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Li, Y.; Jiang, Y.; Lei, X.; Tian, F.; Duan, H.; Lu, H. Comparison of Precipitation and Streamflow Correcting for Ensemble Streamflow Forecasts. Water 2018, 10, 177. https://doi.org/10.3390/w10020177
