1. Introduction

Virtual reality (VR) is a technology that stimulates a variety of the user’s senses, including touch, vision and hearing, inside a computer-generated virtual environment, so that users can virtually experience environments and spaces that would be difficult to encounter in real life. As computer graphics-based VR technology advances and various VR hardware devices are developed and popularized, a variety of applications that let users virtually encounter spatial and temporal experiences are being produced. There is also a growing demand for research and technologies that support these applications.

It is important that VR moves beyond the boundaries of the virtual and the real to provide a presence that allows the user to feel and experience realistic sensations. To achieve this, it is fundamentally necessary to provide 3D visual information through devices such as the head-mounted display (HMD) or the 3D monitor, and to present interactions similar to those in real environments by satisfying the user’s senses of hearing and touch. Ultimately, a system or interaction must be provided that allows the user to become more deeply immersed in the virtual environment. A variety of studies on immersive VR have been conducted toward this goal [1,2,3,4]. Related research includes studies that have offered users varied presence by providing new visual experiences [5]. Others satisfy the user’s sense of hearing by supplying the VR with vast amounts of audio via area and volumetric sources [6]. Additionally, research has been conducted to enhance immersion during interaction by accurately detecting and tracking hand motions with devices such as optical markers and expressing them in virtual spaces [7], and by using a haptic system that transmits the physical feedback (force, etc.) generated during hand interaction to the fingertips [8]. In recent years, a wide range of studies has sought to improve presence by enhancing immersion in VR, including research on multimodality, which provides tactile and sound feedback when the user’s feet meet the ground while wearing shoes with attached haptic sensors [9]. Other studies have directly used the user’s legs to create the feeling of walking freely inside a vast virtual space [10].

However, most studies on immersive VR, and the various applications built on them, have constructed their environments to support the first-person perspective of a user wearing an HMD. This is understandable in terms of creating realistic presence: in the first-person view, the user sees the world through a virtual character’s viewpoint, which provides greater immersion. However, to produce applications that offer new presence and experiences, VR must also be presented from a variety of other perspectives. Moreover, focusing solely on high immersion for enhanced presence can cause VR sickness, which negatively affects VR applications. Diversified viewpoints may therefore lessen VR sickness while still providing a sufficient level of immersion, allowing the user to experience satisfying presence. Yet existing studies on user interaction in immersive VR apply only to first-person applications, and few studies have analyzed presence across different viewpoints.

Therefore, this study takes a different approach from traditional VR viewpoints and proposes interactions that provide enhanced presence through high immersion in third-person VR (TPVR). Fundamentally, the proposed TPVR interaction lets users interact with a virtual environment or object in a more direct way: with their hands, while wearing an HMD that delivers 3D visual information. To accomplish this, the study uses existing equipment that detects and tracks hand motions. The contributions of the proposed TPVR interaction are twofold.

First, this study presents a hand-based interaction method that adapts the mouse-driven controls traditionally used in third-person interactive content. It includes an interface that allows the user to control the movements of a virtual character from the third-person viewpoint, to select 3D virtual environments and objects across a wide space, unlike in the first-person viewpoint, and to expand the range of action.

Second, through a questionnaire experiment, this study analyzes whether the proposed TPVR interaction can provide presence and experiences different from those of the first-person viewpoint, and whether it causes less VR sickness and can therefore be practically applied in VR applications.

Section 2 introduces existing studies on immersive VR that help establish the need for the proposed TPVR interaction. Section 3 describes the proposed TPVR interaction. Section 4 describes how the application was constructed in two separate versions (i.e., the first- and third-person viewpoints) to analyze the proposed interaction. Section 5 analyzes presence, experience and VR sickness through a questionnaire experiment. Finally, Section 6 presents the conclusions of this study, together with its limitations and directions for future research.

2. Related Works

A variety of studies have been conducted on immersive VR, which provides users with realistic experiences of where they are, who they are with and what actions they take, based on their senses, including vision, hearing and touch. A display that transmits 3D virtual scenes is needed for the user to become more deeply immersed in VR. Research on techniques such as haptic systems, which provide tactile feedback, and audio sources, which maximize the sense of space, is also required [6,7,11,12].

Current VR-related technologies include the Oculus Rift CV1 and HTC Vive HMDs, both used in PC environments, as well as Google Cardboard and Samsung Gear VR, both used on mobile platforms. Owing to the newly popularized market for these technologies, it has become easier for consumers to experience visual immersion. Moreover, these technologies are being actively researched and developed with the aim of providing users with realistic presence by detecting a variety of motions and movements and expressing them in the virtual environment through both visual and tactile feedback. Studies on haptic systems and motion platforms not only detect the motions of the user’s joints accurately and express them realistically in virtual environments, but also calculate the forces generated in this process to convey physical responses, including tactile sensations [13], to the user. Relatedly, research has been done on technologies such as the 3-RSR Haptic Wearable Device [14], which controls the force applied to the fingertips with great precision. There is also a new three-degrees-of-freedom wearable haptic interface that applies force vectors directly to the fingers. Bianchi et al. [15] studied the wearable fabric yielding display, a tactile display made of fabric. These studies on haptic systems have also taken a user-friendly approach, producing easy-to-carry, portable devices [8]. Additionally, the algorithm proposed by Vasylevska et al. [16], which can express a user walking indefinitely inside a limited space, and the portable walking simulator proposed by Lee et al. [5], which detects walking in place, have widened the range of walking motions available to users. However, most of these approaches are limited by high cost and other constraints that make them difficult to apply to a wide variety of VR applications. Therefore, other studies have focused on user-friendly interfaces and interactions. Interfaces based on a gaze pointer [17] or hand interaction [18] are examples of interactions that can easily be applied to various VR applications. However, these studies consider only first-person user interfaces and interactions. To provide new presence through a variety of experiences in immersive VR, the user’s viewpoint should be approached in ways that differ from traditional methods. The usability of the proposed technology should also be considered in this process, to ensure that it can be applied to a wide variety of VR applications. Therefore, this study presents an environment in which the user can experience immersive VR in a new way by designing an intuitively structured, user-centric TPVR interaction.

Examples of currently developed TPVR applications are Chronos, Lucky’s Tale and the Unreal Engine-based Doll City VR, available from the Oculus Home Store [19]. However, all of these applications offer only elementary interaction methods using gamepads. This means that, aside from wearing an HMD, the experience they offer is not very different from playing a traditional third-person game. Ultimately, compared with first-person applications, which offer relatively high presence in VR, these applications cannot deliver the same level of immersion to the user. Building on god-like interaction [20], the proposed TPVR interaction asks the user to interact directly with the virtual environment or object using the hands in the virtual space. This gives users an intense feeling of being in the virtual space and provides an interface different from traditional methods of interaction.

Presence is the most fundamental element of experiencing VR. For this reason, Slater et al. [21] analyzed the general effects of VR on our lives using various approaches, including psychology, neuroscience and social phenomena. A variety of studies have been carried out, including analyses of the relationship between gaze and presence and of presence during walking in both the first- and third-person viewpoints. In addition, studies have focused on the user’s emotions and presence, compared presence and emotion in virtual space across various display devices and evaluated the effect of haptic techniques on presence [22,23,24]. However, there are still no cases that design interactions according to viewpoint in immersive VR and study the resulting presence, VR sickness and experience. Building on existing research, this study compares and analyzes the difference in presence depending on the viewpoint as the user interacts with the virtual environment or object. Moreover, it analyzes the relationship between VR sickness and presence in this process to examine the usability of the proposed TPVR interaction.

3. Interaction of TPVR

Unlike the first-person viewpoint, the proposed TPVR interaction requires an interface that lets the user view a 3D virtual space from an observer’s perspective while judging situations and controlling the character’s movements and behaviors. This study aims to handle all of the user’s interactions with the virtual environment through the hands, which requires a device that precisely detects and tracks hand motions and gestures. Because usability is one of the goals of the proposed interaction, this study uses Leap Motion (Leap Motion, Inc., San Francisco, CA, USA), a popular hand-tracking technology, for its interface.

3.1. Structure

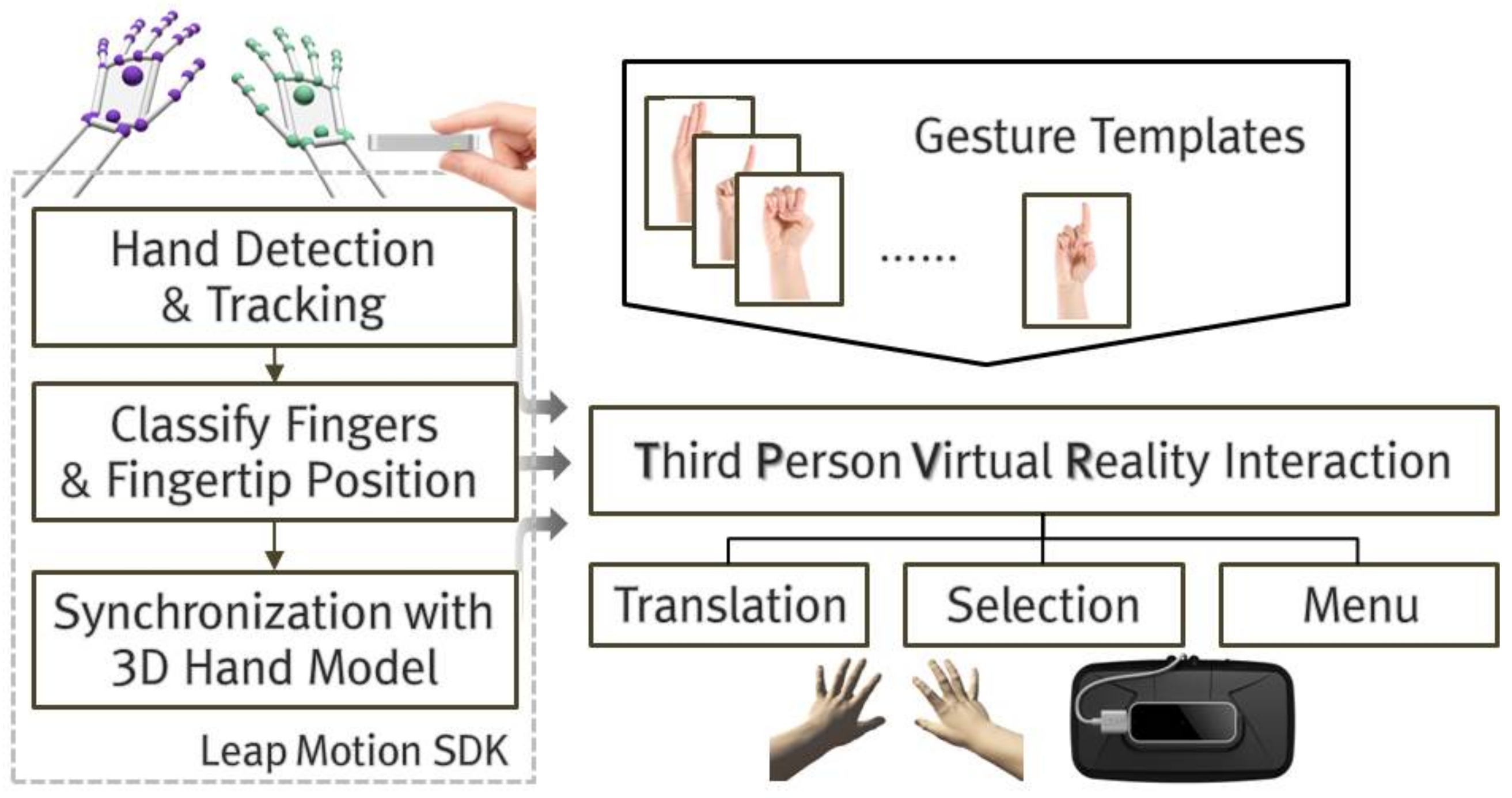

The interaction structure of the TPVR is shown in Figure 1. First, the system detects the user’s hands and continuously tracks their motions. During this process, it distinguishes each finger, recognizes whether it is folded or unfolded and receives information on fingertip positions. Up to this point, processing takes place in the Leap Motion device and is implemented using functions of the Leap Motion SDK (e.g., hand recognition range, synchronization of the 3D virtual and real hands). Next, an interface that controls the user’s interaction with the virtual environment is designed based on the recognized hand gestures. The proposed interaction consists of translating the character and selecting virtual objects, and it includes a menu interface required to control the application. The interaction also associates hand gestures with functions to diversify the actions and controls made with the hands. The interaction structure defines the hand gestures as templates in a preprocessing step, and the functions that correspond to the recognized hand gestures are designed to operate in the interface.
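The template step above (gestures defined in advance, then bound to interface functions) can be illustrated with a minimal sketch. It is not the authors’ implementation: the `HandState` type, the template names (`point`, `open_palm`, `fist`) and the handler strings are hypothetical, and it assumes the tracker already reports, per finger, whether it is unfolded, as the structure described above provides.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional, Tuple

# Hypothetical finger order: thumb, index, middle, ring, pinky.
FingerMask = Tuple[bool, bool, bool, bool, bool]


@dataclass(frozen=True)
class HandState:
    """Hypothetical per-frame summary of one tracked hand."""
    extended: FingerMask  # True if the finger is unfolded


# Gesture templates defined in a preprocessing step (illustrative only).
GESTURE_TEMPLATES: Dict[str, FingerMask] = {
    "point": (False, True, False, False, False),   # index finger only
    "open_palm": (True, True, True, True, True),   # all fingers unfolded
    "fist": (False, False, False, False, False),   # all fingers folded
}


def recognize(hand: HandState) -> Optional[str]:
    """Return the name of the template that exactly matches the finger mask."""
    for name, mask in GESTURE_TEMPLATES.items():
        if hand.extended == mask:
            return name
    return None


class GestureDispatcher:
    """Connects recognized gestures to interface functions."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Callable[[], str]] = {}

    def bind(self, gesture: str, handler: Callable[[], str]) -> None:
        if gesture not in GESTURE_TEMPLATES:
            raise KeyError(f"unknown gesture template: {gesture}")
        self._handlers[gesture] = handler

    def dispatch(self, hand: HandState) -> Optional[str]:
        """Run the handler bound to the recognized gesture, if any."""
        gesture = recognize(hand)
        handler = self._handlers.get(gesture) if gesture else None
        return handler() if handler else None
```

Exact matching on the five folded/unfolded flags keeps the sketch simple; a real recognizer would likely add tolerances or temporal smoothing. Extending the interaction then amounts to adding a template and calling `bind`, e.g. `dispatcher.bind("point", lambda: "select")`.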

3.2. User Behavior and Interface

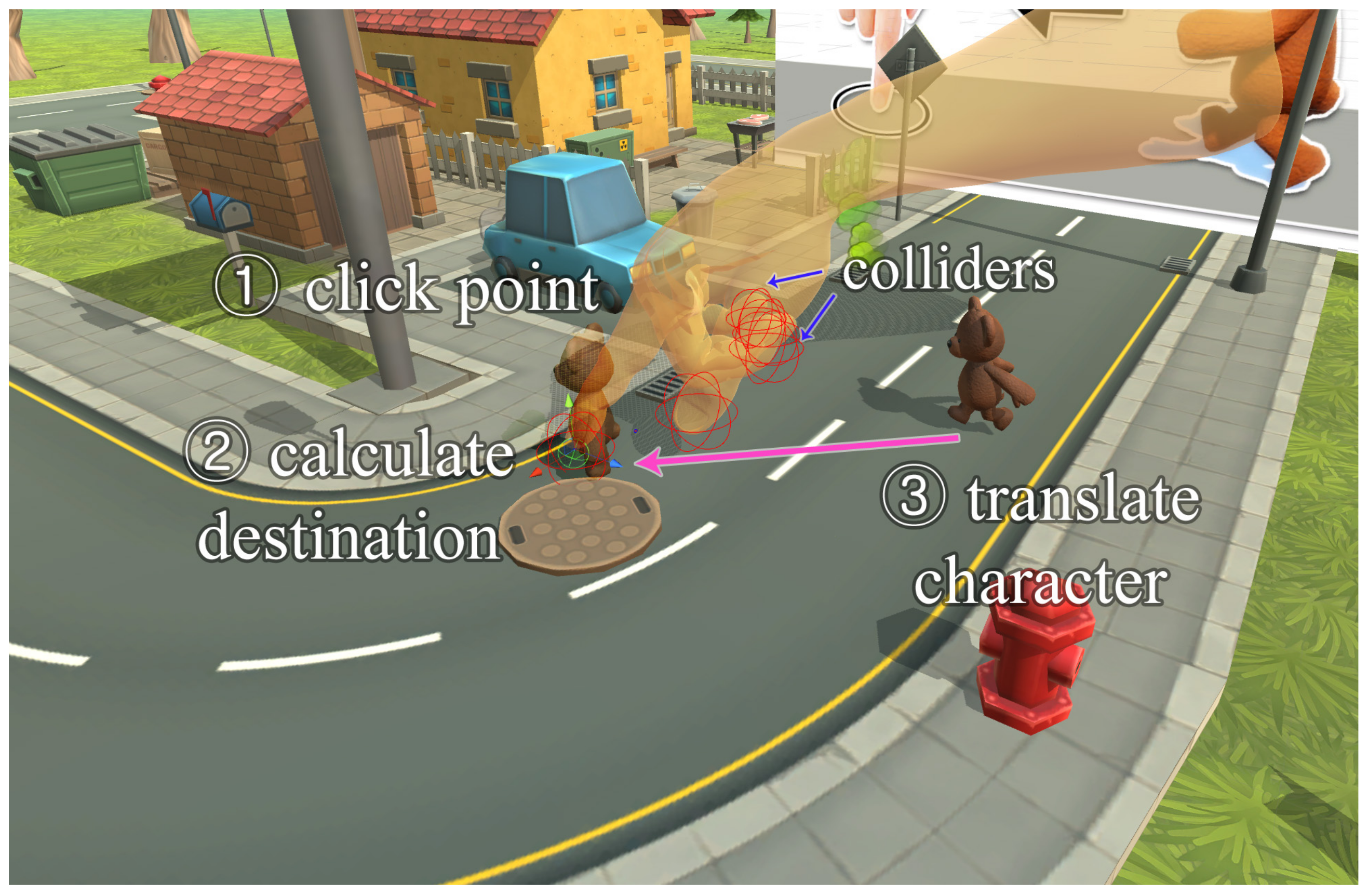

The interaction of this study consists of user behaviors and an interface for controlling the application. First, the behaviors available to the user are translation and selection. In typical third-person interactive content, a mouse is used to interact with the characters. When the user clicks a destination on the screen with a mouse, a ray is cast that converts the screen coordinates into spatial coordinates, and the location where the ray intersects the terrain plane is taken as the destination to which the character is translated. This mouse-based structure for character translation is applied to the hands, so that when the user directly "clicks" a destination on the terrain with his or her right index finger, the character moves to that point. The step-by-step process of translating a character with the hand is shown in Figure 2. A spherical collider is set in advance at the fingertip of the 3D virtual hand joint model, and the character is then translated to the destination point: the 3D coordinate where the collider intersects the plane of the terrain (Equation (1)). In other words, if the user touches the terrain with the tip of the index finger, the destination point is calculated using Equation (1), and the character is then translated.

$$\mathbf{p}_{dst} = \mathbf{p}_c - \frac{\mathbf{n}\cdot\mathbf{p}_c + d}{\lVert\mathbf{n}\rVert^{2}}\,\mathbf{n}, \qquad \text{if } \frac{\lvert\mathbf{n}\cdot\mathbf{p}_c + d\rvert}{\lVert\mathbf{n}\rVert} \le r \qquad (1)$$

Here, the plane equation of the terrain is $ax + by + cz + d = 0$, and $\mathbf{n} = (a, b, c)$ is the normal vector of the plane. $\mathbf{p}_c$ is the center point of the spherical collider, and $r$ is its radius. Moreover, $\mathbf{p}_{dst}$ is the calculated destination point of the character.
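The fingertip-based translation can be sketched as a sphere-plane intersection test: if the fingertip collider reaches the terrain plane, the destination is the orthogonal projection of the collider center onto that plane, which is one consistent reading of Equation (1). For comparison, the mouse-style ray-plane picking that the hand interaction replaces is included. Both functions and their names are illustrative assumptions in plain Python, not the Unity or Leap Motion APIs used in the study.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def dot(u: Vec3, v: Vec3) -> float:
    return u[0] * v[0] + u[1] * v[1] + u[2] * v[2]


def fingertip_destination(center: Vec3, radius: float,
                          normal: Vec3, d: float) -> Optional[Vec3]:
    """Destination on the terrain plane n.p + d = 0 touched by a fingertip sphere.

    Returns the orthogonal projection of the sphere center onto the plane
    when the sphere intersects the plane, otherwise None.
    """
    n_len = math.sqrt(dot(normal, normal))
    if n_len == 0.0:
        raise ValueError("plane normal must be non-zero")
    signed_dist = (dot(normal, center) + d) / n_len
    if abs(signed_dist) > radius:
        return None  # the fingertip has not reached the terrain yet
    # Move the center back along the unit normal by its signed distance.
    x, y, z = (c - signed_dist * k / n_len for c, k in zip(center, normal))
    return (x, y, z)


def mouse_ray_destination(origin: Vec3, direction: Vec3,
                          normal: Vec3, d: float) -> Optional[Vec3]:
    """Mouse-style picking: intersect a camera ray with the plane n.p + d = 0."""
    denom = dot(normal, direction)
    if abs(denom) < 1e-9:
        return None  # ray is parallel to the terrain
    t = -(dot(normal, origin) + d) / denom
    if t < 0.0:
        return None  # the plane lies behind the ray origin
    x, y, z = (o + t * k for o, k in zip(origin, direction))
    return (x, y, z)
```

For flat terrain at $y = 0$ (normal `(0, 1, 0)`, `d = 0`), a fingertip collider centered at `(2.0, 0.005, 3.0)` with radius `0.01` yields the destination `(2.0, 0.0, 3.0)`, while the same fingertip held at a height of `0.5` yields `None`.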

Next is the process of selecting and controlling the various objects in the VR space. As described above, a spherical collider is set at the fingertip in advance. When objects in the virtual environment, including terrain, are selected with the fingers, selection is determined by performing collision detection with the collider. A rigid body transformation is applied to the collider to express natural movements of the virtual object after the collision. Whereas the user’s visual field is limited in first-person VR, in the third-person view the user can see the entire space, which makes it possible to select multiple objects simultaneously. Therefore, this study also applies a multiple-selection algorithm to the interaction. Algorithm 1 summarizes the procedure of multiple object selection. First, the index fingers and thumbs of the right and left hands are brought into contact with each other. Then, as both fingertips move away from each other, all objects that fall inside the rectangular area spanned by the two fingertips are selected simultaneously. The procedure converts the fingertip coordinates in the 3D virtual space into screen coordinates (Equation (2)) and identifies the objects inside the rectangle defined by the left-hand and right-hand coordinates. The process of selecting multiple objects using both hands is shown in Figure 3.

$$x_p = \frac{z_n\,x_f}{z_f\,t\,(W/H)}, \qquad y_p = \frac{z_n\,y_f}{z_f\,t}, \qquad x_s = \frac{W}{2}\,(x_p + 1), \qquad y_s = \frac{H}{2}\,(y_p + 1) \qquad (2)$$

Here, $\mathbf{p}_f = (x_f, y_f, z_f)$ refers to the 3D coordinate of the fingertip in camera space, $t$ is the maximum value of the near clipping plane of the perspective projection view volume and $z_n$ represents the z value of the near clipping plane. Moreover, $W$ and $H$ are the width and height of the screen. Based on these values, the projection coordinate $(x_p, y_p)$ and the screen coordinate $(x_s, y_s)$ are calculated.
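The fingertip-to-screen conversion can be sketched as a standard perspective projection onto the near clipping plane followed by a viewport mapping. The conventions are assumptions of this sketch rather than details stated in the text: the camera looks along $+z$ (as in Unity’s camera space), `t` is the top (maximum $y$) of the near plane with its right edge at `t * width / height`, and the screen origin is the bottom-left corner. The function name is hypothetical.

```python
from typing import Tuple


def fingertip_to_screen(p_cam: Tuple[float, float, float], z_near: float,
                        t: float, width: float, height: float) -> Tuple[float, float]:
    """Project a camera-space fingertip position to screen coordinates.

    Assumptions: the camera looks along +z, t is the top (maximum y) of the
    near clipping plane, its right edge is t * width / height, and the screen
    origin is the bottom-left corner.
    """
    x, y, z = p_cam
    if z <= 0.0:
        raise ValueError("point is behind the camera")
    # Perspective projection onto the near plane, normalized to [-1, 1].
    x_p = (z_near * x) / (z * t * (width / height))
    y_p = (z_near * y) / (z * t)
    # Viewport mapping from [-1, 1] to pixels.
    return (width / 2.0 * (x_p + 1.0), height / 2.0 * (y_p + 1.0))
```

For a symmetric frustum, `t = z_near * tan(fov_y / 2)`. A point on the optical axis always lands at the screen center, and a point on the top edge of the view volume maps to `y_s == height`.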

| Line | Algorithm 1. Multiple object selection procedure using both hands. |
|---|---|
| 1: | X ← 3D coordinates of the virtual objects. |
| 2: | procedure multiple object selection(X) |
| 3: | (1) Detect the collision between the fingertips of both hands. |
| 4: | (2) Measure the movement amount of both fingertips. |
| 5: | if the movement amount exceeds a threshold then |
| 6: | (3) Convert the fingertip 3D coordinates to screen coordinates using Equation (2). |
| 7: | left_bottom ← screen coordinate of the left hand. |
| 8: | right_top ← screen coordinate of the right hand. |
| 9: | (4) Calculate the rectangle from both fingertip coordinates. |
| 10: | (5) Convert each object in X to a screen coordinate (x, y) using Equation (2). |
| 11: | if left_bottom.x ≤ x and x ≤ right_top.x then |
| 12: | if left_bottom.y ≤ y and y ≤ right_top.y then |
| 13: | (6) Select the object. |
| 14: | end if |
| 15: | end if |
| 16: | end if |
| 17: | end procedure |
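Steps (4) to (6) of Algorithm 1 reduce to a point-in-rectangle test in screen space. The sketch below assumes the fingertips and objects have already been projected to screen coordinates (for instance with Equation (2)); the function name, the `movement` value and the `threshold` parameter are hypothetical. Unlike Algorithm 1, which assumes the left hand gives the bottom-left corner and the right hand the top-right corner, it normalizes the corners with `sorted`, a design choice that keeps the test correct even if the hands cross.

```python
from typing import Dict, List, Tuple

Point2 = Tuple[float, float]


def select_in_rectangle(left_tip: Point2, right_tip: Point2,
                        objects_screen: Dict[str, Point2],
                        movement: float, threshold: float) -> List[str]:
    """Return the names of objects whose screen position lies in the rectangle.

    left_tip / right_tip are the screen coordinates of the two fingertips.
    Selection only happens once the fingertips have moved apart by more than
    `threshold` (a hypothetical tuning parameter).
    """
    if movement <= threshold:
        return []
    # Normalize the corners so the test also works if the hands cross.
    x_min, x_max = sorted((left_tip[0], right_tip[0]))
    y_min, y_max = sorted((left_tip[1], right_tip[1]))
    return [name for name, (x, y) in objects_screen.items()
            if x_min <= x <= x_max and y_min <= y <= y_max]
```

Objects behind the camera would need to be filtered out before this test, since a perspective projection of such points can land inside the rectangle.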

The last step is designing a menu interface for controlling the application. Generally, 3D interactive content and first-person VR applications use 2D sprites to provide menu functions. Because first-person VR content offers a relatively narrow visual field, such menus can cause nausea, so our interface is designed to be as simple as possible. When VR is observed from the third-person perspective, however, the user can make use of the entire space through a wide visual field. The virtual space itself can thus be used to build a menu without the need for 2D images. Therefore, this study designs its interface so that the menu items are created as 3D objects placed in the virtual space, where they can be selected directly with the hands. The menu can be activated or deactivated at the user’s discretion; for this, gestures defined in advance are mapped to menu activation. The menu activation procedure is shown in Figure 4. When the user simultaneously opens both palms outward, the gesture is recognized, and the menu is activated or deactivated above the 3D character. The index finger is used to select the desired item from the activated menu.

Additionally, the interaction can be expanded by defining desired gestures in advance and connecting them to corresponding functions.
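Because the same "both palms open" gesture both activates and deactivates the menu, and a per-frame recognizer reports the gesture on every frame it is held, a naive toggle would flip the menu on and off repeatedly. A minimal sketch, assuming edge triggering (toggle only when the gesture first appears), is shown below; the class name and the boolean per-frame input are hypothetical.

```python
class MenuToggle:
    """Toggle a menu on the rising edge of a 'both palms open' gesture.

    Edge triggering (an assumption of this sketch) keeps the menu from
    flickering while the user holds the gesture across several frames.
    """

    def __init__(self) -> None:
        self.menu_active = False
        self._gesture_was_held = False

    def update(self, both_palms_open: bool) -> bool:
        """Feed one frame of gesture recognition; return the menu state."""
        if both_palms_open and not self._gesture_was_held:
            self.menu_active = not self.menu_active
        self._gesture_was_held = both_palms_open
        return self.menu_active
```

Feeding the frames `[False, True, True, True, False, True, False]` produces the menu states `[False, True, True, True, True, False, False]`: holding the gesture keeps the menu open, and it closes only when the gesture is released and made again.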

4. Application

The goal of this study is to confirm that, through the proposed interaction, TPVR applications will also be able to provide users with enhanced presence through high immersion, as well as new experiences and interest. For this purpose, we designed our own VR application that makes it possible to compare and analyze the differences in the interaction process, depending on the first- or third-person viewpoints.

4.1. Overview

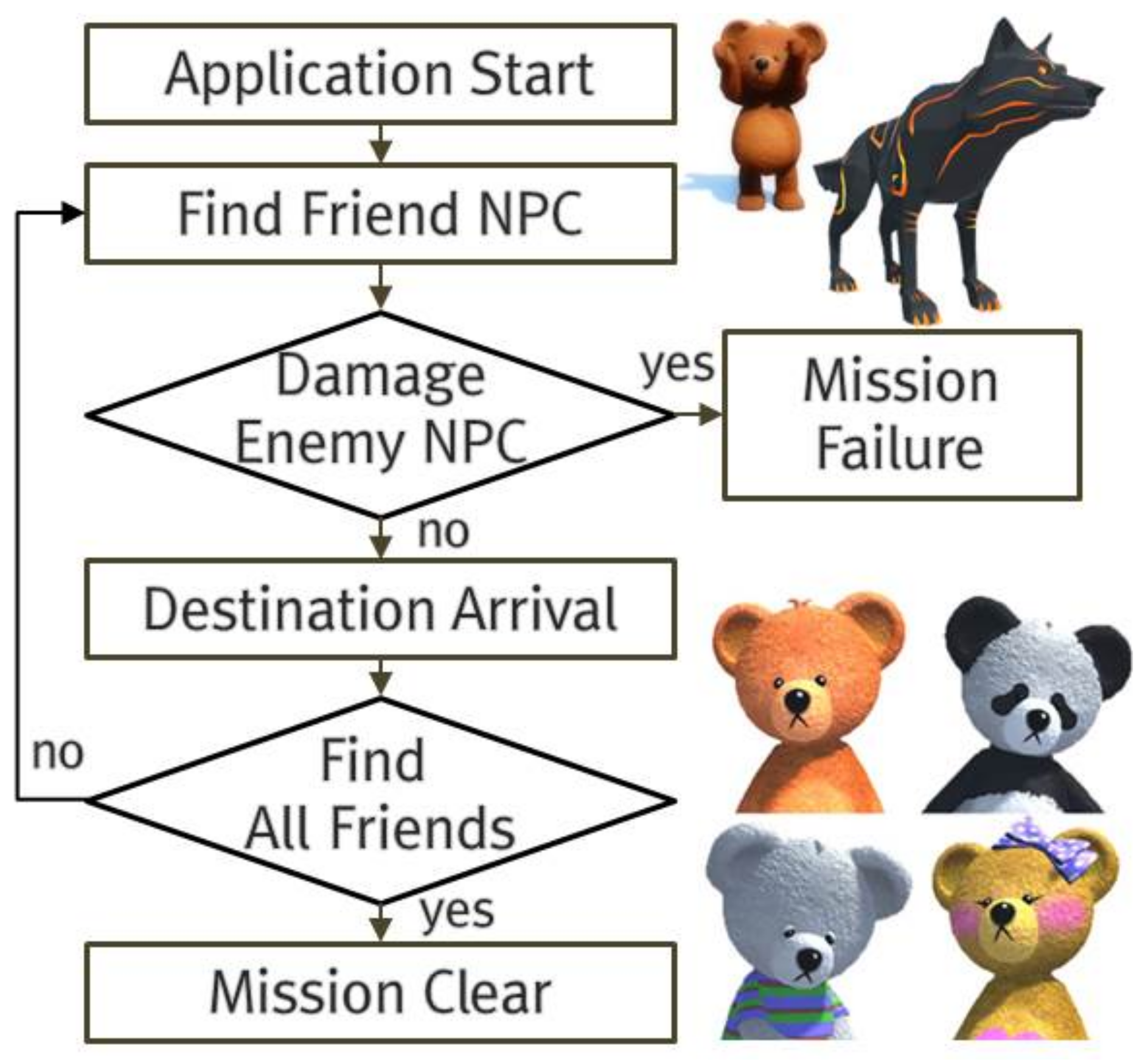

The production process of the VR application is identical to that of typical 3D interactive content. This study builds its development environment on the Unity3D engine (Unity Technologies, San Francisco, CA, USA) and integrates the Oculus and Leap Motion development tools. The functions necessary to operate the application are implemented using the components, prefabs, etc., provided by these development tools. A characteristic feature of the application is that the proposed interaction uses the hands to control all movements and operate the interface, even in the first-person version. The user must control all functions with the hands, without typical input devices such as a keyboard or mouse. The objectives presented to the user and the process flow of the application are as follows. Following a basic background story, the character must start from a designated location, find all friendly NPCs and arrive safely at the destination. Along the way, the user must translate the character or perform actions to damage or dodge enemy NPCs that try to prevent him or her from finding the friendly NPCs. The process flow of the proposed application is shown in Figure 5.

4.2. First Person vs. Third Person View

This study compares the user’s sense of presence and experience when controlling the application from different viewpoints. The difference in viewpoint creates a basic difference in the scenes conveyed to the user’s eyes, and this difference in scene, which depends on the location of the camera, affects presence. However, as the user proceeds through the application, a lack of presence can be compensated for by the way the user interacts with the virtual environment. Conversely, the interaction could have a negative effect on presence by causing severe VR sickness. This study therefore first designed interactions suited to the third-person view and then modified them to suit the first-person viewpoint for comparison. However, the first-person interaction should induce the same immersion and interest as the third-person interaction while still using the hands. Therefore, this study designs the first-person interaction based on the immersive hand interaction (i.e., movement, stopping and object control) proposed by Jeong et al. [25].

Based on the information described in

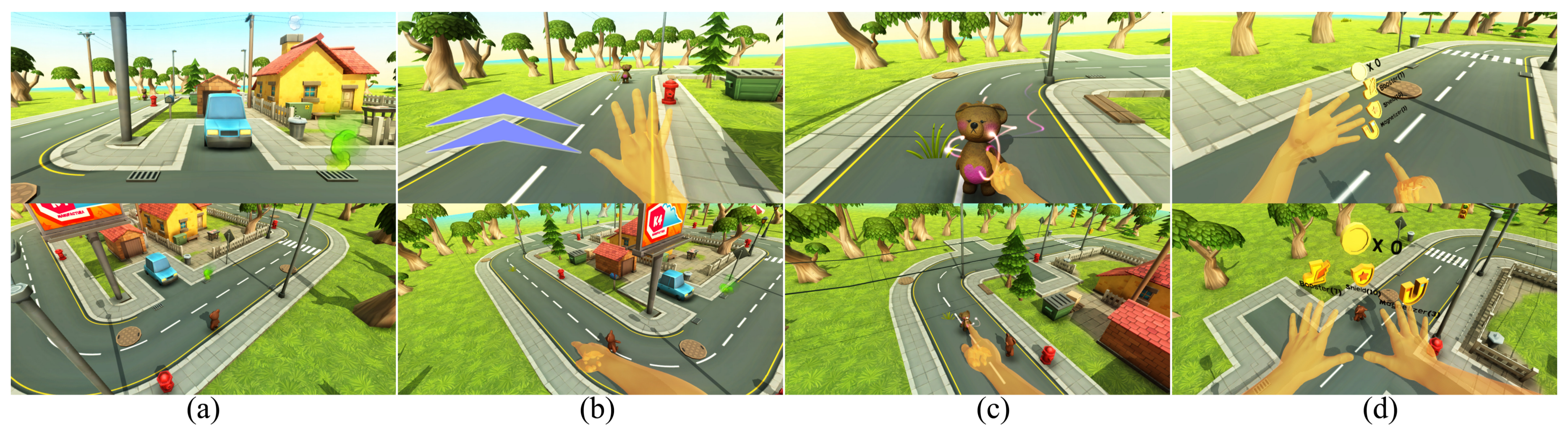

Section 3.2, this study compares the different viewpoints from three aspects. In first-person VR applications, the act of moving is typically processed using a keyboard or gamepad. However, based on the premise of this study, in which all interactions are to be made using hands, movement is also designed to be controlled by the hands. When all five fingers are outspread, the user is translated in the direction he or she is facing. Selection is processed by the same method, via third-person interaction, by clicking the desired object with a finger. However, because the user is placed face-to-face with virtual objects or characters in the first-person, the multiple selections function cannot be applied. The multi-selection function is considered to be a differentiated function only for the third-person viewpoint, and we confirmed through subsequent experiments that the quality of the interaction or interface derives from the viewpoint. Finally, the menu interface differs in its processing method, depending on the viewpoint. In the third-person view, the menu items are laid out in the virtual space, making full use of the advantages of the third-person viewpoint. However, this is impossible in the first-person. Therefore, for the first-person view, a 3D menu interface is constructed by laying out the items at the end of the fingers. When the user turns over his or her left hand after displaying the back of the hand, menu items appear at the end of the fingertips. The gestures needed for first-person interaction are also defined in advance and made to correspond with various functions. A comparison of the different interactions from the viewpoints is shown in

Figure 6. To increase our confidence in the experimental results, the interactions are implemented to maximize the characteristics and advantages of each viewpoint (

Supplementary Video S1).
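The first-person movement rule (translate in the facing direction while all five fingers are outspread) can be sketched as a per-frame update. Everything here is an assumption for illustration: the tracker is taken to report five extended-finger flags, the HMD forward vector is flattened onto the ground plane so that looking up or down does not make the user fly or sink, and `speed` and `dt` are hypothetical parameters.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def step_forward(position: Vec3, head_forward: Vec3,
                 fingers_extended: Sequence[bool],
                 speed: float, dt: float) -> Vec3:
    """Translate the user along the facing direction while the hand is open.

    The forward vector is projected onto the ground plane (y = 0); this is
    an assumption of the sketch, not a detail stated in the text.
    """
    if len(fingers_extended) != 5 or not all(fingers_extended):
        return position  # hand not fully open: stay in place
    fx, _, fz = head_forward
    length = math.hypot(fx, fz)
    if length < 1e-9:
        return position  # looking straight up or down: no horizontal heading
    step = speed * dt / length
    return (position[0] + fx * step, position[1], position[2] + fz * step)
```

With a forward vector of `(3.0, -5.0, 4.0)`, a speed of 1 and a 1-second step, the user moves to `(0.6, y, 0.8)`: the downward head tilt is ignored and the horizontal heading is normalized.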

5. Experimental Results and Analysis

5.1. Environment

The VR application used to implement the proposed TPVR interaction and conduct the analytic experiment was created using Unity3D 5.3.4.f1, the Oculus SDK (ovr_unity_utilities 1.3.2) and the Leap Motion SDK v4.1.4. The PC used for system construction and the experiment was equipped with an Intel Core i7-6700 (Intel Corporation, Santa Clara, CA, USA), 16 GB of RAM and a GeForce GTX 1080 GPU (NVIDIA, Santa Clara, CA, USA). The background elements of the application, such as buildings, trees and roads, were made using the resources of Manufactura K4’s Cartoon Town [26]. The VR application designed in this study is shown in Figure 7a. Interaction methods appropriate for the first- and third-person viewpoints were each applied to this application (Supplementary Video S1). Users were then asked to use the application and complete a survey to obtain the experimental results. The user wears an HMD with a hand-tracking device and uses the application while standing. The experimental environment for the participants is shown in Figure 7b. Expanding the space based on the terrain diversifies the user experience and increases the reliability of the survey results (Figure 7c). The experiments for the first- and third-person viewpoints were conducted in the same environment. With this setup, a survey experiment was conducted on presence, VR sickness and experience. A total of 20 participants, aged 21 to 35, were randomly selected.

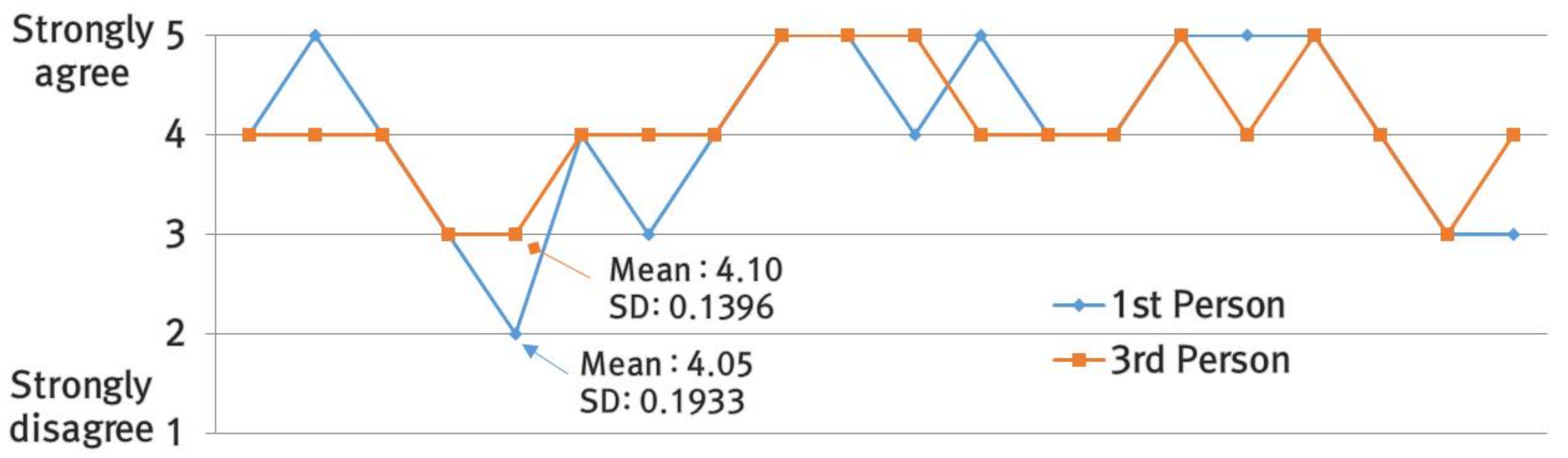

Before surveying the participants on the detailed items, we first confirmed that the proposed TPVR application is suitable for comparative experiments on the different viewpoints. Figure 8 shows the results of this experiment. The participants were asked to respond on a scale from one (strongly disagree) to five (strongly agree). The suitability of the application was rated highly, at approximately 4.0, for both the first- and third-person viewpoints, and no difference was found between the viewpoints (p-value: ).

5.2. Presence and VR Sickness

The survey experiment examined whether the proposed TPVR interaction could be applied to various VR fields by providing users with satisfying presence while inducing little VR sickness. First, presence was measured using the presence questionnaire proposed by Witmer et al. [27]. The questionnaire consists of 19 questions in which participants rate realism, quality of interface, etc., on a scale from one to seven, where seven denotes maximum satisfaction. The detailed survey results on presence are shown in Figure 9. The overall averages show that participants experienced satisfying presence from both viewpoints, scoring over five points (first-person: M = 5.48, SD = 0.47; third-person: M = 5.85, SD = 0.52). Contrary to our initial expectations, however, presence in the first-person view was slightly lower than in the third-person view. Most participants responded that the interactions in the experimental application were easier to manipulate and induced more realistic immersion in the third-person viewpoint. We attribute this result mainly to the fact that the interactions were initially designed for the third-person view and later modified for the first-person viewpoint. Differentiated interactions provided only in the third-person view, such as multiple selection, also contributed. The first-person interactions gave users a relatively awkward experience, unlike actual environments, or were difficult to manipulate, resulting in lower scores. Nonetheless, presence remained high overall, confirming that the difference in presence caused by the viewpoint can be sufficiently overcome through interaction. This study used the Wilcoxon test to analyze whether the viewpoint creates a significant difference in presence. The test yielded p > 0.05, confirming that the difference in viewpoint did not produce a significant difference in presence.
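The paper does not state which Wilcoxon variant was used. Because every participant experienced both viewpoints, the paired signed-rank test is the natural assumption, and a minimal stdlib sketch of it (two-sided, normal approximation, average ranks and tie correction for tied differences) is given below. For samples as small as 20 participants, an exact test such as `scipy.stats.wilcoxon` may be preferable; this code is only an illustration of the technique.

```python
import math
from typing import Sequence, Tuple


def wilcoxon_signed_rank(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Paired Wilcoxon signed-rank test, two-sided, normal approximation.

    Zero differences are dropped and tied |differences| receive average
    ranks, with the usual tie correction of the variance. Returns (W, p),
    where W is the smaller of the positive and negative rank sums.
    """
    if len(x) != len(y):
        raise ValueError("samples must be paired")
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    if n == 0:
        return 0.0, 1.0  # no non-zero differences: no evidence of a shift
    # Rank absolute differences, averaging ranks across ties.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2.0 + 1.0  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    w = min(w_plus, w_minus)
    mean = n * (n + 1) / 4.0
    var = n * (n + 1) * (2 * n + 1) / 24.0 - tie_term / 48.0
    if var <= 0:
        return w, 1.0
    z = (w - mean) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided p-value
    return w, p
```

In this study’s design, `x` would hold each participant’s presence score in the first-person condition and `y` the same participant’s third-person score, in the same participant order.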

The second part is an analysis of VR sickness. This study hypothesized that the negative effects of VR sickness can be reduced in the third-person view, because it offers a wide visual field from an observer’s perspective. To verify this hypothesis, VR sickness was systematically examined using the Simulator Sickness Questionnaire (SSQ) [28]. The SSQ consists of 16 questions, and participants rate their degree of sickness on a scale from zero (none) to three (severe). It also allows specific aspects to be analyzed, namely nausea, oculomotor and disorientation. The SSQ results of the participants after experiencing the application from each viewpoint are shown in Table 1. Because the aim of this study is to compare VR sickness across viewpoints, the SSQ results were examined using raw data only, with the weights excluded. As expected, VR sickness scores were significantly lower in the third-person view. The participants’ responses revealed that, in the first-person view, they had to move their heads frequently to respond instantly to situations as they occurred, whereas little head or eye movement was needed in the third-person view, because the whole scene could be viewed from an observer’s perspective. For this reason, the oculomotor and disorientation scores in the first-person view were relatively close to one (0.64 and 0.82, respectively). Users therefore experienced some discomfort while trying out the application, which could eventually discourage them from experiencing VR for extended periods. However, presenting participants with third-person interactions in the same application reduced this discomfort by 25–35%. Additionally, most participants had experienced VR applications more than once before; because those applications were also first-person, the participants responded that they found the third-person view more convenient.
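The unweighted SSQ analysis can be sketched as follows. The item-to-subscale mapping is the standard one from Kennedy et al.’s SSQ (each subscale has seven items, and some items contribute to two subscales). The paper does not say whether raw item ratings were summed or averaged; this sketch assumes per-item means on the 0 to 3 scale, and the function names are hypothetical. `relative_reduction` shows how a percentage drop from the first- to the third-person condition would be computed.

```python
from typing import Dict, Sequence

# Standard SSQ items (0-based) contributing to each subscale. Item order:
# general discomfort, fatigue, headache, eyestrain, difficulty focusing,
# increased salivation, sweating, nausea, difficulty concentrating,
# fullness of head, blurred vision, dizzy (eyes open), dizzy (eyes closed),
# vertigo, stomach awareness, burping.
SUBSCALE_ITEMS: Dict[str, Sequence[int]] = {
    "nausea": (0, 5, 6, 7, 8, 14, 15),
    "oculomotor": (0, 1, 2, 3, 4, 8, 10),
    "disorientation": (4, 7, 9, 10, 11, 12, 13),
}


def ssq_raw_means(ratings: Sequence[int]) -> Dict[str, float]:
    """Unweighted SSQ subscale scores as the mean 0-3 rating per item."""
    if len(ratings) != 16 or any(r not in (0, 1, 2, 3) for r in ratings):
        raise ValueError("expected 16 ratings in the range 0-3")
    return {name: sum(ratings[i] for i in items) / len(items)
            for name, items in SUBSCALE_ITEMS.items()}


def relative_reduction(first_person: float, third_person: float) -> float:
    """Fractional drop in a score when moving from first to third person."""
    if first_person == 0:
        raise ValueError("first-person score must be non-zero")
    return (first_person - third_person) / first_person
```

Note that the "nausea" item feeds both the nausea and disorientation subscales, so a single symptom can raise two subscale scores at once.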

Because the order in which the participants experienced the application in each viewpoint could affect the survey results, half of the participants were asked to try out the first-person viewpoint first and then move on to third-person interactions. The other half were asked to proceed in the opposite order. However, the questionnaire results revealed that the order of the experience did not affect the participants’ presence or VR sickness, and the results were not separated according to this criterion.

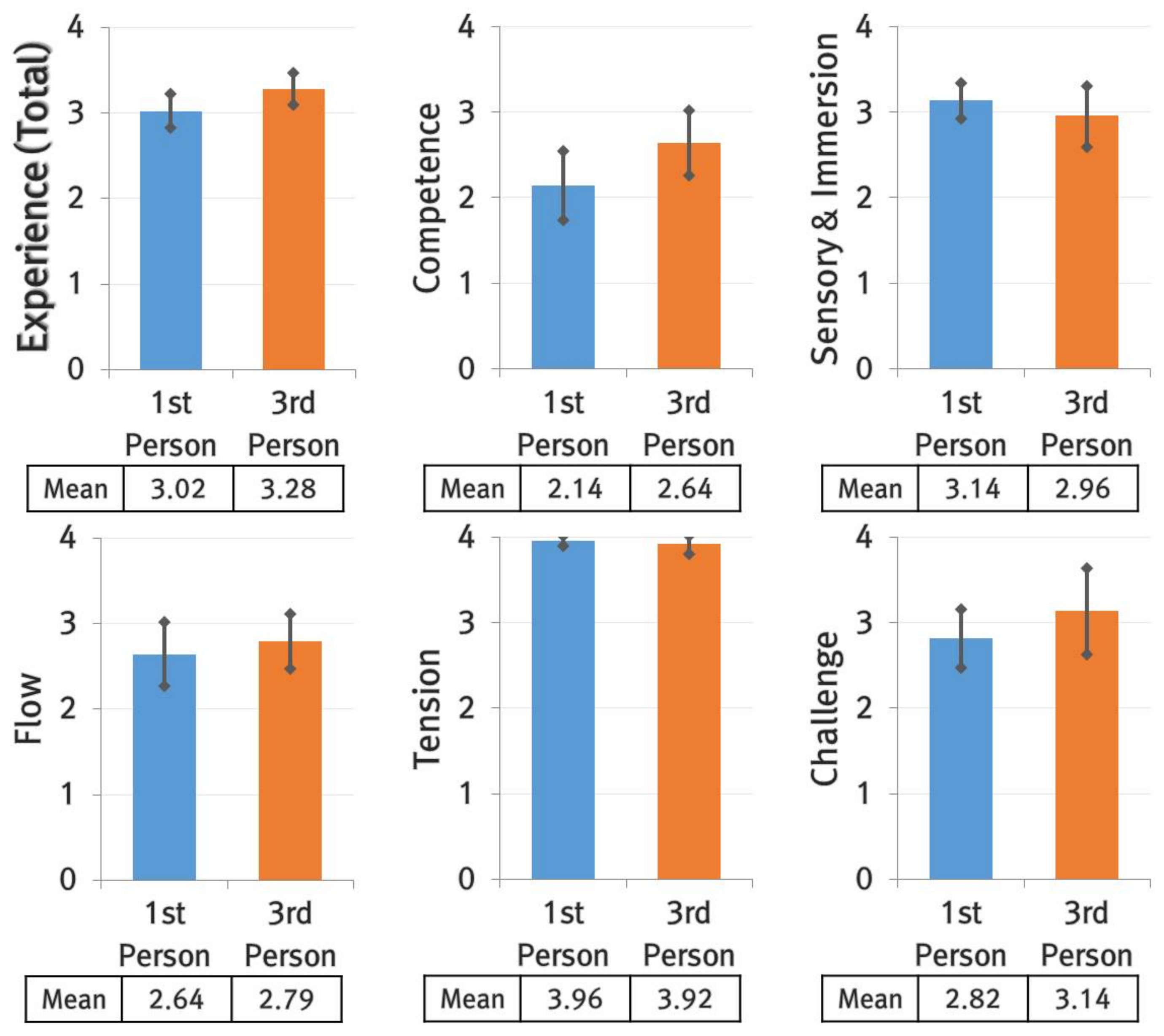

5.3. Experiences

In the final survey experiment, this study compared and analyzed differences in the user’s experience depending on the viewpoint, using the Game Experience Questionnaire (GEQ) [29]. Among the questionnaire’s modules, this study based its questionnaire on the 14 items of the In-game GEQ and analyzed the user’s experience across seven components. The participants were asked to rate each item from zero (not at all) to four (extremely). The results are shown in Figure 10. The overall average score indicates that the third-person interaction environment provided users with a better experience. The reason is the same as for the difference in presence discussed above: because the proposed interaction focuses on the third-person viewpoint, participants’ satisfaction with the experience differed accordingly. The detailed questionnaire items also revealed clear differences depending on the viewpoint. For the first-person viewpoint, the scores for immersion and tension were relatively high because of the spatial experience provided by the visual field. In contrast, flow and competence scores were higher for the third-person view, in which participants could easily comprehend the entire situation. The characteristics of the scene rendered from each viewpoint were also found to have a large influence on experience, including interaction. Differences in how users interact with virtual environments or objects, even in the same virtual space, sometimes increase the challenge and sometimes stimulate the senses. These differences stem from the visual information and interactions derived from the different viewpoints rather than from the progress flow and graphic elements of the proposed application. Therefore, this study expects that designing interactions well suited to each viewpoint can achieve an experience that satisfies all users regardless of viewpoint, or a new experience appropriate to the viewpoint.

6. Conclusions

The purpose of this study was to design an interaction that provides users with satisfying presence and a new experience suited to the observer’s field of view in third-person VR. To achieve this goal, the TPVR interaction was designed to translate characters, select objects and operate various menu functions using the hands. Existing hand-tracking devices were used to detect the user’s hands, recognize gestures and map them to 3D hand joint models. In addition, traditional mouse-based methods for controlling third-person interactive content were adapted to the hands to create interactions with intuitive structures that are easy to learn and use. These included designating the character’s destination and selecting virtual environments and objects with the hands, as well as an interface that uses the virtual space to lay out the menu items. To analyze whether the proposed TPVR interaction provides users with a new experience and enhanced presence compared with traditional first-person VR environments, as well as its influence on VR sickness, this study designed its own application and conducted survey experiments. The results revealed that the difference in presence between viewpoints was small. The key point is that enhanced presence can be provided in VR regardless of viewpoint, as long as an interaction optimized for that viewpoint is designed. The SSQ results suggest that the third-person viewpoint may alleviate some of the negative factors of existing VR applications. Moreover, this study confirmed that the proposed TPVR interaction can provide new experiences that differ from the first-person viewpoint and broaden the fields and directions in which VR applications can be used.

However, because the proposed interaction focused on the third-person viewpoint, the comparative experiment had some weaknesses regarding the designed application and the first-person interaction. The proposed TPVR application itself did not favor a specific viewpoint, and the first-person interaction was based on existing immersive hand interaction; nevertheless, controlling the first-person viewpoint by hand was relatively inconvenient. Therefore, for future comparative analysis, we plan to design differentiated interactions for the same application, each optimized for one viewpoint, to increase confidence in the survey results on presence and experience across perspectives. Moreover, instead of relying on the hand-tracking devices that the current interactions presuppose, future studies will seek ways to increase the usability of third-person interactions by adapting the hand controllers supplied with the HMD.