Anti-3D Weapon Model Detection for Safe 3D Printing Based on Convolutional Neural Networks and D2 Shape Distribution

Abstract

1. Introduction

2. Related Works

2.1. Handgun Detection and 3D Model Matching

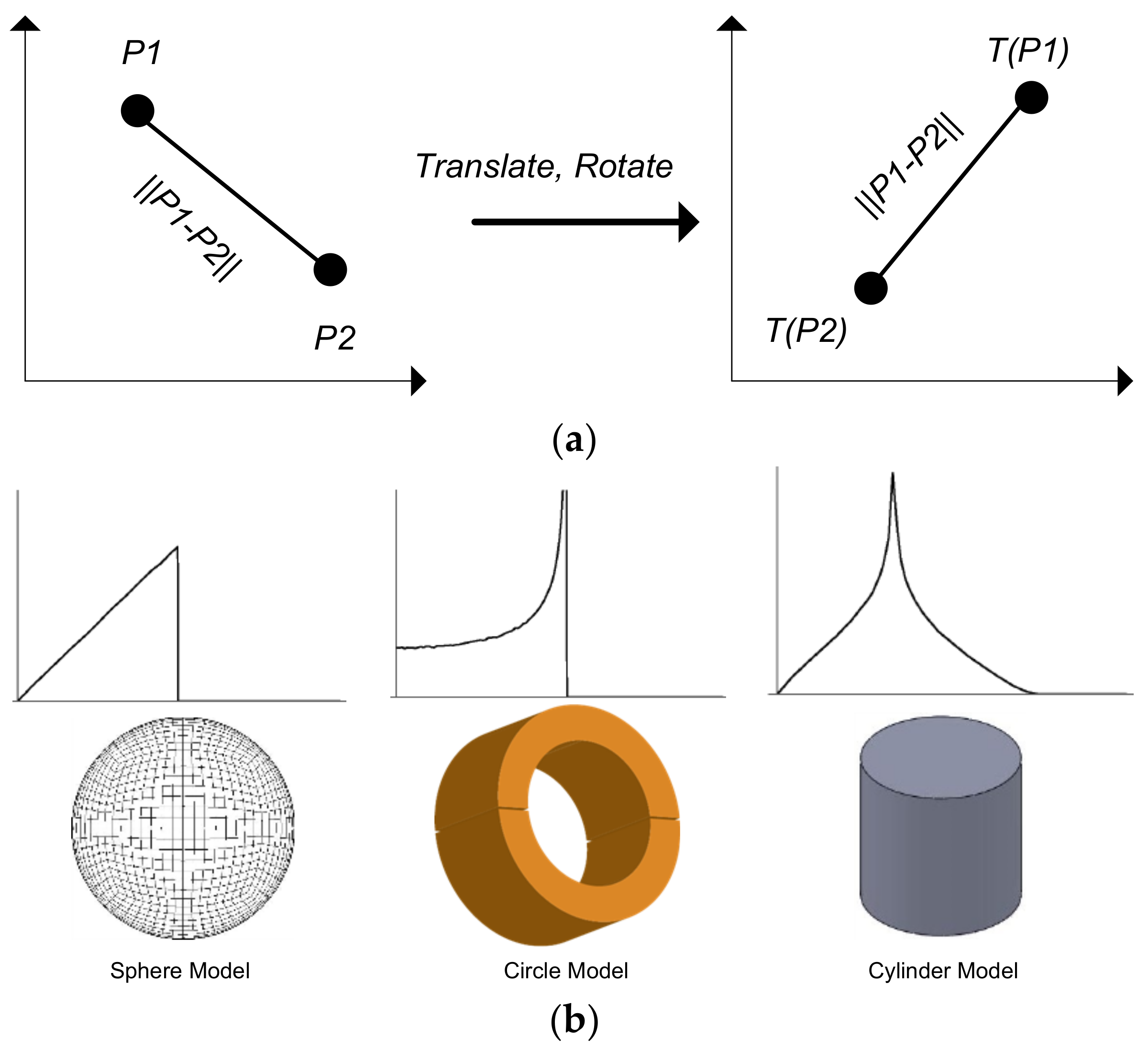

2.2. Shape Distribution

- A3 shape function: computes the angle formed by three random points on the surface of a 3D model. The A3 shape distribution of a 3D model is the distribution of these angles over many randomly sampled point triples.

- D1 shape function: computes the distance between a fixed point and one random point on the surface of a 3D model. The D1 shape distribution of a 3D model is the distribution of these distances over many random surface points. Normally, the fixed point is the center point of the 3D model.

- ✓

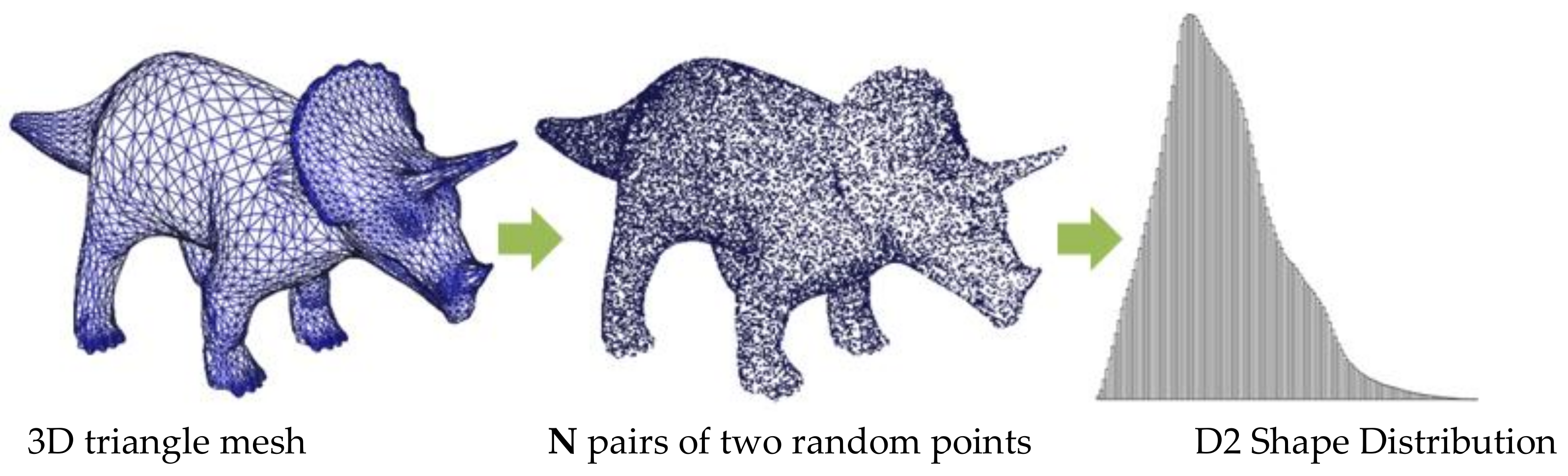

- D2 shape function: compute the distance between two random points on the surface of a 3D model. The D2 shape distribution of a 3D model is the distribution of a set of distances that is computed from a set of two random points on the surface of a 3D model.

- D3 shape function: computes the square root of the area of the triangle formed by three random points on the surface of a 3D model. The D3 shape distribution of a 3D model is the distribution of these square roots over many randomly sampled point triples.

- D4 shape function: computes the cube root of the volume of the tetrahedron formed by four random points on the surface of a 3D model. The D4 shape distribution of a 3D model is the distribution of these cube roots over many randomly sampled point quadruples.
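The five shape functions listed above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the area-weighted surface sampling, the sample count `n`, the bin count, the use of the vertex centroid as the D1 fixed point, the unit-cube test mesh, and all function names are assumptions introduced here.

```python
import numpy as np


def sample_surface(vertices, faces, n, rng):
    """Draw n points uniformly from the surface of a triangle mesh."""
    a, b, c = (vertices[faces[:, i]] for i in range(3))
    # Pick triangles with probability proportional to their area.
    areas = 0.5 * np.linalg.norm(np.cross(b - a, c - a), axis=1)
    idx = rng.choice(len(faces), size=n, p=areas / areas.sum())
    # Square-root trick for a uniform point inside each chosen triangle.
    r1 = np.sqrt(rng.random((n, 1)))
    r2 = rng.random((n, 1))
    return (1 - r1) * a[idx] + r1 * (1 - r2) * b[idx] + r1 * r2 * c[idx]


def shape_samples(vertices, faces, kind="D2", n=10000, seed=0):
    """Evaluate one shape function (A3, D1, D2, D3 or D4) on n random samples."""
    rng = np.random.default_rng(seed)
    p, q, r, s = (sample_surface(vertices, faces, n, rng) for _ in range(4))
    if kind == "A3":  # angle at q between the directions to p and r
        u, v = p - q, r - q
        cos = np.einsum("ij,ij->i", u, v) / (
            np.linalg.norm(u, axis=1) * np.linalg.norm(v, axis=1)
        )
        return np.arccos(np.clip(cos, -1.0, 1.0))
    if kind == "D1":  # assumption: the fixed point is the vertex centroid
        return np.linalg.norm(p - vertices.mean(axis=0), axis=1)
    if kind == "D2":  # distance between two random surface points
        return np.linalg.norm(p - q, axis=1)
    if kind == "D3":  # square root of the triangle area
        return np.sqrt(0.5 * np.linalg.norm(np.cross(q - p, r - p), axis=1))
    if kind == "D4":  # cube root of the tetrahedron volume
        triple = np.einsum("ij,ij->i", np.cross(q - p, r - p), s - p)
        return np.cbrt(np.abs(triple) / 6.0)
    raise ValueError(f"unknown shape function: {kind}")


def shape_distribution(values, bins=64):
    """Normalized histogram of shape-function values (sums to 1)."""
    hist, _ = np.histogram(values, bins=bins, range=(0.0, values.max()))
    return hist / hist.sum()


# Hypothetical test mesh: a unit cube split into 12 triangles.
CUBE_VERTICES = np.array(
    [[x, y, z] for x in (0, 1) for y in (0, 1) for z in (0, 1)], dtype=float
)
CUBE_FACES = np.array([
    [0, 1, 3], [0, 3, 2], [4, 5, 7], [4, 7, 6],
    [0, 1, 5], [0, 5, 4], [2, 3, 7], [2, 7, 6],
    [0, 2, 6], [0, 6, 4], [1, 3, 7], [1, 7, 5],
])

d2_vector = shape_distribution(shape_samples(CUBE_VERTICES, CUBE_FACES, "D2"))
```

Here `d2_vector` is a fixed-length vector that sums to 1, regardless of how many triangles the mesh has. A vector of this kind is what a classifier could consume, although the exact vector construction described in Section 3.2 is not reproduced here.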

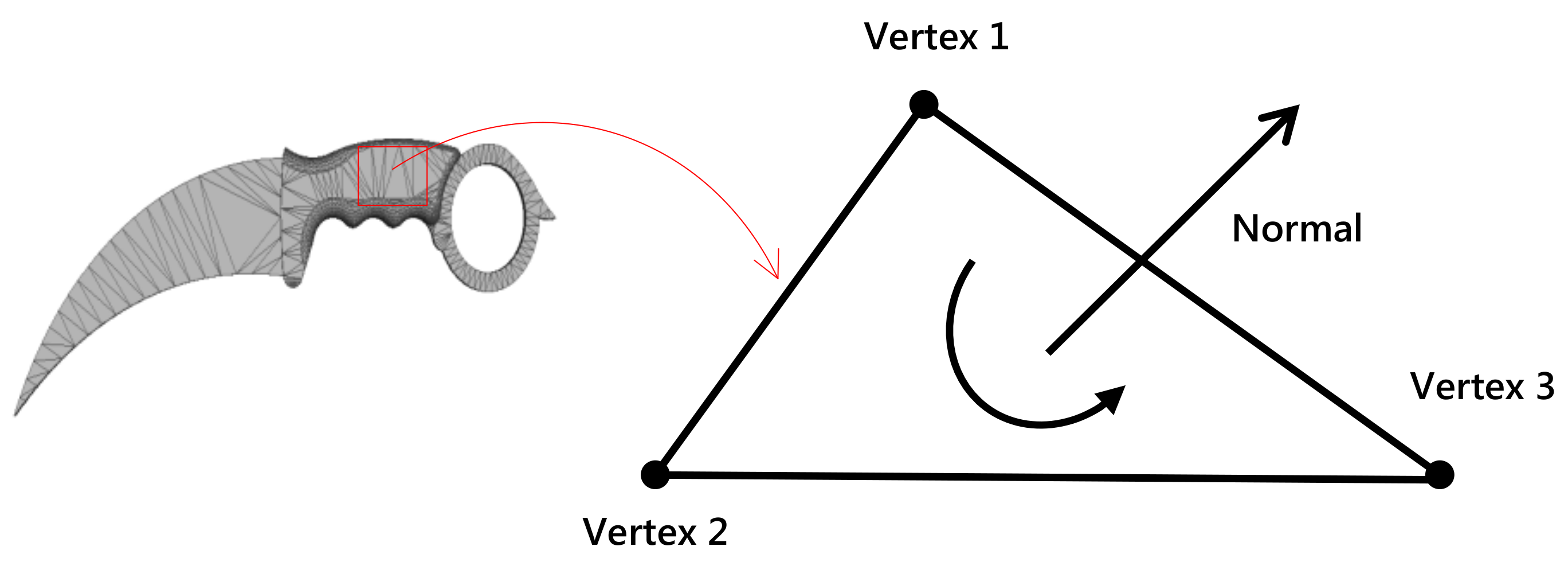

2.3. 3D Triangle Mesh-Based Anti-3D Weapon Model Detection

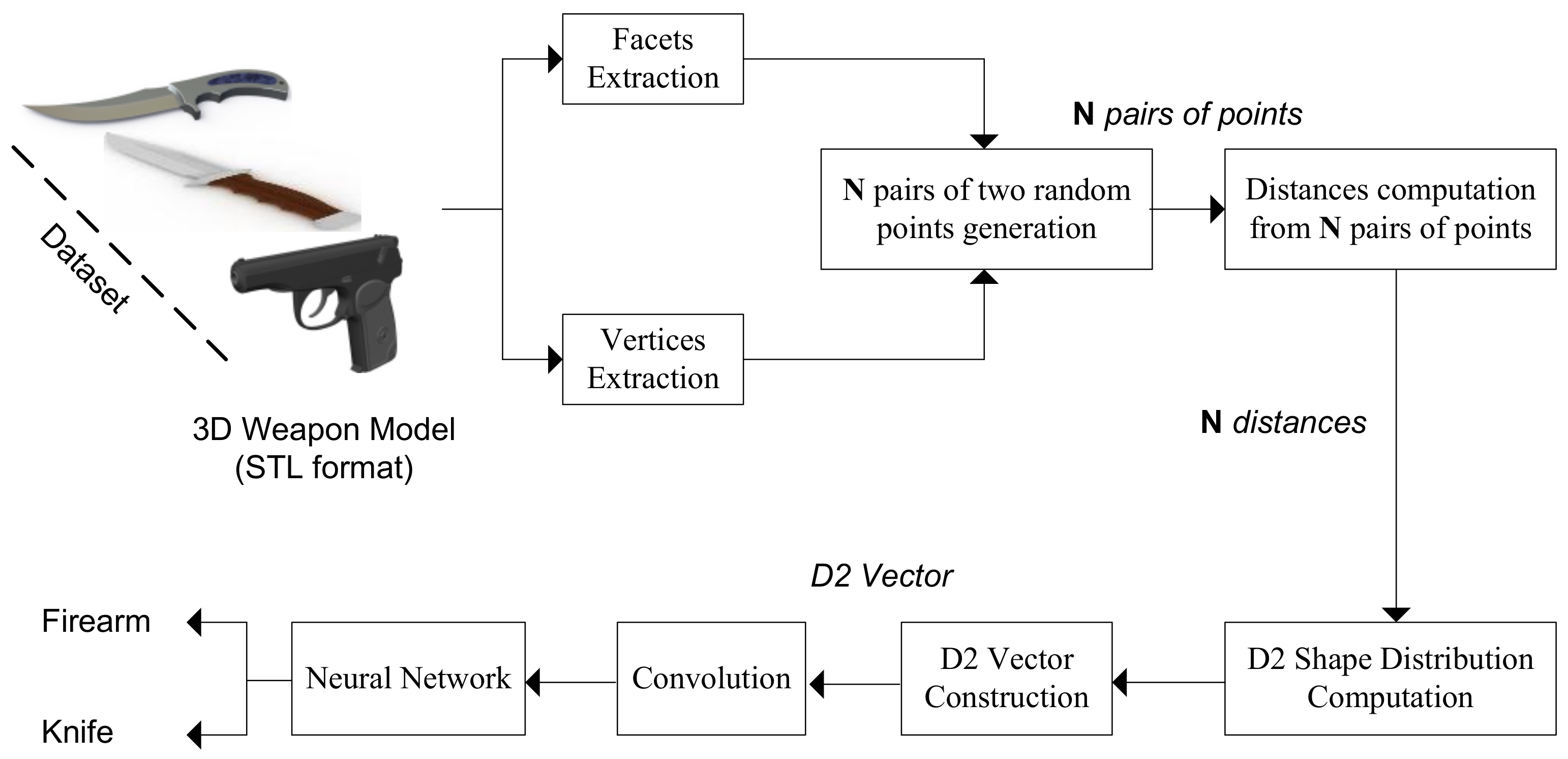

3. The Proposed Algorithm

3.1. Overview

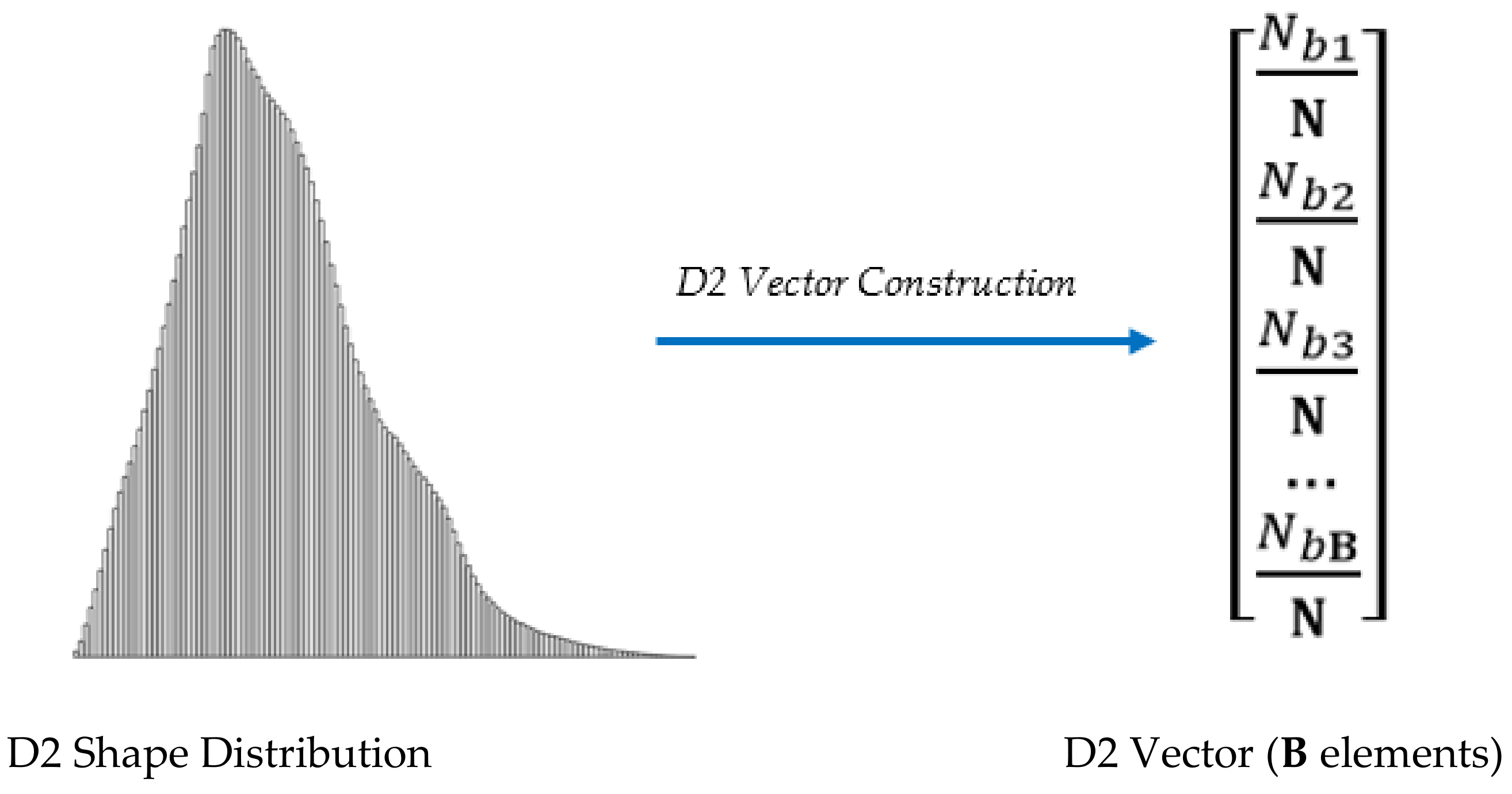

3.2. D2 Shape Distribution Computation and D2 Vector Construction

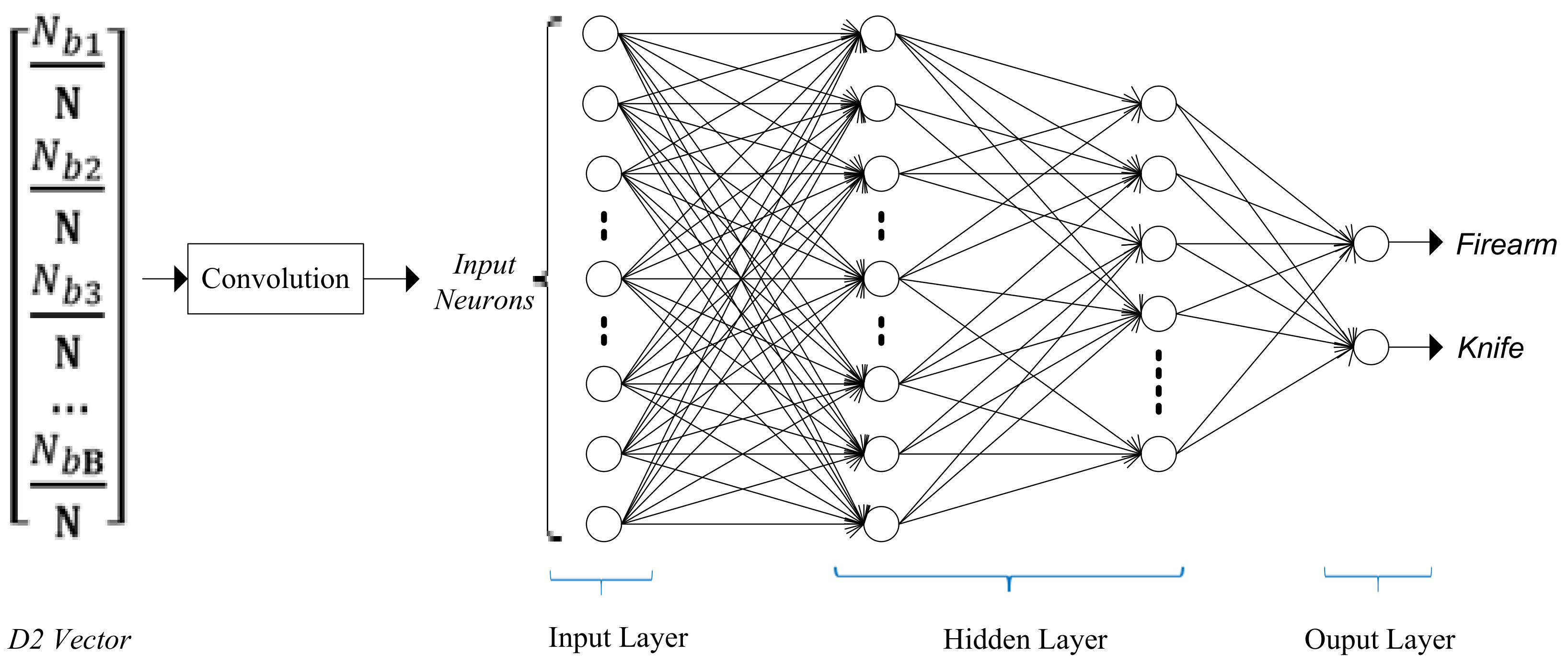

3.3. D2 Shape Distribution Training by CNNs

4. Experimental Results and Evaluation

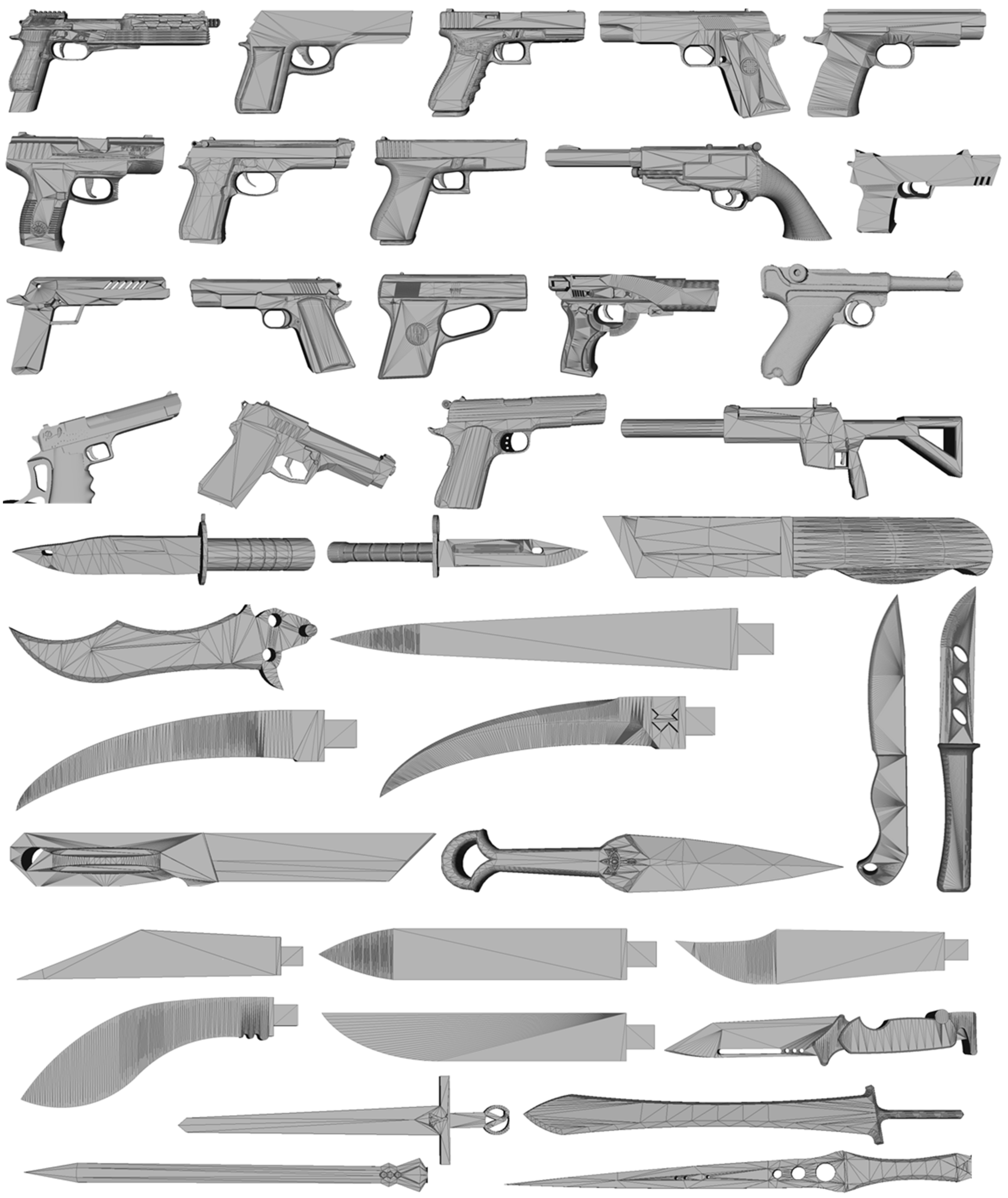

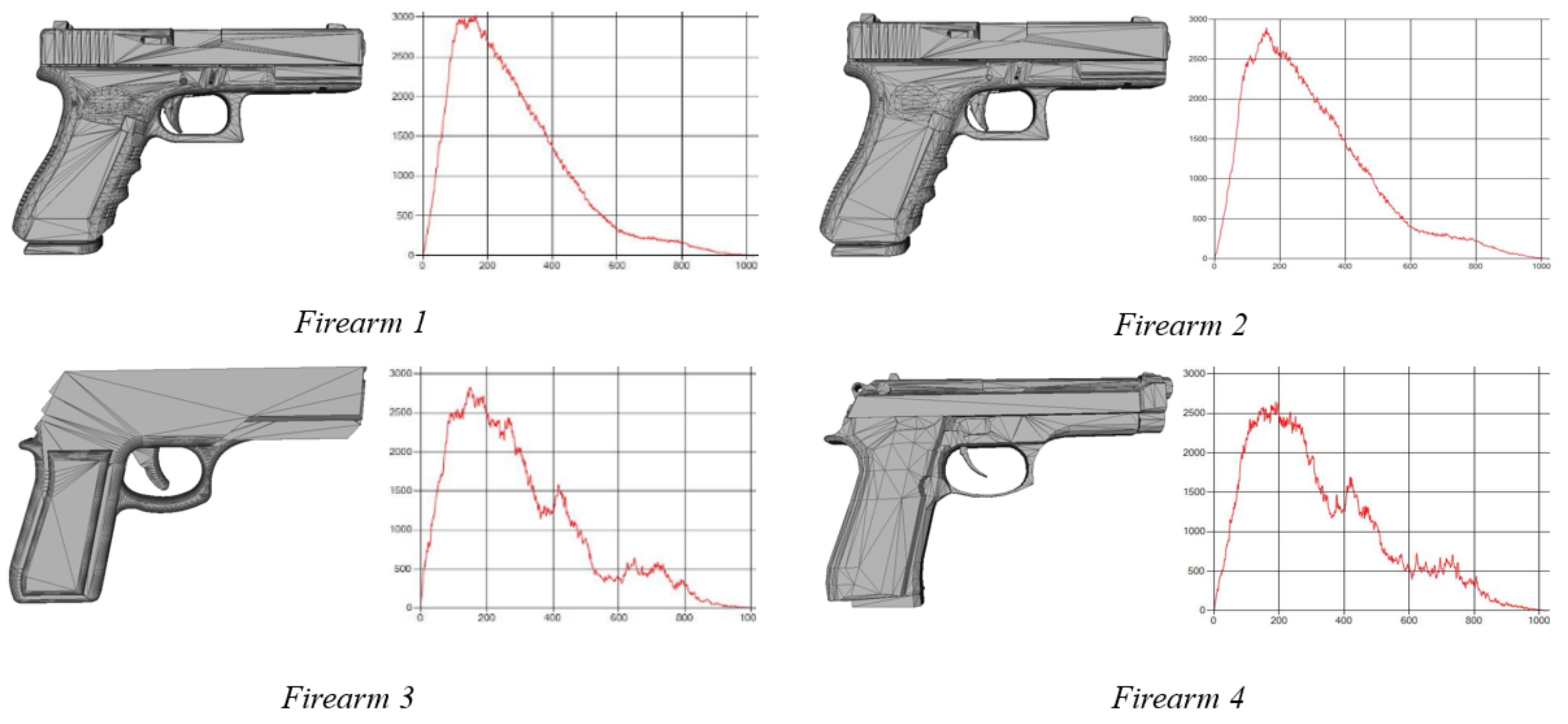

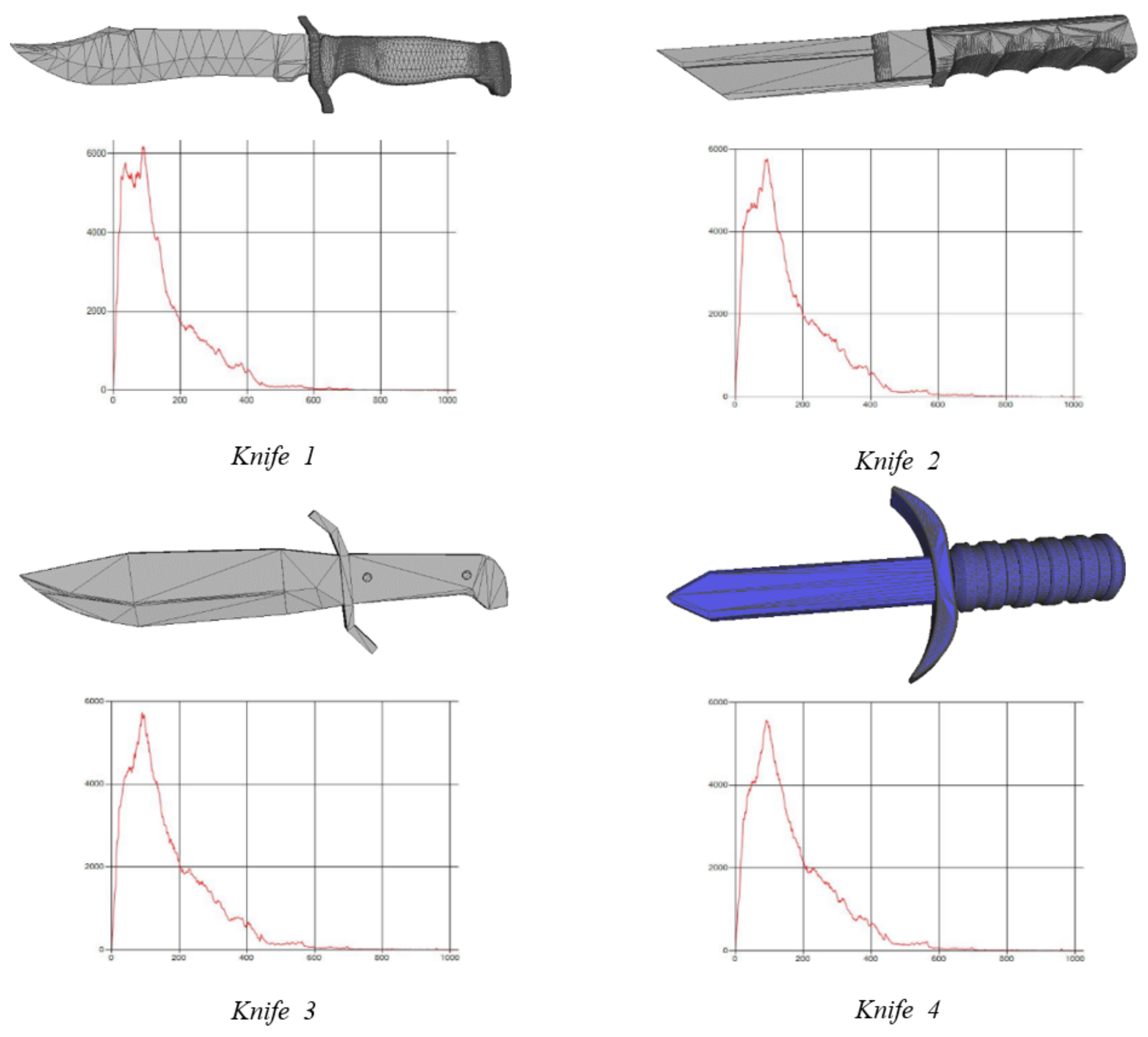

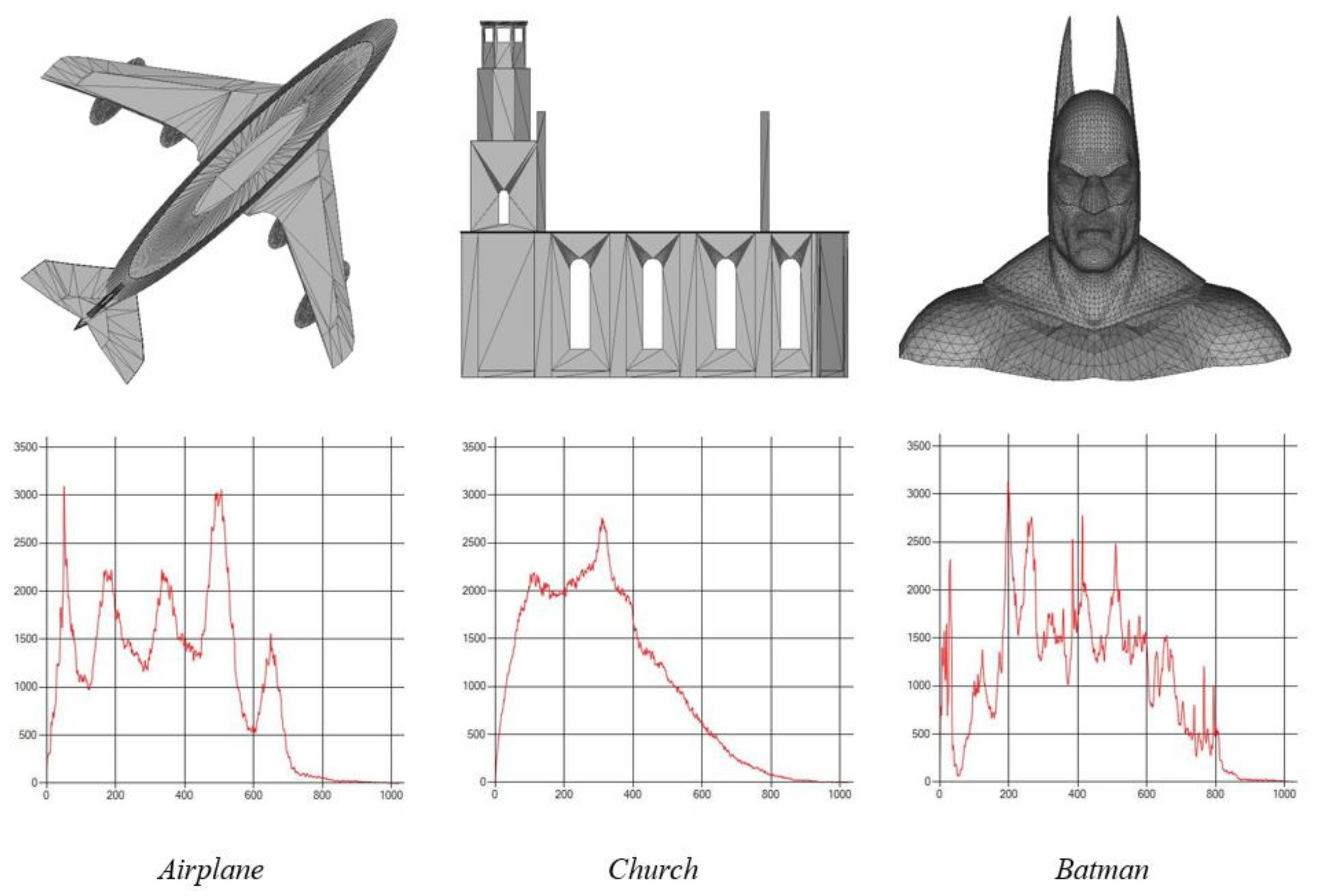

4.1. Experimental Results of D2 Shape Distribution for 3D Triangle Mesh

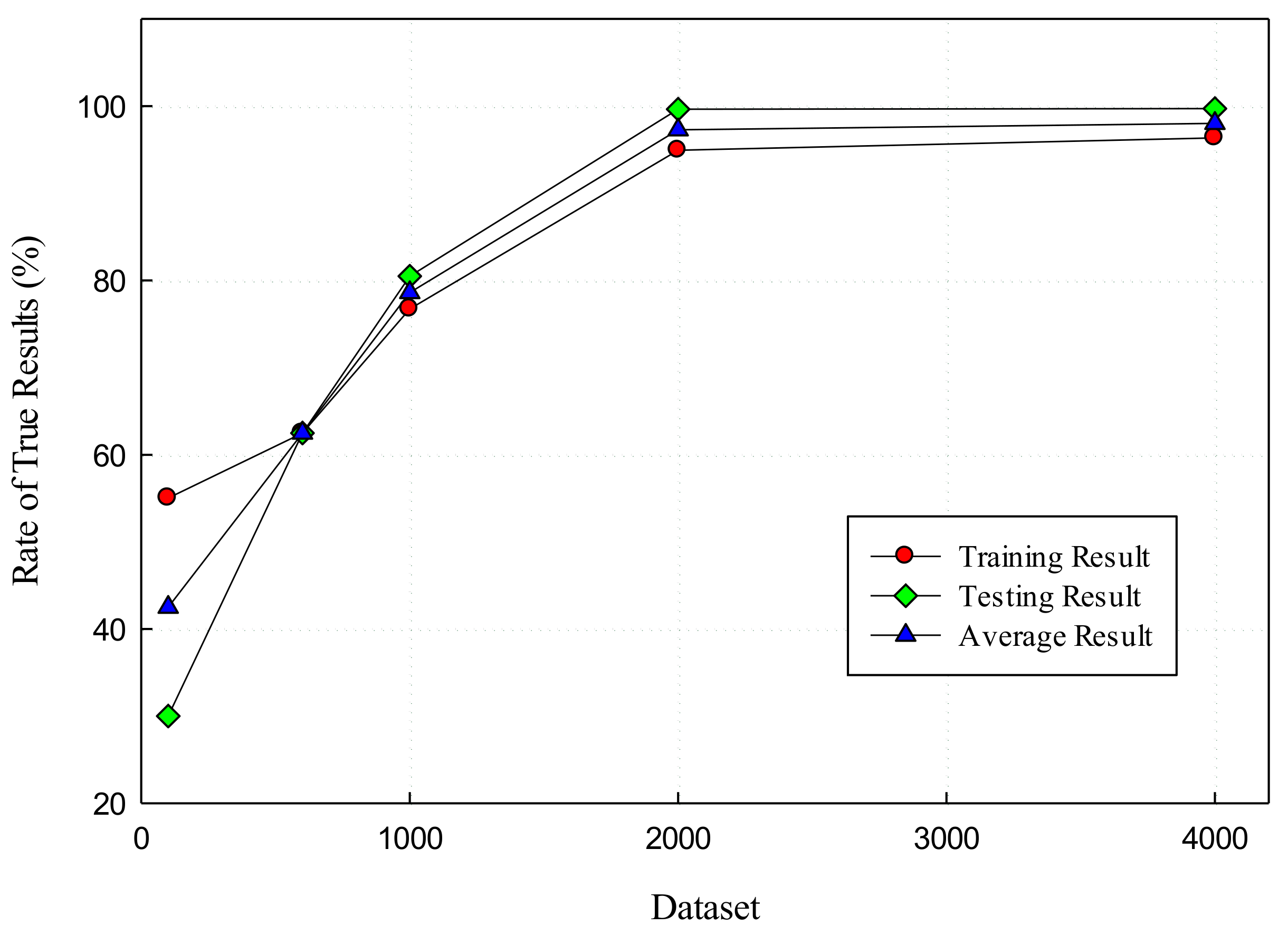

4.2. Training, Testing Results with CNNs

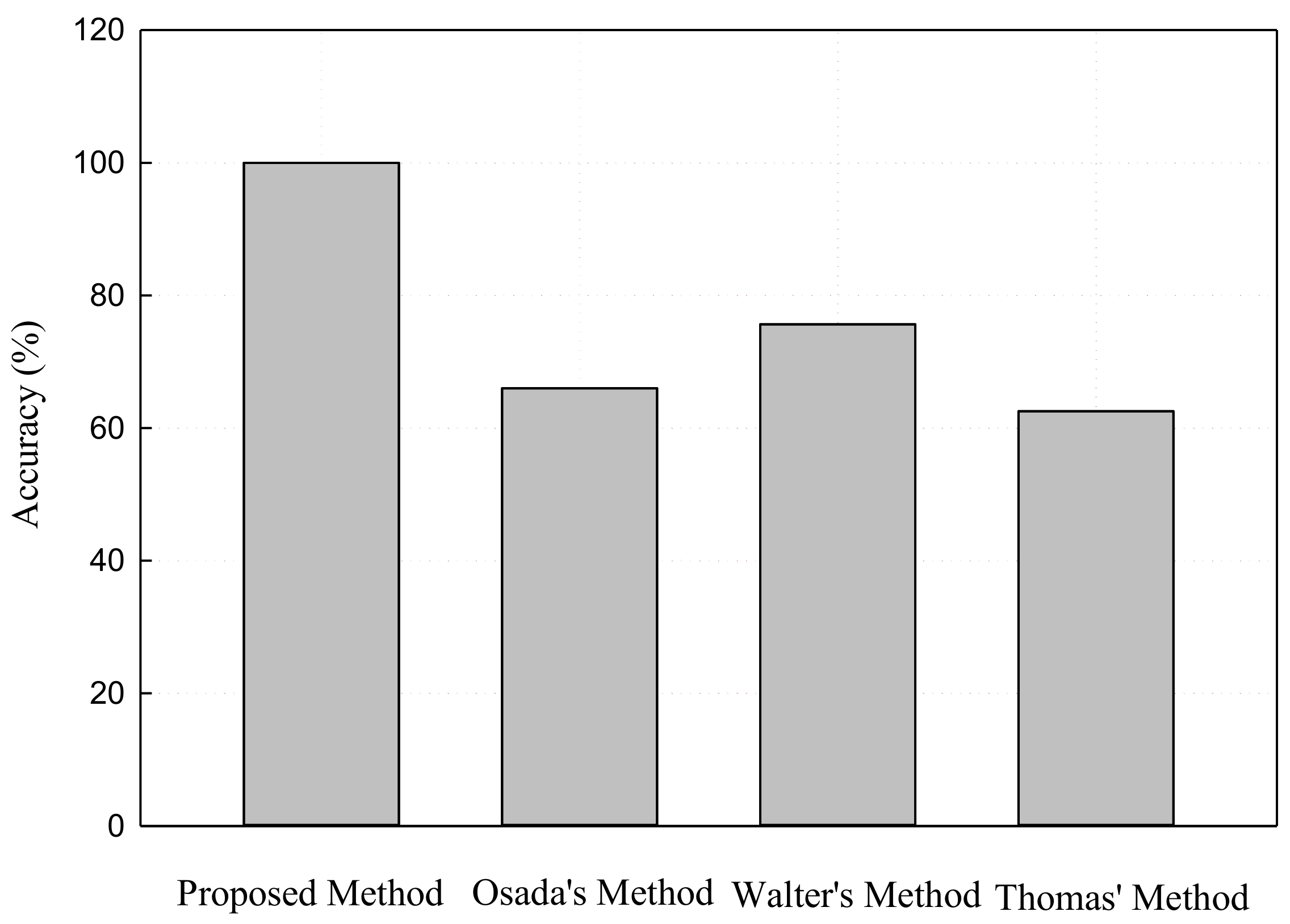

4.3. Performance Evaluation

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- How 3D Printing Works: The Vision, Innovation and Technologies Behind Inkjet 3D Printing; 3D Systems: Rock Hill, SC, USA, 2012; Available online: http://www.officeproductnews.net/sites/default/files/3dWP_0.pdf (accessed on 6 March 2018).

- Lidia, H.A.; Paul, A.J.; Jose, R.J.; Will, H.; Vincent, C.A. White Paper: 3D Printing; Atos: Irving, TX, USA, 2014; Available online: https://atos.net/wp-content/uploads/2016/06/01052014-AscentWhitePaper-3dPrinting-1.pdf (accessed on 6 March 2018).

- Chandra, T.A.; Patel, M.; Singh, P.K. Study of 3D Printing and its Application. Int. J. Res. Adv. Comput. Sci. Eng. 2016, 2, 9–12.

- Learn How 3D Printing Is Useful Everywhere. Available online: https://www.sculpteo.com/en/applications/ (accessed on 6 March 2018).

- The Terrifying Reality of 3D-Printed Guns: Devices that ANYONE Can Make Are Quickly Evolving into Deadly Weapons. Available online: http://www.dailymail.co.uk/sciencetech/article-2630473/The-terrifying-reality-3D-printed-guns-Devices-ANYONE-make-quickly-evolving-deadly-weapons.html (accessed on 6 March 2018).

- World Security Alert: Dutch Students Sneak Potentially Deadly 3D Printed Knives into Courtroom. Available online: https://3dprint.com/60530/3d-printed-knives-security/ (accessed on 6 March 2018).

- 3D Printed AR-15 Test Fire. Available online: https://www.youtube.com/watch?v=zz8mlB1hZ-o (accessed on 6 March 2018).

- Songbird 3D Printed Pistol. Available online: https://www.youtube.com/watch?v=1jFjtE7bzeU (accessed on 6 March 2018).

- Gustav, L. Why Should We Care about 3D-Printing and What Are Potential Security Implications; The Geneva Centre for Security Policy: Geneva, Switzerland, 2014.

- Walther, G. Printing Insecurity? The Security Implications of 3D-Printing of Weapons. Sci. Eng. Ethics 2015, 21, 1435–1445.

- Hossein, P.; Omid, S.; Mansour, N. A Novel Weapon Detection Algorithm in X-ray Dual-Energy Images Based on Connected Component Analysis and Shape Features. Aust. J. Basic Appl. Sci. 2011, 5, 300–307.

- Mohamed, R.; Rajashankari, R. Detection of Concealed Weapons in X-Ray Images Using Fuzzy KNN. Int. J. Comput. Sci. Eng. Inf. Technol. 2012, 2, 187–196.

- Parande, M.; Soma, S. Concealed Weapon Detection in a Human Body by Infrared Imaging. Int. J. Sci. Res. 2013, 4, 182–188.

- Asnani, S.; Syed, D.W.; Ali, A.M. Unconcealed Gun Detection using Haar-like and HOG Features - A Comparative Approach. Asian J. Eng. Sci. Technol. 2014, 4, 34–39.

- Kumar, R.; Verma, K. A Computer Vision based Framework for Visual Gun Detection using SURF. In Proceedings of the 2015 International Conference on Electrical, Electronics, Signals, Communication and Optimization, Visakhapatnam, India, 24–25 January 2015; pp. 1–6.

- Xu, T. Multi-Sensor Concealed Weapon Detection Using the Image Fusion Approach. Master’s Thesis, University of Windsor, Windsor, ON, Canada, 2016.

- Thomas, F.; Patrick, M.; Michael, K.; Chen, J.; Alex, H.; David, D.; David, J. A Search Engine for 3D Models. ACM Trans. Graph. 2002, 5, 1–28.

- Walter, W.; Markus, V. Shape Based Depth Image to 3D Model Matching and Classification with Interview Similarity. In Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, Los Angeles, CA, USA, 25–30 September 2011; pp. 4865–4870.

- Osada, R.; Thomas, F.; Bernard, C.; David, D. Shape Distributions. ACM Trans. Graph. 2002, 21, 807–832.

- Levi, C.; Ruiz, R.; Huang, Z. A Shape Distribution for Comparing 3D Models; Springer Lecture Note 4351; Springer: Berlin, Germany, 2007; pp. 54–63.

- STL Format in 3D Printing. Available online: https://all3dp.com/what-is-stl-file-format-extension-3d-printing/ (accessed on 30 March 2018).

- The Virtual Reality Modeling Language. Available online: http://www.cacr.caltech.edu/~slombey/asci/vrml/ (accessed on 30 March 2018).

- Convolution. Available online: http://mathworld.wolfram.com/Convolution.html (accessed on 30 March 2018).

- Rojas, R. Neural Networks: Chap 7 the Back-Propagation Algorithm; Springer: Berlin, Germany, 1996.

- Artificial Neural Networks/Activation Functions. Available online: https://en.wikibooks.org/wiki/Artificial_Neural_Networks/Activation_Functions (accessed on 6 March 2018).

- Mean Squared Error. Available online: https://www.probabilitycourse.com/chapter9/9_1_5_mean_squared_error_MSE.php (accessed on 30 March 2018).

| Dataset | Number of models | Training accuracy (%) | Testing accuracy (%) | Average accuracy (%) |
|---|---|---|---|---|
| Dataset 1 | 100 | 55.00 | 30.00 | 42.50 |
| Dataset 2 | 600 | 62.50 | 62.50 | 62.50 |
| Dataset 3 | 1000 | 76.75 | 80.50 | 78.62 |
| Dataset 4 | 2000 | 94.94 | 99.64 | 97.29 |
| Dataset 5 | 4000 | 96.35 | 99.72 | 98.03 |

| Method | Used Features | Test Classes | Accuracy (%) |
|---|---|---|---|
| Thomas’ method | Text, 2D sketch, D2 Shape | Chair, Elf, Table, Cannon, Bunked | 62.54 |
| Walter’s method | Depth Image | Hammer, Mug, Airplane, Bottle, Car, Shoe | 75.66 |
| Osada’s method | D2 Shape | Chair, Animal, Cup, Car, Sofa | 66 |
| Levi’s method | Improved D2 Shape | Unknown (not shown) | Unknown |
| Our method | D2 Shape, improved CNNs | Firearm, Knife | 98.03 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Pham, G.N.; Lee, S.-H.; Kwon, O.-H.; Kwon, K.-R. Anti-3D Weapon Model Detection for Safe 3D Printing Based on Convolutional Neural Networks and D2 Shape Distribution. Symmetry 2018, 10, 90. https://doi.org/10.3390/sym10040090
