Patent Automatic Classification Based on Symmetric Hierarchical Convolution Neural Network

Abstract

1. Introduction

- To improve the efficiency of automatic patent classification (PAC), we train the model on short text that combines the patent name and the abstract.

- After pre-processing the input data with a word embedding method, we introduce a hierarchical CNN framework that builds representations of the input text for classification.

- We conduct PAC experiments on five ‘sections’ and 50 ‘classes’ using large-scale real Chinese patent datasets. The experimental results verify that our hierarchical CNN model consistently and statistically significantly outperforms other advanced alternatives on both fine-grained and coarse-grained classification.

2. Related Work

2.1. International Patent Classification System

2.2. PAC Algorithms

3. CNN Framework

4. Data Collection and Preprocessing

4.1. Data Collection

4.2. Data Pre-Processing

- The first way uses patent names as the original input for classification. We use a regular expression to extract the name and the corresponding classification number directly from a patent document, forming name–section (e.g., H) tag data.

- The second way uses patent abstracts as the original input for classification. We use a regular expression to extract the abstract and the corresponding classification number directly from a patent document, forming abstract–section (e.g., H) tag data.

- The third way uses both patent names and abstracts as the original input for classification. We use a regular expression to extract the name, the abstract and the corresponding classification number directly from a patent document, forming name and abstract–section (e.g., H)/class (e.g., H01) tag data.
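The three extraction modes above can be sketched as follows. This is a minimal illustration only: the raw layout of the patent documents is not described here, so the `Title:`, `Abstract:` and `IPC:` field labels, the regular expressions and the function name `extract_sample` are all assumptions made for the example.

```python
import re

# Hypothetical record layout: one labeled field per line (an assumption).
TITLE_RE = re.compile(r"^Title:\s*(.+)$", re.MULTILINE)
ABSTRACT_RE = re.compile(r"^Abstract:\s*(.+)$", re.MULTILINE)
# An IPC symbol starts with a section letter (A-H) followed by a two-digit class.
IPC_RE = re.compile(r"^IPC:\s*([A-H])(\d{2})", re.MULTILINE)


def extract_sample(document, mode="name+abstract"):
    """Return (text, section, ipc_class), or None if a field is missing."""
    title = TITLE_RE.search(document)
    abstract = ABSTRACT_RE.search(document)
    ipc = IPC_RE.search(document)
    if not (title and abstract and ipc):
        return None
    section = ipc.group(1)               # e.g. "H"
    ipc_class = section + ipc.group(2)   # e.g. "H01"
    if mode == "name":
        text = title.group(1)
    elif mode == "abstract":
        text = abstract.group(1)
    else:  # name and abstract combined
        text = title.group(1) + " " + abstract.group(1)
    return text.strip(), section, ipc_class


doc = """Title: Semiconductor device
Abstract: A device with a gate electrode and a channel layer.
IPC: H01L 29/78
"""
sample = extract_sample(doc)
```

Calling `extract_sample(doc, "name")` or `extract_sample(doc, "abstract")` yields the name-only and abstract-only variants with the same labels.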

- First, the original input text is segmented into words. In the experiments, word segmentation is performed with the Python version of the jieba word segmentation module.

- Second, common stop words collected from the Internet are combined with high-frequency words that have low discriminative power in the patent literature to form a stop word list. This list is used to remove stop words after word segmentation, which also reduces the feature dimension.

- Third, words are converted to vector representations using word embedding techniques. In the experiments, the open-source gensim [8] word vector training tool is used to pre-train on all experimental datasets and obtain the corresponding embeddings.
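The stop-word step can be illustrated with a small sketch. It assumes the documents have already been segmented into token lists (for example by jieba), and it picks "high-frequency, low-discrimination" words by a document-frequency threshold. That threshold, the parameter name `max_doc_ratio` and the helper names are assumptions for illustration, not the criterion used in the experiments.

```python
from collections import Counter


def build_stop_words(common_stop_words, segmented_docs, max_doc_ratio=0.8):
    """Combine a generic stop-word list with words found in most documents."""
    doc_freq = Counter()
    for tokens in segmented_docs:
        doc_freq.update(set(tokens))  # count each word once per document
    n_docs = len(segmented_docs)
    frequent = {w for w, df in doc_freq.items() if df / n_docs > max_doc_ratio}
    return set(common_stop_words) | frequent


def remove_stop_words(tokens, stop_words):
    """Drop stop words while keeping the original token order."""
    return [t for t in tokens if t not in stop_words]


# Toy, already-segmented documents (assumed output of a word segmenter).
docs = [
    ["一种", "半导体", "器件", "的", "制造", "方法"],
    ["一种", "图像", "识别", "的", "方法"],
    ["一种", "电池", "管理", "系统"],
]
stop_words = build_stop_words({"的"}, docs, max_doc_ratio=0.9)
cleaned = [remove_stop_words(d, stop_words) for d in docs]
```

The cleaned token lists are the kind of input a word vector trainer expects (one list of tokens per document), so they could then be passed to an embedding tool such as gensim for pre-training.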

5. Experimental Results and Analysis

5.1. Datasets and Evaluation Metrics

5.1.1. Datasets

- Dataset_ is used to examine the performance of the algorithms on section classification when only patent names are used as input.

- Dataset_ is used to examine the performance of the algorithms on section classification when only patent abstracts are used as input.

- S_Dataset_ is used to examine the performance of the algorithms on section classification when the patent name and abstract are combined as input.

- C_Dataset_ is used to examine the performance of the algorithms on class classification when the patent name and abstract are combined as input.

5.1.2. Evaluation Metrics

5.2. Experimental Results on Coarse-Grained Classification

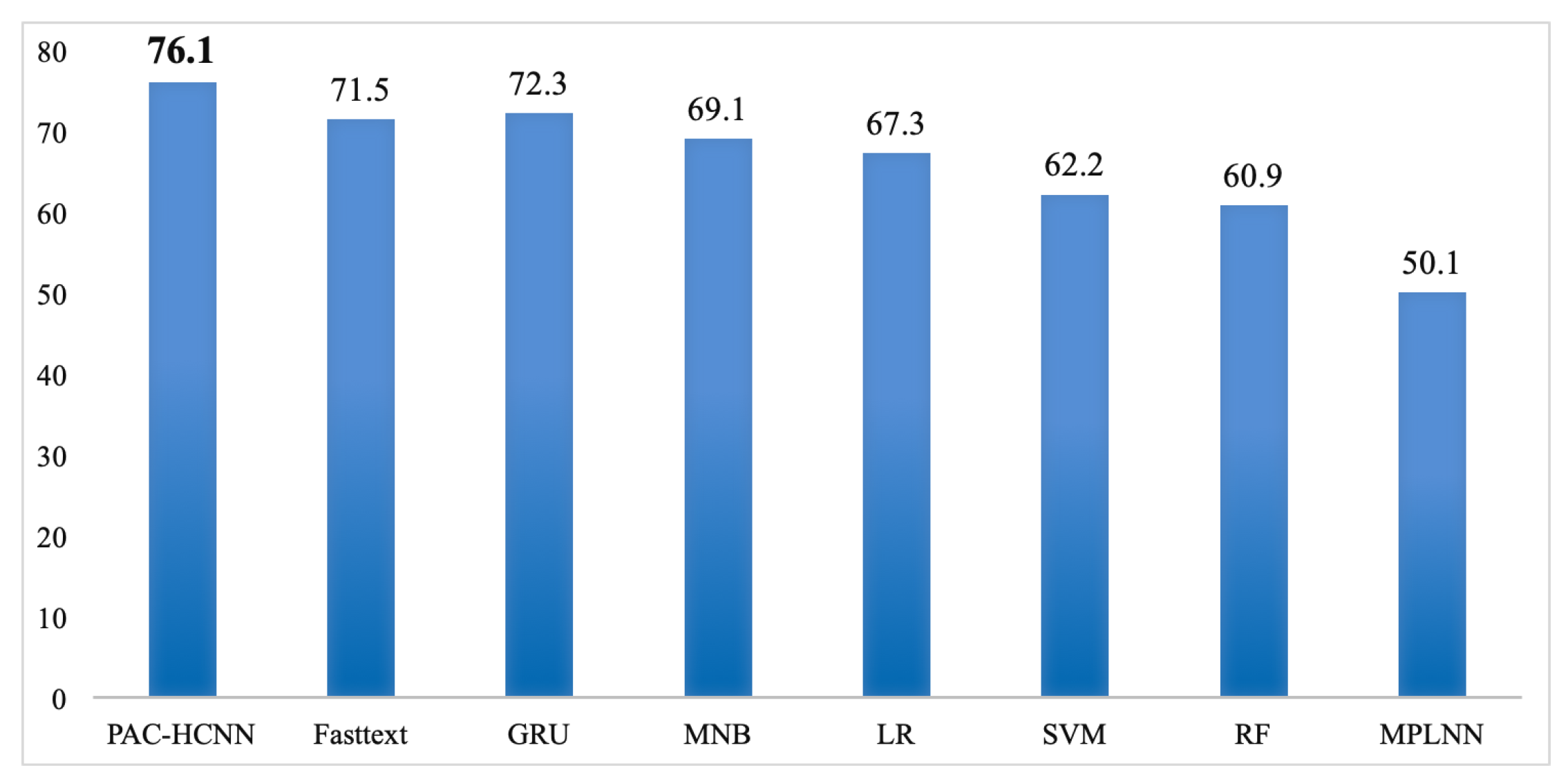

5.3. Comparing with Other Models

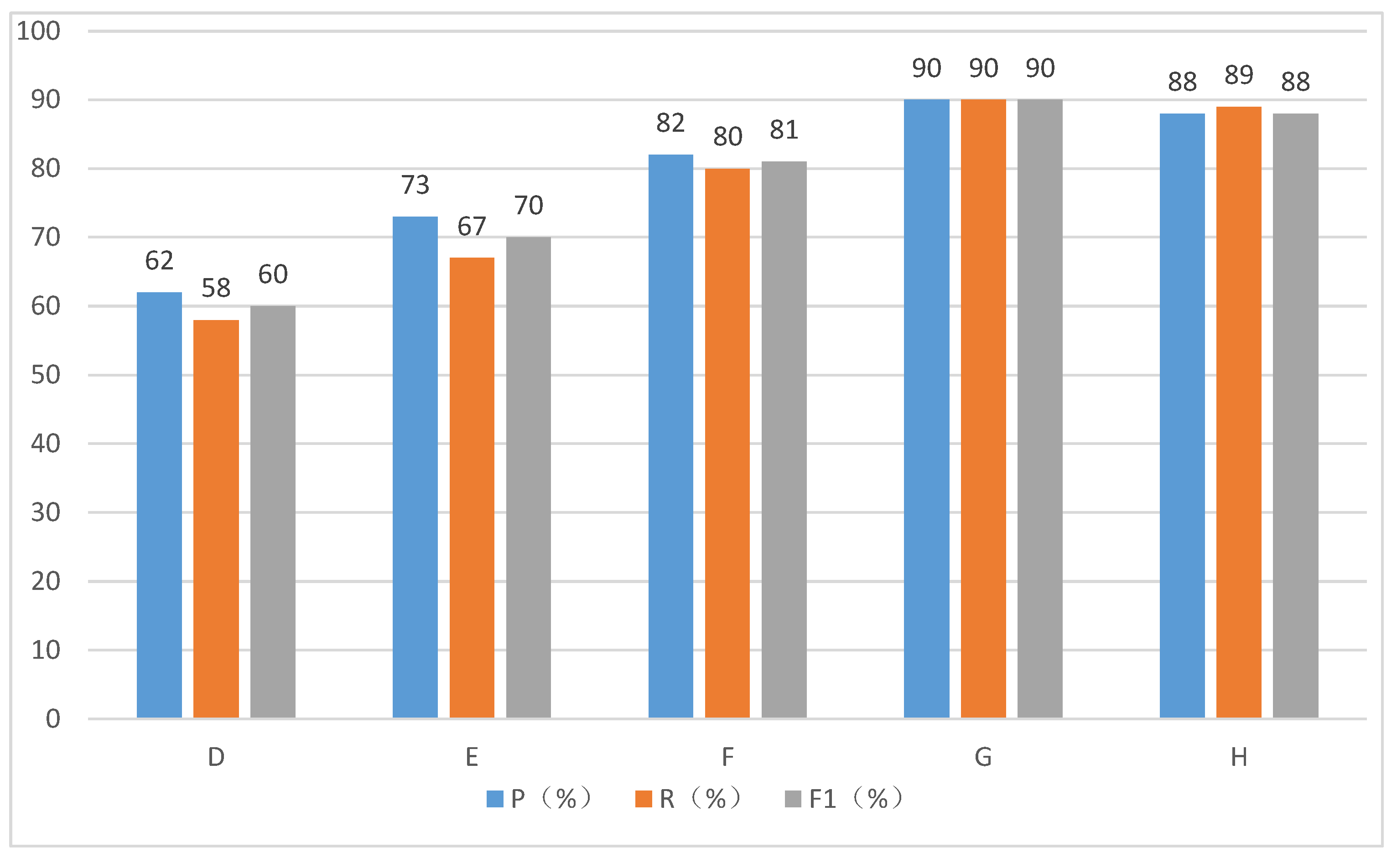

5.4. Performance Variation on Different Sections

5.5. Computation Cost

6. Discussion

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Neuhäusler, P.; Rothengatter, O.; Frietsch, R. Patent Applications-Structures, Trends and Recent Developments 2018. Technical Report, Studien Zum Deutschen Innovationssystem. 2019. Available online: https://www.econstor.eu/bitstream/10419/194274/1/1067672206.pdf (accessed on 15 January 2020).

- Meng, L.; He, Y.; Li, Y. Research of Semantic Role Labeling and Application in Patent Knowledge Extraction. In Proceedings of the First International Workshop on Patent Mining And Its Applications (IPaMin 2014) Co-Located with Konvens 2014, Hildesheim, Germany, 6–7 October 2014.

- Roh, T.; Jeong, Y.; Yoon, B. Developing a Methodology of Structuring and Layering Technological Information in Patent Documents through Natural Language Processing. Sustainability 2017, 9, 2117.

- Wu, J.; Chang, P.; Tsao, C.; Fan, C. A patent quality analysis and classification system using self-organizing maps with support vector machine. Appl. Soft Comput. 2016, 41, 305–316.

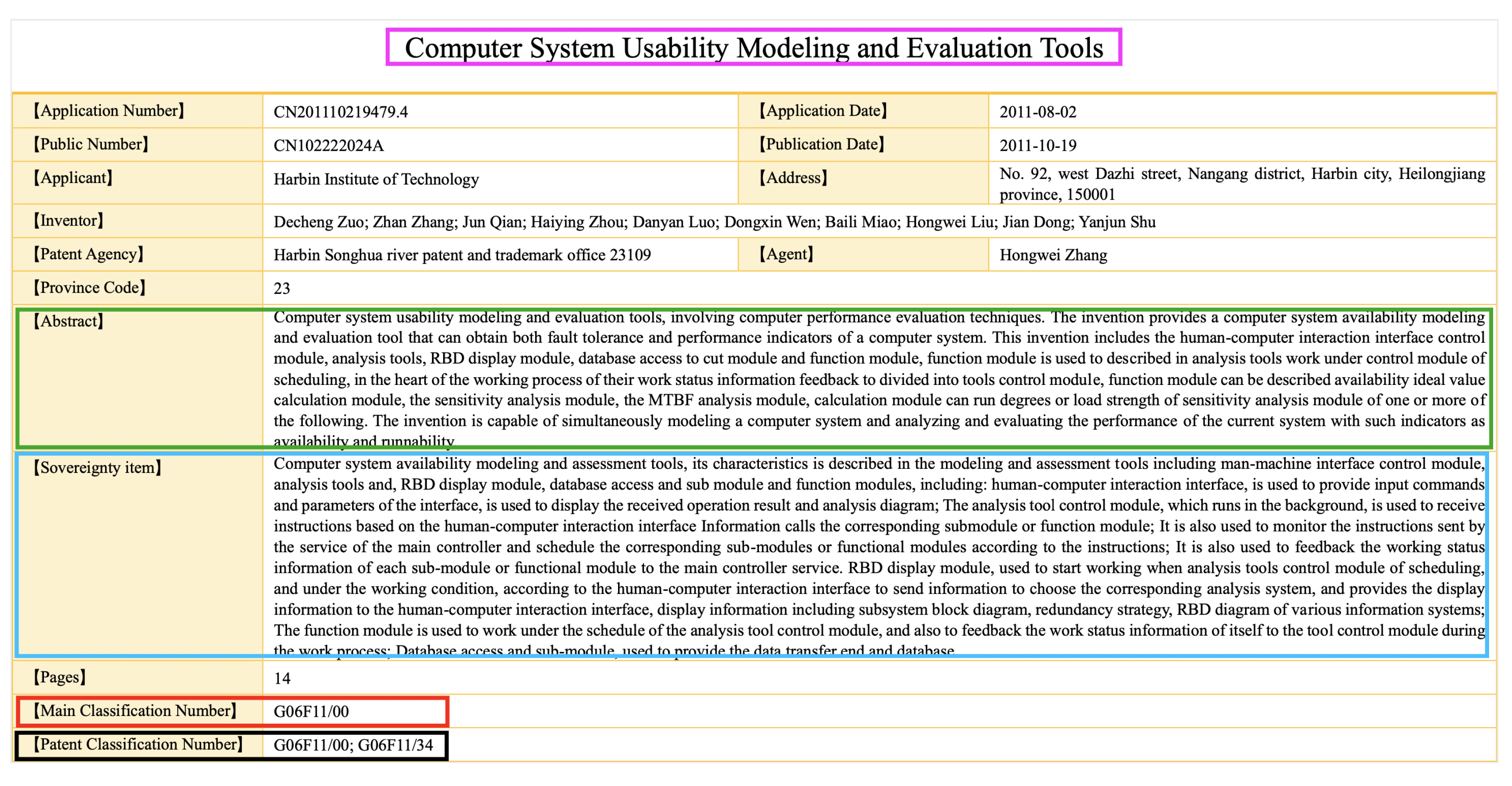

- Zuo, D.; Zhang, Z.; Qian, J.; Zhou, H.; Luo, D.; Wen, D.; Miao, B.; Liu, H.; Dong, J.; Shu, Y. Computer System Usability Modeling and Evaluation Tool. Chinese CN102222024A, 19 October 2011. Available online: http://dbpub.cnki.net/grid2008/dbpub/detail.aspx?dbcode=SCPD&dbname=SCPD2011&filename=CN102222024A&uid=WEEvREcwSlJHSldRa1FhcTdnTnhXM281cEdHa0o5bTQ1ZHlUd3YrcTFwND0=$9A4hF_YAuvQ5obgVAqNKPCYcEjKensW4IQMovwHtwkF4VYPoHbKxJw!! (accessed on 15 January 2020).

- Liao, J.; Jia, G.; Zhang, Y. The rapid automatic categorization of patent based on abstract text. Inf. Stud. Theory Appl. 2016, 39, 103–105.

- Xu, D. Fast Automatic Classification Method of Patents Based on Claims. J. Libr. Inf. Sci. 2018, 3, 72–76.

- Heuer, H. Text Comparison Using Word Vector Representations and Dimensionality Reduction. CoRR 2016. Available online: http://xxx.lanl.gov/abs/1607.00534 (accessed on 15 January 2020).

- Gehring, J.; Auli, M.; Grangier, D.; Yarats, D.; Dauphin, Y.N. Convolutional Sequence to Sequence Learning. In Proceedings of the 34th International Conference on Machine Learning, ICML 2017, Sydney, NSW, Australia, 6–11 August 2017; pp. 1243–1252.

- Dauphin, Y.N.; Fan, A.; Auli, M.; Grangier, D. Language Modeling with Gated Convolutional Networks. In Proceedings of the 34th International Conference on Machine Learning, ICML 2017, Sydney, NSW, Australia, 6–11 August 2017; pp. 933–941.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2016, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Li, P. The Predicament and Outlet of International Patent Classification. China Invent. Pat. 2009, 8, 75–79.

- Jie, H.; Li, S.; Hu, J.; Yang, G. A Hierarchical Feature Extraction Model for Multi-Label Mechanical Patent Classification. Sustainability 2018, 10, 219.

- Hu, Z.; Fang, X.; Wen, Y.; Zhang, X.; Liang, T. Study on Automatic Classification of Patents Oriented to TRIZ. Data Anal. Knowl. Discov. 2015, 31, 66–74.

- He, C.; Loh, H.T. Grouping of TRIZ Inventive Principles to facilitate automatic patent classification. Expert Syst. Appl. 2008, 34, 788–795.

- Jia, S.; Liu, C.; Sun, L.; Liu, X.; Peng, T. Patent Automatic Classification Based on Multi-Feature Multi-Classifier Integration. Data Anal. Knowl. Discov. 2017, 1, 76–84.

- Chu, X.; Ma, C.; Li, J.; Lu, B.; Utiyama, M.; Isahara, H. Large-scale patent classification with min-max modular support vector machines. In Proceedings of the International Joint Conference on Neural Networks, IJCNN 2008, Part of the IEEE World Congress on Computational Intelligence, WCCI 2008, Hong Kong, China, 1–6 June 2008; pp. 3973–3980.

- Wu, C.; Ken, Y.; Huang, T. Patent classification system using a new hybrid genetic algorithm support vector machine. Appl. Soft Comput. 2010, 10, 1164–1177.

- Kim, Y. Convolutional Neural Networks for Sentence Classification. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing, EMNLP 2014, A Meeting of SIGDAT, a Special Interest Group of the ACL, Doha, Qatar, 25–29 October 2014; pp. 1746–1751.

- Lee, J.Y.; Dernoncourt, F. Sequential Short-Text Classification with Recurrent and Convolutional Neural Networks. In Proceedings of the NAACL HLT 2016, The 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, San Diego, CA, USA, 12–17 June 2016; pp. 515–520.

- Dai, L.; Jiang, K. Chinese Text Classification Based on FastText. Comput. Mod. 2018, 5, 35–40.

- Sun, L.; Cao, B.; Wang, J.; Srisa-an, W.; Yu, P.; Leow, A.D.; Checkoway, S. KOLLECTOR: Detecting Fraudulent Activities on Mobile Devices Using Deep Learning. IEEE Trans. Mobile Comput. 2020.

- Abadi, M.; Barham, P.; Chen, J.; Chen, Z.; Davis, A.; Dean, J.; Devin, M.; Ghemawat, S.; Irving, G.; Isard, M.; et al. TensorFlow: A System for Large-Scale Machine Learning. In Proceedings of the 12th USENIX Symposium on Operating Systems Design and Implementation, OSDI 2016, Savannah, GA, USA, 2–4 November 2016; pp. 265–283.

- Zhang, Y.; Fang, Y.; Xiao, W. Deep keyphrase generation with a convolutional sequence to sequence model. In Proceedings of the 4th International Conference on Systems and Informatics, ICSAI 2017, Hangzhou, China, 11–13 November 2017; pp. 1477–1485.

- Myers, D.W.; McGuffee, J. Choosing scrapy. J. Comput. Sci. Coll. 2015, 31, 83–89.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; Vanderplas, J.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

| Dataset Name | #Train | #Val | #Test | #Label |
|---|---|---|---|---|
| Dataset_ | 23,565 | 4418 | 1474 | 5 |
| Dataset_ | 23,565 | 4418 | 1474 | 5 |
| S_Dataset_ | 23,565 | 4418 | 1474 | 5 |
| C_Dataset_ | 23,565 | 4418 | 1474 | 50 |

| Parameter Name | Value | Parameter Name | Value |
|---|---|---|---|
| Num_Classes | 5 | Kernel_size | 5 |
| Num_filters | 128 | Vocab_size | 5000 |
| Learning_rate | 1 × | Hidden_dim | 128 |
| Batch_size | 256 | Num_epochs | 20 |

| Dataset Name | Average P (%) | Average R (%) | Average F_1 (%) |
|---|---|---|---|
| Dataset_ | 80.3 | 82.1 | 81.2 |
| Dataset_ | 83.7 | 84.1 | 83.9 |
| S_Dataset_ | 85.7 | 87.8 | 86.7 |
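As a quick sanity check on the table above, the reported average F_1 values agree with the standard harmonic-mean definition F_1 = 2PR/(P + R) applied to the averaged P and R. The sketch below only recomputes the numbers from the table. It does not establish how the averages were actually formed (for example, whether per-section F_1 scores were averaged instead), and the function name `f1_score` is chosen for illustration.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (average P, average R, reported average F_1) rows, in table order, in percent.
rows = [
    (80.3, 82.1, 81.2),
    (83.7, 84.1, 83.9),
    (85.7, 87.8, 86.7),
]
recomputed = [round(f1_score(p, r), 1) for p, r, _ in rows]
reported = [f1 for _, _, f1 in rows]
```

Here `recomputed` matches `reported` to one decimal place for all three rows.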

| Model | Times |
|---|---|
| PAC-HCNN | 1873 |
| GRU | 2837 |
| Fasttext | 1674 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhu, H.; He, C.; Fang, Y.; Ge, B.; Xing, M.; Xiao, W. Patent Automatic Classification Based on Symmetric Hierarchical Convolution Neural Network. Symmetry 2020, 12, 186. https://doi.org/10.3390/sym12020186
