Comparative Study of Random Forest and Support Vector Machine Algorithms in Mineral Prospectivity Mapping with Limited Training Data

Abstract

1. Introduction

2. Machine Learning Algorithms

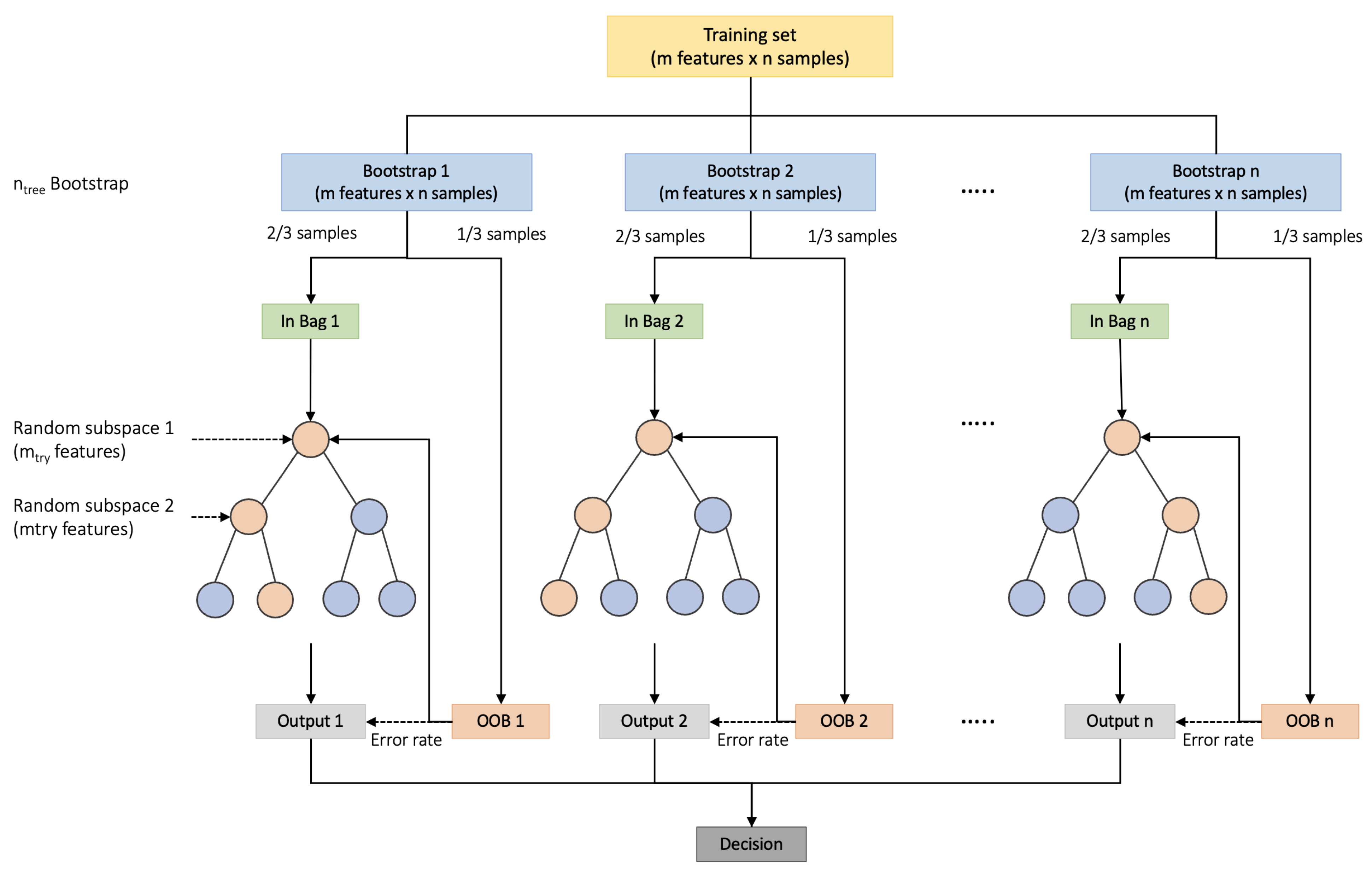

2.1. Random Forest

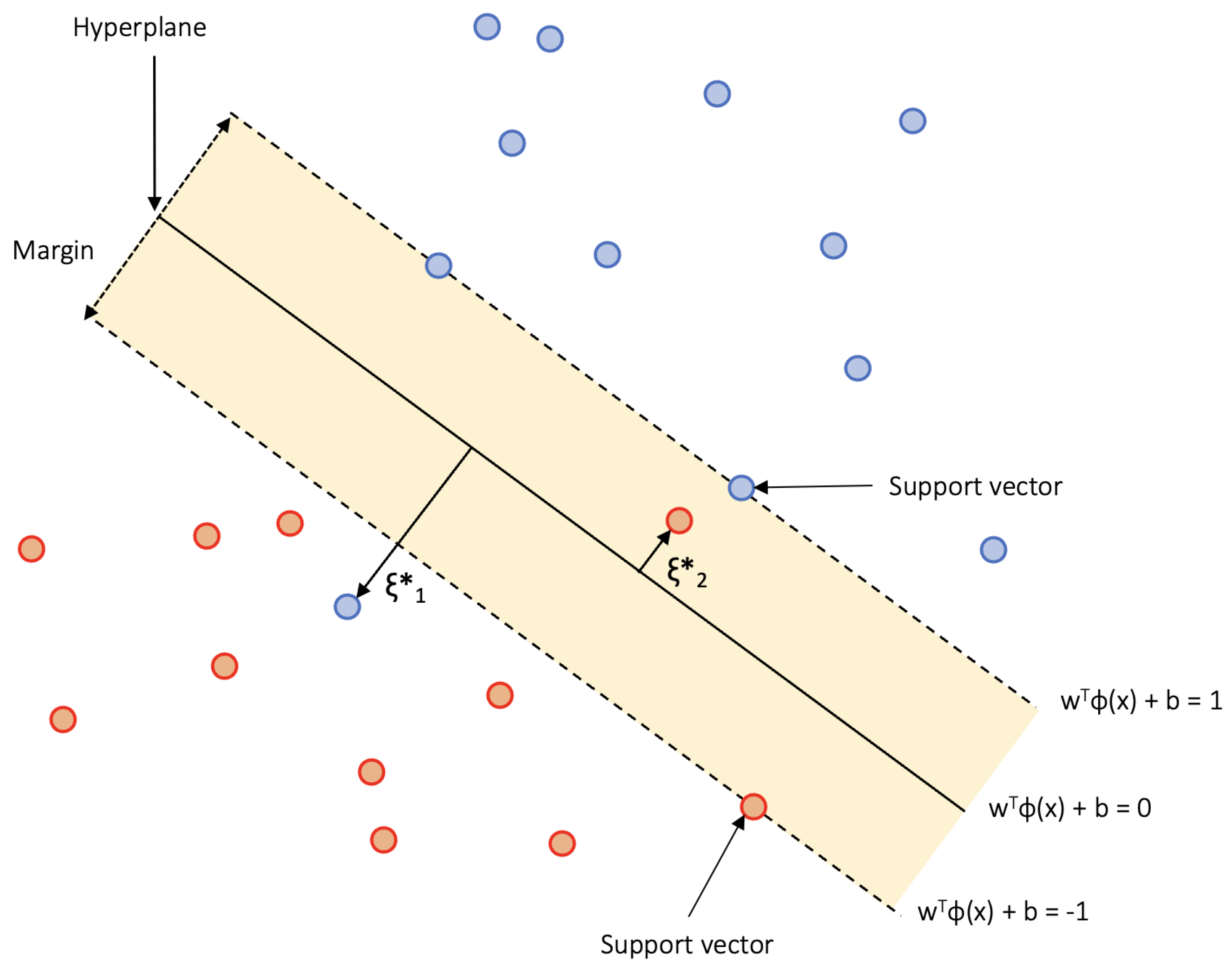

2.2. Support Vector Machine

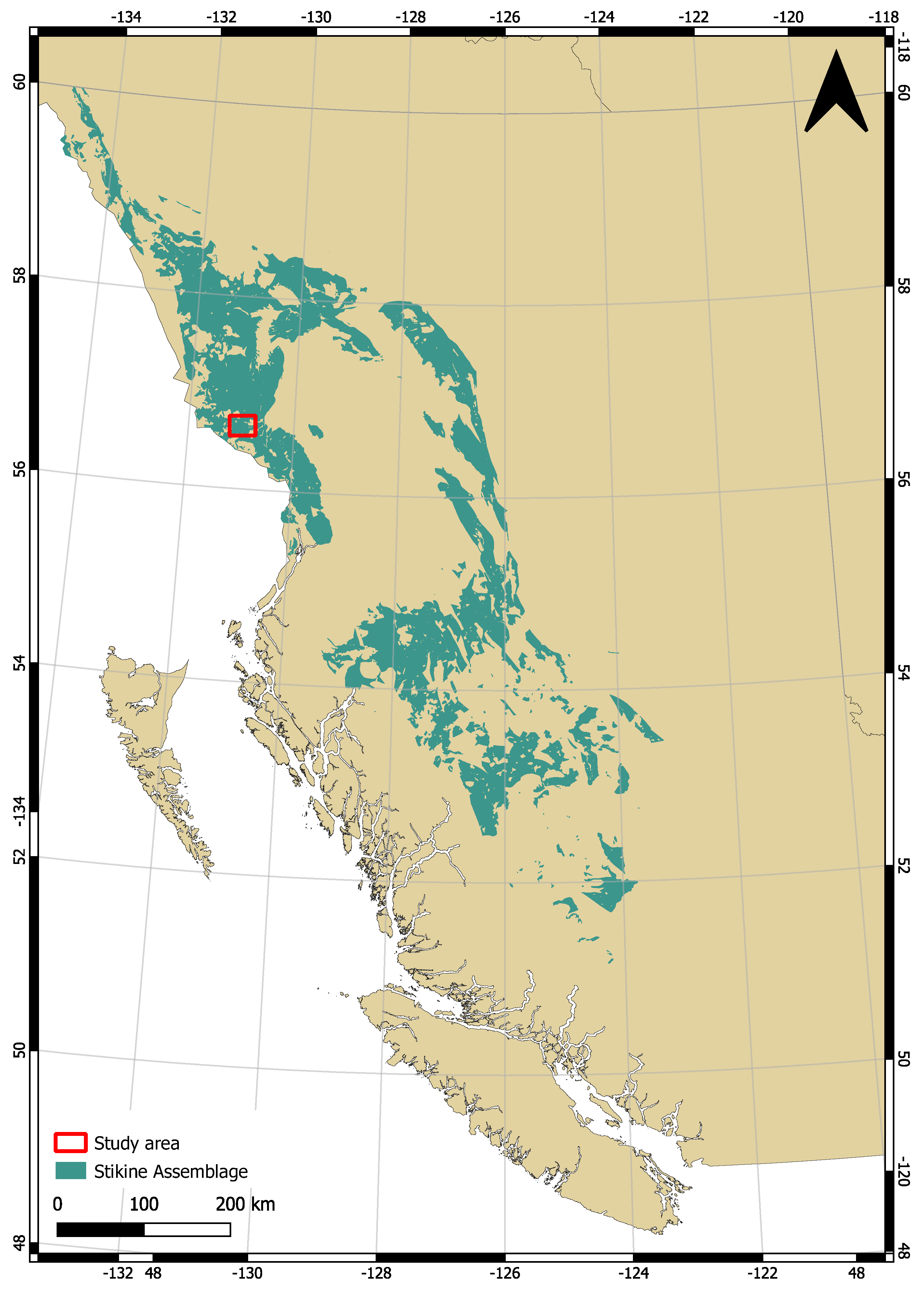

3. Study Area

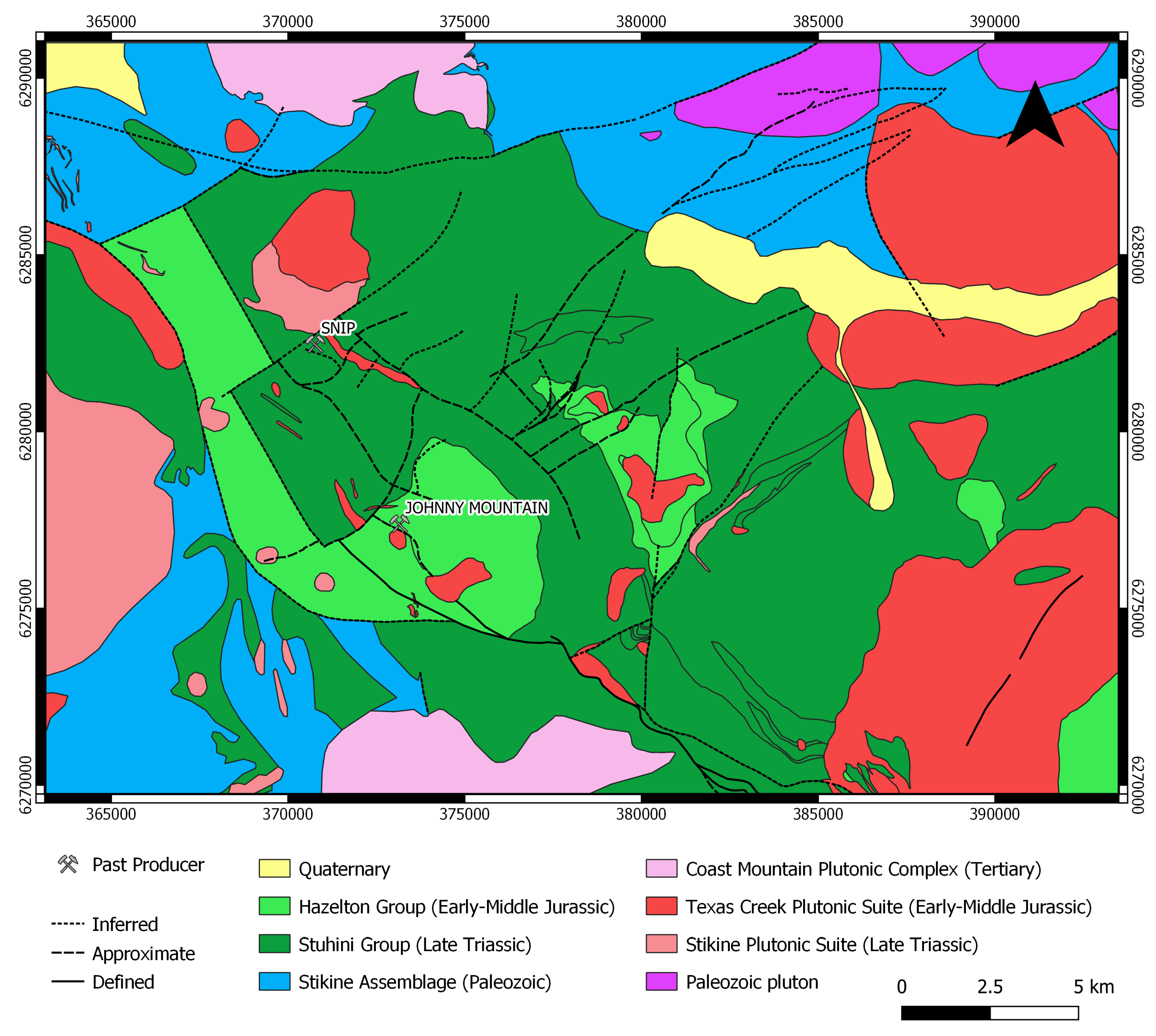

3.1. Geologic Setting

3.2. Conceptual Exploration Model

- The existence of potential Au source rocks.

- The presence of a favorable geological setting (also referred to as traps).

- The existence of pathways along which mineralizing fluids could migrate.

4. Methodology

4.1. Data Preparation

4.1.1. Training Dataset

- Au must be listed as the primary commodity;

- The occurrence must be categorized as an epithermal deposit (i.e., vein type);

- The occurrence must be an advanced exploration project (i.e., prospects and past producers).
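The three criteria above act as a simple filter over occurrence records. A minimal sketch follows. It assumes a hypothetical record layout: `commodities` is ordered with the primary commodity first, `deposit_type` is free text, and `status` is a single value. These names are placeholders, not the actual MINFILE schema. The accepted status values are taken from the STATUS column of Appendix A, which lists developed prospects alongside prospects and past producers.

```python
from dataclasses import dataclass

# Status values treated as "advanced exploration projects". Taken from the
# STATUS column of Appendix A; how the authors applied the rule is an assumption.
ADVANCED_STATUSES = {"Prospect", "Developed Prospect", "Past Producer"}


@dataclass
class Occurrence:
    # Hypothetical record layout; real MINFILE field names may differ.
    minfile_id: str
    name: str
    commodities: list[str]  # ordered, primary commodity first
    deposit_type: str
    status: str


def is_training_deposit(occ: Occurrence) -> bool:
    """True if the occurrence meets all three selection criteria."""
    au_primary = bool(occ.commodities) and occ.commodities[0] == "Au"
    # Simplification: any deposit type mentioning "epithermal" is accepted.
    epithermal = "epithermal" in occ.deposit_type.lower()
    advanced = occ.status in ADVANCED_STATUSES
    return au_primary and epithermal and advanced


def select_training_deposits(records: list[Occurrence]) -> list[Occurrence]:
    """Keep only records that qualify as positive (deposit) training samples."""
    return [occ for occ in records if is_training_deposit(occ)]


if __name__ == "__main__":
    # Hypothetical records for illustration only.
    records = [
        Occurrence("X-001", "Example A", ["Au", "Ag"], "Epithermal vein", "Prospect"),
        Occurrence("X-002", "Example B", ["Cu", "Au"], "Epithermal vein", "Prospect"),
        Occurrence("X-003", "Example C", ["Au"], "Epithermal vein", "Showing"),
    ]
    for occ in select_training_deposits(records):
        print(occ.minfile_id, occ.name)
```

Only "Example A" passes. "Example B" fails because Au is not its primary commodity, and "Example C" fails because a showing is not an advanced project.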

4.1.2. Input Dataset

4.1.3. Evidential Features

4.2. Model Training

4.3. Model Evaluation

5. Results

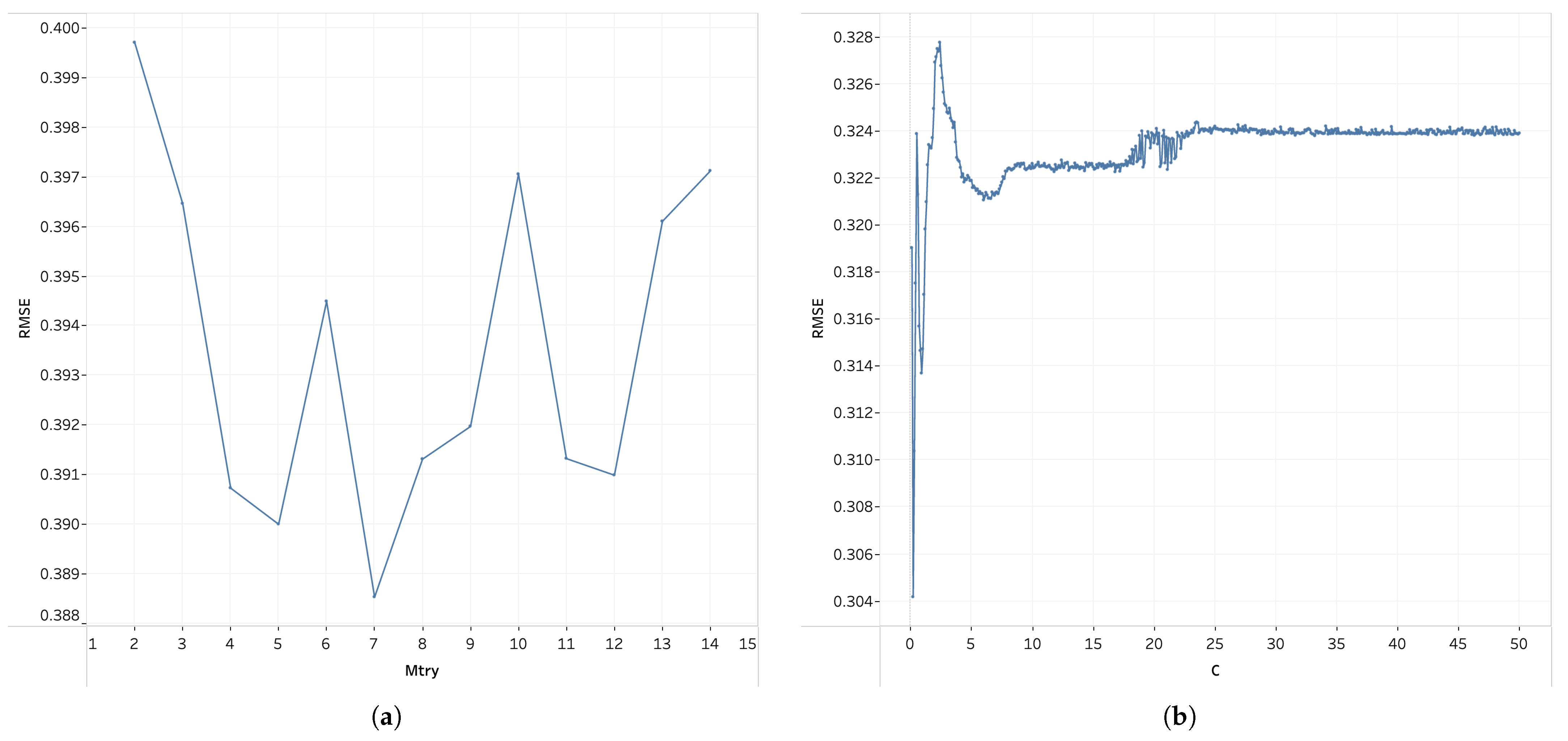

5.1. Sensitivity Analysis

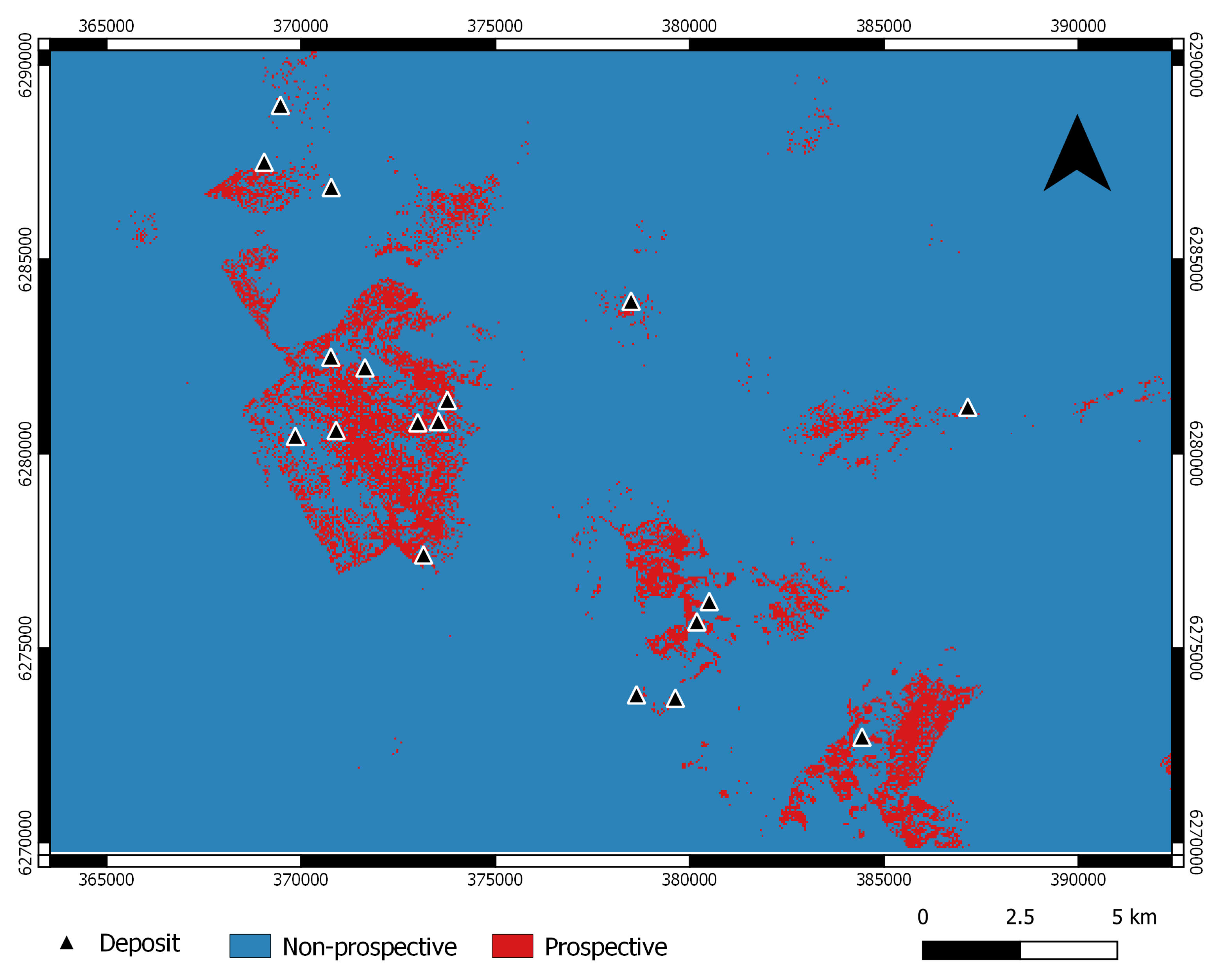

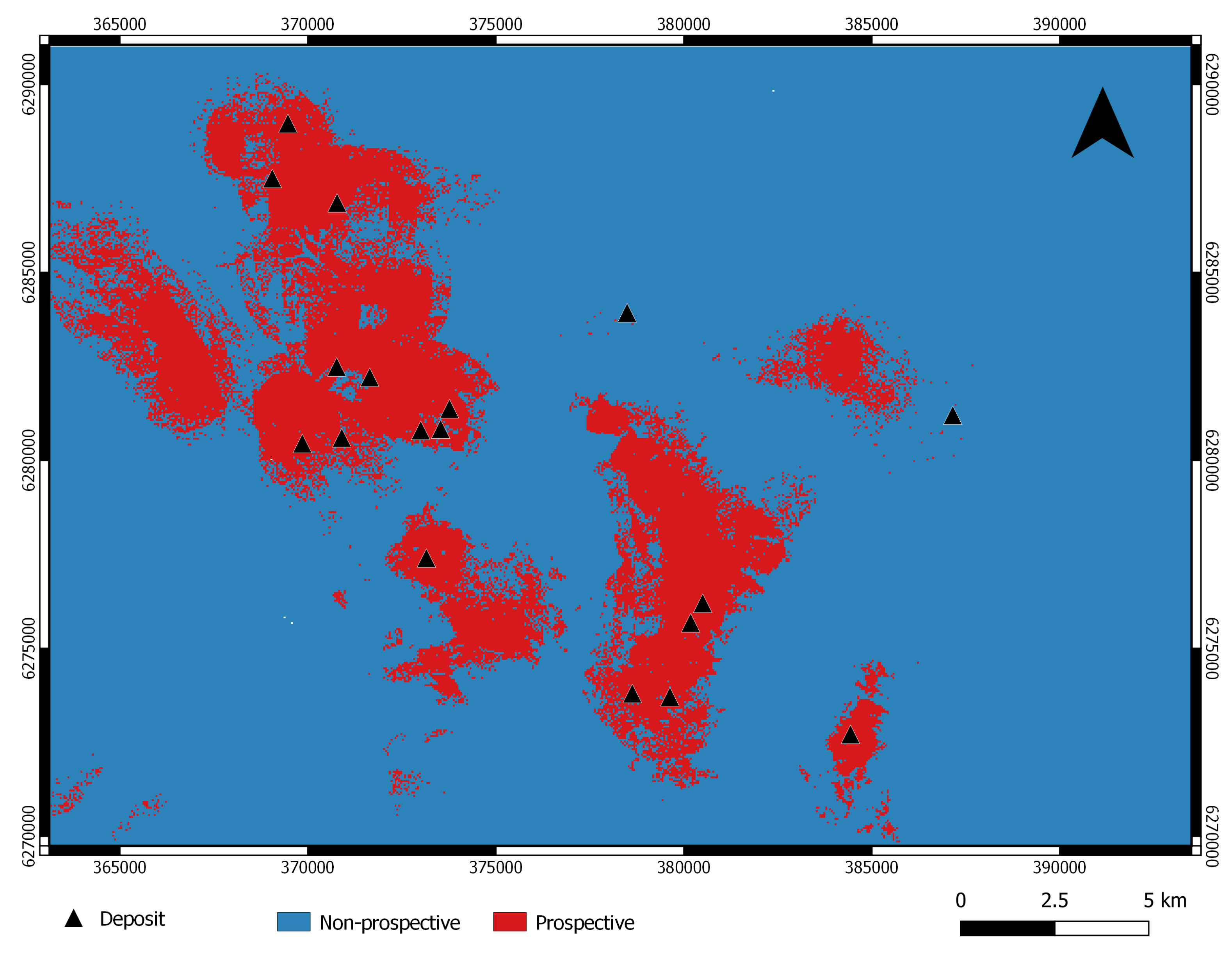

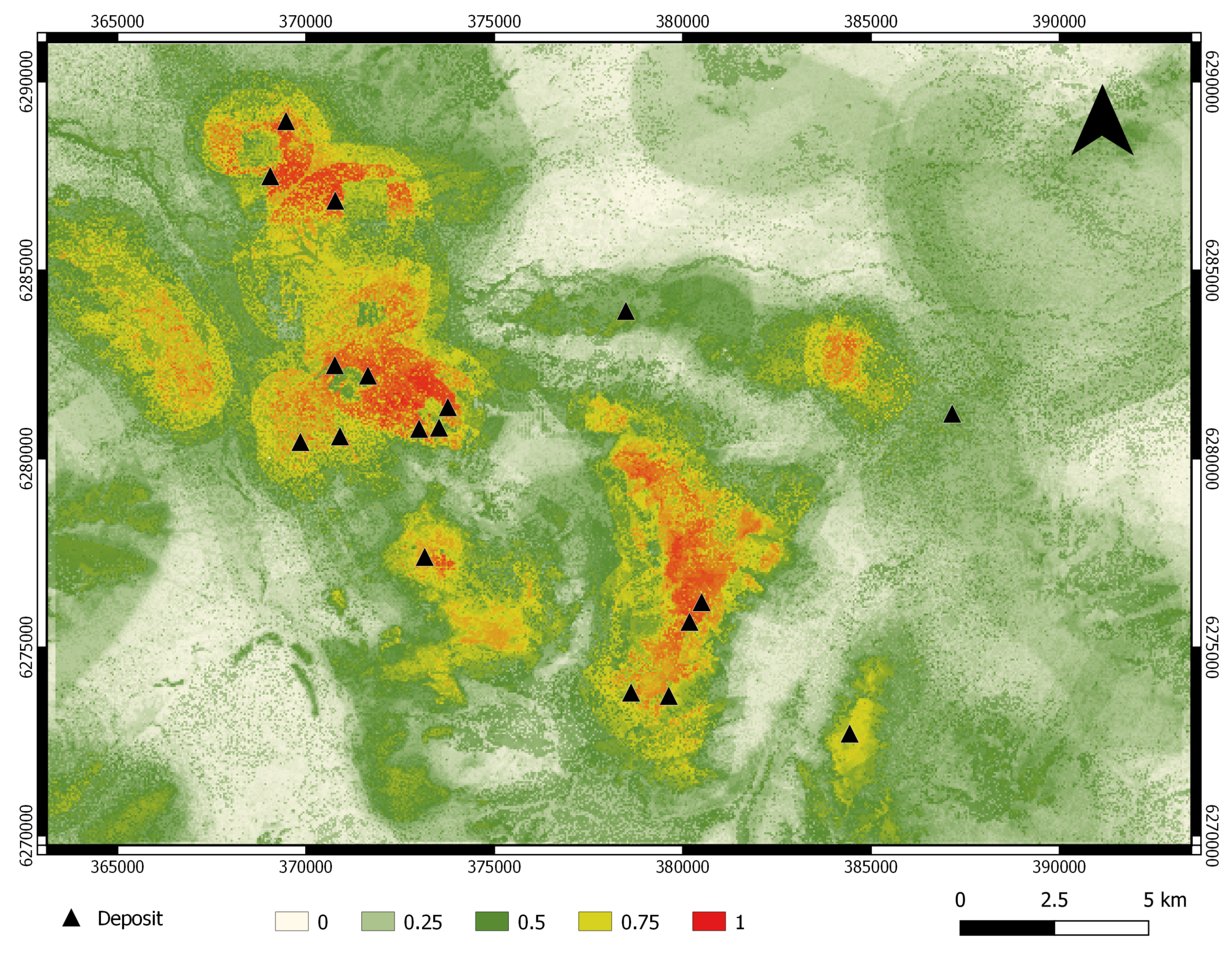

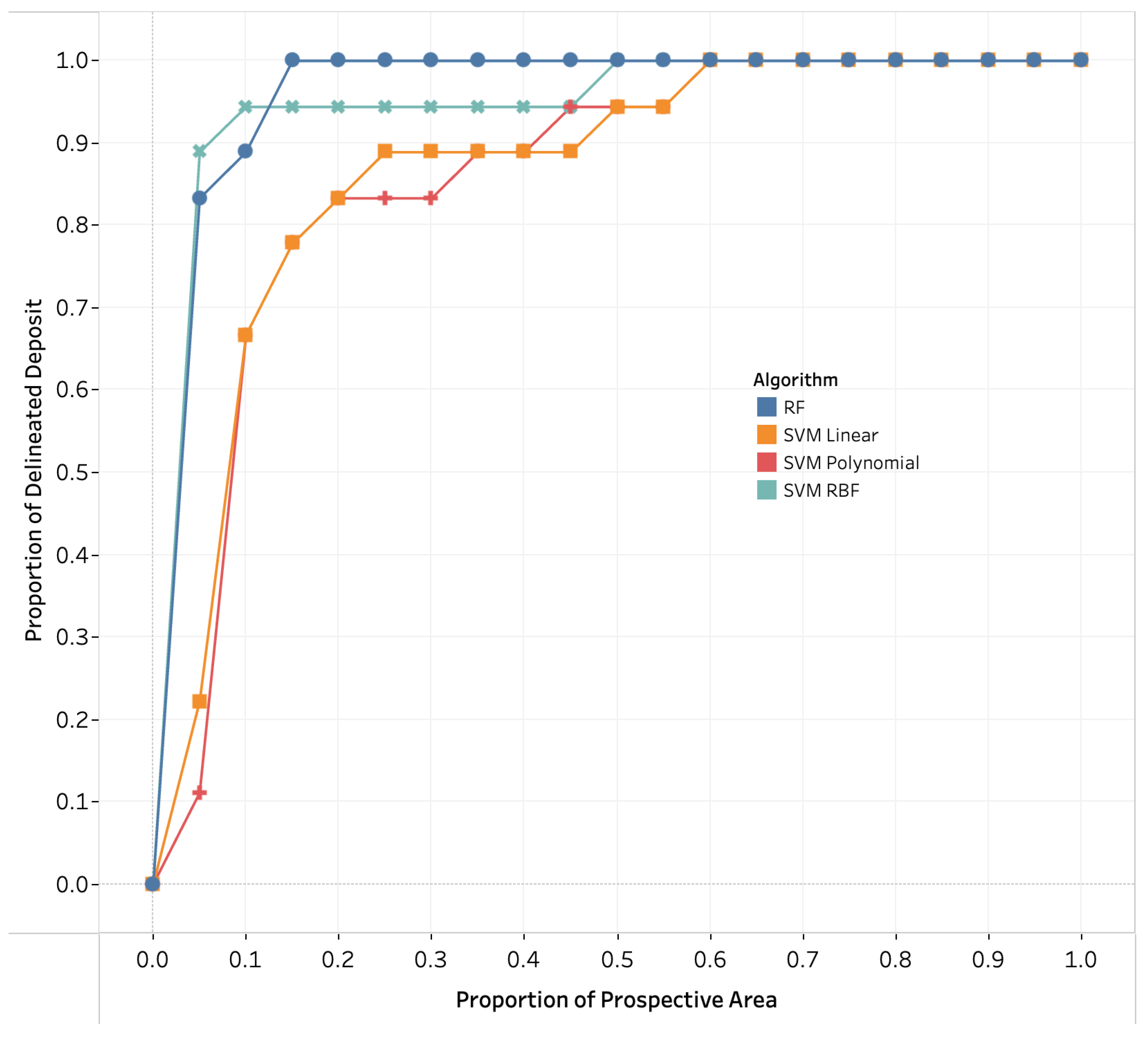

5.2. Performance Assessment of the Predictive Models

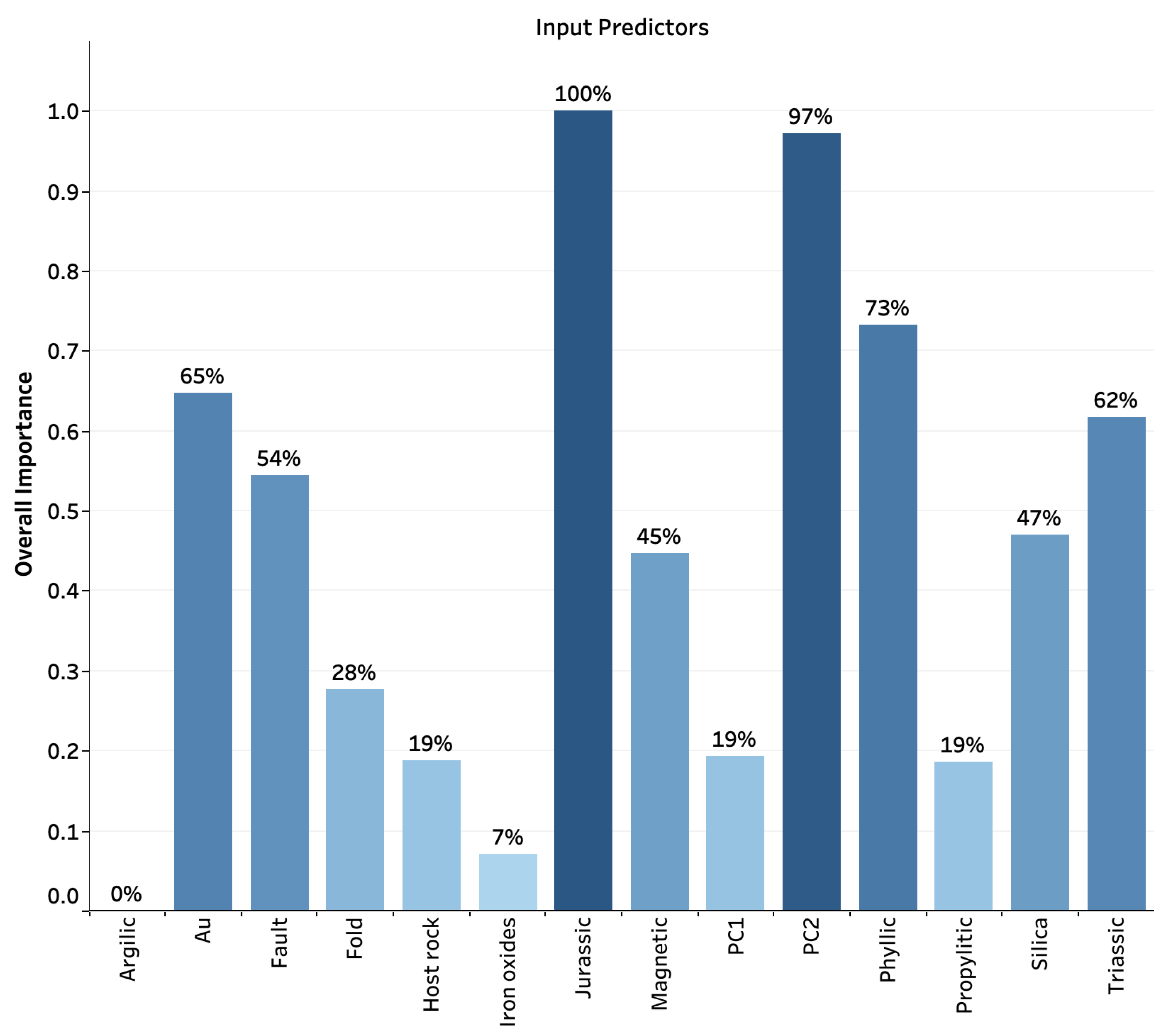

5.3. Feature Relative Importance

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

| MINFILE # | NAME | STATUS | UTM NORTH (m) | UTM EAST (m) |
|---|---|---|---|---|
| 104B 077 | BRONSON SLOPE | Developed Prospect | 6,282,211 | 371,642 |
| 104B 089 | SNIP NORTH-EAST ZONE | Prospect | 6,286,850 | 370,775 |
| 104B 107 | JOHNNY MOUNTAIN | Past Producer | 6,277,401 | 373,149 |
| 104B 113 | INEL | Developed Prospect | 6,275,679 | 380,178 |
| 104B 116 | TAMI (BLUE RIBBON) | Prospect | 6,272,714 | 384,430 |
| 104B 138 | KHYBER PASS | Prospect | 6,273,715 | 379,627 |
| 104B 204 | WARATAH 6 | Prospect | 6,283,926 | 378,489 |
| 104B 250 | SNIP | Past Producer | 6,282,486 | 370,764 |
| 104B 264 | C3 (REG) | Prospect | 6,280,600 | 370,900 |
| 104B 300 | BRONSON | Prospect | 6,281,374 | 373,763 |
| 104B 356 | GORGE | Prospect | 6,287,500 | 369,050 |
| 104B 357 | GREGOR | Prospect | 6,288,962 | 369,467 |
| 104B 537 | MYSTERY | Prospect | 6,281,200 | 387,150 |
| 104B 557 | AK | Prospect | 6,276,200 | 380,500 |
| 104B 563 | CE CONTACT | Prospect | 6,280,800 | 373,000 |
| 104B 567 | SMC | Prospect | 6,280,450 | 369,850 |
| 104B 571 | CE | Prospect | 6,280,829 | 373,529 |
| 104B 685 | KHYBER WEST | Prospect | 6,273,802 | 378,627 |
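Some of the deposits listed above lie close together. This matters when the study area is rasterized, because two deposits that fall in the same cell collapse into a single training sample. As a quick check, the sketch below finds the closest pair among the Appendix A coordinates. It assumes both coordinate columns are in metres within a single UTM zone. It is an illustrative calculation, not a step reported by the authors, and the cell size used in the study is not given in this excerpt.

```python
import math
from itertools import combinations

# (name, UTM north, UTM east) in metres, transcribed from Appendix A.
DEPOSITS = [
    ("BRONSON SLOPE", 6282211, 371642),
    ("SNIP NORTH-EAST ZONE", 6286850, 370775),
    ("JOHNNY MOUNTAIN", 6277401, 373149),
    ("INEL", 6275679, 380178),
    ("TAMI (BLUE RIBBON)", 6272714, 384430),
    ("KHYBER PASS", 6273715, 379627),
    ("WARATAH 6", 6283926, 378489),
    ("SNIP", 6282486, 370764),
    ("C3 (REG)", 6280600, 370900),
    ("BRONSON", 6281374, 373763),
    ("GORGE", 6287500, 369050),
    ("GREGOR", 6288962, 369467),
    ("MYSTERY", 6281200, 387150),
    ("AK", 6276200, 380500),
    ("CE CONTACT", 6280800, 373000),
    ("SMC", 6280450, 369850),
    ("CE", 6280829, 373529),
    ("KHYBER WEST", 6273802, 378627),
]


def closest_pair(points):
    """Return (name_a, name_b, distance_m) for the two nearest points (brute force)."""
    best = None
    for (na, ya, xa), (nb, yb, xb) in combinations(points, 2):
        d = math.hypot(xa - xb, ya - yb)  # planar Euclidean distance
        if best is None or d < best[2]:
            best = (na, nb, d)
    return best


if __name__ == "__main__":
    a, b, d = closest_pair(DEPOSITS)
    print(f"{a} - {b}: {d:.0f} m")
```

On these coordinates the closest pair is CE CONTACT and CE, about 530 m apart (529 m east-west). Only cells wider than that could place both in one cell, depending on grid alignment.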

Appendix B

| MINFILE # | NAME | STATUS | UTM NORTH (m) | UTM EAST (m) |
|---|---|---|---|---|
| 104B 005 | CRAIG RIVER | Showing | 6,276,177 | 366,697 |
| 104B 205 | HANDEL | Showing | 6,281,905 | 376,693 |
| 104B 206 | WOLVERINE | Showing | 6,277,250 | 377,150 |
| 104B 256 | WOLVERINE (INEL) | Showing | 6,277,063 | 383,766 |
| 104B 268 | HANGOVER TRENCH | Showing | 6,275,185 | 369,738 |
| 104B 272 | DAN 2 | Showing | 6,271,824 | 375,475 |
| 104B 292 | GIM (ZONE 1) | Showing | 6,281,770 | 383,605 |
| 104B 305 | MILL | Showing | 6,272,879 | 363,417 |
| 104B 306 | NORTH CREEK | Showing | 6,275,031 | 368,709 |
| 104B 324 | IAN 4 | Showing | 6,286,725 | 379,485 |
| 104B 326 | CAM 9 | Showing | 6,279,635 | 391,709 |
| 104B 327 | CAM SOUTH | Showing | 6,279,579 | 392,696 |
| 104B 331 | IAN 8 | Showing | 6,286,038 | 383,655 |
| 104B 362 | KIRK MAGNETITE | Showing | 6,276,565 | 389,635 |
| 104B 368 | ELMER | Showing | 6,275,780 | 391,286 |
| 104B 377 | ROCK AND ROLL | Developed Prospect | 6,288,261 | 363,286 |
| 104B 416 | IAN 6 SOUTH | Showing | 6,286,900 | 382,200 |
| 104B 500 | KRL-FORREST | Showing | 6,288,950 | 393,400 |
| 104B 536 | ANDY | Showing | 6,278,300 | 385,825 |


| Proxy | Exploration Criteria | Note |
|---|---|---|
| Distance to the Texas Creek Plutonic Suite | Au source | This is the key spatial proxy for the potential source of Au. Distance from these intrusions is a critical factor influencing the formation and distribution of Au deposits. |
| Favorable host rocks of the Hazelton Group or the Stuhini Group | Geological Setting | These rocks form part of the favorable geological setting for Au deposits. Their presence and location can also influence the concentration and accessibility of Au. |
| Distance to faults | Mineralization Pathway | Faults serve as potential pathways for migrating mineral-rich fluids, making proximity to them another key factor in delineating zones prospective for Au deposits. |
| Distance to folds within the folded units of the Stuhini Group | Mineralization Pathway | Like faults, folds can act as conduits for fluid migration, especially in folded units such as those of the Stuhini Group. |
| Presence of multi-element geochemical anomalies | Geological Setting | These anomalies often indicate geological conditions favorable for mineral deposits. Identifying them can point to areas highly prospective for Au. |
| Presence of hydrothermal alteration | Geological Setting | Hydrothermal alteration often occurs in close association with ore deposits, particularly Au deposits. Its presence can strongly indicate a favorable geological setting and potential Au mineralization. |
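Three of the six proxies above are distance measures. A common way to derive such a layer is to compute, for each cell centre of the study grid, the Euclidean distance to the nearest mapped feature. The sketch below does this for line features such as faults or fold axes, represented as polylines in projected (UTM) coordinates. The function names and the plain-Python representation are illustrative assumptions. In practice this step is usually done with a GIS Euclidean-distance tool.

```python
import math

Point = tuple[float, float]  # (easting, northing) in projected units


def point_segment_distance(p: Point, a: Point, b: Point) -> float:
    """Euclidean distance from point p to the line segment a-b."""
    px, py = p
    ax, ay = a
    bx, by = b
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        # Degenerate segment: both endpoints coincide.
        return math.hypot(px - ax, py - ay)
    # Project p onto the infinite line, then clamp the parameter to the segment.
    t = ((px - ax) * dx + (py - ay) * dy) / seg_len_sq
    t = max(0.0, min(1.0, t))
    cx, cy = ax + t * dx, ay + t * dy
    return math.hypot(px - cx, py - cy)


def distance_to_lines(p: Point, polylines: list[list[Point]]) -> float:
    """Minimum distance from p to any segment of any polyline.

    Raises ValueError if no polyline has at least two vertices.
    """
    return min(
        point_segment_distance(p, line[i], line[i + 1])
        for line in polylines
        for i in range(len(line) - 1)
    )


def distance_raster(cell_centres: list[Point], polylines: list[list[Point]]) -> list[float]:
    """Distance-to-feature value for each cell centre (one evidential layer)."""
    return [distance_to_lines(c, polylines) for c in cell_centres]
```

Brute force over every segment is adequate for a sketch. For large grids, a spatial index or a raster distance transform would be the usual choice.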

| Kernel | Parameter | Interval |
|---|---|---|
| Linear | Cost | 0.1–50, at 0.1 interval |
| RBF |  | 0.1–1, at 0.01 interval |
|  | Cost | 0.1–50, at 0.1 interval |
| Polynomial |  | 0.1–1, at 0.01 interval |
|  | d (degree) | 1–5, at 1 interval |
|  | Cost | 0.1–10, at 0.1 interval |
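The search ranges in the table translate directly into exhaustive hyperparameter grids. The sketch below builds them from integer steps, which avoids floating-point drift, and counts the combinations per kernel. The dictionary keys follow scikit-learn's `SVC` argument names (`C`, `gamma`, `degree`). Treating the table's unnamed 0.1–1 parameter as `gamma` is an assumption, and the authors' actual search procedure may differ.

```python
from itertools import product


def grid(start_units: int, stop_units: int, scale: float) -> list[float]:
    """Inclusive arithmetic grid built from integer steps to avoid float drift.

    grid(1, 500, 0.1) gives [0.1, 0.2, ..., 50.0].
    """
    return [round(k * scale, 10) for k in range(start_units, stop_units + 1)]


# Ranges transcribed from the table above.
COST_50 = grid(1, 500, 0.1)          # 0.1-50, step 0.1
COST_10 = grid(1, 100, 0.1)          # 0.1-10, step 0.1
KERNEL_PARAM = grid(10, 100, 0.01)   # 0.1-1, step 0.01 (assumed to be gamma)
DEGREE = list(range(1, 6))           # 1-5, step 1

PARAM_GRIDS = {
    "linear": {"C": COST_50},
    "rbf": {"gamma": KERNEL_PARAM, "C": COST_50},
    "poly": {"gamma": KERNEL_PARAM, "degree": DEGREE, "C": COST_10},
}


def n_candidates(param_grid: dict[str, list]) -> int:
    """Number of hyperparameter combinations an exhaustive search evaluates."""
    count = 1
    for values in param_grid.values():
        count *= len(values)
    return count


def iter_candidates(param_grid: dict[str, list]):
    """Yield every combination as a {parameter: value} dictionary."""
    keys = list(param_grid)
    for combo in product(*(param_grid[k] for k in keys)):
        yield dict(zip(keys, combo))


if __name__ == "__main__":
    for kernel, pg in PARAM_GRIDS.items():
        print(kernel, n_candidates(pg))
```

The linear grid has 500 candidates, and the RBF and polynomial grids each have 45,500, before multiplying by the number of cross-validation folds. With scikit-learn installed, each inner dictionary could be passed as `param_grid` to `GridSearchCV` wrapping an `SVC` with the matching `kernel`.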

| Model | Min | Max | Mean | St. Dev. |
|---|---|---|---|---|
|  | 0.149 | 0.504 | 0.357 | 0.103 |
|  | 0.212 | 0.554 | 0.356 | 0.114 |
|  | 0.135 | 0.548 | 0.306 | 0.137 |
|  | 0.174 | 0.602 | 0.388 | 0.131 |

| Metric | SVMlinear | SVMRBF | SVMpoly | RF |
|---|---|---|---|---|
| Overall Accuracy | 89% | 97% | 89% | 78% |
| Kappa | 78% | 94% | 78% | 56% |
| Sensitivity | 89% | 100% | 89% | 73% |
| Specificity | 89% | 94% | 89% | 83% |
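All four metrics in the table derive from a binary confusion matrix of deposit and non-deposit predictions. The sketch below computes them. The class and field names are illustrative, and the counts in the example are hypothetical, chosen only to show the arithmetic. They are not the authors' test-set results.

```python
from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    tp: int  # deposits predicted as deposits
    fn: int  # deposits predicted as non-deposits
    tn: int  # non-deposits predicted as non-deposits
    fp: int  # non-deposits predicted as deposits

    @property
    def n(self) -> int:
        return self.tp + self.fn + self.tn + self.fp

    def overall_accuracy(self) -> float:
        return (self.tp + self.tn) / self.n

    def sensitivity(self) -> float:
        # Share of true deposits that the model recovers.
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        # Share of true non-deposits that the model rejects.
        return self.tn / (self.tn + self.fp)

    def kappa(self) -> float:
        """Cohen's kappa: agreement beyond what chance alone would produce."""
        n = self.n
        p_observed = self.overall_accuracy()
        # Chance agreement from predicted-class and true-class marginals.
        p_expected = (
            (self.tp + self.fp) * (self.tp + self.fn)
            + (self.tn + self.fn) * (self.tn + self.fp)
        ) / (n * n)
        return (p_observed - p_expected) / (1 - p_expected)


if __name__ == "__main__":
    cm = ConfusionMatrix(tp=8, fn=1, tn=8, fp=1)  # hypothetical counts
    print(round(cm.overall_accuracy(), 2), round(cm.kappa(), 2))
```

With these counts, overall accuracy, sensitivity, and specificity are each about 0.89 and kappa is about 0.78. When the test set has equal numbers of deposits and non-deposits, chance agreement is 0.5 whatever the predictions, so kappa reduces to 2 × OA − 1 and OA equals the mean of sensitivity and specificity. Every column of the table is consistent with both relations (e.g., 97% → 94% and 78% → 56%).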

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lachaud, A.; Adam, M.; Mišković, I. Comparative Study of Random Forest and Support Vector Machine Algorithms in Mineral Prospectivity Mapping with Limited Training Data. Minerals 2023, 13, 1073. https://doi.org/10.3390/min13081073
