From Rocks to Pixels: A Protocol for Reproducible Mineral Imaging and its Applications in Machine Learning

Abstract

1. Introduction

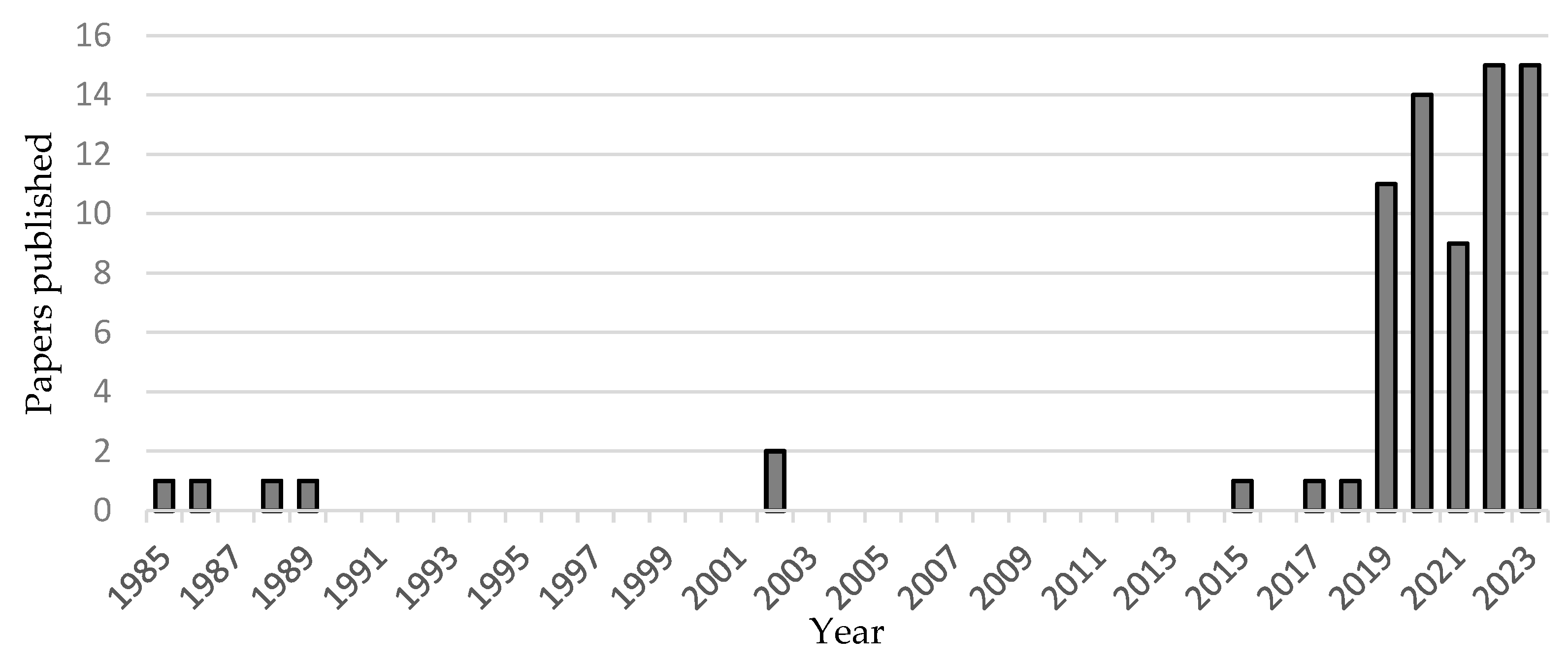

2. Machine Learning and Optical Identification of Minerals

3. Previous Work

- Select and keep the same optics and filters throughout the study.

- Set the illumination according to the most reflective mineral to ensure that the images do not saturate.

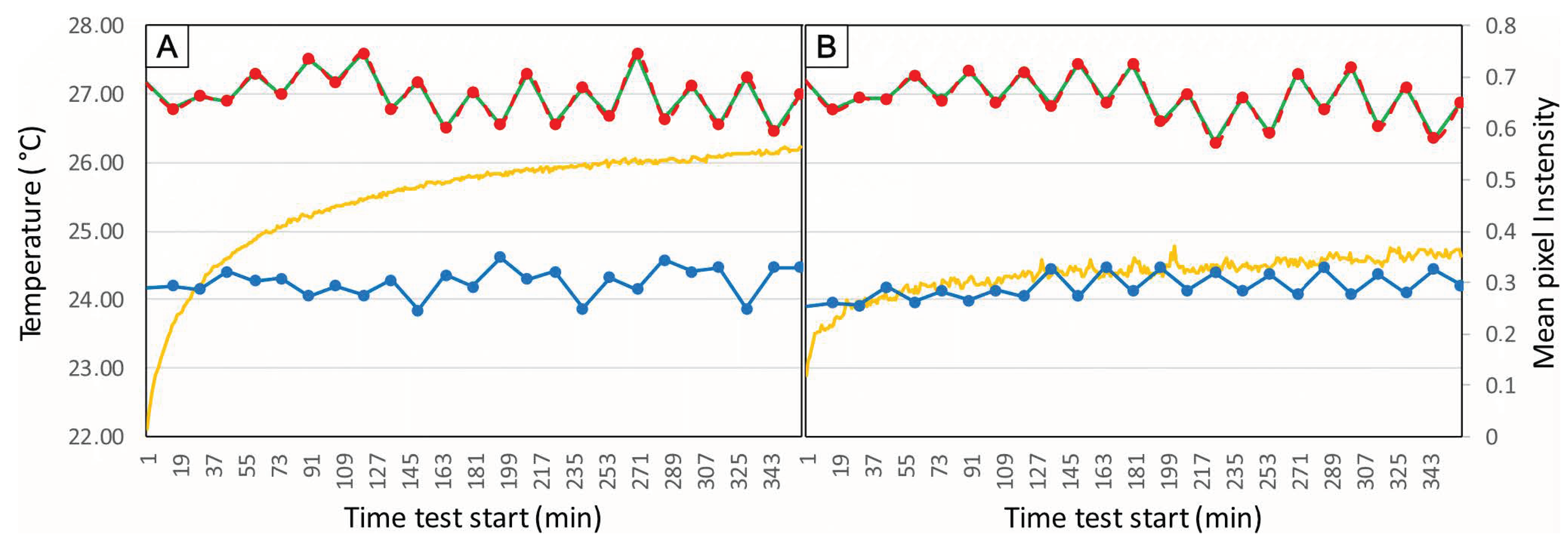

- Warm up the charge-coupled device (CCD) sensor for up to 90 min.

- Ensure that all images have the same acquisition and processing parameters and avoid file formats with compression.

- Take a black reference image by acquiring an image while no photons reach the camera sensor.

- Take a white reference image using a reflectance reference material.

- Acquire a series of images without changing any parameters and apply the image calibration of Equation (1).
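Equation (1) is not reproduced in this section. A common form of a black/white reference calibration is the flat-field correction $R = R_{\text{ref}}\,(I - I_{\text{black}})/(I_{\text{white}} - I_{\text{black}})$, where $R_{\text{ref}}$ is the reflectance of the reference material. The Python sketch below assumes that form, which may differ in detail from Equation (1). The function names and the `white_reflectance` parameter are illustrative and do not come from the original protocol.

```python
import numpy as np


def mean_frame(frames):
    """Average a series of frames acquired with identical parameters."""
    return np.mean(np.stack([np.asarray(f, dtype=np.float64) for f in frames]), axis=0)


def flat_field_calibrate(raw, black, white, white_reflectance=1.0):
    """Assumed flat-field form: (raw - black) / (white - black) * white_reflectance.

    Inputs are broadcast to a common shape. Pixels where the white reference
    is not brighter than the black reference (e.g. dead pixels) return NaN
    instead of producing a division by zero.
    """
    raw, black, white = np.broadcast_arrays(
        np.asarray(raw, dtype=np.float64),
        np.asarray(black, dtype=np.float64),
        np.asarray(white, dtype=np.float64),
    )
    span = white - black
    out = np.full(raw.shape, np.nan)
    valid = span > 0
    out[valid] = (raw[valid] - black[valid]) / span[valid] * white_reflectance
    return out
```

In practice, `black` and `white` would each be the `mean_frame` of several reference acquisitions, which reduces random noise in the correction itself.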

4. The Theory behind Photomicrograph Acquisition

4.1. Microscope

4.1.1. Type

4.1.2. Objective, Lighting, and Filters

4.1.3. Microscope Hardware

4.2. Camera

4.3. Image

5. Protocol Design Recommendation

5.1. Study Purpose

5.2. Parameter Settings

5.2.1. Lighting and Exposure

5.2.2. Objective Choice

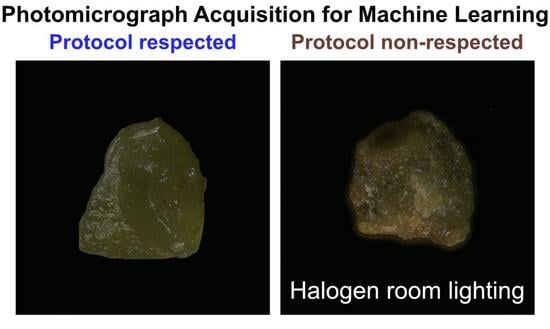

5.2.3. Room Lighting and Noise

Enclosing the microscope has the following advantages:

- No external light affects image acquisition.

- Camera tests are much easier to perform.

- Dust and unwanted particles are kept away from the microscope and samples.

To determine the camera warm-up period:

- Verify that the microscope camera is at room temperature.

- Ensure that no stray light strikes the camera sensor (use an enclosure or other means to ensure complete darkness).

- Turn on the microscope, computer, and acquisition software, but leave the light source off.

- Take pictures every 15 min for at least 3 h.

- Using any programming language, extract the pixel intensity values of each channel from every image.

- Plot the time, computed with Equation (9), against the mean intensity per image for each channel (see Figure 7):

  $$t = \frac{n}{f} \qquad (9)$$

  where $t$ is the time, $n$ is the frame number, and $f$ is the frame rate.

- Analyze the graph. Ideally, the mean pixel intensity of each channel increases and then levels off at a plateau. Once the curve stabilizes around a particular value, the camera has warmed up and the error is constant.

- Identify the stabilization point marking the time needed to warm up the camera before image acquisition.

- This procedure ensures reproducible acquisition. However, the dark current error during acquisition will then be at its maximum; in most cases, this noise is minimal to negligible.
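The warm-up test above can be scripted. The Python sketch below assumes the dark frames are already loaded as H × W × C NumPy arrays (loading depends on the camera's file format) and that time is obtained by dividing the frame number by the frame rate. The stabilization rule in `warm_up_time` (every channel stays within a tolerance `tol` of the mean of the last `tail` frames) is a hypothetical criterion chosen for illustration; the protocol itself identifies the plateau by inspecting the graph.

```python
import numpy as np


def channel_means(images):
    """Mean pixel intensity of each channel, one row per H x W x C image."""
    return np.array([np.asarray(img, dtype=np.float64).mean(axis=(0, 1)) for img in images])


def frame_times(frame_numbers, frame_rate):
    """Acquisition time of each frame: frame number divided by frame rate."""
    return np.asarray(frame_numbers, dtype=np.float64) / frame_rate


def warm_up_time(times, means, tol=0.5, tail=3):
    """Earliest time from which every channel stays within `tol` of its plateau.

    The plateau is estimated as the mean of the last `tail` frames
    (hypothetical criterion). Returns None if the last frame is not stable.
    """
    means = np.asarray(means, dtype=np.float64)
    plateau = means[-tail:].mean(axis=0)
    stable = np.all(np.abs(means - plateau) <= tol, axis=1)
    start = None
    # Walk backwards to find the first frame of the final stable run.
    for i in range(len(stable) - 1, -1, -1):
        if not stable[i]:
            break
        start = i
    return None if start is None else float(times[start])


# Synthetic dark frames (not measurements), one every 15 min = 4 frames per hour.
levels = [1.0, 2.0, 3.0, 4.0, 4.0, 4.0, 4.0]
frames = [np.full((4, 4, 3), v) for v in levels]
t_hours = frame_times(range(len(frames)), frame_rate=4.0)
print(warm_up_time(t_hours, channel_means(frames)))  # prints 0.75
```

Returning `None` when the last frames still drift is deliberate: it signals that the series should be extended or the camera cooled, rather than reporting a misleading warm-up time.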

5.3. Acquisition Routine

5.3.1. Sample Preparation

To prepare a permanent mount:

- Place a glass slide on a perfectly horizontal plate covered with aluminum foil. Tape the edges of the glass slide to secure it to the foil (Figure 3A). This step prevents the epoxy from flowing underneath the glass slide and makes it easier to remove the glass slide after curing.

- Apply a 5 µm thick layer of epoxy to the glass slide using a micrometer-adjustable film applicator (Figure 3B), powder the grains, and wait until the permanent slide has cured. Powder the grains so that they overlap as little as possible and are evenly distributed. Curing time depends on the epoxy; we suggest using a transparent, high-viscosity epoxy to prevent the grains from sinking too deeply.

- Remove the adhesive tape and glass slide from the aluminum foil (Figure 3C).

To prepare a temporary mount:

- Put a few drops of ethanol on the glass slide, just enough to form a thin film that does not run off the slide (Figure 4A).

- Powder the grains uniformly so that the grains are evenly spaced on the slide (Figure 4B).

- Wait approximately 5 min for the ethanol to evaporate and leave a dry slide; the slide is then ready for photomicrography.

- After photomicrography, grains can be easily scraped from the slide with a laboratory spatula and recovered for another use (Figure 4C).

5.3.2. Photomicrography

- Set up the acquisition so that it follows all protocol recommendations and steps, such as enclosing the microscope, respecting the calculated camera warm-up period, and calibrating the white balance.

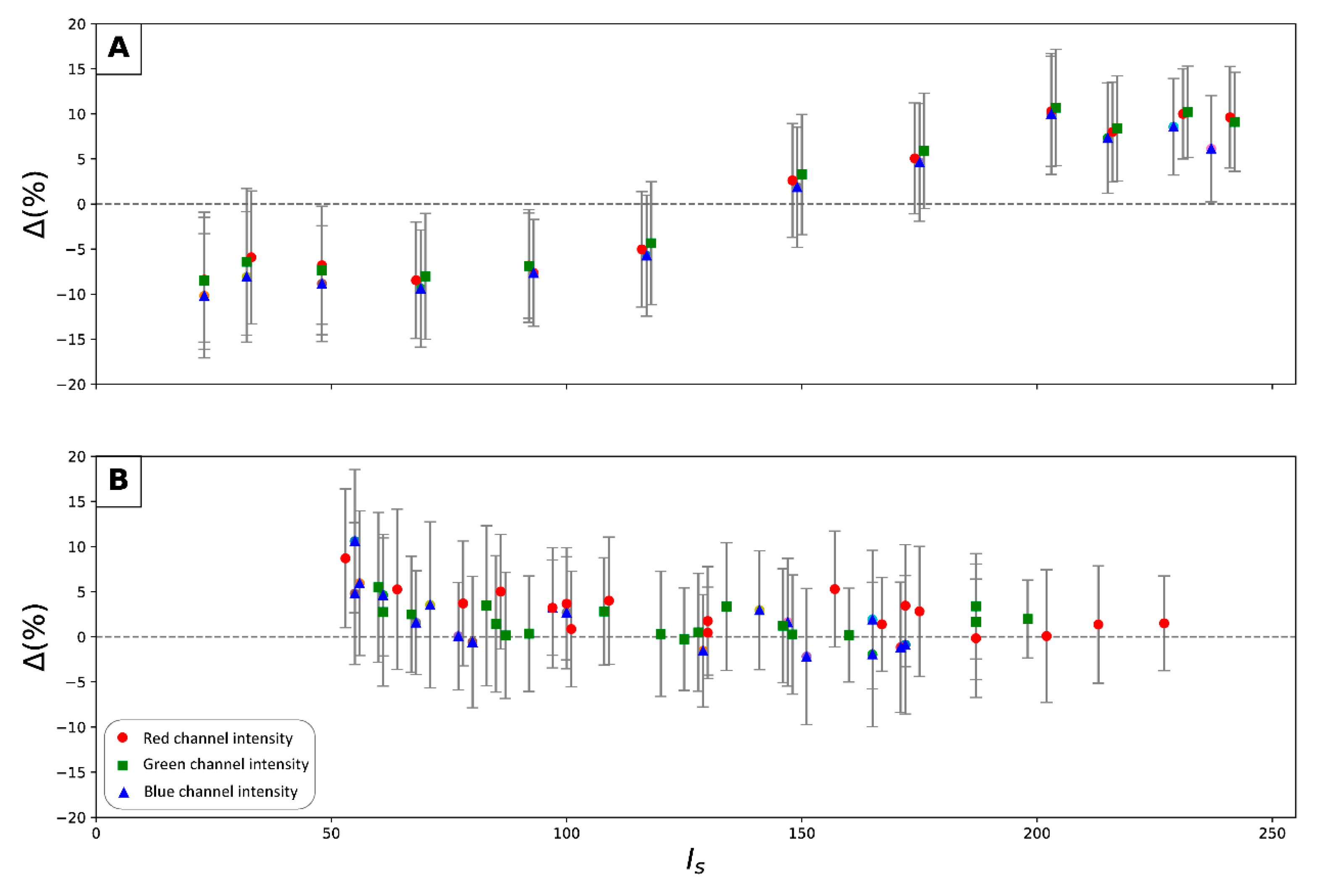

- Acquire a series of images of the color gauge at various times of the day to verify that color variations are not caused by changes in ambient light.

- Crop the color gauge from the sample holder in every image of the series so that only the region with the given color(s) remains. For example, if the color gauge comprises several patches with different color codes, subdivide the image into one image per color code.

- For each color patch, compute the mean pixel intensity value of the acquisition and its standard deviation for each channel over the entire series.

- Extract the theoretical pixel intensity values of each color gauge zone from the manufacturer’s data sheet, expressed in the same color space as the images.

- Compute the difference between the theoretical pixel intensity values of the color gauge and the mean pixel intensity values of the acquisition using Equation (10).
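A minimal Python sketch of the color gauge analysis follows. It assumes each image is an H × W × C NumPy array, that patch locations are given as hypothetical pixel bounding boxes, that the standard deviation is taken over all pixels of a patch across the whole series, and that Equation (10) is a signed per-channel difference (theoretical minus measured). Equation (10) is not reproduced here and may instead use an absolute or normalized difference; `gauge_difference` would need to be adapted accordingly.

```python
import numpy as np


def crop_patches(image, boxes):
    """Split one image into one sub-image per color patch.

    `boxes` maps a patch name to (row_start, row_end, col_start, col_end);
    these coordinates are hypothetical and depend on the sample holder.
    """
    return {name: image[r0:r1, c0:c1] for name, (r0, r1, c0, c1) in boxes.items()}


def gauge_statistics(patch_series):
    """Per-channel mean and standard deviation over all pixels of a patch series."""
    stack = np.stack([np.asarray(p, dtype=np.float64) for p in patch_series])
    pixels = stack.reshape(-1, stack.shape[-1])  # (N * H * W, C)
    return pixels.mean(axis=0), pixels.std(axis=0)


def gauge_difference(theoretical, measured_mean):
    """Assumed form of Equation (10): theoretical minus measured, per channel."""
    return np.asarray(theoretical, dtype=np.float64) - np.asarray(measured_mean, dtype=np.float64)


# Synthetic example (not real data): two frames of a uniform gray patch.
series = [np.full((10, 10, 3), 10.0), np.full((10, 10, 3), 20.0)]
boxes = {"gray": (2, 6, 2, 6)}
gray = [crop_patches(img, boxes)["gray"] for img in series]
mean, std = gauge_statistics(gray)
print(mean, std, gauge_difference([18.0, 15.0, 12.0], mean))
```

Pooling all pixels mixes within-image and between-image variation; computing the standard deviation of the per-image means instead isolates the acquisition-to-acquisition drift.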

6. An Application of the Protocol

6.1. Microscope Enclosure and Sample Holder

6.2. Acquisition Settings

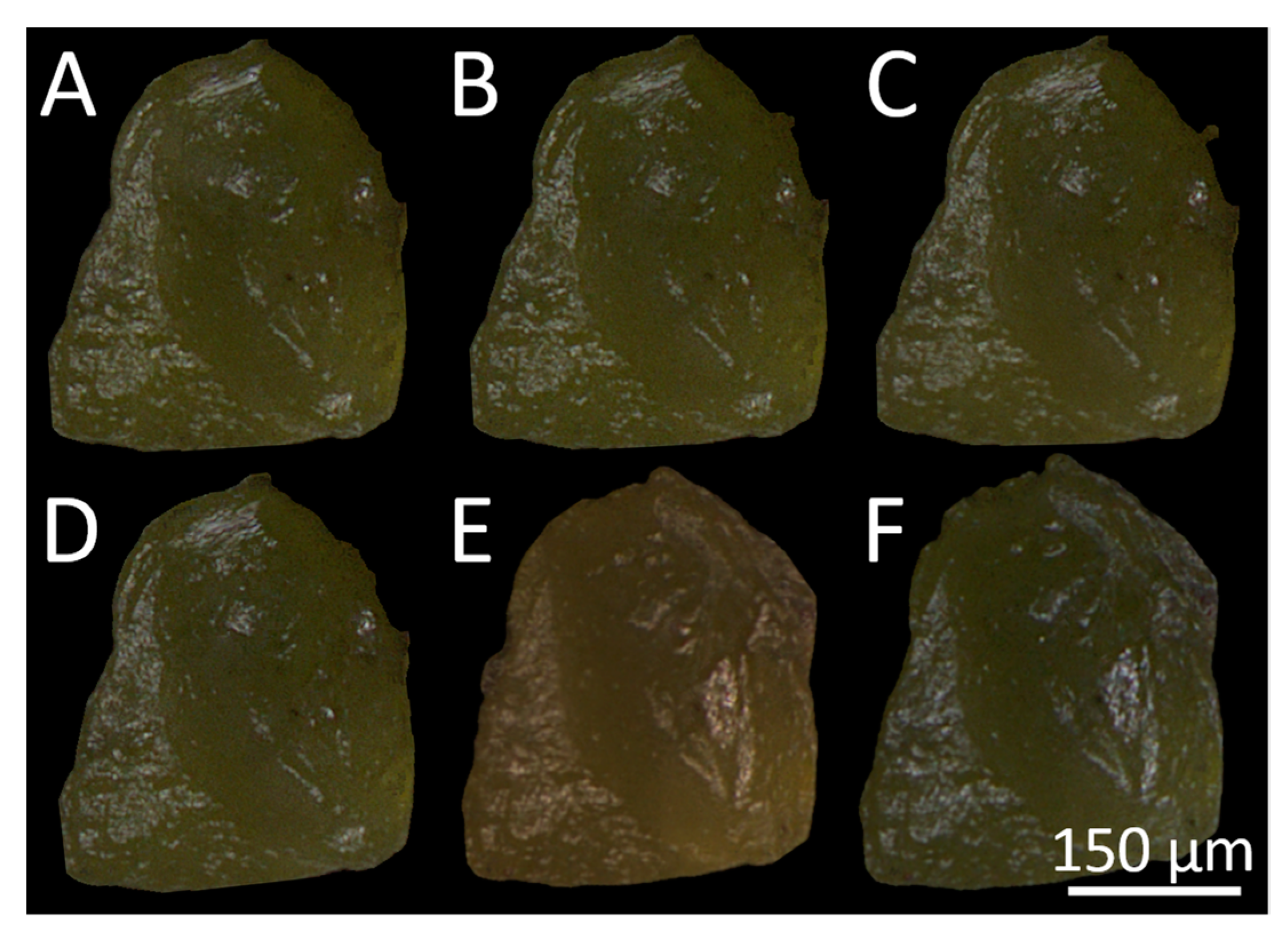

6.3. Machine Learning Requirements and Sample Preparation

6.4. Machine Learning Model

7. Protocol Application Results

7.1. Warm-Up Period

7.2. Color Gauge Information and Analysis

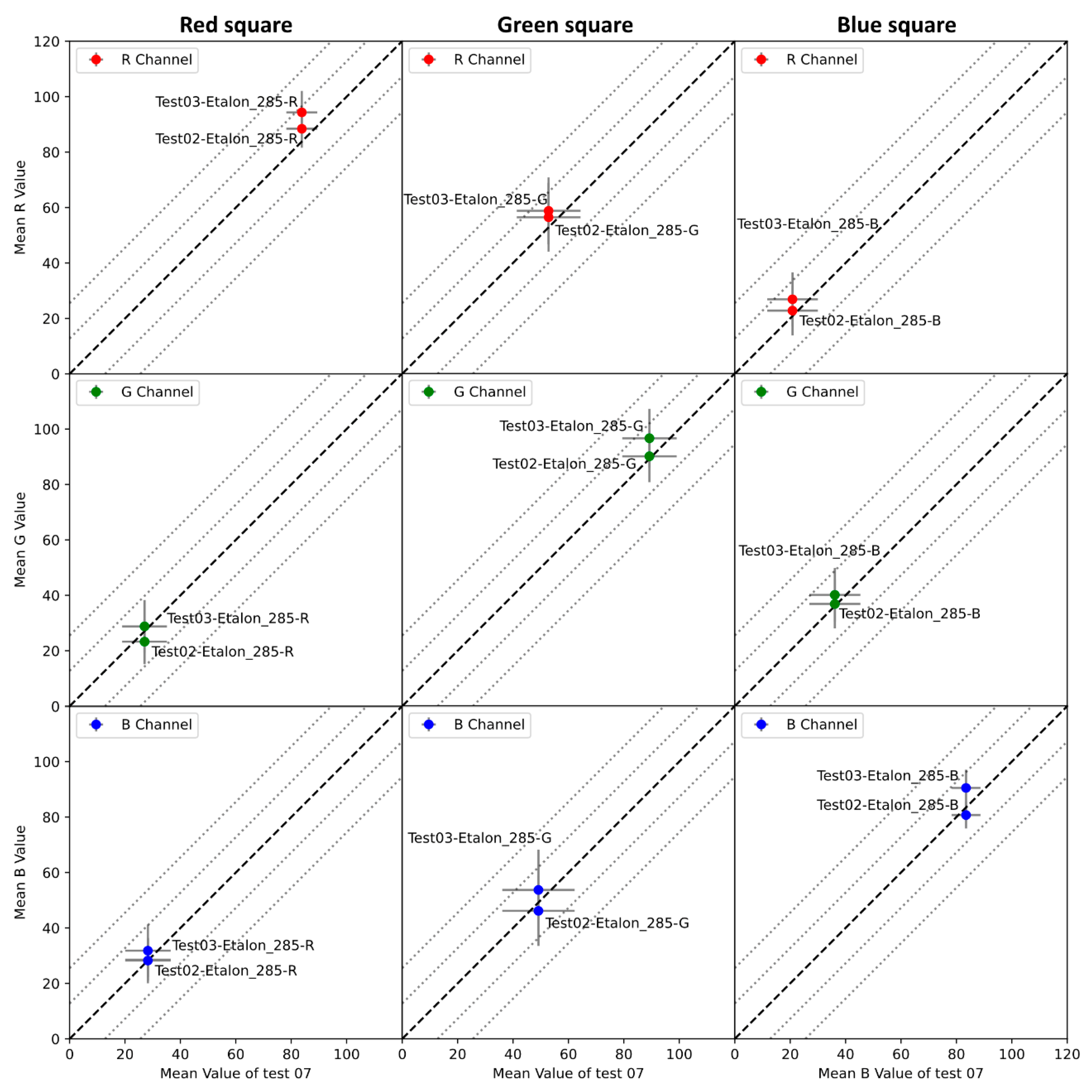

7.2.1. Reproducibility Analysis

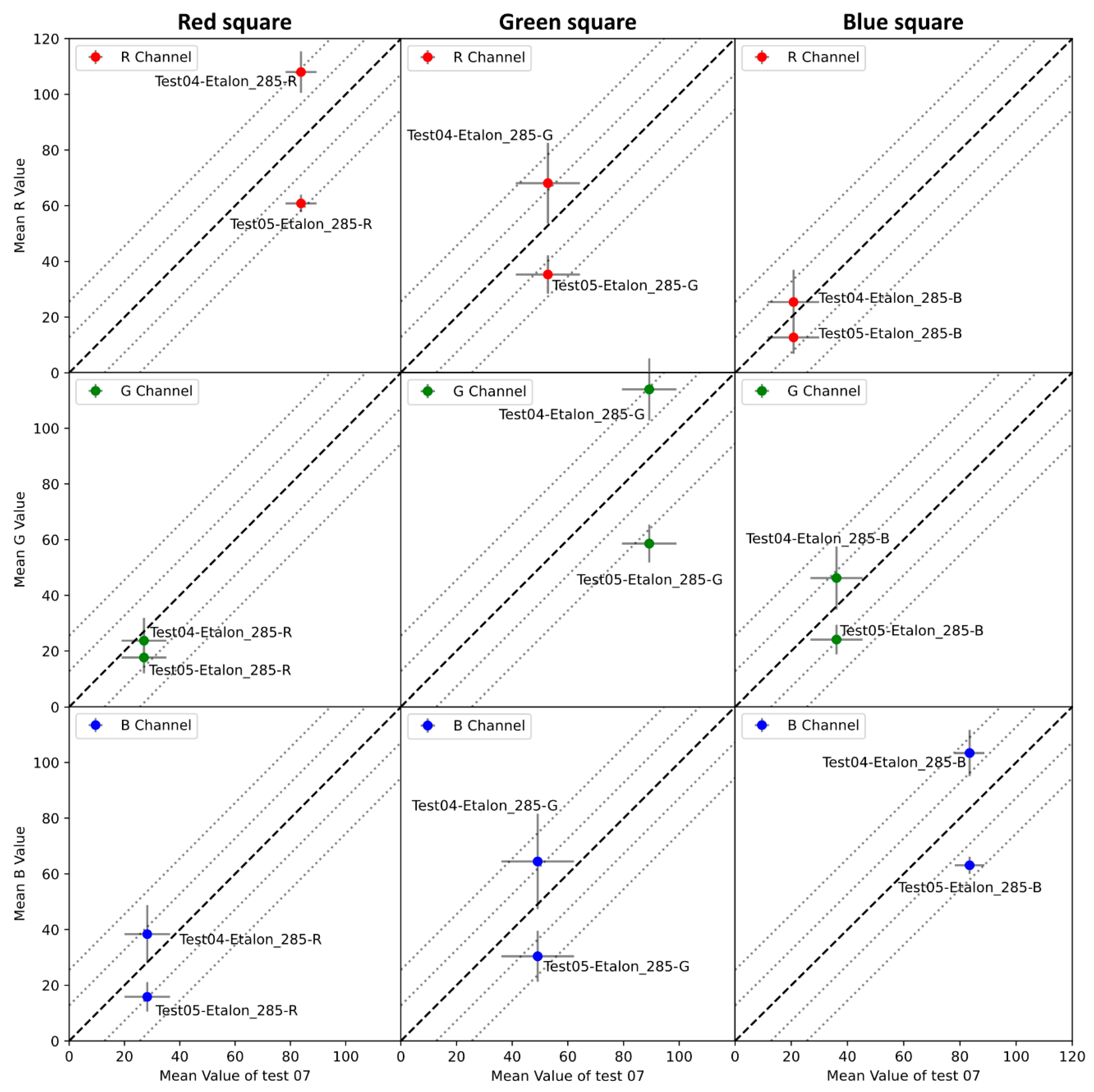

7.2.2. Parameters Impact

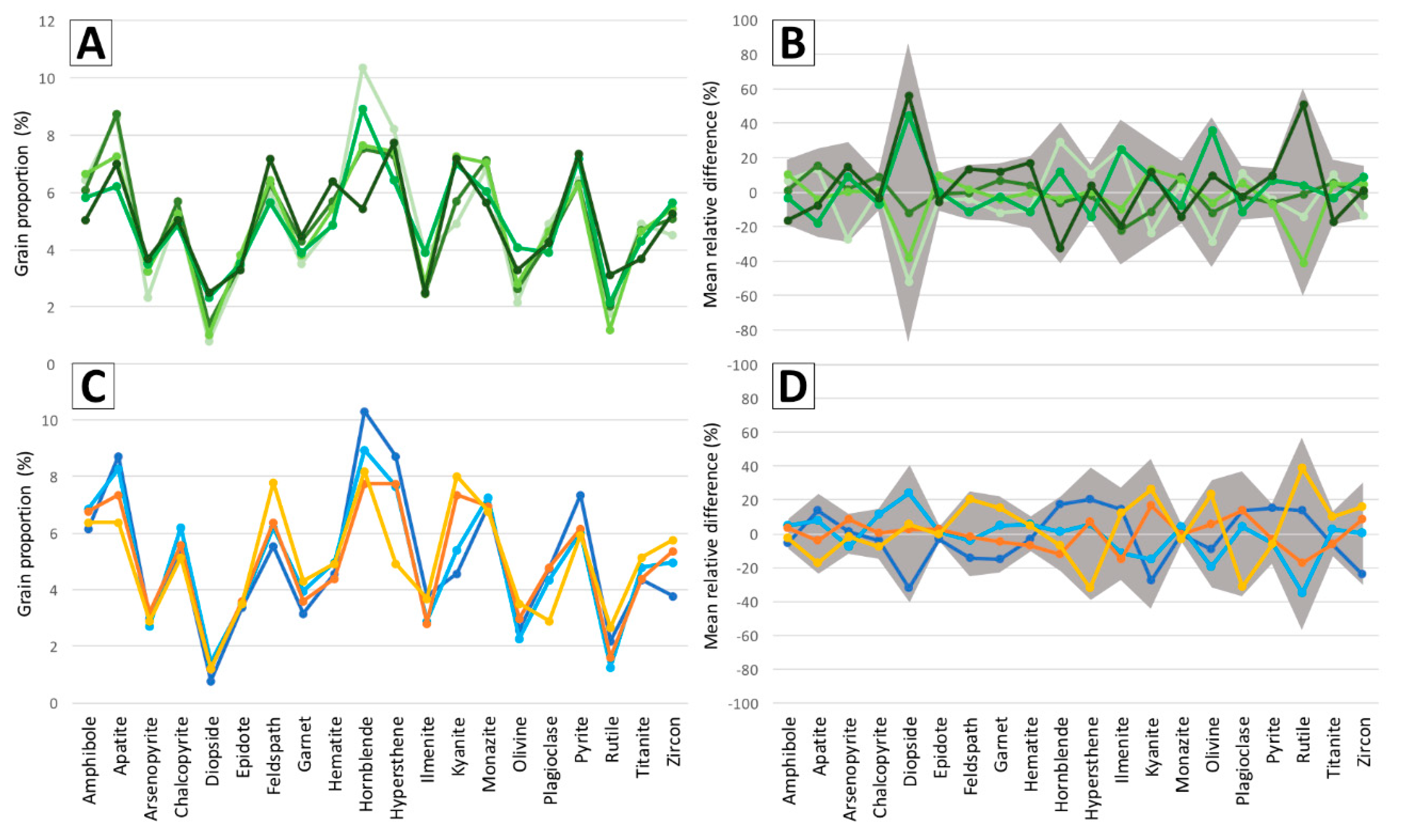

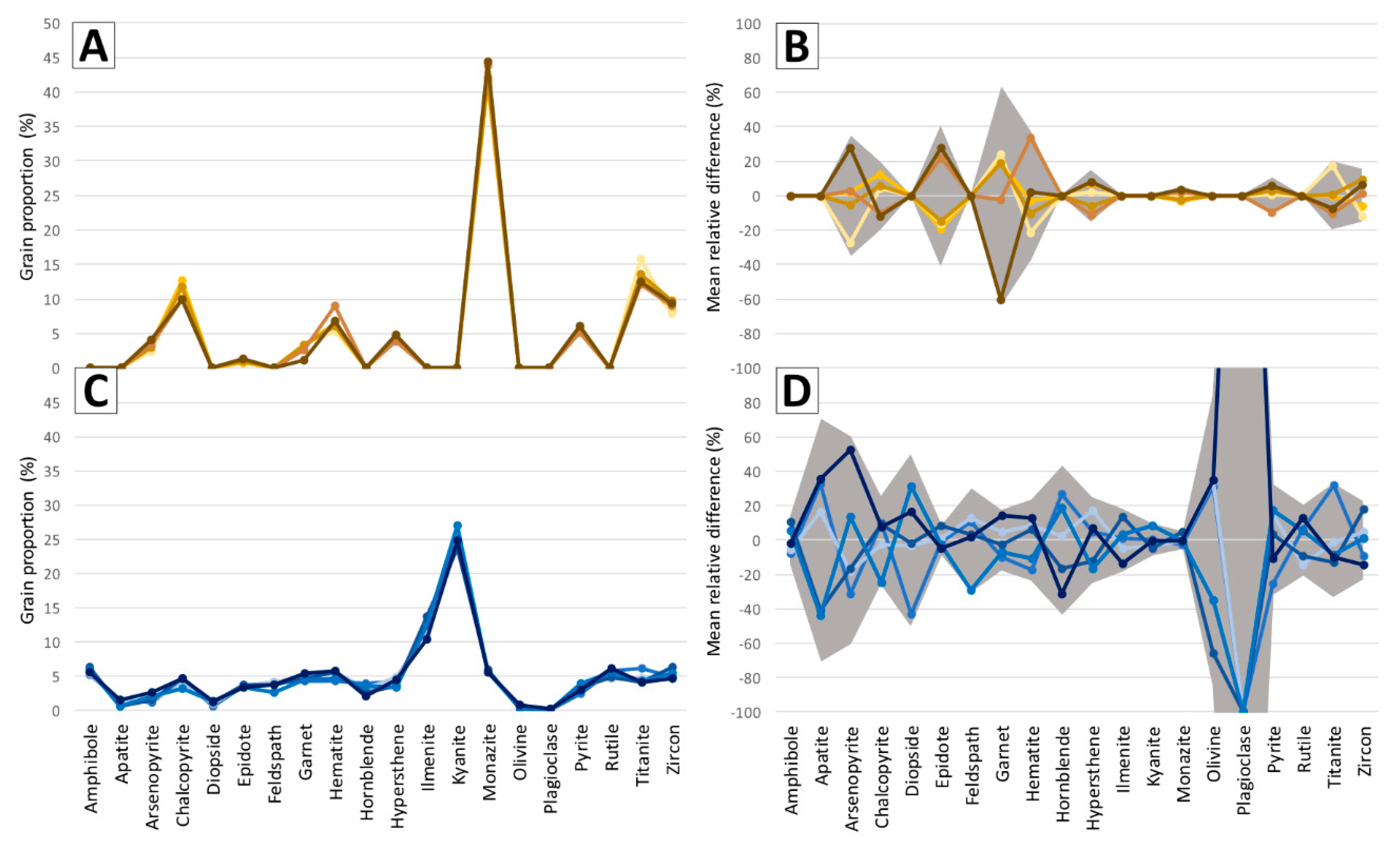

7.3. Impact of Warm-Up Period and Room Lighting on Classification Results

8. Discussion

8.1. Grain-Mounting Technique

8.2. Cameras and Microscopes

8.3. Computer Settings

8.4. Exposure and White Balance Calibration

8.5. Color Gauge Analysis

8.6. Classification Results

9. Conclusions

- Mount the grains or rock without altering the optical properties relevant to the study.

- Ideally, use a petrographic microscope for thin sections and an on-axis zoom microscope for grains.

- Estimate the required image size and the available storage capacity, and plan the acquisition accordingly.

- Microscope noise sources:

- Create an enclosure for the microscope, as it prevents external light and dust pollution.

- Determine the warm-up period two or three times, at different times of the day. If your laboratory is air-conditioned and the exposure time is short, the time of day should have no impact on image quality. If the error is high or does not stabilize, measure the camera temperature or cool the camera.

- Use a color gauge to calibrate and study your acquisition parameters.

- Choose the objective based on the spatial resolution required for your study; the camera resolution should exceed that of the microscope. Use the same equipment for the whole study.

- Include adequate documentation of the photomicrograph acquisition with the images.
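The storage and resolution recommendations can be checked with simple arithmetic. The Python sketch below estimates the uncompressed size of an image, such as the 1920 × 2560 pixel images listed in the acquisition settings table, and checks whether the camera samples finely enough for the objective using the Rayleigh criterion $r = 0.61\lambda/\mathrm{NA}$ with a Nyquist factor of 2. Both are common rules of thumb rather than the method stated in this paper, and the bit depth, wavelength, numerical aperture, pixel pitch, and magnification values are placeholders, not the specifications of the equipment used in the study.

```python
def uncompressed_size_bytes(height, width, channels=3, bits_per_channel=8):
    """Size of one uncompressed image in bytes."""
    return height * width * channels * bits_per_channel // 8


def dataset_size_gb(n_images, height, width, channels=3, bits_per_channel=8):
    """Total uncompressed storage for n_images, in gigabytes (10**9 bytes)."""
    return n_images * uncompressed_size_bytes(height, width, channels, bits_per_channel) / 1e9


def rayleigh_resolution_um(wavelength_um, numerical_aperture):
    """Smallest distance resolved by the objective (Rayleigh criterion)."""
    return 0.61 * wavelength_um / numerical_aperture


def camera_oversamples(pixel_pitch_um, magnification, wavelength_um, numerical_aperture):
    """True if the pixel size projected onto the sample is at most half the optical resolution.

    `magnification` is the total magnification between sample and sensor.
    """
    pixel_at_sample_um = pixel_pitch_um / magnification
    return pixel_at_sample_um <= rayleigh_resolution_um(wavelength_um, numerical_aperture) / 2


# Placeholder values for illustration only.
print(uncompressed_size_bytes(1920, 2560))  # prints 14745600 (8-bit RGB)
print(dataset_size_gb(1000, 1920, 2560))
print(camera_oversamples(pixel_pitch_um=3.45, magnification=4,
                         wavelength_um=0.55, numerical_aperture=0.13))
```

If `camera_oversamples` returns `False`, the camera rather than the objective limits the resolution, which is the situation the recommendation above aims to avoid.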

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Averill, S. The Application of Heavy Indicator Mineralogy in Mineral Exploration with Emphasis on Base Metal Indicators in Glaciated Metamorphic and Plutonic Terrains. Geol. Soc. Lond. Spec. Publ. 2001, 185, 69–81.

- McClenaghan, M.B.; Kjarsgaard, B. Indicator Mineral and Surficial Geochemical Exploration Methods for Kimberlite in Glaciated Terrain; Examples from Canada. In Mineral Deposits of Canada: A Synthesis of Major Deposit-Types, District Metallogeny, the Evolution of Geological Provinces, and Exploration Methods; Goodfellow, W.D., Ed.; Geological Association of Canada: St. John’s, NL, Canada, Mineral Deposits Division, Special Publication; 2007; Volume 5, pp. 983–1006.

- Ndlovu, B.; Farrokhpay, S.; Bradshaw, D. The Effect of Phyllosilicate Minerals on Mineral Processing Industry. Int. J. Miner. Process. 2013, 125, 149–156.

- Courtin-Nomade, A.; Bril, H.; Bény, J.-M.; Kunz, M.; Tamura, N. Sulfide Oxidation Observed Using Micro-Raman Spectroscopy and Micro-X-Ray Diffraction: The Importance of Water/Rock Ratios and pH Conditions. Am. Mineral. 2010, 95, 582–591.

- Maitre, J.; Bouchard, K.; Bédard, L.P. Mineral Grains Recognition Using Computer Vision and Machine Learning. Comput. Geosci. 2019, 130, 84–93.

- Chow, B.H.Y.; Reyes-Aldasoro, C.C. Automatic Gemstone Classification Using Computer Vision. Minerals 2022, 12, 60.

- Hao, H.; Jiang, Z.; Ge, S.; Wang, C.; Gu, Q. Siamese Adversarial Network for Image Classification of Heavy Mineral Grains. Comput. Geosci. 2022, 159, 105016.

- Iglesias, J.C.Á.; Santos, R.B.M.; Paciornik, S. Deep Learning Discrimination of Quartz and Resin in Optical Microscopy Images of Minerals. Miner. Eng. 2019, 138, 79–85.

- Jia, L.; Yang, M.; Meng, F.; He, M.; Liu, H. Mineral Photos Recognition Based on Feature Fusion and Online Hard Sample Mining. Minerals 2021, 11, 1354.

- Leroy, S.; Pirard, E. Mineral Recognition of Single Particles in Ore Slurry Samples by Means of Multispectral Image Processing. Miner. Eng. 2019, 132, 228–237.

- Ramil, A.; López, A.J.; Pozo-Antonio, J.S.; Rivas, T. A Computer Vision System for Identification of Granite-Forming Minerals Based on RGB Data and Artificial Neural Networks. Measurement 2018, 117, 90–95.

- Santos, R.B.M.; Augusto, K.S.; Iglesias, J.C.Á.; Rodrigues, S.; Paciornik, S.; Esterle, J.S.; Domingues, A.L.A. A Deep Learning System for Collotelinite Segmentation and Coal Reflectance Determination. Int. J. Coal Geol. 2022, 263, 104111.

- Allen, J.E. Estimation of Percentages in Thin Sections—Considerations of Visual Psychology. J. Sediment. Res. 1956, 26, 160–161.

- Folk, R.L. A Comparison Chart for Visual Percentage Estimation. J. Sediment. Res. 1951, 21, 32–33.

- Murphy, C.P.; Kemp, R.A. The Over-Estimation of Clay and the under-Estimation of Pores in Soil Thin Sections. J. Soil Sci. 1984, 35, 481–495.

- Goldstone, R.L. Feature Distribution and Biased Estimation of Visual Displays. J. Exp. Psychol. Hum. Percept. Perform. 1993, 19, s564–s579.

- Pirard, E.; Lebichot, S. Image Analysis of Iron Oxides under the Optical Microscope. In Applied Mineralogy: Developments in Science and Technology; ICAM: Sainte-Anne-de-Bellevue, QC, Canada, 2004; pp. 153–156. ISBN 978-85-98656-02-1.

- Gibney, E. Could Machine Learning Fuel a Reproducibility Crisis in Science? Nature 2022, 608, 250–251.

- Ball, P. Is AI Leading to a Reproducibility Crisis in Science? Nature 2023, 624, 22–25.

- Pirard, E. Multispectral Imaging of Ore Minerals in Optical Microscopy. Mineral. Mag. 2004, 68, 323–333.

- Fueten, F. A Computer-Controlled Rotating Polarizer Stage for the Petrographic Microscope. Comput. Geosci. 1997, 23, 203–208.

- Inoué, S.; Oldenbourg, R. Optical Instruments: Microscopes. In Handbook of Optics, 2nd ed.; McGraw-Hill: New York, NY, USA, 1995; Volume 2, pp. 17.1–17.52.

- Inoué, S.; Spring, K.R. Video Microscopy: The Fundamentals, 2nd ed.; Plenum Press: New York, NY, USA, 1997; ISBN 0-306-45531-5.

- Hain, R.; Kähler, C.J.; Tropea, C. Comparison of CCD, CMOS and Intensified Cameras. Exp. Fluids 2007, 42, 403–411.

- Centen, P. 14—Complementary Metal-Oxide-Semiconductor (CMOS) and Charge Coupled Device (CCD) Image Sensors in High-Definition TV Imaging. In High Performance Silicon Imaging, 2nd ed.; Durini, D., Ed.; Woodhead Publishing Series in Electronic and Optical Materials; Woodhead Publishing: Sawston, UK, 2020; pp. 437–471. ISBN 978-0-08-102434-8.

- Jerram, P.; Stefanov, K. 9—CMOS and CCD Image Sensors for Space Applications. In High Performance Silicon Imaging, 2nd ed.; Durini, D., Ed.; Woodhead Publishing Series in Electronic and Optical Materials; Woodhead Publishing: Sawston, UK, 2020; pp. 255–287. ISBN 978-0-08-102434-8.

- Gritchenko, A.S.; Eremchev, I.Y.; Naumov, A.V.; Melentiev, P.N.; Balykin, V.I. Single Quantum Emitters Detection with Amateur CCD: Comparison to a Scientific-Grade Camera. Opt. Laser Technol. 2021, 143, 107301.

- Süsstrunk, S.; Buckley, R.; Swen, S. Standard RGB Color Spaces. In Proceedings of the 7th Color Imaging Conference, Scottsdale, AZ, USA, 16–19 November 1999; Society for Imaging Science and Technology: Springfield, VA, USA, 1999; Volume 1999, pp. 127–134.

- Lamoureux, S.F.; Bollmann, J. Image Acquisition. In Image Analysis, Sediments and Paleoenvironments; Francus, P., Ed.; Springer: Dordrecht, The Netherlands, 2004; pp. 11–34. ISBN 978-1-4020-2122-0.

- Latif, G.; Bouchard, K.; Maitre, J.; Back, A.; Bédard, L.P. Deep-Learning-Based Automatic Mineral Grain Segmentation and Recognition. Minerals 2022, 12, 455.

- Liu, Y.; Zhang, Y.; Wang, Y.; Hou, F.; Yuan, J.; Tian, J.; Zhang, Y.; Shi, Z.; Fan, J.; He, Z. A Survey of Visual Transformers. IEEE Trans. Neural Netw. Learn. Syst. 2023, 1–21.

| Component | Details |
|---|---|
| Microscope | BX53M from Olympus |
| Camera | SC50 CMOS from Olympus |
| Objective | UPLFLN4XP from Olympus: and |
| Image resolution | 1920 × 2560 pixels |
| Exposure time | 5.993 ms |
| Color space | Adobe RGB |
| Light source type | Annular LED light |
| Light source intensity | Maximum |
| White balance | 18% gray square |
| Box enclosure | Yes |

| 20 |

| | Permanent | Temporary |
|---|---|---|
| Mineral species for learning | Several per glass slide | One per glass slide |
| Mineral species to identify unknown | Several per glass slide | Several per glass slide |
| Manufacturing time | 24 to 48 h | Less than 10 min |
| Conservation | Permanent (vacuum proof) | Temporary |
| Lighting type | Transmitted light, reflected light, annular light, directional light | Transmitted light, reflected light, annular light, directional light |
| Polarization | Available | Available |
| Specific risk | Mounting failure | Visual sorting errors |

| Mineral | Training (1) | Validation (2) | Testing (3) | Total |
|---|---|---|---|---|
| Amphibole | 105 | 22 | 23 | 150 |
| Apatite | 98 | 21 | 21 | 140 |
| Arsenopyrite | 71 | 15 | 16 | 102 |
| Chalcopyrite | 57 | 12 | 12 | 81 |
| Diopside | 102 | 22 | 21 | 145 |
| Epidote | 105 | 22 | 23 | 150 |
| Feldspar | 70 | 15 | 15 | 100 |
| Garnet | 105 | 22 | 23 | 150 |
| Hematite | 74 | 16 | 16 | 106 |
| Hornblende | 55 | 12 | 11 | 78 |
| Hypersthene | 105 | 22 | 23 | 150 |
| Ilmenite | 105 | 22 | 23 | 150 |
| Kyanite | 105 | 22 | 23 | 150 |
| Monazite | 105 | 22 | 23 | 150 |
| Olivine | 105 | 22 | 23 | 150 |
| Plagioclase | 105 | 22 | 23 | 150 |
| Pyrite | 105 | 22 | 23 | 150 |
| Rutile | 40 | 9 | 8 | 57 |
| Titanite | 90 | 19 | 20 | 129 |
| Zircon | 89 | 19 | 19 | 127 |

| Test | Parameter Variation | Respect Pirard’s Protocol (without Correction) |
|---|---|---|
| Test 1 | No warming period | No |
| Test 2 | No microscope enclosure and incandescent room lighting | Yes |
| Test 3 | No microscope enclosure and LED room lighting | Yes |
| Test 4 | Exposure time change: 8.991 ms | No |
| Test 5 | Exposure time change: 2.996 ms | No |
| Test 6 | Medium light intensity | No |
| Test 7 | Protocol fully respected | Yes |
| Test 8 | Medium light intensity, exposure time of 8.991 ms | No |

| Image | Mean R Intensity Value | Mean G Intensity Value | Mean B Intensity Value |
|---|---|---|---|
| A | 50.55 ± 30.61 | 48.52 ± 29.95 | 26.94 ± 21.13 |
| B | 49.30 ± 30.27 | 47.37 ± 29.61 | 25.36 ± 20.22 |
| C | 48.96 ± 30.17 | 46.80 ± 29.34 | 26.41 ± 20.60 |
| D | 48.97 ± 30.09 | 46.77 ± 29.26 | 26.80 ± 21.01 |
| E | 45.99 ± 35.38 | 38.05 ± 29.63 | 19.76 ± 17.80 |
| F | 42.43 ± 32.52 | 41.34 ± 32.10 | 27.08 ± 24.33 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Back, A.L.; Bédard, L.P.; Maitre, J.; Bouchard, K. From Rocks to Pixels: A Protocol for Reproducible Mineral Imaging and its Applications in Machine Learning. Minerals 2024, 14, 51. https://doi.org/10.3390/min14010051

Back AL, Bédard LP, Maitre J, Bouchard K. From Rocks to Pixels: A Protocol for Reproducible Mineral Imaging and its Applications in Machine Learning. Minerals. 2024; 14(1):51. https://doi.org/10.3390/min14010051

Chicago/Turabian Style: Back, Arnaud L., L. Paul Bédard, Julien Maitre, and Kévin Bouchard. 2024. "From Rocks to Pixels: A Protocol for Reproducible Mineral Imaging and its Applications in Machine Learning" Minerals 14, no. 1: 51. https://doi.org/10.3390/min14010051