A Rotational Invariant Neural Network for Electrical Impedance Tomography Imaging without Reference Voltage: RF-REIM-NET

Abstract

:1. Introduction

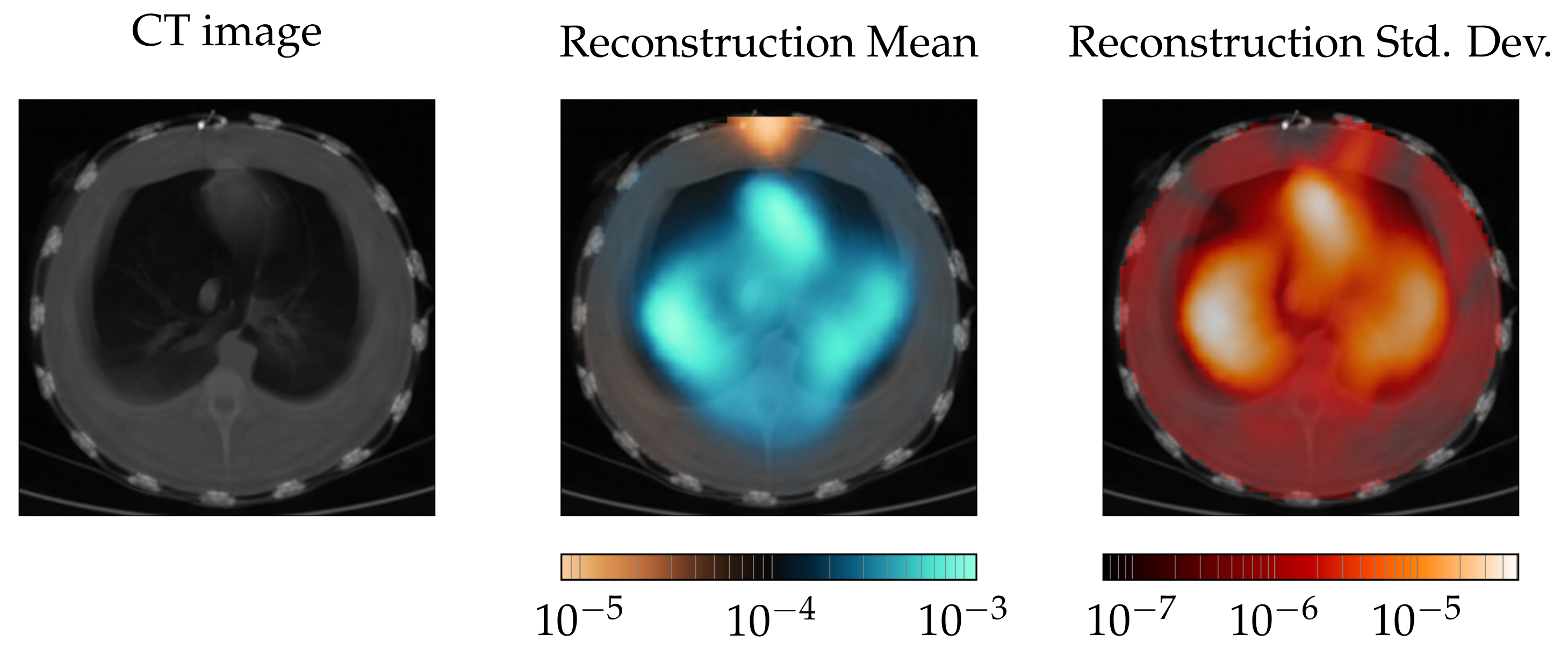

- We use real-world animal trial data with CT references to confirm that RF-REIM-NET gives meaningful results in such a setting.

- Our training data are unbiased, as we used a conductivity range bigger than what is expected in the thorax region and did not try to model the conductivity distributions typically encountered in the thorax region.

- We present a method for time-effective data augmentation using the existing training data.

- Even though RF-REIM-NET uses fully connected layers, it still preserves the rotational invariance of adjacent measurements.

2. Materials and Methods

2.1. Fundamentals

2.2. Electrical Impedance Maps

2.3. Training Data Set

2.3.1. Basic Object Shapes

2.3.2. Transformation of the Basic Objects

2.3.3. Conductivity Range

2.3.4. Electrode Contact Impedance

2.3.5. Measurement Noise

2.3.6. Rotation of the Data

2.3.7. Alpha-Blending

2.3.8. Conclusion on Trainign Dataset

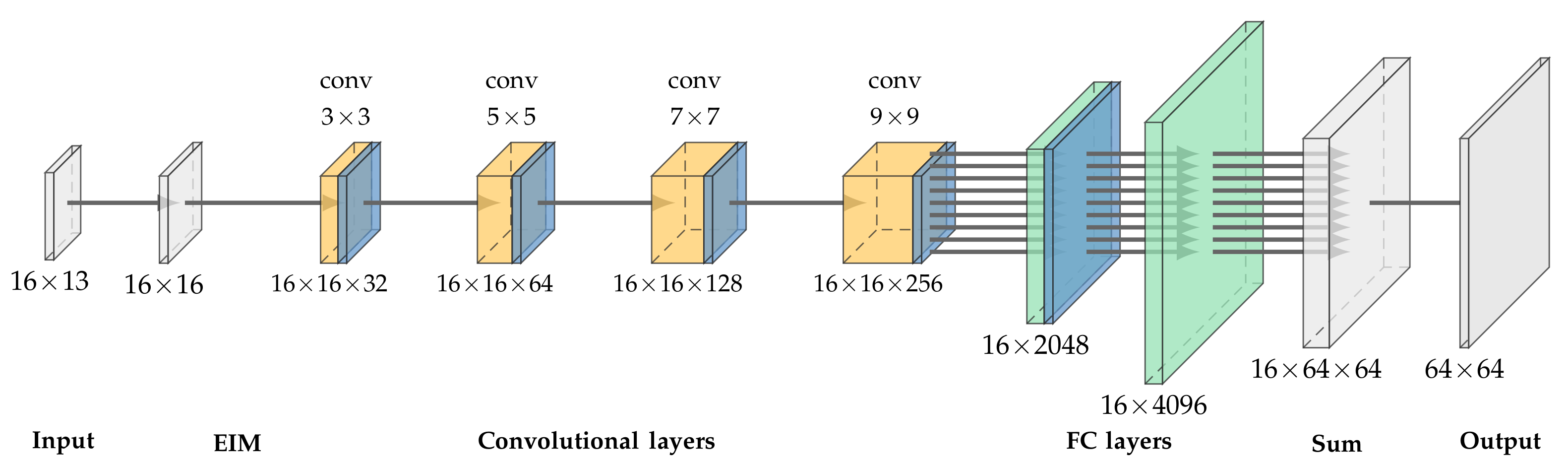

2.4. On the ANN Structure

2.5. Training of the Neural Network

2.6. Evaluating RF-REIM-NET

2.6.1. Amplitude Response (AR)

2.6.2. Position Error (PE)

denotes the x-component of the center of gravity,

denotes the x-component of the center of gravity,  denotes the y-component accordingly, is the ground truth x-position and the ground truth y-position. The mean and the std of the PE should be low.

denotes the y-component accordingly, is the ground truth x-position and the ground truth y-position. The mean and the std of the PE should be low.2.6.3. Ringing (RNG)

2.7. Evaluation Data

2.7.1. FEM Data

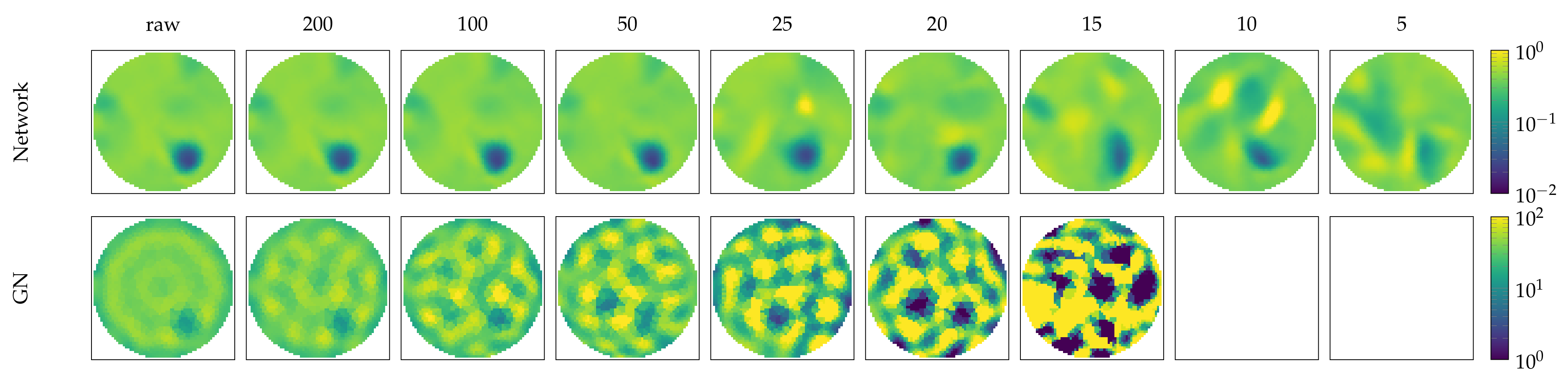

2.7.2. Noise Performance on FEM Data

2.7.3. Tank Data

2.7.4. Experimental Data

3. Results

3.1. Noise Comparison on Simulated Data

3.2. Tank Results

3.3. Experimental Data

3.4. Discussion

4. Conclusions and Outlook

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| EIT | Electrical Impedance Tomography |

| FEM | Finite Element Method |

| ANN | Artifical Neural Network |

| EIM | Electrical Impedance Map |

| GN | Gauss–Newton |

| AR | Amplitude Response |

| PE | Position Error |

| RNG | Ringing |

References

- Hallaji, M.; Seppänen, A.; Pour-Ghaz, M. Electrical impedance tomography-based sensing skin for quantitative imaging of damage in concrete. Smart Mater. Struct. 2014, 23, 085001. [Google Scholar] [CrossRef]

- Kruger, M.; Poolla, K.; Spanos, C.J. A class of impedance tomography based sensors for semiconductor manufacturing. In Proceedings of the 2004 American Control Conference, Boston, MA, USA, 30 June–2 July 2004; Volume 3, pp. 2178–2183. [Google Scholar]

- Yang, Y.; Wu, H.; Jia, J.; Bagnaninchi, P.O. Scaffold-based 3-D cell culture imaging using a miniature electrical impedance tomography sensor. IEEE Sens. J. 2019, 19, 9071–9080. [Google Scholar] [CrossRef] [Green Version]

- Meier, T.; Luepschen, H.; Karsten, J.; Leibecke, T.; Großherr, M.; Gehring, H.; Leonhardt, S. Assessment of regional lung recruitment and derecruitment during a PEEP trial based on electrical impedance tomography. Intensive Care Med. 2008, 34, 543–550. [Google Scholar] [CrossRef] [PubMed]

- Hentze, B.; Muders, T.; Luepschen, H.; Maripuu, E.; Hedenstierna, G.; Putensen, C.; Walter, M.; Leonhardt, S. Regional lung ventilation and perfusion by electrical impedance tomography compared to single-photon emission computed tomography. Physiol. Meas. 2018, 39, 065004. [Google Scholar] [CrossRef]

- Abascal, J.F.P.; Arridge, S.R.; Atkinson, D.; Horesh, R.; Fabrizi, L.; De Lucia, M.; Horesh, L.; Bayford, R.H.; Holder, D.S. Use of anisotropic modelling in electrical impedance tomography; description of method and preliminary assessment of utility in imaging brain function in the adult human head. Neuroimage 2008, 43, 258–268. [Google Scholar] [CrossRef]

- Leonhardt, S.; Cordes, A.; Plewa, H.; Pikkemaat, R.; Soljanik, I.; Moehring, K.; Gerner, H.J.; Rupp, R. Electric impedance tomography for monitoring volume and size of the urinary bladder. Biomed. Eng./Biomed. Tech. 2011, 56, 301–307. [Google Scholar] [CrossRef]

- Hong, S.; Lee, K.; Ha, U.; Kim, H.; Lee, Y.; Kim, Y.; Yoo, H.J. A 4.9 mΩ-sensitivity mobile electrical impedance tomography IC for early breast-cancer detection system. IEEE J. Solid-State Circuits 2014, 50, 245–257. [Google Scholar] [CrossRef]

- Putensen, C.; Hentze, B.; Muenster, S.; Muders, T. Electrical impedance tomography for cardio-pulmonary monitoring. J. Clin. Med. 2019, 8, 1176. [Google Scholar] [CrossRef] [Green Version]

- Costa, E.L.; Lima, R.G.; Amato, M.B. Electrical impedance tomography. In Yearbook of Intensive Care and Emergency Medicine; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2009; pp. 394–404. [Google Scholar]

- Borsic, A.; Graham, B.M.; Adler, A.; Lionheart, W.R. Total Variation Regularization in Electrical Impedance Tomography. 2007. Available online: http://eprints.maths.manchester.ac.uk/id/eprint/813 (accessed on 10 February 2022).

- Vauhkonen, M.; Vadasz, D.; Karjalainen, P.A.; Somersalo, E.; Kaipio, J.P. Tikhonov regularization and prior information in electrical impedance tomography. IEEE Trans. Med. Imaging 1998, 17, 285–293. [Google Scholar] [CrossRef]

- Kaipio, J.P.; Kolehmainen, V.; Somersalo, E.; Vauhkonen, M. Statistical inversion and Monte Carlo sampling methods in electrical impedance tomography. Inverse Probl. 2000, 16, 1487. [Google Scholar] [CrossRef]

- Kolehmainen, V.; Somersalo, E.; Vauhkonen, P.; Vauhkonen, M.; Kaipio, J. A Bayesian approach and total variation priors in 3D electrical impedance tomography. In Proceedings of the 20th Annual International Conference of the IEEE Engineering in Medicine and Biology Society. Volume 20 Biomedical Engineering Towards the Year 2000 and Beyond (Cat. No. 98CH36286), Hong Kong, China, 1 November 1998; IEEE: Washington, DC, USA, 1998; Volume 2, pp. 1028–1031. [Google Scholar]

- Isaacson, D.; Mueller, J.L.; Newell, J.C.; Siltanen, S. Reconstructions of chest phantoms by the D-bar method for electrical impedance tomography. IEEE Trans. Med. Imaging 2004, 23, 821–828. [Google Scholar] [CrossRef]

- Adler, A.; Lionheart, W.R. Uses and abuses of EIDORS: An extensible software base for EIT. Physiol. Meas. 2006, 27, S25. [Google Scholar] [CrossRef] [Green Version]

- Kłosowski, G.; Rymarczyk, T. Using neural networks and deep learning algorithms in electrical impedance tomography. Informatyka Automatyka Pomiary w Gospodarce i Ochronie Środowiska 2017, 7. [Google Scholar] [CrossRef]

- Hamilton, S.J.; Hauptmann, A. Deep D-bar: Real-time electrical impedance tomography imaging with deep neural networks. IEEE Trans. Med. Imaging 2018, 37, 2367–2377. [Google Scholar] [CrossRef] [Green Version]

- Hu, D.; Lu, K.; Yang, Y. Image reconstruction for electrical impedance tomography based on spatial invariant feature maps and convolutional neural network. In Proceedings of the 2019 IEEE International Conference on Imaging Systems and Techniques (IST), Abu Dhabi, United Arab Emirates, 9–10 December 2019; pp. 1–6. [Google Scholar]

- Tan, C.; Lv, S.; Dong, F.; Takei, M. Image reconstruction based on convolutional neural network for electrical resistance tomography. IEEE Sens. J. 2018, 19, 196–204. [Google Scholar] [CrossRef]

- Paullada, A.; Raji, I.D.; Bender, E.M.; Denton, E.; Hanna, A. Data and its (dis) contents: A survey of dataset development and use in machine learning research. arXiv 2020, arXiv:2012.05345. [Google Scholar] [CrossRef]

- Hasgall, P.; Di Gennaro, F.; Baumgartner, C.; Neufeld, E.; Lloyd, B.; Gosselin, M.; Payne, D.; Klingenböck, A.; Kuster, N. IT’IS Database for Thermal and Electromagnetic Parameters of Biological Tissues; Version 4.0, 15 May 2018; Technical Report, VIP21000-04-0. itis. swiss/database; ScienceOpen, Inc.: Burlington, MA, USA, 2018. [Google Scholar]

- Talman, A.; Chatzikyriakidis, S. Testing the generalization power of neural network models across NLI benchmarks. arXiv 2018, arXiv:1810.09774. [Google Scholar]

- De Teyou, G.K.; Petit, H.; Loumeau, P.; Fakhoury, H.; Le Guillou, Y.; Paquelet, S. Statistical analysis of noise in broadband and high resolution ADCs. In Proceedings of the 2014 21st IEEE International Conference on Electronics, Circuits and Systems (ICECS), Marseille, France, 7–10 December 2014; pp. 490–493. [Google Scholar]

- Frangi, A.; Rosell, J. A theoretical analysis of noise in electrical impedance tomographic images. In Proceedings of the 19th Annual International Conference of the IEEE Engineering in Medicine and Biology Society. ‘Magnificent Milestones and Emerging Opportunities in Medical Engineering’ (Cat. No. 97CH36136), Chicago, IL, USA, 30 October–2 November 1997; Volume 1, pp. 433–436. [Google Scholar]

- Porter, T.; Duff, T. Compositing digital images. In Proceedings of the 11th Annual Conference on Computer Graphics and Interactive Techniques, Minneapolis, MN, USA, 23–27 July 1984; pp. 253–259. [Google Scholar]

- Inoue, H. Data augmentation by pairing samples for images classification. arXiv 2018, arXiv:1801.02929. [Google Scholar]

- Zhang, H.; Cisse, M.; Dauphin, Y.N.; Lopez-Paz, D. mixup: Beyond empirical risk minimization. arXiv 2017, arXiv:1710.09412. [Google Scholar]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105. [Google Scholar] [CrossRef]

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556. [Google Scholar]

- Luo, W.; Li, Y.; Urtasun, R.; Zemel, R. Understanding the effective receptive field in deep convolutional neural networks. In Proceedings of the 30th International Conference on Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 4905–4913. [Google Scholar]

- Azulay, A.; Weiss, Y. Why do deep convolutional networks generalize so poorly to small image transformations? J. Mach. Learn. Res. 2019, 20, 1–25. [Google Scholar]

- Zhang, R. Making convolutional networks shift-invariant again. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 7324–7334. [Google Scholar]

- Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958. [Google Scholar]

- Liu, J.; Sun, Y.; Xu, X.; Kamilov, U.S. Image restoration using total variation regularized deep image prior. In Proceedings of the ICASSP 2019-2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 7715–7719. [Google Scholar]

- Abadi, M.; Agarwal, A.; Barham, P.; Brevdo, E.; Chen, Z.; Citro, C.; Corrado, G.S.; Davis, A.; Dean, J.; Devin, M.; et al. TensorFlow: Large-Scale Machine Learning on Heterogeneous Systems, 2015. Software. Available online: tensorflow.org (accessed on 10 February 2022).

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, 7–9 May 2015; Conference Track Proceedings. Bengio, Y., LeCun, Y., Eds.; DBLP: Trier, Germany, 2015. [Google Scholar]

- Adler, A.; Arnold, J.H.; Bayford, R.; Borsic, A.; Brown, B.; Dixon, P.; Faes, T.J.; Frerichs, I.; Gagnon, H.; Gärber, Y.; et al. GREIT: A unified approach to 2D linear EIT reconstruction of lung images. Physiol. Meas. 2009, 30, S35. [Google Scholar] [CrossRef] [PubMed]

- Muders, T.; Luepschen, H.; Meier, T.; Reske, A.W.; Zinserling, J.; Kreyer, S.; Pikkemaat, R.; Maripu, E.; Leonhardt, S.; Hedenstierna, G.; et al. Individualized positive end-expiratory pressure and regional gas exchange in porcine lung injury. Anesthesiology 2020, 132, 808–824. [Google Scholar] [CrossRef] [PubMed]

| Algorithm/Metric | AR | PE | RNG |

|---|---|---|---|

| GN | |||

| RF-REIM-NET |

| Algorithm/Metric | AR | PE | RNG |

|---|---|---|---|

| GN | |||

| RF-REIM-NET |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Rixen, J.; Eliasson, B.; Hentze, B.; Muders, T.; Putensen, C.; Leonhardt, S.; Ngo, C. A Rotational Invariant Neural Network for Electrical Impedance Tomography Imaging without Reference Voltage: RF-REIM-NET. Diagnostics 2022, 12, 777. https://doi.org/10.3390/diagnostics12040777

Rixen J, Eliasson B, Hentze B, Muders T, Putensen C, Leonhardt S, Ngo C. A Rotational Invariant Neural Network for Electrical Impedance Tomography Imaging without Reference Voltage: RF-REIM-NET. Diagnostics. 2022; 12(4):777. https://doi.org/10.3390/diagnostics12040777

Chicago/Turabian StyleRixen, Jöran, Benedikt Eliasson, Benjamin Hentze, Thomas Muders, Christian Putensen, Steffen Leonhardt, and Chuong Ngo. 2022. "A Rotational Invariant Neural Network for Electrical Impedance Tomography Imaging without Reference Voltage: RF-REIM-NET" Diagnostics 12, no. 4: 777. https://doi.org/10.3390/diagnostics12040777

APA StyleRixen, J., Eliasson, B., Hentze, B., Muders, T., Putensen, C., Leonhardt, S., & Ngo, C. (2022). A Rotational Invariant Neural Network for Electrical Impedance Tomography Imaging without Reference Voltage: RF-REIM-NET. Diagnostics, 12(4), 777. https://doi.org/10.3390/diagnostics12040777