Safety Risk Recognition Method Based on Abnormal Scenarios

Abstract

1. Introduction

2. Related Research

2.1. Abnormal Scenario Detection Methods

2.2. Construction Risk Scenario Detection Methods

3. Proposed Method

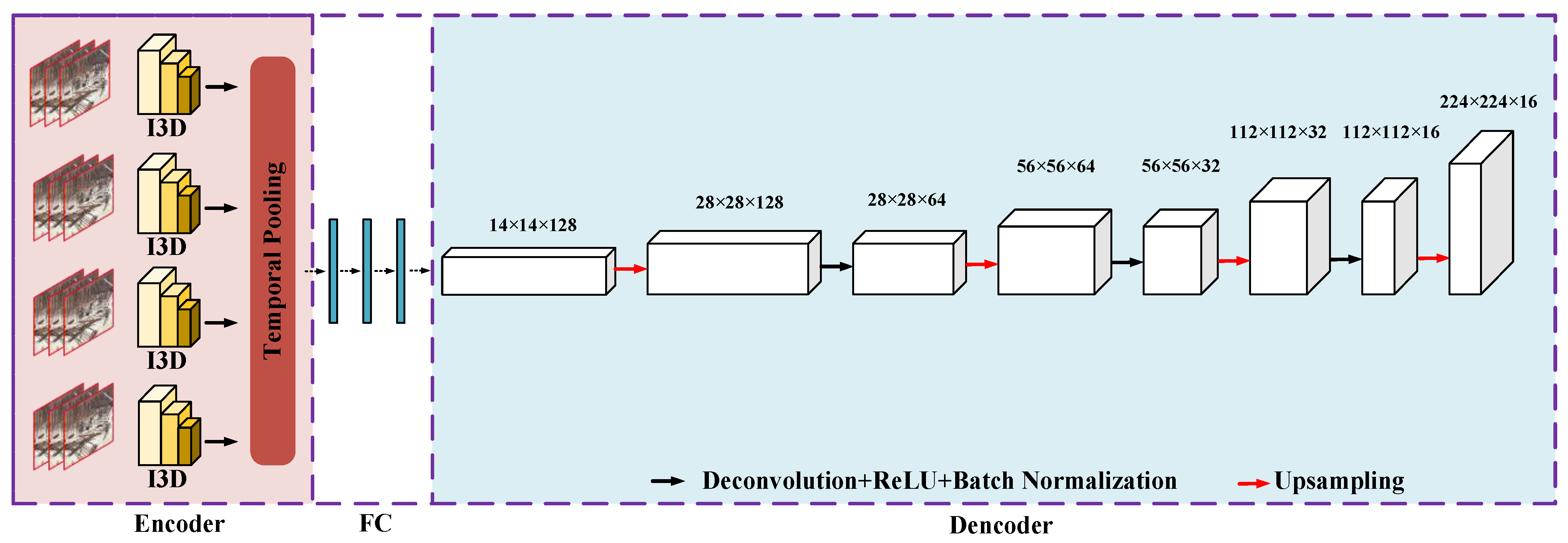

3.1. I3D-AE Video Prediction Model

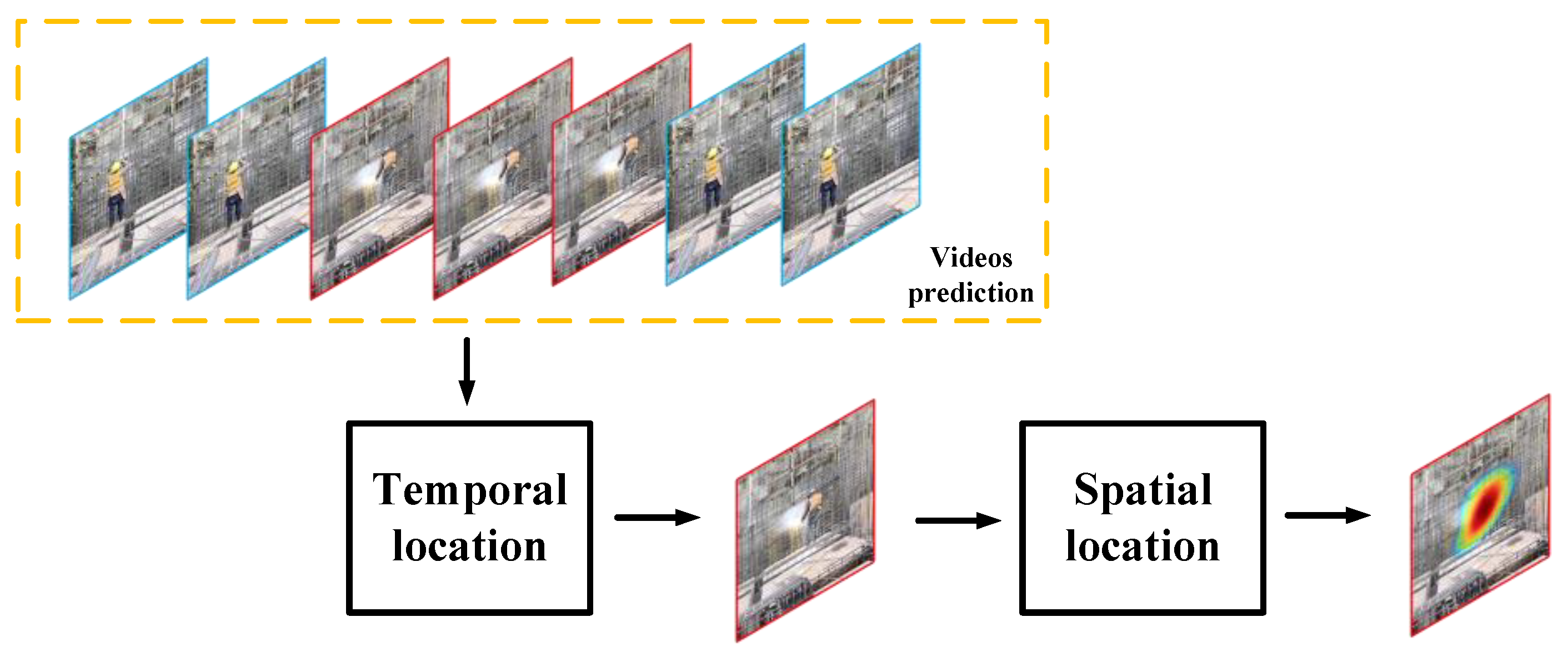

3.2. Spatial and Temporal Locating

3.2.1. Abnormal Scenario Spatial Localization

3.2.2. Abnormal Scenario Temporal Localization

4. Experiments

4.1. Construction Site Dataset

4.2. Evaluation Criteria

- (1) Pixel level

- (2) Frame level

4.3. Implementation Details

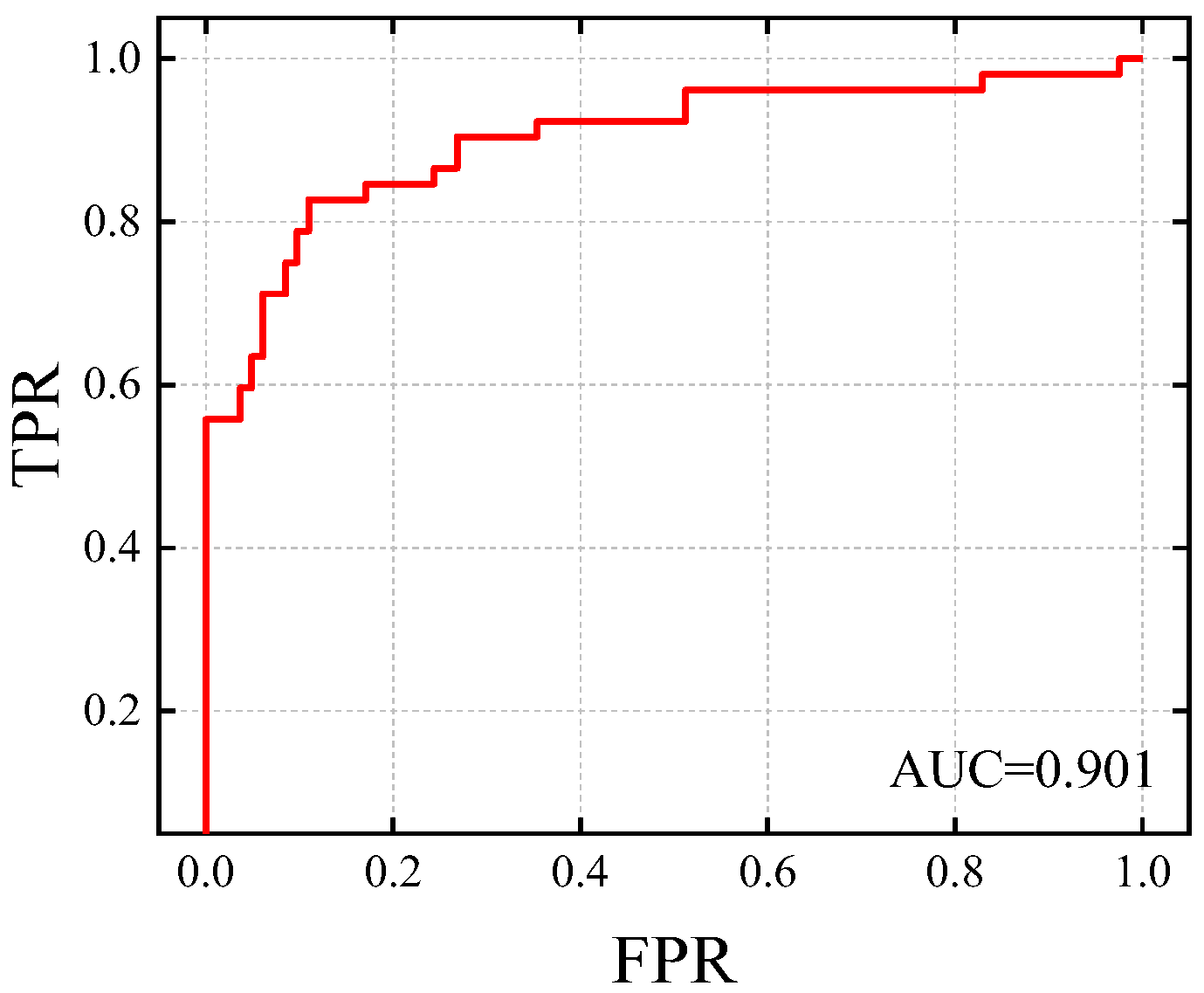

5. Experimental Results

5.1. Visualization of Spatial Localization Results

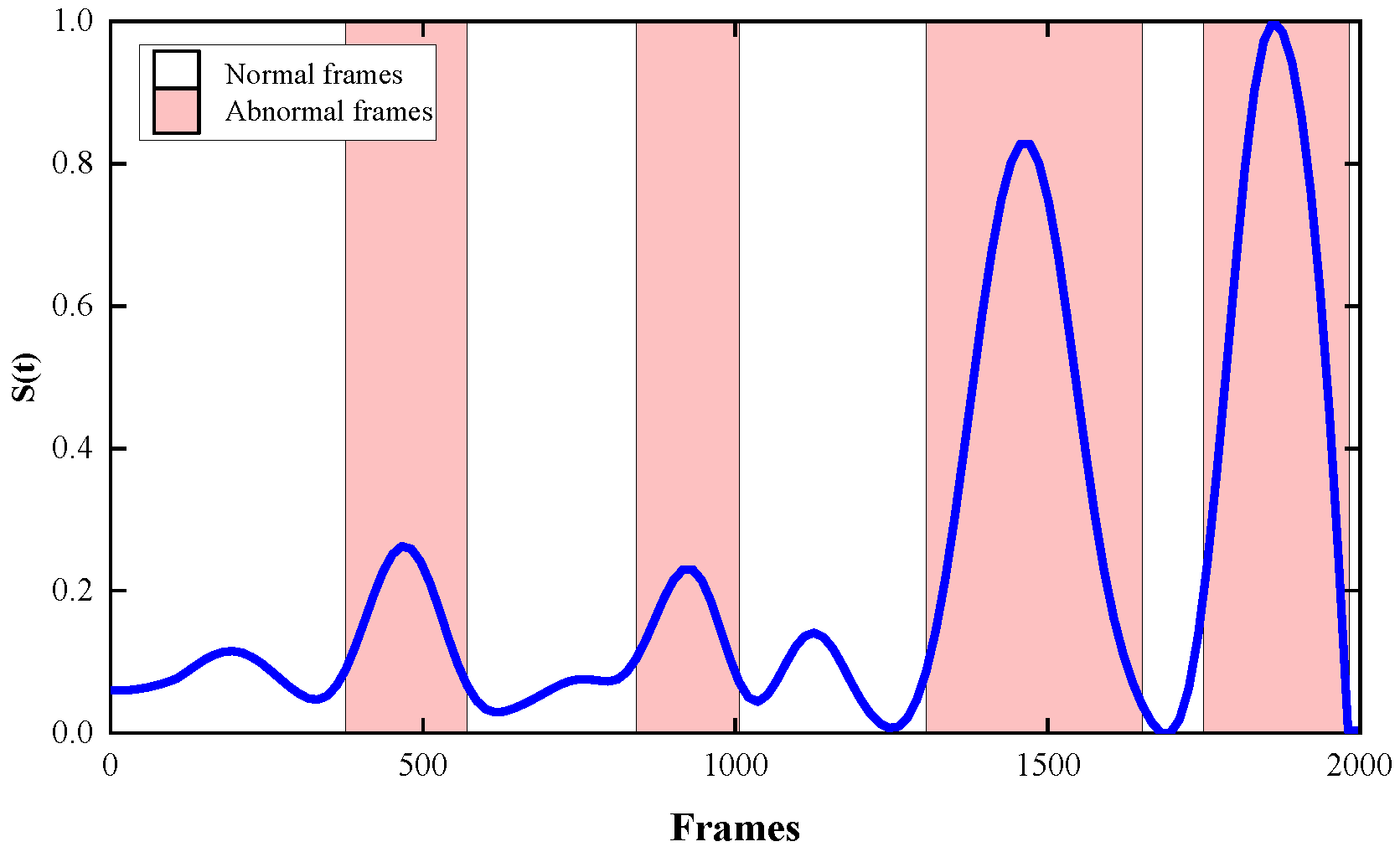

5.2. Temporal Localization Results

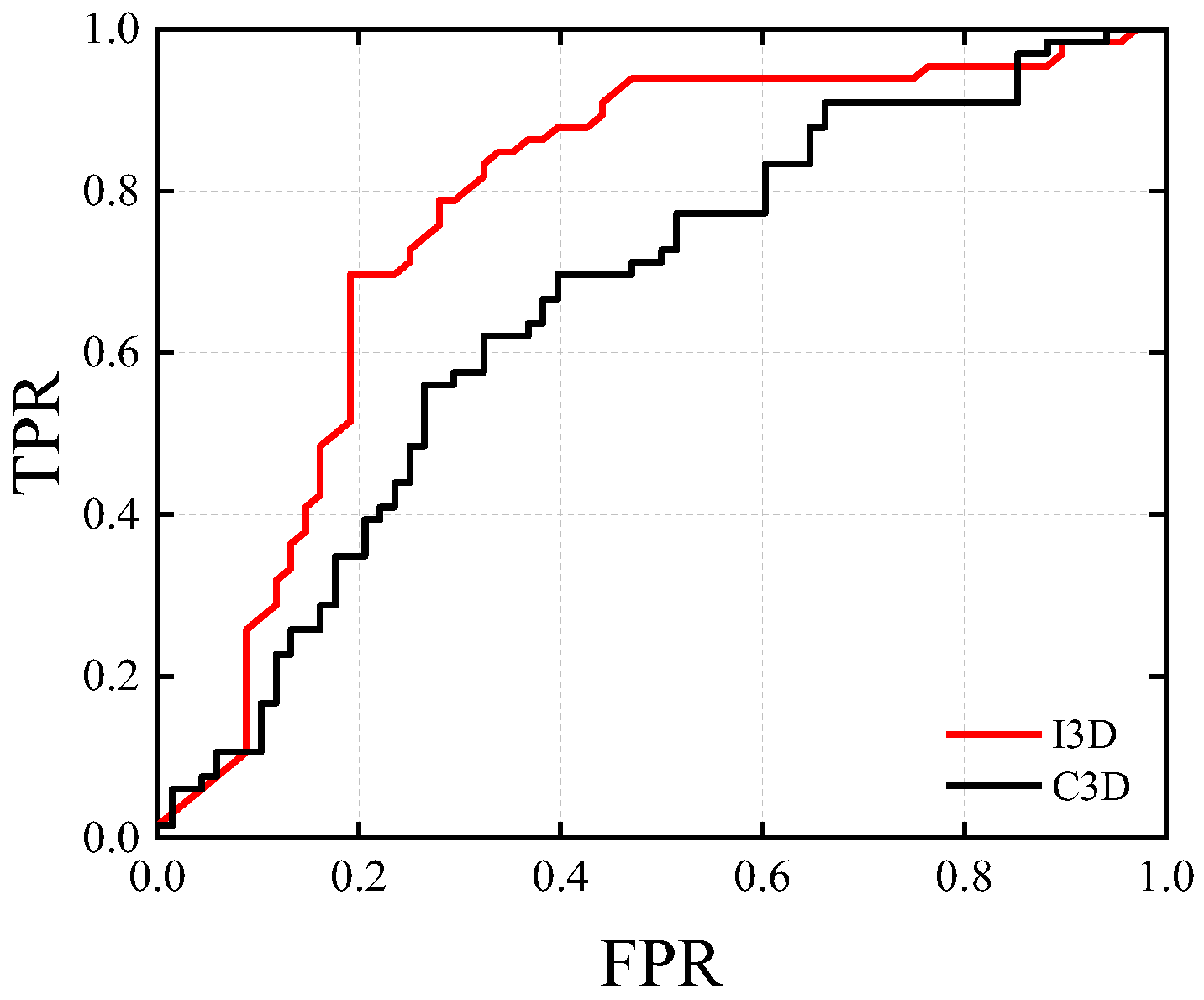

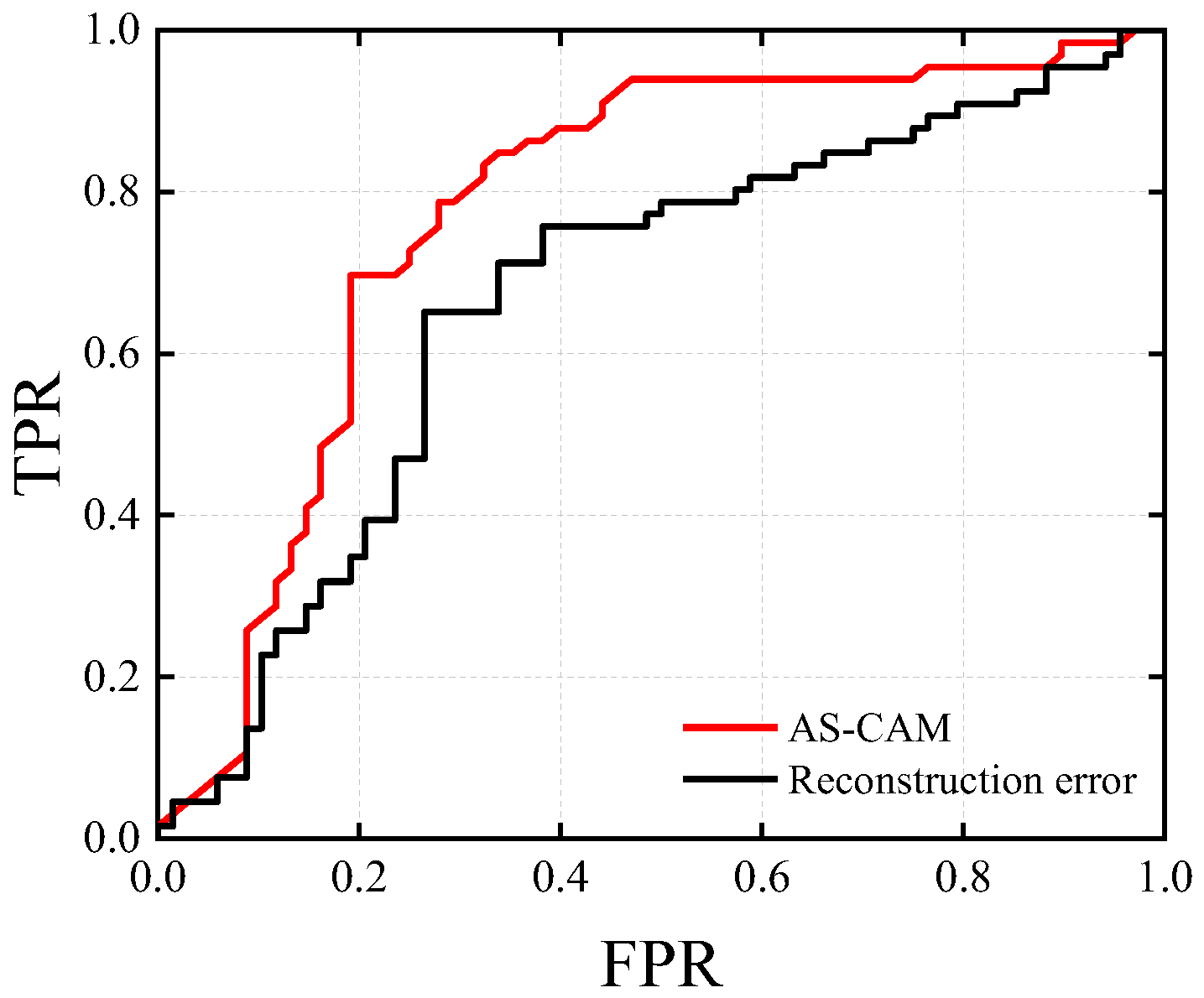

5.3. Ablation Experiments

6. Discussion

- (a) This study investigated the automatic recognition of potential safety risks at construction sites. The underlying principle is that risk scenes are inherently hard to predict: a video prediction model is applied to the footage, and a scene in which the model's prediction shows anomalies is treated as potentially risky.

- (b) Compared with traditional manual safety risk recognition, the proposed method is automated. Compared with existing techniques based on intelligent algorithms, it does not require building a database of risk scenes, which makes it lightweight.

- (c) The proposed method offers a new perspective on risk scenario detection: it is not necessary to target only specific safety risks, since undirected detection can still be effective for risk recognition.
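The principle in point (a), that a poorly predicted frame signals a possible risk, can be illustrated with a minimal sketch. It is not the I3D-AE model or the paper's temporal parameter: the mean squared error, the min-max normalisation, the threshold value, and the function names `frame_error` and `flag_abnormal` are all assumptions chosen for illustration.

```python
from typing import List, Sequence

# A grayscale frame as rows of pixel intensities (hypothetical toy format).
Frame = Sequence[Sequence[float]]


def frame_error(predicted: Frame, actual: Frame) -> float:
    """Mean squared error between a predicted frame and the observed frame."""
    total = 0.0
    count = 0
    for row_p, row_a in zip(predicted, actual):
        for p, a in zip(row_p, row_a):
            total += (p - a) ** 2
            count += 1
    return total / count


def flag_abnormal(errors: Sequence[float], threshold: float) -> List[bool]:
    """Min-max normalise the errors over the clip and flag frames above the threshold."""
    lo, hi = min(errors), max(errors)
    span = hi - lo
    if span == 0:
        # Every frame was predicted equally well, so nothing stands out.
        return [False] * len(errors)
    return [(e - lo) / span > threshold for e in errors]


# Toy clip: the third observed frame differs sharply from what was predicted.
actual = [
    [[0.0, 0.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 0.0]],
    [[1.0, 1.0], [1.0, 1.0]],
]
predicted = [
    [[0.0, 0.0], [0.0, 0.0]],
    [[0.1, 0.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 0.0]],
]
errors = [frame_error(p, a) for p, a in zip(predicted, actual)]
flags = flag_abnormal(errors, threshold=0.5)  # threshold is an assumed value
```

In this toy clip only the last frame is flagged, because its prediction error dominates the normalised scores.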

7. Conclusions

- A new abnormal scenario detection method for construction sites was proposed, comprising a video prediction model (I3D-AE), a spatial information module (AS-CAM), and a temporal information parameter, St;

- Abnormal areas are located using a weighted saliency map in place of the reconstruction error, which gives good localization even in complex images;

- The method was validated on a construction site dataset, and the results indicate that it performs at an advanced level on construction site footage;

- The proposed approach recognizes multiple unknown risks rather than specific risk scenarios, avoids building a database, and saves computing resources.
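The idea of a weighted saliency map can be sketched in the generic class-activation-map style: a weighted sum of feature maps, rectified and rescaled to [0, 1]. This is not AS-CAM itself, whose weighting scheme is not described in this section. The weights are treated as given inputs, and the name `weighted_saliency` is hypothetical.

```python
from typing import List, Sequence

Map2D = Sequence[Sequence[float]]


def weighted_saliency(feature_maps: Sequence[Map2D],
                      weights: Sequence[float]) -> List[List[float]]:
    """Generic CAM-style map: ReLU of the weighted sum of feature maps, scaled to [0, 1]."""
    height = len(feature_maps[0])
    width = len(feature_maps[0][0])
    saliency = [[0.0] * width for _ in range(height)]
    for fmap, weight in zip(feature_maps, weights):
        for i in range(height):
            for j in range(width):
                saliency[i][j] += weight * fmap[i][j]
    # Keep only positive evidence, as CAM-style methods usually do.
    saliency = [[max(v, 0.0) for v in row] for row in saliency]
    peak = max(max(row) for row in saliency)
    if peak > 0:
        saliency = [[v / peak for v in row] for row in saliency]
    return saliency


# Two toy 2 x 2 feature maps with assumed channel weights.
maps = [
    [[1.0, 0.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 2.0]],
]
saliency = weighted_saliency(maps, weights=[0.5, 1.0])
```

The brightest cells of the resulting map mark the candidate abnormal region; thresholding it would give a localization mask.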

Author Contributions

Funding

Conflicts of Interest


| | Actual Positive Samples | Actual Negative Samples |
|---|---|---|
| Predicted to be positive samples | TP | FP |
| Predicted to be negative samples | FN | TN |
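
The four confusion-matrix counts are typically turned into rates before comparison. The sketch below assumes the standard definitions (TP: predicted positive and actually positive; FP: predicted positive but actually negative; FN: predicted negative but actually positive; TN: predicted negative and actually negative). Which metrics the paper derives from the table is not shown in this section; the true positive rate and false positive rate are used here because they are the usual inputs to ROC-based evaluation, and the function name `rates` is hypothetical.

```python
from typing import Tuple


def rates(tp: int, fp: int, fn: int, tn: int) -> Tuple[float, float]:
    """Return (true positive rate, false positive rate) from confusion-matrix counts."""
    # TPR: share of actual positives that were predicted positive.
    tpr = tp / (tp + fn) if (tp + fn) else 0.0
    # FPR: share of actual negatives that were predicted positive.
    fpr = fp / (fp + tn) if (fp + tn) else 0.0
    return tpr, fpr


# Toy counts, e.g. frames (frame level) or pixels (pixel level).
tpr, fpr = rates(tp=8, fp=2, fn=2, tn=8)
```

The same function applies at either evaluation level; only the unit being counted changes.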

| Layer | Output Size | Kernel Size | Stride | Pad |
|---|---|---|---|---|
| FC | 14 × 14 × 128 | | | |
| Upsampling 1 | 28 × 28 × 128 | | | |
| DeConv 1 | 28 × 28 × 64 | 3 × 3 × 3 | 2 | 1 |
| Upsampling 2 | 56 × 56 × 64 | | | |
| DeConv 2 | 56 × 56 × 32 | 3 × 3 × 3 | 2 | 1 |
| Upsampling 3 | 112 × 112 × 32 | | | |
| DeConv 3 | 112 × 112 × 16 | 3 × 3 × 3 | 2 | 1 |
| Upsampling 4 | 224 × 224 × 16 | | | |
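
The output sizes in the layer table follow a simple pattern: each upsampling step doubles the spatial size and each DeConv step halves the channel count. The sketch below only reproduces that bookkeeping from the listed sizes. It does not model the kernel, stride, or padding columns, and the function name `trace_decoder` is hypothetical.

```python
from typing import List, Tuple

Shape = Tuple[int, int, int]  # (height, width, channels)


def trace_decoder(start: Shape = (14, 14, 128),
                  stages: int = 3) -> List[Tuple[str, Shape]]:
    """List the output shape after each decoder layer, following the table's pattern."""
    h, w, c = start
    shapes = [("FC", (h, w, c))]
    for i in range(1, stages + 1):
        h, w = 2 * h, 2 * w          # upsampling doubles height and width
        shapes.append((f"Upsampling {i}", (h, w, c)))
        c //= 2                      # DeConv halves the channels, as listed
        shapes.append((f"DeConv {i}", (h, w, c)))
    h, w = 2 * h, 2 * w              # final upsampling to the output resolution
    shapes.append((f"Upsampling {stages + 1}", (h, w, c)))
    return shapes


shapes = trace_decoder()
for name, shape in shapes:
    print(name, shape)
```

Starting from 14 × 14 × 128, four doublings give the 224 × 224 output resolution listed in the last row.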


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, Z.; Song, B.; Li, D. Safety Risk Recognition Method Based on Abnormal Scenarios. Buildings 2022, 12, 562. https://doi.org/10.3390/buildings12050562
