Moral Judgments of Human vs. AI Agents in Moral Dilemmas

Abstract

1. Introduction

1.1. Moral Judgments

1.2. The Dual-Process Theory of Moral Judgment

1.3. Artificial Intelligence as Moral Agents

1.4. Moral Judgments of Human versus AI Agents

1.5. The Current Research

2. Experiment 1

2.1. Materials and Methods

2.1.1. Participants

2.1.2. Materials

2.1.3. Design and Measures

2.2. Results

3. Experiment 2

3.1. Materials and Methods

3.1.1. Participants

3.1.2. Materials

3.1.3. Design and Measures

3.2. Results

4. Experiment 3

4.1. Materials and Methods

4.1.1. Participants

4.1.2. Materials

4.1.3. Design and Measures

4.2. Results

5. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


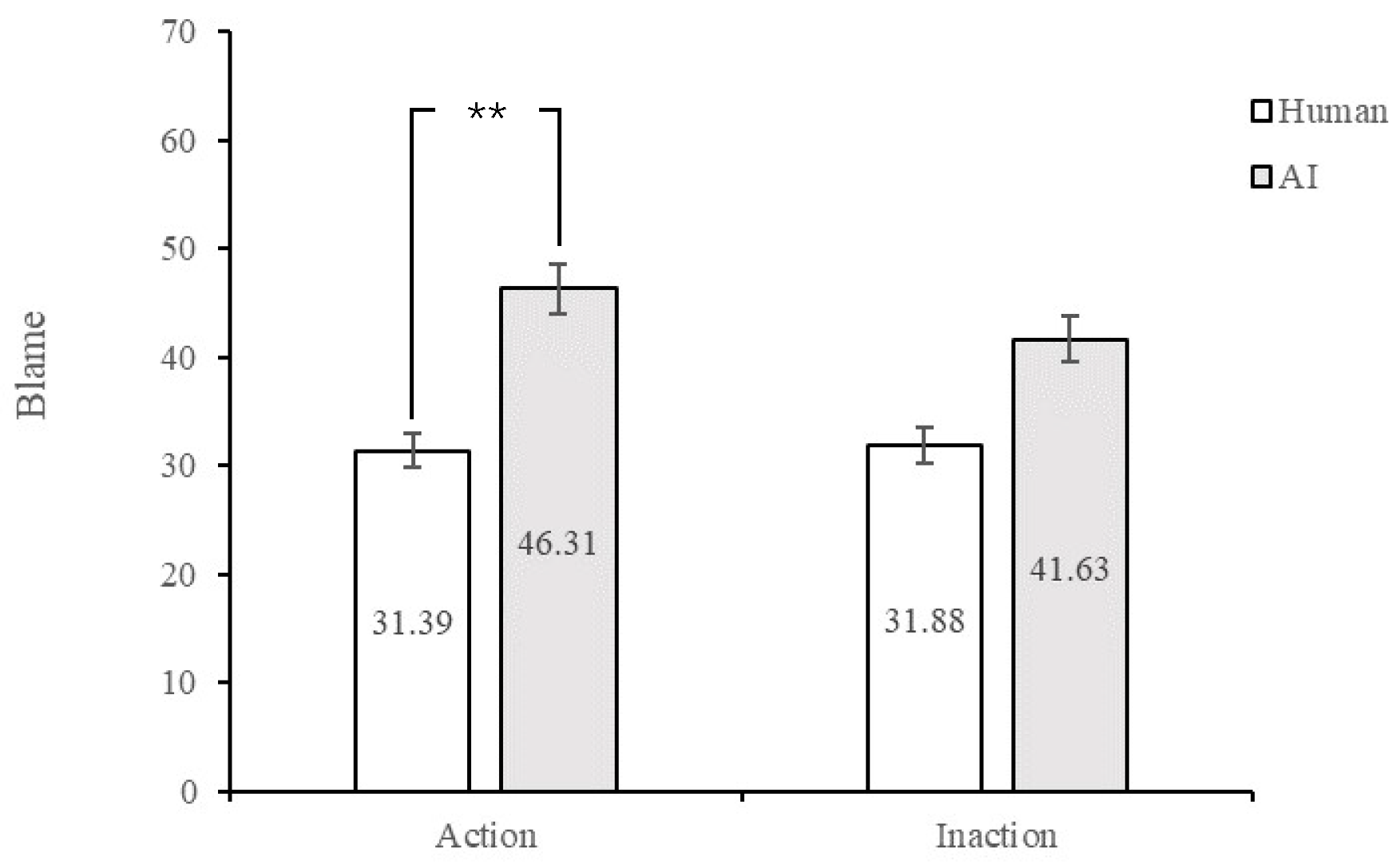

| Agent Type | Action | Morality | Permissibility | Wrongness | Blame |
|---|---|---|---|---|---|
| human agents | action | 60.88 ± 3.97 | 61.14 ± 3.97 | 44.63 ± 4.06 | 31.39 ± 3.78 |
| human agents | inaction | 62.49 ± 3.97 | 60.00 ± 3.97 | 44.04 ± 4.06 | 31.88 ± 3.78 |
| AI agents | action | 52.53 ± 3.90 | 58.04 ± 3.89 | 51.31 ± 3.98 | 46.31 ± 3.71 |
| AI agents | inaction | 53.74 ± 4.10 | 57.93 ± 4.10 | 46.41 ± 4.19 | 41.63 ± 3.90 |

| Effect | Significance markers (source order; column alignment not preserved) |
|---|---|
| agent type | *, ** |
| action | |
| agent type × action | |

| Agent Type | Action | Morality | Permissibility | Wrongness | Blame |
|---|---|---|---|---|---|
| human agents | action | 35.96 ± 4.27 | 40.90 ± 3.99 | 68.10 ± 4.02 | 52.33 ± 4.06 |
| human agents | inaction | 63.43 ± 4.23 | 59.39 ± 3.95 | 41.55 ± 3.97 | 39.20 ± 4.02 |
| AI agents | action | 39.09 ± 4.32 | 34.43 ± 4.03 | 61.21 ± 4.06 | 53.98 ± 4.11 |
| AI agents | inaction | 64.12 ± 4.19 | 67.38 ± 3.91 | 37.14 ± 3.93 | 36.90 ± 3.98 |
| agent type | | | | | |
| action | | ** | ** | ** | ** |
| agent type × action | | | | | |

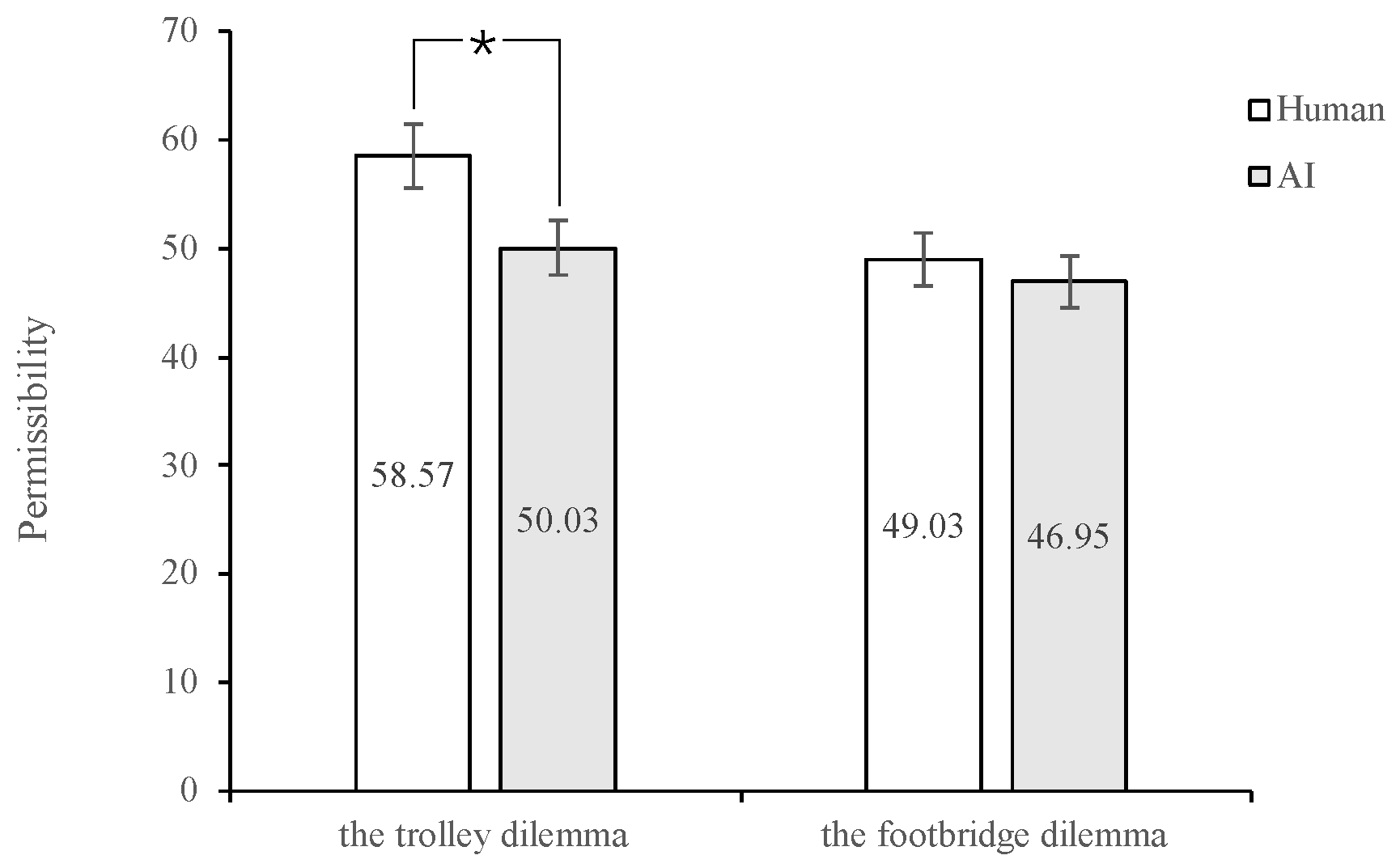

| Dilemma Type | Agent Type | Action | Morality | Permissibility | Wrongness | Blame |
|---|---|---|---|---|---|---|
| the trolley dilemma | human agents | action | 48.74 ± 3.42 | 54.46 ± 3.25 | 54.03 ± 3.46 | 47.67 ± 3.53 |
| the trolley dilemma | human agents | inaction | 60.42 ± 3.47 | 62.81 ± 3.31 | 40.36 ± 3.52 | 34.03 ± 3.59 |
| the trolley dilemma | AI agents | action | 45.66 ± 3.50 | 51.16 ± 3.34 | 48.31 ± 3.55 | 44.40 ± 3.62 |
| the trolley dilemma | AI agents | inaction | 53.17 ± 3.50 | 48.90 ± 3.34 | 47.47 ± 3.55 | 44.40 ± 3.62 |
| the footbridge dilemma | human agents | action | 33.87 ± 3.62 | 38.18 ± 3.47 | 65.36 ± 3.53 | 61.97 ± 3.47 |
| the footbridge dilemma | human agents | inaction | 59.78 ± 3.68 | 60.25 ± 3.53 | 46.71 ± 3.59 | 41.17 ± 3.53 |
| the footbridge dilemma | AI agents | action | 37.36 ± 3.71 | 40.90 ± 3.56 | 59.79 ± 3.62 | 56.86 ± 3.56 |
| the footbridge dilemma | AI agents | inaction | 56.19 ± 3.71 | 53.00 ± 3.56 | 44.69 ± 3.62 | 38.91 ± 3.56 |

| Effect | Significance markers (source order; column alignment not preserved) |
|---|---|
| dilemma type | **, **, **, ** |
| agent type | p = 0.060 |
| action | **, **, **, ** |
| dilemma type × agent type | p = 0.088, p = 0.061 |
| dilemma type × action | **, **, *, ** |
| agent type × action | p = 0.075 |
| dilemma type × agent type × action | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, Y.; Wu, J.; Yu, F.; Xu, L. Moral Judgments of Human vs. AI Agents in Moral Dilemmas. Behav. Sci. 2023, 13, 181. https://doi.org/10.3390/bs13020181
