Communication with Self-Growing Character to Develop Physically Growing Robot Toy Agent

Abstract

Featured Application

1. Introduction

2. Synthetic Character

2.1. Previous Research on Synthetic Characters

2.1.1. Synthetic Character Resembling an Animal (Around Year 2000)

- “Everyday common sense”

- “The ability to learn”

- “The sense of empathy”

2.1.2. Synthetic Characters in Real-World Applications

- Empathy

- Sympathy

2.2. Design Process of Robot Character in Application with Robot “Buddy”

2.2.1. Diary Studies for Collecting User Insight

- Facebook post: Message with the photo

- Instagram post: Digital photo with description

2.2.2. The Goal of Design: Position behind the Uncanny Valley

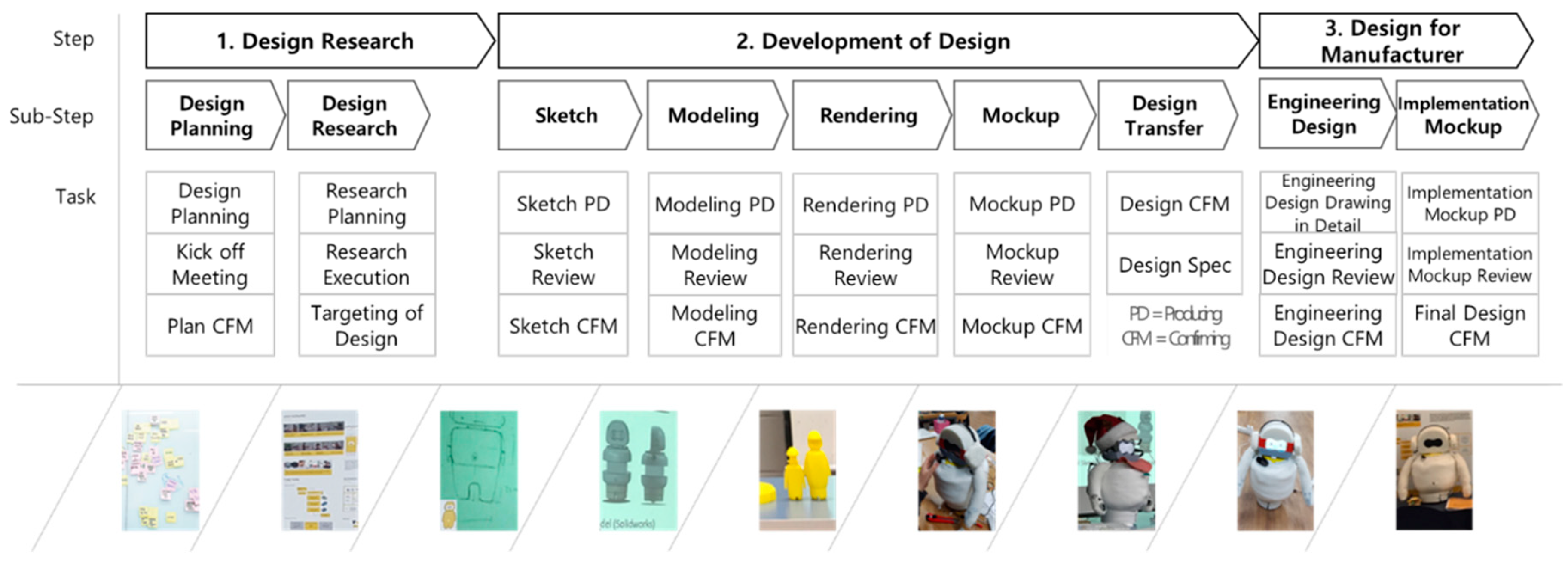

2.2.3. Total Design Process: From Design Research to Development and Implementation

3. Results

3.1. Design Result: Function of Communication

3.1.1. Data Network of the Robot Toy

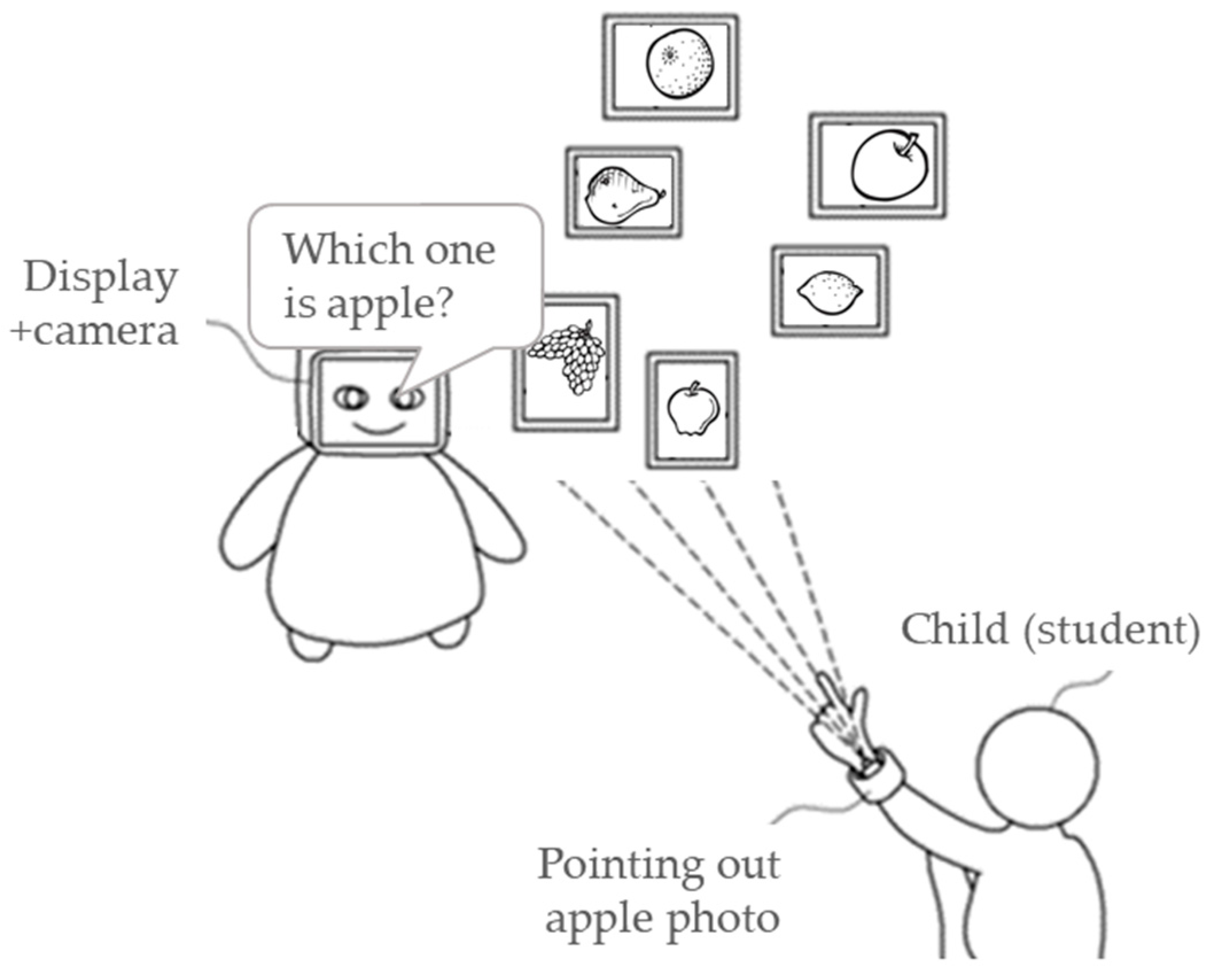

3.1.2. Communication between Robot and Child

3.2. Design Result: Function of Self-Growing

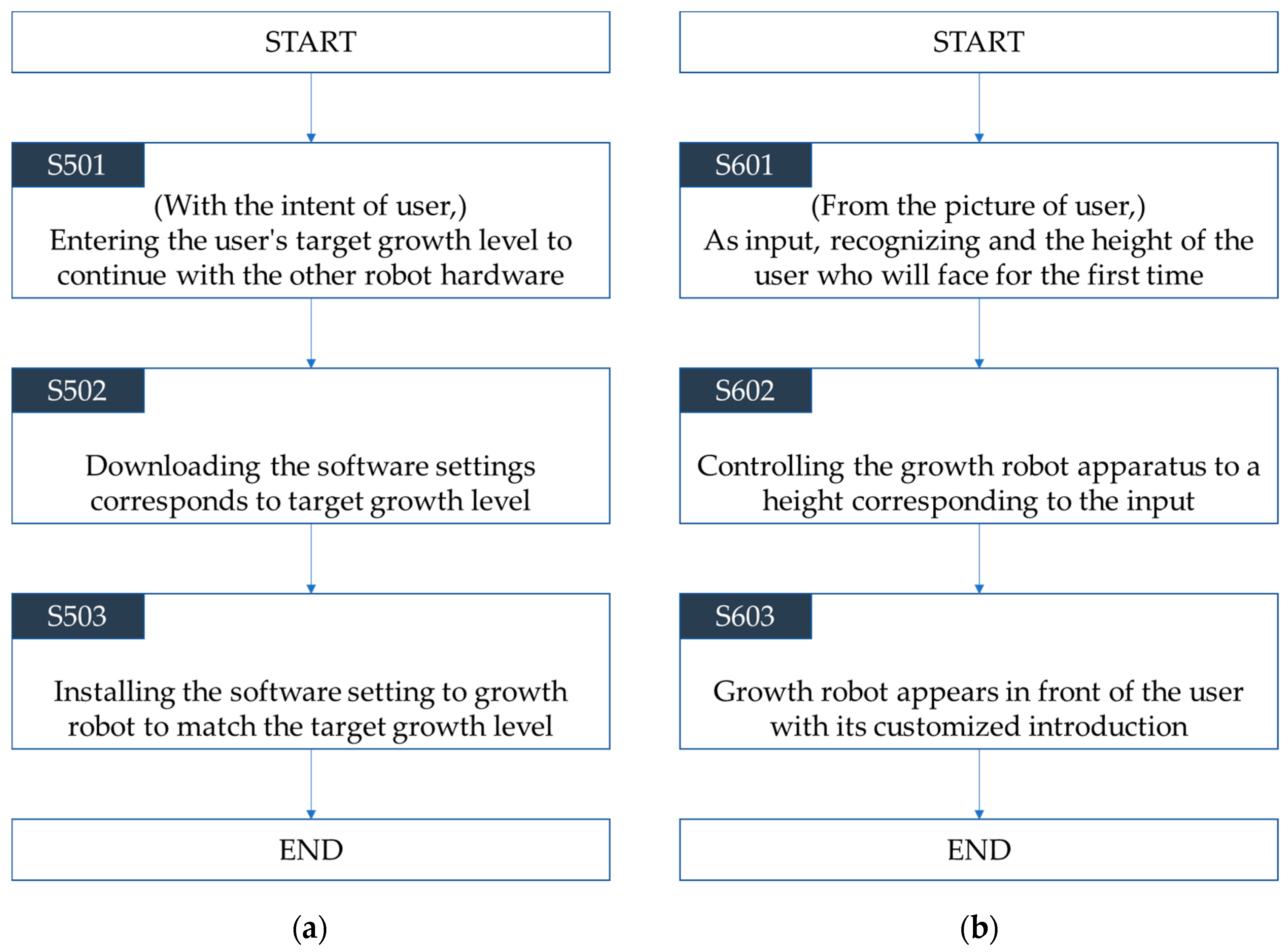

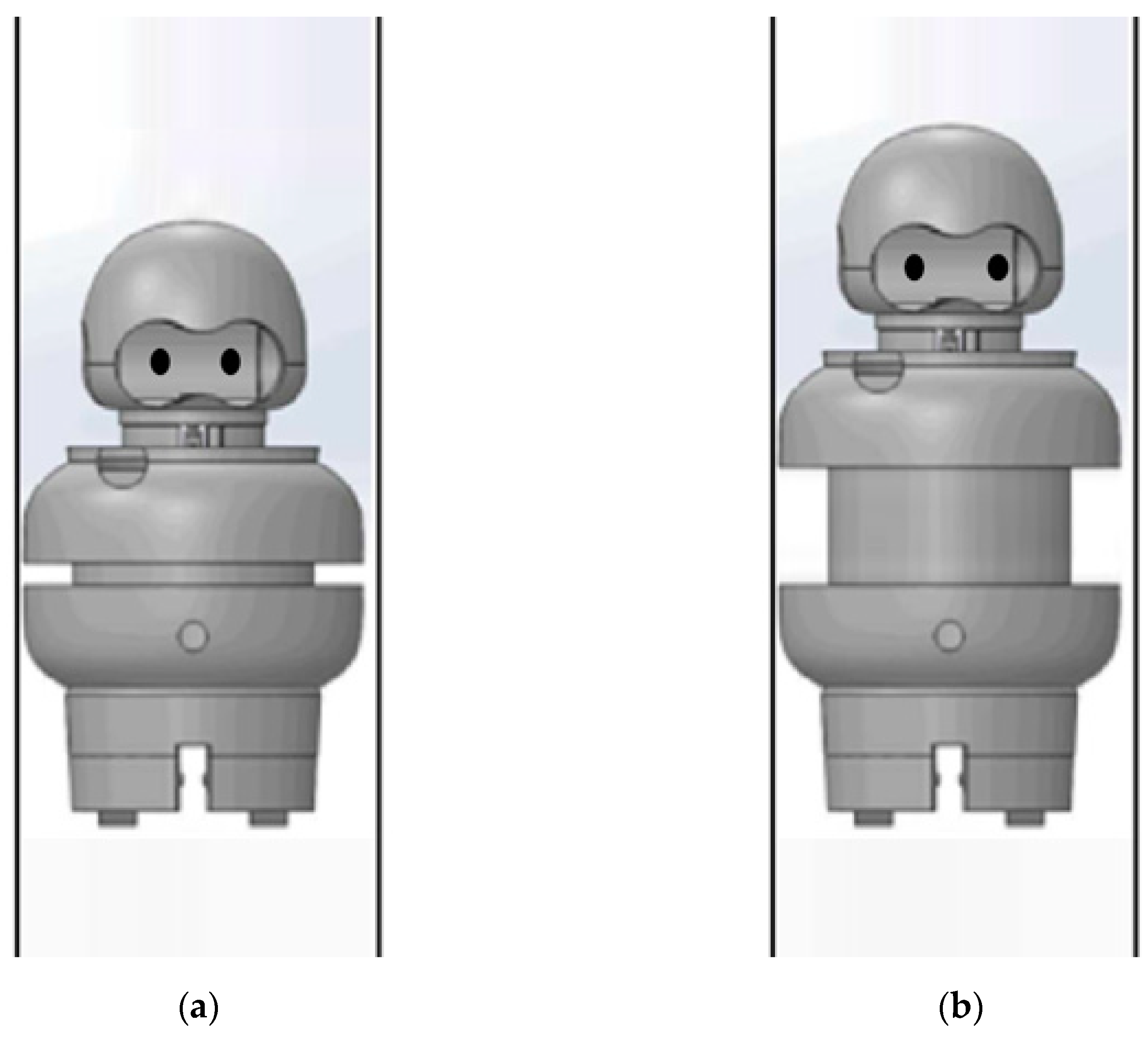

3.2.1. Growth Robot Implementation

3.2.2. Growth Robot Operation

4. Use Case Discussion

5. Conclusions

6. Patents

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Growth Over Age | 4 Month | 3 Year-Old | 8 Year-Old (TBD) |
|---|---|---|---|
| Height (cm) | 65 | 95 | 122 |
| Height (ft) | 2.13 | 3.11 | 4.00 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lee, M.; Kim, J.; Jeong, H.; Pham, A.; Lee, C.; Lee, P.; Soe, T.; Kim, S.-W.; Eune, J. Communication with Self-Growing Character to Develop Physically Growing Robot Toy Agent. Appl. Sci. 2020, 10, 923. https://doi.org/10.3390/app10030923