Safety Lighting Sensor Robots Communicate in the Middle of the Highway/Roads †

Abstract

Featured Application

1. Introduction

2. State of the Art: Data Science in the Road

2.1. A Sensor Communication System for Users near the Point of Issue

2.2. Information App Service for Users far away from the Point of Issue

2.2.1. Probe Data Analytics and Exploring and Visualizing Crashes

2.2.2. The New Jersey Department of Transportation (NJDOT)

3. Process and Method

3.1. Causal Inference with Graphical Model

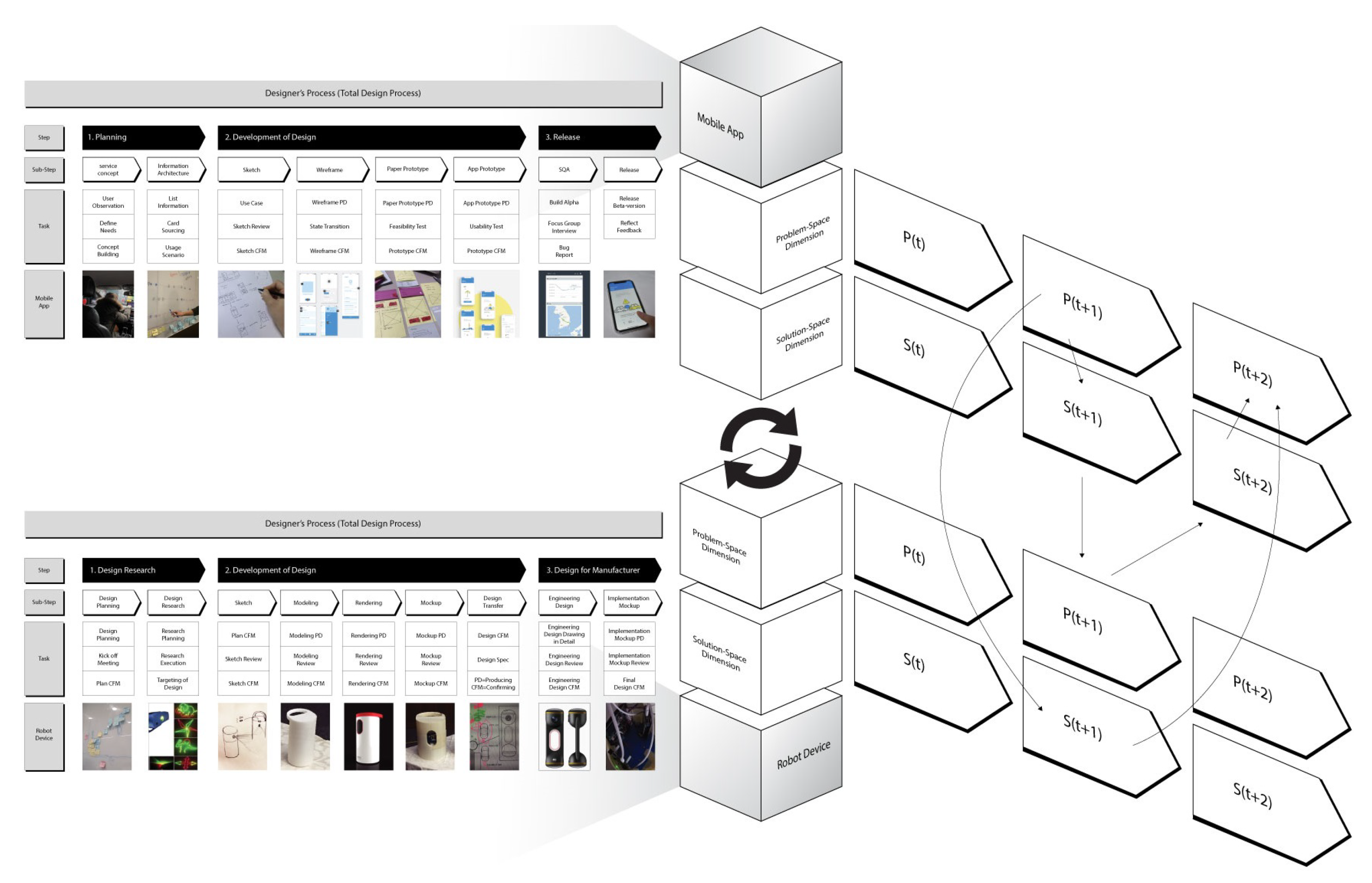

3.2. Total Process: Design Thinking Methodology from Design Research to Development and Implementation

3.3. Method: Design Thinking and Co-Evolution for Problem-Solving

3.3.1. Discover: User Research on a Vehicle Guide System on the Road

3.3.2. Define: Affinity Map and Persona

3.3.3. Develop: Design Draft as a Solution and Progress of the User Findings to Needs

3.3.4. Deliver: Design Implementation

4. Results and Discussion

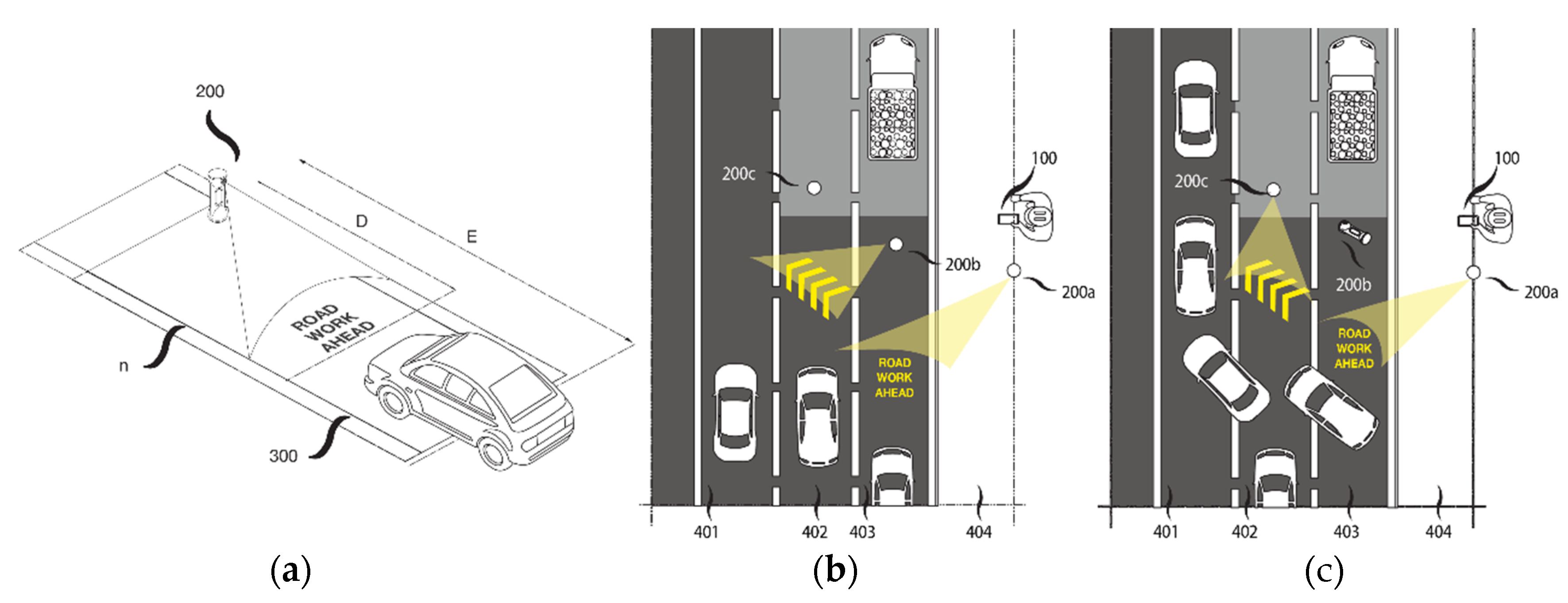

4.1. Sensor Robot Design as Access Point

4.1.1. Recognizing Unit: For Sensing Vehicles

4.1.2. Image Projector (Safety Lighting) for Alerting Signals to Vehicles

4.1.3. Communication Unit for Sharing Information

4.1.4. Message Library: Image Manager Stores at Least One Image

4.1.5. Location Information Unit Determined by Global Positioning System (GPS)

4.1.6. Battery Level Unit: Rechargeable and Replaceable Battery

4.1.7. State Checker Unit: Status Information to Be Written in Distributed Databases

4.1.8. Light-Emitting Unit

4.1.9. Vehicle Guidance Method

- Project an image onto the road surface R along which the vehicle is approaching when the distance D to the vehicle is within a preset range E. For example, if E is set to 200 m, the robot registers an approaching vehicle once D falls within 200 m and then projects the image onto R.

- Control the projection angle so that the image lands on R within the distance D from the vehicle, and project the image at that angle.

- Generate access information when the vehicle approaches the robot device.

- Transmit the generated access information to at least one other robot device.
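The four steps above can be sketched in a few lines of Python. This is only an illustrative sketch, not the patented implementation: the 200 m value of E comes from the example in the text, while the mounting height, the `lead_fraction` parameter, the flat-road tilt geometry, the `AccessInfo` fields, and the list-based `transmit` stand-in for the communication unit are all assumptions introduced here.

```python
import math
from dataclasses import dataclass
from typing import List, Optional

PRESET_RANGE_E_M = 200.0  # E: example value from the text


@dataclass
class AccessInfo:
    """Hypothetical record generated when a vehicle approaches a robot."""
    robot_id: str
    distance_m: float
    projection_angle_deg: float


def projection_angle_deg(mount_height_m: float, target_distance_m: float) -> float:
    """Downward tilt from horizontal so the beam centre lands
    target_distance_m ahead on a flat road (assumed geometry)."""
    return math.degrees(math.atan2(mount_height_m, target_distance_m))


def guide_vehicle(robot_id: str,
                  distance_d_m: float,
                  mount_height_m: float = 1.0,   # assumed projector height
                  lead_fraction: float = 0.5     # assumed: project halfway to the vehicle
                  ) -> Optional[AccessInfo]:
    """Steps 1-3: check D against E, pick a projection angle, build access info."""
    if distance_d_m <= 0 or distance_d_m > PRESET_RANGE_E_M:
        return None  # vehicle not within the preset range; nothing is projected
    target_m = distance_d_m * lead_fraction  # a point on R within D of the vehicle
    angle = projection_angle_deg(mount_height_m, target_m)
    return AccessInfo(robot_id, distance_d_m, angle)


def transmit(info: AccessInfo, peer_inboxes: List[list]) -> None:
    """Step 4: stand-in for the communication unit; each peer robot is
    modelled as a plain list acting as its inbox."""
    for inbox in peer_inboxes:
        inbox.append(info)
```

A robot would call `guide_vehicle` each time its recognizing unit reports a new distance and pass any non-`None` result to `transmit`; a real device would replace the inbox lists with its actual network link.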

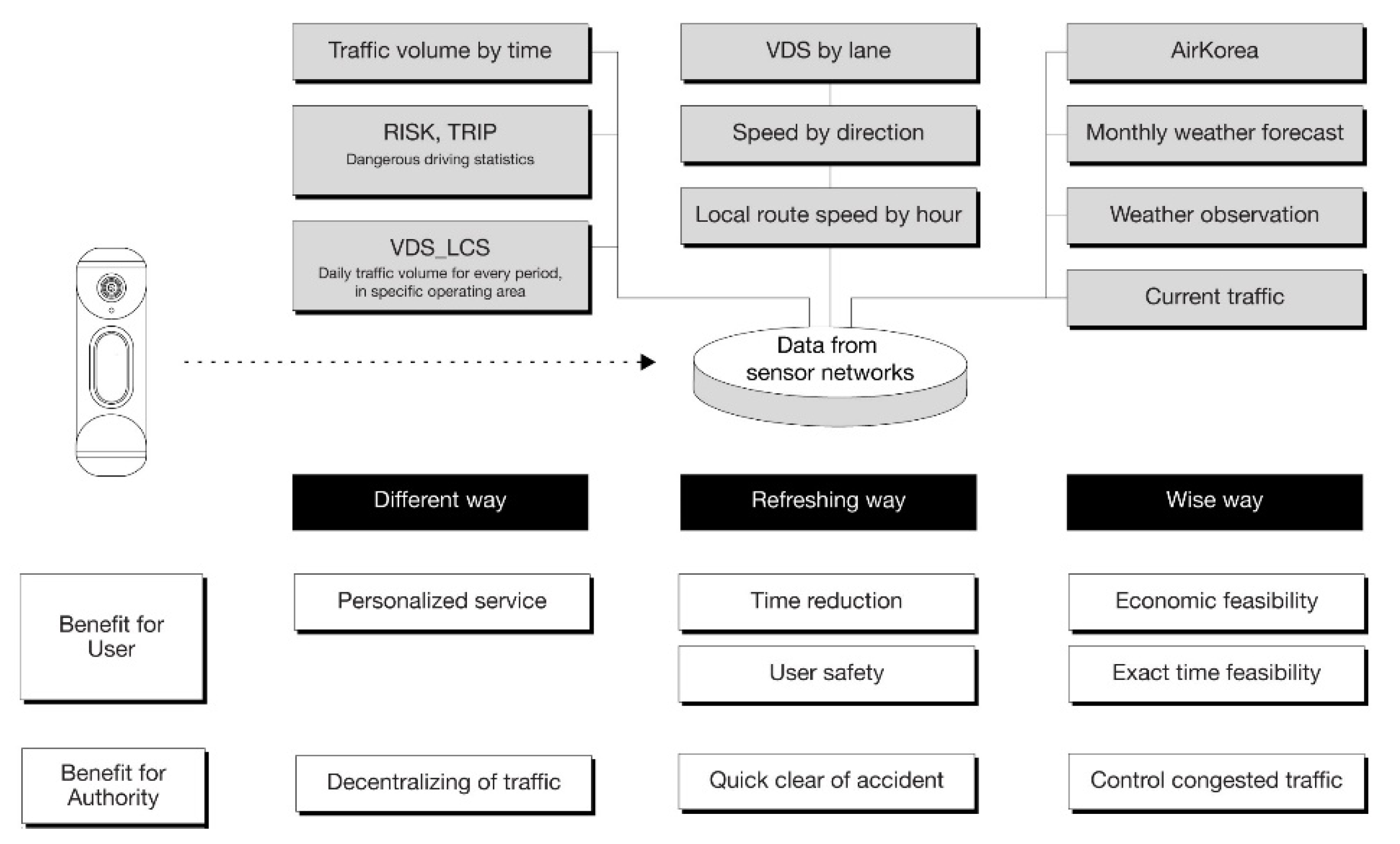

4.2. Mobile App Development

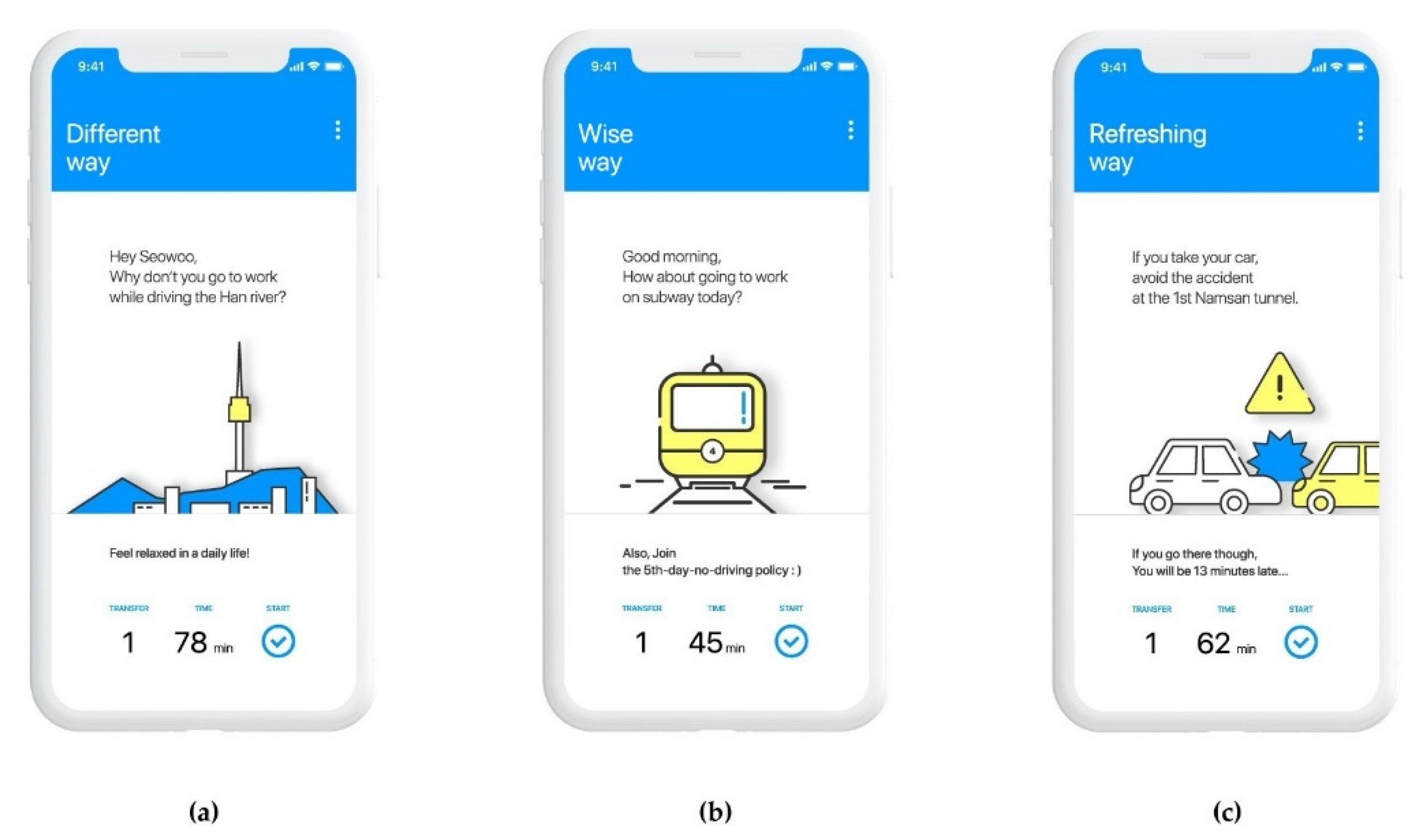

- “Different way to work”

- “Wise way to work”

- “Refreshing way to work”

4.2.1. Case 1: Different Way to Work

- Personalized service for the rush-hour commute to work

- Dispersal of congested traffic

- Traffic measurement and notification by time

4.2.2. Case 2: Wise Way to Work

- Current traffic

- Monthly weather forecast

- Weather observations of a specific area around the road, from Korean government open APIs

- Dust (PM10, PM2.5) from “Air Korea” [28]

4.2.3. Case 3: Refreshing Way to Work

- VDS by lane, speed by direction

- Local route speed by hour

4.3. Summary of Architecture: Sensor Robot to Mobile Application

4.4. Discussion

4.4.1. Quantitative Approach: Causal Inference

4.4.2. Qualitative Approach: Design Thinking Methodology

4.4.3. Information Flow: Gathered from Sensor Robot and Provided to Mobile App for the User

- Causal inference to data from sensor robot

- Sensor robot data to cloud network DB

- DB to information architecture (IA)

- IA to layout

- Layout to display

- Display to state transition in the user's mobile app
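The flow listed above can be pictured as a chain of small transformations, each feeding the next. The sketch below is a toy model under stated assumptions: every function name, field name, and the fixed "instrument" label are hypothetical placeholders chosen for illustration, not the system's actual schema or inference procedure.

```python
from typing import Any, Callable, List, Tuple


def tag_causal_role(reading: dict) -> dict:
    # Placeholder for the causal-inference step: the role is hard-coded here,
    # whereas a real system would derive it from its causal model.
    return {**reading, "role": "instrument"}


def store_in_db(reading: dict) -> dict:
    # Placeholder for writing the reading to the cloud network DB.
    return {"table": "sensor_events", "row": reading}


def build_ia(record: dict) -> dict:
    # DB record to an information architecture (IA) node.
    return {"section": "Current traffic", "items": [record["row"]]}


def build_layout(ia: dict) -> dict:
    # IA node to a screen layout description.
    return {"title": ia["section"], "cards": len(ia["items"])}


def render_display(layout: dict) -> str:
    # Layout to the text shown on screen.
    return f"{layout['title']}: {layout['cards']} alert(s)"


def transition_state(display: str) -> str:
    # Display to the app's next state.
    return "alert_shown" if display else "idle"


STAGES: List[Tuple[str, Callable[[Any], Any]]] = [
    ("causal inference", tag_causal_role),
    ("cloud DB", store_in_db),
    ("information architecture", build_ia),
    ("layout", build_layout),
    ("display", render_display),
    ("state transition", transition_state),
]


def run_flow(reading: dict) -> Tuple[Any, List[str]]:
    """Pass one sensor reading through every stage, recording the path taken."""
    value: Any = reading
    trace: List[str] = []
    for name, stage in STAGES:
        value = stage(value)
        trace.append(name)
    return value, trace
```

Keeping the stages in an ordered list makes the hand-offs explicit and lets any single stage be swapped out without touching the others.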

5. Conclusions

6. Patents

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| D | Distance (between the sensor robot and approaching vehicle) |
| H | Hole (in the center of the sensor robot) |
| L | Light-emitting device |
| P | Projection module |
| R | Road surface |
| S | Sensor module (recognition/recognizing unit = identification unit = detection unit = the detector) |
| T | Top (the upper end) |
| M | Middle (the body) |
| B | Bottom (the lower end) |
| app | application |
| API | Application Program Interface = Application Programming Interface |
| VGN | vehicle guidance network |
| IA | information architecture |
| IDI | in-depth interview |
| IoT | Internet of Things / Intelligence of Things |
| LoRa | Long Range, a low-power wide-area network |
| RITIS | Regional Integrated Transportation Information System |
| ATMS | Advanced Traffic Management System |
| NJDOT | New Jersey Department of Transportation |
| OBD | On Board Diagnostics = OBU (unit) |
| INS | insurance (mainly car insurance company) |
| OBS | Observation |
| PM | particulate matter |
| LCS | Lane Control System |
| VDS | Vehicle Detection System |
| WPC | Web Parts Catalog (to figure out which parts are out of order) |

References

- Prasad, M.J.; Arundathi, S.; Anil, N.; Kariyappa, B.S. Automobile black box system for accident analysis. In Proceedings of the 2014 International Conference on Advances in Electronics Computers and Communications, Bangalore, India, 21 June 2014; pp. 1–5.

- Jang, J.; Kim, H. Advisory safety speed model using real-time vehicular data at SMART HIGHWAY. J. Korean Soc. Civ. Eng. 2010, 30, 443–451.

- Hong, I.K.; Jeon, H.; Eune, J.; Lee, M. Prevention through Design-Based on Safety Lighting for Perception on Highway Repairing Area Scenario. In Proceedings of the KSDS 2016, Seoul, Korea, 21 June 2016; pp. 110–111.

- Redmond, L.S.; Mokhtarian, P.L. The positive utility of the commute: Modeling ideal commute time and relative desired commute amount. Transportation 2001, 28, 179–205.

- Chen, S.Y.; Zhang, Y.; Zhang, Y.H.; Yu, J.J.; Zhu, Y.X. Embedded system for road damage detection by deep convolutional neural network. Math. Biosci. Eng. 2019, 16, 7982–7994.

- Jung, E.; Kim, J.; Jeong, S.; Soe, S.; Lee, M. Guidance Application for Drivers’ Safety at Moving to Work. In Proceedings of the HCI Korea 2020, Hongcheon, Korea, 11–14 February 2020; pp. 609–613.

- 2019 MOLIT Avengers. Available online: https://github.com/kjm0623v/2019_molit_Avengers (accessed on 20 February 2020).

- Ulleberg, P.; Rundmo, T. Personality, attitudes and risk perception as predictors of risky driving behavior among young drivers. Saf. Sci. 2003, 41, 427–443.

- Davis, G.A. Possible aggregation biases in road safety research and a mechanism approach to accident modeling. Accid. Anal. Prev. 2004, 36, 1119–1127.

- Rahman, M.M.; Strawderman, L.; Lesch, M.F.; Horrey, W.J.; Babski-Reeves, K.; Garrison, T. Modelling driver acceptance of driver support systems. Accid. Anal. Prev. 2018, 121, 134–147.

- Karwa, V.; Slavković, A.B.; Donnell, E.T. Causal inference in transportation safety studies: Comparison of potential outcomes and causal diagrams. AAS 2011, 10, 1428–1455.

- García-Castellano, M.; González-Romo, J.M.; Gómez-Galán, J.A.; García-Martín, J.P.; Torralba, A.; Pérez-Mira, V. ITERL: A Wireless Adaptive System for Efficient Road Lighting. Sensors 2019, 19, 5101.

- Wundersitz, L. Driver distraction and inattention in fatal and injury crashes: Findings from in-depth road crash data. Traffic Inj. Prev. 2019, 20, 696–701.

- Lu, N.; Ma, Y.; Liu, Y. Evaluating Probabilistic Traffic Load Effects on Large Bridges Using Long-Term Traffic Monitoring Data. Sensors 2019, 19, 5056.

- Arokiasami, W.A.; Vadakkepat, P.; Tan, K.C.; Srinivasan, D. Real-Time Path-Generation and Path-Following Using an Interoperable Multi-Agent Framework. Unmanned Syst. 2018, 6, 231–250.

- Leven, P.; Hutchinson, S. A framework for real-time path planning in changing environments. IJRR 2002, 21, 999–1030.

- Pattara-Aticom, W.; Pongraibool, P.; Thajchayapong, S. Estimating road traffic congestion using vehicle velocity. In Proceedings of the 6th International Conference on ITS Telecommunications, Chengdu, China, 21–23 June 2006; pp. 1001–1004.

- Shin, S.I.; Park, J.J.; Lee, J.C.; Ha, T.J. Development of user customized path finding algorithm for public transportation information. J. Korean Soc. Civ. Eng. 2008, 28, 317–323.

- Regional Integrated Transportation Information System. Available online: https://ritis.org/intro/ (accessed on 16 February 2020).

- CATT Lab. Available online: http://www.catt.umd.edu/news/news_story.php?id=4673 (accessed on 22 February 2020).

- Bauer, J.; Margiotta, R.A.; Pack, M.L. Applying Archived Operations Data in Transportation Planning–A Primer (No. FHWA-HOP-16-082); Federal Highway Administration: Washington, DC, USA, 2016.

- Boukerche, A.; Oliveira, H.A.; Nakamura, E.F.; Loureiro, A.A. Vehicular Ad Hoc Networks: A New Challenge for Localization-Based Systems. Comput. Commun. 2008, 31, 2838–2849.

- Bareinboim, E.; Pearl, J. Causal inference and the data-fusion problem. In Proceedings of the National Academy of Sciences, Washington, DC, USA, 26–27 March 2015; pp. 7345–7352.

- Christiaans, H.H.C.M.; Dorst, K.; Roozenburg, N. An empirical study into design thinking. Research in Design Thinking; Delft University Press: Delft, The Netherlands, 1992; pp. 119–125. ISBN 978-9-06275-796-1.

- Dorst, K.; Cross, N. Creativity in the Design Process: Co-Evolution of Problem-Solution. Des. Stud. 2001, 22, 425–437.

- Peña-García, A.; Nguyen, T. A Global Perspective for Sustainable Highway Tunnel Lighting Regulations: Greater Road Safety with a Lower Environmental Impact. Int. J. Environ. Res. Public Health 2018, 15, 2658.

- Zaldivar, J.; Calafate, C.T.; Cano, J.C.; Manzoni, P. Providing accident detection in vehicular networks through OBD-II devices and Android-based smartphones. In Proceedings of the 2011 IEEE 36th Conference on Local Computer Networks, Bonn, Germany, 4–7 October 2011; pp. 813–819.

- Air Korea. Available online: https://www.airkorea.or.kr/eng (accessed on 20 March 2020).

- Jeong, S. Today’s Commute. Available online: https://seowoojeong.com/todays-commute (accessed on 20 March 2020).

- Hong, S.Y.; Eune, J.; Lee, M.; Cho, M.G.; Jin, T.; Hong, I.K.; Jeon, H.; Shin, S.; Jung, E. Safety Device for Guiding Vehicle to Detour Route, Vehicle Guidance Method Using Same, and Vehicle Guidance System Using Same. International Patent No. WO2017065329A1, 20 April 2017. Available online: https://patents.google.com/patent/WO2017065329A1/en/ (accessed on 20 February 2020).

| | Goal | Observation Details |
|---|---|---|
| Observation | To identify inconvenience caused by ignorance of road conditions in the morning rush hour and to find ways to improve road | Observe the behavioral flow of decision-making in the participant’s morning schedule |
| Interview | Contextually to identify hidden inconveniences that participants experience in the morning rush hour | Ask participants about the reasons that influence driving decisions during the morning commute |

| Car Accident Area (Near) | Prompt Report | Anxious Users | Collect for Accident Facts | When Moving to a Safe Place |
|---|---|---|---|---|
| Distant From the Road (of Issue) | Prior information registration required | Need service proposal | Accurate status record | Choice based on user simulation |

| Index (Type) | Traffic Volume | RISK | TRIP | VDS_LCS |
|---|---|---|---|---|
| Data Type | Traffic volume | Need service | Accurate status | Choice based on |
| Causal Inference | Instrument | Instrument | Instrument | Confounder |

| Index (Type) | Current Traffic | Monthly W.Forecast | W.OBS.Area | (Dust) PM10 | (Dust) PM2.5 |
|---|---|---|---|---|---|
| Data Type | Traffic volume | Weather data | Place data | Weather data | Weather data |
| Causal Inference | Instrument | Confounder | Confounder | Confounder | Confounder |

| Index (Type) | VDS by Lane | Speed by Direction | Local Route Speed by Hour |
|---|---|---|---|
| Data Type | Travel time | Speed data | Speed data |
| Causal Inference | Confounder | Instrument | Risk factor |
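The three index tables above assign each app data index a causal-inference role (instrument, confounder, or risk factor). As a rough illustration, those assignments can be held in a lookup table and queried by role. The dictionary below copies the role labels from the tables; the variable and function names, and the grouping of the tables under the three app cases, are assumptions made for this sketch.

```python
from typing import Dict, List

# Role labels copied from the three index tables; the case grouping is assumed.
CAUSAL_ROLES: Dict[str, Dict[str, str]] = {
    "Case 1": {
        "Traffic Volume": "Instrument",
        "RISK": "Instrument",
        "TRIP": "Instrument",
        "VDS_LCS": "Confounder",
    },
    "Case 2": {
        "Current Traffic": "Instrument",
        "Monthly W.Forecast": "Confounder",
        "W.OBS.Area": "Confounder",
        "(Dust) PM10": "Confounder",
        "(Dust) PM2.5": "Confounder",
    },
    "Case 3": {
        "VDS by Lane": "Confounder",
        "Speed by Direction": "Instrument",
        "Local Route Speed by Hour": "Risk factor",
    },
}


def indices_with_role(role: str) -> List[str]:
    """Return every index, across all cases, that carries the given role."""
    return [
        index
        for case in CAUSAL_ROLES.values()
        for index, assigned in case.items()
        if assigned == role
    ]
```

For example, `indices_with_role("Confounder")` lists the indices that an analysis would need to adjust for under this labelling.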

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lee, M.; Won, J.; Kim, J.; Jeon, H.; Hong, I.; Jung, E.; Jin, T.; Jeong, S.; Ga, S.-H.; Kim, C.-J.; et al. Safety Lighting Sensor Robots Communicate in the Middle of the Highway/Roads. Appl. Sci. 2020, 10, 2353. https://doi.org/10.3390/app10072353
