In this section, we propose a deep recurrent network to process the piezoelectric sensor data. To design a network that works well with the input sensor data, we need to understand the characteristics of the sensor data. In addition, to train the network to perform well, the input data and the ground-truth data must be well defined. Therefore, we first explain our motion recognition method and discuss the characterization of the piezoelectric sensor. We then discuss how to obtain the sensor data for training. Finally, we introduce the structure of the proposed network using two different recurrent units, RNN and LSTM, and compare the performance of the two units.

3.1. Conventional Angle Recognition Method

In our previous study, we used the following relationship between the curvature and the voltage of the piezoelectric sensor [22]:

where $q$ is the charge stored in the piezoelectric sensor, $C$ is the capacitance, $v$ is the piezoelectric voltage, and the remaining quantities are the electromechanical coupling coefficient, the mean curvature, and the load resistance.

Applying the Laplace transform, Equations (2) and (3) can be written in the $s$ domain as:

Inserting Equation (4) into Equation (5) by substitution, we have:

Reorganizing Equation (6) as the relation between the voltage and the mean curvature, we have:

When the piezoelectric sensor has low capacitance and load resistance values, Equation (7) reduces to:

Equation (8) can be expressed in the time domain by applying the inverse Laplace transform:

In the beam structure, the mean curvature is related to the change in slope between the start and end of the beam, and the slope can be expressed as the angle of the sensor [22]. From these relations, we derive the following equation relating the angular change of the sensor to the piezoelectric voltage [18]:

where $A$ is the gain including all proportional terms, $O$ is the offset, and the remaining terms are the angle variation of the piezoelectric sensor and the time interval.
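As a minimal sketch of how the angle relation behaves, the following Python snippet accumulates the gain times the offset-corrected voltage over each time interval. The discrete summation form, the function name `integrate_angle`, and all numeric values are assumptions made for illustration and are not taken from [18].

```python
def integrate_angle(voltages, gain, offset, dt):
    """Accumulate gain * (v - offset) * dt and return the running angle change."""
    angle = 0.0
    history = []
    for v in voltages:
        angle += gain * (v - offset) * dt
        history.append(angle)
    return history


# Hypothetical resting sensor that outputs a constant 0.10 V for 1000 samples.
resting = [0.10] * 1000

# With the correct offset, the integrated angle stays at zero.
correct = integrate_angle(resting, gain=50.0, offset=0.10, dt=0.001)

# With an offset that is wrong by 0.01 V, the error grows linearly with time.
biased = integrate_angle(resting, gain=50.0, offset=0.09, dt=0.001)

print(correct[-1], biased[-1])
```

Under these assumed values, the biased run drifts by roughly 0.5 angle units after one second even though the sensor never moved, which is why the choice of offset matters for any integration-based angle estimate.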

3.2. Estimation of Moving State and Offset Voltage

Evaluating Equation (9) at the initial time shows that the offset is determined by the initial value of the mean curvature. This means that, if the initial mean curvature is nonzero, there is a voltage offset. Ideally, the mean curvature is zero at the original position. However, it is difficult to achieve zero mean curvature in practice. In addition, because a finger moves continuously in a real-time experiment, the initial mean curvature can vary slightly each time the finger returns to the original position. The difference between the original position and the varied position also affects the offset value.

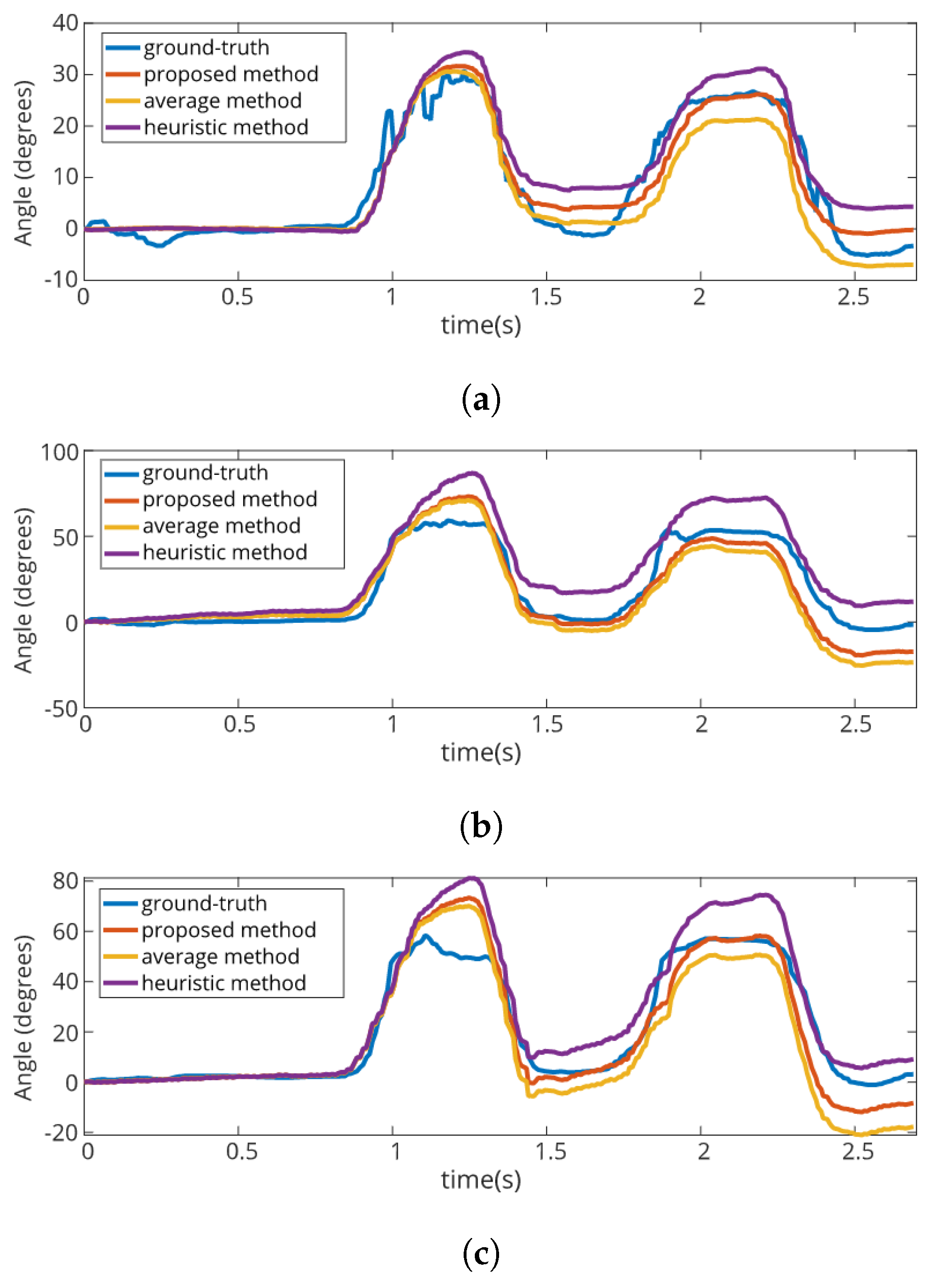

As shown in Figure 4, calculating joint angle values with an improper offset produces incorrect results. If the estimated offset is the same as the real offset, as shown in Figure 4a, the joint angle is calculated normally. However, if there is a difference between the estimated offset and the real offset of the raw data, as shown in Figure 4b,c, the error is integrated over time. Therefore, selecting an appropriate offset voltage is an important issue. In [18], we defined the offset value $O$ as the average of 3000 data points, such that:

Using the average value is a reasonable choice for following the tendency of the signal. However, the average can oscillate when samples with large differences are included, and it responds slowly to a slight change in the offset. To solve this problem, in [19] we updated $O$ using only the voltages measured during non-movement. Assuming that only a curvature change of the sensor causes a significant change in the piezoelectric voltage, the heuristic moving state is estimated as follows:

where the threshold voltage is used to decide the moving state. We consider the finger as moving when the state is 1 and stopped when it is 0. This method tracks offset changes caused by variation of the initial mean curvature faster than the previous one. However, the heuristic moving state estimation of Equation (12) faces the following problems in a real-time system:

If noise is added to $v$, it is hard to distinguish the noise from a curvature change.

The input noise can take random values when the system is operated in a different environment.

When the noise is random, the threshold voltage is hard to determine.

For these reasons, it is difficult to estimate the moving state in a noisy environment. Therefore, we propose a network that learns the moving state using recurrent neural networks as an alternative.
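The heuristic described in this subsection can be sketched in Python as follows. This is only an illustration under stated assumptions: the rule "moving when the voltage deviates from the offset by more than the threshold" is one plausible reading of the heuristic, the window of 3000 samples is borrowed from the averaging in [18], and the names `heuristic_moving_state` and `OffsetTracker` are hypothetical.

```python
from collections import deque


def heuristic_moving_state(voltage, offset, v_th):
    """Return 1 (moving) if |voltage - offset| exceeds v_th, else 0 (stopped).

    Assumed form of the threshold rule, not the original equation.
    """
    return 1 if abs(voltage - offset) > v_th else 0


class OffsetTracker:
    """Update the offset only from samples judged to be non-movement."""

    def __init__(self, initial_offset, window=3000):
        self.offset = initial_offset
        self.samples = deque(maxlen=window)  # most recent resting voltages

    def update(self, voltage, v_th):
        state = heuristic_moving_state(voltage, self.offset, v_th)
        if state == 0:
            # Resting sample: fold it into the running average.
            self.samples.append(voltage)
            self.offset = sum(self.samples) / len(self.samples)
        return state


tracker = OffsetTracker(initial_offset=0.0, window=4)
states = [tracker.update(v, v_th=0.05) for v in (0.02, 0.02, 0.5)]
print(states, tracker.offset)
```

The sketch also makes the weakness visible: any noise sample larger than the threshold is labelled as movement, and any fixed threshold is only valid for the noise level it was tuned on.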

3.3. Data Acquisition

For network training, we need a dataset comprising the input data and the ground-truth target data to be predicted. In this study, we extracted the ground-truth moving state from the finger joint angle measured by a Leap Motion controller. Unlike other vision sensors, the Leap Motion controller provides the finger joint angle directly, without additional processing. Although the angle measured by the Leap Motion controller differs from the real joint angle, as compared in Chophuk et al. [23], we chose it as the ground-truth sensor because of this characteristic and because it facilitates the generation of a large dataset.

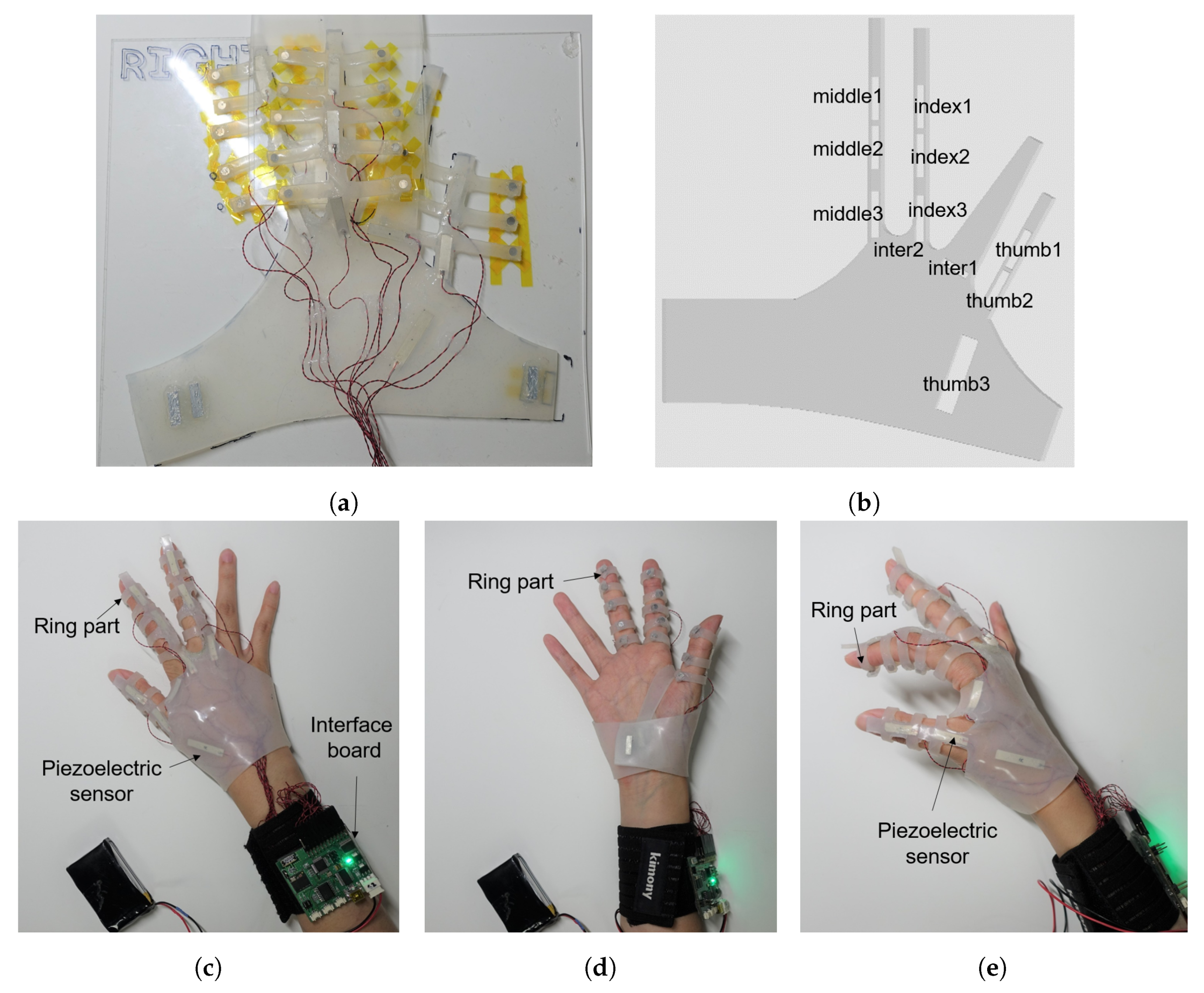

To obtain a dataset for learning, we conducted an experiment using the interface mentioned in

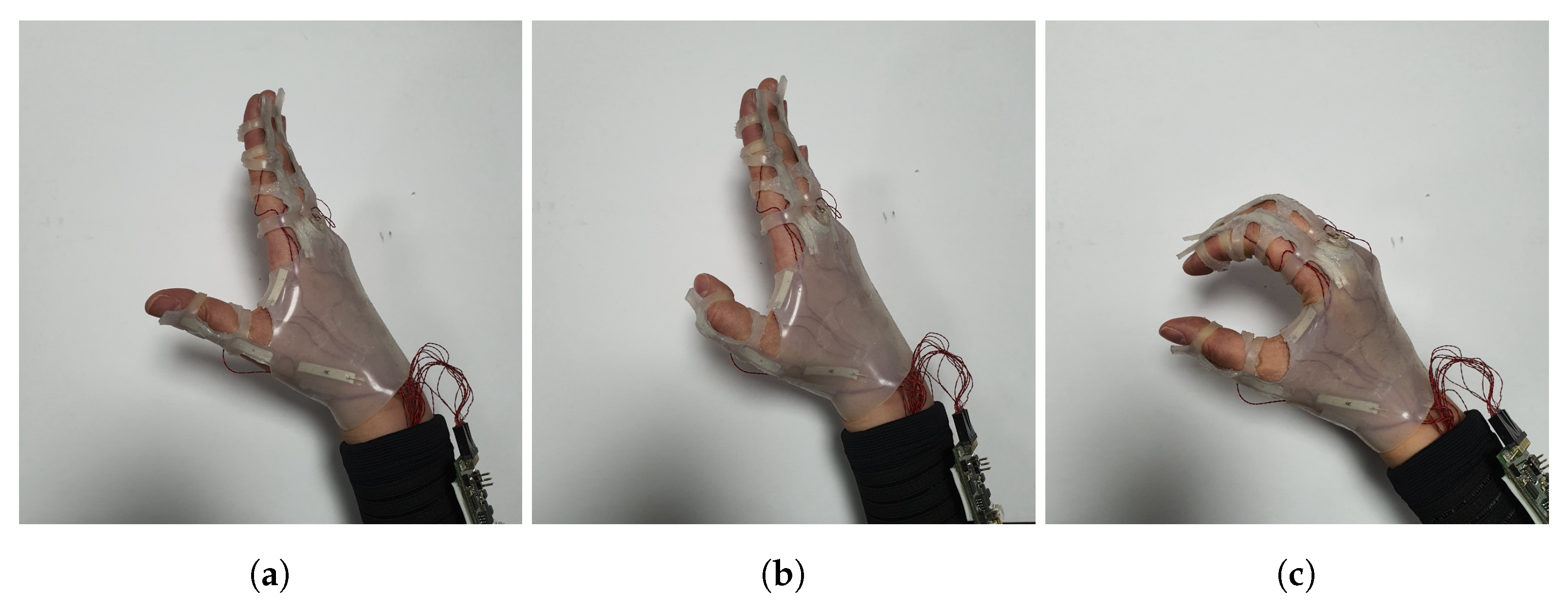

Section 2. For data acquisition, we set up the experiment as follows:

We used the soft sensor located on the glove, and its piezoelectric voltage was measured with the interface board.

The joint angle used to derive the moving state was measured simultaneously with the Leap Motion controller.

A single movement consisted of flexion and extension.

The range of the flexion angle was chosen randomly between 30 degrees and 90 degrees.

Movements for the training set were performed 1080 times, and those for the test set were performed 270 times.

The decision of the moving state is given by:

where the comparison uses a threshold angle variation. We assumed that the finger is moving when the difference between the average of the 10 most recent values and the new value is larger than this threshold. We set the threshold to 2 degrees based on preliminary tests.
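The labelling rule above can be sketched as follows. The 10-sample history and the 2-degree threshold come from the text; the function name `label_moving_state`, the use of an absolute difference, and the choice to label the first samples (before a full history exists) as stopped are assumptions for illustration.

```python
from collections import deque


def label_moving_state(angles, threshold_deg=2.0, history=10):
    """Label each angle sample as 1 (moving) or 0 (stopped)."""
    recent = deque(maxlen=history)  # the most recent joint angles
    states = []
    for angle in angles:
        if len(recent) < history:
            # Assumption: without a full history, treat the finger as stopped.
            states.append(0)
        else:
            mean_recent = sum(recent) / len(recent)
            states.append(1 if abs(angle - mean_recent) > threshold_deg else 0)
        recent.append(angle)
    return states


# Hypothetical trace: finger at rest, then a 5-degree step that is held.
trace = [0.0] * 10 + [5.0] * 7
labels = label_moving_state(trace)
print(labels)
```

In this hypothetical trace, the label switches to moving at the step and returns to stopped once the 10-sample average has caught up with the new angle.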

Figure 5 displays a sample of the dataset acquired from the experiment. The network was trained using the input data in Figure 5a to estimate the moving state in Figure 5c.

Since our goal was to extract the real-time moving state from the piezoelectric sensor outputs, we preprocessed the piezoelectric voltage into sequential input data using the sliding window method. In addition, we defined the target data of the network as the ground-truth moving state. Summaries of the processed training and test sets are shown in Table 1.
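A minimal sketch of the sliding window preprocessing is given below. The window length of 10 matches the 10 time steps per training sample mentioned in this section; pairing each window with the moving state at its last sample, and the name `sliding_windows`, are assumptions for illustration.

```python
def sliding_windows(voltages, states, window=10):
    """Build (input window, target state) pairs from aligned sequences."""
    inputs, targets = [], []
    for end in range(window, len(voltages) + 1):
        inputs.append(voltages[end - window:end])  # last `window` voltages
        targets.append(states[end - 1])            # state at the newest sample
    return inputs, targets


# Hypothetical data: 12 voltage samples and their moving states.
example_voltages = [float(i) for i in range(12)]
example_states = [0] * 11 + [1]
inputs, targets = sliding_windows(example_voltages, example_states)
print(len(inputs), targets)
```

Each new voltage sample therefore yields one new input window, which is what allows the estimate to be produced in real time.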

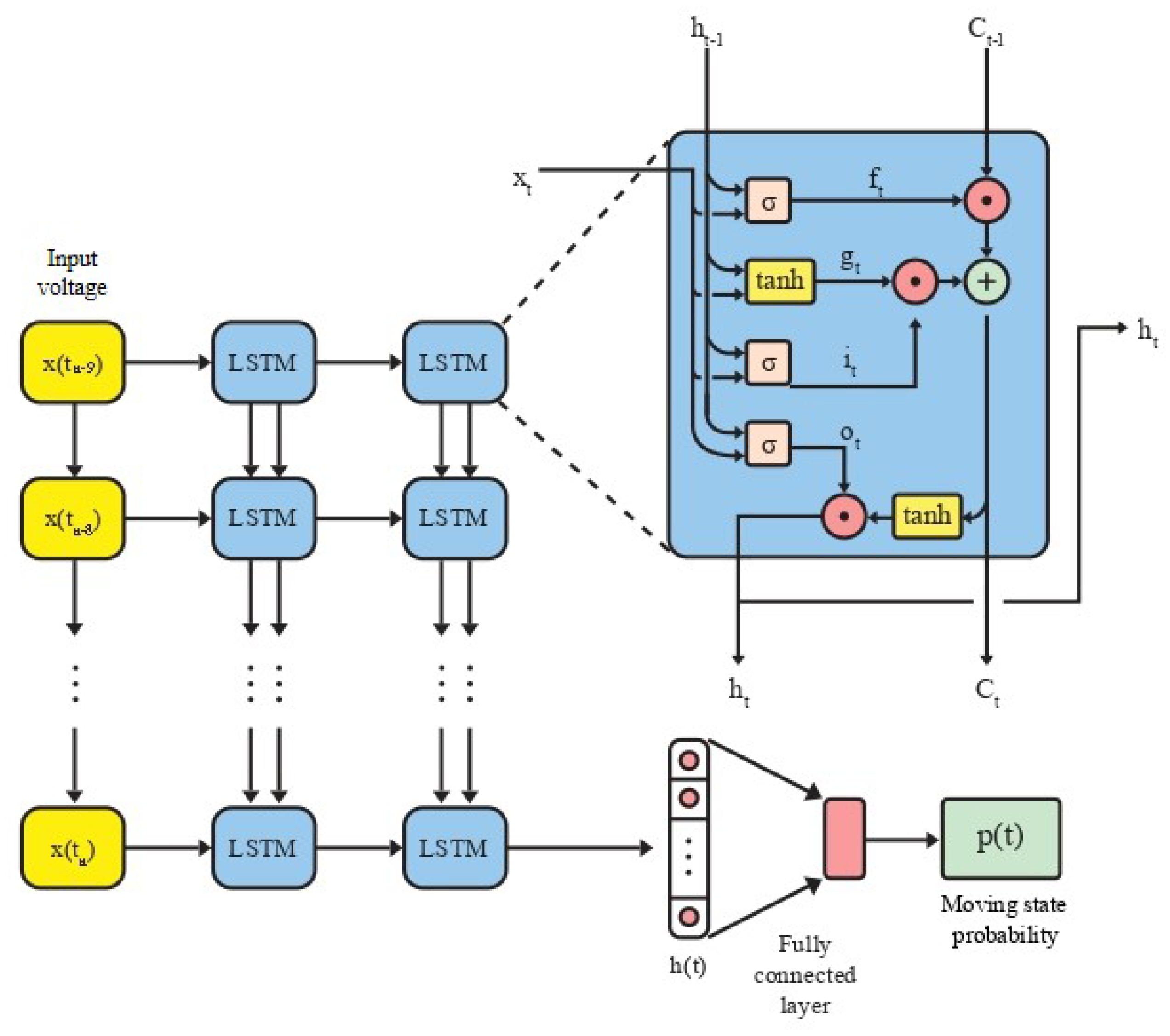

3.4. Proposed Network

To learn the moving state of the sensor, we used a recurrent neural network (RNN), which is appropriate for training on a sequential dataset. Using the input vector $x_t$ and the previous hidden state $h_{t-1}$, a simple RNN determines the hidden state $h_t$ as follows:

where the weight matrices and the bias $b$ are the network parameters.
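For illustration, a single step of a simple RNN can be written in plain Python as below. The tanh nonlinearity, the names `W` (input weights) and `U` (recurrent weights), and the zero initial hidden state are the usual textbook choices and are assumptions here, not details confirmed by the text.

```python
import math


def rnn_step(x_t, h_prev, W, U, b):
    """Compute h_t = tanh(W x_t + U h_prev + b) using nested lists."""
    h_t = []
    for i in range(len(b)):
        pre = b[i]
        pre += sum(W[i][j] * x_t[j] for j in range(len(x_t)))
        pre += sum(U[i][k] * h_prev[k] for k in range(len(h_prev)))
        h_t.append(math.tanh(pre))
    return h_t


def run_rnn(sequence, W, U, b):
    """Feed a sequence through the recurrence and return the final hidden state."""
    h = [0.0] * len(b)
    for x_t in sequence:
        h = rnn_step(x_t, h, W, U, b)
    return h


# Hypothetical one-unit network applied to a two-step sequence.
final_h = run_rnn([[0.5], [0.1]], W=[[1.0]], U=[[0.5]], b=[0.0])
print(final_h)
```

Because each hidden state depends on the previous one, the final state summarizes the whole window rather than only the latest sample.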

Because the hidden state $h_t$ is determined from the previous hidden state $h_{t-1}$, a simple RNN can retain sequential information through the recurrent hidden state. However, as the sequence becomes longer, the gradient can vanish or explode. To solve this problem and carry the hidden-layer information further, Long Short-Term Memory (LSTM) was proposed in [24]. Using a cell vector $c_t$ and several gates, the hidden state $h_t$ is calculated as follows:

where $i_t$, $f_t$, and $o_t$ are the input gate, forget gate, and output gate vectors, respectively; the cell input and the cell output each use an activation function; $W$ and $b$ denote the weight matrices and bias vectors of the network; ⊙ is the element-wise product of the vectors; and tanh is the output activation function.
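The gated update can be sketched in plain Python as follows. This uses the common LSTM formulation without peephole connections, which may differ in detail from the variant in [24]; the parameter layout (a dictionary of `(W, U, b)` triples keyed by `"i"`, `"f"`, `"o"`, `"g"`) and the helper names are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, U, b, x, h):
    """Return W x + U h + b as a list."""
    return [
        b[i]
        + sum(W[i][j] * x[j] for j in range(len(x)))
        + sum(U[i][k] * h[k] for k in range(len(h)))
        for i in range(len(b))
    ]


def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM step; returns the new hidden state and cell state."""
    i_t = [sigmoid(z) for z in affine(*params["i"], x_t, h_prev)]    # input gate
    f_t = [sigmoid(z) for z in affine(*params["f"], x_t, h_prev)]    # forget gate
    o_t = [sigmoid(z) for z in affine(*params["o"], x_t, h_prev)]    # output gate
    g_t = [math.tanh(z) for z in affine(*params["g"], x_t, h_prev)]  # cell input
    # Element-wise products: keep part of the old cell, add gated new input.
    c_t = [f * c + i * g for f, c, i, g in zip(f_t, c_prev, i_t, g_t)]
    h_t = [o * math.tanh(c) for o, c in zip(o_t, c_t)]
    return h_t, c_t


# Hypothetical one-unit LSTM with all-zero parameters.
zero = ([[0.0]], [[0.0]], [0.0])
zero_params = {"i": zero, "f": zero, "o": zero, "g": zero}
h_out, c_out = lstm_step([1.0], [0.0], [1.0], zero_params)
print(h_out, c_out)
```

With all-zero parameters every gate equals 0.5, so the cell state is simply halved. In a trained network the forget gate can stay close to 1, which lets the cell state carry information across many steps.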

The LSTM unit can retain more sequential information than the simple RNN unit. With the same input and hidden sizes, an LSTM layer has four times as many parameters as a simple RNN layer. We applied both recurrent units in the proposed network and compared their performance.
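The factor of four in network size follows from counting parameters: a simple RNN layer has one set of input weights, recurrent weights, and biases, whereas a standard LSTM layer has one such set for each of the three gates and the cell input. The snippet below counts them for a hypothetical layer; the sizes used are placeholders, not the values used in this work.

```python
def rnn_param_count(input_size, hidden_size):
    """Input weights + recurrent weights + biases for one simple RNN layer."""
    return hidden_size * input_size + hidden_size * hidden_size + hidden_size


def lstm_param_count(input_size, hidden_size):
    """Same three blocks, repeated for the input, forget, output gates and cell input."""
    per_block = hidden_size * input_size + hidden_size * hidden_size + hidden_size
    return 4 * per_block


# Hypothetical sizes: scalar voltage input, 32 hidden units.
rnn_params = rnn_param_count(1, 32)
lstm_params = lstm_param_count(1, 32)
print(rnn_params, lstm_params)
```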

To implement real-time moving state estimation, we propose the network shown in Figure 6. At each time step, the input sequence passes through two recurrent layers, which produce a hidden layer containing the sequential information of the input. To convert the sequential information into a single moving state, the fully connected layer combines the information of the hidden layer and determines the final output. Given that state 1 refers to moving and 0 refers to stopping, the final output of the network represents the probability of moving. Therefore, we define the predicted moving state from this output.
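A forward pass through a network of this shape can be sketched as follows. For brevity the sketch uses simple tanh recurrent layers; it also assumes that the fully connected layer reads only the last hidden state, that a sigmoid produces the probability, and that the predicted state is 1 when the probability exceeds 0.5. None of these details are confirmed by the text, and all names are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def rnn_layer(sequence, W, U, b):
    """Run a tanh recurrent layer and return the hidden state at every step."""
    h = [0.0] * len(b)
    outputs = []
    for x_t in sequence:
        h = [
            math.tanh(
                b[i]
                + sum(W[i][j] * x_t[j] for j in range(len(x_t)))
                + sum(U[i][k] * h[k] for k in range(len(h)))
            )
            for i in range(len(b))
        ]
        outputs.append(h)
    return outputs


def predict_moving_state(window, layer1, layer2, fc_weights, fc_bias, threshold=0.5):
    """Two stacked recurrent layers, then a fully connected sigmoid output."""
    sequence = [[v] for v in window]         # one scalar voltage per time step
    hidden1 = rnn_layer(sequence, *layer1)   # first recurrent layer
    hidden2 = rnn_layer(hidden1, *layer2)    # second recurrent layer
    last = hidden2[-1]                       # assumption: use the last step only
    logit = fc_bias + sum(w * h for w, h in zip(fc_weights, last))
    probability = sigmoid(logit)
    return probability, (1 if probability > threshold else 0)


# Hypothetical untrained parameters with one hidden unit per layer.
layer = ([[0.0]], [[0.0]], [0.0])
p_rest, s_rest = predict_moving_state([0.1] * 10, layer, layer, [0.0], 0.0)
p_move, s_move = predict_moving_state([0.1] * 10, layer, layer, [0.0], 2.0)
print(p_rest, s_rest, p_move, s_move)
```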

We trained the network for 100 epochs using stochastic gradient descent (SGD) as the optimizer. In addition, we applied a dropout layer with a rate of 50% after the final recurrent layer during training to avoid overfitting.
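To make the role of the dropout layer concrete, the sketch below shows one common variant, inverted dropout, in which kept activations are rescaled during training so that no change is needed at inference time. Whether the original implementation used this variant is an assumption, and the function name and seed are hypothetical.

```python
import random


def dropout(values, rate=0.5, training=True, rng=None):
    """Zero each value with probability `rate` and rescale the rest (training only)."""
    if not training or rate == 0.0:
        return list(values)  # inference: pass activations through unchanged
    rng = rng or random.Random()
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in values]


hidden = [1.0] * 100
train_out = dropout(hidden, rate=0.5, training=True, rng=random.Random(0))
eval_out = dropout(hidden, rate=0.5, training=False)
print(sum(1 for v in train_out if v == 0.0), eval_out[:3])
```

Randomly silencing half of the recurrent outputs at each training step discourages the fully connected layer from relying on any single hidden unit.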

As the recurrent unit, we used simple RNN and LSTM units and compared the results. Table 2 displays the accuracy on the training set and the test set for both methods.

According to the results, the model using the LSTM unit exhibited slightly higher accuracy on both the training and test sets. In addition, the test accuracy of the model using the LSTM unit is more consistent than that of the model using the simple RNN. The results indicate that simply substituting the recurrent unit, without changing the network structure, improves the performance of the proposed network. As stated in [25], the simple RNN structure has a modeling weakness, and the LSTM structure is used to overcome the vanishing error [24,26]. The vanishing of the error gradient becomes more severe as the long-term dependencies between input and output grow longer. This means that the LSTM unit can propagate the error information further back than the RNN unit during training when the training data include many recurrent time steps. Since each training sample in our work includes 10 time steps of sensor data, the LSTM unit can propagate the information further than the simple RNN unit when training the network. Therefore, we conclude that the LSTM unit is better suited than the simple RNN unit for soft sensor characterization, and we chose the LSTM unit for the proposed network.