Statistical Validation Framework for Automotive Vehicle Simulations Using Uncertainty Learning

Abstract

1. Introduction

1. the negligence of uncertainty,

2. the binary, low-information validation result,

3. the low extrapolation capability of model reliability,

4. the small application domain size.

- Summarising the insufficiencies of validation in the automotive domain, which prevent a reliability assessment of simulation models and system safety.

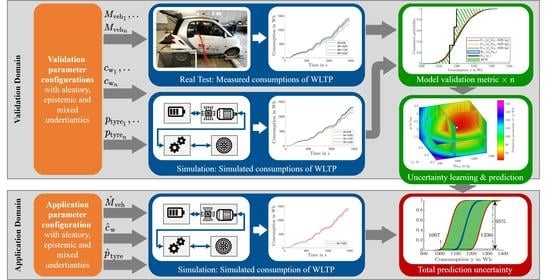

- A statistical VV+UQ framework with uncertainty learning for the precise validation of a large application domain.

- First application of a statistical VV+UQ framework predicting model uncertainties of new parameter configurations.

- Explanation, validation and discussion of the framework with real world data from a prototype electric vehicle on a roller dynamometer.

- Solving the new key requirements of automotive validation and recommending four improvement strategies to allow efficient error targeting for revising automotive M+S processes and total system understanding.

2. Model Validation

2.1. Philosophy of the Science of Validation

2.2. Validation Processes

2.3. Statistical Validation in Automotive Domain

3. Statistical Validation Method

3.1. Concept and Previous Work

3.2. Detailed Explanation

4. System, Model and Parameters

4.1. System Setup, Control and Measurement

4.2. Simulation Model and Verification

4.3. Parameter Identification

5. Validation Domain

5.1. Validation Parameter Configurations

5.2. System and Application Assessment

5.3. Model and Application Assessment

5.4. Validation Metric and Decision-Making

5.5. Validation Uncertainty Learning

6. Application Domain

6.1. Application Parameter Configurations

6.2. Model Simulation and Application Assessment

6.3. Validation Uncertainty Prediction and Integration

6.4. Application Decision-Making

1. Measure the epistemic parameters more precisely to reduce the blue area.

2. Control the test setup to reduce the natural variation of the aleatory parameters, resulting in a smaller width of the s-curve.

3. Use a more detailed model to reduce the inherent model error and validate it in more validation parameter configurations to reduce the prediction uncertainty. This results in a smaller green area.

4. Use finer steps to reduce numerical uncertainties.
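The effect of the first strategy on the p-box can be illustrated with a small nested-sampling sketch. It is a minimal illustration under stated assumptions, not the implementation used in the framework: `toy_model`, the epistemic intervals, the normal aleatory distribution and all numerical values are hypothetical. The outer loop sweeps an epistemic parameter over its interval, the inner loop samples an aleatory parameter, and the envelope of the resulting empirical CDFs forms the p-box.

```python
import numpy as np


def empirical_cdf(samples, grid):
    """Fraction of samples less than or equal to each grid value."""
    sorted_samples = np.sort(samples)
    return np.searchsorted(sorted_samples, grid, side="right") / len(samples)


def pbox(model, epistemic_interval, aleatory_std, grid,
         n_outer=20, n_inner=500, seed=0):
    """Nested sampling: outer loop over the epistemic interval,
    inner loop over the aleatory distribution."""
    rng = np.random.default_rng(seed)
    low, high = epistemic_interval
    cdfs = []
    for theta in np.linspace(low, high, n_outer):
        # Aleatory parameter: natural variation, assumed normal here.
        a = rng.normal(0.0, aleatory_std, n_inner)
        cdfs.append(empirical_cdf(model(theta, a), grid))
    cdfs = np.array(cdfs)
    # Lower and upper bounding CDF of the p-box.
    return cdfs.min(axis=0), cdfs.max(axis=0)


def pbox_area(lower, upper, grid):
    """Area enclosed between the bounding CDFs (trapezoidal rule)."""
    gap = upper - lower
    return float(np.sum(0.5 * (gap[1:] + gap[:-1]) * np.diff(grid)))


def toy_model(theta, a):
    """Hypothetical response: linear in the epistemic parameter theta,
    shifted by the aleatory disturbance a."""
    return 100.0 * theta + a


grid = np.linspace(70.0, 130.0, 601)

# Wide epistemic interval: the parameter is known only roughly.
lower_wide, upper_wide = pbox(toy_model, (0.90, 1.10), 2.0, grid)
area_wide = pbox_area(lower_wide, upper_wide, grid)

# Narrow epistemic interval: the parameter was measured more precisely.
lower_narrow, upper_narrow = pbox(toy_model, (0.98, 1.02), 2.0, grid)
area_narrow = pbox_area(lower_narrow, upper_narrow, grid)

print(f"p-box area, wide interval:   {area_wide:.2f}")
print(f"p-box area, narrow interval: {area_narrow:.2f}")
```

In this sketch, narrowing the epistemic interval shrinks the area between the bounding CDFs, while lowering `aleatory_std` makes each individual CDF steeper, which corresponds to a narrower s-curve.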

7. Validation and Discussion of the Framework

8. Conclusions

1. The binary, low-information validation result is solved by the high-information total prediction uncertainty in the form of a p-box.

2. The negligence of uncertainties is solved by considering uncertainties and non-deterministic simulations.

3. The low extrapolation capability of the model reliability is solved by uncertainty learning and prediction.

4. The resulting small application domain is solved by uncertainty prediction in large application domains.
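The idea of uncertainty learning and prediction can be sketched as follows. This is a hedged illustration only: it computes an area validation metric (AVM) as the area between the empirical CDFs of simulation and experiment at a few validation configurations, fits an ordinary least-squares line to these values, and evaluates a conservative upper bound at a new configuration. The linear regression is a simplification chosen for brevity, and the vehicle masses, sample values, `coverage_factor` and function names are hypothetical.

```python
import numpy as np


def area_validation_metric(sim_samples, exp_samples):
    """Area between the empirical CDFs of simulation and experiment."""
    sim = np.sort(np.asarray(sim_samples, dtype=float))
    exp = np.sort(np.asarray(exp_samples, dtype=float))
    grid = np.sort(np.concatenate([sim, exp]))
    f_sim = np.searchsorted(sim, grid, side="right") / sim.size
    f_exp = np.searchsorted(exp, grid, side="right") / exp.size
    # Both CDFs are step functions, constant between consecutive grid points.
    return float(np.sum(np.abs(f_sim - f_exp)[:-1] * np.diff(grid)))


def learn_and_predict(x_val, avm_val, x_new, coverage_factor=2.0):
    """Fit a line to the AVM values and return the predicted mean and an
    upper bound at x_new. coverage_factor is a hypothetical multiplier
    that stands in for a proper quantile of the t-distribution."""
    x_val = np.asarray(x_val, dtype=float)
    avm_val = np.asarray(avm_val, dtype=float)
    n = x_val.size
    slope, intercept = np.polyfit(x_val, avm_val, deg=1)
    residuals = avm_val - (slope * x_val + intercept)
    s = np.sqrt(np.sum(residuals**2) / (n - 2))
    sxx = np.sum((x_val - x_val.mean()) ** 2)
    # Standard error of a single new prediction in simple linear regression.
    se = s * np.sqrt(1.0 + 1.0 / n + (x_new - x_val.mean()) ** 2 / sxx)
    mean = slope * x_new + intercept
    return float(mean), float(mean + coverage_factor * se)


# Hypothetical validation configurations (vehicle mass in kg).
masses = [1000.0, 1100.0, 1200.0, 1300.0]
# Hypothetical model bias per configuration; simulation = experiment + bias.
biases = [1.00, 1.12, 1.19, 1.31]
exp_samples = np.array([10.0, 11.0, 12.0])  # three repeated measurements

avm_val = [area_validation_metric(exp_samples + b, exp_samples) for b in biases]

# Predict the model uncertainty at a new, unmeasured configuration.
avm_mean, avm_upper = learn_and_predict(masses, avm_val, x_new=1400.0)
print(f"predicted AVM: {avm_mean:.3f}, upper bound: {avm_upper:.3f}")
```

The upper bound widens as the new configuration moves away from the validated ones, which is why validating more configurations reduces the prediction uncertainty in the application domain.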

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| ASME | American Society of Mechanical Engineers |
| AVM | Area Validation Metric |
| CAN | Controller Area Network |
| CDF | Cumulative Distribution Function |
| DoE | Design of Experiment |
| GP | Gaussian Process |
| M+S | Modelling and Simulation |
| OFAT | One Factor at a Time |
| p-box | Probability Box |
| PCE | Polynomial Chaos Expansion |
| SRQ | System Response Quantity of Interest |
| V+V | Verification and Validation |
| VV+UQ | Verification, Validation and Uncertainty Quantification |
| WLTP | Worldwide Harmonised Light Vehicle Test Procedure |


| Description | Variable | Value |
|---|---|---|
| Vehicle speed | | |
| Vehicle mass | | |
| Axle load distribution | | |
| Tyre pressure | | |
| Tyre radius | | |
| Roll resistance coeff. | | |
| Gear ratio | | |
| Eff. motor | | |
| Eff. power electronic | | |
| Auxiliary power | | |
| Front surface | A | |
| Aerodynamic drag coeff. | | |
| Air density | | |
| Dyna. calibration | | |
| Voltage power supply | | |

| 1 | 0.37 | | | | | | |
| 2 | 0.37 | | | | | | |
| 3 | 0.37 | | | | | | |
| 4 | 0.37 | | | | | | |
| 5 | 0.27 | | | | | | |
| 6 | 0.32 | | | | | | |
| 7 | 0.42 | | | | | | |
| 8 | 0.37 | | | | | | |
| 9 | 0.37 | | | | | | |
| 10 | 0.37 | | | | | | |

| No. | | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| 1 | | | | | | | | |
| 2 | | | | | | | | |

| Task | Time | Location |
|---|---|---|
| Measure one configuration three times | | Test bench |
| Calculate model uncertainty of one config. | | Cluster |
| Calculate model uncertainty of all ten configs. | | Cluster |
| Train uncertainty learning model | | Cluster |
| Predict new parameter configuration | | Cluster |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Danquah, B.; Riedmaier, S.; Meral, Y.; Lienkamp, M. Statistical Validation Framework for Automotive Vehicle Simulations Using Uncertainty Learning. Appl. Sci. 2021, 11, 1983. https://doi.org/10.3390/app11051983
