A Fingerprint-Based Verification Framework Using Harris and SURF Feature Detection Algorithms

Abstract

1. Introduction

- Correlation-based matching: In this approach, two fingerprint images are superimposed and the correlation between corresponding pixels is computed for various alignments (different displacements and rotations). The principal disadvantage of correlation-based matching is its computational complexity. Moreover, it requires an accurate location of the reference point used for alignment and is sensitive to non-linear distortion.

- Minutiae-based matching: This is the most common and widely used approach, and it is also the basis on which fingerprint analysts conventionally compare fingerprints. Minutiae are extracted from the two fingerprint images and stored as point sets in the two-dimensional plane. Minutiae-based matching essentially consists of finding the alignment between the template and input minutiae sets that yields the maximum number of minutiae pairs [5].

- Pattern-based (image-based) matching: This approach compares the basic fingerprint patterns (whorl, arch, and loop) of a candidate's fingerprint with those of the pre-stored template, which requires the fingerprint images to be aligned in the same orientation. For this purpose, the algorithm detects a central point in the image. The template contains the size, type, and orientation of the patterns in the aligned fingerprint image, and it is compared graphically with the candidate image to determine the degree of similarity.
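The correlation-based and minutiae-based strategies described above can be sketched in plain Python as follows. This is an illustrative sketch under stated assumptions, not the matcher used in this work (which relies on Harris and SURF features): grayscale images are assumed to be lists of pixel rows, the correlation search covers displacements only (rotations are omitted for brevity), minutiae are assumed to be `(x, y, theta)` tuples with `theta` in radians, and the parameters `max_shift`, `dist_tol`, and `ang_tol` are hypothetical values chosen for illustration. Minutiae pairing is greedy rather than optimal.

```python
import math

# --- Correlation-based matching (displacement search only) ---

def ncc(a, b):
    """Normalized cross-correlation of two equal-length pixel lists."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    num = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    den = math.sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))
    return num / den if den else 0.0


def best_correlation(template, query, max_shift=2):
    """Try every displacement (dx, dy) up to max_shift and return the highest
    NCC over the overlapping region together with the shift that produced it."""
    h, w = len(template), len(template[0])
    best = (-1.0, (0, 0))
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            a, b = [], []
            for y in range(h):
                for x in range(w):
                    qy, qx = y + dy, x + dx
                    if 0 <= qy < h and 0 <= qx < w:
                        a.append(template[y][x])
                        b.append(query[qy][qx])
            score = ncc(a, b)
            if score > best[0]:
                best = (score, (dx, dy))
    return best


# --- Minutiae-based matching (pairing under a rigid alignment) ---

def transform(m, dtheta, tx, ty):
    """Rotate minutia m = (x, y, theta) by dtheta about the origin, then translate."""
    x, y, th = m
    c, s = math.cos(dtheta), math.sin(dtheta)
    return (c * x - s * y + tx, s * x + c * y + ty, (th + dtheta) % (2 * math.pi))


def angle_diff(a, b):
    """Smallest absolute difference between two angles, in radians."""
    d = abs(a - b) % (2 * math.pi)
    return min(d, 2 * math.pi - d)


def count_pairs(template, aligned, dist_tol, ang_tol):
    """Greedy one-to-one pairing of aligned query minutiae with template minutiae."""
    used, pairs = set(), 0
    for q in aligned:
        for i, t in enumerate(template):
            close = math.hypot(t[0] - q[0], t[1] - q[1]) <= dist_tol
            if i not in used and close and angle_diff(t[2], q[2]) <= ang_tol:
                used.add(i)
                pairs += 1
                break
    return pairs


def minutiae_match(template, query, dist_tol=10.0, ang_tol=math.radians(15)):
    """Hypothesize an alignment from every (template, query) minutia pair and
    return the largest number of paired minutiae that any alignment achieves."""
    best = 0
    for t in template:
        for q in query:
            dtheta = t[2] - q[2]
            c, s = math.cos(dtheta), math.sin(dtheta)
            # Translation that moves the rotated q exactly onto t.
            tx = t[0] - (c * q[0] - s * q[1])
            ty = t[1] - (s * q[0] + c * q[1])
            aligned = [transform(m, dtheta, tx, ty) for m in query]
            best = max(best, count_pairs(template, aligned, dist_tol, ang_tol))
    return best
```

For example, if `moved` is a copy of a minutiae list that has been rotated and translated with `transform`, `minutiae_match(minutiae, moved)` should return the full number of minutiae, because one of the hypothesized alignments undoes the transformation. Trying every pair is quadratic in the number of minutiae, which illustrates why alignment search dominates the cost of this family of matchers.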

2. Related Work

3. Proposed Methodology

3.1. System Model Block Diagram

3.2. Fingerprint Image Preprocessing

3.2.1. Histogram Equalization

3.2.2. Normalization

3.2.3. Image Segmentation

3.2.4. Fourier Transform

3.2.5. Ridge Orientation Estimation

3.2.6. Ridge Frequency Estimation

3.2.7. Gabor Filtering

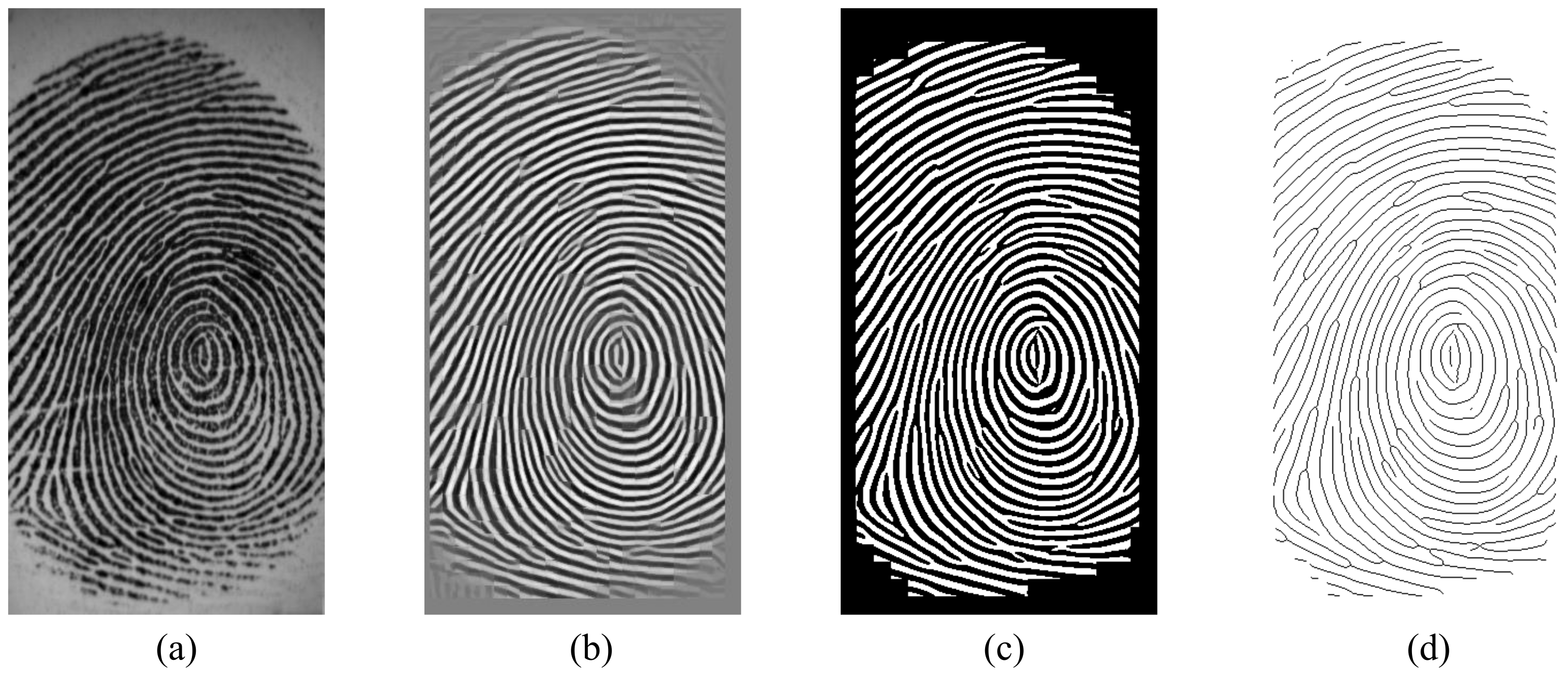

3.2.8. Binarization and Thinning

3.3. Fingerprint Feature Extraction

3.4. Harris Corner Detection

- If one eigenvalue ($\lambda_1$ or $\lambda_2$) is large and considerably greater than the other, the pixel lies on an edge.

- If $\lambda_1 \approx \lambda_2$ and both values are small (near zero), the pixel lies in a flat region.

- If $\lambda_1 \approx \lambda_2$ and both have large positive values, the pixel is a corner.
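The eigenvalue rules above can be illustrated with a short pure-Python sketch. It assumes a grayscale image given as a list of rows, central-difference gradients $I_x$ and $I_y$, and a uniform 3×3 window $W$ in place of the Gaussian weighting usually applied when forming the structure tensor $M = \sum_{(u,v) \in W} \begin{pmatrix} I_x^2 & I_x I_y \\ I_x I_y & I_y^2 \end{pmatrix}$; the thresholds `flat_tol` and `ratio` are hypothetical. It also computes the response $R = \det(M) - k\,\operatorname{tr}(M)^2$, which Harris and Stephens [24] proposed so that the eigenvalues need not be computed explicitly, using the commonly chosen $k = 0.04$.

```python
import math


def gradients(img):
    """Central-difference gradients I_x, I_y (border pixels are left at 0)."""
    h, w = len(img), len(img[0])
    ix = [[0.0] * w for _ in range(h)]
    iy = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            ix[y][x] = (img[y][x + 1] - img[y][x - 1]) / 2.0
            iy[y][x] = (img[y + 1][x] - img[y - 1][x]) / 2.0
    return ix, iy


def structure_tensor(ix, iy, x, y, r=1):
    """Entries (a, b, c) of M = [[a, b], [b, c]] summed over a (2r+1)x(2r+1) box
    (uniform window; an assumption in place of Gaussian weighting)."""
    a = b = c = 0.0
    for v in range(y - r, y + r + 1):
        for u in range(x - r, x + r + 1):
            a += ix[v][u] ** 2
            b += ix[v][u] * iy[v][u]
            c += iy[v][u] ** 2
    return a, b, c


def eigenvalues(a, b, c):
    """Closed-form eigenvalues (lambda1 >= lambda2) of the symmetric matrix M."""
    half_trace = (a + c) / 2.0
    root = math.sqrt(((a - c) / 2.0) ** 2 + b * b)
    return half_trace + root, half_trace - root


def harris_response(a, b, c, k=0.04):
    """R = det(M) - k * trace(M)^2: typically positive at corners,
    negative on edges, and near zero in flat regions."""
    return (a * c - b * b) - k * (a + c) ** 2


def classify(img, x, y, flat_tol=1e-3, ratio=5.0):
    """Apply the eigenvalue rules at pixel (x, y); thresholds are hypothetical."""
    ix, iy = gradients(img)
    l1, l2 = eigenvalues(*structure_tensor(ix, iy, x, y))
    if l1 < flat_tol:
        return "flat"    # both eigenvalues near zero
    if l1 > ratio * l2:
        return "edge"    # one eigenvalue dominates the other
    return "corner"      # both eigenvalues large and comparable
```

For a 9×9 image containing a single bright quadrant (pixels with x ≥ 4 and y ≥ 4 set to 1), `classify(img, 4, 4)` returns `"corner"`, since the two eigenvalues at that pixel are comparable (1.25 and 0.75), whereas a vertical step edge yields eigenvalues 1.5 and 0 and is classified as `"edge"`.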

3.5. Speeded Up Robust Feature (SURF) Algorithm

3.6. Fingerprint Feature Matching

4. Experimental Results

Discussion of Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Maltoni, D.; Maio, D.; Jain, A.K.; Prabhakar, S. Handbook of Fingerprint Recognition; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2009.

2. Sahu, D.; Shrivas, R. Fingerprint reorganization using minutiae based matching for identification and verification. Int. J. Sci. Res. 2013, 5, NOV163751.

3. Tripathi, M.; Shrivastava, D. Designing of Fingerprint Recognition System Using Minutia Extraction and Matching. Int. J. Sci. Eng. Comput. Technol. 2015, 5, 120–126.

4. Patel, H.; Asrodia, P. Fingerprint matching using two methods. Int. J. Eng. Res. Appl. 2012, 2, 857–860.

5. Shukla, P.; Abhishek, R. Fingerprint Recognition System. Int. J. Eng. Dev. Res. 2014, 2, 3140–3150.

6. Lourde, M.; Khosla, D. Fingerprint Identification in Biometric Security Systems. Int. J. Comput. Electr. Eng. 2010, 2, 852–855.

7. Muhsain, N.F. Fingerprint Recognition using Prepared Codebook and Back-propagation. AL-Mansour J. 2011, 15, 31–45.

8. Ali, M.M.; Mahale, V.H.; Yannawar, P.; Gaikwad, A. Fingerprint recognition for person identification and verification based on minutiae matching. In Proceedings of the IEEE 6th International Conference on Advanced Computing (IACC), Bhimavaram, India, 27–28 February 2016; pp. 332–339.

9. Kuban, K.H.; Jwaid, W.M. A novel modification of surf algorithm for fingerprint matching. J. Theor. Appl. Inf. Technol. 2018, 96, 1–12.

10. Ahmad, A.; Ismail, S.; Jawad, M.A. Human identity verification via automated analysis of fingerprint system features. Int. J. Innov. Comput. Inf. Control. IJICIC 2019, 15, 2183–2196.

11. Patel, M.B.; Parikh, S.M.; Patel, A.R. An improved approach in fingerprint recognition algorithm. In Smart Computational Strategies: Theoretical and Practical Aspects; Springer: Berlin/Heidelberg, Germany, 2019; pp. 135–151.

12. Kumar, R. Orientation Local Binary Pattern Based Fingerprint Matching. SN Comput. Sci. 2020, 1, 1–12.

13. Awasthi, G.; Fadewar, D.; Siddiqui, A.; Gaikwad, B.P. Analysis of Fingerprint Recognition System Using Neural Network. In Proceedings of the 2nd International Conference on Communication & Information Processing (ICCIP), Singapore, 26–29 November 2020.

14. Appati, J.K.; Nartey, P.K.; Owusu, E.; Denwar, I.W. Implementation of a Transform-Minutiae Fusion-Based Model for Fingerprint Recognition. Int. J. Math. Math. Sci. 2021, 2021, 5545488.

15. Haftu, T. Performance Analysis and Evaluation of Image Enhancement Techniques for Automatic Fingerprint Recognition System using Minutiae Extraction. Ph.D. Thesis, Addis Ababa University, Addis Ababa, Ethiopia, 2018.

16. Kumar, N.; Verma, P. Fingerprint image enhancement and minutia matching. Int. J. Eng. Sci. Emerg. Technol. (IJESET) 2012, 2, 37–42.

17. Sepasian, M.; Balachandran, W.; Mares, C. Image enhancement for fingerprint minutiae-based algorithms using CLAHE, standard deviation analysis and sliding neighborhood. In Proceedings of the World Congress on Engineering and Computer Science, San Francisco, CA, USA, 22–24 October 2008; pp. 22–24.

18. Bhargava, N.; Kumawat, A.; Bhargava, R. Fingerprint Matching of Normalized Image based on Euclidean Distance. Int. J. Comput. Appl. 2015, 120, 20–23.

19. Chaudhari, A.S.; Lade, S.; Pande, D.S. Improved Technique for Fingerprint Segmentation. Int. J. Adv. Res. Comput. Sci. Manag. Stud. 2014, 2, 402–411.

20. Geteneh, A. Designing Fingerprint Based Verification System Using Image Processing: The Case of National Examination. Ph.D. Thesis, Bahir Dar University, Bahir Dar, Ethiopia, 2019.

21. Azad, P.; Asfour, T.; Dillmann, R. Combining Harris interest points and the SIFT descriptor for fast scale-invariant object recognition. In Proceedings of the 2009 IEEE/RSJ International Conference on Intelligent Robots and Systems, St. Louis, MO, USA, 10–15 October 2009; pp. 4275–4280.

22. Bakheet, S.; Al-Hamadi, A. Automatic detection of COVID-19 using pruned GLCM-Based texture features and LDCRF classification. Comput. Biol. Med. 2021, 137, 104781–104791.

23. Wu, C. Advanced Feature Extraction Algorithms for Automatic Fingerprint Recognition Systems; Citeseer: Princeton, NJ, USA, 2007; Volume 68.

24. Harris, C.; Stephens, M. A Combined Corner and Edge Detector. In Proceedings of the 4th Alvey Vision Conference, Manchester, UK, 31 August–2 September 1988; pp. 147–151.

25. Bakheet, S.; Al-Hamadi, A. Robust hand gesture recognition using multiple shape-oriented visual cues. EURASIP J. Image Video Process. 2021, 2021, 1–18.

26. Bakheet, S.; Al-Hamadi, A. A Framework for Instantaneous Driver Drowsiness Detection Based on Improved HOG Features and Naïve Bayesian Classification. Brain Sci. 2021, 11, 240–254.

27. Du, G.; Su, F.; Cai, A. Face recognition using SURF features. In Proceedings of the MIPPR 2009: Pattern Recognition and Computer Vision, Yichang, China, 30 October–1 November 2009; International Society for Optics and Photonics: Bellingham, WA, USA, 2009; Volume 7496.

28. Fingerprint Verification Competition FVC2000. Available online: http://bias.csr.unibo.it/fvc2000/ (accessed on 22 September 2021).

29. Fingerprint Verification Competition FVC2002. Available online: http://bias.csr.unibo.it/fvc2002/ (accessed on 22 September 2021).

30. Maio, D.; Maltoni, D.; Cappelli, R.; Wayman, J.L.; Jain, A.K. FVC2002: Second fingerprint verification competition. In Proceedings of the Object Recognition Supported by User Interaction for Service Robots, Quebec City, QC, Canada, 11–15 August 2002; Volume 3, pp. 811–814.

31. Vitello, G.; Conti, V.; Vitabile, S.; Sorbello, F. Fingerprint quality evaluation in a novel embedded authentication system for mobile users. Mob. Inf. Syst. 2015, 2015, 401975.

32. Chavan, S.; Mundada, P.; Pal, D. Fingerprint authentication using gabor filter based matching algorithm. In Proceedings of the International Conference on Technologies for Sustainable Development (ICTSD), Mumbai, India, 4–6 February 2015; pp. 1–6.

33. Francis-Lothai, F.; Bong, D.B. A fingerprint matching algorithm using bit-plane extraction method with phase-only correlation. Int. J. Biom. 2017, 9, 44–66.

Per-user recognition results on DB1-FVC2000 (8 samples per user):

| User | Recognized Samples | Accuracy (%) |
|---|---|---|
| 1 | 8 | 100 |
| 2 | 8 | 100 |
| 3 | 8 | 100 |
| 4 | 6 | 75 |
| 5 | 7 | 87.5 |
| 6 | 8 | 100 |
| 7 | 6 | 75 |
| 8 | 7 | 87.5 |
| 9 | 8 | 100 |
| 10 | 8 | 100 |
| Total | 74 | 92.5 |

Per-user recognition results on DB1-FVC2002 (8 samples per user):

| User | Recognized Samples | Accuracy (%) |
|---|---|---|
| 1 | 8 | 100 |
| 2 | 7 | 87.5 |
| 3 | 8 | 100 |
| 4 | 7 | 87.5 |
| 5 | 8 | 100 |
| 6 | 8 | 100 |
| 7 | 7 | 87.5 |
| 8 | 8 | 100 |
| 9 | 8 | 100 |
| 10 | 7 | 87.5 |
| Total | 76 | 95 |
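The accuracy figures in the two per-user tables follow directly from the recognized-sample counts. The short check below reproduces them, assuming eight test samples per user (as implied by 8 recognized samples corresponding to 100%); the function and variable names are illustrative. The resulting totals, 92.5% and 95%, match the DB1-FVC2000 and DB1-FVC2002 entries of the comparison tables.

```python
# Accuracy = recognized samples / samples per user. Eight samples per user is
# implied by the tables themselves (8 recognized samples corresponds to 100%).
SAMPLES_PER_USER = 8


def per_user_accuracy(recognized):
    """Percentage of each user's samples that were recognized."""
    return [100.0 * r / SAMPLES_PER_USER for r in recognized]


def overall_accuracy(recognized):
    """Total recognized samples divided by total samples, as a percentage."""
    return 100.0 * sum(recognized) / (SAMPLES_PER_USER * len(recognized))


# "Recognized Samples" columns of the two per-user tables above.
first_table = [8, 8, 8, 6, 7, 8, 6, 7, 8, 8]
second_table = [8, 7, 8, 7, 8, 8, 7, 8, 8, 7]

print(sum(first_table), overall_accuracy(first_table))    # 74 92.5
print(sum(second_table), overall_accuracy(second_table))  # 76 95.0
```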

Recognition accuracy compared with other methods on DB1-FVC2000:

| Dataset | Our Method | Chavan et al. [32] | Ahmad et al. [10] | Ali et al. [8] |
|---|---|---|---|---|
| DB1-FVC2000 | 92.5% | 82.95% | 82.43% | 80.03% |

Recognition accuracy compared with other methods on DB1-FVC2002:

| Dataset | Our Method | Muhsain [7] | Awasthi et al. [13] | Francis-Lothai et al. [33] |
|---|---|---|---|---|
| DB1-FVC2002 | 95% | 94% | 91.10% | 81.16% |

| Stage | Elapsed Time (s) |
|---|---|
| Enhancement | 3.786178 |
| Feature extraction | 0.139466 |
| Recognition | 0.106853 |
| Total | 4.032497 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Bakheet, S.; Al-Hamadi, A.; Youssef, R. A Fingerprint-Based Verification Framework Using Harris and SURF Feature Detection Algorithms. Appl. Sci. 2022, 12, 2028. https://doi.org/10.3390/app12042028
