Precision and Accuracy Assessment of Cephalometric Analyses Performed by Deep Learning Artificial Intelligence with and without Human Augmentation

Abstract

1. Introduction

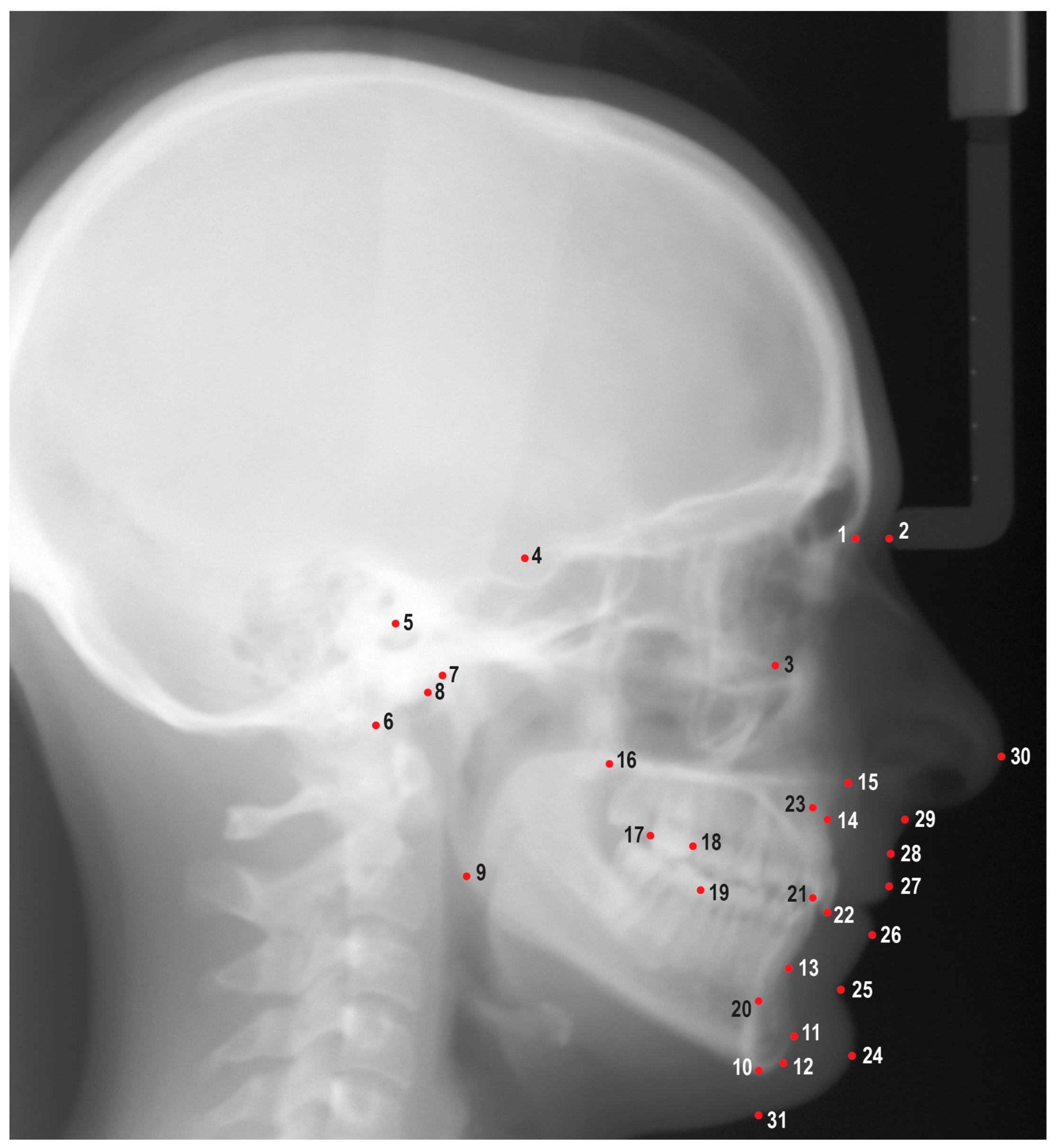

2. Materials and Methods

2.1. Sample Size Justification

2.2. Data Collection

2.3. Software

2.4. Data Analyses

2.5. Statistical Analyses

3. Results

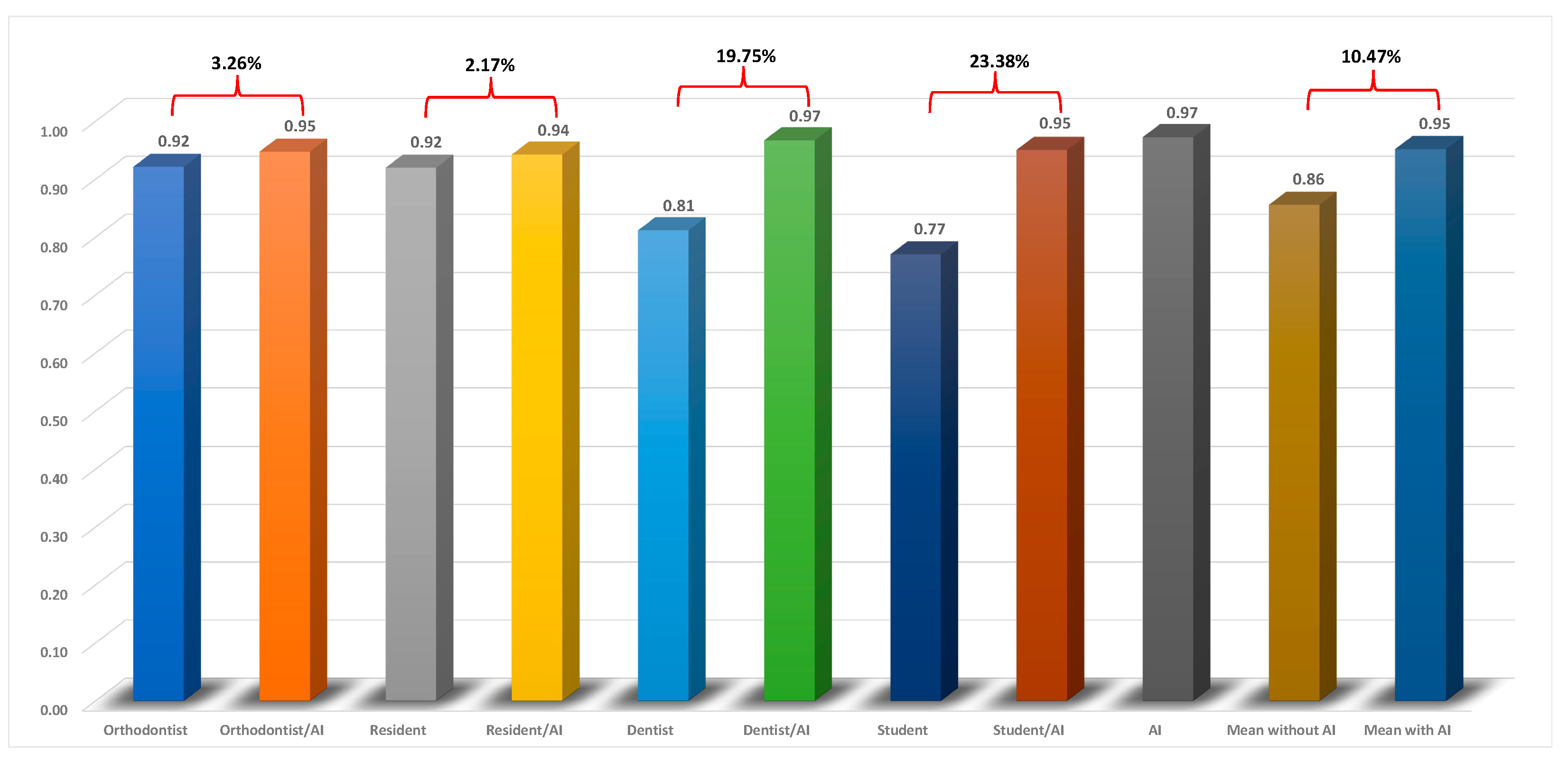

3.1. Precision Analysis

3.2. Accuracy Analysis

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Measurements | Orthodontist | Orthodontist AI | Resident | Resident AI | Dentist | Dentist AI | Student | Student AI | AI | Mean without AI | Mean with AI |
|---|---|---|---|---|---|---|---|---|---|---|---|
| SNA (°) | 0.92 | 0.93 | 0.91 | 0.93 | 0.71 | 0.98 | 0.39 | 0.94 | 0.97 | 0.73 | 0.95 |
| SN-Palatal Plane (°) | 0.93 | 0.93 | 0.89 | 0.98 | 0.62 | 0.96 | 0.74 | 0.96 | 0.92 | 0.80 | 0.96 |
| SNB (°) | 0.96 | 0.94 | 0.92 | 0.98 | 0.77 | 0.98 | 0.92 | 0.96 | 0.99 | 0.89 | 0.97 |
| FMA (°) | 0.95 | 0.93 | 0.94 | 0.98 | 0.88 | 0.97 | 0.88 | 0.98 | 0.99 | 0.91 | 0.97 |
| SN-MP (°) | 0.99 | 0.95 | 0.95 | 0.99 | 0.88 | 0.98 | 0.95 | 0.99 | 1.00 | 0.94 | 0.98 |
| Y-Axis (°) | 0.97 | 0.96 | 0.92 | 0.98 | 0.79 | 0.98 | 0.93 | 0.97 | 0.99 | 0.90 | 0.97 |
| ANB (°) | 0.81 | 0.91 | 0.84 | 0.73 | 0.60 | 1.00 | 0.13 | 0.89 | 0.91 | 0.60 | 0.88 |
| ANS-PNS (mm) | 0.78 | 0.92 | 0.86 | 0.91 | 0.58 | 0.98 | 0.65 | 0.93 | 0.99 | 0.72 | 0.94 |
| Co-Gn (mm) | 0.95 | 0.96 | 0.96 | 0.99 | 0.82 | 0.98 | 0.88 | 0.97 | 1.00 | 0.90 | 0.98 |
| Ba-S-N (°) | 0.97 | 0.96 | 0.94 | 0.98 | 0.87 | 0.94 | 0.87 | 0.96 | 1.00 | 0.91 | 0.96 |
| U1-SN (°) | 0.95 | 0.96 | 0.93 | 0.95 | 0.91 | 0.93 | 0.90 | 0.95 | 0.98 | 0.92 | 0.95 |
| U1-NA (°) | 0.93 | 0.96 | 0.96 | 0.94 | 0.92 | 0.93 | 0.90 | 0.95 | 0.97 | 0.93 | 0.95 |
| U1-NA (mm) | 0.88 | 0.92 | 0.90 | 0.81 | 0.71 | 0.96 | 0.22 | 0.91 | 0.91 | 0.68 | 0.90 |
| L1-MP (°) | 0.94 | 0.96 | 0.95 | 0.96 | 0.96 | 0.98 | 0.90 | 0.93 | 0.99 | 0.94 | 0.96 |
| L1-NB (°) | 0.92 | 0.98 | 0.94 | 0.94 | 0.93 | 0.97 | 0.84 | 0.88 | 0.99 | 0.91 | 0.94 |
| L1-NB (mm) | 0.94 | 0.96 | 0.96 | 0.98 | 0.94 | 1.00 | 0.92 | 0.97 | 0.99 | 0.94 | 0.98 |
| Interincisal Angle (°) | 0.96 | 0.98 | 0.96 | 0.96 | 0.96 | 0.96 | 0.90 | 0.94 | 0.99 | 0.95 | 0.96 |
| Upper Lip to E-Plane (mm) | 0.99 | 0.99 | 0.99 | 0.99 | 0.99 | 0.98 | 0.96 | 0.98 | 1.00 | 0.98 | 0.99 |
| Lower Lip to E-Plane (mm) | 1.00 | 0.99 | 0.99 | 0.99 | 0.99 | 0.99 | 0.97 | 0.99 | 1.00 | 0.99 | 0.99 |
| N′-Sn′ (mm) | 0.75 | 0.93 | 0.85 | 0.97 | 0.67 | 0.98 | 0.85 | 0.97 | 0.99 | 0.78 | 0.96 |
| Sn′-Me′ (mm) | 0.95 | 0.97 | 0.97 | 0.96 | 0.95 | 0.82 | 0.85 | 0.94 | 1.00 | 0.93 | 0.92 |
| N-ANS (mm) | 0.94 | 0.92 | 0.75 | 0.98 | 0.56 | 0.99 | 0.81 | 0.94 | 0.89 | 0.77 | 0.96 |
| ANS-Me (mm) | 0.98 | 0.99 | 0.99 | 0.99 | 0.99 | 1.00 | 0.98 | 0.99 | 0.98 | 0.99 | 0.99 |
| Ar-Go (mm) | 0.96 | 0.92 | 0.93 | 0.99 | 0.81 | 0.97 | 0.91 | 0.99 | 0.98 | 0.90 | 0.97 |
| NA-APo (°) | 0.83 | 0.87 | 0.84 | 0.70 | 0.59 | 1.00 | 0.13 | 0.90 | 0.91 | 0.60 | 0.87 |
| FH-NPo (°) | 0.81 | 0.95 | 0.88 | 0.95 | 0.71 | 0.94 | 0.65 | 0.94 | 0.96 | 0.76 | 0.95 |
| Mean | 0.92 | 0.95 | 0.92 | 0.94 | 0.81 | 0.97 | 0.77 | 0.95 | 0.97 | 0.86 | 0.95 |
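
The "Mean without AI" and "Mean with AI" columns above appear to be unweighted means over the four unaided examiner groups and the four AI-assisted groups respectively, with the stand-alone AI column left out of both. That reading is inferred from the reported numbers, not stated in the table. A minimal Python sketch, using the SNA (°) row and a hypothetical helper `summary_means`, reproduces the two summary values up to rounding:

```python
# Values copied from the SNA (°) row of the table above.
sna_row = {
    "Orthodontist": 0.92, "Orthodontist AI": 0.93,
    "Resident": 0.91, "Resident AI": 0.93,
    "Dentist": 0.71, "Dentist AI": 0.98,
    "Student": 0.39, "Student AI": 0.94,
    "AI": 0.97,
}


def summary_means(row):
    """Return (mean of unaided groups, mean of AI-assisted groups).

    Assumption: the stand-alone "AI" column belongs to neither mean.
    """
    # Unaided groups are the columns whose name does not end in "AI".
    unaided = [v for name, v in row.items() if not name.endswith("AI")]
    # Assisted groups end in " AI" (with a space), which excludes plain "AI".
    assisted = [v for name, v in row.items() if name.endswith(" AI")]
    return sum(unaided) / len(unaided), sum(assisted) / len(assisted)


without_ai, with_ai = summary_means(sna_row)
# The table reports 0.73 and 0.95 for this row.
print(f"without AI: {without_ai:.4f}, with AI: {with_ai:.4f}")
```

Because the published cells are already rounded to two decimals, recomputed means can differ from the printed summary columns in the last digit.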

| Measurements | F-Value | p-Value | Pairwise Comparison |
|---|---|---|---|
| SNA (°) | 1.31 | 0.24 | |
| SN-Palatal Plane (°) | 1.97 | 0.05 | |
| SNB (°) | 3.98 | <0.01 | 1 < 5, 2 < 5, 3 < 5, 4 < 5, 7 < 5, 8 < 5, 5 > 6, 5 > 9 |
| FMA (°) | 9.18 | <0.01 | 1 > 7, 2 > 7, 3 > 7, 4 > 7, 7 < 8, 7 < 5, 7 < 6, 7 < 9 |
| SN-MP (°) | 5.51 | <0.01 | 1 > 3, 1 > 5, 2 < 5, 3 < 4, 3 < 8, 3 > 5, 3 < 6, 3 < 9, 4 > 5, 7 > 5, 8 > 5, 5 < 6, 5 < 9 |
| Y-Axis (°) | 6.83 | <0.01 | 1 > 5, 2 > 5, 3 > 5, 4 > 5, 7 > 5, 8 > 5, 5 < 6, 5 < 9 |
| ANB (°) | 2348.9 | <0.01 | 1 < 8, 2 < 7, 2 < 8, 3 < 8, 4 < 8, 7 < 8, 8 > 5, 8 > 6, 8 > 9 |
| ANS-PNS (mm) | 2.71 | 0.01 | 1 < 2, 2 > 3, 2 > 7, 2 > 8, 2 > 5, 3 > 7, 4 > 7, 7 < 5, 7 < 6, 7 < 9 |
| Co-Gn (mm) | 2.4 | 0.02 | 1 < 2, 1 < 5, 2 > 7, 3 < 5, 4 < 5, 7 < 5, 5 > 6, 5 > 9 |
| Ba-S-N (°) | 3.04 | <0.01 | 1 > 5, 2 > 5, 3 < 7, 4 > 5, 7 > 5, 8 > 5, 5 < 6, 5 < 9 |
| U1-SN (°) | 1.25 | 0.27 | |
| U1-NA (°) | 1.59 | 0.13 | |
| U1-NA (mm) | 0.9 | 0.52 | |
| L1-MP (°) | 2.12 | 0.03 | 1 < 3, 1 < 7, 3 > 4, 3 > 5, 4 < 7, 7 > 5, 5 < 6, 5 < 9 |
| L1-NB (°) | 0.65 | 0.74 | |
| L1-NB (mm) | 1.14 | 0.34 | |
| Interincisal Angle (°) | 0.42 | 0.9101 | |
| Upper Lip to E-Plane (mm) | 1.05 | 0.4 | |
| Lower Lip to E-Plane (mm) | 1.68 | 0.1 | |
| N′-Sn′ (mm) | 7.27 | <0.01 | 1 < 2, 1 < 3, 1 < 4, 1 < 7, 1 < 8, 1 > 5, 1 < 6, 1 < 9, 2 > 5, 3 > 5, 4 > 5, 7 > 5, 8 > 5, 5 < 6, 5 < 9 |
| Sn′-Me′ (mm) | 0.89 | 0.53 | |
| N-ANS (mm) | 6.21 | <0.01 | 1 > 5, 2 > 5, 3 > 5, 4 > 5, 7 > 5, 8 > 5, 5 < 6, 5 < 9 |
| ANS-Me (mm) | 3.87 | 0.0003 | 1 > 3, 1 > 7, 1 > 5, 1 > 9, 2 > 3, 2 > 7, 2 > 5, 2 > 9, 3 < 4, 3 < 8, 4 > 7, 4 > 5, 4 > 9, 7 < 8, 7 < 6 |
| Ar-Go (mm) | 1.18 | 0.31 | |
| NA-APo (°) | 1.3 | 0.24 | |
| FH-NPo (°) | 1.98 | 0.05 | 2 < 7, 3 < 7, 4 < 7, 7 > 6, 7 > 9, 5 > 6 |
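
Each Pairwise Comparison cell above packs the significant contrasts into comma-separated tokens of the form `a < b` or `a > b`, where `a` and `b` are group numbers; the legend mapping numbers to examiner groups is not part of this extract. As a rough sketch for readers who want to work with that column programmatically, the hypothetical helper `parse_pairwise` below splits a cell into tuples. The function name and the output shape are illustrative assumptions, not something defined by the article.

```python
import re

# One comparison token: two integers separated by "<" or ">".
PAIR = re.compile(r"^\s*(\d+)\s*([<>])\s*(\d+)\s*$")


def parse_pairwise(cell):
    """Turn a cell such as '1 < 5, 5 > 6' into [(1, '<', 5), (5, '>', 6)].

    An empty cell (no significant pairwise difference listed) yields [].
    """
    pairs = []
    for token in cell.split(","):
        if not token.strip():
            continue  # skip empty cells and stray separators
        match = PAIR.match(token)
        if match is None:
            raise ValueError(f"unrecognised comparison: {token!r}")
        left, op, right = match.groups()
        pairs.append((int(left), op, int(right)))
    return pairs


# Cell copied from the SNB (°) row of the table above.
snb_pairs = parse_pairwise("1 < 5, 2 < 5, 3 < 5, 4 < 5, 7 < 5, 8 < 5, 5 > 6, 5 > 9")
# Group numbers that take part in at least one listed contrast.
snb_groups = sorted({g for left, _, right in snb_pairs for g in (left, right)})
```

Keeping the operator as a string avoids committing to what "less than" means for a given measurement, since the table itself does not say which quantity is being ordered.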

| Measurements | Orthodontist | Orthodontist AI | Resident | Resident AI | Dentist | Dentist AI | Student | Student AI | AI | Mean without AI | Mean with AI |
|---|---|---|---|---|---|---|---|---|---|---|---|
| SNA (°) | 1.67 | 1.20 | 1.69 | 1.66 | 3.24 | 1.61 | 3.57 | 1.77 | 1.86 | 2.54 | 1.56 |
| SN-Palatal Plane (°) | 1.50 | 1.18 | 1.66 | 1.10 | 2.87 | 1.51 | 1.76 | 1.47 | 1.58 | 1.95 | 1.32 |
| SNB (°) | 1.17 | 0.86 | 1.38 | 1.22 | 2.93 | 1.31 | 1.42 | 1.24 | 1.49 | 1.73 | 1.16 |
| FMA (°) | 1.41 | 1.65 | 3.00 | 2.91 | 1.81 | 1.52 | 3.05 | 2.21 | 3.18 | 2.32 | 2.07 |
| SN-MP (°) | 1.20 | 1.36 | 1.44 | 2.51 | 2.98 | 1.33 | 2.00 | 1.62 | 2.99 | 1.91 | 1.71 |
| Y-Axis (°) | 0.83 | 0.77 | 1.26 | 0.92 | 3.29 | 1.12 | 1.36 | 0.94 | 1.09 | 1.69 | 0.94 |
| ANB (°) | 0.97 | 0.71 | 1.07 | 0.66 | 1.21 | 0.78 | 2.90 | 0.91 | 0.70 | 1.54 | 0.77 |
| ANS-PNS (mm) | 2.21 | 1.78 | 3.44 | 2.36 | 3.35 | 1.88 | 2.56 | 1.58 | 2.06 | 2.89 | 1.90 |
| Co-Gn (mm) | 2.49 | 1.66 | 1.68 | 1.30 | 2.99 | 1.38 | 2.44 | 1.48 | 1.37 | 2.40 | 1.46 |
| Ba-S-N (°) | 3.03 | 2.76 | 3.36 | 2.38 | 5.74 | 3.39 | 3.89 | 3.17 | 1.99 | 4.01 | 2.93 |
| U1-SN (°) | 2.19 | 1.84 | 3.25 | 1.94 | 5.78 | 2.99 | 4.69 | 3.48 | 2.39 | 3.98 | 2.56 |
| U1-NA (°) | 2.62 | 1.75 | 3.24 | 2.42 | 4.24 | 3.17 | 5.36 | 3.28 | 2.52 | 3.87 | 2.66 |
| U1-NA (mm) | 1.05 | 0.84 | 1.35 | 0.85 | 1.53 | 1.04 | 3.78 | 1.04 | 0.80 | 1.93 | 0.94 |
| L1-MP (°) | 1.96 | 2.19 | 2.88 | 2.60 | 2.38 | 2.04 | 3.18 | 2.61 | 3.08 | 2.60 | 2.36 |
| L1-NB (°) | 1.87 | 1.86 | 2.70 | 1.98 | 2.28 | 2.21 | 4.37 | 2.56 | 2.16 | 2.81 | 2.15 |
| L1-NB (mm) | 0.55 | 0.46 | 0.56 | 0.49 | 0.52 | 0.56 | 0.84 | 0.58 | 0.58 | 0.62 | 0.52 |
| Interincisal Angle (°) | 2.60 | 1.96 | 4.16 | 2.86 | 4.21 | 3.82 | 7.12 | 4.47 | 2.73 | 4.52 | 3.28 |
| Upper Lip to E-Plane (mm) | 0.33 | 0.40 | 0.43 | 0.35 | 0.37 | 0.34 | 0.30 | 0.57 | 0.85 | 0.36 | 0.42 |
| Lower Lip to E-Plane (mm) | 0.47 | 0.47 | 0.44 | 0.39 | 0.45 | 0.47 | 0.38 | 0.49 | 0.83 | 0.44 | 0.46 |
| N′-Sn′ (mm) | 1.92 | 1.38 | 1.48 | 1.38 | 2.33 | 1.75 | 1.39 | 1.44 | 2.60 | 1.78 | 1.49 |
| Sn′-Me′ (mm) | 1.72 | 1.74 | 1.81 | 1.59 | 1.15 | 1.64 | 1.84 | 1.63 | 1.81 | 1.63 | 1.65 |
| N-ANS (mm) | 0.94 | 1.21 | 1.73 | 1.08 | 2.80 | 1.45 | 1.11 | 1.10 | 1.49 | 1.65 | 1.21 |
| ANS-Me (mm) | 1.16 | 1.32 | 1.38 | 1.07 | 1.60 | 1.13 | 1.25 | 0.82 | 1.49 | 1.35 | 1.09 |
| Ar-Go (mm) | 1.76 | 1.63 | 1.91 | 3.10 | 1.97 | 1.65 | 2.77 | 2.60 | 3.11 | 2.10 | 2.25 |
| NA-APo (°) | 1.63 | 1.31 | 1.82 | 1.17 | 2.45 | 1.38 | 4.27 | 1.42 | 1.26 | 2.54 | 1.32 |
| FH-NPo (°) | 1.60 | 1.31 | 2.51 | 1.60 | 1.83 | 1.20 | 2.20 | 1.47 | 1.66 | 2.04 | 1.40 |
| Mean | 1.57 | 1.37 | 1.99 | 1.61 | 2.55 | 1.64 | 2.68 | 1.77 | 1.83 | 2.20 | 1.60 |

| Measurements | F-Value | p-Value | Pairwise Comparison |
|---|---|---|---|
| SNA (°) | 4.4 | <0.0001 | 1 < 7, 1 < 5, 2 < 7, 2 < 5, 3 < 5, 4 < 7, 4 < 5, 7 > 6, 7 > 9, 8 < 5, 8 > 9, 5 > 6, 5 > 9 |
| SN-Palatal Plane (°) | 6.82 | <0.0001 | 1 > 5, 2 > 5, 3 < 4, 3 > 5, 4 > 7, 4 > 8, 4 > 5, 4 > 6, 7 > 5, 7 < 9, 8 > 5, 8 < 9, 5 < 6, 5 < 9, 6 < 9 |
| SNB (°) | 22.21 | <0.0001 | 1 < 3, 1 < 7, 1 < 5, 1 > 9, 2 < 3, 2 < 7, 2 < 5, 2 > 9, 3 > 4, 3 < 5, 3 > 6, 3 > 9, 4 < 7, 4 < 8, 4 < 5, 7 < 5, 7 > 6, 7 > 9, 8 < 5, 8 > 6, 8 > 9, 5 > 6, 5 > 9, 6 > 9 |
| FMA (°) | 11.92 | <0.0001 | 1 < 3, 1 < 4, 1 > 7, 1 < 9, 2 < 3, 2 < 4, 2 > 7, 2 < 8, 2 < 9, 3 > 7, 3 > 5, 3 > 6, 4 > 7, 4 > 5, 4 > 6, 7 < 8, 7 < 5, 7 < 6, 7 < 9, 8 > 5, 5 < 9, 6 < 9 |
| SN-MP (°) | 27 | <0.0001 | 1 < 4, 1 < 8, 1 > 5, 1 < 9, 2 < 4, 2 < 8, 2 > 5, 2 < 9, 3 < 4, 3 < 8, 3 > 5, 3 < 9, 4 > 7, 4 > 8, 4 > 5, 4 > 6, 7 > 5, 7 < 9, 8 > 5, 8 < 9, 5 < 6, 5 < 9, 6 < 9 |
| Y-Axis (°) | 23.89 | <0.0001 | 1 > 7, 1 > 5, 1 < 9, 2 > 7, 2 > 5, 2 < 9, 3 < 4, 3 > 5, 3 < 9, 4 > 7, 4 > 8, 4 > 5, 4 > 6, 7 < 8, 7 > 5, 7 < 6, 7 < 9, 8 > 5, 8 < 9, 5 < 6, 5 < 9, 6 < 9 |
| ANB (°) | 0.4 | 0.9209 | |
| ANS-PNS (mm) | 12.8 | <0.0001 | 1 > 3, 1 > 4, 1 > 5, 2 > 3, 2 > 4, 2 > 5, 3 < 4, 3 < 7, 3 < 8, 3 < 6, 3 < 9, 4 < 7, 4 < 8, 4 > 5, 4 < 6, 4 < 9, 7 > 5, 8 > 5, 5 < 6, 5 < 9 |
| Co-Gn (mm) | 16.56 | <0.0001 | 1 > 2, 1 > 3, 1 > 4, 1 > 7, 1 > 8, 1 > 6, 1 > 9, 2 > 4, 2 > 7, 2 > 8, 2 < 5, 2 > 6, 2 > 9, 3 > 7, 3 < 5, 4 > 7, 4 < 5, 7 < 5, 8 < 5, 5 > 6, 5 > 9 |
| Ba-S-N (°) | 11.69 | <0.0001 | 1 > 5, 1 < 9, 2 > 5, 2 < 9, 3 < 4, 3 > 5, 3 < 9, 4 > 5, 4 > 6, 4 < 9, 7 > 5, 7 < 9, 8 > 5, 8 < 9, 5 < 6, 5 < 9, 6 < 9 |
| U1-SN (°) | 30.85 | <0.0001 | 1 < 3, 1 < 7, 1 < 8, 1 < 5, 1 < 6, 1 > 9, 2 < 3, 2 < 7, 2 < 8, 2 < 5, 2 < 6, 2 > 9, 3 > 4, 3 < 7, 3 < 5, 3 > 9, 4 < 7, 4 < 8, 4 < 5, 4 < 6, 4 > 9, 7 > 8, 7 < 5, 7 > 6, 7 > 9, 8 < 5, 8 > 6, 8 > 9, 5 > 6, 5 > 9, 6 > 9 |
| U1-NA (°) | 12.01 | <0.0001 | 1 < 7, 1 < 5, 1 > 9, 2 < 3, 2 < 7, 2 < 8, 2 < 5, 2 < 6, 3 < 7, 3 > 9, 4 < 7, 4 < 8, 4 < 5, 4 > 9, 7 > 8, 7 > 5, 7 > 6, 7 > 9, 8 > 9, 5 > 9, 6 > 9 |
| U1-NA (mm) | 0.43 | 0.9016 | |
| L1-MP (°) | 7.38 | <0.0001 | 1 < 7, 1 > 9, 2 < 7, 2 > 9, 3 > 4, 3 > 8, 3 > 9, 4 < 7, 4 > 9, 7 > 8, 7 > 5, 7 > 6, 7 > 9, 8 > 9, 5 > 9, 6 > 9 |
| L1-NB (°) | 1.51 | 0.156 | |
| L1-NB (mm) | 4.83 | <0.0001 | 1 > 5, 1 < 6, 2 > 7, 2 > 5, 3 > 5, 3 < 6, 3 < 9, 4 > 7, 4 > 5, 7 < 6, 7 < 9, 8 > 5, 8 < 6, 8 < 9, 5 < 6, 5 < 9 |
| Interincisal Angle (°) | 7.95 | <0.0001 | 1 > 3, 1 > 7, 1 > 8, 1 > 5, 2 > 3, 2 > 7, 2 > 8, 2 > 5, 2 > 6, 3 > 7, 3 < 9, 4 > 7, 4 > 5, 4 < 9, 7 < 8, 7 < 6, 7 < 9, 7 < 9, 5 < 6, 5 < 9, 6 < 9 |
| Upper Lip to E-Plane (mm) | 6.28 | <0.0001 | 1 > 3, 1 > 8, 1 > 9, 2 > 8, 2 > 9, 3 < 7, 3 < 5, 3 < 6, 3 > 9, 4 > 8, 4 > 9, 7 > 8, 7 > 9, 8 < 5, 8 < 6, 5 > 9, 6 > 9 |
| Lower Lip to E-Plane (mm) | 4.31 | <0.0001 | 1 < 7, 1 < 6, 1 > 9, 2 < 6, 2 > 9, 3 > 9, 4 < 6, 4 > 9, 7 > 9, 8 < 6, 8 > 9, 5 > 9, 6 > 9 |
| N′-Sn′ (mm) | 20.29 | <0.0001 | 1 < 2, 1 < 3, 1 < 4, 1 < 7, 1 < 8, 1 > 5, 1 < 6, 1 < 9, 2 > 5, 2 < 9, 3 > 5, 3 < 6, 3 < 9, 4 > 5, 4 < 9, 7 > 5, 7 < 6, 7 < 9, 8 > 5, 8 < 9, 5 < 6, 5 < 9, 6 < 9 |
| Sn′-Me′ (mm) | 7.62 | <0.0001 | 1 > 5, 1 > 6, 2 > 5, 2 > 6, 2 > 9, 3 > 5, 3 > 6, 4 > 5, 4 > 6, 7 > 5, 7 > 6, 8 > 5, 8 > 6, 5 < 9, 6 < 9 |
| N-ANS (mm) | 12 | <0.0001 | 1 > 3, 1 > 5, 2 > 3, 2 > 5, 3 < 4, 3 > 5, 3 < 6, 3 < 9, 4 > 5, 7 > 5, 7 < 9, 8 > 5, 5 < 6, 5 < 9 |
| ANS-Me (mm) | 4.76 | <0.0001 | 1 < 8, 1 > 5, 2 < 8, 3 < 8, 4 > 5, 7 < 8, 7 > 5, 8 > 5, 8 > 9, 5 < 6, 6 > 9 |
| Ar-Go (mm) | 22.38 | <0.0001 | 1 > 3, 1 > 4, 1 > 7, 1 > 8, 1 > 5, 1 > 6, 1 > 9, 2 > 3, 2 > 4, 2 > 7, 2 > 8, 2 > 5, 2 > 6, 2 > 9, 3 > 4, 3 > 7, 3 > 8, 3 > 9, 4 < 5, 4 < 6, 7 < 5, 7 < 6, 8 < 5, 8 < 6, 5 > 9, 6 > 9 |
| NA-APo (°) | 0.8 | 0.6021 | |
| FH-NPo (°) | 15.05 | <0.0001 | 1 < 3, 1 < 7, 1 > 5, 2 < 3, 2 < 4, 2 < 7, 2 > 5, 2 < 9, 3 > 4, 3 > 8, 3 > 5, 3 > 6, 3 > 9, 4 > 8, 4 > 5, 4 > 6, 7 > 8, 7 > 5, 7 > 6, 8 > 5, 5 < 6, 5 < 9, 6 < 9 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Panesar, S.; Zhao, A.; Hollensbe, E.; Wong, A.; Bhamidipalli, S.S.; Eckert, G.; Dutra, V.; Turkkahraman, H. Precision and Accuracy Assessment of Cephalometric Analyses Performed by Deep Learning Artificial Intelligence with and without Human Augmentation. Appl. Sci. 2023, 13, 6921. https://doi.org/10.3390/app13126921