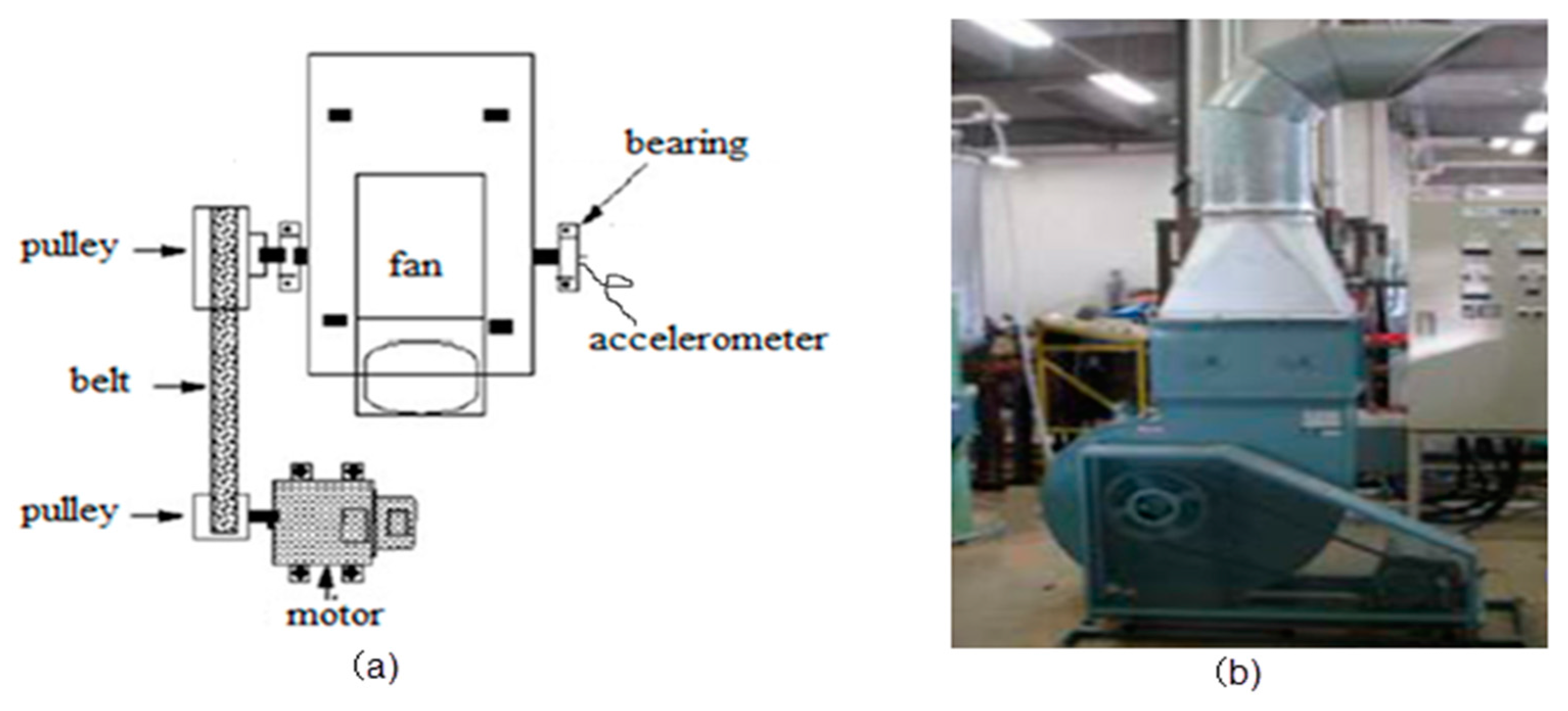

5.1. Test Platform

To verify the effectiveness of the method presented in this paper for the analysis of vibration signals from actual measurements, a rolling bearing fault signal of a centrifugal fan is analyzed.

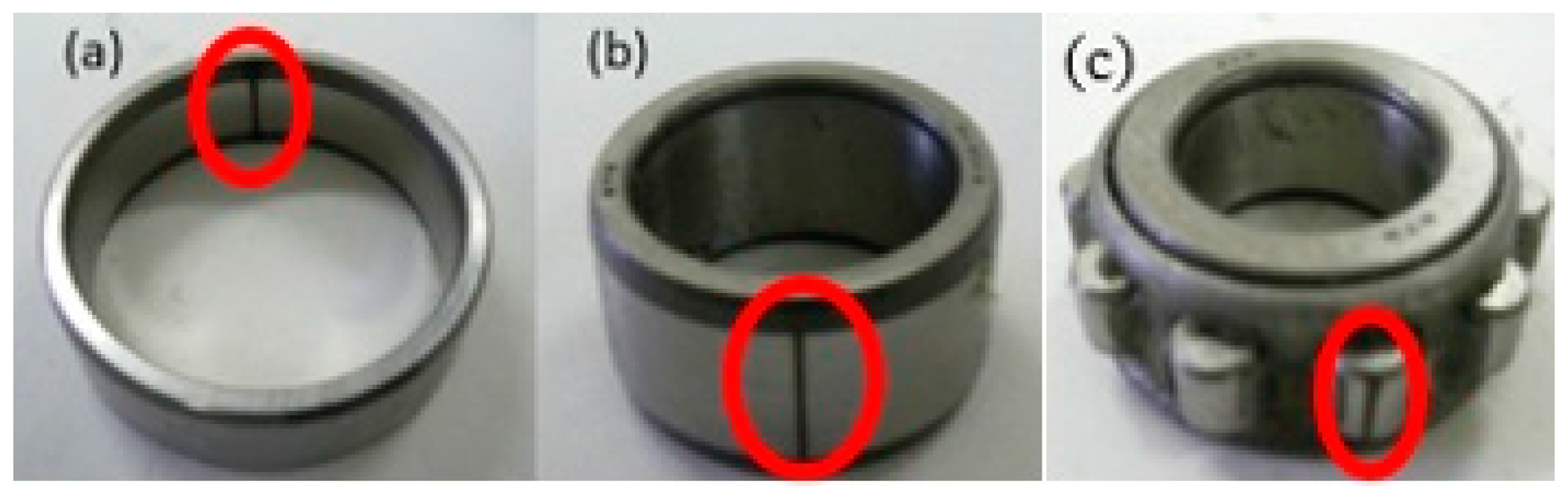

Figure 6 shows the centrifugal fan test platform used in this test. Based on a rolling bearing fault encountered in an actual engineering project, a wire-cutting machine is used to cut small dents in the outer ring, inner ring, and roller of the rolling bearing on the fan test bed, simulating early-stage defects in each of these elements. Details are shown in Figure 7. A PCB MA352A60 accelerometer (PCB Piezotronics Inc., New York, NY, USA) is fixed to the top of the bearing pedestal with a screw to collect vibration signals in the vertical direction, including the normal vibration signal of the rolling bearing and the fault signals of the outer ring, inner ring, and roller. The signals are amplified by a sensor signal conditioner (PCB ICP Model 480C02, PCB Piezotronics Inc., New York, NY, USA) and transmitted to a signal recorder (Scope Coder DL750, Yokogawa Co. Ltd., Tokyo, Japan). In the test, the rotation speed is set to 1000 rpm, the sampling frequency fs is 50 kHz, and the sampling duration is 10 s. The total number of samples collected is 2,000,000, with 500,000 samples for each state.
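The record lengths quoted above follow directly from the acquisition settings. The short check below reproduces them and also derives the shaft frequency and the number of samples per shaft revolution. These last two quantities are not stated in the text and are added here only for orientation; the variable names are illustrative.

```python
# Acquisition settings quoted in the text.
FS_HZ = 50_000      # sampling frequency fs
DURATION_S = 10     # sampling duration for each state
SPEED_RPM = 1000    # shaft rotation speed
N_STATES = 4        # normal, outer ring, inner ring, roller

samples_per_state = FS_HZ * DURATION_S            # 500,000
total_samples = samples_per_state * N_STATES      # 2,000,000

# Derived quantities (not given in the text).
shaft_freq_hz = SPEED_RPM / 60                    # about 16.67 Hz
samples_per_rev = FS_HZ / shaft_freq_hz           # about 3000 samples per revolution

print(samples_per_state, total_samples)
print(round(shaft_freq_hz, 2), round(samples_per_rev))
```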

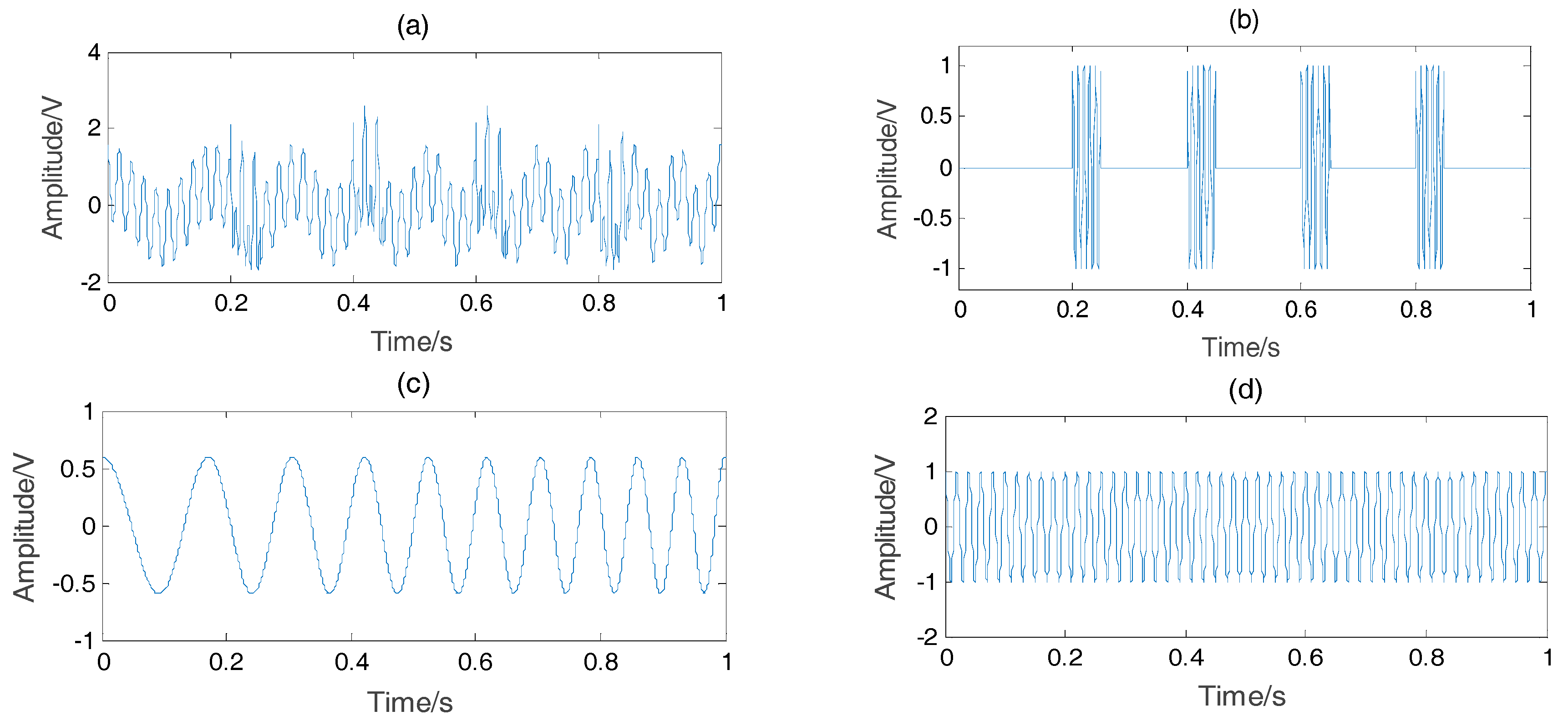

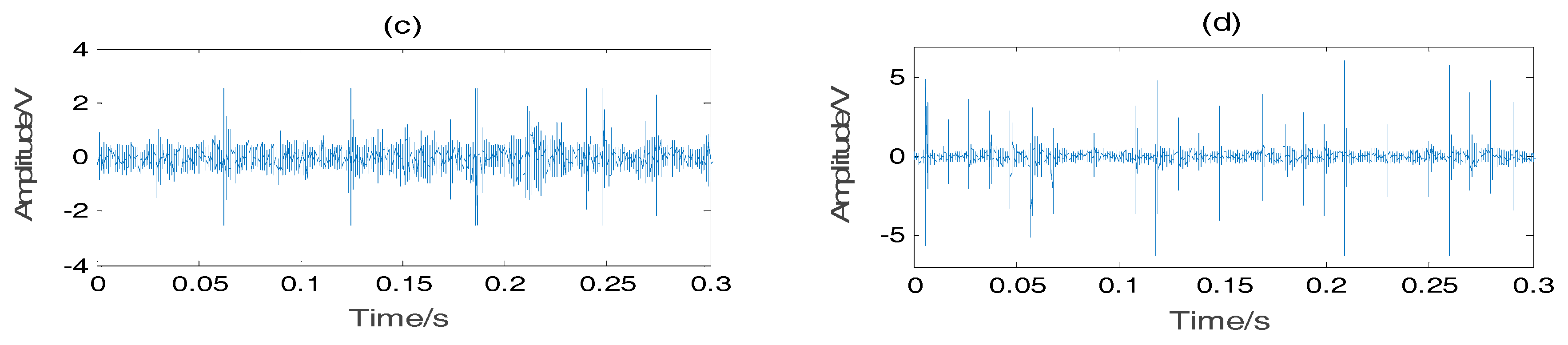

Figure 8 shows the original vibration signal in each state. The specifications of the test bearing, the fault sizes, and other relevant information are listed in Table 1.

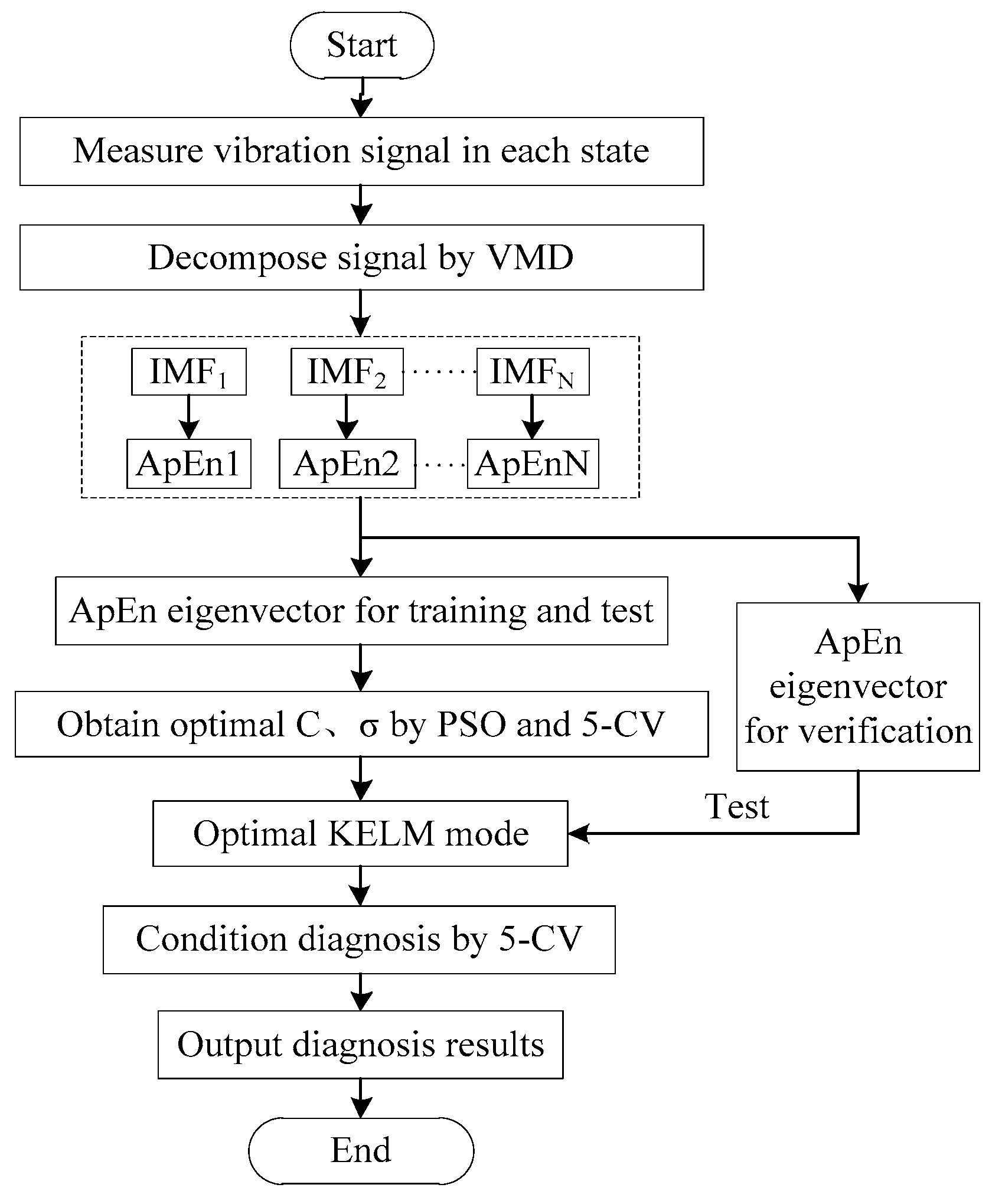

5.2. Condition Detection via the Proposed Method

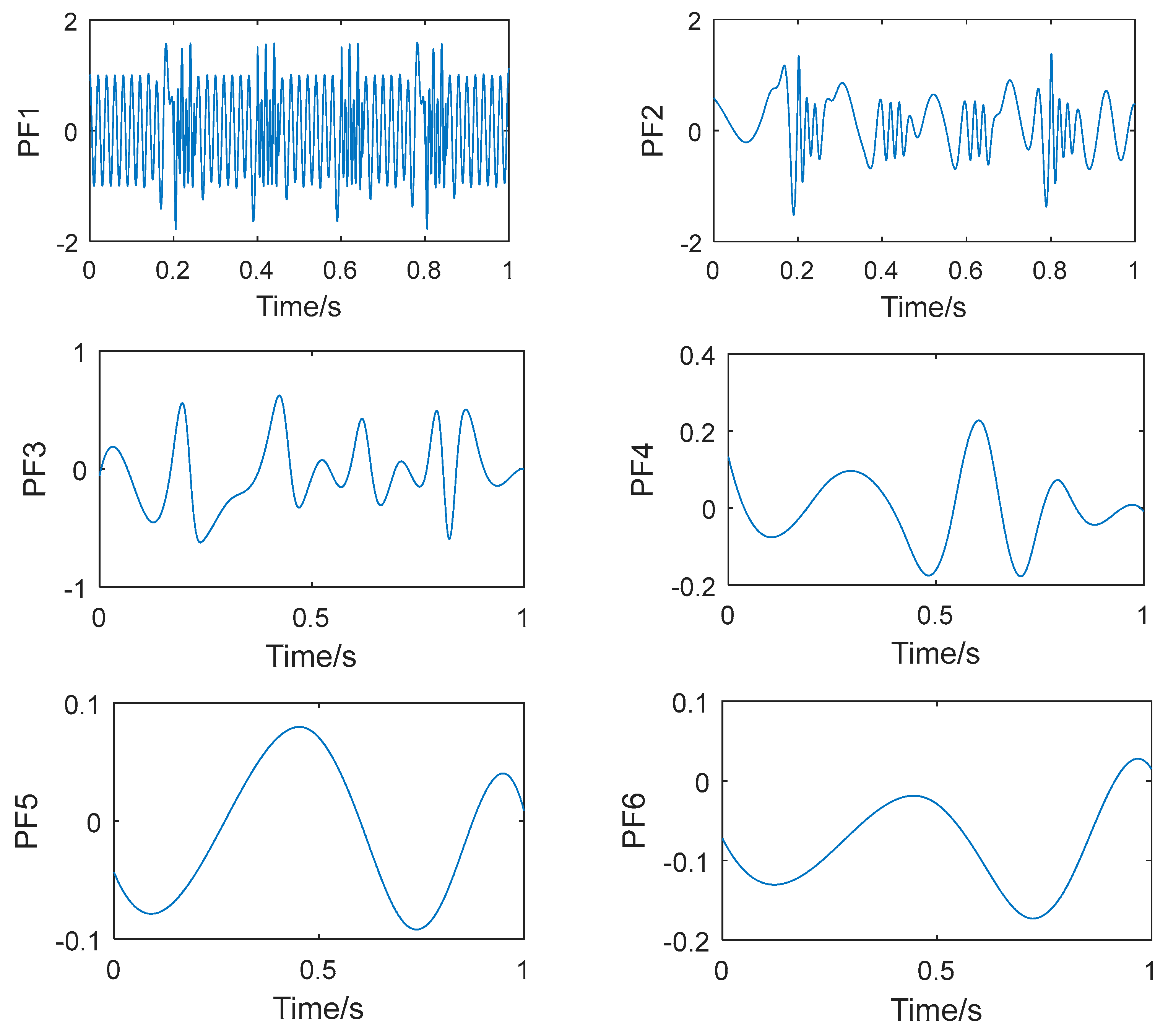

Figure 9 shows the procedure of the fault diagnosis method proposed in this paper. First, the vibration signal is decomposed via the VMD method introduced in Section 2.

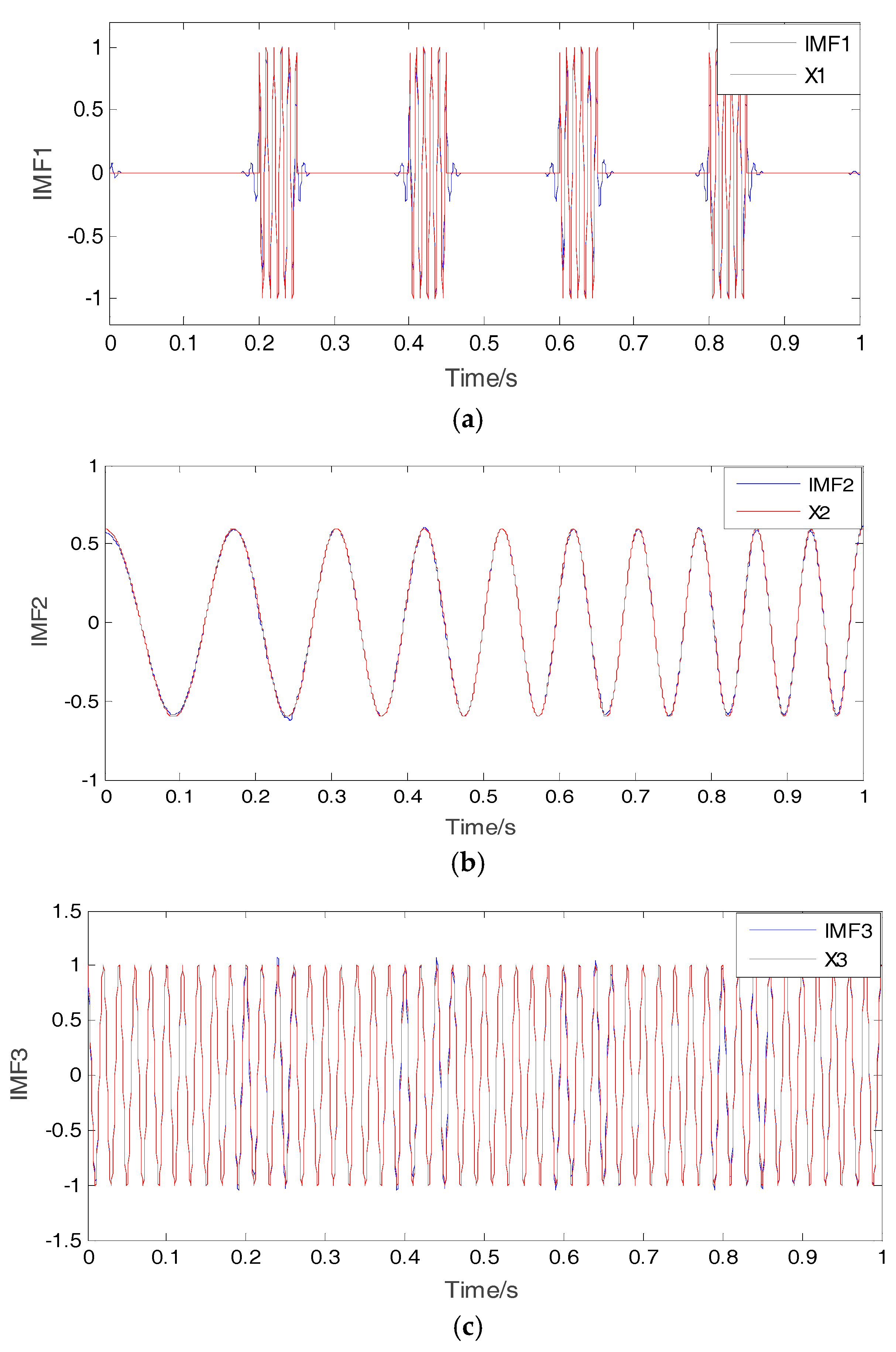

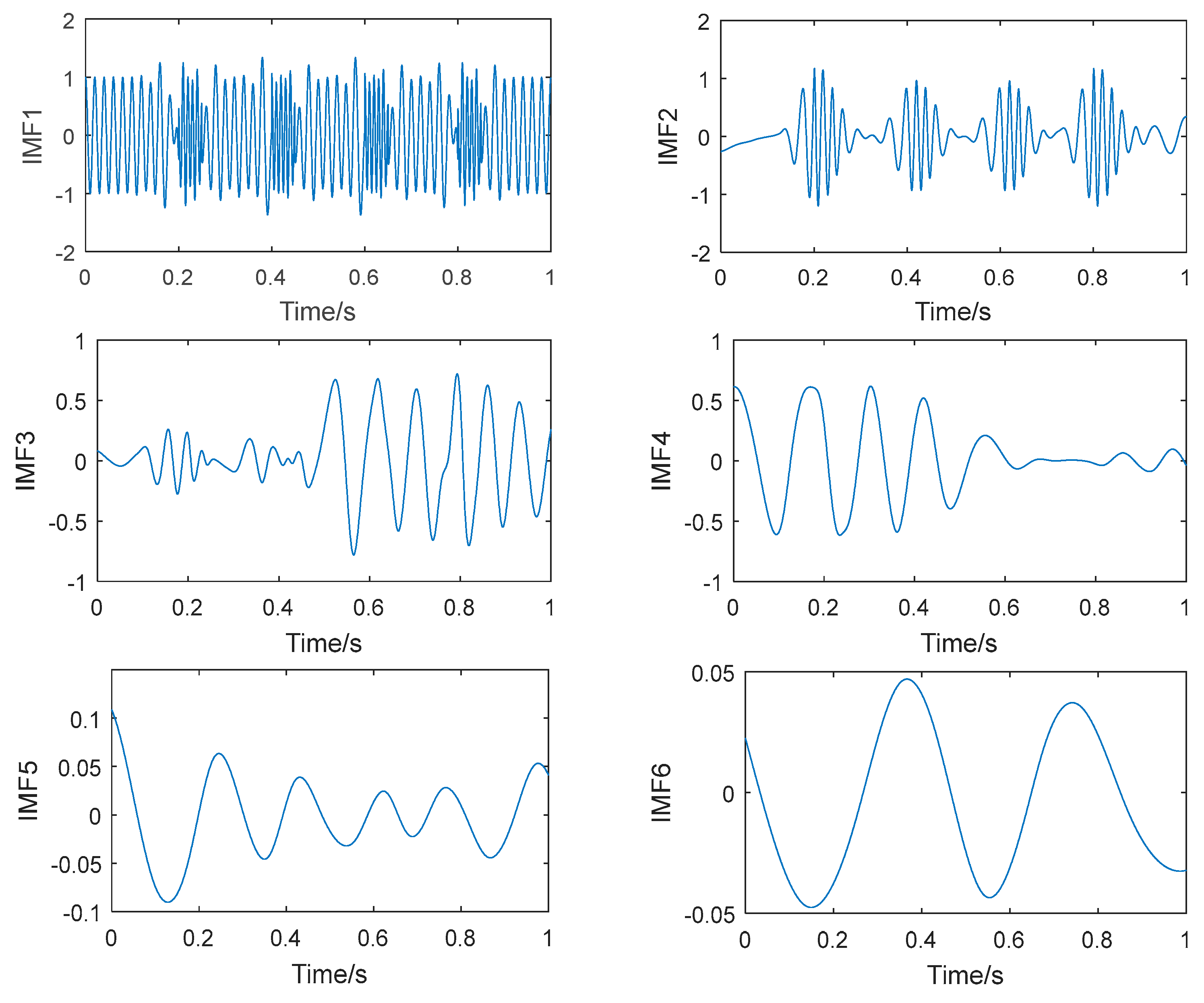

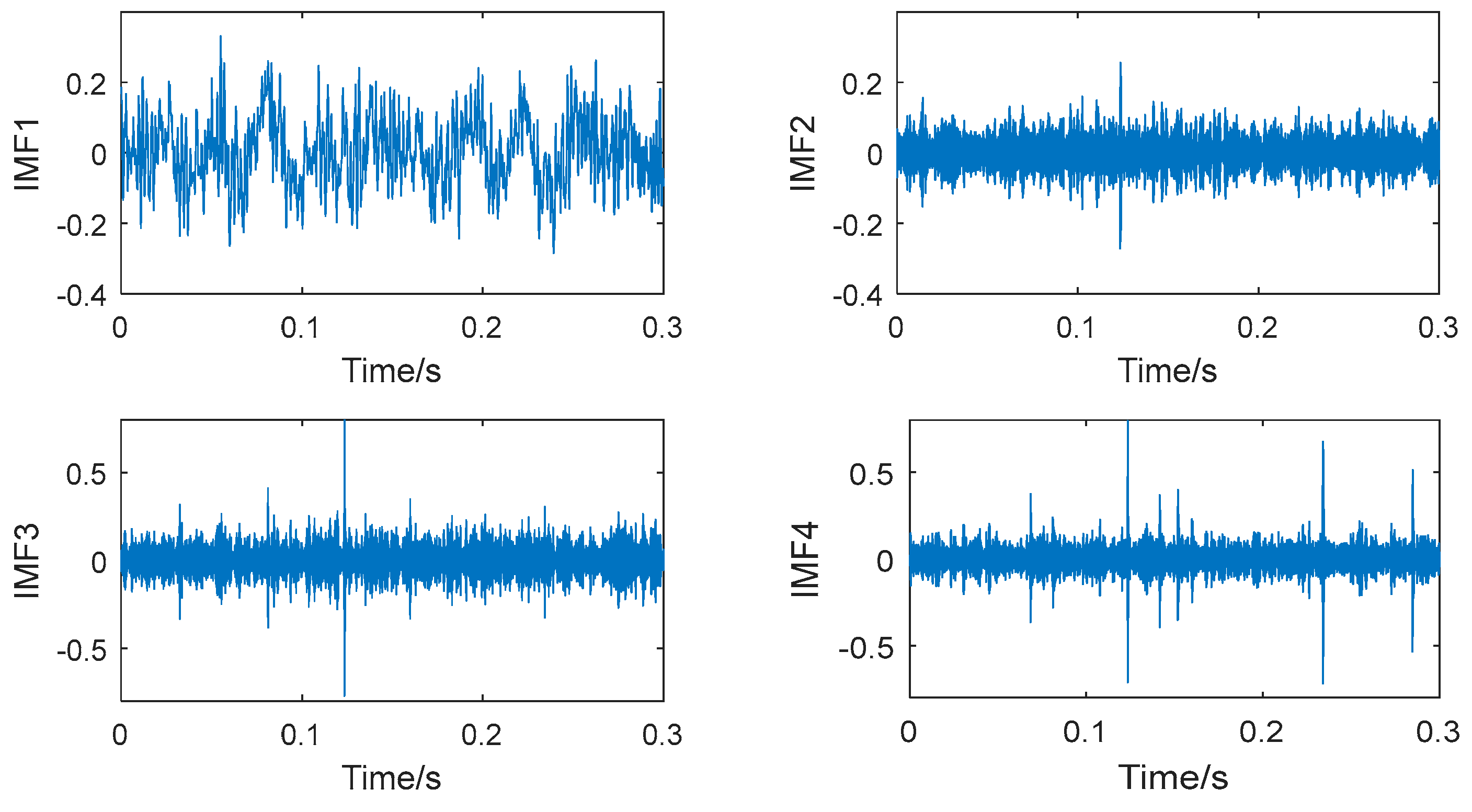

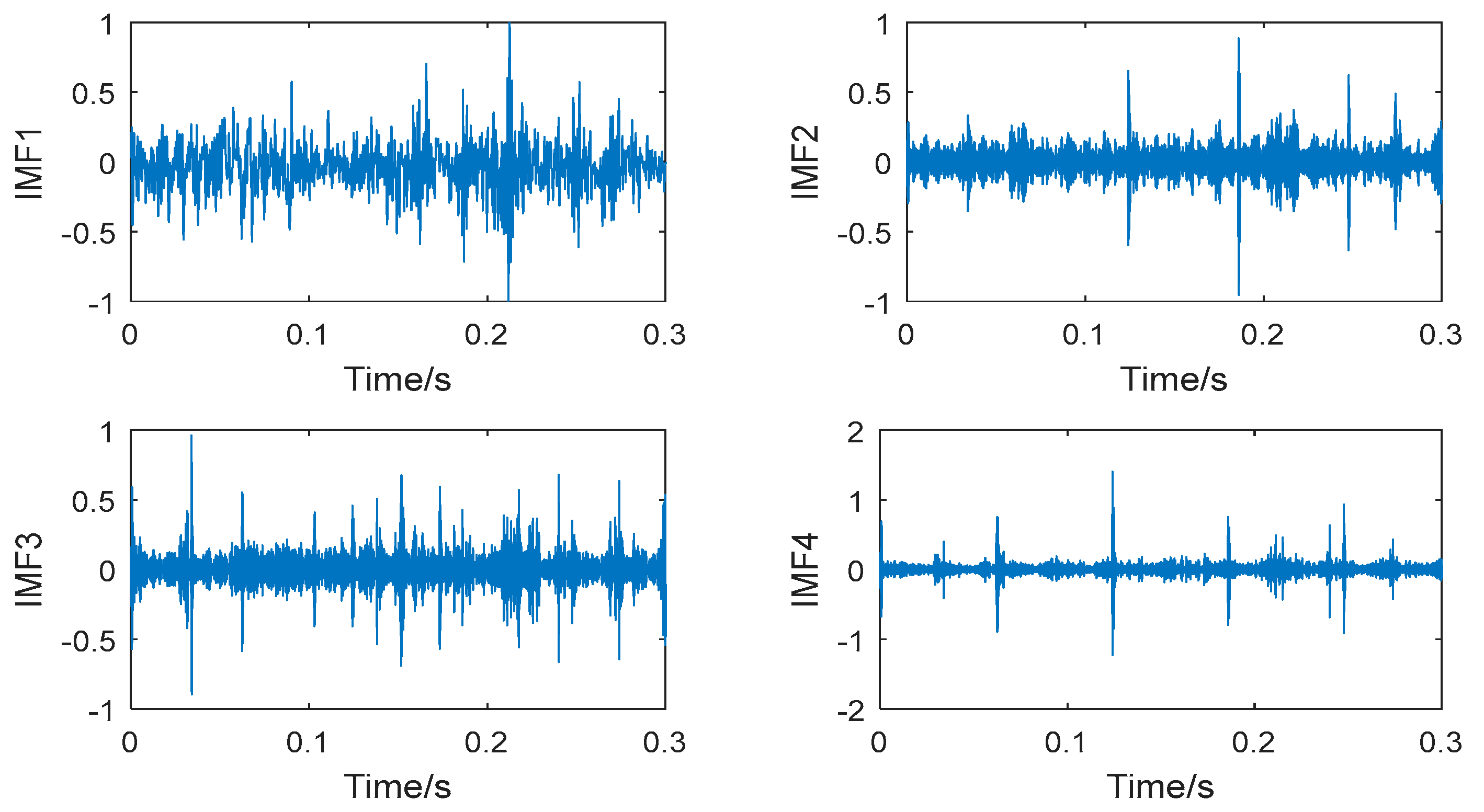

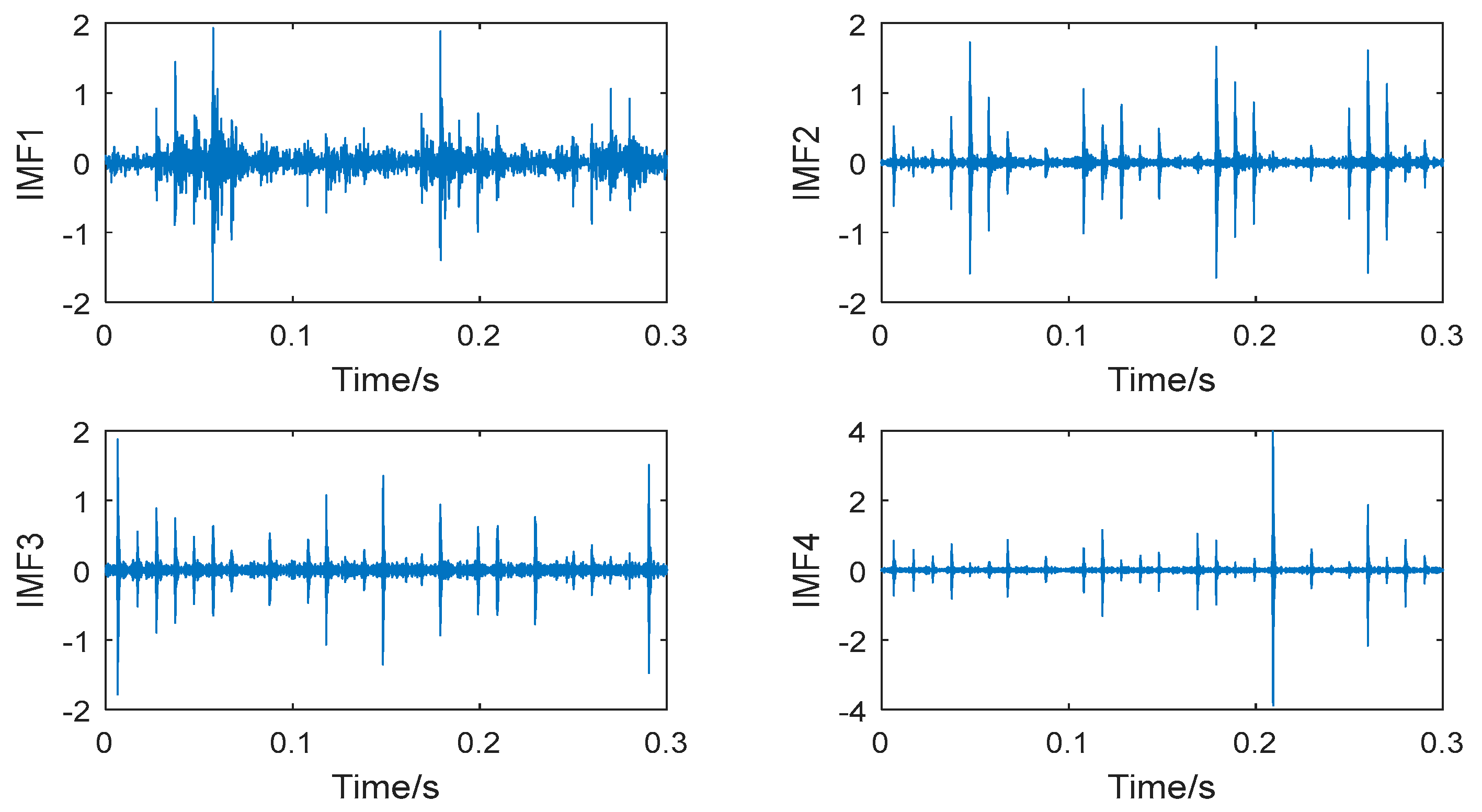

Figure 10, Figure 11, Figure 12 and Figure 13 show the decomposition results of the collected vibration signals of the bearing in each state. Each state has four corresponding decomposed IMF components, and 300 ApEn values are calculated for each IMF. An eigenvector is created from the ApEn of each component.
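As a rough illustration of this feature extraction step, the sketch below computes the approximate entropy of a segment and stacks the values of the four IMFs into one feature vector per segment. It assumes the standard ApEn definition with embedding dimension m = 2 and tolerance r = 0.2 times the standard deviation, and it assumes the 300 values per IMF come from equal, non-overlapping segments; none of these choices is stated in this section. The function names are hypothetical, and the IMFs are taken as already available from the VMD step.

```python
import numpy as np

def approximate_entropy(signal, m=2, r_factor=0.2):
    """Standard ApEn; m and r_factor are assumed values, not taken from the paper."""
    x = np.asarray(signal, dtype=float)
    n = x.size
    r = r_factor * x.std()

    def phi(dim):
        # All overlapping templates of length `dim`.
        templates = np.array([x[i:i + dim] for i in range(n - dim + 1)])
        # Chebyshev distance between every pair of templates (O(n^2) memory).
        dist = np.max(np.abs(templates[:, None, :] - templates[None, :, :]), axis=2)
        # Fraction of templates within tolerance r, self-matches included.
        counts = np.mean(dist <= r, axis=1)
        return np.mean(np.log(counts))

    return phi(m) - phi(m + 1)

def apen_feature_vectors(imfs, n_segments):
    """imfs: array of shape (n_imfs, n_samples). Returns (n_segments, n_imfs)."""
    imfs = np.asarray(imfs, dtype=float)
    # Assumed segmentation: equal, non-overlapping slices along the time axis.
    segments = np.array_split(imfs, n_segments, axis=1)
    return np.array([[approximate_entropy(row) for row in seg] for seg in segments])
```

With four IMFs and `n_segments=300`, this would give a 300 × 4 feature matrix for each bearing state. The pairwise distance matrix grows quadratically with segment length, so long segments may need a chunked implementation.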

To demonstrate the sensitivity of ApEn, we compare it with RMS, skewness, and kurtosis using the detection index (DI) method [26].

Suppose that x1 and x2 are the SP values calculated from the signals measured in state 1 and state 2, respectively, with means μ1 and μ2 and standard deviations σ1 and σ2. The DI is calculated by

The Distinction Rate (DR) is defined as

It is obvious that the larger the value of the DI, the larger the value of the DR will be, and therefore, the better the SP will be. Thus, the DI can be used as the index of quality to evaluate the distinguishing sensitivity of the SP.
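For reference, the detection index is usually written as $DI = |\mu_1 - \mu_2| / \sqrt{\sigma_1^2 + \sigma_2^2}$, and the distinction rate as $DR = 1 - \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{-DI} \exp(-u^2/2)\,du$, where subscripts 1 and 2 denote the two states. These forms are assumed here from the general DI literature and should be checked against [26]. Under that assumption, a minimal sketch of the calculation is:

```python
import math
from statistics import mean, stdev

def detection_index(x1, x2):
    """DI between two sets of SP values (assumed form, see lead-in)."""
    mu1, mu2 = mean(x1), mean(x2)
    s1, s2 = stdev(x1), stdev(x2)   # sample standard deviations
    return abs(mu1 - mu2) / math.sqrt(s1 ** 2 + s2 ** 2)

def distinction_rate(di):
    """DR = 1 - Phi(-DI) = Phi(DI), with Phi the standard normal CDF."""
    return 0.5 * (1.0 + math.erf(di / math.sqrt(2.0)))
```

Because the standard normal CDF is monotonically increasing, DR rises with DI, from 0.5 at DI = 0 towards 1 for well-separated states.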

Table 2 lists the DI values of each SP. The distribution information of the ApEn is shown in Table 3. From Table 2, the DI values of ApEn are higher than those of RMS, skewness, and kurtosis; that is, ApEn is more sensitive for bearing diagnosis than the other SPs.

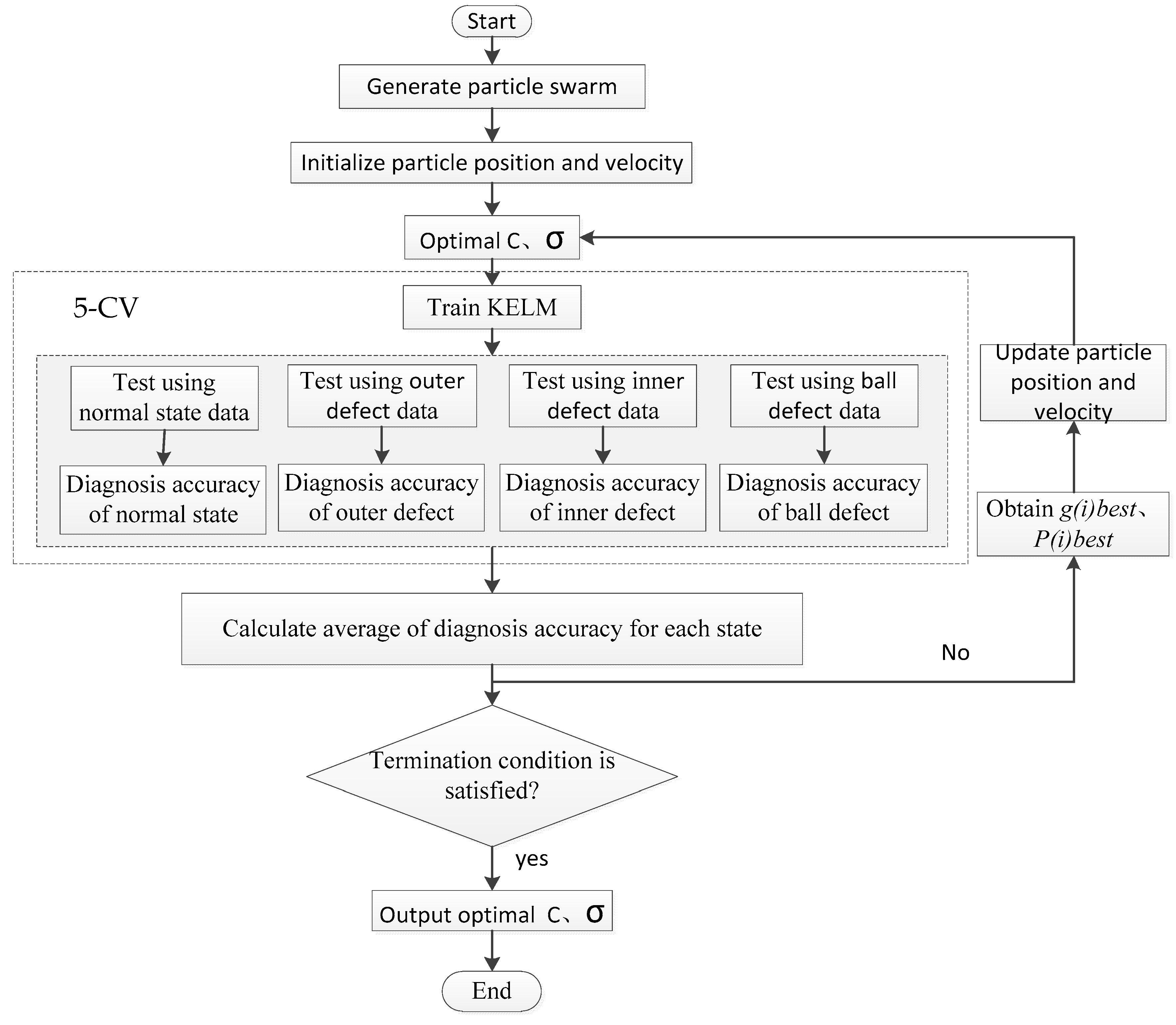

The KELM parameters are optimized via the PSO algorithm to obtain the KELM training model with optimal parameters. The process of KELM model training and optimization of the parameters C and σ is shown in Figure 14. The particle swarm contains 50 particles, the acceleration factors η1 and η2 are both 2, and the maximum number of iterations is 1000. To minimize the possible effects of data outliers, fivefold cross-validation (5-CV) is adopted for parameter optimization and condition identification. The calculated ApEn samples are randomly partitioned into five subsamples: three are retained as training data, one is used as test data for parameter optimization, and the remaining one is used as verification data for condition identification of the bearing. As an example, some PSO-KELM training data are listed in Table 4.
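The training and optimization loop described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the RBF kernel convention exp(−‖u − v‖²/(2σ²)), the inertia weight, the search bounds on log2(C) and log2(σ), and all function names are assumptions made for the example. Only the swarm size, the acceleration factors, the iteration limit, and the 3/1/1 split come from the text.

```python
import numpy as np

def rbf_kernel(a, b, sigma):
    # Squared Euclidean distances between rows of a and rows of b.
    d2 = np.sum(a ** 2, axis=1)[:, None] + np.sum(b ** 2, axis=1)[None, :] - 2.0 * a @ b.T
    return np.exp(-np.maximum(d2, 0.0) / (2.0 * sigma ** 2))

def kelm_fit(x_train, y_train, n_classes, c, sigma):
    targets = np.eye(n_classes)[y_train]            # one-hot target matrix T
    omega = rbf_kernel(x_train, x_train, sigma)     # kernel matrix
    # Output weights: beta = (I / C + Omega)^-1 T
    return np.linalg.solve(np.eye(len(x_train)) / c + omega, targets)

def kelm_predict(x_new, x_train, beta, sigma):
    return np.argmax(rbf_kernel(x_new, x_train, sigma) @ beta, axis=1)

def split_3_1_1(n_samples, rng):
    # Five random subsamples: three for training, one for test, one for verification.
    folds = np.array_split(rng.permutation(n_samples), 5)
    return np.concatenate(folds[:3]), folds[3], folds[4]

def pso_kelm(x, y, n_classes, train, test, rng, n_particles=50, n_iter=1000,
             eta1=2.0, eta2=2.0, inertia=0.6, log2_bounds=(-8.0, 8.0)):
    """Search (log2 C, log2 sigma); inertia and log2_bounds are assumed values."""
    lo, hi = log2_bounds

    def fitness(p):
        c, sigma = 2.0 ** p
        beta = kelm_fit(x[train], y[train], n_classes, c, sigma)
        return np.mean(kelm_predict(x[test], x[train], beta, sigma) == y[test])

    pos = rng.uniform(lo, hi, size=(n_particles, 2))
    vel = np.zeros_like(pos)
    best_pos = pos.copy()
    best_fit = np.array([fitness(p) for p in pos])
    g = best_pos[np.argmax(best_fit)].copy()        # global best position
    for _ in range(n_iter):
        r1, r2 = rng.random(pos.shape), rng.random(pos.shape)
        vel = inertia * vel + eta1 * r1 * (best_pos - pos) + eta2 * r2 * (g - pos)
        pos = np.clip(pos + vel, lo, hi)
        fit = np.array([fitness(p) for p in pos])
        improved = fit > best_fit
        best_pos[improved], best_fit[improved] = pos[improved], fit[improved]
        g = best_pos[np.argmax(best_fit)].copy()
    return 2.0 ** g                                 # optimized (C, sigma)
```

In use, `x` would be the ApEn feature matrix and `y` an integer array of state labels. The returned C and σ are then passed to `kelm_fit` on the training subsamples, and `kelm_predict` is evaluated on the verification subsample. Repeating this over rotated folds gives the 5-CV average.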

After parameter optimization, bearing condition identification is performed with the optimal KELM and the 5-CV method. The verification results in Table 5 show that the average diagnosis accuracy reaches 100% for the normal state, 90.3% for the outer ring defect state, 85.7% for the inner ring defect state, and 96.3% for the roller element defect state, and that the overall accuracy reaches 93.08%. These diagnosis results demonstrate the effectiveness of the fault diagnosis method proposed in this paper.

To further verify the effectiveness of the PSO-KELM algorithm proposed in this paper, it is compared with the backpropagation (BP) neural network, conventional ELM, and SVM algorithms. When the BP neural network, conventional ELM, and SVM algorithms are used for bearing state identification and diagnosis, the vibration data are identical to those used for the PSO-KELM algorithm, and the vibration signal features are likewise extracted via the VMD and ApEn methods introduced in Section 2 and Section 3. In the SVM, a one-against-one method [27] is used to establish a multiclass SVM system. The RBF kernel function is also employed, and the penalty coefficient C and kernel function parameter σ of the SVM are optimized using grid search. The grid search ranges of C and σ are $2^{-8}$ to $2^{8}$ and $2^{-14}$ to $2^{14}$, respectively, and the search step is 0.1.
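To give a sense of the size of this search, the sketch below enumerates the candidate grid under the assumption that the step of 0.1 applies to the base-2 exponent. The text does not say whether the step is on the exponent or on the parameter value, so this is one reading only. The helper name is hypothetical.

```python
from itertools import product

def log2_grid(low_exp, high_exp, step):
    """Values 2**e for e = low_exp, low_exp + step, ..., high_exp."""
    n = round((high_exp - low_exp) / step)
    return [2.0 ** (low_exp + i * step) for i in range(n + 1)]

c_grid = log2_grid(-8, 8, 0.1)         # 161 candidate values of C
sigma_grid = log2_grid(-14, 14, 0.1)   # 281 candidate values of sigma
candidates = list(product(c_grid, sigma_grid))

print(len(c_grid), len(sigma_grid), len(candidates))
```

Each (C, σ) pair would then be scored by cross-validated accuracy. If an SVM library parameterizes the RBF kernel by γ rather than σ, the conversion depends on the kernel convention, for example γ = 1/(2σ²) for exp(−‖x − y‖²/(2σ²)).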

As shown in Table 5, when the diagnosis is based on a BP neural network, the normal state diagnosis accuracy reaches 90% and the outer ring defect state fault diagnosis accuracy is 68.8%, with some outer ring defect vibration data incorrectly classified as the normal state. The inner ring defect state fault diagnosis accuracy is only 51.7%, with nearly half of the inner ring defect vibration data incorrectly classified into the outer ring defect and normal states. The roller element defect state fault diagnosis accuracy reaches 88.6%, and the overall accuracy is 74.75%. When the diagnosis is performed using the ELM method, the diagnosis accuracy reaches 97.2% for the normal state, 79% for the outer ring defect state, 66% for the inner ring defect state, and 95.6% for the roller element defect state, and the overall accuracy is 85.5%. When the diagnosis is based on the multiclass SVM method, the diagnosis accuracy reaches 100% for the normal state, 88.7% for the outer ring defect state, 77.4% for the inner ring defect state, and 96.8% for the roller element defect state, and the overall accuracy is 90.73%. A comparison of the results of the above four diagnosis methods reveals that the PSO-KELM algorithm has the highest identification accuracy for the normal bearing, outer ring defect, and inner ring defect states, in addition to the highest overall diagnosis accuracy.

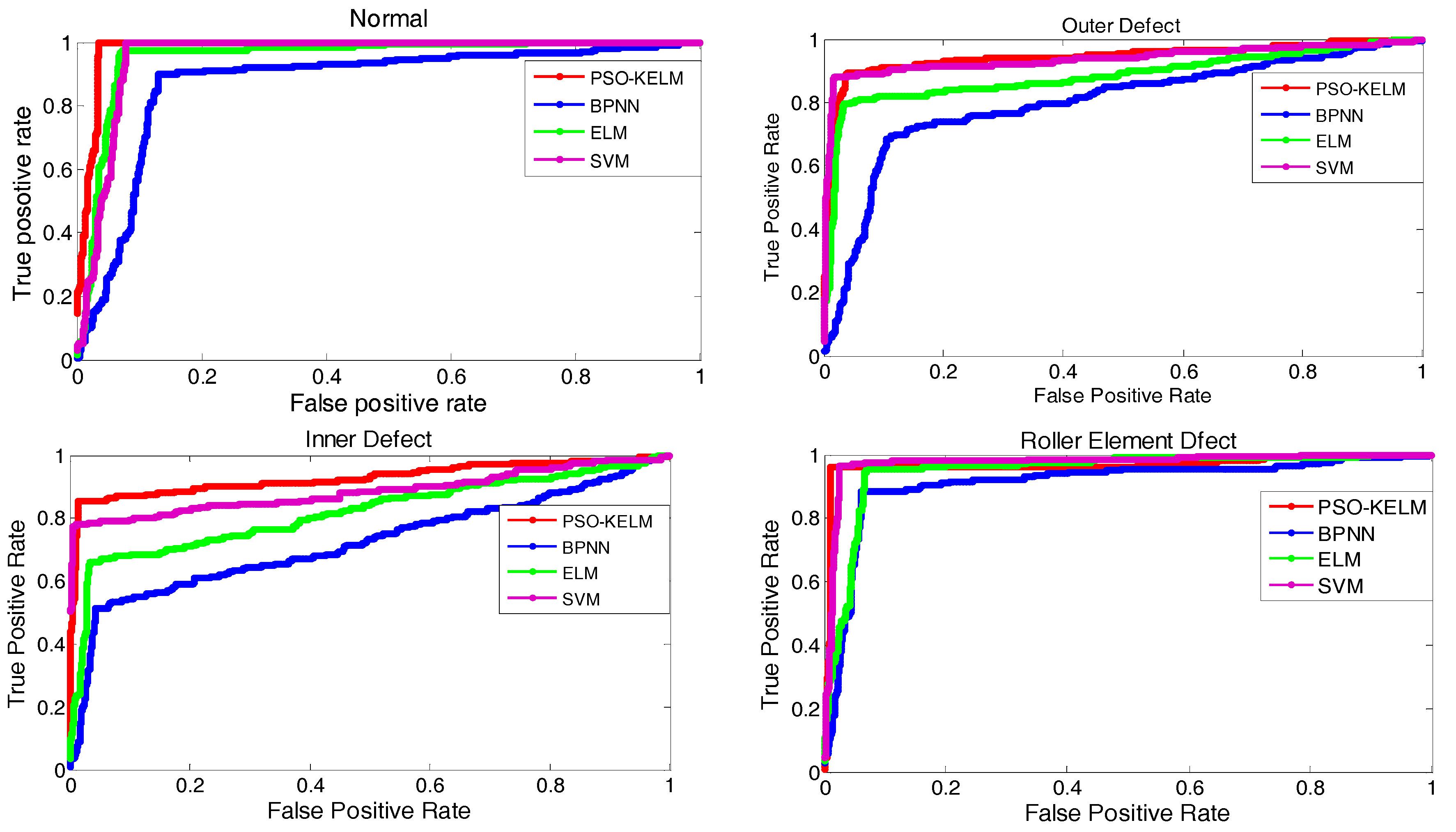

In this study, the receiver operating characteristic (ROC) curve and the area under the ROC curve (AUC) are also employed to evaluate the performance of the different classifiers. Figure 15 shows the ROC curve of each classifier for condition diagnosis, and the corresponding AUC values are listed in Table 6. As shown in Figure 15 and Table 6, the AUC values of PSO-KELM are higher than those of the BP neural network, ELM, and SVM algorithms, which shows that PSO-KELM has the best classification performance.
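One common way to obtain AUC values for a four-state classifier is to treat each state in turn as the positive class (one-vs-rest) and compute the AUC from the classifier's output scores. The sketch below does this with the rank-based (Mann-Whitney) form of the AUC. Whether the paper uses a one-vs-rest scheme is not stated, so this is an assumption, and the function names are illustrative.

```python
def binary_auc(scores, labels):
    """AUC as the probability that a positive sample outscores a negative one."""
    pos = [s for s, is_pos in zip(scores, labels) if is_pos]
    neg = [s for s, is_pos in zip(scores, labels) if not is_pos]
    # Count pairwise wins; ties contribute one half.
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def one_vs_rest_auc(score_matrix, y_true, n_classes):
    """score_matrix[i][k] is the classifier score of sample i for class k."""
    return [
        binary_auc([row[k] for row in score_matrix], [y == k for y in y_true])
        for k in range(n_classes)
    ]
```

An AUC of 1.0 means the scores separate the class perfectly, while 0.5 corresponds to chance-level ranking.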

To further verify the diagnostic capability of the proposed method under various operating conditions, bearing vibration signals for two fault sizes are measured for condition diagnosis at rotation speeds of 600 rpm, 800 rpm, 1000 rpm, and 1200 rpm. The diagnostic results are listed in Table 7. As Table 7 shows, the diagnostic accuracy of the PSO-KELM algorithm improves as the rotating speed and fault size increase. The overall accuracy of PSO-KELM under the various operating conditions is greater than 80%, and the highest accuracy reaches 95.02%.

The above diagnosis results demonstrate the effectiveness of the fault diagnosis method proposed in this paper for rolling bearing state monitoring and identification.